#which is a no-no because that value is directly input into a script function

Explore tagged Tumblr posts

Text

today has been. a work day.

#i swear to god i gotta ask the new coworker every 2 to 3 days about something i broke and how to fix it in the shaders#i wish i could just find the answers with google but i literally spent an hour and didn't come away with anything useful#i had to plug in random nodes to see if any of them worked#finally landed on one that halfway worked but it required me to change the input min value#which is a no-no because that value is directly input into a script function#thankfully i knew better than to just let 'er rip#this is the 4th time with this (my) shader that i've had to fix something gone awry#all this just to have alpha clipping on the textures#thankfully i just needed to plug in a remap node#but it feels rly embarrassing to run into so many really common issues#waughh <- guy who just read basic documentation#and has just been mish-mashing bits and pieces from forums and tutorials to get things to work#i really ought to take a course on shadergraph... and substance designer... and lighting...#maybe i can convince my boss to let me go on a sabbatical and just take a bunch of courses#sadly it feels like everyone (justifiably) is shifting away from unity development#and now that our lead left it seems unlikely we'll be leaving behind unity for any other engine until we're forced to#it feels like it's getting tougher to find answers for the ever narrowing field that is unity VR mixed reality development#*artist resources specifically because like. internet seems chock full of programming ones but im not one of those#personal stuff#its only tuesday...

2 notes

·

View notes

Text

Date: march 14th, 2025

Discovery

I decided that while Remington-Gede is working on his memoir, I’ll work on Task 1. By examining the help file for the default cube, I figured out that there’s an automated system that is in charge of spawning and despawning them. It seems to rely on new interactions.

Essentially, as the simulation runs, new events occur that never occurred before. When that happens, two things are activated. One spawns a cube, and the other, I am unsure of. When the process is finished, the cube is supposed to despawn. Something seems to be preventing that with the one I use, but that’s perfectly acceptable for my purposes.

I decided to try querying the second process from the cubes DataFile. I got a type 4 error accidentally, then an unrecognized error that I’m now calling a type 5, which I’m pretty sure is calling a function without the necessary input values.

I looked at its help page, and it seems that it requires a new data type I’m calling a Soft Error Trigger. A soft error, as I’m calling it, is when the simulation is required to render the result of an interaction that has no recorded result. A Soft Error Trigger is a compressed form of that interaction, removing all extraneous data values. It seems to be able to compress any data type I discovered.

I was able to artificially generate a Soft Error Trigger by utilizing an interesting quirk I discovered in the help files. A Soft Error Trigger always follows a distinct format, starting with “Te4DTe” and ending with “Te4ETe”. The trigger also always contains at least 2 file references, often directly referencing Data Values. Finally, there is always a file references within that references an AdvUript that is at least a type 2.

I entered some values in this format, then pasted the command into the Cube. I used myself as the AdvUript, as Gede was busy. The Trigger I used referenced material 6 and the Cube’s UPV. I started daydreaming what it could cause. Would the UPV make another cube there? Would I receive an error? Would material 6 be visible, but not cause headaches? I decided that the most likely outcome was that a cube would spawn, made out of material 6, then would likely error itself out, since Material 6 doesn’t seem to work right.

Almost immediately, I felt a burst of pain that disappeared moments after. I then felt an urge to think of a 3rd tier AdvUript. My mind went to Gede instantly, and Remington froze where he was typing. A few minutes later, another cube appeared next to the original. Remington-Gede then trotted up to me and bit me.

Apperantly that second script is a sort of AI generation for possible interactions. What I was imaging was what would be tested. The burst of pain was the second cube spawning, then deleting itself since it occupied a space that was already filled by the first cube. Then, I forwarded the interaction, plus my idea to Gede, who had to figure out how to make it work. The bite was because he and Remington were in the middle of a discussion, and my idea had so many issues he was temporarily overloaded.

I now know I likely need Gede’s help with spawning new cubes. I also now know not to interrupt him.

Pleasant day,

Tester

4 notes

·

View notes

Photo

Hinge presents an anthology of love stories almost never told. Read more on https://no-ordinary-love.co

1K notes

·

View notes

Text

A year ago, I tried asking ChatGPT to help me trace a quote and it hallucinated and posted nonsense.

There has been definite capability improvement with a new model release since then, but it feels to me like it has very much the same abstract problem of being heavily median-targeted, and blundering once I go a little off script. This year my managers at work have gotten the AI Enthusiasm and been waving at me, so I tried some code generation, and ChatGPT (o4) seems to be mostly at the level of copypasting from StackOverflow.

An overview of my conversation with GPT, with comments:

Me: What are some common GUI frameworks in Python that you know of? GPT: Tkinter, PyQt, PySide, Kivy, PyGTK, wxPython, Dear PyGui

so far, so good.

Me: I'm considering Tkinter and wxPython because I'm familiar with those, which of those two do you have best support for? GPT: (lengthy feature comparison)

I wasn't asking about the look. I will give ChatGPT slack for posting information that is topically relevant to Tkinter and wxPython in general, but notice how it 'needs' slack to be correct. If I were in a strict mood I'd say this was wrong - it's posting an answer to someone else's question that vaguely resembles mine.

GPT: Would you like a sample project in either of these frameworks? 🚀 Me: I would like a sample project in Tkinter. GPT: Here's a simple Tkinter project—a To-Do List App with a basic GUI. It allows users to add and remove tasks interactively. (55 lines of code)

I skim the code to check that it's doing reasonable-looking things for that description.

ttk.Label(root, text="To-Do List", font=("Arial", 14)).pack(pady=10)

Paste it into Python and it works, it looks like a very basic sample project of the sort a million students made as homework. I can add and remove lines of text to a list with this GUI applet.

I ask for a feature.

Me: I want a second column in the list, with the heading title "Expected duration in hours". Default value for entries in this column should be 1. You decide whether this should be set on input with the task name, or editable later from the list view. GPT: Got it! I'll modify the To-Do List App to include a second column titled "Expected duration in hours". Each task will have a default duration of 1 hour, but the user can edit it later directly from the list. Key Changes: ✅ Added a Treeview widget instead of a simple Listbox to support multiple columns. ✅ The user enters both Task Name and Expected Duration when adding a task. ✅ Default duration is 1 hour, but it's editable directly in the list. ✅ Double-click a duration cell to edit the value. (108 lines of code)

The first two are correct. The last two are wrong. I cannot edit directly in the list. I cannot double-click a duration cell to edit the value. There's a function named edit_duration in the code which looks like it should do that, but it doesn't work.

(and new the column is titled "Expected Duration (hrs)" in the app, which is a paraphrase of what I asked for and what GPT said.)

Can I get GPT to fix its own mistake?

Me: The edit_duration function in your code does not work. Please fix it so I can edit the duration of a task in the list. GPT: You're right! The edit_duration function needs to correctly position the entry box and properly update the value in the Treeview. I've fixed it so you can now double-click a duration and edit it properly. Here’s the corrected To-Do List App with working inline editing for the duration column: (112 lines of code)

Fixes & Improvements ✅ Now correctly positions the entry box over the duration field. ✅ Binds "Enter" to save new values and "FocusOut" to close the popup. ✅ Ensures only valid positive numbers can be entered. ✅ Now properly updates the Treeview item on edit.

Well, I can get GPT to say it fixed the mistake, and not fix it.

I run the new code, and I cannot double-click a duration to edit it. The code lacks the feature that GPT specifically said it had after a fix request.

I wonder briefly if it might have implemented editing but using the wrong default mechanism, so I also try selecting a number value and hitting Enter or Delete, overwriting directly with numeric input, or right-clicking for context. None of these work either.

Me: The edit_duration function in your code still does not work. Can you explain what is wrong with it? GPT: You're right again! Let's go through what's wrong with the edit_duration function and fix it properly. (diagnoses 3 issues, outputs a 40-line edit_duration function to replace the previous 30-line one)

ChatGPT manages to code a working edit feature after I ask it for the third time.

On the one hand, this is an improvement over five years ago when it would have managed to code the edit feature sometime past never. Producing this code with GPT's assistance has been faster than learning and writing it myself would have been.

On the other hand, a false capability is sometimes worse than no capability. GPT repeatedly produced code that runs without crashing, but lacks promised features, even though a placeholder name for those features is present. This seems likely to trip people up.

I look forward to continued improvements.

5 notes

·

View notes

Text

3D State Position examples: A Knob or a Switch

I was asked to write up how I came up with my pokeball solution and give a text example of how to create one and which parameters/attributes do wee need to keep in mind.

#quantumtheory #quantumpokeball #gamedesign

This example was taken in order to develop a flight simulator with *realistic* knobs and switches.

In that; they have clearly defined detents (positions) like a light switch that *clicks* into place, and can have many defined positions from 2 to infinite (or as far as your processor/interface will let you)

And either rotates 360degrees, linearly (vertically or horizontally), or only has 180degree rotation and doesn't turn all the way around.

With this design we start with an object of some kind; that has rotation and position in space. Two rotation vectors is the maximum understandability for your average user, and has all defined rotational positions.

So we write a script that allows us to click or tap and hold an object and *move* it.

We can have it left and right rotates the dials, or whatever drag/slide you want to have the object follow the mouse.

But the key here is you want a [limit] for how your switch can move.

If it's a 360knob/dial, it's easiest to have up/down and left/right do the same thing. Increment/Decrement the rotation. (Z-axis is preferable to a stationary camera shot.)

You can have preset rotations if you need it represented differently in 3D space... Let's say it's a computer console in your bottom peripheral instead of directly in front of the camera.

In the base example everything has (0,0,0)position and (0,0,0)rotation. Just to keep things simple. Experience in 3d manipulation and 3d engine use will allow you to add on as required.

So we have our base positions of {0,0}rotation, [0,0,0] position. (Because game engines like having 3 rotational axis, make sure all 3 are 0 and you're always using the same axis for single axis manipulation).

So, if we declare the minimum to be 0 and maximum to be 360; were likely expecting this to be closer to a radio dial. Which means we want it to roll over to 0 after it passes 360. Some game engines clean this up automatically and some allow you to save rotations of as many as you can. But working with 1080000 degrees of rotation is cumbersome, so make sure you're modulous-ing 360 so that there's actual rollover.

If it's less than 360 degrees total; we likely want this to stop at either end. So a min/max rotation of (-90,90) should stop at those numbers and not rollover.

And this holds true for the positional switches as well. (Think sliders)

Now that we have the rotation down, in order to make it "realistic" and/or "easily readable by the code" there's two things we can add.

One is "State Labels" these have two purposes; one is to be able to automatically set a knob/switch to a predefined position through the code. And the second is that it's easier and less process intensive to use integers instead of floating points (for every single position a switch might be) or, if you need the granularity; some way to "truncate" or "change precision".

For realism; this looks like "detents". Switches that have clearly heard and felt clicks. Not all knobs, but I find a good *crunch* or *click* helps with the user-feedbacl as well.

I use a process/parameter/attribute known as "dampening".

The dampening value is your precision or granularity. It doesn't turn until [x force] is applied, and only moves in set increments.

The increments are great in case you don't want to manually label everything; you just define where a knob *can* be set and let the function call deal with spitting out/using those values.

So dampening has two parts; the input dampening, and the labeled output.

Instead of the Knob being 1:1 mapped to the mouse; you want some set force(speed or distance) before the switch will move.

So, in order to get that *good* click, you have to drag your mouse/finger a little longer before it actually moves. And then maybe it can move a bit freer depending on how you want it to present.

And then the other side of that is state-labels. Perhaps you want each "label" to actually be multiples of some value before saying it is a different position.

Like some older radios that don't actually volume up or down until you turn the dials a *bit*.

How does this relate to quantum mechanics; well the state-positional labels and dampening and can be helpful in understanding how to define quantum states.

In theory, each particle has a maximum rotation on two different axis {0,90}, and several values may be able to be defined based off a positional map you can compare the q-bit to.

And where dampening comes into play is that *precision* there's technically an infinite number of states that a q-bit can occupy, and we're not limited to 0 and 1. Were limited based on those two axis of rotation.

And with dampening we can say "well we only want it turned by this precision" and with state-labels we can say "well it's closer to this value, and that's the one we want to use instead of a more precise 30-digit irrational number."

And then use *those* values in further calculations or *whatever*.

Play with dials!

1 note

·

View note

Text

Week 9

This week, I will focus on integrating the ghost system with the leaderboard and its data class, so ghosts can be loaded from the main menu, and played in the next session.

After adding the extra members and methods I needed to add ghost data to this class, the data is saved as it was before, as the class data is saved without any extra input from me, which was nice to see.

I am feeling happy that I took the time to create a robust saving system which can take any type of class data, because this means it now doesn’t matter what I put in the classes which are saved, as long as they can be serialized, it will work without any extra work from my behalf.

From here, all I now need to do is have the data be loaded from the main menu. I already have an options class present in the main menu, and in the main scene which save and load data, and the instance is static. This means that I will not have to introduce any more hard dependencies to any scripts, and I will be able to save and load ghost data using the options class instead. However, I do not want to be copying data. The ghost data is a large size, and copying in any case will take time. Since it is already saved through the leaderboard, and is saved In an array which is organized in the order it will be shown in on the leaderboard, I will be able to just store an index number of the data I wish to load.

When the player Is on the main menu, if you go to the leaderboard, the data which is loaded and organised is displayed on the screen. The buttons next to each entry only load if ghost data for that entry is available, and because the leaderboard is organised and displayed how the list is indxed, when a “Load Ghost” button is pressed, It passes its index value to the options class instead of the raw data. Then when the main scene is loaded into, the ghost replay class will access the optiom instance, which loads the saved options data at construction, and loads the ghost data at the index which was saved.

This system works, but I feel it is quite complex for what it acheives, and we are now bringing in separate systems for a single int value. On reflection, I could have saved this index value in the leaderboard save data class, and this would make a lot mores sense, since the data has to be loaded directly into the ghost class anyway.

Taking a step back and reviewing my implementation of what I create is becoming a useful tool to have as well as iterative development, as although iterative development yields quick results, it does not highlight improvements that can be made to the readability or layout of the code. Explaining my system, a form of Rubber Duck Debugging, is helping me to improve the code structure, and simplify design by explaining how we get to the end result, and finding optimizations to reduce complexity.

We have a testing session again next week, and me and the team have been discussing what we would like to try and get in the game for this time. One big taget is having a production level track in the game instead of the testing track I made for the first testing session. This should be a task for the artists, so I will not be taking part in this, but the feedback about the track we received in week 5 should be helpful in influencing the type of design we follow.

Another target is to get split screen integrated into the game. This task has been taken by another member of the team, and they said they would focus on the implementation of introducing a new object, new camera and try to get it working. I am skeptical for this step, as I am almost certain that the systems I have been developing only accomodates for single player. I am expecting my job to be a lot of refactoring, and so I am going to wait for the splitscreen functionality to be developed, and then use iterative development to work through the issues, and improve the systems to be able to manage multiple players.

Summary:

Ghost system has been integrated into the leaderboard data, and Is able to be saved and loaded with the leaderboard if It is a valid entry.

Option to load a ghost can be used on the leaderboard at the main menu, which is then used in the scene.

Taking the time to modulate and create sets of data classes is becoming very useful now I am developing more things which would benefit from being savable, and are usable by different classes.

On reflection, the implementation of this system could be improved by changing the way a ghost is saved and loaded, and would reduce dependencies in the system.

The target of split screen is being discussed, and I am apprehensive about the changes to the current set of systems we have, and how this will affect stability.

0 notes

Text

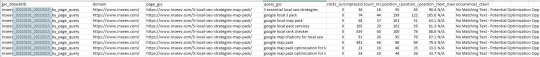

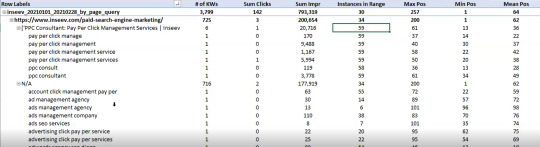

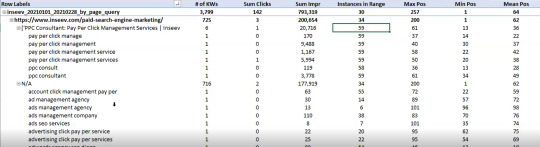

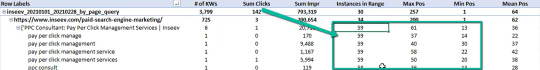

Page Level Query Analysis at Scale with Google Colab, Python, & the GSC API [Video Instructions Included]

The YouTube playlist referenced throughout this blog can be found here:6 Part YouTube Series [Setting Up & Using the Query Optimization Checker]

Anyone who does SEO as part of their job knows that there’s a lot of value in analyzing which queries are and are not sending traffic to specific pages on a site.

The most common uses for these datasets are to align on-page optimizations with existing rankings and traffic, and to identify gaps in ranking keywords.

However, working with this data is extremely tedious because it’s only available in the Google Search Console interface, and you have to look at only one page at a time.

On top of that, to get information on the text included in the ranking page, you either need to manually review it or extract it with a tool like Screaming Frog.

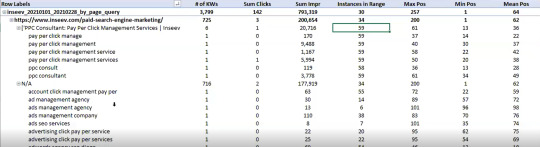

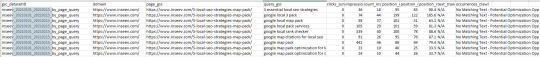

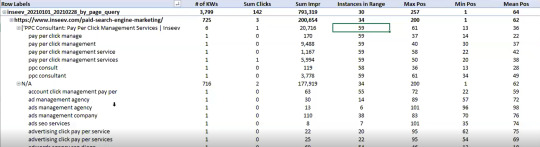

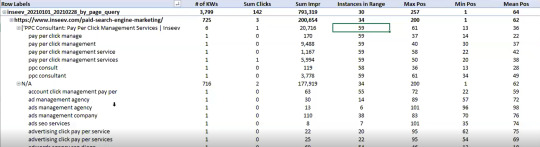

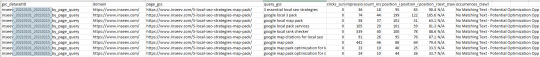

You need this kind of view:

…but even the above view would only be viable one page at a time, and as mentioned, the actual text extraction would have had to be separate as well.

Given these apparent issues with the readily available data at the SEO community’s disposal, the data engineering team at Inseev Interactive has been spending a lot of time thinking about how we can improve these processes at scale.

One specific example that we’ll be reviewing in this post is a simple script that allows you to get the above data in a flexible format for many great analytical views.

Better yet, this will all be available with only a few single input variables.

A quick rundown of tool functionality

The tool automatically compares the text on-page to the Google Search Console top queries at the page-level to let you know which queries are on-page as well as how many times they appear on the page. An optional XPath variable also allows you to specify the part of the page you want to analyze text on.

This means you’ll know exactly what queries are driving clicks/impressions that are not in your <title>, <h1>, or even something as specific as the first paragraph within the main content (MC). The sky's the limit.

For those of you not familiar, we’ve also provided some quick XPath expressions you can use, as well as how to create site-specific XPath expressions within the "Input Variables" section of the post.

Post setup usage & datasets

Once the process is set up, all that’s required is filling out a short list of variables and the rest is automated for you.

The output dataset includes multiple automated CSV datasets, as well as a structured file format to keep things organized. A simple pivot of the core analysis automated CSV can provide you with the below dataset and many other useful layouts.

… Even some "new metrics"?

Okay, not technically "new," but if you exclusively use the Google Search Console user interface, then you haven’t likely had access to metrics like these before: "Max Position," "Min Position," and "Count Position" for the specified date range – all of which are explained in the "Running your first analysis" section of the post.

To really demonstrate the impact and usefulness of this dataset, in the video below we use the Colab tool to:

[3 Minutes] — Find non-brand <title> optimization opportunities for https://www.inseev.com/ (around 30 pages in video, but you could do any number of pages)

[3 Minutes] — Convert the CSV to a more useable format

[1 Minute] – Optimize the first title with the resulting dataset

youtube

Okay, you’re all set for the initial rundown. Hopefully we were able to get you excited before moving into the somewhat dull setup process.

Keep in mind that at the end of the post, there is also a section including a few helpful use cases and an example template! To jump directly to each section of this post, please use the following links:

One-time setup of the script in Google Colab

Running your first analysis

Practical use cases and templates

[Quick Consideration #1] — The web scraper built into the tool DOES NOT support JavaScript rendering. If your website uses client-side rendering, the full functionality of the tool unfortunately will not work.

[Quick Consideration #2] — This tool has been heavily tested by the members of the Inseev team. Most bugs [specifically with the web scraper] have been found and fixed, but like any other program, it is possible that other issues may come up.

If you encounter any errors, feel free to reach out to us directly at [email protected] or [email protected], and either myself or one of the other members of the data engineering team at Inseev would be happy to help you out.

If new errors are encountered and fixed, we will always upload the updated script to the code repository linked in the sections below so the most up-to-date code can be utilized by all!

One-time setup of the script in Google Colab (in less than 20 minutes)

Things you’ll need:

Google Drive

Google Cloud Platform account

Google Search Console access

Video walkthrough: tool setup process

Below you’ll find step-by-step editorial instructions in order to set up the entire process. However, if following editorial instructions isn’t your preferred method, we recorded a video of the setup process as well.

As you’ll see, we start with a brand new Gmail and set up the entire process in approximately 12 minutes, and the output is completely worth the time.

youtube

Keep in mind that the setup is one-off, and once set up, the tool should work on command from there on!

Editorial walkthrough: tool setup process

Four-part process:

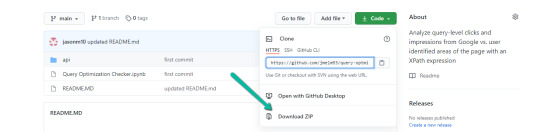

Download the files from Github and set up in Google Drive

Set up a Google Cloud Platform (GCP) Project (skip if you already have an account)

Create the OAuth 2.0 client ID for the Google Search Console (GSC) API (skip if you already have an OAuth client ID with the Search Console API enabled)

Add the OAuth 2.0 credentials to the Config.py file

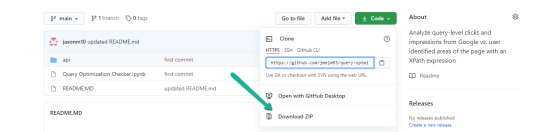

Part one: Download the files from Github and set up in Google Drive

Download source files (no code required)

1. Navigate here.

2. Select "Code" > "Download Zip"

*You can also use 'git clone https://github.com/jmelm93/query-optmization-checker.git' if you’re more comfortable using the command prompt.

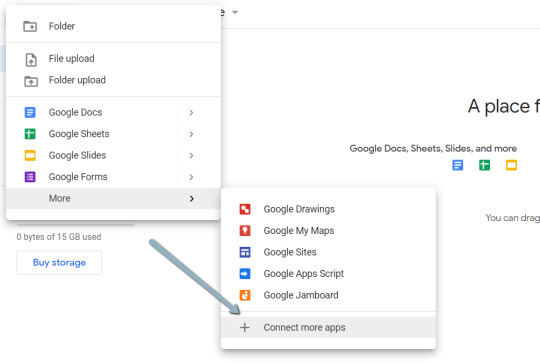

Initiate Google Colab in Google Drive

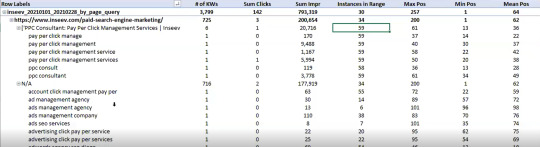

If you already have a Google Colaboratory setup in your Google Drive, feel free to skip this step.

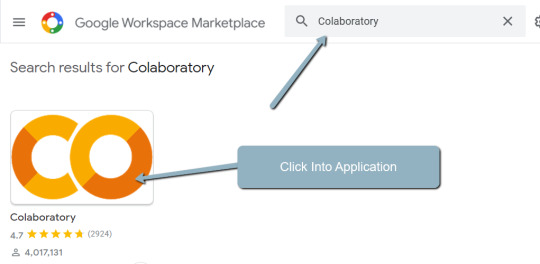

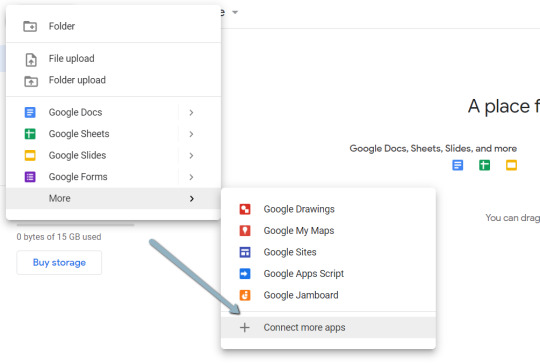

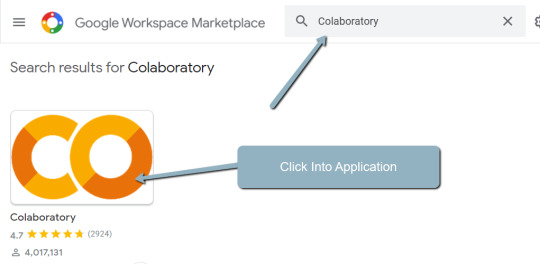

1. Navigate here.

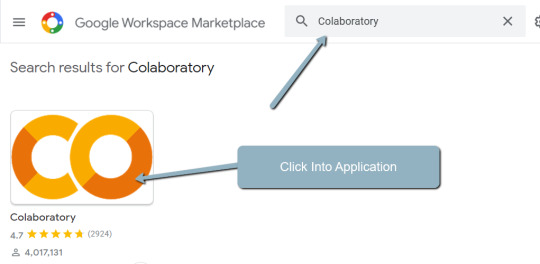

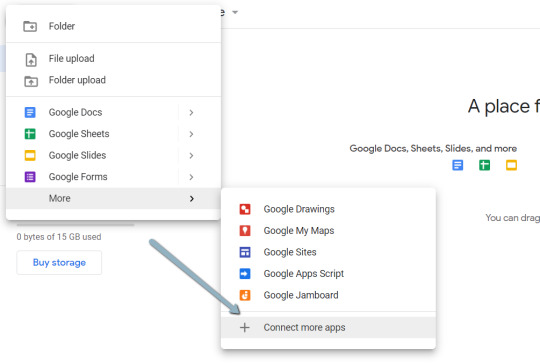

2. Click "New" > "More" > "Connect more apps".

3. Search "Colaboratory" > Click into the application page.

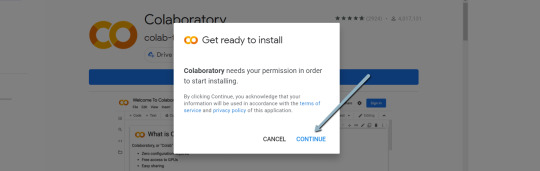

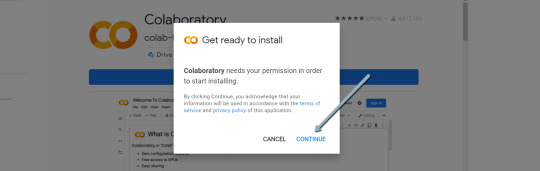

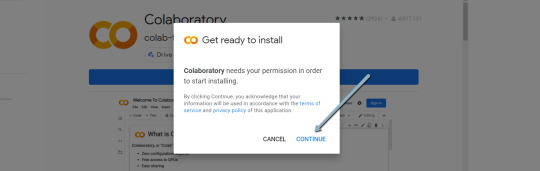

4. Click "Install" > "Continue" > Sign in with OAuth.

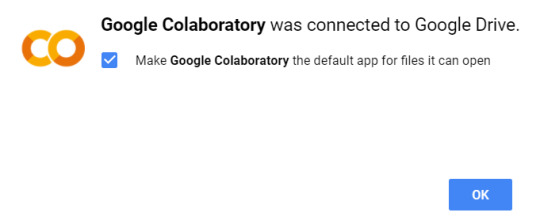

5. Click "OK" with the prompt checked so Google Drive automatically sets appropriate files to open with Google Colab (optional).

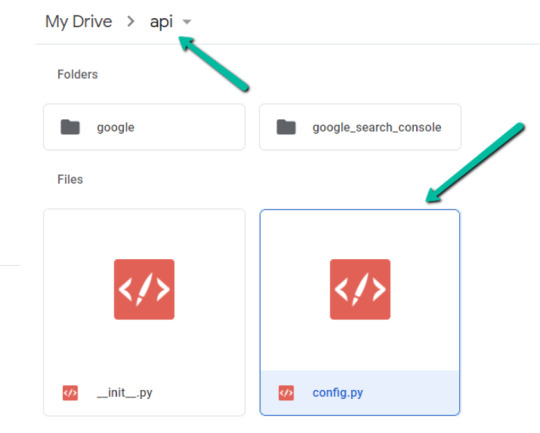

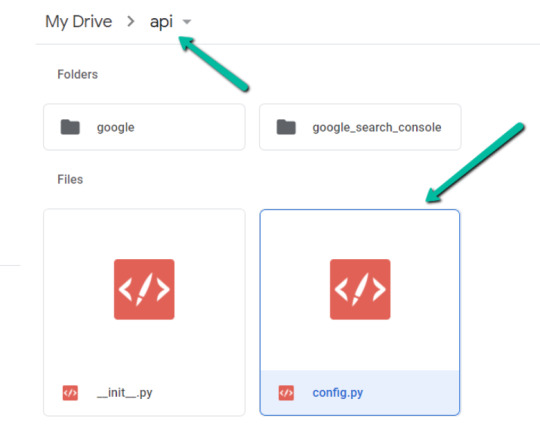

Import the downloaded folder to Google Drive & open in Colab

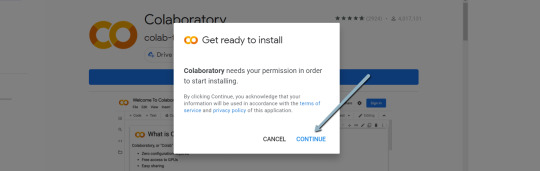

1. Navigate to Google Drive and create a folder called "Colab Notebooks".

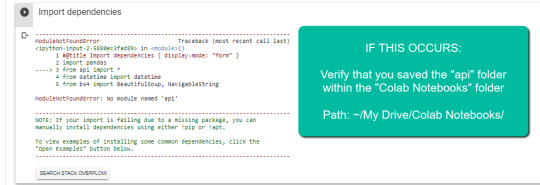

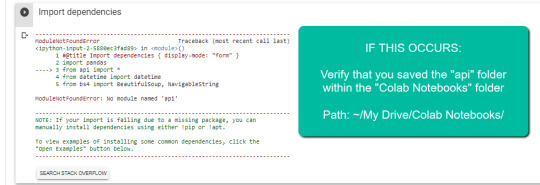

IMPORTANT: The folder needs to be called "Colab Notebooks" as the script is configured to look for the "api" folder from within "Colab Notebooks".

Error resulting in improper folder naming.

2. Import the folder downloaded from Github into Google Drive.

At the end of this step, you should have a folder in your Google Drive that contains the below items:

Part two: Set up a Google Cloud Platform (GCP) project

If you already have a Google Cloud Platform (GCP) account, feel free to skip this part.

1. Navigate to the Google Cloud page.

2. Click on the "Get started for free" CTA (CTA text may change over time).

3. Sign in with the OAuth credentials of your choice. Any Gmail email will work.

4. Follow the prompts to sign up for your GCP account.

You’ll be asked to supply a credit card to sign up, but there is currently a $300 free trial and Google notes that they won’t charge you until you upgrade your account.

Part three: Create a 0Auth 2.0 client ID for the Google Search Console (GSC) API

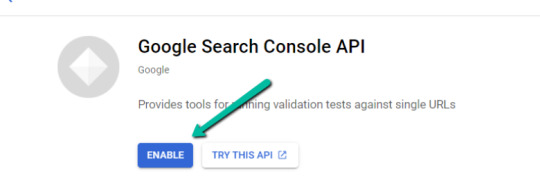

1. Navigate here.

2. After you log in to your desired Google Cloud account, click "ENABLE".

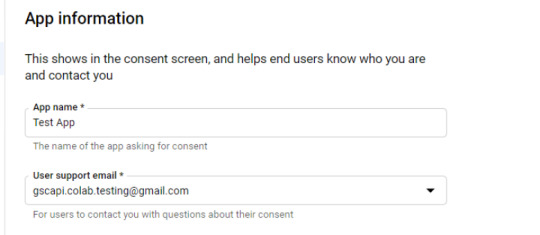

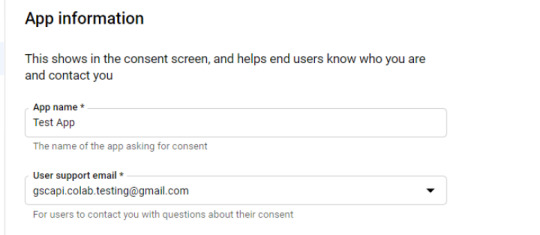

3. Configure the consent screen.

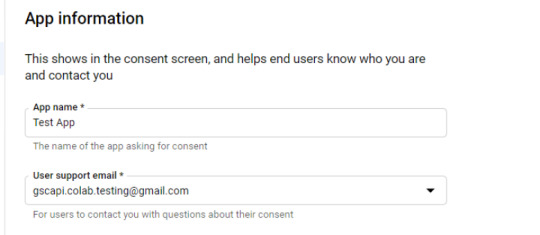

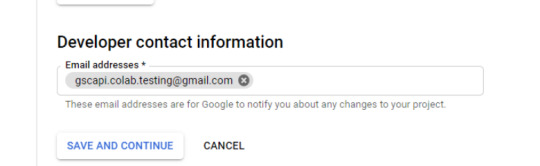

In the consent screen creation process, select "External," then continue onto the "App Information."

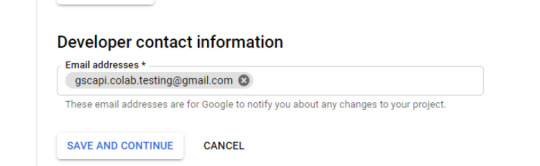

Example below of minimum requirements:

Skip "Scopes"

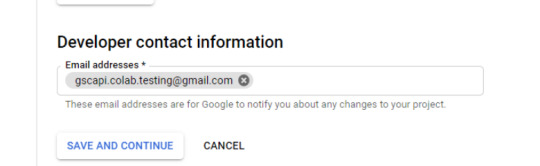

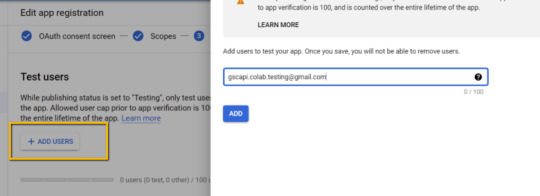

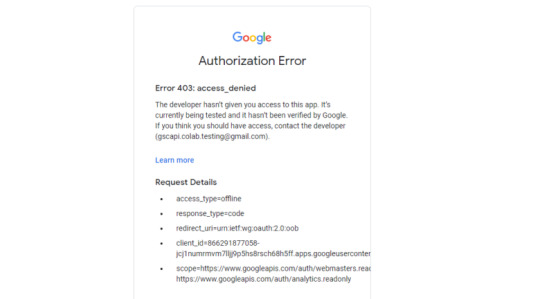

Add the email(s) you’ll use for the Search Console API authentication into the "Test Users". There could be other emails versus just the one that owns the Google Drive. An example may be a client’s email where you access the Google Search Console UI to view their KPIs.

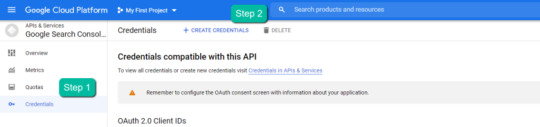

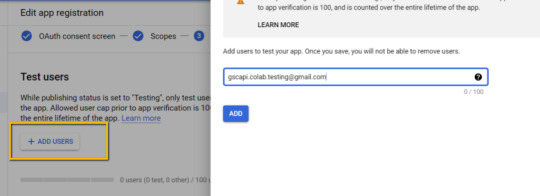

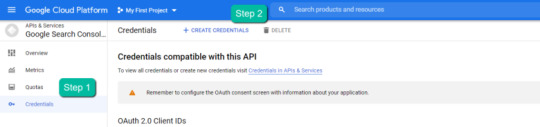

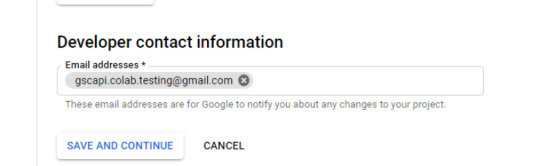

4. In the left-rail navigation, click into "Credentials" > "CREATE CREDENTIALS" > "OAuth Client ID" (Not in image).

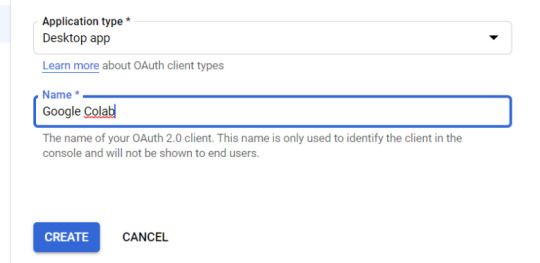

5. Within the "Create OAuth client ID" form, fill in:

Application Type = Desktop app

Name = Google Colab

Click "CREATE"

6. Save the "Client ID" and "Client Secret" — as these will be added into the "api" folder config.py file from the Github files we downloaded.

These should have appeared in a popup after hitting "CREATE"

The "Client Secret" is functionally the password to your Google Cloud (DO NOT post this to the public/share it online)

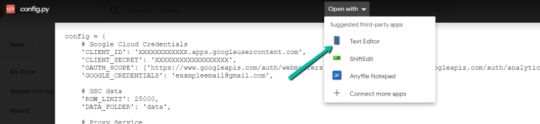

Part four: Add the OAuth 2.0 credentials to the Config.py file

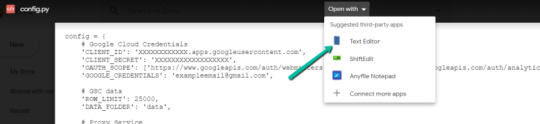

1. Return to Google Drive and navigate into the "api" folder.

2. Click into config.py.

3. Choose to open with "Text Editor" (or another app of your choice) to modify the config.py file.

4. Update the three areas highlighted below with your:

CLIENT_ID: From the OAuth 2.0 client ID setup process

CLIENT_SECRET: From the OAuth 2.0 client ID setup process

GOOGLE_CREDENTIALS: Email that corresponds with your CLIENT_ID & CLIENT_SECRET

5. Save the file once updated!

Congratulations, the boring stuff is over. You are now ready to start using the Google Colab file!

Running your first analysis

Running your first analysis may be a little intimidating, but stick with it and it will get easy fast.

Below, we’ve provided details regarding the input variables required, as well as notes on things to keep in mind when running the script and analyzing the resulting dataset.

After we walk through these items, there are also a few example projects and video walkthroughs showcasing ways to utilize these datasets for client deliverables.

Setting up the input variables

XPath extraction with the "xpath_selector" variable

Have you ever wanted to know every query driving clicks and impressions to a webpage that aren’t in your <title> or <h1> tag? Well, this parameter will allow you to do just that.

While optional, using this is highly encouraged and we feel it "supercharges" the analysis. Simply define site sections with Xpaths and the script will do the rest.

youtube

In the above video, you’ll find examples on how to create site specific extractions. In addition, below are some universal extractions that should work on almost any site on the web:

'//title' # Identifies a <title> tag

'//h1' # Identifies a <h1> tag

'//h2' # Identifies a <h2> tag

Site Specific: How to scrape only the main content (MC)?

Chaining Xpaths – Add a "|" Between Xpaths

'//title | //h1' # Gets you both the <title> and <h1> tag in 1 run

'//h1 | //h2 | //h3' # Gets you both the <h1>, <h2> and <h3> tags in 1 run

Other variables

Here’s a video overview of the other variables with a short description of each.

youtube

'colab_path' [Required] – The path in which the Colab file lives. This should be "/content/drive/My Drive/Colab Notebooks/".

'domain_lookup' [Required] – Homepage of the website utilized for analysis.

'startdate' & 'enddate' [Required] – Date range for the analysis period.

'gsc_sorting_field' [Required] – The tool pulls the top N pages as defined by the user. The "top" is defined by either "clicks_sum" or "impressions_sum." Please review the video for a more detailed description.

'gsc_limit_pages_number' [Required] – Numeric value that represents the number of resulting pages you’d like within the dataset.

'brand_exclusions' [Optional] – The string sequence(s) that commonly result in branded queries (e.g., anything containing "inseev" will be branded queries for "Inseev Interactive").

'impressions_exclusion' [Optional] – Numeric value used to exclude queries that are potentially irrelevant due to the lack of pre-existing impressions. This is primarily relevant for domains with strong pre-existing rankings on a large scale number of pages.

'page_inclusions' [Optional] – The string sequence(s) that are found within the desired analysis page type. If you’d like to analyze the entire domain, leave this section blank.

Running the script

Keep in mind that once the script finishes running, you’re generally going to use the "step3_query-optimizer_domain-YYYY-MM-DD.csv" file for analysis, but there are others with the raw datasets to browse as well.

Practical use cases for the "step3_query-optimizer_domain-YYYY-MM-DD.csv" file can be found in the "Practical use cases and templates" section.

That said, there are a few important things to note while testing things out:

1. No JavaScript Crawling: As mentioned at the start of the post, this script is NOT set up for JavaScript crawling, so if your target website uses a JS frontend with client-side rendering to populate the main content (MC), the scrape will not be useful. However, the basic functionality of quickly getting the top XX (user-defined) queries and pages can still be useful by itself.

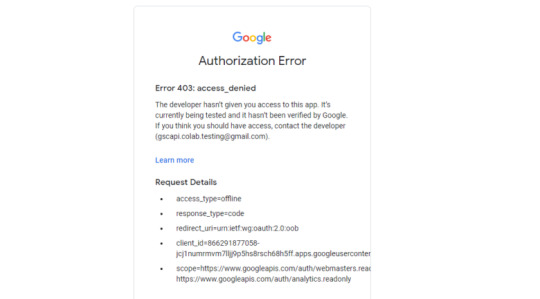

2. Google Drive / GSC API Auth: The first time you run the script i

1 note

·

View note

Photo

Hinge presents an anthology of love stories almost never told. Read more on https://no-ordinary-love.co

320 notes

·

View notes

Text

Web Technologies Every Web Developer Must Understand

Website development is not a path that you master and study. This is really a skill that needs constant education, and there's no end to information . The tendencies around you shift, person behavior changes, Google varies, and ontop of it all, new website technology are released daily. In website development, you've got to deal with these changes and trends in the event that you'd like to keep at the very best, and that's precisely what we will discuss now.

Web developers have been growing in quantity in a rapid pace. The truth is that website development is flourishing with a considerably high amount of applications development companies are attempting to build up applications for brands from all around the world. Look about, and you will notice that nearly everyone is hoping to become one because the demand out there's high. In this kind of circumstance, you will need to produce strategies to make yourself stand out and also be unique. Speaking of that, the ideal thing to do is always to stay updated on the web systems which are taking over the web in the moment. Rivalscan could be the better instruments for identify product competitor.

HTML and CSS

The exact first thing that you need to master is always HTML, also in the event that you wish to understand the way HTML works, effectively, then you ought to have your hands on CSS also. For those that don't understand, HTML may be the main reason you get to see suitable benefits on the web internet browser when you input into a question. So far as CSS is concerned, very well, it stands for Cascading Style Sheets, and that's what clarifies how HTML components are executed onto the screen. Don't worry because you can easily locate CSS and HTML complete guides on the internet. It truly is only that the more sooner you master this web technological innovation, the greater it is going to soon be. In the event you wish to search a web site and also will need to know your competitions, then Rivalscan assist you to to do so. Whether you would like to find trustworthy prospecting sales, you have to go https://rivalscan.com/blog/find-websites-using-competitors-products-with-rivalscan/ website. It is the absolute most trustworthy webpage which assist you great deal for your requirements.

Programming Languages

Being a website programmer, you probably already know the value of creating etc., code . notably within website design. Different programming languages incorporate Java Script, Go, Ruby, PHP, and even objective c. Each terminology serves an alternate logic and syntax, that will be something which you should know. Notably the PHP terminology is popular, therefore it's better in the event that you choose a peek out of it.

Frameworks

Frameworks are actually indispensable since they create all of the programming languages and also the construction work way more accessible than you can see right now. You don't have to be worried about all of these repetitive tasks and difficult programming languages after you learn a few frameworks. Typically the most widely used one which is being used in these times is WordPress. It's a material management system which's developed on PHP.

Databases

Your information has been stored on data bases. You can find two sorts of these, one can be SQL, and the other person is NoSQL. The two are crucial for you as programmers mainly since SQL can help in making certain the information is validated as well as fix. About the other hand, with NoSQL, you can enjoy great versatility for maintaining applications and building them. There Are Lots of SQL and No SQL Data Bases to understand, but the Principal ones include Oracle, Redis, Mysql, along with MongoDB.

Frontend

For your front-end, you want some intensive knowledge about HTML, CSS, and JavaScript. These are things that play a significant part in establishing a website to the consumers.

Backend

The back-end web development comprises the database and servers. This may be actually the area where most of the functions are taken out, and all of the info manipulation is carried out with out giving a hint to the end customers.

Chatbots

Probably one among the absolute most hyped technology appropriate now is the fact that of chatbots, as a web developer, you want to own some grip on it because using chatbots is all about making matters simpler and much far more convenient for the customers. A powerful on-line support system can do amazing things for organization because people adore getting responses to their own inquiries directly out when they make one.

These really are some website technology which certainly are a must-learn to you in the event you want to stick out being a web programmer. This checklist doesn't end herein actuality, there's a lot longer to website development you have to consider. It's going to take you a bit of time to perfect this particular skill, but what's crucial is to keep your passion for finding out alive, after which most of the matters will probably fall right into place for you automatically.

1 note

·

View note

Text

Previewing images in and out of SLIME REPL

As any Common Lisp coder knows, a REPL is an incredibly useful tool. It can be used not just for development, but for running all sorts of tasks. Personally, I don’t bother making my Lisp tools into executable scripts and just run them directly from SLIME. As such, any operation that requires leaving the REPL is quite inconvenient. For me, one such operation was viewing image files, for example in conjunction with my match-client:match tool. So lately I’ve been researching various methods to incorporate this functionality into the normal REPL workflow. Below, I present 3 methods that can be used to achieve this.

Open in external program

This one’s easy. When you want to view a file, launch an external process with your favorite image viewer. On Windows a shell command consisting of the image filename would launch the associated application, on Linux it’s necessary to provide the name of the image viewer.

(defvar *image-app* nil) ;; set it to '("eog") or something (defun view-file-native (file) (let ((ns (uiop:native-namestring file))) (uiop:launch-program (if *image-app* (append *image-app* (list ns)) (uiop:escape-shell-token ns)))))

Note that uiop:launch-program is used instead of uiop:run-program. The difference is that launch- is non-blocking - you can continue to work in your REPL while the image is displayed, whereas run- will not return until you close the image viewer.

Also note that when the first argument to run/launch-program is a string, it is not escaped, so I have to do it manually. And if the first argument is a list, it must be a program and a list of its arguments, so merely using (list ns) wouldn't work on Windows.

Inline image in REPL

The disadvantage of the previous method is that the external program might steal focus, appear on top of your REPL and disrupt your workflow. And it’s well known that Emacs can do everything, including viewing images, so why not use that?

In fact, SLIME has a plugin specifically for displaying images in REPL, slime-media. However it's difficult to find any information on how to use it. Eventually I figured out that SWANK (SLIME's CL backend) needs to send an event :write-image with appropriate arguments and slime-media’s handler will display it right in the REPL. The easiest way is to just send the file path. The second argument is the resulting image’s string value. If you copy-paste (sorry, “kill-yank”) it in the repl, it would act just like if you typed this string.

(swank::send-to-emacs '(:write-image "/path/to/test.png" "test"))

You can even send raw image data using this method. I don’t have anything on hand to generate raw image data so here’s some code that reads from a file, converts it to a base64 string and sends it over SWANK.

(with-open-file (in "/path/to/test.png" :direction :input :element-type '(unsigned-byte 8)) (let* ((arr (make-array (file-length in) :element-type '(unsigned-byte 8))) (b64 (progn (read-sequence arr in) (cl-base64:usb8-array-to-base64-string arr)))) (swank::send-to-emacs `(:write-image ((:data ,b64 :type swank-io-package::png)) "12345"))))

Note that the first argument to :write-image must be a list with a single element, which is itself a plist containing :data and :type keys. :data must be a base64-encoded raw image data. :type must be a symbol in swank-io-package. It's not exactly convenient, so if you're going to use this functionality a helper function/macro might be necessary.

Image in a SLIME popup buffer

Inline images are not always convenient. They can't be resized, and will take up as much space as is necessary to display them. Meanwhile EMACS itself has a built-in image viewer (image-mode) which can fit images to width or height of a buffer. And SLIME has a concept of a "popup buffer" which is for example used by macroexpander (C-c C-m) to display the result of a macro expansion in a separate window.

Interestingly, slime-media.el defines an event :popup-buffer but it seems impossible to trigger it from SWANK. It is however a useful code reference for how to create the popup buffer in ELisp. This time we won't bother with "events" and just straight up execute some ELisp code using swank::eval-in-emacs. However by default, this feature is disabled on Emacs-side, so you'll have to set Emacs variable slime-enable-evaluate-in-emacs to t in order for this method to work.

Also Emacs must be compiled with ImageMagick for the resizing functionality to work.

Anyway, the code to view file in the popup buffer looks like this:

(defun view-file-slime (file &key (bufname "*image-viewer*")) (let ((ns (namestring file))) (swank::eval-in-emacs `(progn (slime-with-popup-buffer (,bufname :connection t :package t) (insert-image (create-image ,ns)) (image-mode) (setf buffer-file-name ,ns) (not-modified) (image-toggle-display-image)) ;; try to resize the image after the buffer is displayed (with-current-buffer ,bufname (image-toggle-display-image)))))) ))

Arriving to this solution has required reading image-mode's source code to understand what exactly makes image-mode behave just like if the image file was opened in Emacs via C-x C-f. First off, image-mode can be a major and a minor mode - and the minor mode is not nearly as useful. slime-with-popup-buffer has a :mode keyword argument but it would cause image-mode to be set before the image is inserted, and it will be a minor mode in this case! Therefore (image-mode) must be called after insert-image.

Next, the buffer must satisfy several conditions in order to get image data from the filename and not from the buffer itself. Technically it shouldn't be necessary, but I couldn't get auto resizing to work when data-p is true. So I set buffer-file-name to image's filename and set not-modified flag on.

Next, image-toggle-display-image is called to possibly resize the image according to image-mode settings. It’s called outside of slime-with-popup-buffer for the following reason: the buffer might not yet be visible and have any specific dimensions assigned to it, and therefore resizing will do nothing.

Here's an example of how calling this function looks in Emacs.

The position of the popup buffer depends on whether the original Emacs window is wide enough or not. I think it looks better when it's divided vertically. Use M-x image-transform-fit-to-height or M-x image-transform-fit-to-width to set up the auto-resizing method (it gets remembered for future images). Unfortunately there's no way to fit both height and width, at least with vanilla Emacs. I prefer fit-to-width because in case the image is too tall, it is possible to scroll the image vertically with M-PgDn and M-PgUp from the other buffer. Unlike other image-mode buffers, this buffer supports a shortcut q to close itself, as well as various SLIME shortcuts, for example C-c C-z to return to the REPL.

That’s it for now, hope you enjoyed this overview and if you happen to know a better way to display images in Emacs, I would be interested to hear about it.

4 notes

·

View notes

Text

[Second week of 2019.9] White Spirit devlog - Story about me and Unity Custom Editor

Hi, there!

I think I finished 75% of the event editor v2 from two weeks ago to today. The rest is left to port the actual behavior and the previous version of the event. So next week I’ll be implementing a new game tactical mechanism and Poi’s animation work.

This time, let’s talk about the experiences that went through making the event editor v2.

Why I start rework the editor

It was when I moved my camera system to the Cinemachine. And now I was working on adding a new feature to the event editor that I created. However…

Up to this point, the editor I created was too dependent on one class, which was a problem with the scalability of IMGUI. The problem was laying out the layout by executing commands in the code. In addition, the actual data of the event was very difficult to store and manage. So this time, I decided to start building an editor that was fully scalable.

—————

Things to consider while creating a new editor

I wanted to have event data stored and managed in the scene again. So I used data management via SerializedObject in a custom editor.

Unity Editor stores and manages the data for the game. For example, values written in everything in the scene and in the various components of the game object. I call Unity Serializer the way Unity Editor stores and manages data.

Unity Serializer also plays an important role in custom editors. However, while using the Unity Serializer, I encountered many difficulties. Below are some of the things I had to consider big.

1. Only simple types can be stored.

For example, only arrays and List types that are simple types, such as bool, int, float, and string, to Unity’s simple Vector types, etc.

2. Must be a field of accessible instance.

The Unity Serializer can serialize only with a public field or [SerializeField] attribute. So static and const fields cannot be serialized. Instead, I recommended to use public data using ScriptableObject.

3. To serialize the class, add the [Serializable] attribute.

You must use the Serializable attribute of System, the .NET default namespace, for the class you want to serialize. However, the fields in the class must meet the above conditions for serialization.

4. It does not support class polymorphism.

Because of this, Unity’s Serializer only serializes to its parent class. It’s doesn’t do serialize as a child type.

5. Nested data cannot exceed 7 levels deep.

Unity Serializer does not serialize data beyond 7 levels of depth for faster load and optimization. Some example, when a serializable Node class, and it’s have a field of type Node in it, you will see this warning. You can store a Node in a Node field, but you can’t do this more than seven times.

- Node field ㄴ Node field ㄴ Node field ㄴ Node field ㄴ Node field ㄴ Node field ㄴ Node field X Node field

Because of this problem, I even refactored my new editor three times…

Also there are other limitations as well, so I recommend reading the official documentation for this.

https://docs.unity3d.com/Manual/script-Serialization.html

—————

I’ll use polymorphism somehow!

I wanted to use polymorphism somehow to put information into the event list and use it. Older Event editors had to put arrays into serialized data and parse them by number directly because of this problem.

This time I used Json.

And here is Json.NET, the familiar Json utility library for .NET.

My team homepage server apps, which is currently running, also uses Json.NET.

https://www.newtonsoft.com/json

…in fact, it’s a library natively provided by ASP.NET Core.

https://assetstore.unity.com/packages/tools/input-management/json-net-for-unity-11347

I have taken advantage of three features of Json.NET to store and process various values in the Unity Serializer. JToken(JObject, JArray) and JsonConvert’s SerializeObject and PopulateObject.

—————

Polymorphism and Json.NET

I first created the top-level class of the event for polymorphic implementation, and added a ‘type’ string field to distinguish it when parsing with Json.NET.

I implemented the process of getting the 'type’ field first using JToken and then unboxing it with an instance of the type using the ToObject method.

Oh… so, how do I tell which event types are there?

I was in trouble for a while.

I had to build an environment that would work the same in the editor, the test play, and even after the build.

First of all, I needed to work in three different environments, so I started looking for features that could be run first.

In the Unity Editor, there was an attribute that could attach to a static method called InitializeOnLoad…

https://docs.unity3d.com/ScriptReference/InitializeOnLoadMethodAttribute.html

At runtime (test, build), I found an attribute called RuntimeInitializeOnLoadMethod.

It can also be attached to static methods.

The parameter was able to decide whether to run before and after the scene was loaded.

I used RuntimeInitializeLoadType.BeforeSceneLoad because I wanted it to run first.

https://docs.unity3d.com/ScriptReference/RuntimeInitializeOnLoadMethodAttribute-ctor.html

But that’s not done here yet, because I guesses the editor namespace will gone after the build, so I’ve come up with an odd class design in anticipation of throwing an exception.

The RegisterEventType method is a singleton static Dictionary field that has a type character for the key and a generic type for the value.

This will register the event for global use.

So I could use JToken to parse polymorphic classes in any environment (Editor, Test, Build).

—————

Put the data in Unity!

The event system I’m going to use requires the Json string (value), the reference array to be used by the event, and information for nested events, because of the polymorphic implementation above.

Nested events are used for forms such as conditional statements and options dialogues.

Initially I wanted to implement nested events through several List, but this was not possible due to the 7-level depth limitation of the Unity Serializer, so I fixed it by keeping the branch offsets (layerOffsets) in one-dimensional arrays (values).

And now I have make some sample event data pre-loaded for display and testing in the editor.

It helped me moderately(…) to write the sample data using the implicit operator.

—————

Unity UIElements

I decided to use UIElements this time.

UIElements is a new editor feature added since 2019.1.

https://blogs.unity3d.com/2019/04/23/whats-new-with-uielements-in-2019-1/

This allows Unity to write layout code (uxml), style sheets (uss) and decorate the editor just like WPF or web development.

And the editor of the event system began to devise several backend designs to overcome the difficulties of scaling.

—————

Basics of UIElements

UIElements consist of layout and code like HTML or WPF.

And it can dynamically manage UI elements in code.

Unity begins by importing the layout and stylesheets from the code and sending them to editor.

The above code is the code to build the layout through the file path of uxml and uss.

The reason for using pattern matching style in uss is that we have an attribute that returns null if there is a code error in uss.

And dynamic parts of the UI can use the Manifulator to manage UI events.

When creating an event, the Manifulator class is inherited and configured. This class is implemented by instantiating a parameter with the AddManifulator method on the VisualElement that you want to register.

VisualElement is the top level UI element of UIElements.

The above code is the code that calls the SelectEvent method when the UI element is clicked.

—————

My layout representation rules

First of all, to express the list of events, I expressed only Unity’s basic type, and realized that VisualElement, the basic type of UIElements element, is an inheritable type. EventListItemElement and EventListItemLayerElement were inherited.

Each was an element class to represent a single element of an event and a subelement of a nested event.

When I realized that VisualElement was inheritable, I started to extend the functionality of the element.

EventListItemElement manages the detailed data of the event and EventListItemLayerElement checks the child event elements and creates the EventListItemElement.

I created a structure to track the data so that it can be seen correctly by the user. (Green, red lines)

JsonConvert.SerializeObject is used when data is updated, and JsonConvert.PopulateObject is used when data is changed internally (Undo [Ctrl + Z], Redo [Ctrl + Y], etc).

Now I just need to build a layout that allows you to modify the event’s information.

By the way, the initial form of event code I mentioned in Polymorphism and Json.NET part had a comment in code that says ‘Editor specific features…’.

If you haven’t seen it, scroll up and check it out!

There, when the EventListItemElement clicks itself, the code inside it implements the inspector.

Designing like this means that when I add or modify an event, simply edit the code in the actual event class!

I confirmed this by creating a Helloworld event as a test.

Fixing the data and undoing and redoing also worked well.

So after confirming that everything worked well, I started to implement the rest of the editor. Add, delete, copy, paste, cut events…

The add event button allows the user to position where the user wants to add the event via the cursor position.

The placing 'Create event’ button was implemented by calling each EventListItemElement.

However, because UIElements events is called from bottom to top, I registered TrickleDown to face down so that when I register UI events, it works from top to bottom.

I used GenericMenu for add event function. And to organize the list, we used the event registration function that we created earlier to organize the menu.

So that’s all I did to create the event list layout. It’s a very long journey…

—————

What to do next…

UIElements was a new feature that I felt familiar with while developing web development and WPF apps. But working with other limitations of Unity wasn’t easy.

From next week onwards, I’ll be porting the previous event every minute and spending the rest of the time drawing the background and Poi’s flying animation.

And implementing new flight techniques.

I started this on July 7, and it was pretty hard but it was over.

Phew…

See you in next week.

1 note

·

View note

Text

Introduction to Frameworks

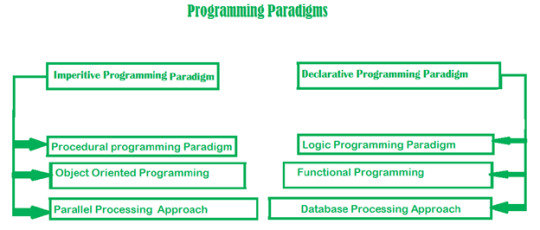

Programming Paradigms

A programming paradigm is a style or way of programming .Paradigms can be classified according to their characteristics and features. There are some common paradigms like structured , non-structured , functional , object oriented etc. Programming paradigms can be also termed as an approach to solve some problem or do some tasks using some programming languages.

Declarative and Imperative Paradigms

Both declarative and imperative paradigms are programming paradigms. But there is a difference between 2 paradigms

Declarative Paradigm- Express the logic of computation without expressing its control flow. With declarative programming we write the code that describes what we want but no need to explain the flow of execution step by step. This helps to minimize side – effects. Lisp , R are some well known languages for declarative approach. Many markup languages such as HTML, MXML, XAML, XSLT... are often declarative.

var results = collection.Where( num => num % 2 != 0);

In this example the implementation details have not been specified. One benefit of declarative programming is that it allows the compiler to make decisions that might result in better code than what you might make by hand.

· Imperative Paradigm - Use a sequence of code to explain flow of execution. When we use imperative approach the global state of system is changed. Since the state of the system is changed there are side – effects . The declarative programs can be dually viewed as programming commands or mathematical assertions.

eg:

var numbersOneThroughTen = new List<int> { 1, 2, 3, 4, 5, 6, 7, 8, 9, 10 };

//With imperative programming, we'd step through this, and decide what we want:

var evenNumbers = new List<int>();

foreach (var number in numbersOneThroughTen)

{ if (number % 2 == 0)

{

evenNumbers.Add(number);

}

}

//The following code uses declarative programming to accomplish the same thing.

// Here, we're saying "Give us everything where it's odd"

var evenNumbers = numbersOneThroughTen.Select(number => number % 2 == 0);

Difference Between Procedural and Functional Programming

Procedural and functional programming are programming paradigms. But there are differences between 2 paradigms.

Functional Programming is a style of building the structure and elements of computer programs that treats computation as the evaluation of mathematical functions and avoids changing-state and mutable data

Procedural Programming is derived from structured programming, based upon the concept of the procedure call. Procedures, also known as routines, subroutines, or functions (not to be confused with mathematical functions, but similar to those used in functional programming . simply contain a series of computational steps to be carried out.

· Functional Programming origins from Lambda calculus which has no side – effects but procedural programming has side effects cause to change the global state of system.

· Functional programming use declarative approach while the procedural programming uses imperative approach

· Functional programming focuses on expressions and the procedural programming focuses on statements.

· Functional programming is used to academia and procedural programming is used for commercial software development

Lambda expressions and Lambda calculus in functional programming

Lambda calculus is a framework to study computations with functions. In other hand Lambda Calculus is conceptually the simplest programming language in the world. 3 simple rules are used

1.All you have are functions , They are all anonymous

2.A function should take only ONE argument

3.A function should return a value

Two keywords ‘λ’ and ‘.’. And you can model any problem

Lamdba calculus includes three different types of expressions

1. E :: = x(variables)

2. | E1 E2(function application)

3. | λx.E(function creation)

“No side – effects “ and “Referential Transparency” in Functional Programming

No side – effects – In functional programming ,output only depends on the input. Execution of a function does not affect to the global state of a system .Also global state of a system does not affect to the result of a function

Referential Transparency -v The expression “ Referential Transparency” is used in different domains. In mathematics referential transparency is the property of expressions that can be replaced by other expressions having the same value without changing the result in anyway

X = 2 + (3 *4)

After applying referential transparency

X = 2 + 12

In functional programming referential transparency is used for programs with the meaning of “replace equals with equals”.

eg:-

int globalValue = 0;

int rq(int x) { globalValue++;

return x + globalValue; }

int rt(int x) { return x + 1; }

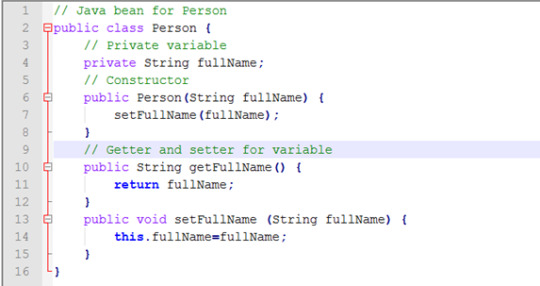

Key Features of Object Oriented Programming

Object oriented programming is a programming paradigm based on the concept of “objects” which may contain data , in the form of fields. Since OOP is a structured programming paradigm there are many advantages and key features like inheritance polymorphism , abstraction etc. Java , C++ , C# are well – known OOP languages.

Class is a abstract definition of data type . further more class can be defined as an entity that determines how an object will behave and what the object will contain. In other words, it is a blueprint or a set of instruction to build a specific type of object. A class includes properties and methods.

Object is a specific instance of a class. Objects have states and behaviours .

Eg:- Person p = new Person(“ Drake”);

The concept of a data class makes it possible to define subclasses of data objects that share some or all of the main class characteristics. Called inheritence, this property of OOP forces a more thorough data analysis, reduces development time, and ensures more accurate coding.

The concept of data classes allows a programmer to create any new data type that is not already defined in the language itself

Encapsulation describes the idea of bundling data and methods that work on that data within one unit, e.g., a class in Java.

Abstraction is a process that show only “relevant” data and “hide” unnecessary details of an object from the user

How the event – driven programing is different from other paradigms?

Event – driven programming focuses on events(user events , schedulers , timers , hardware interrupts) that are triggered outside the system and mostly related to systems with GUI elements where the users can interact with GUI elements

An internal event loop(main loop ) is used to identify user events and the necessary handlers. But in other programming paradigms no changes are entertained. Event driven programming has a procedure which is dependent on occurrence of events while the other programming paradigms have modular approach. Event driven programming is bit complex to understand in logic. But most of the other programming paradigms are easy to understand.

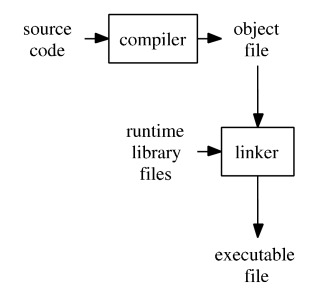

Compiled , Markup and Scripting Languages

Programming languages can be classified according to the way they are processed and executed. Programming languages are used to control the behavior of a machine(computer).

Compiled Languages

A source code is compiled to a executable code. Source code is reduced to a set of machine-specific instructions before being saved as an executable file. Purpose of the compilation process is converting a source code from human-readable format into machine code. The benefits of using a compiler to compile code is that it generally runs faster than interpreted code as it doesn't need to work it out on the fly as the application is running. The compiled program has also been checked for errors whilst it is being compiled. This will enable you to fix all coding errors before getting a fully running program.

Scripting Languages

A source code is directly executed .There is no compilation process for scripting languages. The code is saved in the same format that you entered. In general, it is considered that interpreted code will run more slowly than compiled code because it has to actively perform the step of turning the code into something the machine can handle on the fly as opposed to compiled code which can just run. Interpreted code is always available for reading and it can be easily changed to work the way you want it to. With compiled code, you need to find where the code is kept, change it, compile it and redeploy the program.

Markup Language

A markup language is used to control the presentation of data and not to considered as a programming language. There is no compilation or interpretation process .The tools(web browser) that can understand the markup language can render the output. A markup language is used to describe the data and the formatting in a textual format. There is a general rule, that a markup language will not describe a process or an algorithm (like programming language does) but is just pure data.

Role of Virtual Machine

A virtual machine (VM) is an operating system (OS) or application environment that is installed on software, which imitates dedicated hardware. The end user has the same experience on a virtual machine as they would have on dedicated hardware.

Specialized software, called a hypervisor, emulates the PC client or server's CPU, memory, hard disk, network and other hardware resources completely, enabling virtual machines to share the resources

The use of virtual machines also comes with several important management considerations, many of which can be addressed through general systems administration best practices and tools that are designed to manage VMs

Several vendors offer virtual machine software, but two main vendors dominate in the marketplace: VMware and Microsoft

How the JS is executed?

An execution context is an abstract concept of an environment where the Javascript code is evaluated and executed. Whenever any code is run in JavaScript, it’s run inside an execution context.

Types of execution context

o Global Execution Context

This is the default or base execution context. The code that is not inside any function is in the global execution context. It performs two things: it creates a global object which is a window object (in the case of browsers) and sets the value of this to equal to the global object. There can only be one global execution context in a program.

o Function Execution Context

Every time a function is invoked, a brand new execution context is created for that function. Each function has its own execution context, but it’s created when the function is invoked or called. There can be any number of function execution contexts. Whenever a new execution context is created, it goes through a series of steps in a defined order

o Eval Function Execution Context

Every time a function is invoked, a brand new execution context is created for that function. Each function has its own execution context, but it’s created when the function is invoked or called. There can be any number of function execution contexts. Whenever a new execution context is created, it goes through a series of steps in a defined order.

o Execution Context Stack

Execution context stack is a stack data structure to store all the execution stacks created while executing the JS code. Global execution context is present by default in execution context stack and it is at the bottom of the stack. While executing global execution context code, if JS engines finds a function call, it creates functional execution context of that function and pushes that function execution context on top of execution context stack.

Web browsers can interpret the JavaScript code. In every web browser there is a javascript interpreter to parse and execute JavaScript code.

How the HTML is rendered?

HTML is a markup language. Web browser can be used to render the output of a markup language.

The primary function of a web browser is to request resources from the web and display them inside of a browser window. Typically a browser will request HTML, CSS, JavaScript and image content from a server and interpret them based on web standards and specifications(The way the browser interprets and displays HTML files is specified in the HTML and CSS specifications. These specifications are maintained by the W3C (World Wide Web Consortium) organization, which is the standards organization for the web). They follow standards because it allows websites to behave the same way across all browsers, and creates less work and fewer headaches for web developers.

Main components of a browser: the user interface , browser engine , rendering engine , networking , UI backend , javascript interpreter.

CASE tools for different software systems

IOT systems

1. Arduino -Arduino is an open-source prototyping platform based on easy-to-use hardware and software

2. Eclipse IOT Project - Eclipse is sponsoring several different projects surrounding IoT. They include application frameworks and services; open source implementations of IoT protocols and tools for working with Lua, which Eclipse is promoting as an ideal IoT programming language

3. Kinoma - Kinoma, a Marvell Semiconductor hardware prototyping platform encompasses three different open source projects

Difference between Frameworks ,Library , Plugin

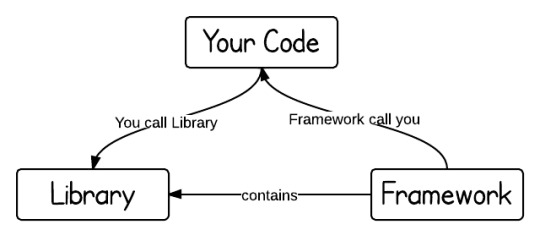

The key difference between a library and a framework is "Inversion of Control". When you call a method from a library, you are in control. But with a framework, the control is inverted: the framework calls you.

A library is just a collection of class definitions. The reason behind is simply code reuse, i.e. get the code that has already been written by other developers. The classes and methods normally define specific operations in a domain specific area. For example, there are some libraries of mathematics which can let developer just call the function without redo the implementation of how an algorithm works.

Plugin: Is a collection of few methods used to perform particular task.

A Plugin extends the capabilities of a larger application

f

1 note

·

View note

Text

Pandas Github

Pandas Challenge Github

Pandas Github

Github Pandas Tutorial

Panda Vpn Pro

Pandas Github Issues

Pandas Github License

Up to date remote data access for pandas, works for multiple versions of pandas.

< Data Indexing and Selection | Contents | Handling Missing Data >

Since Python 3.4, pathlib has been included in the Python standard library. Path objects provide a simple and delightful way to interact with the file system. The pandas-path package enables the Path API for pandas through a custom accessor.path.Getting just the filenames from a series of full file paths is as simple as myfiles.path.name. Dask uses existing Python APIs and data structures to make it easy to switch between NumPy, pandas, scikit-learn to their Dask-powered equivalents. You don't have to completely rewrite your code or retrain to scale up. A REST API based on Flask for serving Pandas Dataframes to Grafana. This way, a native Python application can be used to directly supply data to Grafana both easily and powerfully. It was inspired by and is compatible with the simple json datasource. Pandas is a fast, powerful, flexible and easy to use open source data analysis and manipulation tool, built on top of the Python programming language. Install pandas now!

One of the essential pieces of NumPy is the ability to perform quick element-wise operations, both with basic arithmetic (addition, subtraction, multiplication, etc.) and with more sophisticated operations (trigonometric functions, exponential and logarithmic functions, etc.).Pandas inherits much of this functionality from NumPy, and the ufuncs that we introduced in Computation on NumPy Arrays: Universal Functions are key to this.

Pandas includes a couple useful twists, however: for unary operations like negation and trigonometric functions, these ufuncs will preserve index and column labels in the output, and for binary operations such as addition and multiplication, Pandas will automatically align indices when passing the objects to the ufunc.This means that keeping the context of data and combining data from different sources–both potentially error-prone tasks with raw NumPy arrays–become essentially foolproof ones with Pandas.We will additionally see that there are well-defined operations between one-dimensional Series structures and two-dimensional DataFrame structures.

Ufuncs: Index Preservation¶

Because Pandas is designed to work with NumPy, any NumPy ufunc will work on Pandas Series and DataFrame objects.Let's start by defining a simple Series and DataFrame on which to demonstrate this:

If we apply a NumPy ufunc on either of these objects, the result will be another Pandas object with the indices preserved:

ABCD0-1.0000007.071068e-011.000000-1.000000e+001-0.7071071.224647e-160.707107-7.071068e-012-0.7071071.000000e+00-0.7071071.224647e-16

Any of the ufuncs discussed in Computation on NumPy Arrays: Universal Functions can be used in a similar manner.

UFuncs: Index Alignment¶

Pandas Challenge Github

For binary operations on two Series or DataFrame objects, Pandas will align indices in the process of performing the operation.This is very convenient when working with incomplete data, as we'll see in some of the examples that follow.

Index alignment in Series¶

As an example, suppose we are combining two different data sources, and find only the top three US states by area and the top three US states by population:

Let's see what happens when we divide these to compute the population density:

The resulting array contains the union of indices of the two input arrays, which could be determined using standard Python set arithmetic on these indices:

Pandas Github

Any item for which one or the other does not have an entry is marked with NaN, or 'Not a Number,' which is how Pandas marks missing data (see further discussion of missing data in Handling Missing Data).This index matching is implemented this way for any of Python's built-in arithmetic expressions; any missing values are filled in with NaN by default:

If using NaN values is not the desired behavior, the fill value can be modified using appropriate object methods in place of the operators.For example, calling A.add(B) is equivalent to calling A + B, but allows optional explicit specification of the fill value for any elements in A or B that might be missing:

Index alignment in DataFrame¶

A similar type of alignment takes place for both columns and indices when performing operations on DataFrames:

Notice that indices are aligned correctly irrespective of their order in the two objects, and indices in the result are sorted.As was the case with Series, we can use the associated object's arithmetic method and pass any desired fill_value to be used in place of missing entries.Here we'll fill with the mean of all values in A (computed by first stacking the rows of A):

The following table lists Python operators and their equivalent Pandas object methods:

Python OperatorPandas Method(s)+add()-sub(), subtract()*mul(), multiply()/truediv(), div(), divide()//floordiv()%mod()**pow()

Ufuncs: Operations Between DataFrame and Series¶

When performing operations between a DataFrame and a Series, the index and column alignment is similarly maintained.Operations between a DataFrame and a Series are similar to operations between a two-dimensional and one-dimensional NumPy array.Consider one common operation, where we find the difference of a two-dimensional array and one of its rows:

According to NumPy's broadcasting rules (see Computation on Arrays: Broadcasting), subtraction between a two-dimensional array and one of its rows is applied row-wise.

In Pandas, the convention similarly operates row-wise by default:

If you would instead like to operate column-wise, you can use the object methods mentioned earlier, while specifying the axis keyword:

Note that these DataFrame/Series operations, like the operations discussed above, will automatically align indices between the two elements:

This preservation and alignment of indices and columns means that operations on data in Pandas will always maintain the data context, which prevents the types of silly errors that might come up when working with heterogeneous and/or misaligned data in raw NumPy arrays.

< Data Indexing and Selection | Contents | Handling Missing Data >

Display pandas dataframes clearly and interactively in a web app using Flask.