Don't wanna be here? Send us removal request.

Text

Video Annotation: Transforming Visual Data into AI-Ready Insights

As AI technology develops, so does its ability to receive and interpret visual data. The emergence of autonomous vehicles maneuvering around congested roads and security systems recognizing potential threats in real-time, AI understanding video data is transforming businesses across the globe. For AI to interpret visual data efficiently, it needs one essential piece of information: annotated video data.

Video annotation is a key aspect in converting unstructured visual data into structured information, which AI systems can understand, learn and utilize. Annotating video data is an important part of training machine learning (ML) models to perform tasks such as object detection, facial recognition, and activity recognition.

What is Video Annotation?

Video annotation is simply the act of adding labels or metadata to the frames or specific objects in a video file. These annotations are important to help AI models identify certain features, actions, or patterns in the video and by tagging important subjects or objects within the video such as people, objects, cars, and specific actions which could include gestures and other event, annotated video files become powerful training materials for AI systems.

Video annotation, in contrast to image annotation, incorporates the unique challenges inherent in moving imagery, including tracking objects over time, among other considerations requiring specific methodologies to confirm the validity of this analysis.

Why is Video Annotation Important for AI?

The significance of video annotation for AI should not be underestimated, for this may serve enhanced purposes as follows:

Augmenting Visual Comprehension for AI Models: In order to analyze video material, AI needs to clearly "understand" the significance of what it is seeing in each frame and over time.

Enhancing the Referencing of Objects and Activities: Whether you are observing videos of pedestrians using Autonomous Vehicles or a video recording of suspected fraudulent activity, annotated video data can facilitate the distinction of object activities or referring to certain objects or activities.

Training for Complex Situations: Certain applications like medical imaging or sports analytics have data that involves complex scenes that require understanding context, sequences of events, and behaviors. Annotated video datasets provide a structure for building AI systems that not only learn from a single frame but also learn from the relationships of frames.

Powering Action Recognition and Event Detection: Video annotation in AI gives the AI the means to learn to detect actions in video clips like recognizing waving from a person or a car making a turn. By labeling actions and events from the video clips, video annotation builds AI models to learn how to recognize and understand the same events in real-life situations.

How is Video Annotation Done?

Video annotation is a very painstaking task that incorporates human intervention and advanced technologies. Below are some basic methods used in the annotation of video data.

Object Detection and Tracking: In video annotation, object detection is the term used to represent the process of recognizing and tagging objects in each frame. Once the objects are tagged, tracking using algorithms will be done from frame to frame.

Activity Recognition: Annotators tag actions within the video, e.g. a person walking, running, or using an object. This helps the AI model recognize and classify several actions when it exhibits new video data. Activity recognition in video is valuable in several fields such as security, sports analysis and medicine.

Semantic Segmentation: If the level of detail is more granular, video annotation can also use semantic segmentation to classify pixels in a frame by their role in the scene (e.g. roads, pedestrians, cars). Semantic segmentation is commonplace in autonomous driving, where the AI model must separate the different objects and surfaces.

Event tagging: Event tagging applies annotations to a specific occurrence or pattern occurring in video clips, such as a crime, medical emergency or other unexplained occurrence. Annotated videos that highlight such events can be a huge asset in training AI systems in real-time event detection.

Applications of video annotation in AI

Video annotation is not constrained to one or two industries, there are wide possibilities of applications of video annotation across many industries, all of which can explore advantages of AI-enabled systems interpreting and analyzing video content. Here a few examples of video annotation applications.

Autonomous Vehicles: Self-driving cars use video annotation to identify and observe objects such as pedestrians, road signs, and other vehicles. Annotated video footage can be used to train AI models to successfully navigate complex experiences.

Surveillance and Security: Video annotation can help identify and recognize suspicious activities in security by identifying and recognizing unauthorized access or criminal activity. Video annotation can improve the ability of security systems to react to new threats in real-time.

Healthcare and Medical Imaging: Medical video and video from surgeries, can be annotated and be helpful for an AI system to identify and study symptoms, anomalies, or conditions. The use of video annotation in healthcare can help with early diagnosis and treatment planning.

Sports Analytics: Sports organizations use video annotation in sports analytics, to identify and analyze movements of players and players' metrics and performance. The use of video annotation can help coaches and analysts gain insights that can facilitate working on the team's strategies and the player's performance.

Retail and Consumer Behavior: Retailers can harness the use of video annotation for analyzing consumer behavior by documenting where consumers are looking and tracking foot movements in a store. This information provides data for retailers to make informed decisions on store layout, product placement, and marketing activities.

Issues with Video Annotation

Video annotation is important, but it offers challenges:

High Volume and Complexity: Annotating video data is hard work, especially with large amounts of footage requiring labels; annotating complex scenes that have multiple actions or objects adds to the challenge.

Cost and Resources: Depending how much work is needed, if the need for human annotators requires manual labeling large video datasets, it can be resource heavy and costly. Good news is, many companies help to alleviate readability costs and readability time with AI assisted annotation tools.

Accuracy and consistency: Consistent, accurate video annotation can be important for training reliable AI Models, as the annotators must perform the task correctly and remain consistent, shaping expectations for annotation guidelines from one video, to the next, and from scenario to scenario.

The Future of Video Annotation in AI

As AI Technologies will always continue to advance, the demand for video data that has being annotated will remain growing. Although AI tools can help promote and quicken the label process, human annotation is still required to name high-quality, higher-dimensional data representations.

Ultimately, video annotation has a promising future where improvements to accuracy, scalability, and efficiency can be achieved while promoting video annotation across fields such as autonomous driving, stimulation training, healthcare, entertainment, and many, many more.

Conclusion

Video annotation fuses the gap between video content to actionable data and is a fundamental process of AI-based visual recognition. Through video annotation, AI systems can understand and comprehend video and cinematic data.

Therefore, video annotation is fulfilling and fulfilling opportunities for industries around the world. Video annotation is a fundamental process, enabling a world with artificially intelligent systems that can intelligently recognize, react, and predict what happens in the world.

Visit Globose Technology Solutions to see how the team can speed up your face image datasets.

0 notes

Text

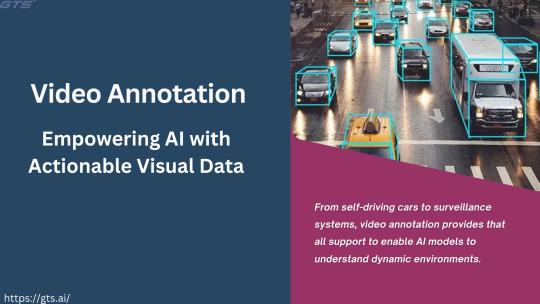

Video Annotation: Empowering AI with Actionable Visual Data

It is the known truth that AI thrives on data, and with computer vision, video data assumes center stage. But just any raw video footage is not what you want for training machine learning models involving recognition of intricate visual environments. This is where video annotation slowly comes into play-dictating the means of converting unstructured video content into actionable labeled data that completes the loop of AI-based applications.

From self-driving cars to surveillance systems, video annotation provides that all support to enable AI models to understand dynamic environments. In this article, we'll explore what video annotation is about, its process, challenges, and how it can change the AI landscape.

What is Video Annotation?

Video annotation is the method of tagging a video with meaningful metadata that lets machine learning models identify, predict, and even recognize objects, motions, or activities taking place in the video. This process allows AI systems to use video data within a real-life context when making interpretations.

Depending on the sophistication of the task, annotated videos may come in different forms, such as bounding boxes, polygons, key points, or semantic segmentation. These labels provide the AI with structured information that lets it learn how objects are spatially related, their trajectories, and the evolution of temporal relationships among video frames.

The Importance of Video Annotation in AI

Video annotation is vital for building a bridge between one piece of video footage and functional AI models. Here is why it is a necessary:

Enabling Object Detection and Tracking: Through annotated video data, AI models can actually identify and trace objects across different frames. Such ability is critical when creating and implementing such systems as self-driving cars, where the system should be able to observe a moving object such as a pedestrian, vehicles, and road signs all at once.

Improved Action Recognition: AI systems trained with annotated video data could identify and categorize every human activity. This opens new avenues for sports analysis, security, and even healthcare in areas like fall detection for elderly persons.

Enhanced Predictive Capabilities: Annotations assist the models in learning temporal sequences that can help in inferring events based on observed trends. In traffic monitoring, AI predicts congestion trends and alerts highway patrol officers of incidents.

Supporting Real-Time Decision Making: Annotation in video data ensures an AI decision-making capability in real-time with scenarios built around drone piloting or robotic surgery, and in turn lessens the risk considerably.

Video Annotation Techniques

How videos are annotated is determined by the needs of the AI model and the complexity of visual data being considered. Some standard techniques include:

Bounding Boxes: Bounding boxes are used to mark out objects in the video. They give a rectangular boundary to the object, which works best for applications like detection and tracking of the object.

Polygon Annotation: For a more irregular shape of the object, polygon provides a more accurate boundary. This helps greatly in detecting complex shapes or crowded scenes.

Key Point Annotation: Key points are specific features such as facial landmarks, joint positions, or edges of the object to be tracked. This is very useful in pose estimation and activity recognition.

Semantic Segmentation: In this, every pixel in a frame of the video is assigned a class label, giving detailed context for activities such as mobile navigation and environment mapping.

3D Cuboids: Cuboids extend the annotation in three dimensions, thus allowing AI systems to view depth and understand 3D spatial relations.

Challenges of Video Annotation

Despite being critical, video annotation presents peculiar challenges:

High Resource Demand: Annotating video data is exhausting and resource-intensive, considering that every frame needs to be labeled. With most sophisticated AI models needing, by and large, a few thousand hours of footage to be annotated.

Ensuring Consistency: Consistency across annotations is vital for avoiding confusion for the model. Variation in labeling guidelines or human error may create a negative impact on model accuracy.

Dealing with Complexity: Occlusions, low lights, and cluttered environments exist in whatever video data come from the real world to contribute further challenges to the final quality of annotation.

Privacy Concerns: When dealing with sensitive video material like surveillance or medical footage, it is important to follow laws of data protection and privacy for the annotation process.

Best Practices for Effective Video Annotation

With great anticipation, these best practices should be followed by organizations, helping overcome those challenges and lead to a final high-quality annotated output:

Develop Clear Guidelines: Consistency starts with a well-defined set of instructions for annotators. This minimizes ambiguity and improves accuracy.

Leverage Automation: Use AI-powered annotation tools to automate repetitive tasks, such as object tracking, thus lessening the burden placed on human annotators.

Train Annotators: Good annotators are crucial in helping to tackle the complexities offered, ensuring that their job is aligned with the project objectives.

Quality Checks: Strong validation and vetting must be executed to check for apparent mistakes that should have been otherwise caught.

Diverse Data Sharing: Annotate videos from various settings, such as medical, pedestrian, and so forth, to enhance the models' generalizability.

Applications of Video Annotation in AI

Video annotation has made unbeatable advances in multiple industries:

Autonomous Vehicles: Annotated videos help self-driving systems to detect and respond to the state of roads, obstacles, and pedestrians.

Healthcare: AI models trained using annotated medical videos help during surgeries, diagnose conditions, and monitor patients. Retail: Video annotation allows AI-driven analytics to track customer behavior and manage inventory.

Security and Surveillance: Annotated footage used to train models for real-time identification of suspicious activities or face recognition.

Entertainment: Annotated videos help AI work to analyze movements during a competitive sporting event to give coaches actionable insights.

The Future of Video Annotation in AI

As the technology behind AI continues to develop, the role of video annotation is going to keep expanding. New trends include:

Real-Time Annotation: With new tools and algorithms being developed, annotations are able to go in real-time and thus speed up model training by a great degree.

Crowdsourced Annotations: New platforms engaging thousands of annotators are helping to combat very large datasets.

Synthetic Data Generation: AI can now produce synthetic annotated video data, thus decreasing the reliance on real-world shooting.

Conclusion

Video annotation provides a bridge between raw visual data and actionable AI. By bringing context and structure into videos, video annotation enables machine learning models to interpret dynamic environments accurately.

With the evolution of both tools and the techniques, video annotation is going to stay put and become pivotal in unlocking new capabilities and stretching the boundaries of the employers of intelligent systems.

Visit Globose Technology Solutions to see how the team can speed up your video annotation projects.

0 notes

Text

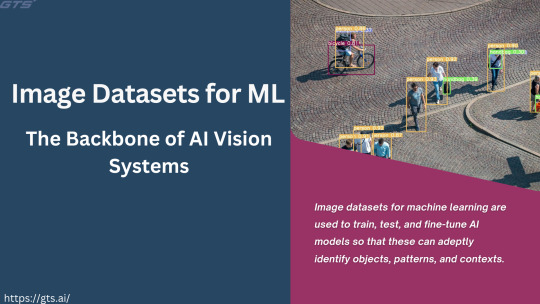

Image Datasets for Machine Learning: The Backbone of AI Vision Systems

In recent years, Artificial Intelligence (AI) has made great strides, especially in computer vision. The AI-aided vision systems are transforming industries-from facial recognition and medical diagnostics to driving vehicles and product recommendation in e-commerce. However, the success of such models relies almost completely on one element: fine image datasets.

Image datasets for machine learning are used to train, test, and fine-tune AI models so that these can adeptly identify objects, patterns, and contexts. In this article, we will look into what these image datasets represent, how to curate such datasets, and how they contribute to the development of AI vision systems.

Why Image Datasets Matter for AI Vision

Machine learning models are trained to find patterns in vast amounts of labeled data. The performance of AI vision systems heavily depends on the quality, diversity, and quantity of image datasets. Here is why image datasets are critical:

Improvement of Model Accuracy: A well-labeled dataset empowers AI models to accurately identify objects and categorize images. The further along diversity and high quality get in a dataset, the more capable the AI becomes in generalizing toward real-life situations.

Minimization of the Bias Factor in AI Models: If AI models are trained on imbalanced datasets, there is bound to be a bias. For instance, a facial recognition system trained mainly on light-skinned individuals might fail to perform well on darker-skinned persons. With properly curated datasets, such biases can be minimized for fair AI outcomes.

Improvement of AI Flexibility: AI models trained on varied datasets can adapt well to different environments, lighting conditions, and angles, making them more robust in real-life applications.

Powering Innovation Across Industries: AI-driven computer vision is finding its utility in detecting diseases in healthcare, monitoring crop health in agriculture, providing surveillance for security, and cultivating customer insights in retail, meaning high-quality image datasets are ushering the revolution.

Challenges in Building Image Datasets

Having analysed the context around the reasons prohibiting high-quality data set development, some of the challenges include:

Data privacy and ethical concerns: With an increase in the need for more security for data, organizations have to develop their operations considering these privacy regulations, such as the Roman Catholic Church, GDPR, and HIPAA, when handling sensitive images such as sensitive medical scans and personal pictures.

Large data size and complexity: With millions of high-resolution images needing processing and storage, it takes almost supercomputer-level strength of computational power and cloud storage solutions to resolve such scared data.

Annotation costs and time: Dictionary annotation is labor-intensive and costly; many furl companies have relied on AI-assisted annotation.

Dataset bias and representation: Training AI models on diversified data sets will help minimize or mask bias, creating policiability across different demographics and environmental conditions.

Popular Image Datasets Used in AI Research

There the handful of large open-source datasets that have been hotbeds for the advancement of AI vision systems. A few are given below:

ImageNet: One of the most recognized datasets is composed of 14+ million labeled images for object detection and classification purposes.

COCO (Common Objects in Context): With 330,000+ images with many objects per image, the COCO dataset provides a large-scale resource for segmentation and scene understanding.

OpenImages: Google's OpenImages dataset consists of many millions of annotated photos that cover an astonishing variety of categories and complex scenarios.

MNIST: An introductory dataset of handwritten digits used extensively to train AI on digit detection.

CelebA: The CelebA dataset contains over 200,000 celebrity faces and is fielded for facial recognition and attribute prediction.

Industries Benefiting from Image Datasets in AI

Healthcare: AI-enabled imaging systems diagnose conditions like cancer, pneumonia, and eye disease using carefully annotated images of diseases.

Autonomous vehicles: Self-driving cars depend on extensive data sets to identify pedestrians, vehicles, stop lights, and traffic signs to ensure safe processes and navigation.

E-Commerce and retail: AI models take in images of the products to create various recommendation systems for virtual try-ons and inventory tracking.

Security and surveillance: Facial recognition AI works by creating large datasets of images to identify people and strengthen security monitoring.

Agriculture: AI models and satellite or drone imaging would be used to find crop diseases and determine soil and irrigation conditions.

Future Trends in Image-Datasets of AI Vision

Synthetic datasets: With Generative AI taking center stage, synthetic datasets could be created to imitate real-world scenarios, bringing down the price tag for data collecting.

Real-Time Data Annotation: Edge computing, combined with the ingestion of images by AI models, will allow real-time annotation of images and greater speed and efficiency.

Federated Learning: This technique entails federated learning in AI models to train decentralized image datasets without exchanging sensitive data, thus providing better privacy.

Ethical AI Development: Developers will aim to develop future AI systems utilizing transparent and bias-free datasets for a fair representation across genders, ethnicities, and geographical regions.

Conclusion

Image datasets are the backbone upon which AI vision systems have made progress in several industries. The continued evolution of AI will drive the demand for high-quality, diverse, and ethically sourced datasets.

AI's accuracy and fairness will improve with advanced curation techniques, minimized bias in datasets, and the addition of synthetic data, toward a smarter and more inclusive future. The role of image datasets in determining the next generation of AI vision technology can't be underestimated.

Visit Globose Technology Solutions to see how the team can speed up your image dataset for machine learning projects.

0 notes

Text

Face Image Datasets: Pioneering the Future of AI in Facial Recognition

Facial recognition, which is rapidly developing with AI, has been one of its greatest game-changers. These technologies are changing the way we engage with digital spaces-from unlocking smartphones to enhancing security measures. At the heart of this technological breakthrough lie the face image datasets-these are huge collections of annotated facial data that allow for building and fine-tuning models of facial recognition.

This article examines the crucial relationship between face image datasets and the growth of AI, the challenges they represent, and the roads they may forge for the future of technology in facial recognition.

Why Face Image Datasets Are Essential

Facial recognition systems are based on machine learning algorithms that have become trained to identify, verify and analyze human faces. These systems require diversity and quality of data in order to function effectively. In this regard, face image datasets provide training material for these algorithms, allowing them to learn patterns, find features, and improve their accuracy over time.

Without these datasets, AI models would not generalize with accuracy about the diverse populations, lighting conditions, and facial expressions. The quality of the data in comparison to the variety goes directly toward determining the performance and reliability of facial recognition.

Key Applications of Face Image Datasets

Security and Surveillance: Facial recognition is heavily based on the face image datasets for improving security systems. Most airport, bank, or government-based securing transactions go unnoticed because of this highly trained technique.

Personal Devices: From unlocked smartphones to laptops, this kind of feature works with face datasets to perform really well with user authentication.

Healthcare: Facial recognition from datasets is taking preliminary steps into healthcare. Applications can be cited such as use for having checkups on facial adult features to genetic disorder detection as well as monitoring patients' emotional states.

Retail and Marketing: Using face image datasets to allow AI systems to analyze customer demographics, monitor in-store behavior, and provide personalized experiences.

Entertainment: In gaming and augmented reality, facial recognition enhances immersion by tracking user expressions and integrating them into virtual environments.

Challenges in Working with Face Image Datasets

Despite their importance, face image datasets present several challenges that researchers and developers must address.

Data Bias: Many data sets are not representative of the world population. Under-representations of certain ethnic groups, age groups, and genders can lead to biased AI models having difficulty performing with some demographic.

Privacy Concerns: The collection and use of facial data give rise to considerable privacy concerns due to obtaining consent and complying with laws like GDPR when the face image datasets are created and put to use.

Quality of Data: The accuracy of models may be hampered, given the fact that some datasets may contain low-resolution images, inconsistent annotation quality, or not so favorable lighting conditions, and therefore are dependent mostly on quality, well-labeled data.

Dataset Size: Training AI models in facial recognition requires large volumes of data. Nonetheless, acquiring vast amounts of data to be processed is highly resource-intensive and laborious.

Ethical Implications: The misuse of facial-recognition technology such as mass surveillance or clandestine tracking strengthens the call for pursuing ethical practices in dataset creation and usage.

Best Practices for Using Face Image Datasets

To mitigate these drawbacks and forge a reliable face-recognition model, the best practices might be considered.

Diverse Picture Sets: Include pictures in the training sets to represent various demographics, lighting conditions, and facial expressions to assist with model generalization and fairness.

Data Privacy: Make sure that datasets are collected with consent and that privacy laws are adhered to; anonymize data where possible to keep people's identities safe.

Data Augmentation: New datasets can be introduced using cropping, rotation, and color adjustment to enhance the dataset. This enhances the diversity of data without additional captivation efforts.

Model Performance: Models must be evaluated periodically against other benchmarking datasets so as to determine the degree to which they contain any form of bias or inaccuracies, and take the necessary adjustments.

Cross-border Cooperation: Cooperate with organizations all over the world to access a diverse pool of datasets through which facial-recognition technology can be strengthened to make it globally applicable.

The Future of Face Image Datasets

The landscape of facial recognition technology is constantly evolving, with face image datasets at its core.

Synthesis of Data: To tackle the problem of limited data and privacy, researchers make use of synthetic face images generated through AI. These synthetic datasets recharge other datasets capable of mimicking real-world datasets while keeping everything private.

Real-Time Data: New applications such as live emotion detection and crowd analytics necessitate datasets that support real-time processing and analysis.

Multimodal Integration: The fusion of face images with other biometric data such as voice or gait could yield improvements in recognition accuracy and increase the scope of possibilities within AI.

Ethical AI Development: The future of face recognition lies in the building of proper and ethical frameworks to support the creation of datasets, ensuring fairness, transparency, and responsible use.

Conclusion

Face image datasets form the very basis of facial recognition technology; they help push the growth curve across different fields. The datasets can help AI comprehend and analyze nuances of the human face in security, healthcare, entertainment, and retail.

While we grapple with issues of bias, privacy, and ethics, it's imperative that diversity, quality, and fairness are prioritized in creating and using datasets. That's on the road to completely unlocking facial recognition technology in our societal benefit.

Visit Globose Technology Solutions to see how the team can speed up your face image dataset projects.

0 notes

Text

Face Image Datasets: Advancing AI in Facial Recognition and Analysis

Facial recognition technology provides the backbone of modern artificial intelligence, enabling innovations from security to personalized marketing. In many instances, the backbone of this technology is formed by face image datasets-an important resource that fuels the training and development of AI models capable of accurately identifying and analyzing human faces.

The article discusses the importance of these face image datasets, the nature of their construction, problems in their application, and how they will radically turn the evolution of AI-based applications.

The Role of Face Image Datasets in AI

Face image datasets are collections of images that are meant to teach machine learning algorithms how to detect, recognize, and classify the features of faces. These datasets are paramount to enabling AI models to perform operations such as facial verification, emotion recognition, and age prediction. Through their vast analysis of annotated face images, AI systems are able to learn patterns, facial structures, and variances amongst human faces.

For instance, mobile phones use datasets of facial recognition systems that have included most aspects such as facial expressions, angles, and lighting conditions. This is how the model achieves high accuracy, even in real life. Without face image datasets, these systems could never be equipped for the task of distinguishing between faces or adapting to new scenarios.

Key Characteristics of High-Quality Face Image Datasets

Not all face image datasets may be created equal. A good quality dataset presents with certain characteristics that enhance its performance in enhancing AI model training:

Diversity: A diversity-enabled dataset represents images of individuals belonging to various ethnicities, ages, genders, and geographical backgrounds. Diversity ensures that AI systems have fairness in conjunction with being inclusive thereby minimizing the chances of bias in their outcomes.

Annotation: Detailed descriptions accompany every image by means of certain proffering such as semantic facial landmarks, expressions, general lighting influence, or obstructions like spectacles or facial masks. The task of these annotations is to train the supervised algorithms to be used for recognizing specific features as determined within the criteria.

Volume: Large datasets with millions of images improve the generalization ability of AI models. The more data an algorithm processes, the better it can handle variations and unseen scenarios.

Real-World Conditions: High-quality datasets have images taken in different environments like different lighting, weather, and camera angles, thus helping the AI adjust to practical real-world conditions rather than ideal conditions.

Steps in Creating Face Image Datasets

Data Collection: The first step is the gathering of images from a variety of sources. This can include public databases, social media, or perhaps through collaboration with certain organizations. Ethics surrounding permissions to those whose images are used must be adhered to with this phase.

Annotation: Once the images are gathered, they must be annotated and provided with relevant comments. The points on the face to be marked include the eyes, nose, and mouth in order to define landmarks. Annotations may also include indications of age group, expression, or any adornment that might help the model carry out a specific task.

Cleaning and Filtering: Raw data mostly contains irrelevant data, duplicate images, or images which are of poor quality. Cleaning is the process where all these non-academic details are removed, ensuring that whatever is left in the dataset is meaningful and applicable to the task.

Dataset Balancing: Balancing is a method whereby, by virtue of an underrepresentation of specific people in a database, we cannot get the AI trained. Cleanup needs to ensure that the image distribution is done evenly across the demographics.

Applications of Face Image Datasets

Security and authentication: Face image datasets trained by facial recognition systems verify the identity of the users on smartphones, laptops, and access control systems. Together these datasets help create a model that authenticates the identities of users correctly, enhancing security while creating an enjoyable user experience.

Personalized marketing: AI systems based on face image datasets have been devised to analyze customer demographics such as age and gender and to deliver focused promotions in retail and advertising. Such personalization improves customer engagement and satisfaction.

Healthcare: Face recognition technology is applied in healthcare, such as the diagnosis of genetic disorders by analyzing facial features. Systems for emotion detection can monitor patients' mental status by identifying stress or changes in mood states.

Surveillance: Facial recognition systems are invariably acquired by law enforcement agencies trained on massive datasets to recognize people in public spaces. Such systems provide assistance to the investigation of crimes, search for missing persons, and management of crowds.

Social Media and Entertainment: Face image datasets facilitate features like automated tagging in photos, filters, and avatar generation, augmenting user experiences on social media and gaming applications.

Challenges in the use of face image datasets

Privacy issues: Facial data gathering raises ethical issues regarding privacy and consent. Misuse or unprotected access to these datasets could jeopardize individuals' private information, so it is crucial to follow applicable data protection regulations such as GDPR.

Data bias: AI models trained on datasets that lack diversity could be biased. For instance, a facial recognition system trained on mostly male faces might be incapable of identifying females accurately. Deliberate efforts should be put into dataset generation and validation in order to combat bias.

Data security: A big-scale face image dataset should be dealt with in a secure manner and robust security measures should be employed to prevent breaches or misuse.

Scalability and computational costs: Scalability absorbs more resources as the dataset becomes larger, and more resources are required to train the model from that data. Efficient techniques for dealing with data are necessary to address the issue of scalability.

The Future of Face Image Datasets

Database with facial images continues to grow following the emerging trends in artificial intelligence. Here are some of them:

Synthetic Data: AI-generated synthetic datasets are scaling down reliance on real-world datasets bearing privacy considerations while keeping a high-quality standard.

Multimodal Integration: Combination of facial data with voice or text inputs to develop more comprehensive and accurate AI systems.

Federated Learning: Models are trained in their local surroundings on user devices, thereby minimizing the need to transfer sensitive data on centralized servers.

These innovations promise face image datasets to pack higher power, security, and inclusivity in the coming years.

Conclusion

Face image datasets have led the way in recognition and analysis of faces, assisting AI systems in attaining newer standards of precision and versatility. They are reshaping industries, from device security to healthcare, improving lives.

As demand for intelligent systems increases, face image datasets will continue to remain an important resource in pushing the envelope in AI innovation.

Visit Globose Technology Solutions to see how the team can speed up your facial recognition projects.

0 notes

Text

Data Collection for Machine Learning: The Foundation of Smarter AI Models

The backing up of artificial intelligence (AI) and machine learning (ML) depends enormously on data collection for machine learning. Collecting high-quality data becomes the base to support building of smarter, more accurate AI models that can take on real-world challenges. Their success relies on data to predict behavior, diagnose diseases, or enable autonomous driving.

The article discusses the significance of the contribution of data collection within the machine learning framework, containing information about forming the finest possible dataset and how innovations in data collection shape the future of AI.

Why Data Collection Matters in Machine Learning

Machine learning models learn to recognize patterns from data. If datasets are not diverse, representing the problem field of X and are of poor quality, then almost any state-of-the-art algorithm will fail on them. Data collection is the procedure involving the acquisition, organization, and preparation of raw data for model training. It transforms unstructured information into actionable resources that machines can understand and process.

Key Contributions of Data Collection to AI

Basis of Training: Data is used to "train" the machine learning models for the algorithms. This training enables them to learn the correlation, detect anomalies, and run predictions.

Accuracy and Precision: The quality and relevance of collected data directly impact the accuracy of a machine learning model. High-quality datasets help reduce noise and other errors so that the model can be practically effective.

Scalability: Data collection offers a way for scalability. A variety of datasets of reasonable sizes permits the training of more complex ML algorithms to handle increasingly challenging tasks and scenarios.

Types of Data in Machine Learning

Data collection for machine learning covers different domains, and each type serves specific purposes. The main types include:

Structured Data: This is a set of data that exists within some organized framework such as tables, databases, or even spreadsheets. Such examples include customer purchase histories, sensor recordings, or inventory details.

Unstructured Data: Unstructured data presents raw information without a clear organization. This means images, videos, text, and audio. It requires thorough processing and labeling before one can use it for training machine learning models.

Time-Series Data: These kinds of time-based data capture more info for applications such as stock market predictions and IoT warnings.

Real-Time Data: Real-time data streams are the essence of the applications in autonomous vehicles or fraud detection since timely and accurate data gathering ensure a sensitive priority.

Synthetic Data: This is data produced artificially in such a way that it reflects or resembles real-world data scenarios. It is complementary to traditional data and helps in overcoming challenges of privacy and scarcity.

Best Practices for Data Collection

Data collection is not just data collation but gathering of the proper mix of organized, ethical, and efficient data. Best practices guarantee that your datasets become machine-learning-ready:

Define the Objectives: Before one collects data, identify the purpose and goals of machine learning models. Understanding what problem is going to be solved will help steer toward more decisive and balanced actions on how much data to collect and the type of data.

Incorporate Diversity and Representation: Datasets should represent as wide a range of the users or scenarios they hope to encompass. For facial recognition systems, data could be photographs of varying age groups, ethnicities, and environment lighting.

Data Quality: Inaccurate models arise due to poor data quality. Methods such as data cleansing and normalization should remove errors, duplicates, or irrelevant entries.

Annotation and Labeling of Data: A model for machine learning utilizes annotation or labeling to interpret the data sensibly. In an image dataset, bounding boxes or tags may identify the objects; or, in сaсe of a text dataset, tag emotions for tasks of sentiment analysis.

Keep to Ethic Standards: Respecting user privacy and abiding by data protection regulations such as GDPR is key. Data collection should occur only through ethical principles such as taking consent and anonymizing sensitive information.

Make the Most of Automation: Implementing AI tools and automation can place a very low workload on the data collection process and gain more time for persons dealing with bigger projects.

Challenges of Data Collection

Data collection for machine learning is by no means without its brunt. The most common challenges are:

Data Scarcity: In some instances, there could be such scarce relevant data. That is common with industries such as health or autonomous driving in a particular environment due to privacy restrictions and narrow data for the industry in question.

Data Bias: Biased models are created by skewed and unrepresentative datasets. In particular, a language model trained solely on English may have difficulties making sense of other languages.

Quality and Quantity: Finding a place between the size of data and quality is difficult. While having larger data sets can enhance accuracy, low-quality data can mislead the model.

Data Storage and Processing: Dealing with large volumes of data require powerful storage and processing systems which may be very costly and resource-consuming.

Privacy Risk: Collecting user data usually raises ethical and legal issues. Transparency and compliance with data protection regulations need to be ensured to avert misuse.

Future Trends in Data Collection for Machine Learning

With the technology growing, the methods and tools for data collection will change. Given below are some of the contemporary movers and shakers in the field:

Synthetic Data Generation: AI systems are trained with synthetic data mostly when real data is scarce or too delicate. These datasets impersonate real stories and configurations, a much safer and scalable alternative.

Crowdsourcing: Platforms like Amazon Mechanical Turk are making data annotation and collection on a grand scale possible by tapping into human contributors.

IoT-Cross integration: The Internet of Things (IoT) is becoming a major data source, providing continuous streams of real-time information for machine learning applications.

Federated Learning: This method enables models to be trained across decentralized datasets, diminishing the need for central data collection and enhancing privacy.

Self-Supervised Learning: The models are now capable of learning with unlabeled training sets, lowering the importance of annotated data and cutting costs.

Conclusion

Data collection is the single most crucial element in any machine learning exercise. From goal orientation to issues of ethical compliance, each step of the data collection process contributes to AI model performance and reliability. As machine learning gains power and evolve over the years, how the data shall be collected and prepared shall also see evolution.

By steering the organization to realize innovation in quality, diversity, and innovation, smart AI systems can have a significant real-world effect. Synthetic data and federated learning advances signal that the future of our information collection-possible AI innovation is bursting with new prospects.

Visit Globose Technology Solutions to see how the team can speed up your data collection for machine learning projects.

0 notes

Text

Face Image Datasets: Advancing AI in Facial Recognition and Analysis

Facial recognition technology provides the backbone of modern artificial intelligence, enabling innovations from security to personalized marketing. In many instances, the backbone of this technology is formed by face image datasets-an important resource that fuels the training and development of AI models capable of accurately identifying and analyzing human faces.

The article discusses the importance of these face image datasets, the nature of their construction, problems in their application, and how they will radically turn the evolution of AI-based applications.

The Role of Face Image Datasets in AI

Face image datasets are collections of images that are meant to teach machine learning algorithms how to detect, recognize, and classify the features of faces. These datasets are paramount to enabling AI models to perform operations such as facial verification, emotion recognition, and age prediction. Through their vast analysis of annotated face images, AI systems are able to learn patterns, facial structures, and variances amongst human faces.

For instance, mobile phones use datasets of facial recognition systems that have included most aspects such as facial expressions, angles, and lighting conditions. This is how the model achieves high accuracy, even in real life. Without face image datasets, these systems could never be equipped for the task of distinguishing between faces or adapting to new scenarios.

Key Characteristics of High-Quality Face Image Datasets

Not all face image datasets may be created equal. A good quality dataset presents with certain characteristics that enhance its performance in enhancing AI model training:

Diversity: A diversity-enabled dataset represents images of individuals belonging to various ethnicities, ages, genders, and geographical backgrounds. Diversity ensures that AI systems have fairness in conjunction with being inclusive thereby minimizing the chances of bias in their outcomes.

Annotation: Detailed descriptions accompany every image by means of certain proffering such as semantic facial landmarks, expressions, general lighting influence, or obstructions like spectacles or facial masks. The task of these annotations is to train the supervised algorithms to be used for recognizing specific features as determined within the criteria.

Volume: Large datasets with millions of images improve the generalization ability of AI models. The more data an algorithm processes, the better it can handle variations and unseen scenarios.

Real-World Conditions: High-quality datasets have images taken in different environments like different lighting, weather, and camera angles, thus helping the AI adjust to practical real-world conditions rather than ideal conditions.

Steps in Creating Face Image Datasets

Data Collection: The first step is the gathering of images from a variety of sources. This can include public databases, social media, or perhaps through collaboration with certain organizations. Ethics surrounding permissions to those whose images are used must be adhered to with this phase.

Annotation: Once the images are gathered, they must be annotated and provided with relevant comments. The points on the face to be marked include the eyes, nose, and mouth in order to define landmarks. Annotations may also include indications of age group, expression, or any adornment that might help the model carry out a specific task.

Cleaning and Filtering: Raw data mostly contains irrelevant data, duplicate images, or images which are of poor quality. Cleaning is the process where all these non-academic details are removed, ensuring that whatever is left in the dataset is meaningful and applicable to the task.

Dataset Balancing: Balancing is a method whereby, by virtue of an underrepresentation of specific people in a database, we cannot get the AI trained. Cleanup needs to ensure that the image distribution is done evenly across the demographics.

Applications of Face Image Datasets

Security and authentication: Face image datasets trained by facial recognition systems verify the identity of the users on smartphones, laptops, and access control systems. Together these datasets help create a model that authenticates the identities of users correctly, enhancing security while creating an enjoyable user experience.

Personalized marketing: AI systems based on face image datasets have been devised to analyze customer demographics such as age and gender and to deliver focused promotions in retail and advertising. Such personalization improves customer engagement and satisfaction.

Healthcare: Face recognition technology is applied in healthcare, such as the diagnosis of genetic disorders by analyzing facial features. Systems for emotion detection can monitor patients' mental status by identifying stress or changes in mood states.

Surveillance: Facial recognition systems are invariably acquired by law enforcement agencies trained on massive datasets to recognize people in public spaces. Such systems provide assistance to the investigation of crimes, search for missing persons, and management of crowds.

Social Media and Entertainment: Face image datasets facilitate features like automated tagging in photos, filters, and avatar generation, augmenting user experiences on social media and gaming applications.

Challenges in the use of face image datasets

Privacy issues: Facial data gathering raises ethical issues regarding privacy and consent. Misuse or unprotected access to these datasets could jeopardize individuals' private information, so it is crucial to follow applicable data protection regulations such as GDPR.

Data bias: AI models trained on datasets that lack diversity could be biased. For instance, a facial recognition system trained on mostly male faces might be incapable of identifying females accurately. Deliberate efforts should be put into dataset generation and validation in order to combat bias.

Data security: A big-scale face image dataset should be dealt with in a secure manner and robust security measures should be employed to prevent breaches or misuse.

Scalability and computational costs: Scalability absorbs more resources as the dataset becomes larger, and more resources are required to train the model from that data. Efficient techniques for dealing with data are necessary to address the issue of scalability.

The Future of Face Image Datasets

Database with facial images continues to grow following the emerging trends in artificial intelligence. Here are some of them:

Synthetic Data: AI-generated synthetic datasets are scaling down reliance on real-world datasets bearing privacy considerations while keeping a high-quality standard.

Multimodal Integration: Combination of facial data with voice or text inputs to develop more comprehensive and accurate AI systems.

Federated Learning: Models are trained in their local surroundings on user devices, thereby minimizing the need to transfer sensitive data on centralized servers.

These innovations promise face image datasets to pack higher power, security, and inclusivity in the coming years.

Conclusion

Face image datasets have led the way in recognition and analysis of faces, assisting AI systems in attaining newer standards of precision and versatility. They are reshaping industries, from device security to healthcare, improving lives.

As demand for intelligent systems increases, face image datasets will continue to remain an important resource in pushing the envelope in AI innovation.

Visit Globose Technology Solutions to see how the team can speed up your facial recognition projects.

0 notes

Text

Data Collection for Machine Learning: Powering the Next Generation of AI

Artificial Intelligence (AI) has arisen as the most decisive force across industries, from health to finance, entertainment to logistics. At the center of this evolution is one indispensable element: data collection for machine learning. Data is the lifeblood of AI systems, enabling algorithms to learn, adapt, and make intelligent decisions. Without high-quality data collection, even the best-developed AI models would never supply credible and trustworthy outputs.

In this article, we shall explore in detail the significance importance of data collection for machine learning, the methodologies applied, the challenges posed, and how it is propelling the next-generation innovations in AI.

Importance of Data Collection in AI

Data is the fuel behind the entire functioning of machine learning (ML) systems. It is the patterns, relations, and behaviors inferred from the data that allow the systems to make predictions and decisions. The AI model's performance depends on the quantity, quality, and relevance of the collected data. Here is why data collection is the key pillar of machine learning success:

The Training of AI Models: For any task to be accomplished using machine learning algorithms, they need to be trained with representative data first. The training data allows the system to discover patterns that can eventually be generalized to new, unencountered inputs.

Enhancement in Model Accuracy: The inclusion of all the necessary measures in data collection aims to represent actual scenarios closely, thereby minimizing errors and biases within the AI model. Better data gives better outcomes.

Personalization: When it comes to AI systems, personalized services like recommendation engines, customer support assistance, etc., the only way they can personalize such offerings is through data collection.

Fueling Innovation: By initiating continuous and diverse data collection, AI applications are entering into the fields of autonomous cars, personalized medications, natural language processing, and others.

Data Collection Methods for Machine Learning

Good data collection demands a work plan for methodologies customized to the needs of the machine learning model. Some of the common approaches are:

Crowdsourcing: Crowdsourcing involves collecting data from large groups of people, mostly through online platforms. This method is especially powerful for collecting labeled data for tasks like image recognition or natural language processing.

Web Scraping: Web scraping is an automated process of collecting data from websites. This is commonly applied to build datasets for sentiment analysis, trend tracking, and other ML purposes.

Sensor Data: In the IoT applications, data is collected straight from sensors embedded into the like of a smart thermostat, wearables, or autonomous vehicles. This stream of data is valuable in predictive maintenance and real-time decision-making.

User-Generated Data: A number of companies collect the data that is generated by users, for example, interactions with their app, social media posts, or e-commerce transactions. This process is especially important for the provision of personalized services.

Synthetic Data: Synthetic data is data that is artificially generated rather than obtained from real-world sources. It tends to be very useful for the kinds of applications where the real data is scarce or difficult to acquire, such as the medical imaging or infrequent event prediction ones.

The Challenges with Data Collection

Data collection is vital for machine learning, but it faces a lot of challenges. These hurdles have to be removed for effective and ethical AI systems to be conceived.

Data Privacy and Ethics: Collection of personal data typically generates privacy issues, especially with data protection regulations such as GDPR and CCPA. Organizations are required to collect informed consent and comply with stringent data protection protocols.

Data Bias: Bias can creep into AI systems when data is not diverse or representative, particularly for demographics that do not contribute to it. For instance, systems of facial recognition trained mostly on one demographic may not provide good performances across others.

Readily Scalable: The growing need for massive datasets enhances the sophistication of data collection, storage, and operational management. Consequently, ensuring the integrity of the consistency and quality of millions of data points is really a Herculean task.

Annotation and Labeling: Thus, in supervised learning, we need to label collected data, which requires an enormous amount of time and manual labor. Furthermore, automatization of this stage is a huge challenge and may not be accomplished without loss of accuracy.

Real-Time Data Gathering: In such applications as autonomous driving or financial trading, data should be collected and processed in real time and this sets high demands on system infrastructure, as well as the application of exotic and sophisticated technologies.

Best Practices for Data Collection

Here are some best practices that organizations should follow to make data collection more effective:

Define Objectives Clearly: Know which business goals and use cases to pursue by ensuring collected data is appropriate and actionable.

Ensure Diversity and Representation: Use diverse sources and decisively handle possible bias to enhance the robustness of your AI model.

Focus on Data Quality: More than any huge amounts of irrelevant or noisy data, we speak about high-quality data that really matters. Clean and validate the data before using it for training.

Leverage Automation: Automating both data collection and data annotation processes minimizes labor costs and reduces scope for human error.

Maintain Ethical Standards: User privacy and data protection must always come first. Follow the law and ask for permission to use the data.

Applications of Data Collection in AI

The scope of data collection embraces numerous industries and applications fueled by AI.

Healthcare: Data collected from medical records, imaging, and wearable devices to train AI systems for diagnostics, treatment planning, and patient monitoring.

Autonomous Vehicles: Autonomous vehicles leverage enormous amounts of data from sensors, cameras, and radars to navigate through driving safely and make real-time decisions.

Retail and E-Commerce: Customer behavior, preferences, and purchase history dictate personalized recommendations, inventory optimization, and targeted marketing.

Natural Language Processing: Text and speech data are collected to develop AI models for language translation, sentiment analysis, and virtual assistants.

Financial Services: AI systems are trained to help with fraud detection, risk assessment, and algorithmic trading using historical transaction data and market trends.

The Future of Collection of Data

With the advancements in machine learning technologies, modern data collection practices bend to the will of new requirements. Trends emerging include federated learning for AI models to learn from decentralized data without violating privacy and synthetic dataset-generation practices meant to lessen dependence on real-world data.

Besides, advanced versions of edge computing and IoT would make real-time data collection and processing possible, paving the way for more dynamic and adaptive AI systems.

Conclusion

Data collection for machine learning is at the core of AI innovations. By ensuring that the data is diverse, of high quality, and ethically sourced, organizations make the best of their AI systems.

With challenges being countered and technologies evolving, the importance of data collection assumes cardinal stages on their own, fueling the next generation of AI applications that change industries and enrich lives around the globe.

Visit Globose Technology Solutions to see how the team can speed up your data collection for machine learning projects.

0 notes

Text

Video Annotation: Transforming Motion into AI-Ready Insights

AI and ML have changed the way people interact with digital content while video annotation is at the focal point of such transformation. It enables everything from detecting pedestrians by autonomous vehicles to real-time checking of threats in surveillance systems, but the interpretation of movement by artificial intelligence is highly dependent on high-quality annotated video data.

In simple terms, it is labeling video frames to add context for AI models. Annotated videos teach AI systems more effectively to identify underlying patterns, behaviors, and environments by marking objects, tracking movement, and defining interactions. Thus, they become smart, fast, and reliable decision-makers.

Why Video Annotation Matters in AI Development

The models that really grow from data are AI, and videos share much richer, dynamic information as compared to static images with no motion. It will provide motion, depth, and continuity, allowing AI to do things like:

Understand movement and object tracking in real-world environments.

Analyze complex interactions between people, objects, and the environment.

Improve decision-making in real-time AI applications.

Enhance accuracy by considering multiple frames rather than isolated snapshots.

These capabilities make video annotation essential for applications ranging from self-driving cars to sports analytics and healthcare diagnostics.

Types of Video Annotation Techniques

The efficiency of any AI model depends on the detail and number of techniques used to annotate the video. Each method caters to a particular type of AI, allowing the model to get exactly the type of data required in its learning process.

Bounding Box Annotation: This method involves drawing rectangular boxes around the objects identified in every frame. Bounding boxes provide an intuitive and effective training method for AI models to detect and recognize objects in the scene.

Semantic Segmentation: Semantic Segmentation is a more complex technique, but it involves the assignment of a category label for every pixel in the frame of a video. With semantic segmentation, the AI reaches a level of understanding of video content that matches that of a human, thus being able to better recognize objects.

Keypoint Annotation: This method usually annotates important points on an object for applications ranging from human pose analysis to face recognition AI. This annotation helps the AI understand body language and gesture by mapping joints and satellite trajectories.

Optical Flow Annotation: The optical flow technique tracks motion in terms of pixel displacement between two adjacent frames. It allows AI models to analyze past trajectories and ascertain the future possibilities of odors.

3D Cuboid Annotation: Unlike bounding boxes, 3D cuboids give depth perception, enabling AI to recognize object dimensions and spatial relationships. This annotation type ensures that AI understands object placement in three-dimensional space.

Challenges in Video Annotation

In spite of their tremendous advantages, video annotation has some annoyances that compromise AI model training farther on.

Huge Volume of Data: Videos contain thousands of frames, making annotation a small-scale arm-bending job. Streamlining the workflow with artificial or AI-assisted annotation could somehow help in this respect.

Motion Blur and Occlusion: Moving objects are magnificently difficult, if not next to impossible, to annotate. This is mainly because they can obscure themselves or become blurred by the movement. Some frame interpolation methods support increased annotation accuracy.

Data Privacy and Compliance: Video content may contain a lot of sensitive data from its very nature. Hence, it is subject to regulations of GDPR, CCPA, and the like. Such proper anonymization methods lead to ethical AI development in practice.

Annotation Consistency: Labeling must be consistent throughout frames and datasets. High variability leads to machine learning bias, so rigorous quality control is necessary.

The Future of Video Annotation in AI

Even as AI improves, techniques will change for video annotation. Various trends in motion analysis of video include:

AI-assisted annotation: Annotating objects with the assistance of AI in an automatic way and less manual effort.

Self-supervised learning: AI learns from unlabeled data off a video.

Real-time annotation: AI easily merges to the live video feed in real-time.

Federated learning: AI models trained over many sources from the edge of the client together, preserving privacy.

These developments will only increase the accuracy of AI, help ensure less training time for AI systems, and support enhanced AI application efficiency.

Conclusion

Video annotation is the backbone of AI-powered motion analysis, as they provide the training data required by machine learning models. With the ability to label objects, movements, and interactions correctly, AI systems can interpret and understand reality with improved accuracy.

From autonomous vehicles and healthcare to sports analytics and retail, video annotation is transforming industries and driving the path to AI innovation for the future. As annotation techniques develop, AI will continue to learn, adapt, and revolutionize the way we interact with visual data.

Visit Globose Technology Solutions to see how the team can speed up your video annotation projects.

0 notes

Text

Image Datasets for Machine Learning: Powering the Next Generation of AI

Artificial intelligence (AI) is revolutionizing industries by allowing a machine to process and analyze visual information. Self-driving cars, medical diagnostics, and facial recognition-all rely heavily on machine learning models, trained with a huge amount of image data.

Image datasets for machine learning make an important bedrock for several modern-day AI applications by providing properly annotated visual data for machines to learn about identifying objects, patterns, and environments.

An Overview of Image Datasets in Machine Learning

An image dataset is a data set containing labeled or unlabeled images that are used to train or validate AI models. Typically, the datasets involve a wide range of computer vision problems that include object detection, segmentation, and facial recognition.

Good datasets only get better at making predictions in an AI model through improvements in bias, generalization across contexts, or cleaner training datasets. An image dataset is curated from data collection, annotation, enhancement, and optimization so that the AI system trains in the best way possible.

Types of Image Datasets

Some types of datasets based on their purpose and way of labeling are:

Labeled Datasets: In these datasets images are annotated with specific bounding boxes, object-names, or segmentation-masks; these are a must for any kind of supervised model.

Unlabeled Datasets: These mostly comprise of just raw images with no annotations and are used in unsupervised learning and in self-supervised learning.

Synthetic Datasets: Images generated by AI to offset the scarcity of real-life images whenever it's too hard or expensive to gather actual images.

Domain-Specific Datasets: These datasets cater to more specific industries like medical imaging datasets used for disease diagnosis, or satellite image datasets used for geospatial analysis.

The Role of Image Datasets in AI Development

The efficiency and efficacy of AI models rest fundamentally on the liveliness and variety of data in their training sets. Therefore, here are a few reasons why image datasets are pivotal for the growth of AI:

Object Recognition and Classification Accuracy: There are AI models that take in images and work on recognizing and classifying objects therein. By producing properly annotated datasets, the machines learn very well to recognize the widest variety of objects under differing lighting conditions, at different angles, and in distinct environments.

Enabling Computer Vision Applications: Computer vision applications-four notable ones are autonomous cars, robotics, and augmented reality-depend on the AI ability to decipher images in real-time. Deep datasets guarantee that these systems will function correctly in differing situations in real life.

Improve Facial Recognition and Biometrics: Facial recognition systems use large image datasets to identify and authenticate individuals. To remove bias and improve accuracy across ethnic groups, ages, and lighting conditions, diversity in datasets needs to be ensured.

Contributing Towards Medical Imaging Diagnostics: AI is now revolutionizing the healthcare scene by interpreting medical images-X-Rays, MRIs, and CT scans-to detect disease and other abnormalities. Large annotated datasets are one of the prime requirements for training AI models for diagnosis to help doctors.

Enabling Intelligent Retail and E-Commerce: Image datasets allow recommendation systems and visual search engines to recognize product items, make findings on customer preferences, and enhance the overall online shopping experience.

Challenges in the Building of Image Datasets

It is not straightforward making high-quality datasets for images, yet this is often among the most tedious tasks:

Data Gathering and Privacy Issues: Although there is a lot of data collection required for many purposes, privacy issues such as data collection on face recognition create a conflict of ethics and become legal issues.

Annotation Complexity and Cost: Much needed during this stage of model development, manual labeling of images is both laborious and expensive. Advanced techniques like AI-assisted annotation and task swapping can substantially add to the speed of this operation.

Data Imbalance and Bias: AI models can form biases based on the characteristics of the datasets. That could lead to ineffective predictions. Ensuring representation across various demographics and experiences is crucial.

Scalability and Storage Limitations: As AI models become increasingly sophisticated, more sophisticated datasets increase the associated costs of storage and processing. Efficient data management techniques would be needed in order to operate with image datasets of this scale.

Optimizing Image Datasets for Machine Learning

To develop better models in AI, datasets should perform augmentation using:

Data Augmentation: Such as rotation, flipping, and color adjustments to increase variety of dataset.

Active Learning: Where an AI model can suggest the most informative images, hence saving time neurosurgeons spend in labeling.

Automated Labeling Tools: So using annotation tools that are AI-powered could speed up the dataset labeling process.

Dataset Standardization: To align the collection with image resolutions, formats, and annotation structures to facilitate a smooth AI training process.

The Future of AI's Image Datasets

With AI growing at great pace, that implies a great insatiable appetite for high-quality image datasets. These trends will shape the future of dataset development:

Synthetic Data Generation: AI will generate synthetic images to plug in gaps within real datasets reducing data collection costs.

Self-Supervised Learning: A model that can learn from images without labels to reduce the need for manual labeling.

Bias Reduction Methods: New practices are developed in order to detect and mitigate bias in datasets to ensure fair and ethical AI applications.

3D Image Datasets: Advanced AI models must be trained on 3D image datasets for applications in robotics, AR/VR, and healthcare.

Conclusion

Datasets of images are probably one of the main sources that provide a basis for advancing AI in computer vision, healthcare, autonomous systems, and facial recognition. As AI advances, however, the focus of development must lie in improving data quality, reducing bias, and allowing scaling up.

With enhanced data collection, modern annotation methods, and automation, AI systems in the future might become accurate, effective, and ethically responsible. If we invest in quality image datasets today, this would help propel intelligent, data-driven AI systems in the future.

Visit Globose Technology Solutions to see how the team can speed up your image dataset for machine learning projects.

0 notes

Text

Face Image Datasets: Advancing AI in Facial Recognition and Analysis

Facial recognition technology has evolved rapidly, transforming industries such as security, healthcare, and personalized marketing. At the core of this transformation lies face image datasets, the essential building blocks that enable artificial intelligence (AI) to learn, recognize, and analyze human faces with remarkable accuracy.

High-quality face datasets help train AI models to detect identities, recognize emotions, and even assess age or gender. In this article, we explore how face image datasets are shaping the future of AI-driven facial recognition and analysis.

The Role of Face Image Datasets in AI Development

AI-powered facial recognition systems rely on extensive datasets of human faces to function effectively. These datasets serve multiple purposes, including:

Training AI Models – Machine learning algorithms need vast amounts of labeled facial images to recognize patterns in human faces.

Improving Accuracy – A diverse dataset helps minimize errors and biases, ensuring better recognition across different demographics.

Advancing Security Systems – Facial recognition is used in surveillance, fraud detection, and access control systems.

Enhancing User Experience – AI-driven personalization, such as facial filters in social media apps, depends on high-quality image datasets.

Without well-structured face image datasets, AI systems would struggle with misidentifications, leading to inaccurate results and potential security risks.

Sourcing and Preparing Face Image Datasets

1. Collecting Facial Data

Face image datasets can be sourced from:

Public datasets released by research institutions.

Crowdsourced contributions, where volunteers submit images.

Web scraping, although it raises ethical concerns.

2. Data Annotation and Labeling

For AI to understand facial features, datasets need proper annotation, including:

Bounding boxes to define facial regions.

Landmark detection to identify key facial points (e.g., eyes, nose, mouth).

Expression labeling to categorize emotions.

3. Data Augmentation

To increase dataset diversity and prevent overfitting, AI developers use data augmentation techniques, such as:

Rotating and flipping images to simulate different angles.

Adjusting brightness and contrast to handle lighting variations.

Adding artificial noise to make models more robust.

By preparing and augmenting data properly, AI models achieve higher accuracy and reliability.

Challenges in Face Image Datasets

While facial recognition technology offers incredible potential, working with face image datasets comes with significant challenges:

1. Bias in Facial Recognition

AI models trained on biased datasets may struggle with accurate recognition across different demographics. Studies have shown that some facial recognition systems perform poorly on people of color due to insufficient diversity in training data.

Solution: Ensuring datasets include ethnically and geographically diverse images helps reduce bias and improve accuracy.

2. Privacy and Ethical Concerns

Facial recognition technology raises privacy issues, especially when data is collected without consent.

Solution: Adhering to strict data protection regulations, such as GDPR and CCPA, and obtaining explicit user consent for data collection.

3. Deepfake and Security Risks

Face datasets can be misused to create deepfake videos, leading to misinformation and fraud.

Solution: Developing AI models capable of detecting synthetic images and implementing anti-spoofing techniques in recognition systems.

Addressing these challenges ensures ethical and responsible use of AI in facial recognition.

Real-World Applications of Face Image Datasets

1. Security and Authentication

Face image datasets power biometric authentication systems, such as:

Facial unlock features on smartphones.

Airport security checks using face scanning.

Fraud detection in banking and financial transactions.

2. Healthcare and Wellness

AI-driven facial analysis is used in:

Medical diagnostics, detecting conditions like Parkinson’s disease.

Mental health assessments, analyzing facial expressions for emotional well-being.

3. Retail and Personalized Marketing

Retailers use facial recognition to:

Analyze customer demographics for targeted advertising.

Enhance in-store experiences by identifying returning customers.

The ability to process and analyze facial images is transforming multiple industries.

The Future of Face Image Datasets in AI

The next phase of AI in facial recognition will focus on:

Bias-free datasets for fair and inclusive AI.

Real-time face analysis for security and behavioral insights.

AI-powered emotion recognition to improve human-computer interaction.

As technology evolves, ensuring data privacy, ethical AI use, and unbiased datasets will be critical for shaping a responsible future for facial recognition.

Conclusion

Face image datasets are the driving force behind AI-powered facial recognition and analysis. From security applications to healthcare innovations, the ability to accurately detect and analyze faces is revolutionizing industries.