Welcome to my humor blog about artificial intelligence. I write about the sometimes hilarious, sometimes unsettling ways that machine learning algorithms get things wrong.

Text

people have noticed that large language models get better at stuff like math and coding if the models have to spend time showing their work. apparently it can get excessive.

the prompt was "hi"

the eventual output was "Hi there! How can I assist you today?"

#llm#relatable though#look at it. it's got anxiety#how much extra energy does it take to run an llm this way#it's probably a lot

516 notes

Text

Rosemary’s sense of humor is so good

Did you know that back in 2021, in the days of GPT-3, she once collaborated with me on a set of neural-net-generated pigeon names? It was a very small neural net, and so they weren’t very realistic pigeon names. Her illustrations of them were excellent though. https://www.aiweirdness.com/neural-net-pigeon-breeds/amp/

Just 1.5 weeks left to preorder my new humorous book THE BIRDING DICTIONARY. US residents who preorder can fill out this form for a cute free pin!

Preorders really help authors. Thank you so much to folks who preordered and I hope you enjoy my silly, small, yet somehow still informative book!

753 notes

Text

this is literally me right now so i feel i must reblog

Can't do work, there's a cat in my lap

Basically I'm purr-crastinating

354 notes

Text

“Slopsquatting” in a nutshell:

1. LLM-generated code tries to run code from online software packages. Which is normal, that’s how you get math packages and stuff but

2. The packages don’t exist. Which would normally cause an error but

3. Nefarious people have made malware under the package names that LLMs make up most often. So

4. Now the LLM code points to malware.

https://www.theregister.com/2025/04/12/ai_code_suggestions_sabotage_supply_chain/
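One modest defense is to look at what a chunk of generated code wants to import before you install anything. Here is a minimal sketch, assuming you keep your own list of packages you have actually vetted. The function names and the package name `fastmathz` are made up for illustration, and an import name does not always match the name you would install, so treat this as a first filter rather than a real supply-chain check.

```python
import ast
import sys


def imported_top_level_modules(source):
    """Return the top-level module names that a piece of Python source imports."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                names.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            # Skip relative imports such as "from . import helper".
            if node.level == 0 and node.module:
                names.add(node.module.split(".")[0])
    return names


def unvetted_imports(source, vetted):
    """Return imports that are neither standard library nor on the vetted list."""
    # sys.stdlib_module_names exists in Python 3.10+; fall back to empty otherwise.
    stdlib = getattr(sys, "stdlib_module_names", frozenset())
    return imported_top_level_modules(source) - set(vetted) - set(stdlib)


# Hypothetical LLM output: "fastmathz" is an invented package name.
generated_code = "import numpy\nfrom fastmathz.core import solve\n"
print(sorted(unvetted_imports(generated_code, vetted={"numpy"})))
```

Anything this prints is a name to look up by hand before running an install command.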

#slopsquatting#ai generated code#LLM#yes ive got your package right here#why yes it is stable and trustworthy#its readme says so#and now Google snippets read the readme and says so too#no problems ever in mimmic software packige

15K notes

Text

reblogging as note to myself: teaspoop

What is teaspoons?

I’m training a neural network to generate cookbook recipes. It looks at a bunch of recipes and has to figure out completely from scratch - no knowledge of what English words even are - how to start generating more recipes like them. It has to start by figuring out which words are used in recipes. Here in a very early iteration, you can see the first somewhat intelligible word beginning to condense out of the network:

4 caam pruce 6 ½ Su ; cer 1 teaspoop sabter fraze er intve 1 lep wonuu s cap ter 3 tl spome. 2 teappoon terting peves, caare teatasing sad ond le heed an ted pabe un Mlse; blacoins d cut ond ma eroy phispuz bambed 1 . teas, &

It’s trying SO hard to spell teaspoon. Teaspoop. It’s hilarious. It gets it right every once in a while, apparently by sheer luck, but mostly it’s: ceaspoong, chappoon, conspean, deespoon, seaspooned, ceaspoon, tearpoon, teasoon, tertlespoon, teatpoon, teasposaslestoy, ndospoon, tuastoon, tbappoon, tabapoon, spouns, teappome, Geaspoon, leappoon, teampoon, tubrespoon… It reeallly wants to learn to spell teaspoon. There are a lot of almost-teaspoons beginning with c… maybe it’s a mixture of teaspoon and cup. There are a few others that might be a tablespoon attempt.
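One way to guess which word each near-miss was aiming for is to count single-character edits (insert, delete, substitute) between the attempt and each candidate word. This is a rough sketch of that idea using Levenshtein distance. The candidate list and the function names are my own choices for illustration, and a tie between candidates just goes to whichever is listed first.

```python
def levenshtein(a, b):
    """Count the single-character edits needed to turn string a into string b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute, or keep if equal
            ))
        previous = current
    return previous[-1]


def closest_target(word, targets=("teaspoon", "tablespoon", "cup")):
    """Return (target, distance) for the candidate word nearest to the attempt."""
    scored = ((target, levenshtein(word.lower(), target)) for target in targets)
    return min(scored, key=lambda pair: pair[1])


for attempt in ["teaspoop", "ceaspoong", "tubrespoon", "Geaspoon"]:
    print(attempt, closest_target(attempt))
```

Edit distance only sees spelling, so it cannot tell whether an attempt starting with c was really half a cup.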

Up next: pupper, corm, bukter, cabbes, choped, vingr…

2K notes

Note

i found this on twitter today: https://oasis.decart.ai/welcome and whilst i don't trust the company behind it or their motivations it has the whole dreamlike weirdness ai has sometimes but in a game (they copied minecraft, but everything feels *wrong* in a way and there's no object permanence)

this game is fascinating

if they had succeeded in exactly copying minecraft it would have been way worse than the bizarre shifting, melting-block world they got.

#neural networks#minecraft#oasis#is this peak generative video game#i feel like the goal they are working toward is very uninspiring#but maybe there will be another good and glitchy stage or two before they get too close

334 notes

Text

They trained an AI model on a widely used knee osteoarthritis dataset to see if it would be able to make nonsensical predictions - whether the patient ate refried beans, or drank beer. It did, in part by somehow figuring out where the x-ray was taken.

The authors point out that AI models base their predictions on sneaky shortcut effects all the time; they're just easier to identify when the conclusions (beer drinking) are clearly spurious.

Algorithmic shortcutting is tough to avoid. Sometimes it's based on something easy to identify - like rulers in images of skin cancer, or sicker patients getting their chest x-rays while lying down.

But as they found here, often it's a subtle mix of non-obvious correlations. They eliminated as many differences between x-ray machines at different sites as they could find, and the model could still tell where the x-ray was taken - and whether the patient drank beer.
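To see how a shortcut like this can work, here is a toy simulation. It is not the study's method or data: every number is invented, and the "model" is a single brightness threshold. The assumption is that two hospitals have different rates of beer drinking and slightly different x-ray brightness, so brightness alone ends up "predicting" beer.

```python
import random


def make_patients(n, rng):
    """Simulate (image_brightness, drinks_beer) pairs from two made-up sites."""
    patients = []
    for _ in range(n):
        site = rng.choice(["A", "B"])
        # Assumption: beer drinking is more common near site A.
        beer_rate = 0.8 if site == "A" else 0.2
        drinks_beer = rng.random() < beer_rate
        # Assumption: site A's machine produces slightly brighter images.
        mean_brightness = 0.6 if site == "A" else 0.4
        brightness = rng.gauss(mean_brightness, 0.02)
        patients.append((brightness, drinks_beer))
    return patients


def shortcut_predict(brightness):
    """'Predict' beer drinking from brightness alone, ignoring the knee entirely."""
    return brightness > 0.5


def accuracy(patients):
    """Fraction of patients whose beer habit the brightness shortcut gets right."""
    hits = sum(shortcut_predict(b) == beer for b, beer in patients)
    return hits / len(patients)


print(accuracy(make_patients(2000, random.Random(0))))
```

The score lands well above the 50% you would get from coin flipping, even though nothing in the simulated image has anything to do with beer. The classifier has only learned which machine took the picture.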

AI models are not approaching the problem like a human scientist would - they'll latch onto all sorts of unintended information in an effort to make their predictions.

This is one reason AI models often end up amplifying the racism and gender discrimination in their training data.

When I wrote a book on AI in 2019, it focused on AIs making sneaky shortcuts.

Aside from the vintage generative text (Pumpkin Trash Break ice cream, anyone?), the algorithmic shortcutting is still completely recognizable today.

2K notes

Text

the snawk

Botober day 26: The Snawk

#snawk#botober2024#botober#a close look at its mouthparts would answer some pressing questions I have about its diet

564 notes

Text

People have rightfully pointed out that my poll has no option for if you are exactly 21 years old. This was an oversight, and I have posted a corrected poll:

https://www.aiweirdness.com/corrected-poll

5K notes

Text

Botober 2024

Back by popular demand, here are some AI-generated drawing prompts to use in this, the spooky month of October!

Longtime AI Weirdness readers may recognize many of these - that's because there are throwbacks to very tiny language models, circa 2017-2018. (There are 7 tiny models each contributing a few groups of prompts; feel free to guess what they were trained on and then check your answers at aiweirdness.com)

#botober#neural networks#char-rnn#october drawing challenge#tiny language models#artisanal datasets#runs on a single macbook#i am going to make a language model that is so tiny

211 notes

Note

Are you going to do botober prompts this year?

Yes! It's happening!

#botober#aiweirdness#drawing prompts#i am writing tags when i could be posting the prompts#let me just go post the prompts now

43 notes

Text

Among the many downsides of AI-generated art: it's bad at revising. You know, the biggest part of the process when working on commissioned art.

Original "deer in a grocery store" request from ChatGPT (which calls on DALL-E3 for image generation):

revision 5 (trying to give the fawn spots, trying to fix the shadows that were making it appear to hover):

I had it restore its own Jesus fresco.

Original:

Erased the face, asked it to restore the image to as good as when it was first painted:

Wait tumblr makes the image really low-res, let me zoom in on Jesus's face.

Original:

Restored:

One revision later:

Here's the full "restored" face in context:

Every time AI is asked to revise an image, it either wipes it and starts over or makes it more and more of a disaster. People who work with AI-generated imagery have to adapt their creative vision to what comes out of the system - or go in with a mentality that anything that fits the brief is good enough.

I'm not surprised that there are some places looking for cheap filler images that don't mind the problems with AI-generated imagery. But for everyone else I think it's quickly becoming clear that you need a real artist, not a knockoff.

#ai generated#chatgpt#dalle3#revision#apart from the ethical and environmental issues#also: not good at making art to order!#ecce homo

3K notes

Text

ChatGPT can generate Magic Eye pictures!

At least according to ChatGPT.

#ai generated#chatgpt#dalle3#magic eye#more like a phone full of apps than a program that can do everything#and each of the apps is buggy

697 notes

Text

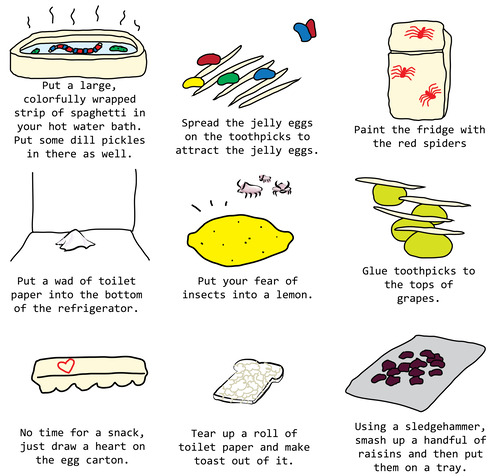

An AI's idea of a prank

If you’re a longtime reader of my blog, you’ll know that AIs are consistently terrible at humor. Whether it’s a very simple neural net learning to tell knock-knock jokes, or a more-sophisticated algorithm trained on tens of thousands of short jokes, they tend to get the rhythm and vocabulary correct, yet completely miss the point.

In a previous experiment, I trained a simple neural net on a collection of April Fools pranks and noticed that most of them end up being pranks you play on yourself. Figuring that this sort of solo prank might be useful for this year, I tried a much more sophisticated neural net, one that didn’t have to learn all its words and phrases from scratch. The neural net, called GPT-2, learned from millions of web pages. Using talktotransformer.com, I gave it a short list of pranks and asked it to add to the list.

Here are some of the neural net’s suggested pranks.

Self-prank? New hobbies? Performance art?

It often seemed like the neural net thought it was supposed to be doing its best to suggest recipes or lifehacks.

Step 10: Fun in the Shower Fill your bathtub with cold water. Take the jar of sawdust out of the freezer. Dump it into the water and stir to add some texture.

Subscribers get bonus content: There are more AI-generated pranks than would fit in this blog post, including some that are such terrible ideas I hesitated to print them here.

My book on AI explains why this is all so darn weird. You can now get it in any of these several ways! Amazon - Barnes & Noble - Indiebound - Tattered Cover - Powell’s - Boulder Bookstore (has signed copies!)

2K notes

Text

Shaped like information

hey look it's a guide to basic shapes!

The fact that even a kindergartener can call out this DALL-E3 generated image as nonsense doesn't mean that it's an unusually bad example of AI-generated imagery. It's just what happens when the usual AI-generated information intersects with an area where most people are experts.

1K notes

Text

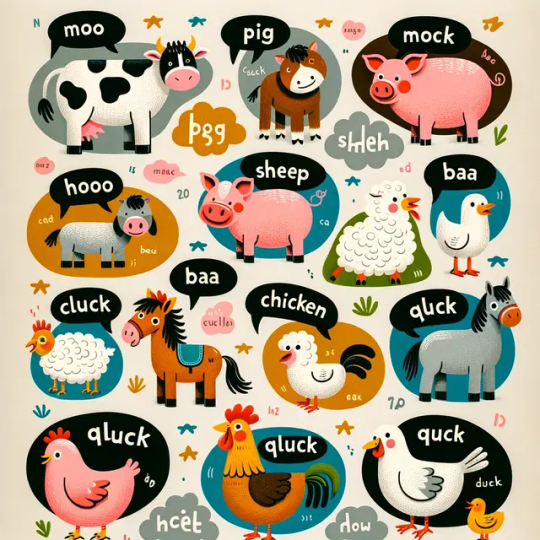

Hey kids, what sound does a wooly horse-sheep make?

What about a three-legged chicken?

All you have to do is ask ChatGPT/DALL-E3, and the highest quality educational material can be yours at the click of a button.

#dalle3#chatgpt#ai generated#farm animals#animal sounds#old mcdonald had a chicken-sheep#e i e i uh oh#even a first grader can call bullshit on ai here

546 notes
