Week 8 - CertUtil and key length

I actually did this earlier in the term but did not post about it.

Recently, I was downloading a program and, as programs often do, it came with a hash so that the end-user could check that the program being downloaded had not been altered. I had seen this relatively often but only really started thinking about it after taking this course.

So I decided to look up HOW to actually verify this hash value. I believe that even if most end-users knew the purpose of this hash (they probably don't), they definitely wouldn't know how to check it.

To check the hash on Windows, the certutil command can be used (through either PowerShell or Command Prompt). The method is outlined below:

Open the terminal of your choice (either PowerShell or Command Prompt on Windows)

Type the command in the format below (if you have the WHOLE file path):

certutil -hashfile "<insert file path here>" <insert hash algorithm here>

e.g. certutil -hashfile "C:\Users\<enter username here>\Downloads\<file.exe to be checked>" SHA256

Alternatively, you can use the “cd” command to navigate to the correct folder and then just use the file name (rather than the full file path)

e.g. certutil -hashfile "<file to check hash of>" SHA256
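The same check can be sketched in Python with the standard-library hashlib module, which is handy on machines without certutil. This is a minimal sketch, not a replacement for certutil: the function names `file_hash` and `matches_published` are my own, and it assumes the publisher lists the hash as hexadecimal text (possibly in upper case or with spaces).

```python
import hashlib


def file_hash(path: str, algorithm: str = "sha256", chunk_size: int = 65536) -> str:
    """Return the hex digest of a file, reading it in chunks so large downloads never sit fully in memory."""
    h = hashlib.new(algorithm)
    with open(path, "rb") as f:
        # iter() with a sentinel keeps calling f.read until it returns b"" (end of file)
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def matches_published(path: str, published: str, algorithm: str = "sha256") -> bool:
    """Compare against a published hash, ignoring letter case and any spaces in how it was printed."""
    expected = published.replace(" ", "").lower()
    return file_hash(path, algorithm) == expected
```

Usage would be something like `matches_published("setup.exe", "<hash from the download page>")`, where both arguments are placeholders.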

After I actually ran this command, I (rather stupidly) wondered why the hash looked so short. So I used the “len” function in Python to check the lengths of the SHA256 and SHA512 hashes, which were 64 and 128 characters respectively.

This puzzled me: if a character is 8 bits (1 byte), a SHA256 hash should be 32 characters long (i.e. 256 / 8) and a SHA512 hash should only be 64 characters long (i.e. 512 / 8). So I did some additional research and found that these hashes are displayed in hexadecimal format, i.e. only 4 bits per character rather than 8 (256 / 4 = 64 and 512 / 4 = 128).
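A quick way to see the difference between the raw digest (bytes) and the hex string that certutil prints is to ask hashlib for both. The `digest_lengths` helper is a hypothetical name used only for this illustration.

```python
import hashlib


def digest_lengths(algorithm, data):
    """Return (bytes in raw digest, bits in raw digest, characters in hex string)."""
    h = hashlib.new(algorithm, data)
    raw = h.digest()          # raw bytes: 8 bits each
    hex_text = h.hexdigest()  # hex text: each character encodes only 4 bits
    return len(raw), len(raw) * 8, len(hex_text)


for name in ("sha256", "sha512"):
    n_bytes, n_bits, n_hex = digest_lengths(name, b"any file contents")
    print(f"{name}: {n_bytes} bytes = {n_bits} bits, shown as {n_hex} hex characters")
```

The digest size does not depend on the input, so any file gives 32 bytes (64 hex characters) for SHA256 and 64 bytes (128 hex characters) for SHA512.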

Week 8 - Tutorial Summary and Reflection - Presentation Week!

Context

This was a shorter tutorial as Something Awesome presentations took up most of the class!

Suppose you are the friendly Major M from the base, who can see the alien A but cannot see the invisible man X. Q: What would you (M) do to get from X his report on the alien's (A's) planet? Note: consider that the report may contain information including:

Is the alien A to be trusted?

Is there anything urgent we should do based on the information you obtained in your trip to the alien planet?

Tutorial Notes

Diffie-Hellman - The problem is authentication, and as such this method is susceptible to MITM attacks.
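A toy version of the Diffie-Hellman exchange, and of why the missing authentication matters, can be sketched in Python. This is an illustration only: the small prime P, the base G and the function names are my own assumptions, and real deployments use groups of 2048+ bits or elliptic curves. The last part shows the alien A sitting in the middle and agreeing a separate key with each side, which is the MITM problem noted above.

```python
import secrets

# Toy public parameters, chosen only so the numbers stay readable.
P = 4294967291  # largest prime below 2**32 (far too small for real use)
G = 5           # public base; the key agreement maths works for any base


def keypair():
    private = secrets.randbelow(P - 2) + 1  # secret exponent in [1, P-2]
    public = pow(G, private, P)             # G^private mod P, safe to send in the clear
    return private, public


def shared_secret(my_private, their_public):
    return pow(their_public, my_private, P)  # (G^b)^a = (G^a)^b mod P


# Honest exchange: M and X derive the same secret without ever sending it.
m_priv, m_pub = keypair()
x_priv, x_pub = keypair()
print(shared_secret(m_priv, x_pub) == shared_secret(x_priv, m_pub))

# Man in the middle: the alien A intercepts both public values and substitutes its own.
a_priv, a_pub = keypair()
m_key = shared_secret(m_priv, a_pub)  # M believes this key is shared with X
x_key = shared_secret(x_priv, a_pub)  # X believes this key is shared with M
# A can compute both keys, so it can decrypt, read and re-encrypt everything in between.
print(m_key == shared_secret(a_priv, m_pub), x_key == shared_secret(a_priv, x_pub))
```

Nothing in the exchange tells M whose public value arrived, which is why a trusted third party (like a CA) or some other form of authentication is needed.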

Certificate Authority (CA) - try and create some sort of trusted third-party?

Take the “invisible man” into another room to create a shared secret. Encrypt the messages so that if a message decrypts to gibberish, you know that it is not legitimate.

However, this means that authentication is no longer an issue... but the alien can still prevent us from getting a valid message.

Asymmetric encryption? This was seen to add no value.

Issues with this

I didn’t really think about the fact that the communication was done purely in each party's head (live)... i.e. encryption is not feasible unless someone can do it in their head without the aid of a computer.

Other Groups

Merkle puzzles

Create some sort of code, e.g. get the alien to say “Oranges” if... get the alien to say “Apple” if...

Maybe use a similar idea... except the code word could be said whether the alien has told the truth or lied... So first the alien would relay the message (true or false), and then after the message you prompt the alien to say the secret code. This code would have to be re-agreed every time.

Learnings

Simply to think outside the box. Think about everything you have learnt, from Merkle puzzles to MACs to encryption, and try to see which characteristics of each technology might be useful. Then see if some sort of hybrid solution can be concocted. Every technology or idea offers something different to a situation, and several will often be needed to create a good solution. Basically, don’t assume you already know the solution (or the best way to go about things), as this limits creativity and innovation. Keep coming up with better ideas and never be satisfied with what you have.

Week 8 - Security Everywhere

Recently I stumbled upon a news article outlining an EM attack that caused widespread blackouts across Venezuela. From this, I remembered the tutorial discussion from a few weeks ago regarding threats to Australia in a “cyberwar”, with a recurring point being the security of our national infrastructure. So I thought: are we susceptible to the same types of attacks? Or at the very least, how much are we doing to protect against these sorts of threats?

I have since found an AFR article outlining the growing concern about attacks against Australian infrastructure, along with some recommendations from consulting firm Accenture (blog).

The current recommendations that I can find are:

Conducting national cybersecurity exercises (led by the ACSC)

Contingency plans (encompassing media response, backup systems/capabilities, etc) - Accenture blog

Trusted Networks - Accenture blog

Standardised risk frameworks and approaches to ensure that infrastructure utilises “best practices” - Accenture blog

My analysis:

National Cybersecurity Exercises

I think that these can be a good way to go about increasing the security of the nation’s infrastructure. These could basically be likened to the penetration testing that occurs in an organisational setting. However, it will be interesting to see how this is run... An attacker going after these systems will most likely try to disrupt the availability of these resources (much like the Venezuela attack) to create havoc. Obviously, the government/ACSC does not want to take down the nation's systems for multiple days during the exercise, so will they be able to use the level of malice that an opposing nation would? If not, maybe they won’t be testing with the “mind of an attacker”. However, having eyes on the system and putting it under scrutiny can only have positive effects in the long term, as the system will surely be hardened when flaws are found.

However, I believe that these should be run more often as the threat landscape is constantly evolving. Alternatively, maybe another organisation in the government or private sector could be responsible for testing and securing national infrastructure (or a certain scope of critical infrastructure). This constant testing would try to provide greater assurance that these systems are secure.

Contingency Plans

This will definitely help, as no matter the measures taken to secure a system, the defenders should never assume that their solution is impervious or perfect. As such, the nation should prepare for the worst and create contingency plans and potential backup capabilities for critical systems (if financially viable).

Trusted Network (Information Security Manual) and standardised risk frameworks

Utilising “best practice” can never hurt... This ensures that the systems are at least getting adequate-to-good protection against attackers. However, I have heard many industry speakers say that the question is NOT “have we been hacked?” but “how can we find the hack/exploit?”, showing that no (or at least very few) organisations’ security personnel believe that their systems are 100% secure (and rightfully so). I am sure some of these organisations also comply with the Information Security Manual... Furthermore, considering that infrastructure will likely be targeted by nation-states, I might argue that these best practices may not help much. Nation-states often have significantly more resources than a “lone attacker” (or even hacktivist groups), which would be the standard adversary for a private organisation. It is known that the NSA collects 0-day exploits, so we can assume that malicious nation-state actors would also have a wealth of 0-day exploits and resources at their disposal. An example of this is the Sony Pictures attack that took place years ago after the creation of the movie “The Interview”.

As such, best practice is definitely a good starting point, especially with a stringent framework like the Information Security Manual, as long as these certifications do not give any heightened, and likely false, “sense of security”.

Summary

Overall, this is a hard problem to solve, and I definitely don’t have all the answers, especially with so many crucial infrastructure systems that could be targeted throughout Australia. I do think that these recommendations are a step in the right direction; however, I believe that other measures should be taken rather than relying so heavily on standard “best practice” principles, especially given that the main adversaries of these systems would likely be nation-states or nation-state-sponsored hackers.

I am not sure about the feasibility/suitability of this, but one possibility might be to conduct cross-government penetration tests within the “Five Eyes” (as one flaw in the weakest link could expose confidential data from ALL of the nations in the “Five Eyes”) - this should provide at least some form of a collective goal to mitigate malicious intent. Additionally, the other members of the “Five Eyes” should at least be relatively trusted parties, as you’re willing to share important and confidential data with them.

Week 7 - End of Something Awesome - Final Update

Personal Grading (tl;dr)

Project 1 (web crawler): HD 90

Project 2 (cyber upskilling): Probably HD 83-88

Project 1 - Python Web Crawler

Updates

I have significantly improved the usability of the program by allowing a crawl to resume from where it left off! This was a lot more work than I thought it would be, but I have tested the functionality and it works!

What I have achieved over the term

High-level achievement overview: This project involved creating a web crawler that could be used in the information-gathering phase of a social engineering (or “hacking”) attempt on a specific organisation. Starting from a given page, the crawler finds new links, loads those pages, and collects any email addresses it finds. Additionally, all information is stored in an SQLite database for easy querying using SQL.
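For anyone curious how such a crawler fits together, here is a stripped-down sketch of the crawl loop described above. It is not my actual project code: the `crawl` function, the regexes, the `emails` table and the injected `fetch` parameter are all assumptions for illustration, and a real HTML parser (e.g. html.parser) would be more robust than regex. Only crawl organisations you are authorised to test.

```python
import re
import sqlite3
from collections import deque
from urllib.parse import urldefrag, urljoin, urlparse

# Deliberately simple patterns; they will miss some addresses and links.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
HREF_RE = re.compile(r'href=["\']([^"\']+)["\']', re.IGNORECASE)


def crawl(start_url, fetch, db, max_pages=50):
    """Breadth-first crawl from start_url, storing every email found in SQLite.

    fetch(url) -> str is passed in so the crawl logic can run without a network
    (in practice it could wrap urllib.request.urlopen or the requests library).
    """
    db.execute("CREATE TABLE IF NOT EXISTS emails (email TEXT PRIMARY KEY, found_on TEXT)")
    queue, seen = deque([start_url]), {start_url}
    pages = 0
    while queue and pages < max_pages:
        url = queue.popleft()
        try:
            html = fetch(url)
        except Exception:
            continue  # skip pages that fail to load
        pages += 1
        for email in set(EMAIL_RE.findall(html)):
            db.execute("INSERT OR IGNORE INTO emails VALUES (?, ?)", (email.lower(), url))
        for href in HREF_RE.findall(html):
            link, _ = urldefrag(urljoin(url, href))  # resolve relative links, drop #fragments
            if urlparse(link).scheme in ("http", "https") and link not in seen:
                seen.add(link)
                queue.append(link)
    db.commit()
    return pages
```

The `max_pages` cap is one simple way to stop the crawl from spreading indefinitely (e.g. out to social media sites).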

Overall, I believe I definitely achieved an HD grade for this project, as not only did I meet all my criteria but I went above and beyond to improve the usability of the application. I am very happy with this result, especially considering that I mistakenly assumed the project would be due at the END of week 8 (not the start), essentially giving me one less week to complete each of my projects.

Proposed Timeline

End of Week 2 - Get approval

End of Week 3 - Successfully establish a network connection using Python (probably by spoofing a web browser/sending a GET request to get a web page)

End of Week 5 - Retrieve and store the HTML in Python and be able to search through the HTML to find objects of interest (emails, hyperlinks, etc)

End of Week 6 - Be able to store the emails/information of interest in Python and make the program automatically propagate to other webpages. Test this web crawler out on multiple websites and ensure that it still works (some websites may deal with hyperlinks and webpages differently).

End of Week 7 - Be able to output the information of interest to Excel/.txt file so that this information could be used to start a spear-phishing attack.

End of Week 8 - The product is finished, clean-up code and test the final product.

Personal Marking Criteria

PS - Have a program that can get information from a single webpage but not be able to output this information to a .txt file/Excel.

CR - Have a functioning web crawler that can get information from the web (self-propagating) but not be able to output this information to a .txt file/Excel

DN - Have a functioning web crawler that can get information from the web and output this information to a .txt file/Excel

HD ACHIEVED - Have a functioning web crawler that can get information from the web and output this information to a .txt file/Excel. This web crawler has been thoroughly tested and works on a variety of different websites (regardless of how hyperlinks, etc are designed)

Project 2 - Cyber Security Upskilling

Updates

This week, I have installed a Kali Linux virtual machine and have been using it to test some of the content I have been learning.

To add to this, this week I also completed two Cybrary Labs about scanning and network discovery (allowing me to get a bit more “hands-on” experience with the commands I have been learning - in an environment that provides me with a network to scan).

I have also read THREE more chapters from the Penetration Testing Essentials textbook and written extensive blog posts on the content. The first half of Chapter 6 was very complementary to the two aforementioned Cybrary Labs, so it was combined into the same blog post.

What I have achieved over the term

High-level achievement overview: I have read 8 chapters of the Penetration Testing Essentials textbook (and have summarised most of the learnings in blog posts). Additionally, I have completed 2 online labs from Cybrary about network discovery and scanning. To complement this, I have also installed a Kali Linux VM on my laptop to practise some of the learnings from both the labs and the textbook.

As such, I believe that I have “just” scraped a high distinction or earned an 80+ (a high-band distinction) for what I have completed. However, considering that I completed this one week earlier than my schedule (as I had assumed it was due at the END of week 8), I think this leans towards an HD rather than a D.

Personal Marking Criteria

PS - Finish 6 chapters of the Penetration Testing Essentials textbook and blog about them, complete nothing else (average of one chapter a week).

CR - Finish 8 chapters of the Penetration Testing Essentials textbook, blog about them and nothing else OR learn one interesting skill AND finish 6 chapters of content from the textbook

DN - Finish 10 chapters of the textbook and blog about them OR learn one interesting skill AND finish 8 chapters of content from the textbook

HD - Finish 12 chapters of the textbook and blog about them (2 chapters a week on average) OR learn one interesting skill AND finish 10 chapters of content from the textbook OR learn one interesting skill, try implementing something technical from the learnings (blog about this) AND read 8 chapters of the textbook (with blog posts).

Week 7 - Chapter 8: Cracking Passwords

Different techniques to crack a password:

Dictionary attacks - A list of commonly used words is tested against the password. Files containing these lists of words can be downloaded online.

Brute force - trying every possible combination until you guess the correct password. If you’re performing an online attack this might not be possible as most modern websites will “lock” the account after a small number of failed login attempts.

Hybrid attack - Using a dictionary attack but also trying common substitutions (e.g. 1 = i, ! = i, 0 = o, etc) and suffixes (e.g. “123”, “1234”, “!”, etc).

Passive attacks - Sniffing network traffic to find passwords.

Offline attacks - Obtaining the credentials stored in the system. The passwords could be stored in plain text (very rare) or hashed with a weak hash function like MD5 (one would hope this is rare in this day and age, but this has been proven wrong multiple times). If the passwords are hashed but not salted (even with a strong hash), rainbow tables can be used. If the passwords are salted and hashed, brute force will probably have to be performed (the advantage here is that the attack is offline, so accounts will not be locked out and many combinations can be tried).

Social Engineering - Manipulating humans to give out information that they shouldn’t.
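The dictionary and hybrid techniques above can be sketched as a small offline cracker in Python. This assumes the stolen hashes are unsalted SHA-256; the substitution table, suffix list and function names are hypothetical examples based on the list above, not the rules of any real tool.

```python
import hashlib
from itertools import product

# Hypothetical substitution table and suffix list, based on the examples above.
SUBS = {"i": ["i", "1", "!"], "o": ["o", "0"], "a": ["a", "@"], "e": ["e", "3"]}
SUFFIXES = ["", "1", "123", "1234", "!"]


def variants(word):
    """Yield every substitution/suffix combination of a dictionary word (hybrid attack)."""
    options = [SUBS.get(ch, [ch]) for ch in word.lower()]
    for letters in product(*options):
        base = "".join(letters)
        for suffix in SUFFIXES:
            yield base + suffix


def crack(target_hash, wordlist, hybrid=True):
    """Hash each candidate and compare it with the stolen (unsalted SHA-256) hash."""
    for word in wordlist:
        candidates = variants(word) if hybrid else [word]
        for candidate in candidates:
            if hashlib.sha256(candidate.encode()).hexdigest() == target_hash:
                return candidate
    return None


stolen = hashlib.sha256(b"p@ssw0rd123").hexdigest()
print(crack(stolen, ["letmein", "password"], hybrid=False))  # plain dictionary attack
print(crack(stolen, ["letmein", "password"]))                # hybrid attack
```

The plain dictionary pass misses, while the hybrid pass recovers the password. With per-user salts the same loop still works, but each guess must be hashed together with that user's salt, which is what makes precomputed rainbow tables useless.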

Potential attack methods

Passive Attacks

Network sniffing using Wireshark (or equivalent) - Many older protocols still send passwords in unencrypted form, including FTP, Telnet, SMTP and POP3 (although many companies will have replaced or secured these with encrypted alternatives such as SSH or TLS). Additionally, newer technologies like VoIP are often susceptible to sniffing.

Man in the middle attacks - Acting as the intermediary between 2 communicating parties. You intercept all the traffic and then forward it on to the correct recipient, so that the two parties think their communication is working normally.

Active Attacks

Password Guessing - how it sounds...

Malware - including everything from viruses, trojans, ransomware to keyloggers and spyware.

Offline attacks

WinrtGen (rtgen in Kali) can be used to generate rainbow tables (for a variety of hashes including SHA-1, MD5, SHA256, SHA512).

Once these are generated, you can use rcrack_gui.exe (rcrack in Kali) to look up the hashes in a .txt file against your rainbow tables.

Non-technical attacks

Trying default passwords:

https://cirt.net/passwords

http://www.defaultpassword.us/

http://www.passwordsdatabase.com/

https://w3dt.net (has a LOT of tools, not just default passwords)

http://open-sez.me/

https://www.routerpasswords.com/

https://www.fortypoundhead.com/tools_default.asp

Guessing - ...

Stealing passwords by exploiting USB autorun:

PSPV.exe - this software exploits autorun to try and find locally stored passwords in files.

USB Rubber Ducky - as autorun is often disabled on hardened systems, this USB device can be used instead. It is recognised not as a USB storage device but as a keyboard. Because most systems automatically trust and install drivers for human interface devices (HIDs), it can type pre-programmed keystrokes to execute whatever code you want.

Week 7 - Chapter 7 - Vulnerability Scanning

Vulnerability - a weakness or lack of protection present within a host, system or environment.

Vulnerability scanners are commonly used by businesses, but can also be used by penetration testers. Basically, they are computer programs that search for specific vulnerabilities or weaknesses in anything from a single host to an entire network. These scanners commonly look for things like open ports, vulnerable code in a program, unpatched systems, etc.

Although the more sophisticated vulnerability scanners can generally check for a wide variety of existing vulnerabilities, they must be regularly updated so that their database includes the latest known attacks. However, even if these tools are up to date, they only check for known vulnerabilities, so new 0-day vulnerabilities will obviously not be found by these scans. As such, it is important not to get overconfident when these scanners don’t find a vulnerability (or don’t find any high- or critical-risk vulnerabilities)...

Recommendations:

Vendor selection is important, as every scanner has different functionality: the vulnerabilities it can detect, the types of systems it can scan, what it looks for in these scans, whether or not it provides suggestions for remediation when a vulnerability is found, its reporting capabilities, etc.

Scans should be run periodically (across all systems containing sensitive data and other important/critical infrastructure) and vulnerability scanning software should be kept up to date.

When any major change is made to a system, or a new system is being integrated into a company’s environment, vulnerability scans should be run throughout these projects to ensure that the company isn’t exposing itself to any additional risk.

For every vulnerability found, there should be documentation on how this risk can/will be mitigated (or whether the risk should be accepted).

SLAs should be created that include timeframes within which found vulnerabilities must be mitigated, e.g. critical vulns in 10 days, high vulns in 20 days, medium vulns in 45 days, low vulns in 90 days...
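As a small worked example of how such an SLA could be tracked, the sketch below turns the example timeframes into due dates and flags overdue findings. The day counts are the illustrative ones above (not a standard), and the finding IDs and function names are hypothetical.

```python
from datetime import date, timedelta

# Remediation windows in days, taken from the example SLA above (illustrative only).
SLA_DAYS = {"critical": 10, "high": 20, "medium": 45, "low": 90}


def remediation_due(found_on, severity):
    """Return the date by which a vulnerability of this severity should be fixed."""
    return found_on + timedelta(days=SLA_DAYS[severity.lower()])


def overdue(findings, today):
    """Return (id, due date) for every open finding that has passed its SLA deadline."""
    late = []
    for finding_id, severity, found_on in findings:
        due = remediation_due(found_on, severity)
        if today > due:
            late.append((finding_id, due))
    return late


findings = [("VULN-1", "Critical", date(2019, 4, 1)), ("VULN-2", "Low", date(2019, 4, 1))]
print(overdue(findings, today=date(2019, 4, 15)))  # [('VULN-1', datetime.date(2019, 4, 11))]
```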

Week 7 - Tutorial Summary & Reflection

Context

We were asked to read a few articles relating to government surveillance and think of arguments both for and against it. In class, each student was assigned to either “for” surveillance or “against” it (students did not choose their side).

I was placed in the team arguing FOR surveillance.

My Notes from class

Pro surveillance

Why the government should collect data?

· Identify people of interest to keep the community safe

· Defend against terrorism

· Smarter planning for infrastructure

· Innocent people shouldn’t have much to hide…

· Missing people 3000 children found

· Reduce crime rates

· Safety more important than privacy

Method:

· Surveillance purposes only – AND only Government institutions

· Scope of data:

o Phone metadata

o Internet usage

o Email addresses

o Facial Recognition

o Location

o Criminal Record

Thinking about ways to address common concerns

· People don't trust the government to manage data

o Keep access management logs

o Air-gapped facilities

· How many people have access to this data? – This should be logged (see above point) and in compliance with the principle of least privilege

· Inaccuracies with the technology

o Only use technology that doesn’t look for certain identifiers that are prone to bias (including skin colour, gender, etc).

o Need to be able to test software for bias and minimise it

· Repurposing of information

· Privacy concerns

o This solution would NOT BE MASS Surveillance

Final Arguments for the first round of debate

Terrorism, Crime rate down

· Type 1/Type 2 errors

· Invest in lots of R&D

· Regulate constantly

· The system can only provide information and NOT take law into its own hands

Missing person

Privacy

The other team started the debate with (we had no time to come up with counter-arguments - these are just my personal notes before speaking - although some arguments were created on the fly...)

What they said?

· Blanket data retention laws

· People are already known to authorities

o What about terrorist attacks? This might be true for criminals but not necessarily terrorists - esp with the new threat of “homegrown terror”.

· Privacy and data misuse

· Can we trust the government when we don’t know what they’re doing?

· Long-term effects of collecting biometric data is not known

Round 2

· Good intentions now… what about the future?

o This problem should be minimised as the “people” actually vote in the government

· Misidentify people

o The system only provides information… Does not act on the information so it will still go through verification processes and human hands

o Utilise an unbiased algorithm that doesn’t look for certain indicators (including skin colour, etc)

o Heavily regulated and tested

· Privacy issue

o Google… Facebook… Not the government??

· Potential for misuse

· Focussing on everyone (not just “criminals”)

· Why store data for a few years?

o When looking at threats like terrorist attacks/plots and the like, to get a complete picture of the story you need to be able to see not just super recent data, but older data as well.

Learnings

When asked which group “won” the debate and had the most convincing argument, I naturally said it was my team, which brings me to an interesting learning. If you are fighting for a particular point of view, you HAVE to believe it. As such, no matter what position you are in, you MUST consider that you have an unconscious bias FOR what you’re doing/arguing, especially if the scenario is a job, where you have a vested interest in the success of what you’re doing. This biases you (cognitive dissonance); to minimise it, sometimes you need to take a step back, remove yourself from the position you are in, and think about the issue at hand.

Week 7 - Chapter 6: Scanning and Enumeration (Part 2)

Detecting the operating system of the target system

Fingerprinting can be used to identify the operating system used by the target. This can be done passively (e.g. sniffing traffic) or actively (e.g. crafting your own packets and analysing the response). Obviously, passive techniques reduce the chance of your activity on the network being detected.

Using nmap:

nmap -sS -O example.com

Banner grabbing is a method to gain information about the services (and often the OS) running on a system. Some examples of information found and the commands used can be found here.

Trying to stay hidden or anonymous

One of the key things while performing a penetration test is to stay hidden (if replicating a real attacker). This means not being detected by an IDS/IPS... or, if you are detected, keeping your identity anonymous.

One way that this can be achieved is to use a proxy, which acts as a “middle man” of sorts and provides a variety of benefits:
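Banner grabbing itself is simple enough to sketch with Python's socket module: connect, then read whatever the service sends first. This is a minimal sketch under the assumption that the service greets clients unprompted (typical for SSH, FTP and SMTP, but not HTTP); `read_banner` and `grab_banner` are hypothetical names, and it should only be pointed at hosts you have permission to test.

```python
import socket


def read_banner(sock, max_bytes=1024):
    """Read whatever the service sends first and return it as cleaned-up text."""
    data = sock.recv(max_bytes)
    return data.decode(errors="replace").strip()


def grab_banner(host, port, timeout=3.0):
    """Open a TCP connection and return the service's greeting, or '' if it sends nothing in time."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        try:
            return read_banner(sock)
        except socket.timeout:
            return ""  # e.g. HTTP servers wait for a request before saying anything
```

For example, `grab_banner("<target IP>", 22)` would typically return an SSH version string, which hints at both the software version and sometimes the OS.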

Anonymise traffic

Can be used to filter traffic both in and out of the proxy

There are a variety of free proxies available online. You can find one by searching for “proxies” on Google, and then use it by changing the proxy settings in your browser.

Alternatively, Tor can be used (although I believe that downloading and installing this browser puts you on a “blacklist” of sorts).

Extracting Information from the data gathered

There are many different ports of interest that can be used...

Additionally some functions can be exploited, for example, SMTP servers (email servers) can be queried to verify usernames utilising the “VRFY”, “RCPT” and “EXPN” commands.

If the old NetBIOS is available on the system, it is easily exploitable using the “nbtstat” utility (on Windows). Additionally, NULL sessions could be used to gain access to certain folders on the server.

Week 7 - Learnings Update - Cybrary Network Discovery & Preliminary Scanning Labs AND Chapter 6 Scanning and Enumeration

Network Discovery Lab & Preliminary Scanning Lab

Chapter 6 - Scanning and Enumeration

There were a few different commands that were taught throughout these labs. Below is a quick and basic “cheat sheet” of most of the things covered.

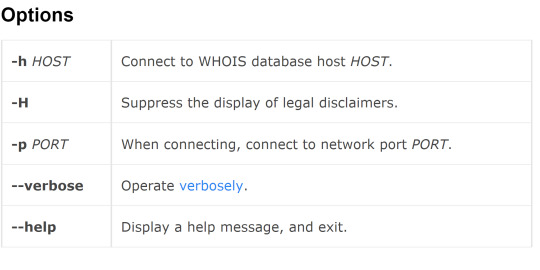

Whois - Queries the “whois” database. This finds information including domain name, IP address blocks, name servers, etc.

However, after also reading my Penetration Testing Essentials textbook, I realised that the chapter that I am up to also coincides with this content and as such, this post will be updated with the learnings from the textbook.

whois example.com

Source: https://www.computerhope.com/inix/uwhois.htm

dig (Domain Information Groper) - This command is used to query domain name servers. Some interesting commands are listed below

dig example.com +short (this will only return the IP address)

dig -x <IP Address here> +short (reverse lookup: resolves the domain name)

dig example.com SOA (start of authority)

traceroute - traces the journey that a packet sent from your machine takes to get to the server/address in question

traceroute example.com

traceroute -I example.com (using ICMP packets)

traceroute -U example.com (using UDP packets)

tcptraceroute example.com (using TCP packets)

When you have an IP address in question the easiest way to find if the host is live at this address is to use the “ping” command (which sends ICMP packets):

ping <IP address here>

However, this command only checks one IP address at a time. To perform a “ping sweep” of many IP addresses, Angry IP Scanner (angryip.org) OR nmap can be used - I will focus on nmap. NOTE: Zenmap can be downloaded, which acts as a GUI for nmap.

Nmap - used to scan a network for live hosts and open ports
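To see what a ping sweep does under the hood, here is a rough Python sketch that lists every host address in a subnet and pings each one once with the system ping command. The function names are hypothetical, the ping flags shown are the Linux and Windows ones (macOS differs), and exit codes are only a rough signal of a live host; nmap is far faster and more reliable.

```python
import ipaddress
import platform
import subprocess


def sweep_targets(cidr):
    """List the usable host addresses in a subnet (network and broadcast addresses excluded)."""
    return [str(ip) for ip in ipaddress.ip_network(cidr, strict=False).hosts()]


def is_alive(ip):
    """Send one ICMP echo request with the system ping command; True if it exits successfully."""
    if platform.system() == "Windows":
        cmd = ["ping", "-n", "1", "-w", "1000", ip]  # -w timeout is in milliseconds on Windows
    else:
        cmd = ["ping", "-c", "1", "-W", "1", ip]     # -W timeout is in seconds on Linux
    result = subprocess.run(cmd, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return result.returncode == 0


def ping_sweep(cidr):
    """Ping every host in the subnet one at a time and return those that replied."""
    return [ip for ip in sweep_targets(cidr) if is_alive(ip)]
```

For example, `ping_sweep("192.168.1.0/24")` would check the 254 host addresses of a typical home network, which is exactly the kind of range you should only sweep with permission.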

nmap -sT -vv example.com

-v = verbose output

-vv = even more verbose output

-sT = TCP connect scan (full open connect)

-sU = UDP scan

-oA = output to all formats (i.e. normal, XML, greppable)

-A = aggressive scan - DEFINITELY only use this on networks you have permission to scan
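The idea behind -sT (completing the full TCP three-way handshake and seeing whether the port accepts it) can be sketched in a few lines of Python. This is a simplified illustration, not how nmap is implemented: it checks ports one at a time and only reports open versus not open (it cannot tell closed from filtered). `tcp_connect_scan` is a hypothetical name.

```python
import socket


def tcp_connect_scan(host, ports, timeout=0.5):
    """Mimic a TCP connect scan: try a full handshake on each port and report the ones that accept."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            try:
                # connect_ex returns 0 once the three-way handshake has completed
                if s.connect_ex((host, port)) == 0:
                    open_ports.append(port)
            except OSError:
                pass  # e.g. an unresolvable host name; treat the port as not open
    return open_ports
```

Because every probe is a complete connection, this style of scan is easy for the target to log, which is why the half-open -sS scan is described as more “stealthy”.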

However, to try and be more covert different features in nmap can be utilised (there are many more packet combinations but these are a few interesting examples).

nmap -sP -vv example.com (i.e. this is the “ping sweep” mentioned earlier - it sends probes, including ICMP echo requests, to every host to test whether they are “live”; newer versions of nmap call this option -sn)

nmap -sS -vv example.com (i.e. this is a “stealth scan” as it only half-opens a TCP connection - however it is less accurate than the full open scan)

nmap -sA -vv example.com (i.e. this just sends an “ACK” packet without the original “SYN” request - if this is filtered out by the target system it often means that there is a firewall - if you receive an “RST” response, the firewall is either not present or not filtering these packets)

As far as firewalls are concerned, a potential way to get past them is to fragment the packets you’re sending so that the whole packet cannot be as easily determined by the firewall. This can be done by adding the “-f” flag to nmap.

To save the output to files (.gnmap and .txt):

Example: nmap -sT -oG host_list.gnmap example.com

grep open host_list.gnmap | <perform transformations here> > new_file.txt

To add additional information into the previous file:

echo "<new information to insert>" >> new_file.txt
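The grep step can also be done in Python when you want structured results rather than raw lines. This sketch assumes nmap's greppable (-oG) layout, where a host line contains a tab-separated “Ports:” field and each port is written as port/state/protocol/owner/service/rpc/version/. The sample line in the usage note is made up, and `open_ports_from_gnmap` is a hypothetical name.

```python
import re

# port/state/protocol/owner/service/... ; state can be e.g. "open|filtered" for UDP
PORT_ENTRY = re.compile(r"(\d+)/([\w|]+)/(\w+)/[^/]*/([^/]*)/")


def open_ports_from_gnmap(lines):
    """Map each host to its open (port, protocol, service) tuples from nmap -oG output."""
    results = {}
    for line in lines:
        if not line.startswith("Host:") or "Ports:" not in line:
            continue  # skip comment lines and "Status: Up" lines
        host = line.split()[1]
        ports_field = line.split("Ports:", 1)[1].split("\t", 1)[0]
        for port, state, proto, service in PORT_ENTRY.findall(ports_field):
            if state == "open":
                results.setdefault(host, []).append((int(port), proto, service))
    return results
```

Usage: `open_ports_from_gnmap(open("host_list.gnmap"))` on the file produced by the -oG command above.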

Week 7 - Stack Frames and their components

To understand buffer overflows and reverse engineering I need to learn more about stack frames. So this post will be dedicated to learning more about these and the components commonly listed in the stack frames of a function.

Firstly, when a function is called, its parameters are the first items to be added to the stack (the caller pushes them, usually in reverse order, using “push”). Secondly, the return address (the address of the next instruction in the calling function) is pushed onto the stack by “call”. Thirdly, in the function prologue, the caller's ebp is pushed onto the stack and ebp is then set to the current esp, so that ebp marks the base of the new frame. After this, space for the local variables is reserved by decreasing esp (e.g. “sub esp, N”), and the local variables are written into that space using “mov”.
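To make the layout concrete, here is a small Python model of the steps above. It is a simplified sketch assuming 32-bit x86, the cdecl convention, 4-byte stack slots and a frame pointer; real compilers may align the stack, omit the frame pointer or reorder locals. `build_frame` and all the addresses are hypothetical values chosen for illustration.

```python
def build_frame(args, return_address, saved_ebp, local_sizes, esp=0xBFFF0000, word=4):
    """Simulate a 32-bit cdecl call. The stack grows downwards, so each push lowers esp."""
    memory = {}
    # 1. The caller pushes arguments right-to-left, so the first argument ends up lowest.
    for i, arg in reversed(list(enumerate(args))):
        esp -= word
        memory[esp] = f"arg{i} = {arg}"
    # 2. `call` pushes the return address.
    esp -= word
    memory[esp] = f"return address = {hex(return_address)}"
    # 3. Prologue: `push ebp` saves the caller's frame pointer, then `mov ebp, esp`.
    esp -= word
    memory[esp] = f"saved ebp = {hex(saved_ebp)}"
    ebp = esp
    # 4. `sub esp, N` reserves space for locals, which are later written with `mov`.
    for name, size in local_sizes:
        esp -= size
        memory[esp] = f"local {name} ({size} bytes)"
    return ebp, esp, memory


ebp, esp, memory = build_frame([1, 2], 0x08048456, 0xBFFF0010, [("buf", 16)])
for addr in sorted(memory, reverse=True):
    print(f"ebp{addr - ebp:+d}: {memory[addr]}")
```

The printout shows arguments at positive offsets from ebp (ebp+8, ebp+12), the return address at ebp+4 and a local buffer at ebp-16. Writing past the end of that buffer moves towards ebp+0 and ebp+4, which is why a buffer overflow can overwrite the saved ebp and the return address.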

Week 7 - Java vs C

I am completing this course as a general education course to expand my technical understanding and skillsets. I am from an Information Systems degree where we do NOT learn any C (or much at all about how a computer or software actually works)...

In my tutorials for the past 2 weeks, my tutor has talked about reverse engineering and also about memory corruption attacks (specifically, buffer overflow attacks). Although I understood most of the discussions in class I am going to revise and learn more about some foundational topics that will aid my understanding of the course (which will be split up into 3 posts).

This post will be about the differences between the language I have learnt, Java, and C, the language taught throughout the UNSW School of Computer Science.

Java vs C (some of the major differences)

Object-orientation - Java is an OO programming language, whereas C is a procedural programming language.

Interpreted or compiled - Java source code is compiled into bytecode, which the JVM then interprets (and often just-in-time compiles) at run time, whereas C is compiled directly into a native executable file.

Storage management - there is automatic garbage collection in Java, whereas in C, all data storage and the release of such storage must be done manually.

Write once, run “anywhere” - Java bytecode runs on a JVM, meaning that any machine with a JVM installed can run a Java program (and the vast majority of systems have Java installed, or at least can have it installed). C programs, however, must be recompiled for each target platform, and code that relies on OS-specific libraries may need to be written differently depending on the OS or application type you’re programming for.

Week 6 - Something Awesome Update

Updates

The web crawler now dumps to an SQLite database. This solution was ultimately chosen due to the flexibility of SQL. For example, if the crawler spreads too far (e.g. to YouTube or other social media websites) and returns a lot of false-positive emails, these can easily be filtered out using SQL (Structured Query Language).
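As an illustration of that kind of filtering, the sketch below uses Python's built-in sqlite3 module. The schema and rows are hypothetical (they are not the two tables from my application): it keeps only addresses on the target's domain and drops anything found on YouTube pages.

```python
import sqlite3

db = sqlite3.connect(":memory:")
# Hypothetical schema: one row per email, with the page it was found on.
db.execute("CREATE TABLE emails (email TEXT PRIMARY KEY, found_on TEXT)")
db.executemany(
    "INSERT INTO emails VALUES (?, ?)",
    [
        ("jane@example.com", "https://example.com/team"),
        ("press@example.com", "https://example.com/media"),
        ("someone@gmail.com", "https://www.youtube.com/watch?v=abc"),
    ],
)

# Keep addresses on the target's domain and drop anything found on social media pages.
rows = db.execute(
    """
    SELECT email FROM emails
    WHERE email LIKE ?
      AND found_on NOT LIKE ?
    ORDER BY email
    """,
    ("%@example.com", "%youtube.com%"),
).fetchall()
print([r[0] for r in rows])
```

Using `?` placeholders rather than pasting strings into the query keeps the filter values separate from the SQL itself.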

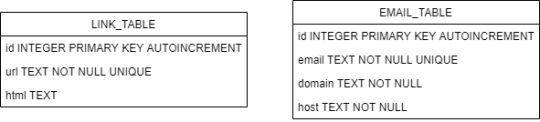

The data structure of my application currently includes 2 tables:

The only outstanding task I would like to complete is an adjustment to how the program works (although I am not sure if I will be able to get it working before the deadline). The adjustment would improve the usability of the application and allow it to “pick back up” the crawl where it ended last time.

Currently, I am 1 week ahead of schedule. I have achieved my HD grade but I am trying to further improve upon my deliverable.

Proposed Timeline

End of Week 2 - Get approval

End of Week 3 - Successfully establish a network connection using Python (probably by spoofing a web browser/sending a GET request to get a web page)

End of Week 5 - Retrieve and store the HTML in Python and be able to search through the HTML to find objects of interest (emails, hyperlinks, etc)

End of Week 6 - Be able to store the emails/information of interest in Python and make the program automatically propagate to other webpages. Test this web crawler out on multiple websites and ensure that it still works (some websites may deal with hyperlinks and webpages differently).

End of Week 7 - Be able to output the information of interest to an Excel/.txt file so that this information could be used to start a spear-phishing attack.

End of Week 8 - Finish the product, clean up the code and test the final product.
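The Week 3 and Week 5 milestones (fetch a page, then search the HTML for emails and hyperlinks) can be sketched with Python's standard library alone. This is a minimal illustration, not my crawler's actual code: the User-Agent string, the email regex and the helper names (`fetch`, `extract`, `LinkCollector`) are assumptions, and the simple regex will miss some valid addresses and match some junk.

```python
import re
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urljoin

# Deliberately simple pattern; real-world email syntax is far looser.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


class LinkCollector(HTMLParser):
    """Collects absolute URLs from the href attribute of <a> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links like "/team" against the page URL.
                    self.links.append(urljoin(self.base_url, value))


def fetch(url):
    # Send a GET request with a browser-like User-Agent (hypothetical value).
    req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def extract(html, base_url):
    parser = LinkCollector(base_url)
    parser.feed(html)
    emails = sorted(set(EMAIL_RE.findall(html)))
    return emails, parser.links


sample = '<p>Contact jane@example.com</p><a href="/team">Team</a>'
print(extract(sample, "https://example.com/"))
# (['jane@example.com'], ['https://example.com/team'])
```

On a live page this would be used as `extract(fetch(url), url)`; the extracted links are what a crawler would queue up next to make it self-propagating.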

Personal Marking Criteria

PS - Have a program that can get information from a single webpage but not be able to output this information to a .txt file/Excel.

CR - Have a functioning web crawler that can get information from the web (self-propagating) but not be able to output this information to a .txt file/Excel.

DN - Have a functioning web crawler that can get information from the web and output this information to a .txt file/Excel.

HD - Have a functioning web crawler that can get information from the web and output this information to a .txt file/Excel. This web crawler has been thoroughly tested and works on a variety of different websites (regardless of how hyperlinks, etc are designed).

Project 2 - Cyber Security Upskilling

Updates

I have read the 5th chapter of the penetration testing textbook. Not only was this blog post significantly longer than my previous ones, but I also tried out many of the tools and websites mentioned throughout the chapter to learn how they could potentially be used.

It was quite scary how many websites exist for conducting people searches in the US...

Since I have deviated from my original plan, it is admittedly hard to gauge my success... However, I believe that I have probably achieved a PS-CR as of the end of this week.

Next week (the final week), I intend to work towards a DN or above! Regardless of the grade achieved, I will continue to research and learn more about the world of security.

Personal Marking Criteria

PS - Finish 6 chapters of the Penetration Testing Essentials textbook and blog about them, complete nothing else (average of one chapter a week).

CR - Finish 8 chapters of the Penetration Testing Essentials textbook, blog about them and nothing else OR learn one interesting skill AND finish 6 chapters of content from the textbook.

DN - Finish 10 chapters of the textbook and blog about them OR learn one interesting skill AND finish 8 chapters of content from the textbook.

HD - Finish 12 chapters of the textbook and blog about them (2 chapters a week on average) OR learn one interesting skill AND finish 10 chapters of content from the textbook OR learn one interesting skill, try implementing something technical from the learnings (blog about this) AND read 8 chapters of the textbook (with blog posts).

Week 6 - Learnings Update - Chapter 5 - Gathering Intelligence

Gathering intelligence about a company you’re trying to penetrate is very important as it can allow you to understand a variety of things including:

Technical Information - Things like the operating systems they use, versions of the web servers they use, applications used, IP address ranges.

Administrative information - Things like employee names, directories, phone numbers, vendor information, corporate policies.

Physical information - locations of facilities, social interactions, details of people and where they go.

This can be done in a few ways:

Passive - information-gathering strategies that result in minimal to no engagement with your target.

Active - These attacks involve engaging your target (potentially alerting them if it goes wrong) [e.g. calling the company/social engineering]

OSINT - open-source intelligence (the textbook lists this as a third, separate category, but personally, I would class it as a passive technique)...

Searching Websites

A good place to start is to view the company's website. The site can be downloaded (mirrored) for offline analysis using BlackWidow on Windows or the wget command on Linux and Windows. For example:

wget -m https://<website name>

-m is for “mirror”, which downloads the whole website recursively

wget -r --level=1 -p https://<website name>

-r is for “recursive”, --level=1 limits the recursion to one level of links from the starting page, and -p downloads the page requisites (images, stylesheets, etc) needed to display each downloaded page

Finding hidden subdomains is another good way of trying to find additional information. A useful tool for this is www.netcraft.com (which can analyse a domain and find its subdomains). To find old versions of a website, or pages that no longer exist, use the Wayback Machine (web.archive.org).

Using Google

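The cache: operator displays the version of a page stored in Google's cache rather than the current version.

The link: operator lists webpages that contain a link to the specified webpage.

The allinurl: and allintitle: operators (which require every search term to appear in the URL or the page title respectively) may also be useful.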

Additionally, the Google Hacking Database can be found at https://www.exploit-db.com/google-hacking-database.

People Searching

There are many sites that can be used for “people searching” but most of these are only relevant to the US.

Location Searching

Shodan.io - a search engine for internet-connected devices, useful for finding things such as insecure webcams! NOTE: Keep in mind that even if a camera or device found this way uses a default password and you get into the system, this is STILL “being a dick” and is breaking the law (as you are not authorised to access the system).

Google Street View

Social Networking Websites

Tumblr, Twitter, Google+, Facebook, LinkedIn, Instagram, etc

Echosec is an interesting website that allows you to see the location of posts from a specific person in a certain area.

Other Areas of Interest

Financial services - if a company is publicly listed, there will be a lot more public information about it on finance websites. Additionally, you may find out about some of your target's partner firms.

Job boards - Looking at these may reveal information about the systems they use (through the in-house skills they require). They may also hint at current weaknesses (e.g. if they are looking for system admins, cyber architects, etc, those areas may currently be understaffed).

Emails - email-tracking tools such as WhoReadMe and PoliteMail.

Technical information - the whois command, which looks up a domain's registration records (e.g. registrar, name servers and registration dates)!
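Under the hood, whois is a very simple protocol (RFC 3912): the client opens a TCP connection to port 43 on a WHOIS server, sends the query followed by CRLF, and reads the reply until the server closes the connection. Below is a minimal Python sketch of that exchange. The default server `whois.iana.org` is an assumption (it typically replies with a `refer:` line naming the registry's WHOIS server to ask next), `parse_whois` is a hypothetical best-effort helper since response formats vary between registries, and the sample response is made up.

```python
import socket


def whois_query(query, server="whois.iana.org", port=43, timeout=10):
    """Send a raw WHOIS query (RFC 3912) and return the full text response."""
    with socket.create_connection((server, port), timeout=timeout) as sock:
        sock.sendall((query + "\r\n").encode("utf-8"))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection when it is done
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_whois(text):
    """Best-effort parse of 'key: value' lines; repeated keys are collected."""
    fields = {}
    for line in text.splitlines():
        if ":" not in line or line.lstrip().startswith("%"):
            continue  # skip blank lines and comment lines
        key, _, value = line.partition(":")
        key, value = key.strip().lower(), value.strip()
        if key and value:
            fields.setdefault(key, []).append(value)
    return fields


# Made-up, simplified response for illustration only.
sample = """% made-up response
domain:       EXAMPLE.TEST
refer:        whois.example.net
nserver:      NS1.EXAMPLE.NET
nserver:      NS2.EXAMPLE.NET
"""
print(parse_whois(sample)["refer"])  # ['whois.example.net']
```

With network access, `parse_whois(whois_query("example.com"))` would show which server to query next, which is essentially what the whois command-line tool automates.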

Week 6 - Web Crawler Update

I have updated my program to be able to dump all of the emails and links into an SQLite database (by importing sqlite3).

I have also written a few basic queries to retrieve the data from the database; however, I need to think of some more helpful statistics I could use...

Additionally, I would like to add the ability to start where I left off (if the crawl is cancelled part-way through or the program has an issue). This was not in my proposed marking criteria, but I think that this would improve the usability of my program.
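One possible way to support resuming, sketched under assumptions: store the crawl frontier (URLs still to visit) and the set of visited URLs in SQLite alongside the results, and commit after every page, so a restarted run simply picks up whatever is left in the frontier. The table names, the `fetch_links` callback and the in-memory database are hypothetical; in practice you would pass a file path to `sqlite3.connect` so the state survives a crash.

```python
import sqlite3


def open_state(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS frontier (url TEXT PRIMARY KEY)")
    conn.execute("CREATE TABLE IF NOT EXISTS visited (url TEXT PRIMARY KEY)")
    return conn


def seed(conn, url):
    # INSERT OR IGNORE makes re-seeding on a restart harmless.
    conn.execute("INSERT OR IGNORE INTO frontier VALUES (?)", (url,))
    conn.commit()


def crawl(conn, fetch_links, max_pages):
    """Visit up to max_pages URLs, committing after each so progress persists."""
    for _ in range(max_pages):
        row = conn.execute("SELECT url FROM frontier LIMIT 1").fetchone()
        if row is None:
            break  # nothing left to crawl
        url = row[0]
        for link in fetch_links(url):
            already = conn.execute("SELECT 1 FROM visited WHERE url = ?", (link,))
            if already.fetchone() is None:
                conn.execute("INSERT OR IGNORE INTO frontier VALUES (?)", (link,))
        conn.execute("DELETE FROM frontier WHERE url = ?", (url,))
        conn.execute("INSERT OR IGNORE INTO visited VALUES (?)", (url,))
        conn.commit()


# Hypothetical link graph standing in for real HTTP requests.
GRAPH = {"a": ["b", "c"], "b": ["a", "c"], "c": []}
links_of = lambda u: GRAPH.get(u, [])

conn = open_state()
seed(conn, "a")
crawl(conn, links_of, max_pages=1)   # pretend the crawl was cancelled here
crawl(conn, links_of, max_pages=10)  # a later run resumes from the frontier
print(sorted(u for (u,) in conn.execute("SELECT url FROM visited")))  # ['a', 'b', 'c']
```

Because `SELECT ... LIMIT 1` has no ORDER BY, the visit order is arbitrary; adding an auto-increment id column and ordering by it would give breadth-first order if that matters.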

The update and comparison to my marking criteria will be posted on Sunday.

Week 6 - Tutorial Summary & Reflection

Context

We were to imagine that we were a part of a cyber-war against one of the superpower nations. The first step was to consider some of the attacks that could threaten our country. The findings are listed below (both from class and my preparation work):

Part 1 - Listing Attacks

Assets

Information

Power

People

Infrastructure

Attacks

Sabotage of:

Communications networks

Public infrastructure (think power, water, etc) – Stuxnet, etc

DDoS attacks on computer networks and ISPs – this could lead to further information and communication breakdowns

If they target the private sector instead:

Banking outages and economic instability (think about if a nation-state hit every major bank with ransomware, essentially taking down a country’s financial and monetary flows)

Mass surveillance & espionage

Military data

R&D (both government & private)

Finding a country’s weaknesses (links to the public infrastructure point stated previously)

Uncovering certain information about a country’s inhabitants (their beliefs, habits, etc)

Fake news and miscommunication:

Potentially use fake news or propaganda to turn an enemy's citizens against their own government (weakening the enemy state)

Alternatively, use this to strengthen your cause and make people defect from their own country

Rigging political debates, elections, etc

Threat of allied intelligence

Five Eyes

Governments creating mandatory backdoors into communications

Interfering with fibre optic cables

DNS Root server

Transatlantic cable

Hack the power grid

Spear phishing attack on the government to blackmail for secret information

Take control of automated systems including drones

Compromise military communications

Send false data

EA-18G Growlers have electronic warfare suites built in

False flag attacks (framing other people)

Attribution on the internet is difficult

Information warfare through the release of confidential emails

DNC email server records

DoS (jam) entire communication frequencies

Part 2 - 10 recommendations

My Group Assets

Government Secrets

Public Infrastructure + utilities (universities, power grid)

Military assets

People

International relationships

Economy

Recommendations (priority order)

Independent regulation of security for both the private and public sectors (to harden all networks against cyber attacks). As part of this, the ACSC would be given the power to enforce these regulations and would receive increased funding. It would also conduct mandatory, external penetration testing of critical organisations.

Educate the people about cybersecurity to make them less vulnerable to phishing. This would also include educating them about topics like fake news and how to identify a fake news article (to try and stop miscommunication and keep national stability)

Utilise air-gapped facilities for critical infrastructure (both public and private), for example military, banking, water and power systems (if they need to be networked at all), etc.

The worst recommendation we could come up with was to cut all political ties and become a neutral state like Switzerland (lol).

Other Groups (priority order)

Group 1

Take the power grid off the internet

Protect transatlantic cables

Build our own network

(Worst Idea) Don’t use autonomous devices - embrace the dark age

Group 2

Backup communication systems for military

Fallback procedures for military

Backup power and a more resilient power grid

(Worst Idea) Ravens... “they can’t be hacked”

Group 3

Remove major infrastructure from the internet

Don’t outsource infrastructure

Have shared interests with the superpower in question (stop the attack from happening)

(Worst Idea) Implement the Chinese Firewall

Learnings

Not sure if this is always a bad thing, but my group really focussed on low-level ideas and implementation details... This prevented us from quickly generating ideas during this case study. For the exam at the very least, I should remember to think more high-level, as worrying about implementation details leaves me stuck on the HOW instead of the WHAT.

Week 6 - Security Everywhere

A key issue in Australia at the moment is that of mandatory “backdoors”. Essentially, the government wants to be able to read encrypted messages sent by individuals to aid the fight against terror and to increase the security of the country.

However, Amazon has recently come out criticising this move, saying that a security vulnerability cannot be created for just one party or technology... Creating a vulnerability for one party weakens the security of the technology for all parties.

I believe that mandatory backdoors are NOT the way forward. Firstly, I agree with Amazon in that the weaknesses created could (and almost definitely will) be exploited by malicious parties. Secondly, if applications with large user bases are forced to implement these measures (think WhatsApp, Telegram, etc), the parties of interest to the government will probably stop using these technologies, as it would be public knowledge that the government can access the messages sent on these platforms with a warrant. The only thing that this Act would achieve is moving criminal groups to lesser-known messaging applications (or even lead criminal communities to CREATE THEIR OWN messaging applications, which won’t be weakened by the government regulations in question).

As such, the only impact I can see this Act having is reducing the freedoms of the people and making these currently secure messaging applications less popular among regular people and criminals alike.

Week 5 - Security Learnings Update (Something Awesome project 2)

This will be an update on the things I have learned throughout studying the Penetration Testing Essentials book.

Chapter 2

This chapter mainly covered the basics of networking. This included a look at:

The OSI Model - a seven-layer model of how data flows from an application, down through the network stack and onto the cabling, and is ultimately received by an application on someone else's computer.

TCP vs UDP - TCP is connection-oriented: a connection is first established using a “three-way handshake” (SYN, SYN-ACK, ACK), and the sequence and acknowledgement numbers in the TCP header are then used to verify that all packets arrive (retransmitting any that are lost) and are delivered in the correct order. UDP, on the other hand, does NOT acknowledge packets or ensure that they reach the destination and leaves this to upper-level protocols (but it is more lightweight in its communication).

Ports - common well-known ports and the protocols that typically use them (e.g. 22 for SSH, 80 for HTTP and 443 for HTTPS).

IDS and IPS - intrusion detection and intrusion prevention systems on the network you're attacking; an IPS may actively block your movement throughout the network, while an IDS will at least notify defenders that something odd is occurring in the network.
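The TCP three-way handshake described above can be illustrated with a small worked example of the sequence and acknowledgement numbers. This is a toy model: the initial sequence numbers (ISNs) are made-up constants, whereas real TCP stacks choose them randomly, and flags, window sizes and options are ignored. `Segment` and `three_way_handshake` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Segment:
    flags: str           # e.g. "SYN", "SYN-ACK", "ACK"
    seq: int             # sender's sequence number
    ack: Optional[int]   # next sequence number the sender expects, if ACK is set


def three_way_handshake(client_isn: int, server_isn: int) -> List[Segment]:
    # 1. Client -> server: SYN carrying the client's initial sequence number.
    syn = Segment("SYN", seq=client_isn, ack=None)
    # 2. Server -> client: SYN-ACK with its own ISN, acknowledging client_isn + 1
    #    (a SYN consumes one sequence number even though it carries no data).
    syn_ack = Segment("SYN-ACK", seq=server_isn, ack=syn.seq + 1)
    # 3. Client -> server: ACK acknowledging server_isn + 1; the connection is open.
    ack = Segment("ACK", seq=syn_ack.ack, ack=syn_ack.seq + 1)
    return [syn, syn_ack, ack]


for seg in three_way_handshake(client_isn=1000, server_isn=5000):
    print(seg)
# Segment(flags='SYN', seq=1000, ack=None)
# Segment(flags='SYN-ACK', seq=5000, ack=1001)
# Segment(flags='ACK', seq=1001, ack=5001)
```

UDP has no equivalent exchange: the sender simply transmits datagrams, which is part of why it is more lightweight.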

Chapter 3

This chapter mainly talked about cryptography.

Public-key (asymmetric) cryptography (e.g. RSA)

Symmetric-key cryptography (e.g. Triple DES, AES)

Hashing (e.g. SHA-1, SHA-2, MD5)

This chapter re-covered a lot of what I had already learnt throughout the course.
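Two quick illustrations of the Chapter 3 topics, using only Python's standard library. The first ties back to my Week 8 CertUtil post: hex digests use 4 bits per character, so a SHA-256 digest is 256 / 4 = 64 characters long. The second is textbook RSA with tiny, well-known example primes (p = 61, q = 53); it is deliberately insecure (no padding, trivially factorable modulus) and only shows the maths. Symmetric ciphers such as AES are not in the standard library, so they are left out here.

```python
import hashlib

# --- Hashing: hex digest length = digest bits / 4 ---
data = b"hello"
for name in ("md5", "sha1", "sha256", "sha512"):
    h = hashlib.new(name, data)
    print(name, h.digest_size * 8, "bits ->", len(h.hexdigest()), "hex chars")

# --- Textbook RSA with toy numbers (NOT secure) ---
p, q = 61, 53
n = p * q                 # modulus: 3233
phi = (p - 1) * (q - 1)   # 3120
e = 17                    # public exponent, coprime with phi
d = pow(e, -1, phi)       # private exponent (modular inverse of e), needs Python 3.8+

message = 65
ciphertext = pow(message, e, n)    # encrypt with the public key (e, n)
recovered = pow(ciphertext, d, n)  # decrypt with the private key (d, n)
print(n, d, ciphertext, recovered)  # 3233 2753 2790 65
```

The hash loop prints 32, 40, 64 and 128 hex characters for MD5, SHA-1, SHA-256 and SHA-512 respectively.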

Chapter 4

This chapter focussed on the penetration testing lifecycle:

Creating the scope of the penetration testing exercise (this can include things like infrastructure, applications, physical security, social engineering, etc)

Gathering information (OSINT and public information, DNS information, common weaknesses in the OS/setup the company is using, etc)

Scanning and Enumeration (e.g. port scanning, vulnerability scanning, ping sweeping, etc)

Getting access to the system (penetrating the system)

Maintaining access

Covering the tracks of the attack

Documenting your findings so that issues can be fixed

The textbook also covered EC-Council's framework.
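The scanning and enumeration step can be illustrated with the simplest kind of port scan, a TCP connect scan: attempt a full TCP connection to each port and treat a successful connect as “open”. This is a minimal sketch using Python's socket module (the function name and timeout are my own assumptions); it is far slower and noisier than a dedicated tool like nmap, and, as with Shodan, it should only be pointed at hosts you are authorised to test. The demo only scans a port it opens itself on localhost.

```python
import socket


def connect_scan(host, ports, timeout=0.5):
    """Return the subset of ports that accept a TCP connection (connect scan)."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception
            if sock.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports


# Demo against ourselves: listen on a free port chosen by the OS, then scan it.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen()
port = listener.getsockname()[1]
result = connect_scan("127.0.0.1", [port])
print(result)  # a one-element list containing the listening port
listener.close()
```

Because a connect scan completes the full three-way handshake, it is easy for an IDS to log, which is one reason tools like nmap also offer stealthier scan types.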
