
The Journey of the Narrator

This is a master post of my journey creating this project, with references to past related projects:

1) Space, Image and Sound

2) Hypernarration > Unsteady

3) Hypernarration and IoT, An idea on a grander scale

4) Oh what a few days can change.

Here is also a link to the playtesting playlist:

Playtesting the Narrator

And if you really want to go deep, here are links to posts from up to two years ago for the projects that led me to this one:

Narrator Resources


Oh what a few days can change.

User testing is really freakin’ helpful by the way. Do it as soon as you can, learn from my mistakes.

Bligh and Matthew have already made some pretty decent blogs about this testing, so I'm gonna try not to make too many of the same points since there's a chance you already know about it. However, there are some points I’d like to address in terms of future improvements, since they are on my mind and relevant to studio work.

Solo vs Cooperative experience:

In the times I've talked about the Hypernarration Project, the thoughts have always stemmed from a solo, personally customised experience (e.g. you would open the door of your fridge and it plays a custom tune, something funny or over the top). The Narrator was something you’d tailor to yourself and honestly, I can still see that being entirely viable.

However, a new branch has opened. Cooperative operation is an avenue I was kinda ignorant not to consider. It’s literally one of the few membership services I have: I pay for the privilege to be cooperative online with my PlayStation. Of course turning your friends into a drumkit would be fun. Duh.

I'm not trying to be overcritical of my past choices; I had my reasons and they still hold. But if I follow the path to rework this project and make it more cooperative, I will run into a few problems:

Firstly and most importantly, what happens to the Adaptive Audio?

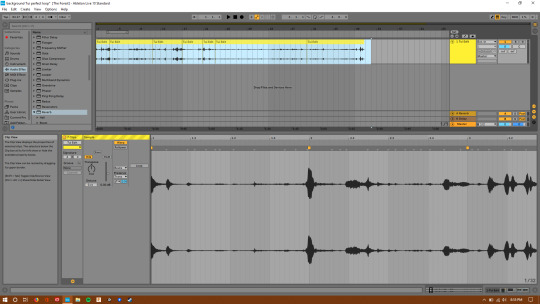

Adaptive audio in the context of The Narrator branches into two areas: sound effects (drumkits) and the adaptive music (overarching theme tune).

Having the adaptive audio tracks pushed into a less important role to allow more playful interactions with the drumkits means the original ambition of “bringing adaptive audio out of videogames and into the real world” also loses focus somewhat. The interconnection won’t be as strong as I first hoped.

Secondly, how do we treat personalisation?

Giving the project to two people at the same time would make the resulting sound more general than I first expected. Is that a good thing?

Lastly, the playfulness changes the outer appearance and interaction of the project.

It makes less sense to have an interactive object that is more fiddly and shaped to subvert expectation (e.g. a tree) when the focus becomes purely the sound and not the relation to the object. In our playtests the users would even connect themselves to the objects so that they themselves became the interaction, shown here:

[Embedded YouTube video]

There’s a lot of reflection to be had here. I really gotta work on what's to come from all of this. I may be coming across as overly critical and upset with the outcome, but I just want to say that's not the case. The fact that so many people were having fun with our project is a huge relief and I’m overjoyed with the outcomes. I just feel a need to be critical of the implications of these results and what comes next.


Hypernarration and IoT, An idea on a grander scale.

Video games attach sound to a suite of events, places, interactions and occurrences.

Hypernarration is the act of enhancing real-life interactions with a network of digital reactions.

Put two and two together and I believe there is conceivably unending potential for personal audio customisation at home, work and beyond, by harnessing the new power of IoT devices. Compare it to Hue Bulbs™. To set up the bulbs a “Bridge” is needed. This Bridge acts as a hub for all light connections, and each bulb can be changed via a phone app through the hub.

Now imagine that instead of lightbulbs, there are various sensors with customisable audio cues. You open the fridge and it sounds like a chest from The Legend of Zelda, you turn on the shower and it sounds like a calm waterfall, and you can tell when one specific roommate is arriving home because the Imperial March plays. The possibilities are entertaining, engaging and humorous.
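To make the hub idea a bit more concrete, here is a minimal sketch in Python of how a "bridge" might map sensor events to audio cues. Everything in it is an assumption for illustration: the `NarratorHub` class, the sensor names and the sound file names are hypothetical, and no real sensor or audio hardware is involved. It only shows the lookup from an event to a cue.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class NarratorHub:
    """Hypothetical hub that maps (sensor, event) pairs to audio cues."""

    cues: Dict[Tuple[str, str], str] = field(default_factory=dict)

    def register(self, sensor_id: str, event: str, sound_file: str) -> None:
        # Attach (or overwrite) the cue for one sensor event.
        self.cues[(sensor_id, event)] = sound_file

    def handle(self, sensor_id: str, event: str) -> Optional[str]:
        # Return the cue to play, or None when nothing is configured.
        return self.cues.get((sensor_id, event))


# Example setup using the cues described above (all names are made up).
hub = NarratorHub()
hub.register("fridge_door", "opened", "zelda_chest.wav")
hub.register("shower", "on", "calm_waterfall.wav")
hub.register("front_door_roommate_1", "arrived", "imperial_march.wav")

print(hub.handle("fridge_door", "opened"))
print(hub.handle("fridge_door", "closed"))
```

Keeping the mapping in one place, like the Hue Bridge does for bulbs, would mean each cue could be swapped from an app without touching the sensors themselves.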


Hypernarration > Unsteady

As this project has evolved over time, so has its predicted outcome and potential. Unsteady was a name for a project that was more visually inclined, and now we find ourselves without digital visuals entirely.

This project is almost a form of Hypernarrative (Casado de Amezua, 2016), which describes “Digital objects, usually texts, with a branching structure where the reader continually makes choices between sequential plot paths, so it offers multiple narratives that operate together as a network instead of linear sequences.”

But what our project offers is not a branching narrative; rather, it is a potential network of branching reactions. A narration to your story. But there is no word for this, no word for an act of instantaneous narration of the most non-linear of plots. Sure, our project is currently limited to the scope of playful reactions and over-exaggerations. This isn’t yet a classic example of narration. Morgan Freeman himself isn’t describing your feelings or what is happening at the moment. But never say never ;]

But, I want to put forth this description of our overarching concept.

Hypernarration

Hypernarration is the act of enhancing real-life interactions with a network of digital reactions. That’s a working definition.

Casado de Amezua, E. (2016). Hypernarrative. [online] Medium. Available at: https://medium.com/dictionary-of-digital-humanities/hypernarrative-f4f1924a2668 [Accessed 1 Oct. 2019].


Texture Troubles

Being back after the break this week has got me more focused on the tasks I need to complete. I’ve needed more motivation to blog and try harder in general, and not wanting to let my teammates down is a reason that's taken a bit too long to realise. But it’s unsurprisingly effective.

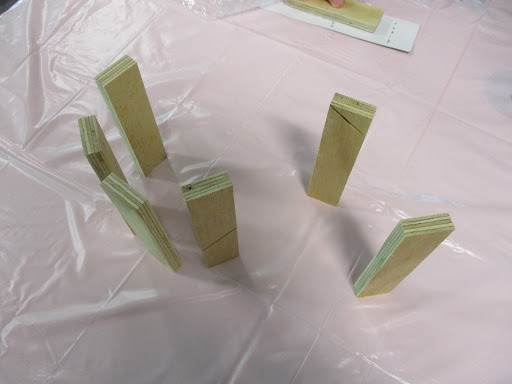

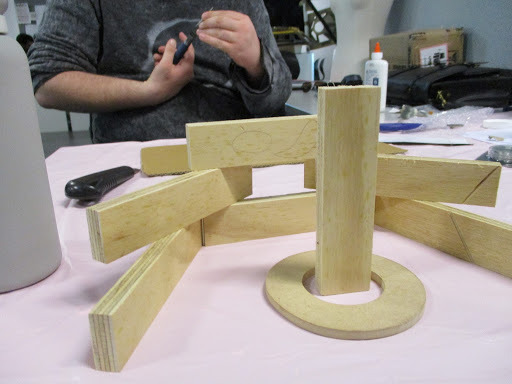

This week we’ve broken back into the Physical/Visual side of the project. Kevin and James have been hard at work getting the installation into VR space. The installation, which has once again moved on from planks, has taken a natural shape once more.

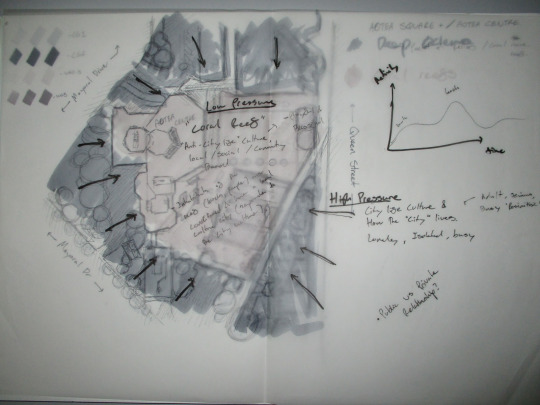

As shown in James’ illustrations, the new Coral Shapes resemble a bonsai tree with big disks. I personally love this design for both its function and form. It’s otherworldly while retaining this organic and familiar feel. The shape itself can provide shelter from sunlight and rain to our target demographic of lunch-eaters.

However, this more open design does come at a sacrifice: it’s much harder to control what is hidden from the observer. The way we combat this is by creating an “enclosed” formation, with an exterior that is more in theme with the stonework of Aotea Square and an interior that keeps the Coral Aesthetic.

While this was being replicated in Unity by Kevin and James, I worked on creating some “swatches” of fabric/textures to add the feeling element to the demonstration.

After spending far too many hours poorly hand-stitching the main texture, I ran out of time and had to keep my other ideas simple. However, I’m happy with my outcomes.

The first from the left is what I expect to use as a basis for the texture of the coral. The small, cell-inspired look is as close as I can get to replicating the intricate and organic look of real coral. My only gripe is that it both looks and feels like a bunch of mini pillows. I was hoping to subvert expectation and that test isn’t clever in that regard. In the middle is a regular sponge that's been torn to feel rougher than it was. I didn’t think much further than relating sponge to the coral reef theme and just hope it could push the feel into that mindset. It could be interesting wet too? And lastly, my favourite to feel: literally a bag made out of the same material as the first, filled with pebbles and stuffing. It is the closest I’ve gotten to something that feels different than it looks, and it’s fantastic.

I’m excited to see how this all comes together on Monday.


Space, Image and Sound.

With Unsteady we are planning to achieve a symbiosis of Sound, Imagery and Space using adaptive audio, real life items and interactive inputs.

We hope this will provide a dynamic interaction that explores cooperation and interpretation of an experience.

Unsteady, in short, is an auditory enhancement of everyday life, using techniques such as adaptive audio, human circuits and the subversion of expectations.

Below I will talk about the current state of the Audio, Visual and Interactive elements of the project and discuss how we got there.

Audio-wise, my objectives have stayed mostly the same throughout the project. I was initially inspired by this hilarious and amazing video by Noodle on YouTube (Link Here). It got me into a deep dive of Adaptive Audio and why it's so great for building atmosphere and creating a seamless audio experience.

My current plan in the overarching scope of the project is to have an Adaptive backing track that informs the player of mood and setting using the tuned background. The goal here is to create something seamless, and if it is ultimately unnoticeable, I did my job well.

The Visual aspect of the project has by far undergone the most changes over time, originally not being part of the project at all. I was guilty of treating the visual aspect as a tack-on. When Matthew wanted to join Bligh and me, I was unsure of what else would need to be covered, so we decided to add a complementary visual aspect, Matt having decent experience with visuals.

This plan included a visualiser that would morph to the music and hopefully add to it. However, this was a pretty misguided decision. We want to take the Adaptive Audio out of the screen and into the real world. This choice defeated the original purpose of the project.

Hence we’ve taken a step back to address this oversight. Bligh explains the process well in his post here. We’ve kinda ended up making Visual and Interactive one and the same now that we plan to use interactive objects. Speaking of:

From the start, our project has been pretty physically engaging. Our main input was the


Big ol’ Coral (Interaction & Play)

After a few weeks you’d kinda hope you have a grip on the ideas you have. I’m comfortable enough where I am now to communicate the ideas and themes that have been circling through my group.

Firstly, we are focused on the idea of amplifying the feeling of being in a state-of-mind altering space. Specifically, the mental shift from being on a path to being at a destination. James initially explained Aotea Square to Kevin and me in a very informed way. It made us question how the space is used, ending on an analogy that the space mimics a Coral Reef.

So how does that work?

I feel like even these weeks down the track the Coral Reef analogy still holds up as a core idea because of how cut and dried it is. The analogy goes: Aotea Square is a bastion of calmer, social, time-out energy. Aotea Square, much like a coral reef, is one of few open-spaced destinations amongst the rush and fast pace of the city surrounding it.

In order to activate this space, we need to be aware of the predetermined role it has. The Coral Reef analogy is a quick and thoughtful way to convey this.

How are we activating the space?

To be critical of our process thus far (week 5), we’ve been stuck internally on the process of polishing our concept with little to no outsider input to inform our decisions. This week was carried by the ideas left with us by Ben, who broke down our concept until we could create a meaningful product that informs the more ‘playful’ side of the project we had so far been lacking.

Being brief and incremental about our conceptual process would be beneficial, so I’ll break it down here:

Initially, we began expanding the coral reef idea into physical space, ideally creating objects of unexpected behaviour/texture to invoke a child-like sense of discovery and play. To be blunt, a strange thing in Aotea Square would interest people?

We took the idea of Discovery deeper and gave the foreign objects a source and origin. As you progressed into the space towards the source, the amount and randomness of the objects would also grow. We called this the “Annihilation method”, after the movie of the same name, which has a similar progression system.

Then, progressing the idea of an origin, a tower. A point to reach. A destination. We didn’t know what to create, but we were in the initial steps when we reached the point of Ben’s input.

Ben threw us the best kind of spanner. Together we thought more deeply on the idea as a whole: “why give away the end? Why show people what to expect when you can give them a taste and an urge to figure it out?”

This was what we were missing. I personally feel as if we were focused almost entirely on the outcome and not the process of obtaining it. So, now that we are here. What have we got?

The current outcome:

We are basing our new structure heavily on points addressed by Ben, partially obscuring the inside of the structure with an outside that blends into the surroundings. Keeping to the theme of Progression, we also want to incorporate this idea of a Fibonaccian approach, perhaps even a direct “Golden Ratio”.

Wherever we end up, we have a strong start. Kevin and James worked well on the structure with little input from me.

I attempted to focus on what the content inside the structure is, and I've found going back to my old project Lumi to be insightful. Link Here


Another one half done.

Pīwakawaka at open studio was satisfying to see. Despite my grievances, there was something insightful about seeing my family enjoying and congratulating me on something I had considered an at times disappointing process. Despite not having a story, we had the research, concept and backing of significant people in AUT's Cultural department. Despite not having a bunch of levels to test problem-solving, we had tight controls that were fun enough alone. Our core idea was to create a New Zealand game, and even though what we created had not even a hint of the story we wanted to showcase, a Fantail danced on the screen and the sounds of a New Zealand forest filled our section of open studio, and people noticed. Although I personally didn’t receive any critical feedback, the general response was positive. Viewers were even pointing out the fantail to others. Having the audience connect with something straight out of New Zealand was our goal and we still achieved that. I have learnt much that will inform my next project, and proper planning is something I will be focusing on next time.


27 Different Sound Files.

When making the sounds I divided what was needed into three categories: Backing Tracks, Forest Ambience and Fantail.

Click any of these to access the sounds.

Backing Tracks

By using long, drawn-out tones and keeping the tracks I created shallow, I left lots of room to be filled out with actual sounds of the forest, something I had discussed briefly with Clint. Just enough to be a theme, not enough to overwhelm.

Forest Ambience

The forest ambience was definitely the most challenging to manage. I went through multiple versions trying to replicate the space. For example, the same sound in a dense forest is different from one in a more open space. Reverb and mono are mostly what account for this. Fine-tuning is tedious but worth the result.

Fantail

Much like the forest ambience, the sounds for the fantail were originally from the Department of Conservation, which allows public use with modification. I took a fantail recording and, after processing it to rid the file of background noise, I chopped up the file to arrange the different calls into kinds of “Emotion” for the character.

Implementing the sounds into the game was a last-minute process. I really do wish there had been more time to fine-tune it, but the end result has a good mix!


Making Maps

Mechanics are hard to build maps around in a fluid state if you don’t know what could be cut. A whole portion of a map can feel purposeless if you design it around a mechanic that no longer exists. Looking back through my map designs I can already see this.

Parts 2, 4 and 5, if included in our final product, would have been without purpose.

2. Both hazards and limited dashes were removed from the mechanics. A majority of puzzle solving comes from having limits and consequences, and finding creative ways to utilise what you have to solve them. With unlimited dashes, we would need to create artificial boundaries and invisible walls on the map.

4. The wind was never created or utilised as a mechanic. I saw it as a way to, if need be, create a more “natural” invisible wall and have an interesting pushback and variance in the flying mechanics.

5. Pecking was implemented, but never as something to destroy objects, only to pick them up. If the cave system had been implemented, it would only have been to play around in.

All of the mechanics and dangers weren’t really helpful in the long run, but this whole implementation made me think deeper about what we can do to create boundaries that felt justified in a 2D space. A fun exercise in practice.

However, in the end, my level design was scrapped as others took over the job to create a demo level and I was left to sound design (the other skill I had originally brought to the table). This was decided on as a group and I still stand by the fact that with the time we had left, this was a good decision.


Maori Influence (By white people)

From the start, I felt like the content the group wanted to include in the story was walking a thin line. The concepts had always been heavily Maori inspired and despite growing up, learning and living with the culture I’ve been worried about the appropriation of it. At face value, we are a bunch of white people making a game about Maori Culture. I suggested we stay inspired by the myth (you can’t truly have an NZ game without some Maori influence) and limit trying to directly portray something we have a limited understanding of and be as respectful as possible.

We concluded that if we want to tell a Maori story we absolutely need to consult Maori officials with a deeper understanding of myths and thoughts on how to implement these themes. We ended up meeting with learning and teaching adviser Herewini Easton at CfLAT for consultation, thanks to a meeting set up by Melissa. I will leave a link to Matt’s post on the meeting here.

*Photo provided by Melissa Gordon.

After this meeting, I had a clearer idea of how to go about my sound design: the sounds of the forest should take priority.

We were still doing everything right. Even with the reassurances, I still couldn’t help but worry this wasn’t our place, and I still gotta work on that.


Building a Game Backwards

I've been asked to build the world before mechanics when mechanics should shape the world.

Level Design is definitely not an easy task, but it was something I hoped to take on board and accomplish along with Sound Design.

It made sense at the time. I had the most experience in the field with last year's game, and it is the part of game development I am easily the most passionate about. But there is kind of an unspoken golden rule to game design: you gotta have solid mechanics before you even start making levels. “Let the mechanics shape the world, not vice versa.” In the process of making this game, I had been asked to do the vice versa. It’s the one major thing I never planned for. I never had this problem with Realm of Order because its base mechanics were already there and already solid. What do I even do the first few weeks?

Mechanics and Level Symbiosis.

The person I spent the most time working with was Max, easily the hardest and most consistent worker in the group, to his credit. He built the game from the bottom up and I tried (and sometimes failed) to be there to help him with whatever I could, despite my still limited knowledge of Unity. I mostly wanted to be there to make sure I knew what features would be there, so I could include diversity in my maps and we could have a better flow.


Transmedia Narratives, a summary

Transmedia Narratives are definitely a new and important way to experience a story.

My past experience with online Twitter narratives and YouTube mysteries proved that fact right out of the gate. But being introduced to the massive, sprawling phenomenon the SCP community created was definitely next level for me. It was so easy to get lost in the pages and pages of creatures, things and places, each with their own sometimes dangerous oddities. Even just becoming a part of that is an extension of the transmedia narrative. The “Beautiful Mind” project is my extension: it is the SCP Foundation testing the behaviours of close-to-life human A.I. interacting with dangerous SCPs, to counteract the real-life loss that occurs much too often.

I really do enjoy the premise I've created, as both an extension and a possible franchise of its own, making accessible puzzles and hoping people enjoy the mystery. But if there was one thing I wish I had tried, it would be creating a connection between the real and online worlds. It could’ve been as simple as sticking up a QR code in town. Cicada 3301 accomplished this around the world, in the first portion of one of their second puzzles, and they have the mythos of being arguably one of the internet's biggest mysteries. Something out of my experience range, but definitely a goal to aim for.

Below are all of my posts related to the class:

From Clues to Action.

Connecting the Dots.

Is Steganography too hardcore?

Where I'm at with Transmedia.

The Layout Formula For Jonah.

Creating a Fake A.I.

I really do wish I had taken this idea into a group project. I feel like it had more potential than I alone could hope to accomplish at this time. The idea itself and the processes I’ve gone through can, I believe, be translated into other mysteries or product interest. The experience is valuable, but having somebody else to bounce ideas off and refine them with is something I would absolutely change.


From Clues to Action.

Previously I touched on the problems I had come across with Twitter and the move to Instagram. Visually, I never quite expected to develop clues quite as layered as I have. I had mostly intended the whole narrative to be more story-based than the tag-along, follow-the-trail kinda setup I have now.

But the more I think about it the more it makes sense.

Originally I placed Jonah’s Story as an Extension type of transmedia: the extension of the pre-existing SCP “Franchise”. I planned to create a story that built on the world so many other creators have come together to create. But doesn’t it make sense to give my story the ability to have its own franchise? Opening up my methods to more than just blog-like updates will give me more reach and more opportunity to dive down the Jonah rabbit hole, and that's really exciting to me.

The story feature is the second and last step in the lifecycle of Jonah. Given some time to figure out the SCP in the posts, players are now able to interact with Jonah in the classic choose-your-own-adventure style, shown in the examples below.


Connecting the Dots.

I’m here to spoil the current state of the ARG so miss this one if it’s something you want to figure out yourself. The Instagram can be found here.

However, below is the process I have developed to aid players in the story, which I do consider important.

Currently, on Instagram, I’ve run through some loops. Definitely not as many as I would've wanted to, but things happen.

Needless to say, I've had a good response. People have interacted with Jonah which has helped me figure out the character a bit better.

But as I get into that, let me run you through what is currently active.

Starting with Jonah’s Instagram itself, I have managed to amass a small following of mostly classmates and a few people totally in the dark about the project:

This is the first post leading into the latest SCP Interaction. A glitched-up photo of a cat saying “Hello there! Hows it going //scale 1##9//?”. The name of the cat is a mystery that must be solved to identify the current SCP.

The next clue is then posted. Due to the downscaling and unpredictable quality Instagram uploads photos with, hiding data in the code of a photo is mostly unachievable. Thus I’ve come to QR Codes. They’re entirely visible and, because of their widespread use, much more accessible. The only downside is that not many alternatives exist other than MaxiCode or DataMatrix, which in my opinion have the same problem. And a QR Code to solve every puzzle seems like it would become quite boring over time.

However, being prompted to listen to Jonah’s favourite song you scan the QR Code.

You’ve been taken to a SoundCloud page of Jonah’s, the song itself is a heavily altered cover of David Bowie's “The Man Who Sold the World”. This song doesn’t help with the current SCP hunt, but it does add to Jonah’s personal story arc. “As the persona in the song has an encounter with a kind of doppelgänger, The lyrics are also cited as reflecting Bowie's concerns with splintered or multiple personalities.” This whole area of thought is somewhere I’d like to explore with “Jonah”.

However, more importantly, the QR Code leads you onward, with the description “//the notes//” you continue down the rabbit hole.

On a new page on Music Theory, the mystery cat’s name is solved, the missing name is revealed, “Hello there! Hows it going //scale 1##9// Dorian?”

With this new information, you can search in the SCP Foundation Wiki, “Dorian” and the second result shown is the relevant SCP, SCP-607 is a large grey male American Shorthair cat of indeterminate age with a collar reading "Dorian".

On SCP-607′s page, you now have access to potentially life-saving information for Jonah as he interacts with the latest experiment. But, of course, this is all optional.

This is the typical logic route that stems from proper posts on Jonah’s wall. The actual outcomes and voting happen through Instagram’s Stories, which I will demonstrate in the next post.


Is Steganography too hardcore?

Steganography, as mentioned before, is the practice of concealing a file, message, image, or video within another file, message, image, or video.

This is difficult to accomplish without considering my audience.

Who is my audience?

Reflecting on the ideas and methods I've recently had, my audience was a potential group of clever internet Cryptographers and Steganographers. But that isn't the SCP community, and such an audience is highly unlikely. The SCP community is much more casual, so shouldn’t I make my content accessible and fun, much like the rest of the SCP catalogue?

Yes and No.

No, because I lose some of the core ideas from one of my inspirations, Cicada 3301, whose very story can only come from those brilliant Cryptographers and Steganographers that actually made it through the gauntlet.

Yes, because it’s worth losing the long drawn out, sometimes unsolved mysteries for a story anyone with a bit of time can follow and have genuine fun with. Relative to my own skills as well? I don’t have the experience or skills necessary to create such a complex web of crypto-clues, so why not make it more accessible?

I do believe that traditional and transmedia narratives are one and the same, they both follow a story. With access to the “linear paper trail” of a transmedia narrative, it too can be read like a book.


Where I’m at with Transmedia.

Just to tie together the bits I’ve blogged on, the crisis I mentioned earlier is the whole problem of trying to find an audience. I do have a few options.

I could reach out to the wider SCP community and try to get some level of activity, with the same idea for the ARG (Alternate Reality Game) community. Or I could potentially move the Twitter narrative as a whole onto a different platform, the platform of choice being Instagram.

Firstly, you might be wondering why I’m heavily relying on some kind of activity. The reason is that the formula shown on my previous blog is dependent on multichoice, like one of those books with varying paths, except the reader is the followers. Otherwise, I’ll be stuck in a perpetual loop of terminating Jonah due to lack of interaction.

Moving to Instagram seems to be the best choice for immediate effect. I can’t be sure that anyone from the wider community would become interested within the timeframe needed for me to progress the story in any way before the end of the semester, whereas I have asked classmates to join in, to the chime of “I don’t have twitter, but I could do Instagram.” So I have responded.

Now, moving to Instagram is not without its challenges. Luckily for me, they do have a poll system, much like Twitter. There is also a written element. However! Instagram as a form of media revolves around photos, something I had never really considered. I do believe I could make it work and add a deeper element. Enter Steganography.
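As a rough illustration of why the format depends on activity, here is a minimal Python sketch of a poll-driven story step. The story nodes, option labels and the `next_node` function are all made up for the example and are not the actual formula from my earlier post. The only parts taken from the description above are that followers vote on a path and that no interaction means Jonah is terminated.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical story graph: each node lists its poll options and where they lead.
STORY: Dict[str, Dict[str, str]] = {
    "start": {"approach the cat": "cat", "stay back": "observe"},
    "cat": {},
    "observe": {},
}

TERMINATED = "terminated"


def next_node(current: str, votes: List[str]) -> str:
    """Pick the next story node from follower votes on the current poll."""
    options = STORY[current]
    # Ignore anything that is not one of the poll options.
    valid = [vote for vote in votes if vote in options]
    if not valid:
        # Nobody interacted, so the loop ends with Jonah being terminated.
        return TERMINATED
    # Ties go to whichever option was voted for first.
    winner, _count = Counter(valid).most_common(1)[0]
    return options[winner]


print(next_node("start", ["approach the cat", "stay back", "approach the cat"]))
print(next_node("start", []))
```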

Steganography is the practice of concealing a file, message, image, or video within another file, message, image, or video. Sounds scary, but the tool https://www.openstego.com/ could make it a lot more possible. Taking the idea to a more basic level, I could visually corrupt an image and hide information in it, using programs like:

Image Glitcher

Image Glitch Tool

or even a Hex Editor?!

I can attempt to hide information in multiple ways. So my progress now is planning the move to the new platform.
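For a sense of what hiding information can look like in practice, here is a minimal Python sketch of one common steganography technique, least-significant-bit (LSB) embedding. This is an assumption on my part and not necessarily how OpenStego or the glitch tools above work. To stay self-contained it operates on a plain sequence of pixel bytes rather than a real image file, and the 4-byte length header is my own choice for the example.

```python
def embed(pixels: bytes, message: bytes) -> bytes:
    """Hide message in the lowest bit of each pixel byte."""
    # Prefix the message with a 4-byte length so it can be recovered later.
    payload = len(message).to_bytes(4, "big") + message
    # Flatten the payload into bits, most significant bit first.
    bits = [(byte >> shift) & 1 for byte in payload for shift in range(7, -1, -1)]
    if len(bits) > len(pixels):
        raise ValueError("cover data is too small for this message")
    out = bytearray(pixels)
    for index, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the payload bit.
        out[index] = (out[index] & 0xFE) | bit
    return bytes(out)


def _read_bytes(pixels: bytes, start_bit: int, count: int) -> bytes:
    """Rebuild count bytes from the lowest bits, starting at start_bit."""
    result = bytearray()
    for byte_index in range(count):
        value = 0
        for bit_index in range(8):
            value = (value << 1) | (pixels[start_bit + byte_index * 8 + bit_index] & 1)
        result.append(value)
    return bytes(result)


def extract(pixels: bytes) -> bytes:
    """Recover a message previously hidden with embed()."""
    length = int.from_bytes(_read_bytes(pixels, 0, 4), "big")
    return _read_bytes(pixels, 32, length)


cover = bytes(range(256)) * 2  # stand-in for real pixel data
stego = embed(cover, b"Dorian")
print(extract(stego))
```

Each byte changes by at most one, which is why the result looks identical to the eye. It is also why any recompression or downscaling by a platform would likely destroy the hidden message.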
