Note

I always suggest Fierce Deity stuff because I'm ridiculous that way but a FD Zora might be fun, actually.

BUT YOU’RE SO CORRECT EVERY TIME WITH THE FIERCE DEITY


Text

how well would the Undersiders get along with the Woe, do you think? What about Breakthrough?

Been a while since I’ve done this:

AMA on Worm, Ward, Twig, Pact, Pale, Claw, and Seek



Note

Is AWAY using its own program or is this just a voluntary list of guidelines for people using programs like DALL-E? How does AWAY address the environmental concerns of how the companies making those AI programs conduct themselves (energy consumption, exploiting impoverished areas for cheap electricity, destruction of the environment to rapidly build and get the components for data centers, etc.)? Are members of AWAY encouraged to contact their gov representatives about IP theft by AI apps?

What is AWAY and how does it work?

AWAY does not "use its own program" in the software sense—rather, we're a diverse collective of ~1000 members that each have their own varying workflows and approaches to art. While some members do use AI as one tool among many, most of the people in the server are actually traditional artists who don't use AI at all, yet are still interested in ethical approaches to new technologies.

Our code of ethics is a set of voluntary guidelines that members agree to follow upon joining. These emphasize ethical AI approaches (preferably open-source models that can run locally), respecting artists who oppose AI by not training styles on their art, and refusing to use AI to undercut other artists or to work for corporations that similarly exploit creative labor.

Environmental Impact in Context

It's important to place environmental concerns about AI in the context of our broader extractive, industrialized society, where there are virtually no "clean" solutions:

The water usage figures for AI data centers (200-740 million liters annually) represent roughly 0.00013% of total U.S. water usage. This is a small fraction compared to industrial agriculture or manufacturing. For example, golf course irrigation alone in the U.S. consumes approximately 2.08 billion gallons of water per day, which is about 7.87 billion liters per day, or roughly 2.87 trillion liters annually. This makes AI's water usage roughly 0.007% to 0.026% of golf course irrigation alone.
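As a rough check on the golf comparison, here is a minimal sketch that converts the quoted 2.08 billion gallons per day into liters per year and compares it with the quoted 200-740 million liter range for AI data centers. The only number not taken from the text is the standard US-gallon-to-liter conversion factor, and the function names are purely illustrative.

```python
# Figures quoted in the text.
GOLF_GALLONS_PER_DAY = 2.08e9          # U.S. golf course irrigation
AI_LITERS_PER_YEAR = (200e6, 740e6)    # low and high estimates for AI data centers

# Standard conversion factor (not from the text): liters in one US gallon.
LITERS_PER_US_GALLON = 3.785411784


def golf_liters_per_year():
    """Convert the daily golf irrigation figure into liters per year."""
    return GOLF_GALLONS_PER_DAY * LITERS_PER_US_GALLON * 365


def ai_share_of_golf_percent(ai_liters_per_year):
    """AI data center water use as a percentage of golf irrigation."""
    return 100 * ai_liters_per_year / golf_liters_per_year()


if __name__ == "__main__":
    print(f"Golf irrigation: {golf_liters_per_year():.3e} liters per year")
    for ai_liters in AI_LITERS_PER_YEAR:
        share = ai_share_of_golf_percent(ai_liters)
        print(f"AI at {ai_liters:.0e} liters/year: {share:.4f}% of golf irrigation")
```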

Looking into individual usage, the average American consumes about 26.8 kg of beef annually, which takes around 1,608 megajoules (MJ) of energy to produce. Making 10 ChatGPT queries daily for an entire year (3,650 queries) consumes just 38.1 MJ—about 42 times less energy than eating beef. In fact, a single quarter-pound beef patty takes 651 times more energy to produce than a single AI query.
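The beef comparison can be reproduced from the quoted figures alone. The sketch below is a back-of-the-envelope check, not a measurement: it takes the four numbers stated above at face value, and the only outside value is the definition of the pound in kilograms. The function names are illustrative.

```python
# Figures quoted in the text.
BEEF_KG_PER_YEAR = 26.8         # average American beef consumption
BEEF_MJ_PER_YEAR = 1608.0       # energy to produce that beef
QUERIES_PER_YEAR = 10 * 365     # 10 ChatGPT queries a day
QUERIES_MJ_PER_YEAR = 38.1      # energy for those queries

# Standard definition (not from the text): kilograms in one pound.
KG_PER_POUND = 0.45359237


def beef_mj_per_kg():
    """Energy per kilogram of beef implied by the yearly figures."""
    return BEEF_MJ_PER_YEAR / BEEF_KG_PER_YEAR


def query_mj():
    """Energy per single query implied by the yearly figures."""
    return QUERIES_MJ_PER_YEAR / QUERIES_PER_YEAR


def yearly_ratio():
    """How many times more energy a year of beef takes than a year of queries."""
    return BEEF_MJ_PER_YEAR / QUERIES_MJ_PER_YEAR


def patty_ratio():
    """How many queries' worth of energy one quarter-pound patty represents."""
    patty_mj = 0.25 * KG_PER_POUND * beef_mj_per_kg()
    return patty_mj / query_mj()


if __name__ == "__main__":
    print(f"Beef: {beef_mj_per_kg():.1f} MJ per kg")
    print(f"One query: {query_mj() * 1e6 / 3600:.2f} Wh")
    print(f"Year of beef vs. year of queries: {yearly_ratio():.1f}x")
    print(f"Quarter-pound patty vs. one query: {patty_ratio():.1f}x")
```

Both ratios land where the text puts them: a little over 42 for the yearly comparison and a little over 651 for the single patty.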

Overall, power usage specific to AI represents just 4% of total data center power consumption, which itself is a small fraction of global energy usage. Current annual energy usage for data centers is roughly 9-15 TWh globally—comparable to producing a relatively small number of vehicles.

The consumer environmentalism narrative around technology often ignores how imperial exploitation pushes environmental costs onto the Global South. The rare earth minerals needed for computing hardware, the cheap labor for manufacturing, and the toxic waste from electronics disposal disproportionately burden developing nations, while the benefits flow largely to wealthy countries.

While this pattern isn't unique to AI, it is fundamental to our global economic structure. The focus on individual consumer choices (like whether or not one should use AI, for art or otherwise) distracts from the much larger systemic issues of imperialism, extractive capitalism, and global inequality that drive environmental degradation at a massive scale.

They are not going to stop building the data centers, and they weren't going to even if AI never got invented.

Creative Tools and Environmental Impact

In actuality, all creative practices have some sort of environmental impact in an industrialized society:

Digital art software (such as Photoshop, Blender, etc.) generally draws 60-300 watts while running, depending on your computer's specifications, which works out to 0.06-0.3 kWh per hour of use. An hour of work is typically more energy than dozens, if not hundreds, of AI image generations (maybe even thousands if you are using a particularly low-quality model).

Traditional art supplies rely on similar if not worse scales of resource extraction, chemical processing, and global supply chains, all of which come with their own environmental impact.

Paint production requires roughly thirteen gallons of water to manufacture one gallon of paint.

Many oil paints contain toxic heavy metals and solvents, which have the potential to contaminate groundwater.

Synthetic brushes are made from petroleum-based plastics that take centuries to decompose.

That being said, the point of this section isn't to deflect criticism of AI by criticizing other art forms. Rather, it's important to recognize that we live in a society where virtually all artistic avenues have environmental costs. Focusing exclusively on the newest technologies while ignoring the environmental costs of pre-existing tools and practices doesn't help to solve any of the issues with our current or future waste.

The largest environmental problems come not from individual creative choices, but rather from industrial-scale systems, such as:

Industrial manufacturing (responsible for roughly 22% of global emissions)

Industrial agriculture (responsible for roughly 24% of global emissions)

Transportation and logistics networks (responsible for roughly 14% of global emissions)

Making changes on an individual scale, while meaningful on a personal level, can't address systemic issues without broader policy changes and overall restructuring of global economic systems.

Intellectual Property Considerations

AWAY doesn't encourage members to contact government representatives about "IP theft" for multiple reasons:

We acknowledge that copyright law overwhelmingly serves corporate interests rather than individual creators

Creating new "learning rights" or "style rights" would further empower large corporations while harming individual artists and fan creators

Many AWAY members live outside the United States, many of whom have been directly harmed by the US, and thus understand that intellectual property regimes are often tools of imperial control that benefit wealthy nations

Instead, we emphasize respect for artists who are protective of their work and style. Our guidelines explicitly prohibit imitating the style of artists who have voiced their distaste for AI, working on an opt-in model that encourages traditional artists to give and subsequently revoke permissions if they see fit. This approach is about respect, not legal enforcement. We are not a pro-copyright group.

In Conclusion

AWAY aims to cultivate thoughtful, ethical engagement with new technologies, while also holding respect for creative communities outside of itself. As a collective, we recognize that real environmental solutions require addressing concepts such as imperial exploitation, extractive capitalism, and corporate power—not just focusing on individual consumer choices, which do little to change the current state of the world we live in.

When discussing environmental impacts, it's important to keep perspective on a relative scale, and to avoid ignoring major issues in favor of smaller ones. We promote balanced discussions based in concrete fact, with the belief that they can lead to meaningful solutions, rather than misplaced outrage that ultimately serves to maintain the status quo.

If this resonates with you, please feel free to join our Discord. :)

Works Cited:

USGS Water Use Data: https://www.usgs.gov/mission-areas/water-resources/science/water-use-united-states

Golf Course Superintendents Association of America water usage report: https://www.gcsaa.org/resources/research/golf-course-environmental-profile

Equinix data center water sustainability report: https://www.equinix.com/resources/infopapers/corporate-sustainability-report

Environmental Working Group's Meat Eater's Guide (beef energy calculations): https://www.ewg.org/meateatersguide/

Hugging Face AI energy consumption study: https://huggingface.co/blog/carbon-footprint

International Energy Agency report on data centers: https://www.iea.org/reports/data-centres-and-data-transmission-networks

Goldman Sachs "Generational Growth" report on AI power demand: https://www.goldmansachs.com/intelligence/pages/gs-research/generational-growth-ai-data-centers-and-the-coming-us-power-surge/report.pdf

Artists Network's guide to eco-friendly art practices: https://www.artistsnetwork.com/art-business/how-to-be-an-eco-friendly-artist/

The Earth Chronicles' analysis of art materials: https://earthchronicles.org/artists-ironically-paint-nature-with-harmful-materials/

Natural Earth Paint's environmental impact report: https://naturalearthpaint.com/pages/environmental-impact

Our World in Data's global emissions by sector: https://ourworldindata.org/emissions-by-sector

"The High Cost of High Tech" report on electronics manufacturing: https://goodelectronics.org/the-high-cost-of-high-tech/

"Unearthing the Dirty Secrets of the Clean Energy Transition" (on rare earth mineral mining): https://www.theguardian.com/environment/2023/apr/18/clean-energy-dirty-mining-indigenous-communities-climate-crisis

Electronic Frontier Foundation's position paper on AI and copyright: https://www.eff.org/wp/ai-and-copyright

Creative Commons research on enabling better sharing: https://creativecommons.org/2023/04/24/ai-and-creativity/


Text

PLEASE JUST LET ME EXPLAIN REDUX

AI {STILL} ISN'T AN AUTOMATIC COLLAGE MACHINE

I'm not judging anyone for thinking so. The reality is difficult to explain and requires a cursory understanding of complex mathematical concepts - but there's still no plagiarism involved. Find the original thread on Twitter here: https://x.com/reachartwork/status/1809333885056217532

A longpost!

This is a reimagining of the legendary "Please Just Let Me Explain Pt 1" - much like Marvel, I can do nothing but regurgitate my own ideas.

You can read that thread, which covers slightly different ground and is much wordier, here: https://x.com/reachartwork/status/1564878372185989120

This longpost will:

Give you an approximately ELI13-level understanding of how it works

Provide mostly appropriate side reading for people who want to learn

Look like a corporate presentation

This longpost won't:

Debate the ethics of image scraping

Valorize NFTs or Cryptocurrency, which are the devil

Suck your dick

WHERE DID THIS ALL COME FROM?

The very short, very pithy version of *modern multimodal AI* (that means AI that can turn text into images - multimodal means basically "it can operate on more than one -type- of information") is that we ran an image captioner in reverse.

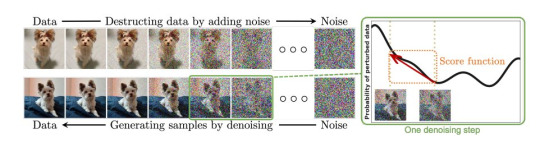

The process of creating a "model" (the term for the AI's ""brain"", the mathematical representation where the information lives, it's not sentient though!) is necessarily destructive - information about original pictures is not preserved through the training process.

The following is a more in-depth explanation of how exactly the training process works. The entire thing operates off of turning all the images put in it into mush! There's nothing left for it to "memorize". Even if you started with the exact same noise pattern you'd get different results.
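For anyone who wants to poke at the "mush" step, here is a toy sketch of it, assuming a diffusion-style setup where training images get blended with random noise and the model learns to guess the noise that was added. This is a simplified illustration, not the code of any real model: the four-number "image" and the `keep` parameter (a stand-in for a noise-schedule value) are made up for the example.

```python
import math
import random


def add_noise(pixels, keep, rng):
    """Blend pixel values with Gaussian noise.

    keep is the share of the original signal's variance that survives:
    1.0 leaves the image untouched, 0.0 leaves nothing but noise.
    (Hypothetical parameter name, standing in for a noise-schedule value.)
    """
    signal_scale = math.sqrt(keep)
    noise_scale = math.sqrt(1.0 - keep)
    return [signal_scale * p + noise_scale * rng.gauss(0.0, 1.0) for p in pixels]


if __name__ == "__main__":
    rng = random.Random(0)           # fixed seed so reruns match
    image = [0.9, -0.4, 0.1, 0.7]    # toy "image": four pixel values

    # Step the image from untouched, to half mush, to pure mush.
    for keep in (1.0, 0.5, 0.0):
        noisy = add_noise(image, keep, rng)
        print(keep, [round(v, 2) for v in noisy])
```

At `keep = 0.0` the output is the same no matter which image went in, which is the sense in which nothing of the original is left at the end of the noising.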

SO IF IT'S NOT MEMORIZING, WHAT IS IT DOING?

Great question! It's constructing something called "latent space", which is an internal representation of every concept you can think of and many you can't, and how they all connect to each other both conceptually and visually.

CAN'T IT ONLY MAKE THINGS IT'S SEEN?

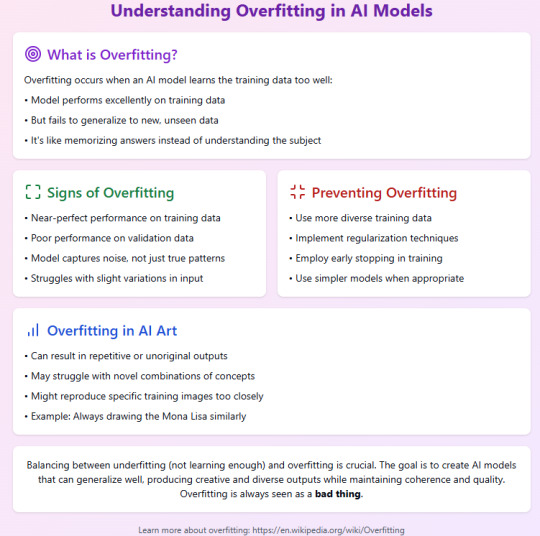

Actually, only being able to make things it's seen is a sign of a really bad AI! The desired end-goal is a model capable of producing "novel information" (novel meaning "new").

Let's talk about monkey butts and cigarettes again.

BUT I SAW IT DUPLICATE THE MONA LISA!

This is called overfitting, and like I said in the last slide, this is a sign of a bad, poorly trained AI, or one with *too little* data. You especially don't want overfitting in a production model!

To quote myself - "basically there are so so so many versions of the mona lisa/starry night/girl with the pearl earring in the dataset that they didn't deduplicate (intentionally or not) that it goes "too far" in that direction when you try to "drive there" in the latent vector and gets stranded."

Anyway, like I said, this is not a technical overview but a primer for people who are concerned about the AI "cutting and pasting bits of other people's artworks". All the information about how it trains is public knowledge, and it definitely Doesn't Do That.

There are probably some minor inaccuracies and oversimplifications in this thread for the purpose of explaining to people with no background in math, coding, or machine learning. But, generally, I've tried to keep it digestible. I'm now going to eat lunch.

Post Script: This is not a discussion about capitalists using AI to steal your job. You won't find me disagreeing that doing so is evil and to be avoided. I think corporate HQs worldwide should spontaneously be filled with dangerous animals.

Cheers!


Note

Is the ChatGPT version for the Chum art you showed behind a paywall, or is it the free version?

do you know if there’s a specific name for the Chum art style of bold colors and thick black lines? I would love to see if I could try and re-create it in my own AI stuff

The character reference sheets are made in the style of (but without using names) Paul Dini and 90s-early 2000s era superhero animated television shows. I specifically emphasize exaggerated shape language and stylized proportions to give characters distinctive silhouettes without paying much heed to humanistic design philosophy (Niles Nolan, and I don’t remember if I posted the recent version, has legs twice the length of his torso, arms to his knees, and is like 9-10 heads tall, for example). I know people may think that because I use AI I’m just pulling a slot machine, but each character design decision is made with a very specific eye, and I will constantly inpaint tiny sections for hours to get the specific shapes or faces or poses that I want. But generally I am trying to ape that transitional period between the Gargoyles/Spider-Man TAS era and early Man of Action (Ben 10 original run, Generator Rex), with an eye towards the exaggerated shape language of like… Transformers Animated, Samurai Jack, stuff like that. Oh! Batman: The Brave and the Bold is a good example too.

Tl;dr “90s and 2000s action cartoons” is what I am aping but it’s a blend of the stuff I watched growing up rather than one particular style.

(The ChatGPT is just a temporary fun diversion for stuff like “okay now make it live action”)


Text

picture i was making for a LoRA but it goes hard so i'll show it to you anyway. lora girl.


Text

the heavy world’s upon your shoulders

will we burn on or just smolder


Text

writing dialogue for Astarion in like a normal text editor is very difficult actually because it feels like all his dialogue should be created with WordArt


Text

A pair of siblings from California inspired by Cholo culture, a moped living in Havana, Cuba, a beast-form jaguar who is part of an Amazon rainforest tribe, and a llama beast transformer that lives in the mountains of Peru
