Text

Big Data in Bioinformatics

Big data in action — A populated server room in a business data center. Adapted from a photo by Manuel Gessinger for Pexels.

When a biologist sets out to do research on something like the function of certain genes, they typically focus first on their main question: which genes affect a phenotype? They might then ask themselves what tools they’ll need to conduct experiments. Geneticists might use DNA sequencers, cell cultures, or assays. These techniques might be perfect for working on a single strain of bacteria or a single gene, but research today involves much larger tasks. With the rise of the information age, researchers have accumulated such an excess of research data that it’s no longer practical to manually analyze or sort through it.

This excess is widely known as big data. Science editor Vivien Marx of Nature magazine mentions that big data was once only relevant in astronomy and particle collider physics. However, the rapid advancement of technology in areas like genome sequencing has brought an explosion in data on the scale of petabytes. For scale, if laptops have 500 gigabytes of storage on average, a single petabyte can store 2000 times that volume. With such an exponential growth, how do biology researchers work with so much data?

How to train your algorithm

Before we can understand large-scale data on the order of petabytes, we must first look at the fundamentals of data analysis on a smaller scale. Algorithms―ways of managing and sorting information with modern computers―are a key factor in modern data analysis. The field that concerns algorithms is known as machine learning.

Traditionally, biologists perform “wet” lab work done by hand. Penders' genomics team studies nutrigenomics, which concerns the relationship between diet and genetics. One of the wet techniques they use is manually analyzing proteins with gels. Plotting genetic data after genome editing is another kind of wet lab work, as proteomics veteran Udeshi explains. But each case has something in common: they stop working after a certain point. Individually observing dots on a gel to identify proteins is only efficient until you have thousands of tests to perform, and plotting points only works as long as Excel doesn’t crash from data overload.

Gel electrophoresis — Common “wet” technique used to manually separate nucleic acids and proteins by mass. Taken from an IBBL article.

Despite how commonplace wet lab techniques are, Penders stresses that “dry” bioinformatics techniques can’t be left out of the picture in genomics. Computer algorithms are indispensable no matter which field a researcher studies, whether it be in Penders' nutrigenomics research or in Udeshi's proteomics work. Algorithms are unique since they facilitate sorting through large data sets and minimize wasted data. It can be easy for researchers to pick out the most relevant data and discard the rest, but algorithms are able to find associations invisible to the unaided eye.

When it comes to algorithms, statistical techniques are an essential part of the equation. Conventional methods of statistical analysis are best suited for data sets with less variables and are parametric, meaning they rely on bell-shaped, normal distributions. On the other hand, some statistical techniques are nonparametric. This special characteristic makes studying larger, multi-variable data sets easier, and lets researchers highlight relationships in noisy, ambiguous data sets.

For instance, Gonzalez-Recio's animal scientist team uses a nonparametric technique to study genomics in cows. This algorithmic technique, known as "gradient boosting" or “random boosting”, was devised by the researchers to better analyze subtle genetic differences called SNPs, or single nucleotide polymorphisms.

Of course, most algorithms require a base to learn from. In Gonzales-Recio's study, the boosting algorithm was fed a genomic test data set, only eventually gaining predictive ability after many iterations of machine learning.

Gradient boosting — Relies on gradual, sequential training with new models. The error circles represent discarded data that doesn't fit the model. By Bradley Boehmke from the University of Cincinnati.

Typically, chemists and biologists perform nuclear magnetic resonance or NMR on proteins in order to understand their structure, but this can be time-consuming and impractical on a larger scale. Another machine learning study by Kumar et al. involves feeding a chemical database into an algorithm to teach it how to classify proteins. The researchers' new algorithm provides an alternative to NMR by allowing low-level prediction without the need for NMR data. NMR still continues to be a mainstay lab technique for the foreseeable future, but this application of machine learning shows promise for the future of biochemistry and proteomics.

It’s easy to see now how computational algorithms and machine learning can reveal new perspectives in bioinformatics and genomics research. But only so much information can be analyzed by individual labs and computers. What happens when biologists need to deal with even larger sets of data?

Head in the clouds

Enter the era of cloud computing. The term cloud computing refers to rentable, customizable, online solutions for storage and processing power. The construct called The Cloud in this scenario is a network of decentralized, global data centers that allocate resources to users as needed. If this is difficult to picture, consider cloud services as virtual, remote-controlled, miniature computers. Although the field of cloud computing has only seen its advent in the past 2 decades, cloud computing is playing an increasingly crucial role in bioinformatics.

Cloud computing is magnitudes more powerful and efficient in comparison to local storage and analysis at individual labs or systems. With their expertise in computational biology, Langmead and Nellore emphasize the versatility of cloud computing in particular. Researchers can easily rent out and unload industry-grade resources at a relatively low cost, and tailored cloud services are available for every specific need. Companies such as Google and Amazon provide cloud solutions adapted for genomics and the life sciences as well.

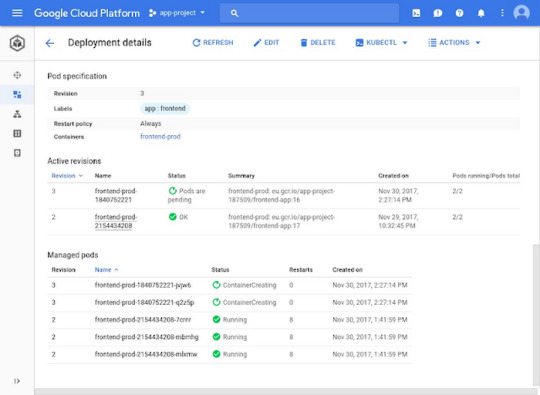

Google Cloud — Cloud services can be used to deploy virtual, customizable computing units. Screenshot of a cloud control panel where users can customize their units. By Maks Osowski.

To illustrate, a genomics researcher might deploy a platform as a service (PaaS) instance to run RNA analysis software on. Not only would they be able to run their own set of tools on their own digital workspace, but they would also be able to reproduce and access their data anywhere. This confers special advantages for teams. Team members working on a genomics research project would be able to access the same virtualized workspace remotely, and manipulate data in real time.

Aside from on-demand cloud services, a variety of large databases exist online for genomic and genetic information. The National Center for Biotechnology Information, or NCBI, hosts a database called dbGaP with data gathered from research on phenotypes and genotypes, while gnomAD thoroughly documents genetic sequences along with statistical information and allelic frequencies. These databases may not represent big data on their own, but they serve as an important fraction in the big data equation. These databases contribute to the extensive, modern library of research data and serve as useful tools for biologists.

BLAST — A comprehensive database for genetic and protein sequences run by the NCBI. Screenshot of front page, featuring links to the search engines.

Resurfacing from a deep dive

Ultimately, many biologists who specialize in genomics, proteomics, and adjacent fields find themselves in a liminal space between the duality of “old” biology and “new” biology. Some may meet the idea of big data and bioinformatics with resistance. Others may welcome the change with open arms. But one thing is for sure: as researchers develop new technologies in bioinformatics and data science, researchers must adapt to working with increasingly larger data sets. The prevalence of big data forces older and newer biologists alike to realize the often-neglected importance of computer science and statistics.

It is undisputable that the new era of big data has brought much uncertainty, but in turn the era has also given us the rise of modern solutions. The advent of machine learning, algorithms, and cloud computing to automate processes that we would previously do manually has shifted the starting point of research from being inference-based to data-driven. Researchers are now able to compute results they would have never been able to a decade ago with their technological ceiling.

Whether or not these new technologies seem foreign to you, it's important to recognize that they aren't meant to be feared. As we make new discoveries in the world of science, we invent new approaches to how we research and test our ideas. Change breeds uncertainty and fear, but adaptation is an essential catalyst for future innovation and discovery. And if those discoveries end up changing us and our world in turn, we'd certainly be wise to welcome them with open arms. ■

0 notes