
Challenges

We definitely faced a lot of challenges as a group. I don’t believe online learning was the sole cause, but I believe it was a large contributor.

Starting off, we all felt that the NGV brief was very vague, and I felt we all had different interpretations of what they wanted. This was great when we were brainstorming, as we had such broad ideas and heaps to choose from when narrowing them down, but it felt like a huge roadblock when executing the video project. I think we all felt intimidated and unprepared: even though everyone was researching and organising their own roles, nothing ever felt like it was “correct”. I feel like the NGV project wasn’t really meant for Digital Media students, but more for designers who create solutions such as architecture or apps, and by then it felt too late for me to request to do something else. We spent so much time researching design solutions that we weren’t left with enough time to execute the project, yet we felt like we had to have something solidified for the NGV proposal. I was proud of the work we had done for the presentation and I enjoyed creating the sounds, yet we all still felt like we had missed the mark with what they wanted, especially after listening to the other presentations.

After the presentation, we pushed forward with the 360 video concept, but it was looking less and less achievable. The Melbourne lockdown meant Harry and Yee were left to their own resources and weren’t able to access the high-quality computers and equipment at RMIT. Their computers were letting them down with slow rendering speeds and kept shutting down, so it just wasn’t feasible to attempt such a large project. It was quite disheartening, as I had already spent so much time figuring out how to encode 360 sound to a 360 video, but I’m glad I had the push to learn it for myself. It’s not a daunting task for me anymore, so I can reach out to 360 artists for collaboration.

To adapt to the situation, we decided to create a standard 2D video, but included isometric animation to give it a 3D feel.

It all fell off the rails again when Harry’s building was without internet for a few weeks. We were only able to get minimal updates, so I wasn’t able to make sure my sounds fit the movement in every scene. But we got there in the end! Overall, the narration was the most out of time, so I rearranged it to be more rhetorical and minimal. The end product is a strong 2 minutes, but I do wish it was a little longer considering how much effort everyone put in.


Sound Design Process

Whilst researching how to create and then encode a 360 soundscape for a 360 video, I collated past field recordings and recordings sourced from Freesound.org that had a Creative Commons 0 (public domain) license, meaning the user had uploaded the sounds into the public domain to be used freely with no copyright or crediting needed. I tried to keep sourced sounds to a minimum, but as I wanted the ambience to be rich and wide, I needed to source some more city sounds, as I didn’t have as many as I thought in my own field recordings. Under normal circumstances I would opt to record all of the sounds I use, to get practice and to avoid any copyright issues, but due to the Melbourne lockdown I was only able to record in my local area, and at the time of sourcing I was quite uncomfortable going out in public as I didn’t want to catch covid myself.

Coming back to the start of my process: when brainstorming scenes for the video, I also brainstormed soundscape ideas. I knew I wanted to create a rich ambient space to help place the audience in our hypothetical world, so I decided to use real-life atmos recordings. I used a spreadsheet to help me deconstruct each scene and work out what sounds I would need, a trick I learnt from the Sound Design course within the Bachelor. I started broad with each scene and slowly narrowed down the sounds I needed so I could have a high-quality atmosphere for the world. After this, I searched through my existing recordings before using keywords from my spreadsheet to find the most relevant ambient recordings on Freesound.

I also knew I needed to articulate bubble and text movements to guide the audience’s attention. When examining the infographic videos that the visual team had found as references, I heard lots of whooshes, clicks and typing sounds. So I had some sound design days where I recorded different whoosh textures. I did this by simply flicking different objects around right in front of the microphone: paper, cardboard, a fry pan, a water bottle, a book and some DVDs. After finding the best-sounding takes from each object, I time-stretched, reversed, and transposed them to higher and lower pitches so I had lots to choose from when I needed to add them to the video.
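As a rough illustration of two of those variations, here is a minimal Python sketch of reversing a clip and transposing it by resampling. It is not what a DAW does internally: the function names are made up for this example, the "whoosh" is a synthetic stand-in rather than a real recording, and this tape-style transposition changes pitch and length together, whereas a DAW can time-stretch and transpose independently.

```python
import math


def reverse(samples):
    """Return the clip played backwards."""
    return samples[::-1]


def transpose(samples, semitones):
    """Tape-style transposition by linear-interpolation resampling.

    Positive semitones raise the pitch and shorten the clip;
    negative semitones lower the pitch and lengthen it.
    """
    ratio = 2 ** (semitones / 12)  # playback-speed ratio
    out_len = int(len(samples) / ratio)
    out = []
    for i in range(out_len):
        pos = i * ratio                      # read position in the source
        lo = int(pos)
        hi = min(lo + 1, len(samples) - 1)   # clamp at the final sample
        frac = pos - lo
        out.append(samples[lo] * (1 - frac) + samples[hi] * frac)
    return out


# Synthetic stand-in for a recorded whoosh: a short decaying tone.
whoosh = [math.sin(2 * math.pi * 5 * n / 1000) * (1 - n / 1000)
          for n in range(1000)]

variations = {
    "reversed": reverse(whoosh),
    "up an octave": transpose(whoosh, 12),
    "down an octave": transpose(whoosh, -12),
}
for name, clip in variations.items():
    print(name, len(clip), "samples")
```

Building a small bank of variations like this from each good take is one way to end up with plenty of options per object.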

When it came to constructing, I layered the ambience heavily, separating it into city sounds, nature sounds and people sounds so I could bring them in and out depending on where the “camera” would be. When the camera moves, I don’t just automate the volume, I also EQ the groups. So when we zoom into the live music scene, the voices rise in volume, but I also open up the lowpass filter to let through more high frequencies. This follows the basic principles of acoustics: low (bass) frequencies have longer wavelengths, so they travel further and pass through walls more easily than high frequencies, which have shorter wavelengths. That explains why you can hear the “doof doof” of the bass when someone in your neighbourhood is having a party, but you can only hear everyone yelling and having a good time when you’re closer to the source!
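To put rough numbers on the acoustics point above, wavelength is the speed of sound divided by frequency. The short Python sketch below assumes a speed of sound of about 343 m/s (dry air at roughly 20 °C), and the two example frequencies are illustrative picks, not measurements from the project.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: dry air at about 20 °C


def wavelength_m(frequency_hz):
    """Wavelength in metres for a given frequency in hertz."""
    return SPEED_OF_SOUND_M_PER_S / frequency_hz


# Illustrative frequencies: a bass thump versus the crisp detail in voices.
for label, freq in [("bass 'doof'", 60.0), ("voice detail", 4000.0)]:
    print(f"{label}: {freq:.0f} Hz is about {wavelength_m(freq):.2f} m long")
```

The bass wave comes out metres long while the high-frequency wave is only centimetres long, which is why a wall stops the detail in the voices far more than it stops the bass.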

Below I’ve included a snippet of the lowpass automation with the city sounds “group” to show how even that alone creates a zoom effect into a building.

youtube
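The lowpass automation can also be sketched in code. This is a minimal Python illustration, assuming a simple one-pole lowpass filter and an exponential cutoff sweep. It is not the filter Ableton uses, and the function name, cutoff values and test tone are all made up for the example.

```python
import math


def lowpass_sweep(samples, sample_rate, start_hz, end_hz):
    """One-pole lowpass whose cutoff glides from start_hz to end_hz.

    Opening the cutoff over time lets more high frequencies through,
    which reads as moving closer to the sound source.
    """
    out = []
    y = 0.0
    last = max(len(samples) - 1, 1)
    for n, x in enumerate(samples):
        t = n / last                                    # 0.0 at start, 1.0 at end
        cutoff = start_hz * (end_hz / start_hz) ** t    # exponential glide
        a = 1.0 - math.exp(-2.0 * math.pi * cutoff / sample_rate)
        y += a * (x - y)                                # one-pole smoothing step
        out.append(y)
    return out


def rms(samples):
    """Root-mean-square level of a clip."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


# Stand-in for the high-frequency detail in a city ambience: a 5 kHz tone.
rate = 44100
tone = [math.sin(2 * math.pi * 5000 * n / rate) for n in range(rate)]
zoomed = lowpass_sweep(tone, rate, start_hz=300.0, end_hz=12000.0)

tenth = len(zoomed) // 10
print("level while 'outside':", round(rms(zoomed[:tenth]), 3))
print("level once 'inside':", round(rms(zoomed[-tenth:]), 3))
```

The tone is heavily muffled while the cutoff is low and comes through clearly once the filter has opened, which is the zoom effect without touching the volume fader.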

For the narration, we wanted an AI-sounding voice, like what you would hear on a government video. So, I recorded myself performing in a soft tone, and then subtly layered different pitches underneath so it sounded a little warped. I also added the same reverb that I used on the sound effects so it felt like it was in the same world.

youtube


Researching

For the major project, I was involved with the brainstorming and researching aspect, and was the sound designer and mixer for the execution of the project.

After being assigned to this major project, we had a few meetings to brainstorm our most ambitious goals for it, sharing each other’s works, strengths and weaknesses.

This is seen throughout our google drive where we documented every meeting.

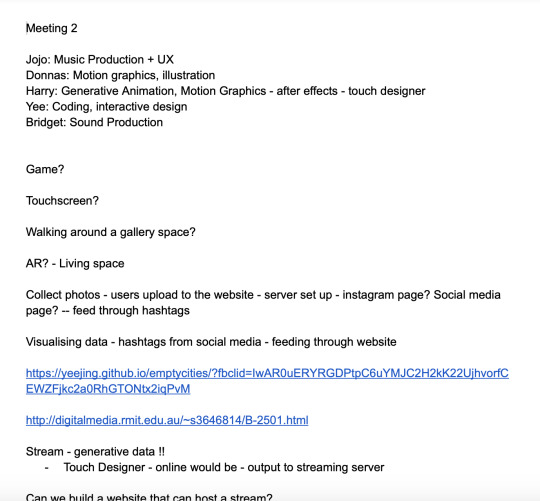

Meeting 1 + 2: We started very broad, with multiple project-based ideas that spanned differing disciplines, and slowly narrowed it down to a narrative video-based work instead of an interactive work, like a game or a touchscreen installation. On the document above, you can see examples of us sharing our strengths, and the options we had thought about in our first meeting. In the screengrab below, which is a continuation of the above document, you can see that we discovered gaming and interactive work weren’t among anybody’s strengths, so we wouldn’t be able to do a great job of executing the project. We came to the conclusion that we should create a prerendered experience, like a click-based experience or 360 VR.

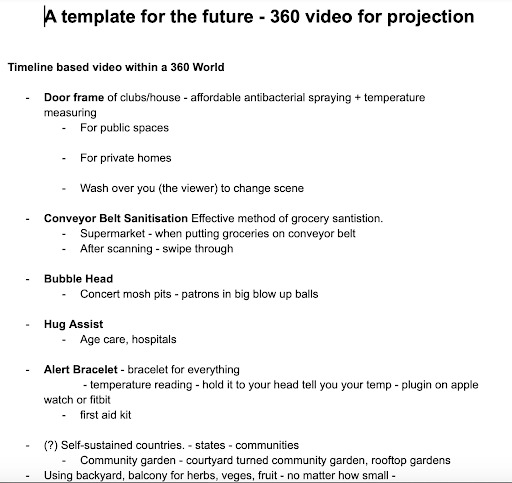

Meeting 3: We took a few days away to brainstorm by ourselves. In our next meeting, we pushed to create an informative video. Above you can see some of the scene ideas we brainstormed together. We wanted to make the video stand out, so we decided to create a 360 VR experience so the audience/user could feel immersed within the future world we are predicting. Below are the ways we would execute this as a group:

Meeting 4: We brainstormed some more ideas for scenes whilst researching some actual design responses that had already been made throughout the pandemic.

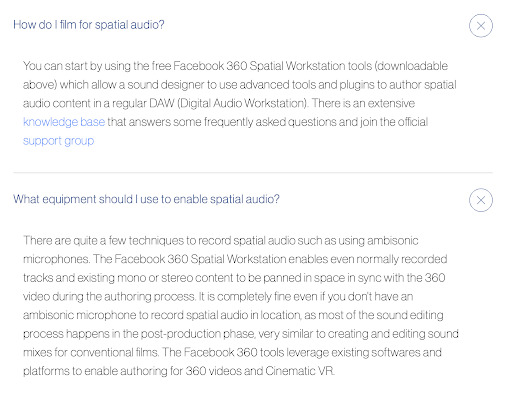

Over the next few weeks, we went into preparation for the first Milestone of the PDF proposal. We solidified our scene ideas through extensive research and found some inspirations and references from similar infographic videos. We had confirmed we were doing a 360 video, so everyone set out to learn how to execute it for their own discipline in the best way possible. As a sound designer, I wanted to enhance the 360 experience, but I also needed to grab the audience’s attention to get them to look at a specific spot. I was unsure how to do this, as I was worried the audio would be all over the place when a user put on a VR headset. I had mixed for 5.1 within RMIT’s ‘the POD’, so I had some experience in structuring for an immersive experience, but never for 360 video. So, I watched a lot of videos on YouTube to figure out the process I would need to follow after recording and collating sounds.

The most helpful videos:

Envelop for Live Software Intro

Envelop for Live - 360 Introduction (headphones required)

Free binaural panning plugin in Ableton Live 9

Ableton Live 10 Multi-Channel/Surround/Ambisonics Audio

Ambisonics in Ableton Live 10 Tutorial Part 4: Bouncing with Audified RecAll

Facebook 360 Spatial Workstation Tutorials

I found lots of helpful videos on creating a 360 sonic atmosphere in Ableton, but I found out I wasn’t able to export it all from within Ableton. The best DAW I found for encoding audio for 360 video is Reaper with the Facebook 360 video encoder. Reaper has a 60-day free trial, so I downloaded it in the hope of figuring out whether I could get it done, and the FB360 video encoder was free. As Ableton is my preferred DAW for sound design, and I had all my go-to plugins on there, I researched how to create a 360 environment within it first, to then export into Reaper. The most seamless plugin I found was Envelop’s E4L, which can output 16 channels for a rich sonic space. I then found out Ableton doesn’t support exporting 16 channels, so I would have to download another piece of software to create a virtual audio device to route audio out of Ableton and into another DAW. The best option for this was Rogue Amoeba’s Loopback.
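For context on the 16 channels: a full-sphere ambisonic signal of order N uses (N + 1)² channels, so 16 channels corresponds to third order. The Python sketch below shows that count and how a single mono sample is placed in a first-order (4-channel) field. It assumes the common AmbiX convention (channel order W, Y, Z, X with SN3D normalisation, azimuth measured anticlockwise from the front); the tools named above may use a different convention internally, and the function names are hypothetical.

```python
import math


def ambisonic_channel_count(order):
    """Number of channels in a full-sphere ambisonic signal of a given order."""
    return (order + 1) ** 2


def encode_first_order(sample, azimuth_deg, elevation_deg):
    """Place one mono sample in a first-order ambisonic field.

    Assumes AmbiX: channels ordered W, Y, Z, X with SN3D normalisation.
    Azimuth 0 is straight ahead and 90 is hard left; elevation 90 is overhead.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                  # omnidirectional component
    y = sample * math.sin(az) * math.cos(el)    # left/right
    z = sample * math.sin(el)                   # up/down
    x = sample * math.cos(az) * math.cos(el)    # front/back
    return (w, y, z, x)


for order in (1, 2, 3):
    print("order", order, "needs", ambisonic_channel_count(order), "channels")

# A whoosh panned hard left at ear height lands almost entirely in W and Y.
print(encode_first_order(1.0, azimuth_deg=90.0, elevation_deg=0.0))
```

Higher orders add more directional channels in the same way, which is why the channel count grows so quickly and why a stereo-oriented export path runs out of room.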

Whilst researching the best ways to encode audio for 360 video, I was also still researching various design responses to the covid-19 disruption and the impact covid-19 has had on our everyday lives. As well as assisting others with their topics of research, my main focus was entertainment and lifestyle. I researched why it was important to keep live music thriving in the new covid normal. I did this by reading some existing articles about covid-19’s impact on the live music scene within Australia, which linked me to the Report on the inquiry into the Australian music industry by the House of Representatives Standing Committee on Communications and the Arts. I found out the industry contributes $15.4 billion to the Australian economy, generating 65,000 full and part-time jobs, which I highlighted for our pitch to the NGV. I also researched the benefit live music has on our mental health, and found that festivals are traditionally places that foster creativity and belonging.


Narration: reverb for emphasis on power and emotion

Sound Design: to highlight significant points

youtube

Infographic inspiration
