Tips & Tricks from Datacenter Experts with emphasis on DCIM and related matters. datacenter.mayahtt.com


Last Outpost: The Rise of the Edge Datacenter

Gangnam Style, a 2012 song by South Korean artist Psy, became the first video to pass one billion views on YouTube and went on to exceed two billion. It is a textbook case of a video going viral, and viral content of this kind is one tangible reason for the rapid growth of edge datacenters. As their name implies, edge datacenters are located away from the main centers of the internet. They are built in so-called tier-two markets, such as Minneapolis-St. Paul or Phoenix, and serve to move the edge of the internet closer to users.

Traditionally, data was stored in hyperscale datacenters in cities like New York City, Los Angeles, or San Francisco. When browsing the internet, users in smaller cities connected to one of these hyperscale datacenters and streamed data over the backbone transport link between their city and the datacenter's city. However, with the advent of video streaming, cloud storage, and online gaming, the latency and bandwidth limitations of this system have pushed providers toward edge datacenters.

Phoenix, Arizona, is a great example of this trend. Prior to 2012, Phoenix’s 1.6 million people were served by a datacenter 350 miles away in Los Angeles [1]. When a video such as Gangnam Style was viewed by Phoenicians, the entire file had to be transferred the 350 miles from LA, and the next time it was viewed, it had to be transferred again. This caused a huge amount of unnecessary traffic between the two nodes, increasing latency, costs, and bandwidth consumption.

The creation of an edge datacenter in Phoenix solved this problem. Instead of being transported from LA every time it was viewed, the video could be cached locally. Users experienced improved performance, while providers saved money that would otherwise have been spent transferring the file. Intelligent systems at edge datacenters determine which files are most popular in a given region and dynamically cache them at the local edge datacenter to minimize traffic and transfer time.
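The popularity-based caching described above can be illustrated with a short Python sketch. It is a simplified illustration under stated assumptions, not how any particular provider implements it: `EdgeCache` is a hypothetical class, capacity is counted in files rather than bytes, and "popularity" is simply an all-time request count (an LFU-style admission policy). The `origin_fetches` counter stands in for transfers over the long-haul link from LA.

```python
from collections import Counter
from typing import Set


class EdgeCache:
    """Hypothetical popularity-based (LFU-style) cache at an edge site."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least one file")
        self.capacity = capacity             # max number of cached files
        self.cached: Set[str] = set()        # files stored locally
        self.requests: Counter = Counter()   # all-time request count per file
        self.origin_fetches = 0              # transfers from the distant origin

    def get(self, file_id: str) -> str:
        """Serve a request and report where it was served from."""
        self.requests[file_id] += 1
        if file_id in self.cached:
            return "edge"                    # cache hit: no long-haul transfer
        self.origin_fetches += 1             # cache miss: fetch from the origin
        if len(self.cached) < self.capacity:
            self.cached.add(file_id)
        else:
            # Evict the least-requested cached file only if the new file
            # has become more popular than it.
            coldest = min(self.cached, key=lambda f: self.requests[f])
            if self.requests[file_id] > self.requests[coldest]:
                self.cached.remove(coldest)
                self.cached.add(file_id)
        return "origin"


cache = EdgeCache(capacity=1)
for f in ["gangnam"] * 5 + ["news"] + ["gangnam"] * 3:
    cache.get(f)
print(cache.origin_fetches)  # 2 origin transfers instead of 9
```

Even with room for a single file, the popular video crosses the long-haul link once rather than on every view, while the one-off request is served from the origin without displacing it.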

Transitioning from a hyperscale to an edge model raises its own difficulties. For example, building multiple smaller datacenters risks eliminating the economies of scale enjoyed by hyperscale datacenter operators. Fixed costs and personnel cannot easily be spread over geographically dispersed sites, meaning that more operators, managers, and technicians must be hired per megawatt. Fortunately, installing a data center infrastructure management (DCIM) solution can help minimize these costs.

A DCIM streamlines maintenance processes and monitoring, while minimizing the human intervention needed to maintain a datacenter. This allows sites to rely on comparatively fewer employees, lowering costs and reducing the risk of downtime. For companies looking to operate competitively priced edge datacenters, using a DCIM solution is a must.

[1] BIG INTERVIEW: Clint Heiden, CCO, EdgeConneX. (2015, July 2). Retrieved January 09, 2018, from http://www.capacitymedia.com/Article/3467698/BIG-INTERVIEW-Clint-Heiden-CCO-EdgeConneX


Big Data and its Impact on Data Centers

The current rate of data generation is the fastest in human history: more data has been generated over the last two years than in the preceding 200,000 years [1]. Although only about 0.5% of this data is ever actually analyzed and used [2], it is still having profound effects across industries. According to a 2014 Gartner survey, 73% of organizations had invested in big data or were planning to do so by 2016. This proliferation of data is revolutionizing the world, but some questions remain: where is all of this data coming from, and what does it mean for the future of the data center industry?

Figure 1: Visualization of Data Flows

Traditionally, much of the world’s data has been generated directly by human interactions, for example:

40,000 Google searches performed per second

1 trillion photos taken per year

300 hours of video uploaded to YouTube every minute [2]

With the rise of the Internet of Things (IoT), however, that trend has been changing. Smart devices have begun generating staggering amounts of data without any human interaction. From modern jet engines that produce up to 1TB of data per flight [4] to autonomous cars that pump out 4TB per day [5], such sources are expected to push the amount of new data created to 1.7 MB per second for every person on Earth by 2020.
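To put these rates in perspective, the short calculation below converts them to common units. It uses only the figures quoted above and assumes decimal units (1 TB = 1,000,000 MB, 1 GB = 1,000 MB).

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

# Figures quoted in the text, using decimal units (assumption)
per_person_mb_per_s = 1.7   # MB of new data per person per second (2020 estimate)
car_tb_per_day = 4.0        # TB per autonomous car per day

per_person_gb_per_day = per_person_mb_per_s * SECONDS_PER_DAY / 1_000
car_mb_per_s = car_tb_per_day * 1_000_000 / SECONDS_PER_DAY
people_per_car = car_mb_per_s / per_person_mb_per_s

print(f"One person: about {per_person_gb_per_day:.0f} GB per day")        # about 147 GB
print(f"One autonomous car: about {car_mb_per_s:.1f} MB/s")               # about 46.3 MB/s
print(f"One car generates as much as about {people_per_car:.0f} people")  # about 27
```

In other words, a single autonomous car would produce data at roughly the rate of a couple dozen people, before any human interaction is involved.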

This increase in data generation is self-reinforcing and unlikely to slow any time soon: as more data is gathered, more uses for it are found, which in turn drives more data gathering.

Accordingly, data centers must prepare for the huge increase in demand they are sure to see over the coming years. As the amount of storage and computing power required increases, and users continue to accept less and less downtime, the data center market will have to evolve. Luckily, new technologies promise to streamline data center management. The Internet of Things, augmented reality, artificial intelligence, and predictive maintenance all promise to reduce downtime, costs, and risk for data center operators. Properly managed data centers will remain engines of growth and profitability for years to come. On the other hand, improper management and a hesitation to adopt the newest trends will surely disadvantage data centers in the long run.

As data flows from existing sources continue to increase and new sources begin producing troves of new data, the data center industry is sure to remain in high demand for years to come.


Industrial AI Applications – How Time Series and Sensor Data Improve Processes

(This article was written by TechEmergence Research and originally appeared on: https://www.techemergence.com/industrial-ai-applications-time-series-sensor-data-improve-processes/)

Over the past decade, the industrial sector has seen major advancements in automation and robotics applications. Automation in both continuous-process and discrete manufacturing, as well as the use of robots for repetitive tasks, is relatively standard in most large manufacturing operations (this is especially true in industries like automotive and electronics).

One useful byproduct of this increased adoption is that industrial spaces have become data-rich environments. Automation has inherently required the installation of sensors and communication networks, both of which can double as collection points for sensor or telemetry data.

According to Remi Duquette from Maya Heat Transfer Technologies (Maya HTT), an engineering products and services provider, huge industrial data volumes don’t necessarily lead to insight:

“Industrial sectors over the last two decades have become very data rich in collecting telemetry data for production purposes… but this data is what I call ‘wisdom-poor’… there is certainly lots of data, but little has been done to put it into productive business use… now with AI and machine learning in general we see huge impacts for leveraging that data to help increase throughput and reduce defects.”

Remi’s work at Maya HTT involves leveraging artificial intelligence to make valuable use of otherwise messy industrial data. From preventing downtime to reducing unplanned maintenance to reducing manufacturing error – he believes that machine learning can be leveraged to detect patterns and refine processes beyond what was possible with previous statistical-based software approaches and analytics systems.

During our interview, he explored a number of use-cases that Maya HTT is currently working on – each of which highlights a different kind of value that AI can bring to an existing industrial process.

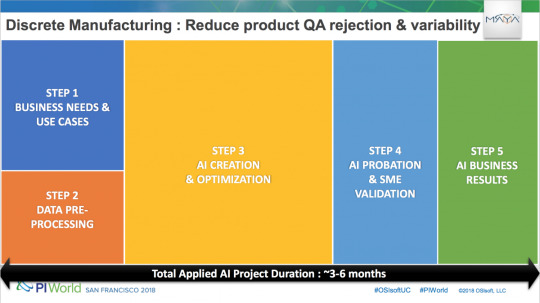

This point is reiterated in an important slide from one of his recent presentations at the OsiSoft PIWorld Conference in San Francisco:

Use Case: Discrete Manufacturing

Increasing throughputs and reducing turnaround times are some of the biggest business challenges faced by the manufacturing sector today – irrespective of industry.

One interesting trend here is that optimizing individual machines was the most common form of early engagement with AI for most manufacturers, yet much more value can be gleaned from simultaneously optimizing the combined efficiency of all the machines in a manufacturing line.

“There has been little that has been done to really help that industry to go beyond automation and statistical-based procedures, or optimizing individual machines.

What we find in our AI engagements is that – at the individual machine level – there is little that can be done, as it’s been fairly optimized… if we look at where AI can help significantly, it’s at looking at the production chain as a whole or combining different type of data to further optimize a single machine or a few machines together.”

In a typical discrete manufacturing environment today, there are likely to be more than ten manufacturing stages, with data collected at each stage from a plethora of devices. Remi explains that in such a scenario, AI can track correlations between many machines and identify patterns in the industrial data that can potentially lead to improved overall efficiency.

He illustrates this with a real-life example from one of Maya HTT’s clients, a discrete manufacturer whose process suffered from high rejection rates. Maya HTT collected around 20,000 real-time telemetry variables, and by analysing this data alongside manufacturing logs and other non-real-time production data, the AI platform was able to identify what led to the high rejection rates.

“A discrete manufacturing process with over 10 manufacturing stages – each collecting tons of data. Yet towards the end of the process there were still high rejection rates… In this case we applied AI to look at various processes throughout this ‘food chain’.

There were 20,000 real-time telemetry variables collected, and by correlating them and combining them with production data, the AI was able to learn which combinations and variance led to the rejection rate. In this particular case the AI was able to remove over 70% of the rejection issues.”

This particular AI solution was able to quickly recognize faulty pieces so that machine operators could remove them from the process, thereby reducing the quality assurance team’s workload, saving material, and cutting the work hours spent detecting or correcting faulty pieces.

In business terms, the manufacturer used fewer raw materials and spent far fewer hours on rework, which translated into savings of roughly half a million dollars per production line per year. “We estimated a savings of over $500,000 per year in this particular case”, said Remi.
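The basic idea of correlating telemetry variables with rejection outcomes can be illustrated with a small sketch. Maya HTT’s actual models are not described here, so this is only a stand-in under stated assumptions: it ranks each variable by its Pearson correlation with a 0/1 "rejected" label (a point-biserial correlation). The variable names and readings are hypothetical, and a real system with 20,000 variables would also need to capture combinations of variables and effects that carry across stages, which a one-variable-at-a-time correlation cannot.

```python
import math
from typing import Dict, List, Tuple


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation; returns 0.0 if either series is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if sd_x == 0 or sd_y == 0:
        return 0.0  # a constant signal carries no information about rejects
    return cov / (sd_x * sd_y)


def rank_variables(telemetry: Dict[str, List[float]],
                   rejected: List[int]) -> List[Tuple[str, float]]:
    """Rank telemetry variables by |correlation| with the reject label."""
    labels = [float(r) for r in rejected]
    scores = [(name, pearson(values, labels)) for name, values in telemetry.items()]
    return sorted(scores, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical readings for eight parts (1 = rejected at final inspection)
rejected = [0, 0, 1, 0, 1, 1, 0, 0]
telemetry = {
    "stage3_oven_temp_c": [200, 201, 215, 199, 217, 214, 202, 200],
    "stage7_line_speed_m_s": [1.0, 1.1, 1.0, 1.1, 1.0, 1.1, 1.0, 1.1],
    "stage5_pressure_bar": [5.0] * 8,
}
for name, r in rank_variables(telemetry, rejected):
    print(f"{name}: r = {r:+.2f}")
```

Here the stage 3 oven temperature stands out as the variable most associated with rejects; in practice such a ranking is a starting point for investigation, since correlation alone does not establish cause.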

Use Case: Fleet Management

Another use case that Remi explored was fleet management and freight operations in the logistics sector, where maintaining high fuel efficiency for vehicles like trucks or ships is essential for long-run cost savings.

Remi explains that AI can enable ‘real-time’ fleet management that would otherwise be difficult to achieve with plain automation software. Patterns that AI identifies in data such as weather or temperature conditions, operational usage, and mileage can potentially be used to pick out anomalies and recommend the best route or speed for operating at the highest efficiency.

Remi agreed that no matter the mode of transportation used (trucks or ships, for example), some of the more common emerging use-cases for AI in logistics are around overall fuel consumption analysis for fleets.

“When you have a large fleet, it’s possible to mitigate a lot of loss by finding refinements in fuel use.” In very large fleets, AI can simulate the fleet’s loss exposure and potentially mitigate it by reducing fuel consumption.
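A very simple form of the fuel-consumption anomaly detection described above is sketched below. The function name, the data, and the z-score threshold of 2.0 are hypothetical assumptions; it simply compares each vehicle’s fuel use per 100 km against the fleet average and flags unusually high consumers.

```python
from statistics import mean, pstdev
from typing import Dict, List


def flag_inefficient_trucks(fuel_litres: Dict[str, float],
                            distance_km: Dict[str, float],
                            z_threshold: float = 2.0) -> List[str]:
    """Flag vehicles whose fuel use per 100 km is unusually high for the fleet."""
    rates = {t: 100.0 * fuel_litres[t] / distance_km[t] for t in fuel_litres}
    values = list(rates.values())
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []  # identical consumption across the fleet: nothing stands out
    # Only high consumption is flagged; unusually low use is not a fuel loss.
    return sorted(t for t, r in rates.items() if (r - mu) / sigma > z_threshold)


# Hypothetical week of data for a six-truck fleet
fuel = {"T1": 320, "T2": 330, "T3": 310, "T4": 450, "T5": 325, "T6": 315}
distance = {t: 1000 for t in fuel}
print(flag_inefficient_trucks(fuel, distance))  # ['T4']
```

With only a handful of vehicles, a single outlier inflates the standard deviation it is measured against, so a robust measure such as the median absolute deviation, or per-route baselines that account for weather and load, would likely serve better in practice.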

Use Case: Engineering Simulation

The last use-case that Remi touched on was 3D engineering simulations for design in the field of computational fluid dynamics.

Traditional simulation software can be fed input criteria, such as fluid flow rates, and generate a 3D simulation that typically takes around 15 minutes to compute, as part of a long, iterative, and often manual design process.

Maya HTT’s team claims that it delivered an AI tool for this situation that cut the time to obtain simulation results to less than 1 second. According to Maya HTT, this speedup of roughly 900x (from 15 minutes, or 900 seconds, to 1 second) enabled design teams to explore far more design variants than previously possible.
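The source does not say how Maya HTT’s tool works. One common way to obtain near-instant results is a surrogate model: run the expensive simulation offline at sampled inputs, then answer new queries from a cheap model fitted to those samples. The sketch below assumes a hypothetical one-dimensional relationship (pressure drop versus flow rate, computed by a made-up `expensive_simulation` function) and uses plain linear interpolation as the surrogate; a production tool would more likely use a trained machine-learning model over many inputs.

```python
import bisect
from typing import Callable, List


def expensive_simulation(flow_rate: float) -> float:
    """Hypothetical stand-in for a slow CFD run: pressure drop vs. flow rate."""
    return 0.5 * flow_rate ** 2 + 2.0 * flow_rate


class Surrogate:
    """Answers queries instantly by interpolating precomputed simulation runs."""

    def __init__(self, simulate: Callable[[float], float], samples: List[float]):
        self.xs = sorted(samples)
        self.ys = [simulate(x) for x in self.xs]  # the costly part, done once offline

    def predict(self, x: float) -> float:
        if not self.xs[0] <= x <= self.xs[-1]:
            raise ValueError("query lies outside the sampled design range")
        i = bisect.bisect_left(self.xs, x)
        if self.xs[i] == x:
            return self.ys[i]  # exact sample point
        x0, x1 = self.xs[i - 1], self.xs[i]
        y0, y1 = self.ys[i - 1], self.ys[i]
        return y0 + (x - x0) / (x1 - x0) * (y1 - y0)  # linear interpolation


surrogate = Surrogate(expensive_simulation, [float(v) for v in range(11)])
for flow in (2.5, 4.5, 7.25):
    print(flow, surrogate.predict(flow), expensive_simulation(flow))
```

The trade-off is accuracy for speed: the surrogate is only trustworthy inside the range it was sampled on, and its error grows with the spacing between samples.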

Phases of Implementation for Industrial AI

When asked what business leaders in the manufacturing sector who are interested in adopting AI would need to do, Remi outlined a five-step approach used at Maya HTT:

1. A business assessment, to make sure any target AI project will eventually lead to significant business impact.

2. Understanding data source access and any data manipulation needed to train and configure the AI platform. This may also involve restructuring the way data is collected in order to enhance compatibility.

3. The actual creation and optimization of the AI application.

4. Explaining the training and the conclusions drawn by the AI so that they are properly understood. The operational AI agent is then put to work, and final tweaks are made to improve accuracy to the desired levels.

5. Delivery and measurement of tangible business results from the AI solution.

In almost all sectors we cover, data preprocessing is an underappreciated (and often complex) part of an AI application process – and it seems evident that in the industrial sector, the challenges in this step could be significant.

A Look to the Future

Remi believes that for the time being, industrial AI applications are safely in the realm of augmenting workers’ skills as opposed to wholly replacing jobs:

“At least for the short-medium timeframe… we augment people on the shop floor in order to increase throughput and production rates.”

He also stated that the longer-term implications of AI in heavy industry involve more complete automation – situations where machines not only collect and find patterns in the data, but take direct action from those found patterns. For example:

A manufacturing line might detect tell-tale signs of error in a given product on the line (possibly from a visual analysis of the item, or from other information such as temperature and vibration as it moves through the manufacturing process), and remove that product from the line – possibly for human examination, or simply to recycle or throw out the faulty item.

A fleet management system might detect a truck with unusual telemetry data coming from its brakes and automatically prompt the driver to pull into the nearest repair shop on the way to the truck’s destination, supplying the driver with an exact set of questions for the diagnosing mechanics.

He mentions that these further developments towards autonomous AI action will involve much more development in the core technologies involved in industrial AI, and a greater period of time to flesh out the applications and train AI systems on huge sets of data over time.

Many industrial AI applications are still somewhat new and bespoke. This is due partially to the unique nature of manufacturing plants or fleets of vehicles (no two are the same), but it’s also due to the fact that AI hasn’t been applied in the domain of manufacturing or heavy industry for as long as it has been in the domains of marketing or finance.

As the field matures and the processes for using data and delivering specific results become more established, AI systems will be able to expand their function from merely detecting and “flagging” anomalies (and possibly suggesting next steps), to fully taking next steps autonomously when a specific degree of certainty has been achieved.
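The progression from flagging anomalies to acting on them once a given degree of certainty is reached can be expressed as a simple confidence-threshold policy. This is a hypothetical sketch: the `decide` function, the two thresholds, and their default values are illustrative assumptions rather than anything Maya HTT has described.

```python
from enum import Enum


class Action(Enum):
    IGNORE = "ignore"
    FLAG_FOR_HUMAN = "flag for human review"
    ACT_AUTONOMOUSLY = "act autonomously"


def decide(failure_probability: float,
           flag_threshold: float = 0.5,
           act_threshold: float = 0.95) -> Action:
    """Map a model's confidence in a fault to a response (hypothetical policy)."""
    if not 0.0 <= failure_probability <= 1.0:
        raise ValueError("failure_probability must be between 0 and 1")
    if failure_probability >= act_threshold:
        return Action.ACT_AUTONOMOUSLY   # e.g. divert the part off the line
    if failure_probability >= flag_threshold:
        return Action.FLAG_FOR_HUMAN     # the "augment the worker" mode
    return Action.IGNORE


for p in (0.2, 0.7, 0.97):
    print(p, decide(p).value)
```

Raising `act_threshold` toward 1.0 keeps a human in the loop for all but the most certain cases; lowering it over time, as confidence in the models grows, mirrors the shift toward autonomy described above.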

About Maya HTT

Maya HTT is a leading developer of simulation CAE and DCIM software. The company provides training and digital product development, analysis, testing services, as well as IIoT, real-time telemetry system integrations, and applied AI services and solutions.

Maya HTT are a team of specialized engineering professionals offering advanced services and products for the engineering and datacenter world. As a Platinum level VAR and Siemens PLM development partner and OSIsoft OEM and PI System Integration partner, Maya HTT aims to deliver total end-to-end solutions to its clients.

The company is also a provider of specialized engineering services such as Advanced PLM Training, Specialized Engineering Consulting for Thermal, Flow and Structural projects as well as Software Development with extensive experience implementing NX Customization projects.


Predictive vs. Preventive vs. Reactive Maintenance

Everything humans have created, from carts to buildings to trains, has required and continues to require regular maintenance in order to stay operational. For millennia, this maintenance has either been in reaction to equipment failure (reactive) or part of regular, scheduled checks (preventive). With the dawn of the information age and the Internet of Things (IoT), a third type of maintenance has surfaced that promises to revolutionize the whole concept, especially in relation to data center operations: predictive maintenance.

Reactive maintenance is the simplest type of maintenance: components are replaced only once they have failed, making it one of the least effective ways to minimize downtime and overall costs. No routine checks are performed to detect pending failures, so maintenance costs are effectively zero until the day the equipment fails. However, when the equipment eventually does fail, there will be unavoidable downtime until it is replaced, and there may also be damage to other components, depending on the nature of the failure. The initial costs of such a protocol may be negligible, but the final costs are likely to be the highest of all the maintenance approaches.

Preventive maintenance is the most common approach and involves regular, scheduled checks to determine whether the equipment is liable to fail soon. If it is, it is replaced; otherwise nothing is done. This decreases the risk of failure: a United States government study found that 12-18% cost savings could be obtained by employing a preventive rather than a reactive approach [2]. However, employing personnel to periodically check each and every piece of equipment is expensive, and unless a problem is detected, the check is effectively unnecessary. A better strategy would combine the best of both approaches, performing no checks unless there is a reasonable level of certainty that the equipment is approaching a point of failure.

Predictive maintenance strives to achieve this ideal. In predictive maintenance, sensors continuously monitor the equipment, and software analyzes this data to predict when the equipment is likely to fail. When the software detects an anomaly that indicates an imminent failure, maintenance personnel are dispatched to perform the necessary repairs. While the investment required to transition to a predictive approach is substantial, the potential long-term savings can be as much as ten times the initial investment: according to a study by the strategy consulting firm Roland Berger, this is the case for the oil and gas industry.

Prospective implementers should also take into account the potential efficiency increases and downtime decreases. A study by the United States government put the increase in production efficiency at 20-25% and the decrease in downtime at 35-45%, in addition to a 70-75% decrease in breakdowns and a 25-30% reduction in maintenance costs. Taken together, these figures suggest that companies able and willing to make the initial investment would be well served.
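The predictive step can be sketched under a deliberately simple assumption: a single monitored metric (for example, a fan’s vibration level) drifts steadily toward a failure limit. The sketch fits a least-squares line to recent readings, extrapolates to the limit, and dispatches a technician if the projected crossing falls within a planning horizon. The function names, the limit, the 24-hour horizon, and the linear-degradation model are all hypothetical; real predictive maintenance systems typically use richer models across many sensors.

```python
from typing import List, Optional


def hours_until_limit(readings: List[float], interval_h: float,
                      limit: float) -> Optional[float]:
    """Extrapolate a least-squares trend to estimate hours until `limit` is hit.

    Returns None if the metric is flat or improving (no crossing predicted).
    """
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two readings to fit a trend")
    times = [i * interval_h for i in range(n)]
    mean_t, mean_y = sum(times) / n, sum(readings) / n
    slope = (sum((t - mean_t) * (y - mean_y) for t, y in zip(times, readings))
             / sum((t - mean_t) ** 2 for t in times))
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_t
    crossing_time = (limit - intercept) / slope
    return max(0.0, crossing_time - times[-1])  # 0.0 means already at/over the limit


def should_dispatch(readings: List[float], interval_h: float, limit: float,
                    horizon_h: float = 24.0) -> bool:
    """Dispatch a technician if failure is projected within the horizon."""
    remaining = hours_until_limit(readings, interval_h, limit)
    return remaining is not None and remaining <= horizon_h


# Hypothetical hourly vibration readings (mm/s) with an assumed limit of 3.0
vibration = [2.0, 2.1, 2.2, 2.3, 2.4]
print(hours_until_limit(vibration, 1.0, 3.0))  # roughly 6 hours
print(should_dispatch(vibration, 1.0, 3.0))    # True
```

Unlike a fixed inspection schedule, no technician is sent while the trend stays flat; one is sent only when the data indicates a failure is approaching.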

Until recently, implementing predictive maintenance in data centers would have been impossible, even for companies able and willing to spend the money, because measuring and processing the required amount of data was not feasible before the computing revolution. There are countless stories of data centers performing manual temperature walkthroughs to catch anomalies, but such periodic spot checks are too coarse and infrequent to offer any real improvement. With the advent of powerful computers, wireless sensors, and artificial intelligence programs, however, the benefits of predictive maintenance are ready to be reaped by those brave enough to make the initial investment.

Data centers are good candidates to be early adopters of these improvements. As technology companies, they have every reason to be on the cutting edge of technology revolutions. The processing power and storage required to interpret the data should not be difficult for data centers to provide, and most new data center assets arrive on site already equipped with sensors. The only things missing are a real-time monitoring program to read and store the data and an artificial intelligence system to analyze it. With the penalties for downtime being so steep, the benefits to data centers are likely larger than for most other industries.

The information age created the need for data centers. Now, as time goes on, it is providing data centers not only with an immense trove of data to process and store (in the form of billions of devices being monitored by the Internet of Things) but also with a means of keeping costs low and minimizing downtime. Humanity has relied on the twin pillars of reactive and preventive maintenance for millennia; it is time to embrace predictive maintenance as the future.

[1] Field Service Management Blog. (September 15). Retrieved December 12, 2017, from https://www.coresystems.net/blog/the-difference-between-predictive-maintenance-and-preventive-maintenance

[2] Operations & Maintenance Best Practices (Rep.). (2010, August). Retrieved December 12, 2017, from Federal Energy Management Program website: https://energy.gov/sites/prod/files/2013/10/f3/omguide_complete.pdf


GDPR and its Impact on Data Centers

On May 25, 2018, new European data protection rules are set to come into effect in response to growing concerns over user privacy. Called the General Data Protection Regulation (GDPR), these rules govern, among other things, how personal data is stored and processed. They will require datacenters to delete user information upon request and will hold operators to a certain level of physical site security.

Although at first glance these regulations appear to impact only European datacenters, this assumption is incorrect. According to Benjamin Wright, attorney and instructor at the SANS Institute, the regulations impact any datacenter storing or processing the data of Europeans [1]. He says operators will have to ensure that they are either GDPR compliant or do not handle European data. This will require more granular control over data storage and processing than is currently the industry standard. Companies must be very careful to ensure European data is handled only by approved datacenters; otherwise they may be subject to fines of up to 20 million Euros or 4% of annual global revenue, whichever is higher [1].
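The "whichever is higher" rule and the need to keep European data on approved sites can both be expressed in a few lines. This is an illustrative sketch only: the function names, the site names, and the simple `"EU"` region label are hypothetical, and real placement decisions would depend on legal review rather than a single flag.

```python
from typing import Dict, List

FINE_FLOOR_EUR = 20_000_000   # EUR 20 million
FINE_RATE = 0.04              # 4% of annual global revenue


def max_gdpr_fine_eur(annual_global_revenue_eur: float) -> float:
    """Upper bound of the highest GDPR fine tier: whichever amount is higher."""
    return max(float(FINE_FLOOR_EUR), FINE_RATE * annual_global_revenue_eur)


def eligible_sites(record_region: str, sites: Dict[str, bool]) -> List[str]:
    """Return sites allowed to hold a record.

    `sites` maps a (hypothetical) site name to whether it has been approved
    for European personal data; non-European records may go anywhere.
    """
    if record_region != "EU":
        return sorted(sites)
    return sorted(name for name, approved in sites.items() if approved)


print(max_gdpr_fine_eur(100_000_000))    # 20000000.0 (the EUR 20M floor applies)
print(max_gdpr_fine_eur(2_000_000_000))  # 4% of revenue (EUR 80M) is higher
sites = {"frankfurt-1": True, "dublin-2": True, "phoenix-1": False}
print(eligible_sites("EU", sites))       # ['dublin-2', 'frankfurt-1']
```

For any company with annual revenue above EUR 500 million, the 4% figure exceeds the EUR 20 million floor, so the potential exposure scales with the size of the business.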

Datacenters will likely begin treating GDPR compliance as a selling point for firms looking to do business in Europe, as was the case with the Amazon Web Services (AWS) datacenters to which Dropbox moved its operations in 2016 [2]. Non-compliant datacenters will therefore lose out on customers wishing to store or process European data. For those companies that do want to comply, compliance will not be a trivial matter. Among other things, it will require conducting detailed risk assessments and reporting personal data breaches promptly: under the GDPR, a breach must generally be notified to the relevant supervisory authority within 72 hours of becoming aware of it.
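Tracking the 72-hour window is simple timestamp arithmetic, but it is easy to get wrong across time zones. The sketch below, with hypothetical function names, computes the deadline from the moment of awareness using timezone-aware datetimes. Under Article 33 of the GDPR, the 72-hour deadline applies to the controller notifying the supervisory authority; a datacenter acting as a processor must notify its customer (the controller) without undue delay, which leaves the customer less of the window the later it is told.

```python
from datetime import datetime, timedelta, timezone

NOTIFICATION_WINDOW = timedelta(hours=72)


def notification_deadline(aware_at: datetime) -> datetime:
    """Latest time to notify the supervisory authority after becoming aware."""
    if aware_at.tzinfo is None:
        raise ValueError("use timezone-aware timestamps to avoid offset errors")
    return aware_at + NOTIFICATION_WINDOW


def hours_remaining(aware_at: datetime, now: datetime) -> float:
    """Hours left before the 72-hour window closes (negative if overdue)."""
    return (notification_deadline(aware_at) - now).total_seconds() / 3600


aware = datetime(2018, 5, 25, 9, 0, tzinfo=timezone.utc)
print(notification_deadline(aware).isoformat())             # 2018-05-28T09:00:00+00:00
print(hours_remaining(aware, aware + timedelta(hours=10)))  # 62.0
```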

Another aspect of the GDPR for which datacenters must prepare is the rise of personal data erasure requests. These requests, enshrined in law by the GDPR, allow users to have their stored personal data erased, subject to certain exceptions. Datacenters must be ready to process a large volume of requests quickly and efficiently, but current technology may not be ready to handle this use case.
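At its core, handling an erasure request means finding every record tied to one person across every system and removing it, while keeping proof that the request was honored. The sketch below is a simplified illustration with hypothetical store and field names: each store is an in-memory mapping from a data-subject ID to that subject’s records, and the receipt keeps only counts, not the deleted personal data.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, List

# Each hypothetical store maps a data-subject ID to that subject's records.
Store = Dict[str, List[dict]]


@dataclass
class ErasureReceipt:
    subject_id: str
    records_deleted: Dict[str, int]   # counts only: no personal data retained
    completed_at: datetime


def erase_subject(subject_id: str, stores: Dict[str, Store]) -> ErasureReceipt:
    """Delete every record for one subject across all stores and log counts."""
    deleted: Dict[str, int] = {}
    for store_name, store in stores.items():
        records = store.pop(subject_id, [])
        deleted[store_name] = len(records)
    return ErasureReceipt(subject_id, deleted, datetime.now(timezone.utc))


stores = {
    "crm": {"user-17": [{"email": "a@example.com"}], "user-42": []},
    "billing": {"user-17": [{"invoice": 1}, {"invoice": 2}]},
}
receipt = erase_subject("user-17", stores)
print(receipt.records_deleted)  # {'crm': 1, 'billing': 2}
```

The hard parts in a real datacenter lie outside this sketch: backups, replicas, and logs must also be covered, and records that fall under a legal exception must be identified before deletion.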

In fact, Gartner, an IT research firm, predicted that by the end of 2018, more than 50% of organizations affected by the GDPR would not be in full compliance with its requirements [3]. Such organizations will not be able to process or store European data without risking massive fines. Firms that adapt quickly to the new regulations, either by conforming to them or by removing European data, will be at an advantage over those that do not.

Author: Thomas Menzefricke

[1] Korolov, M. (2017, August 1). What Europe's New Data Protection Law Means for Data Center Operators. Retrieved December 27, 2017, from http://www.datacenterknowledge.com/security/what-europe-s-new-data-protection-law-means-data-center-operators

[2] Smolaks, M. (2016, September 23). Dropbox moves into European data centers to comply with regulation. Retrieved December 27, 2017, from http://www.datacenterdynamics.com/content-tracks/colo-cloud/dropbox-moves-into-european-data-centers-to-comply-with-regulation/96990.fullarticle

[3] Forni, A. A., & Van der Meulen, R. (2017, May 3). Gartner Says Organizations Are Unprepared for the 2018 European Data Protection Regulation. Retrieved December 27, 2017, from https://www.gartner.com/newsroom/id/3701117


The Future of Net Neutrality

With the repeal of net neutrality on December 14, 2017, it is tempting to think that the United States Federal Communications Commission (FCC) has put an end to the net neutrality debate once and for all. However, such an interpretation is both premature and misinformed. Despite the FCC's 3-to-2 party-line vote to repeal the net neutrality provisions, supporters of the repeal should not necessarily begin celebrating. There are still a few hurdles to clear before net neutrality can be declared well and truly dead. Until then, users should not expect any significant changes to the internet.

The most immediate hurdle for the FCC to clear will be the upcoming legal challenges. Immediately after the decision, New York Attorney General Eric Schneiderman announced that he would lead a lawsuit to block the repeal [1]. His lawsuit, which other states will join, will argue that the decision process was corrupted by millions of fake public comments left on the FCC website in support of the repeal. However, this argument may run into trouble, as the FCC can reasonably claim to have disregarded blatantly fake or repetitive comments. There has also been some talk of states taking up the FCC’s mantle and regulating the internet themselves, although the FCC would fight this tooth and nail.

A more credible legal argument can be made based on the federal requirement that agencies not make “arbitrary and capricious” decisions. With the now-repealed net neutrality rules having only been passed in 2015, the FCC is vulnerable to a charge of having acted capriciously. However, the burden of proof will rest on net neutrality proponents: federal agencies are allowed to change their minds about previous regulation [2].

Another challenge to the repeal may come through the United States Congress. Congress could use the Congressional Review Act to undo the FCC's decision, although this will be difficult with Republicans controlling both chambers of Congress and the White House. Even though a Morning Consult poll found that a majority of Republican voters support net neutrality [3], it is still seen as a Democratic issue. Nonetheless, several Republican lawmakers have already opposed the FCC’s change [4], and this could represent an avenue to a potential compromise.

Even if the repeal survives legal and governmental challenges, it is unlikely that internet service providers (ISPs) will make any substantial changes in the near future. Making drastic changes would likely be extremely unpopular with consumers and could galvanize opposition to the repeal. Additionally, until the repeal has been fully cemented into law, ISPs are unlikely to risk making substantial structural changes lest net neutrality provisions be reinstated.

In the short term, the FCC has struck a significant blow against net neutrality. In the longer term, it is unclear whether the repeal will hold up in court, be replaced by a different law, or simply not have much of an impact. Consumers, content providers, and ISPs will surely continue to watch the drama unfolding across the country with bated breath.

Author: Thomas Menzefricke

[1] de Looper, C. (2017, December 27). Net neutrality in 2018: The battle for open internet is just beginning. TechRadar. Retrieved from www.techradar.com/news/net-neutrality-in-2018-the-battle-for-open-internet-is-just-beginning

[2] Finley, K. (2017, December 14). The FCC Just Killed Net Neutrality. Now What? Retrieved December 29, 2017, from https://www.wired.com/story/after-fcc-vote-net-neutrality-fight-moves-to-courts-congress/

[3] Graham, E. (2017, November 29). Majority of Voters Support Net Neutrality Rules as FCC Tees Up Repeal Vote. Retrieved December 29, 2017, from https://morningconsult.com/2017/11/29/strong-support-net-neutrality-rules-fcc-considers-repeal/

[4] Congressional Republican opposition to Pai's net neutrality rollback mounts. (2017, December 12). Retrieved December 29, 2017, from https://demandprogress.org/congressional-republican-opposition-to-pais-net-neutrality-rollback-mounts/


What Net Neutrality means for Data Centers

When America’s Federal Communications Commission (FCC) voted 3-2 on December 14, 2017, to remove network neutrality provisions, it made a change that could have far-reaching impacts on the datacenter world. Hailed by some and derided by others, the net neutrality repeal is controversial to say the least. But what will its impact on datacenters be?

Net neutrality is the idea that all traffic on the internet should be treated equally. The now-repealed provision stipulated that an internet service provider (ISP) could not charge customers differently based on the content they consumed. One gigabyte of data, it said, must be treated the same whether it came from Netflix or The New York Times. Proponents of net neutrality’s repeal argued that this, in effect, amounted to a subsidy of high bandwidth consuming sites by lower bandwidth consuming sites. Opponents of the repeal argued that ISPs would take this opportunity to extract more money from consumers, which would stifle growth. They would prefer that the internet be regulated as a utility, similar to electricity.

In the short term, datacenters are unlikely to see many changes due to net neutrality’s repeal. Customers may become more cautious as they wait to see what the ISPs do, but most things will remain the same. Longer term, however, the industry could face substantial upheaval.

As Frederic Paul of Network World points out, the nascent internet of things (IoT) which promises to generate troves of new data could suffer if ISPs decide to force users or providers to pay extra to have data transmitted quickly and reliably[1]. This could stifle innovation, as smaller providers find themselves unable to pay the required fee to get their products off the ground. Additionally, consumers may be less likely to purchase IoT-enabled technology due to its increased price.

If the opponents to net neutrality’s repeal are correct, higher prices are also in store for datacenter customers as ISPs begin charging them extra to transfer higher bandwidth content. This need not necessarily have a negative impact on the datacenters themselves, but it is unlikely datacenters will benefit from their customers paying higher ISP fees.

One potential consequence, at least for datacenters in the United States, is that firms may move their data to international datacenters to minimize their interactions with American ISPs. By hosting data in European datacenters, for example, companies could avoid American ISPs except when their data is accessed from the United States, which would limit the fees those ISPs could levy.

On the whole, it is difficult to predict what the impact of net neutrality’s repeal will be on datacenters. Much will depend on how ISPs choose to wield their newfound powers. In the meantime, datacenter operators are well advised to keep monitoring developments in order to stay apprised of emerging trends.

Author: Thomas Menzefricke

[1] Paul, F. (2017, November 22). Will the end of net neutrality crush the Internet of Things? Retrieved December 27, 2017, from https://www.networkworld.com/article/3238016/internet/will-the-end-of-net-neutrality-crush-the-internet-of-things.html

Added value of 3D View

The history of visual representation in engineering and navigation, from early cartography to sophisticated blueprints and from simple diagrams to elaborate mind maps, attests to the communicative value of integrating quantitative and qualitative information in an accessible, visual format. One question often asked of a DCIM solution is whether 3D representation brings any added value.

Perhaps a more nuanced (and slightly philosophical) way to pose the question is this: is software meant to reduce reality, or to represent its most useful elements in a clear and reliable manner? DCIM operations are, generally speaking, mission critical, and operators who rely on simplistic representations, jumping from one tool to another to collect scattered, decontextualized information, cannot expect to take quick and effective action.

In DCIM, 3D representation at its most basic level provides users with a familiar way of seeing. In addition, 3D renderings allow seamless navigation between various areas of a data center from many vantage points, most of which are inaccessible to conventional video cameras. Furthermore, 3D view settings are flexible by design: they allow custom positioning, ‘bookmarking’ favorite views, and exporting screenshots that include pertinent asset information. More importantly, a model allows a better grasp of relationships. It is one thing to know that a number of alarms have just been triggered; it is quite another to see the event in context, detect patterns, apply filters (such as a heat map), run a visual report, and identify correlations and causal relationships.

It is especially the possibility of augmenting 3D models with visual reports that makes 3D views compelling: the overlap of real-time data, asset status, contextual information, and location gives quick access to a holistic view of the data center. With a model in sight, data center operators can better appreciate changes at the row, room, and site levels and make informed capacity planning decisions, while minimizing ‘blind spots’ caused by the unavailability of key data. It is no coincidence that we use the word ‘perspective’ for both physical and conceptual dimensions (we say ‘to draw in perspective’ and ‘to put an idea into perspective’).

To summarize, 3D view helps DCIM operators monitor, analyze, communicate, and make decisions more efficiently. DCIM software can already let users study their data centers at future points in time, not just as they are now. Looking forward, these forecasting capabilities will become more advanced, and especially insightful when overlaid onto the 3D model. But there are other possibilities on the horizon. Software models may integrate room details with the positions of on-site technicians (using location trackers) and enable instant communication and collaboration between operators; send a drone to scan the RFID tag of a particular asset with a mouse click; or connect with asset placement machines and move physical objects while the operator receives live information on screen.

There’s a lot more to 3D than meets the eye.

Author: Oubai Elkerdi

Augmented Reality: Dog Filters to Data Centers

When Pokémon Go took the world by storm in 2016, reaching ten million downloads in a week [i] and doubling Nintendo’s share price [ii], it was a declaration to the world: augmented reality (AR) is finally here. When, a year later, Apple released what its Senior VP of Worldwide Marketing called the “first smartphone designed for augmented reality” [iii], the AR race was truly on.

AR refers to overlaying virtual graphics onto the real world using a headset, phone, or other digital device. Although your first impression of AR may be that it is a gimmick (think Snapchat’s ubiquitous filters), it has enormous real-world potential, as Oracle demonstrated at its Modern Customer Experience conference. There, a technician diagnosed and repaired a broken slot machine [iv] by following virtual prompts overlaid onto the physical world. The technician was free to use his hands and did not have to waste time leafing through manuals. The evolution of AR has far-reaching implications for data center operations and could herald the beginning of a revolution.

Figure 1: Technician’s View

Simplified maintenance is the most obvious potential data center AR application. Picture the following scenario: your network operations center receives an alert that a server cannot be reached. You dispatch a technician wearing a head-mounted display (picture a more mature version of Google Glass) to look into the issue. She is guided to the correct rack by lines hovering in the air (see Figure 1). When she reaches the rack, the server in question begins flashing red. She troubleshoots the issue by following simple visual cues, responding with a nod or a verbal answer. Soon the problem is found: a cable is unplugged. The correct port flashes red and the cable is highlighted. She repairs the connection and returns to her office. The entire process takes less than five minutes and requires no paper. A record of her actions (for example, a video recorded by the head-mounted display) is saved for future analysis.

Figure 2: Real-Time Asset Information

Maintenance is just one possible use case for AR in datacenters. One can also imagine viewing real-time asset information popups while walking through a datacenter (see Figure 2). Reserved/forecasted assets could be shown in their proper locations before they are even installed in the data center. Or, wires could be highlighted to simplify the tracking of physical connections between racks, rows, or even rooms. The possible applications of AR are limited only by our imaginations.

Author: Thomas Menzefricke

[i] Molina, B. (2016, July 20). ‘Pokémon Go’ fastest mobile game to 10M downloads. USA Today. Retrieved July 21, 2016.

[ii] Yahoo Finance – Business Finance, Stock Market, Quotes, News. (n.d.). Retrieved October 13, 2017, from https://finance.yahoo.com/

[iii] Schiller, P. (2017, September 12). Augmented Reality. Speech presented at Apple Special Event, Cupertino, California. Retrieved October 13, 2017, from https://www.youtube.com/watch?v=VMBvJ4MTXzc

[iv] Wartgow, J., Bowcott, J., Brydon, A., & Freeman, D. (2017, April 26). Next Generation of Customer Service. Speech presented at Modern Customer Experience, Las Vegas, Nevada. Retrieved October 13, 2017, from http://www.zdnet.com/article/augmented-reality-field-service-proof-points-in-the-enterprise/

5 DCIM Security Questions You Should be Asking

When it comes to choosing a DCIM, security is one of the most important factors, including the ability to prevent vulnerabilities and to segregate data robustly. With ransomware and hacking becoming more and more prevalent, choosing a DCIM that employs strong security measures can mitigate some of the risks associated with implementing such a system.

How robust is the DCIM’s access control? There are many aspects to consider here, but we’ll touch on the obvious requirements: restricting access to data (objects), and restricting access to actions.

First, users should be assigned specific roles for managing different aspects of a datacenter and be provisioned with the appropriate DCIM features accordingly. Any professional system should allow for proper segregation of data and job functions, so that access to privileged information is granted only to authorized users. Furthermore, the configuration of such a system should be granular: DCIM administrators should be able not only to grant access to certain sets of assets in the datacenter, but also to configure rules that manage access to entire rooms, or even to single ports on a datacenter asset.

In addition, a robust access control system should control not only who has access to what, but also who has access to features and operations within the DCIM. For environments where security is a top priority, being able to dictate who can manage the data is highly important. Any acceptable DCIM offering should ensure that only authorized users can access and make changes to the datacenter, while preventing unauthorized actions.
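
Here is a minimal Python sketch of a deny-by-default access check that makes the two kinds of restriction concrete. It assumes a hypothetical path-style scope hierarchy (site, room, row, cabinet, asset, port). The Role, Grant and is_allowed names are illustrative only and do not come from any particular DCIM product. Each grant pairs an object scope with the set of actions permitted on it.

```python
from dataclasses import dataclass, field

# Hypothetical scopes are written as paths: site/room/row/cabinet/asset/port,
# e.g. "dc1/roomA/row3/cab12/sw1/port7".

@dataclass(frozen=True)
class Grant:
    scope: str          # object restriction: everything at or below this path
    actions: frozenset  # action restriction: e.g. {"view", "edit"}

@dataclass
class Role:
    name: str
    grants: list = field(default_factory=list)

def covers(scope: str, target: str) -> bool:
    """True if target is the scope itself or lies underneath it."""
    return target == scope or target.startswith(scope + "/")

def is_allowed(roles, action: str, target: str) -> bool:
    """Deny by default; allow only if one grant covers both the object and the action."""
    return any(
        action in grant.actions and covers(grant.scope, target)
        for role in roles
        for grant in role.grants
    )

network_tech = Role("network-tech", [
    Grant("dc1/roomA", frozenset({"view"})),
    Grant("dc1/roomA/row3/cab12/sw1/port7", frozenset({"view", "edit"})),
])
tenant = Role("colo-tenant-42", [Grant("dc1/roomB/cage42", frozenset({"view"}))])

print(is_allowed([network_tech], "edit", "dc1/roomA/row3/cab12/sw1/port7"))  # True
print(is_allowed([network_tech], "edit", "dc1/roomA/row3/cab12/sw1/port8"))  # False
print(is_allowed([tenant], "view", "dc1/roomA/row3"))                        # False
```

The check appends "/" before prefix matching, so a grant on "dc1/roomA" does not leak into a room that happens to be named "dc1/roomAB".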

Does the DCIM store log information on user activity? Some of the biggest threats can come from within an organization. A disgruntled employee might decide to sabotage the production network, and there needs to be a way of tracking this kind of activity. Audit logs also serve legal and business requirements, and they are sometimes needed after a security breach to see who accessed which system. A robust DCIM should preserve records such as user logins and changes for an adequate period. Activity monitoring should be part of any DCIM, especially for roles that provide privileged access to sensitive information.
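
As a rough illustration, the sketch below keeps an append-only record of who did what, to which object, and whether the action was allowed. The AuditLog class and its fields are hypothetical. A production DCIM would write these records to protected, tamper-resistant storage with a defined retention period rather than keep them in memory.

```python
import json
from datetime import datetime, timezone

class AuditLog:
    """Append-only record of user activity (in memory, for illustration only)."""

    def __init__(self) -> None:
        self._entries = []

    def record(self, user: str, action: str, target: str, allowed: bool) -> None:
        # Entries are only ever appended; the class offers no way to edit or delete them.
        self._entries.append({
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "target": target,
            "allowed": allowed,
        })

    def by_user(self, user: str) -> list:
        # Return copies so callers cannot alter the stored entries.
        return [dict(e) for e in self._entries if e["user"] == user]

    def denied(self) -> list:
        return [dict(e) for e in self._entries if not e["allowed"]]

    def export_jsonl(self) -> str:
        """One JSON object per line, e.g. for shipping to a central log server."""
        return "\n".join(json.dumps(e) for e in self._entries)

log = AuditLog()
log.record("jdoe", "login", "dcim-web", True)
log.record("jdoe", "delete", "dc1/roomA/row3/cab12", False)
log.record("asmith", "edit", "dc1/roomB/row1/cab4", True)

for entry in log.denied():
    print(entry["user"], entry["action"], entry["target"])  # jdoe delete dc1/roomA/row3/cab12
```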

How does the DCIM keep data confidential? You might think that the temperature reading of a server is mundane information, but what about the types of devices used, or the users within the system? The more information attackers have, the easier it is for them to target a particular system or network. Data encryption should therefore be an integral part of any DCIM. Data transmitted between nodes and systems should also use industry-standard encryption wherever possible.
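
For data in transit, Python's standard ssl module shows what industry-standard encryption can look like in practice. The sketch below assumes a hypothetical DCIM agent that pushes readings to a collector over TLS. The host name, port and payload format are placeholders. Encryption at rest is not shown, since it usually relies on the database or on a vetted cryptography library.

```python
import socket
import ssl
from typing import Optional

def make_client_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS settings for a hypothetical DCIM data-collection agent."""
    # create_default_context() verifies the server certificate and host name.
    ctx = ssl.create_default_context(cafile=ca_file)
    # Refuse anything older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx

def send_reading(host: str, port: int, payload: bytes, ctx: ssl.SSLContext) -> None:
    """Send one reading over an encrypted, authenticated connection."""
    with socket.create_connection((host, port), timeout=10) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            tls.sendall(payload)

# Usage (placeholder host; requires a reachable collector):
# send_reading("collector.example.internal", 8443,
#              b'{"rack": "R12", "inlet_c": 24.5}', make_client_context())
```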

Does the vendor follow security best practices in its software development? Throughout the software development lifecycle, development should follow recognized industry standards and guidelines. If the DCIM uses a web application or web client, does it follow the Open Web Application Security Project (OWASP) guidelines? OWASP is a vendor-neutral organization whose mission is to improve software security.

The following are some of OWASP‘s best practices to help develop more secure web applications:

Development of the DCIM should be built around the confidentiality, integrity, and availability (the CIA triad) of the information.

The software development should comply with industry regulatory requirements, such as the Sarbanes-Oxley Act and the Health Insurance Portability and Accountability Act (HIPAA), and should follow proper data classification.

The DCIM should include options for recovery from hardware failures or catastrophic events. Recovery can be achieved through data redundancy with failover spanning different sites, while keeping data transmission secure.

Is the vendor obliged, in writing, to notify the client of breaches or known vulnerabilities in its software?

Despite the precautions taken to secure software, there can never be a 100% security guarantee. That is why a good DCIM vendor will advise its clients of any security issues or breaches in its software as soon as they are discovered. Unfortunately, depending on contract negotiations, this is not necessarily a requirement on the part of the vendor. To make sure your organization is covered, it is best to obtain a written commitment that requires the vendor to disclose this information to the client.

IoT and the Future of Data Centers

In the decades since the first appliance, a Coke machine at Carnegie Mellon University, was connected to the internet, the internet has grown up. Today, 53.6% of the world’s population enjoys internet access, almost 30% have smartphones, and the typical American consumes nearly two hours of smartphone media per day. Still, until recently, the possibilities inherent in Carnegie Mellon’s network-connected Coke machine were never properly explored. Enter the Internet of Things (IoT).

A network of devices replete with embedded sensors and chips, the IoT allows for the collection and exchange of data about and between disparate devices. Such devices range from smart thermostats to jet engines, which can produce 1TB of data per flight. The number of IoT devices in use globally is expected to increase from 6.4 billion to between 20.8 and 30 billion by 2020 (see Figure 1), catalyzing revolutions in everything from transportation to manufacturing.

Figure 1: Estimated Number of Connected Devices by 2020 (billions)

One subset of the IoT, the Industrial Internet of Things (IIoT), will have a direct impact on the management of data centers. The IIoT applies the power of the IoT to industry in order to increase production efficiency and minimize downtime. Current spending on the IIoT is conservatively estimated at $20 billion, but is predicted to grow to $514 billion by 2020, with the potential to generate $15 trillion in value by 2030.

Broadly, the impact of the IoT on data centers falls into two categories: efficiency improvements and load increases. To improve efficiency, data centers will start to employ predictive instead of preventative maintenance, which is expected to reduce maintenance costs by 30%. At the same time, data centers will have to cope with vast new flows of data. IoT traffic is forecast to triple to almost 2.2ZB by 2020. This will require both an expansion of existing data center capacity and a move towards distributed edge data centers.

Using IoT for predictive maintenance

The shift towards predictive maintenance will revolutionize how work is carried out in data centers and beyond.

Predictive maintenance uses IoT capable devices to collect data about assets and feed it into machine learning algorithms that are capable of predicting when assets will fail. Instead of being reactive (performing corrective maintenance after failures have occurred) or preventative (performing maintenance at regularly scheduled intervals), assets will be able to signal to technicians when they require maintenance. With the emergence of the IoT, data can now be fed from devices into data center infrastructure management (DCIM) systems, where machine learning algorithms can identify patterns that indicate an asset is at risk of failing. By combining this capacity with the real-time monitoring and change management capabilities of a DCIM, operators can perform maintenance before failures even occur. This minimizes downtime and avoids unnecessary maintenance cycles.
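
The sketch below replaces the machine learning step with something much simpler, a least-squares trend line, purely to show the principle. It fits a line to a sensor's recent readings, extrapolates to a failure threshold, and raises a maintenance flag if the predicted crossing falls within a planning horizon. The metric, threshold and horizon are hypothetical, and real predictive maintenance models are considerably more sophisticated.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Reading:
    hour: float   # hours since monitoring started
    value: float  # hypothetical metric, e.g. fan vibration in mm/s

def hours_until_threshold(readings: List[Reading], threshold: float) -> Optional[float]:
    """Fit value = intercept + slope * hour by least squares and return the hours
    remaining after the last reading until the fitted line reaches threshold.
    Returns None when there is no upward trend to extrapolate."""
    n = len(readings)
    if n < 2:
        return None
    mean_x = sum(r.hour for r in readings) / n
    mean_y = sum(r.value for r in readings) / n
    sxx = sum((r.hour - mean_x) ** 2 for r in readings)
    if sxx == 0:
        return None
    slope = sum((r.hour - mean_x) * (r.value - mean_y) for r in readings) / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    crossing_hour = (threshold - intercept) / slope
    return max(0.0, crossing_hour - readings[-1].hour)

def needs_maintenance(readings: List[Reading], threshold: float, horizon_hours: float) -> bool:
    """Flag the asset if the predicted crossing falls within the planning horizon."""
    eta = hours_until_threshold(readings, threshold)
    return eta is not None and eta <= horizon_hours

fan = [Reading(0, 2.0), Reading(24, 2.5), Reading(48, 3.0), Reading(72, 3.5)]
print(round(hours_until_threshold(fan, threshold=4.5), 1))      # 48.0
print(needs_maintenance(fan, threshold=4.5, horizon_hours=72))  # True
```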

A survey by the Aberdeen Group predicts a 13% YoY decrease in maintenance costs, as well as a 24% increase in return on assets, purely from switching to a predictive maintenance model. The costs of such predictive technologies, for example sensors and computing power, are recouped several times over by the decrease in maintenance costs, downtime, and risk of system failure. In 2013, a single failure at Amazon resulted in 30 minutes of downtime and cost the company an estimated $2 million. Predictive maintenance promises a revolution in how data centers are maintained, in addition to substantial cost savings. Data center operators should be eager to embrace the changes brought about by the arrival of the IoT.
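
The $2 million estimate is consistent with the per-minute loss reported in the Forbes article listed in this post's references, assuming the outage lasted the full 30 minutes:

```latex
30\ \text{min} \times \$66{,}240/\text{min} = \$1{,}987{,}200 \approx \$2\ \text{million}
```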

Managing load increases using IoT

Efficiency improvements will be especially vital in addressing the IoT’s second major impact on the data center industry: load increases. The huge amount of data generated by billions of internet-connected devices will strain data center resources. Cisco estimates that the amount of data generated will increase from 145ZB in 2015 to 600ZB in 2020, although about 90% of it is ephemeral and will never be transmitted. In a 2014 paper, Gartner argues that the WANs linking data centers to customers are sized for the amount of data generated by humans, but not for machine-to-machine (M2M) interactions. Fabrizio Biscotti, research director at Gartner, argues that “IoT deployments will generate large quantities of data that need to be processed and analyzed in real time […] leaving providers facing new security, capacity and analytics challenges.” Data centers will have to grow more efficient even as they expand their offerings. Additionally, Gartner VP and analyst Joe Skorupa argues that, due to the global distribution of IoT data, handling and processing all of it at a single site will be neither technically nor economically viable. All this suggests that moving away from traditional hyperscale data centers and towards more edge deployments will be desirable in the years to come. Operators will have to acquire the tools and expertise required to efficiently manage multi-site, distributed infrastructures.

Figure 2: Data Generated by Connected Devices (in ZB)

With the rise of the Internet of Things, the next 5 to 10 years promise a revolution in the design, maintenance, and use of data centers across the world. As humanity comes to rely on smooth access to data and computing power, especially with the advent of driverless vehicles, downtime will become increasingly unacceptable. Fortunately, sensors and artificial intelligence promise to open up a new frontier in predictive maintenance for data centers prescient enough to make the switch. This, combined with a move towards edge data centers, will allow the industry to cope with the massive, globally distributed influx of data. It has been decades in the making, but the Internet of Things is finally here, and it is here to stay.

Author: Thomas Menzefricke

The "Only" Coke Machine on the Internet. (n.d.). Retrieved October 12, 2017, from https://www.cs.cmu.edu/~coke/

Sanou, B. (2017, July). ICT Facts and Figures 2017. Retrieved October 12, 2017, from http://www.itu.int/en/ITU-D/Statistics/Documents/facts/ICTFactsFigures2017.pdf

Mobile Phone, Smartphone Usage Varies Globally. (2016, November 23). Retrieved October 12, 2017, from https://www.emarketer.com/Article/Mobile-Phone-Smartphone-Usage-Varies-Globally/1014738

Howard, J. (2016, July 29). Americans at more than 10 hours a day on screens. Retrieved October 13, 2017, from http://www.cnn.com/2016/06/30/health/americans-screen-time-nielsen/index.html

Palmer, D. (2015, March 12). The future is here today: How GE is using the Internet of Things, big data and robotics to power its business. Retrieved October 12, 2017, from https://www.computing.co.uk/ctg/feature/2399216/the-future-is-here-today-how-ge-is-using-the-internet-of-things-big-data-and-robotics-to-power-its-business

Floyer, D. (2013, June 27). Defining and Sizing the Industrial Internet. Retrieved October 12, 2017, from http://wikibon.org/wiki/v/Defining_and_Sizing_the_Industrial_Internet

Cisco Global Cloud Index: Forecast and Methodology, 2015-2020 (White Paper). (2016). Retrieved October 12, 2017, from Cisco website: https://www.cisco.com/c/dam/en/us/solutions/collateral/service-provider/global-cloud-index-gci/white-paper-c11-738085.pdf

Six steps to using the IoT to deliver maintenance efficiency. (n.d.). Retrieved October 12, 2017, from https://i.dell.com/sites/doccontent/shared-content/data-sheets/en/Documents/DELL_PdM_Blueprint_Final_April_8_2016.pdf

Clay, K. (2013, August 19). Amazon.com Goes Down, Loses $66,240 Per Minute. Forbes. Retrieved October 12, 2017, from https://www.forbes.com/sites/kellyclay/2013/08/19/amazon-com-goes-down-loses-66240-per-minute/#4ffd2605495c

Biscotti, F., et al. (2014, February 27). The Impact of the Internet of Things on Data Centers. Retrieved October 12, 2017, from https://www.gartner.com/doc/2672920/impact-internet-things-data-centers

Sverdlik, Y. (2014, March 18). Gartner: internet of things will disrupt the data center. Retrieved October 12, 2017, from http://www.datacenterdynamics.com/content-tracks/servers-storage/gartner-internet-of-things-will-disrupt-the-data-center/85635.fullarticle

DCIM: The future of forecasting & capacity planning

DCIM providers have long advertised DCIM as an ideal tool for industry professionals due to its strengths in asset monitoring and planning. However, most DCIM solutions have yet to shake off a remnant of DCIM’s predecessor, the building management system (BMS). That remnant is an exclusive focus on managing existing assets rather than planning for future states. A true DCIM allows the user to manage potential or planned datacenter assets along with existing ones in order to establish capacity metrics that inform future business decisions.

In many ways, a run-of-the-mill DCIM is a simple extension of a BMS. It tailors the managed assets to the IT world and occasionally throws in some limited real-time monitoring capabilities in an attempt to stand out from the crowd.

There are, however, some simple questions asked by business professionals that most DCIM providers cannot answer:

How many more full SKUs can I fit in my datacenter?

Do I have enough power allocated to my data hall to cover my future expansion?

When will I run out of resources to accommodate all these promised projects?

By managing only existing assets, industry professionals are caught flat-footed by these questions. How will I know when I will run out of resources if I am only presented with information on my current inventory? To what degree can I expect to expand if all I know is that I am currently under my capacity limit? To answer these questions, attention must shift from simply managing inventory to managing capacity.

How does one manage capacity in any application? Fundamentally, it boils down to knowing the maximum amount of a resource that a given facility can support. For example, a cinema knows it can fit 200 people in a theatre because it has provided 200 seats.

Think of these “seats” in datacenters as potential locations for cabinets. For example, if 4 cabinets exist in a row but the row has room for 16, a common DCIM solution would simply say “There are 4 cabinets in that row.” A true DCIM solution would say “There are 4 cabinets in that row, with a potential for 16.” Datacenter Clarity LC believes in shifting the focus from managing the 4 existing cabinets to managing the 16 potential cabinet positions, while recording that 4 of those positions have been filled.

There are numerous benefits to this approach. By reporting the percentage of filled cabinet positions, the denominator of each capacity metric is identified. Instead of simply stating “This row is using 100 kW of power,” you can now state “This row is using 100 kW of the 450 kW available for this region.” These metrics can be applied to power requirements, cooling requirements, and even U-space utilization in the cabinets themselves, creating a powerful engine for capacity planning.
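
Here is a minimal sketch of how such denominator-aware metrics might be computed, using the figures from the examples above (4 of 16 cabinet positions, 100 kW of 450 kW). The Row class and its fields are hypothetical and only illustrate the idea. The same pattern would apply to cooling or U-space.

```python
from dataclasses import dataclass

@dataclass
class Row:
    name: str
    positions: int            # potential cabinet positions (the "seats")
    filled: int               # positions occupied by installed cabinets
    power_used_kw: float
    power_available_kw: float

    def position_utilization(self) -> float:
        return self.filled / self.positions

    def power_utilization(self) -> float:
        return self.power_used_kw / self.power_available_kw

    def summary(self) -> str:
        # Always report usage against its capacity, never as a bare number.
        return (f"{self.name}: {self.filled} of {self.positions} cabinet positions "
                f"({self.position_utilization():.0%}), "
                f"{self.power_used_kw:g} kW of {self.power_available_kw:g} kW "
                f"({self.power_utilization():.1%})")

row = Row("Row 3", positions=16, filled=4, power_used_kw=100.0, power_available_kw=450.0)
print(row.summary())
# Row 3: 4 of 16 cabinet positions (25%), 100 kW of 450 kW (22.2%)
```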

In addition to capacity metrics, users can plan future projects by reserving cabinet locations for future use. If a project is scheduled to go into operation in July of next year, an operator can reserve the exact number of cabinet positions required from start date to end date. The operator can also detail the resources those individual positions will require (power requirements, cooling requirements, etc.).

On a small scale, this is useful for general operations, but when this forecasted usage is rolled up, managers can generate powerful reports and dashboards that forecast the usage required from their datacenter for years to come. The questions posed above can now be answered.
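
The roll-up can be sketched in a few lines. The reservations below are hypothetical and expressed in months from today (start inclusive, end exclusive). The code adds them to current usage month by month and reports the first month in which positions or power would exceed capacity, which is one way to answer "When will I run out of resources?".

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Reservation:
    project: str
    positions: int    # cabinet positions reserved
    power_kw: float   # power the project will draw from those positions
    start_month: int  # months from now, inclusive
    end_month: int    # months from now, exclusive

def forecast(reservations: List[Reservation], months: int,
             used_positions: int, used_kw: float) -> List[Tuple[int, float]]:
    """Roll current usage plus active reservations up into (positions, kW) per month."""
    demand = []
    for month in range(months):
        active = [r for r in reservations if r.start_month <= month < r.end_month]
        demand.append((used_positions + sum(r.positions for r in active),
                       used_kw + sum(r.power_kw for r in active)))
    return demand

def first_shortfall(reservations: List[Reservation], months: int, used_positions: int,
                    used_kw: float, max_positions: int, max_kw: float) -> Optional[int]:
    """Return the first month in which demand exceeds capacity, or None."""
    for month, (positions, kw) in enumerate(
            forecast(reservations, months, used_positions, used_kw)):
        if positions > max_positions or kw > max_kw:
            return month
    return None

plans = [
    Reservation("Project A", positions=6, power_kw=150.0, start_month=2, end_month=24),
    Reservation("Project B", positions=8, power_kw=160.0, start_month=7, end_month=36),
]
print(forecast(plans, 12, used_positions=4, used_kw=100.0)[2])               # (10, 250.0)
print(first_shortfall(plans, 12, 4, 100.0, max_positions=16, max_kw=450.0))  # 7
```

In this example, power (410 kW) would still fit in month 7, but cabinet positions (18 of 16) would not. Tracking both denominators is what makes the shortfall visible.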

By taking a big step away from the BMS philosophy, DCIM solutions can finally answer the questions on the minds of business professionals planning for their future. It is clear that forecasting capacity metrics should be the centerpiece of any mature DCIM product.

Author: Oliver Foster

Are We Secure?

The National Crime Agency (NCA) in the UK has sounded the alarm that online criminals are often ahead of the available security services and, by extension, of whatever security measures are implemented on site. (http://www.proactiveinvestors.com.au/pdf/create/news/details/127976)

That prompted Corero’s COO, Dave Larson, to respond: “There is a false sense of security in many organisations that if you are compliant, then you are secure, and I don't think those two things necessarily equate.”

It is therefore important that we understand what the dangers are and how vulnerable we are. We can then decide the steps needed to satisfy our risk tolerance. That brings to mind an old “military humor” joke:

If the order “SECURE THE BUILDING” was given, then…

The MARINE CORPS would assault the building, kill everyone in it and possibly blow it up. The ARMY would put up defensive fortifications, sand bags and barbed wire. The NAVY would turn off the lights and lock the doors. The AIR FORCE would take out a three-year lease with an option to buy the building.

In previous articles, we touched on the security aspects of the data center (“InDCent exposure” and “Risky business” - http://dcim.tumblr.com/), but the topic is important enough to discuss further.

When we debate issues of cyber security, we usually see them as the realm of the IT people. They are the ones dealing with viruses and hacker attacks, are they not?

There are, however, areas that are not under the direct control of IT. Electromechanical equipment and building management systems (BMS), for example, belong to the industrial control systems (ICS) domain and, wouldn’t you know it, security vulnerabilities also exist there.

An interesting report published last year by IBM raises awareness of security attacks targeting ICS (http://www-01.ibm.com/common/ssi/cgi-bin/ssialias?subtype=WH&infotype=SA&htmlfid=SEL03046USEN&attachment=SEL03046USEN.PDF). Countries like Canada and the U.S. are bearing the brunt of these attacks, being targeted most frequently. Worldwide, attacks of this type have almost doubled since 2011, when the existence of Stuxnet (https://en.wikipedia.org/wiki/Stuxnet) became widely known. You may remember that the Stuxnet worm was responsible for sabotaging Iran’s nuclear centrifuges and was the first malware found to include programmable logic controller (PLC) code.

Stuxnet and similar malware represent an extremely sophisticated form of hacking that is thought to have been backed at the highest levels of some governments, and they have, of course, spurred safety concerns. From what is known at the moment, such malware spreads mostly through phishing emails or contaminated USB drives. This highlights the need to teach users to be suspicious of mail attachments and of USB drives surreptitiously left behind by malicious individuals.

In addition, many enterprises used to count on “security by obscurity” to avoid attacks, but that strategy is becoming much less effective. Search engines like SHODAN (https://www.shodan.io/) build easily searchable databases of the types of devices connected to the Internet, thereby shedding an unfortunate light on that sought-after obscurity.

And there is no shortage of scary security reports, such as the Dell Security Annual Threat Report (http://www.netthreat.co.uk/assets/assets/dell-security-annual-threat-report-2016-white-paper-197571.pdf), which gives some very interesting statistics.

It is easy to imagine that, in the future, mandatory auditing of ICS will begin to be requested more frequently, possibly stemming from organizations such as the Industrial Control Systems Cyber Emergency Response Team (ICS-CERT).

The push for more stringent security on industrial infrastructure makes sense, especially in light of some high-profile attacks. Examples include the one on a New York dam (http://time.com/4270728/iran-cyber-attack-dam-fbi/), the one on a European energy company (http://www.theregister.co.uk/2016/07/12/scada_malware/), and the one that targeted a Ukrainian power company (http://www.reuters.com/article/us-ukraine-crisis-malware-idUSKBN0UI23S20160104).

As IT professionals, we just love to connect things together, and the revolution brought about by the “Internet of Things” is definitely appealing to our senses. Nonetheless, we have to be careful. The National Institute of Standards and Technology (NIST) has published a good document giving helpful guidance in that regard: http://nvlpubs.nist.gov/nistpubs/SpecialPublications/NIST.SP.800-183.pdf.

The same IBM report also suggests best practices to apply. Defining and identifying your ICS resources is, of course, paramount. So is establishing security roles, responsibilities, and authorization levels for IT, management, administrative staff, and third-party users.

Strengthening access controls, performing regular audits, and deploying intrusion detection, among other measures, can help secure your ICS resources and, by extension, mitigate or even prevent attacks.

As providers of Datacenter Clarity LC©, we are proud of the built-in security features that support the best practices this insecure new world requires. The compartmentalization of security roles, for example, not only makes it more resistant to external attacks but also makes it ideal for colocation environments. There, third parties need some type of access but must be kept secure nevertheless. Since malicious and non-malicious insiders cause more than 50% of attacks, it makes sense to have a secure framework whenever and wherever possible.

There is, unfortunately, no magic wand against security attacks; security is an ongoing process. An excellent document from the U.S. Department of Homeland Security (https://ics-cert.us-cert.gov/sites/default/files/documents/Seven%20Steps%20to%20Effectively%20Defend%20Industrial%20Control%20Systems_S508C.pdf) suggests a few strategies to counter cyber intrusions, and it quickly becomes clear that a holistic approach is necessary.

Monitoring, access management, and proper configuration and patch management are all areas where a correctly deployed DCIM solution can help. Technology infrastructures such as data centers are increasingly complex, and tools geared toward managing them are sorely needed.

And to stay protected from malicious or unintentional attacks, we can use all the help we can get.

Zombie Servers Running

In a previous post (http://dcim.tumblr.com/post/152857978208/risky-business) we hinted that a secure data center could be the safest place to be in case of a zombie attack. That may have been a bit of wishful thinking on our part. Many datacenters are already plagued with zombie servers exhibiting the energy-sucking behaviour of a vampire.

A 2015 study by the Anthesis Group (http://anthesisgroup.com/wp-content/uploads/2015/06/Case-Study_DataSupports30PercentComatoseEstimate-FINAL_06032015.pdf) claims that up to 30% of servers are essentially “comatose”. The study looks at servers whose CPU usage rarely exceeds 6% on average over the course of a year.

While these servers provide little or no useful work, they still consume a fair amount of power. A report from the Natural Resources Defense Council (https://www.nrdc.org/sites/default/files/Saving-Energy-Server-Rooms-FS.pdf) estimates that a typical server uses on average only 5 to 15 percent of its maximum capability, while still consuming 60 to 90 percent of its peak power.

These findings are, of course, among the drivers behind virtualisation and consolidation. Such strategies are definitely more efficient in many ways, not least in terms of energy.

Why, then, does the Anthesis study above estimate that there are over 3 million zombie servers in the U.S. alone, cumulatively drawing almost 1.5 gigawatts?
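
Taking the study's round figures at face value, a quick back-of-the-envelope check (our own arithmetic, assuming a constant draw around the clock) gives the average draw per zombie server and the energy involved over a year:

```latex
\frac{1.5 \times 10^{9}\,\mathrm{W}}{3 \times 10^{6}\ \text{servers}} = 500\,\mathrm{W}\ \text{per server}

1.5\,\mathrm{GW} \times 8760\,\mathrm{h/yr} \approx 13.1\,\mathrm{TWh/yr}
```

Since the study speaks of “over 3 million” servers and “almost 1.5 gigawatts”, the actual average per server is somewhat below 500 W.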

Probably because, in many cases, the move towards virtualisation has been gradual. Perhaps services were migrated one at a time, and nobody thought of turning the old server off once all of them had been moved. Or perhaps a new software platform was deployed and users were migrated slowly, until nobody was using the old software any more and the server running it was left just waiting there, like an old man hoping for a visit from his busy children who live too far away… so sad!

Alas, unused servers affect not only space and power utilization; they also tie up power outlets and network ports that would otherwise be available.

A fun and exciting option would be to turn the suspected zombie server off and wait for the phone to ring. But who are we kidding? We IT people are not renowned for our wit and sense of humour, so we will lean towards a less mischievous approach. Something like… a plan.

The first step is, undeniably, to identify the idle servers. Brute force could be used here. A list enumerating all the servers, maybe a spreadsheet, probably exists already. From it, we can remove the obviously operational servers and then connect to each remaining one to verify its state. Time-consuming at its finest.
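
The brute-force triage described above boils down to filtering an inventory. Here is a minimal sketch, assuming the existing spreadsheet can be exported as CSV with hypothetical `hostname`, `owner` and `status` columns, where `production` marks servers already confirmed as operational:

```python
import csv
import io

# Hypothetical spreadsheet export; in practice this would be read from a file.
INVENTORY_CSV = """hostname,owner,status
srv-001,finance,production
srv-002,hr,unknown
srv-003,,unknown
srv-004,web,production
"""


def servers_to_verify(csv_text: str) -> list[str]:
    """Return hostnames not already confirmed as operational.

    Each of these still has to be checked by hand, one server at a time.
    """
    rows = csv.DictReader(io.StringIO(csv_text))
    return [row["hostname"] for row in rows if row["status"] != "production"]


print(servers_to_verify(INVENTORY_CSV))  # ['srv-002', 'srv-003']
```

The filtering is trivial; the expensive part is the manual verification of every name left on the list, which is what makes this approach so time-consuming.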

An easier method would be to deploy power monitoring tools and measure the servers’ workload over time. After a while, we would have a pretty clear indication of which ones are not pulling their weight. Applied to all the servers, this would have the added advantage of creating a baseline against which future idle servers could be recognised.
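
A minimal sketch of the monitoring approach, assuming the monitoring tool can export per-server utilization samples (fractions from 0.0 to 1.0) collected over the observation period. The 6% threshold is borrowed from the Anthesis criterion cited above, and the function names are hypothetical:

```python
from statistics import mean

# Threshold borrowed from the "6% CPU" comatose criterion cited earlier.
IDLE_THRESHOLD = 0.06


def find_idle_servers(samples: dict[str, list[float]],
                      threshold: float = IDLE_THRESHOLD) -> list[str]:
    """Servers whose average utilization over the period is below threshold."""
    return sorted(name for name, readings in samples.items()
                  if readings and mean(readings) < threshold)


def baseline(samples: dict[str, list[float]]) -> dict[str, float]:
    """Record each server's average utilization as a reference point."""
    return {name: mean(readings) for name, readings in samples.items() if readings}


def newly_idle(reference: dict[str, float], current: dict[str, list[float]],
               threshold: float = IDLE_THRESHOLD) -> list[str]:
    """Servers that were busy in the baseline but have since gone quiet."""
    return sorted(name for name in find_idle_servers(current, threshold)
                  if reference.get(name, 0.0) >= threshold)


readings = {
    "srv-002": [0.02, 0.03, 0.01, 0.04],
    "srv-004": [0.35, 0.50, 0.42, 0.38],
    "srv-007": [0.05, 0.07, 0.04, 0.06],
}
print(find_idle_servers(readings))  # ['srv-002', 'srv-007']
```

Keeping the baseline around is what turns a one-off audit into ongoing detection: comparing later readings against it flags servers that have only recently stopped pulling their weight.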

This approach, however, is a reactive one. It would be better to make it part of the server decommissioning process.

A more suitable process requires a workflow that involves everyone who takes part in decommissioning a server.

This is where a DCIM platform can become an important instrument for accomplishing these objectives. When fully implemented, it provides a real-time view of the assets: their location, their ownership information, and where they are connected.

Up-to-date documentation is then available at the moment a server, or any other asset, is decommissioned. You will also be informed of all the related components that will be affected by the change.
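
The impact report mentioned above can be pictured as a lookup over connectivity records. This is a minimal sketch with a hypothetical record layout (asset, connection type, far-end endpoint) and a hypothetical service-to-server map; an actual DCIM data model will be richer than this:

```python
from collections import defaultdict

# Hypothetical connectivity records: (asset, connection type, far-end endpoint).
CONNECTIONS = [
    ("srv-002", "power", "PDU-A3:outlet-12"),
    ("srv-002", "power", "PDU-B3:outlet-12"),
    ("srv-002", "network", "SW-07:port-24"),
    ("srv-004", "network", "SW-07:port-25"),
]

# Hypothetical mapping of business services to the server hosting them.
SERVICES = {"legacy-payroll": "srv-002", "intranet": "srv-004"}


def decommission_impact(asset: str) -> dict[str, list[str]]:
    """List the outlets and ports that would be freed, and the services
    that would be affected, if `asset` were decommissioned."""
    impact: dict[str, list[str]] = defaultdict(list)
    for name, kind, endpoint in CONNECTIONS:
        if name == asset:
            impact[kind].append(endpoint)
    impact["services"] = [svc for svc, host in SERVICES.items() if host == asset]
    return dict(impact)


print(decommission_impact("srv-002"))
```

For `srv-002`, this reports two PDU outlets and one switch port that become available again, plus the `legacy-payroll` service whose owners should be consulted before the server is switched off.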

So it makes sense to let go of wooden spikes, garlic necklaces and silver bullets and to invest instead in a DCIM deployment project. That way, your team will be able to identify and track all zombie servers in real time.

DCIM Hyper-Evolution

At the time of writing, 2017 has just begun. As is tradition, we could spend some time reminiscing about what we accomplished last year. Or, if we give in to deeper thoughts on how we got here, we could end up rethinking our life decisions.

It seems the best idea would be to gaze ahead… far ahead.

Rather than looking back, we will look forward. Having marveled at the many innovations that have changed our lives, why not have some fun wondering what the future, no matter how distant, holds for us and our trade?

When looking at DCIM, we should take stock of the current situation and assess what is working and what has not yet reached its full potential. The current crop of DCIM offerings already relieves several challenges by answering questions such as: What do I have and where is it? (Asset Management). How is it configured and where is it connected? (Connectivity Management). Am I running out of stuff, and do I have enough power for it? If I get more stuff, where do I put it? (Capacity Management). Has somebody moved my stuff? How can I explain to somebody where to put it? (Change Management).

Other tasks, such as Power and Environmental Monitoring and Energy Management, are also fairly well covered.

Even so, the current wish list of what DCIM should provide is still quite long and getting longer (e.g. http://searchdatacenter.techtarget.com/tip/Evolving-DCIM-market-shows-automation-convergence-top-ITs-wish-list). And those wishes usually drive what vendors offer. If we examine these demands, we can perhaps extrapolate what future versions of DCIM platforms might bring about, essentially propelling ourselves into the realm of wild speculation.

For example, wouldn't it be great to have automated asset localisation? Currently, some short-term solutions use RFID tags, while others use tags that physically connect to a cabinet management controller. One could dream that one day a tiny, oh-so-precise GPS tag could be embedded in any type of asset and continuously broadcast its position. Perhaps the most popular dream among IT and Data Center managers is asset auto-discovery: in the future, as soon as a server is connected, it would report for duty by transmitting its properties (CPU, RAM, disks and any other pertinent information), along with, of course, its location and operating temperature.
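
To picture what “reporting for duty” might look like, here is a minimal sketch of a hypothetical auto-discovery message. The `DiscoveryReport` fields and the `register` function are illustrative assumptions, not an existing protocol or DCIM API: the server serializes its properties as JSON, and the DCIM side parses the message and adds or updates the asset in its inventory.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class DiscoveryReport:
    """Hypothetical message a newly connected server might send to a DCIM."""
    serial: str
    cpu_cores: int
    ram_gb: int
    disks_gb: list[int]
    cabinet: str
    rack_unit: int
    inlet_temp_c: float


def register(inventory: dict[str, dict], payload: str) -> None:
    """Parse a JSON discovery report and add or update the asset by serial."""
    report = DiscoveryReport(**json.loads(payload))
    inventory[report.serial] = asdict(report)


# The server side: build and serialize the report on connection.
message = json.dumps(asdict(DiscoveryReport(
    serial="SN-12345", cpu_cores=32, ram_gb=256, disks_gb=[960, 960],
    cabinet="CAB-A01", rack_unit=17, inlet_temp_c=22.5)))

# The DCIM side: receive the message and update the inventory.
inventory: dict[str, dict] = {}
register(inventory, message)
print(inventory["SN-12345"]["cabinet"])  # CAB-A01
```

Keying the inventory on the serial number means a server that is moved and reconnected simply updates its existing record instead of creating a duplicate.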

Such functionalities would finally align the data center with the dynamic nature of IT management.

We have been comparing cloud services to power utilities: from the grid we draw energy on demand, and from the cloud we now use computing on demand. Maybe in due course, just as we can feed surplus energy back into the power grid, we will be able to put the compute cycles we don't use back into the cloud and lease them to others. That would certainly complicate things by creating temporary ownership of assets.

Predictive analytics, anyone? (http://searchbusinessanalytics.techtarget.com/definition/predictive-analytics). By foreseeing potential issues, a DCIM platform could even auto-correct problems and outages through dynamic, automated reconfiguration of the network and the assets connected to it, and of course report on it. The data center could inform us of its current level of reliability, essentially telling us how it “feels” today.
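
Real predictive analytics relies on far more sophisticated models, but the core idea of foreseeing an issue can be shown with the simplest possible one: fit a linear trend to recent readings and extrapolate when it will cross an alarm threshold. In this sketch, the hourly inlet-temperature readings and the 27 °C threshold are hypothetical, and the linear trend is a deliberate simplification.

```python
from typing import Optional


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of ys = slope * xs + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need readings taken at two or more distinct times")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def hours_until_threshold(hours: list[float], temps_c: list[float],
                          threshold_c: float) -> Optional[float]:
    """Hours after the last reading until the trend reaches the threshold.

    Returns None when the trend is flat or falling (no crossing expected).
    """
    slope, intercept = fit_line(hours, temps_c)
    if slope <= 0:
        return None
    crossing = (threshold_c - intercept) / slope
    return max(0.0, crossing - hours[-1])


# Hypothetical hourly inlet-temperature readings from one cabinet.
hours = [0, 1, 2, 3, 4]
temps = [24.0, 24.5, 25.0, 25.5, 26.0]
eta = hours_until_threshold(hours, temps, threshold_c=27.0)
print(f"Threshold expected in about {eta:.1f} h")  # about 2.0 h
```

A warning raised two hours ahead is what would give an automated system time to rebalance load or adjust cooling before the alarm actually fires.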

We are speaking of feelings on purpose. DCIM solutions are implementing “expert system” techniques that will one day inevitably evolve into artificial intelligence. How else can we hope to manage the coming complexity? One often-cited request from clients is integration with other data and asset management tools, such as order management systems, financial software, automated chargeback systems and/or trouble-ticket systems, possibly equipped with root cause analysis algorithms. Keeping track of such heterogeneous integrations and extracting useful reports from them will be quite the challenge and may require the help of an A.I. (https://en.wikipedia.org/wiki/Artificial_intelligence). Yes, in the future we may shorten “Data Center” to just “Data”, like the Star Trek character, and we will be able to speak to it (although it is unlikely ever to understand a joke).
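
As a taste of the rule-based reasoning behind expert systems and root cause analysis, here is a minimal sketch of one well-known alarm-correlation heuristic: when several devices raise alarms at once, the probable root causes are the alarming devices that have no alarming device upstream of them. The dependency map and function names are hypothetical, and real root cause analysis engines are considerably more elaborate.

```python
# Hypothetical dependency map: each device lists what it depends on upstream.
DEPENDS_ON = {
    "srv-101": ["SW-07"],
    "srv-102": ["SW-07"],
    "SW-07": ["PDU-A3"],
    "srv-201": ["SW-09"],
    "SW-09": [],
    "PDU-A3": [],
}


def upstream(device: str) -> set[str]:
    """All devices that `device` depends on, directly or indirectly."""
    seen: set[str] = set()
    stack = list(DEPENDS_ON.get(device, []))
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            stack.extend(DEPENDS_ON.get(dep, []))
    return seen


def probable_root_causes(alarms: set[str]) -> set[str]:
    """Alarming devices that have no alarming device upstream of them."""
    return {dev for dev in alarms if not (upstream(dev) & alarms)}


print(probable_root_causes({"srv-101", "srv-102", "SW-07"}))  # {'SW-07'}
```

Here, three alarms collapse into a single trouble ticket for switch `SW-07`, since both servers depend on it.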

There is some agreement that future data centers may be both concentrated and dispersed. For now, dispersion is used mostly for disaster recovery, but it could also be used to take advantage of power rates and availability. This could mean, for example, activating and disengaging resources wherever it is most advantageous (assuming, of course, that the communications infrastructure of the future is high-performance and ubiquitous). A protocol may emerge that lets data centers exchange information with external and dynamic systems. The wearable technology being developed today may become not only a client for reporting and storing data, but also an extension of the data center itself.
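
Activating resources “where it is most advantageous” could, in its simplest form, be a placement rule that sends extra load to the cheapest site that still has room for it. This is a minimal sketch under that assumption. The site names, prices and capacities are hypothetical, and a real scheduler would also weigh latency, data locality and reliability.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Site:
    name: str
    price_per_kwh: float     # current electricity price (hypothetical figures)
    free_capacity_kw: float  # power headroom still available at the site


def cheapest_site(sites: list[Site], load_kw: float) -> Optional[Site]:
    """Pick the lowest-priced site that can still absorb the extra load."""
    candidates = [s for s in sites if s.free_capacity_kw >= load_kw]
    return min(candidates, key=lambda s: s.price_per_kwh, default=None)


SITES = [
    Site("east", price_per_kwh=0.14, free_capacity_kw=120),
    Site("west", price_per_kwh=0.09, free_capacity_kw=40),
    Site("north", price_per_kwh=0.11, free_capacity_kw=300),
]

print(cheapest_site(SITES, load_kw=50).name)  # north: west is cheaper but too full
```

Because prices and headroom change over time, such a rule would have to be re-evaluated continuously, which is where the dynamic exchange protocol imagined above would come in.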

With the Software-Defined Data Center approach (SDDC, http://www.webopedia.com/TERM/S/software_defined_data_center_SDDC.html), the future data center will acquire extreme virtualization capabilities that support the dynamic character we are seeking. EMC is already starting to simulate data center infrastructure (http://www.theregister.co.uk/2016/02/05/emc_can_simulate_a_data_center/).

We can continue to speculate about the makeup of the data center in a far-fetched future: how it will evolve and what it will transform into. Eventually, it may make sense for a planetary integration to take place, in which resource utilization and energy management are optimized across the globe. Whether humans will allow it remains uncertain, but we are in the domain of wild imagination right now.

Whether or not these sci-fi scenarios come to fruition in one form or another, a management platform will undoubtedly have to be in place; it will be difficult to survive that future otherwise. Will it still be called DCIM? Well, we do know that the Dark Side of the Force will have to use it for the Evil Empire to succeed…

DCIM’s Return On Investment

If we are being honest, we have to admit that implementing a DCIM solution is not for the faint of heart. Projects of this kind are rife with challenges that go beyond the purely technical. While many people actually enjoy this kind of disruptive evolution in their own environments, the challenges also tend to become political and logistical.

It is not surprising, then, that many CFOs think thrice before embarking on what they expect to be a very difficult and frustrating journey.

Software manufacturers started to categorize their wares as Data Center Infrastructure Management solutions shortly after the Power Usage Effectiveness (PUE) metric was introduced (circa 2007). At the time, many were little more than glorified PUE dashboards. They have since evolved to cover much more, especially in light of some of PUE's shortcomings (see previous post: http://dcim.tumblr.com/post/144655269758/the-lackluster-power-usage-effectiveness-metric).
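
For reference, PUE is the total energy used by the facility divided by the energy used by the IT equipment alone, so an ideal facility would score 1.0. A minimal sketch, with hypothetical monthly figures:

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical month: 1,500 MWh for the whole facility, 1,000 MWh for IT.
print(round(pue(1_500_000, 1_000_000), 2))  # 1.5
```

A value of 1.5 means that for every kilowatt-hour delivered to IT equipment, another half kilowatt-hour goes to cooling, power distribution losses and other overhead.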

Nevertheless, PUE had the advantage of being simple to understand. Furthermore, the quantities behind it could, in many ways, be measured and improved using tools already available to facilities teams, such as their BMS. As stated above, however, DCIM has evolved and expanded into areas not covered by BMS (see post: http://dcim.tumblr.com/post/147710267208/dcim-or-bms-using-the-proper-tool-for-the-proper).

So, what is a CEO or CFO to do? How can they decode the value of the available DCIM offerings? How can they identify the key drivers that would justify a DCIM implementation, or justify delaying one?

In many ways, CFOs are strange and wild creatures, akin to untameable dragons. One should approach them with extreme caution. Fortunately, an ancient technique exists to persuade them not to immediately devour any and all who dare approach them. It starts by uttering the magic words “RETURN ON INVESTMENT.”

Of course, this will only hold them still for a few moments, typically just long enough to feed them the details of how and why said ROI can be achieved.

Indeed, are there any savings to be had with DCIM? If so, the ROI calculation becomes so much simpler and more attractive.

Let us look at some areas where very tangible savings may lie in wait.

Reducing OpEx is definitely a big opportunity for achieving significant savings. Decreasing power consumption, for example, can have a very quick and substantial impact on the bottom line (another magical phrase). DCIM can definitely assist in identifying the power hogs.
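
To see how quickly power savings reach the bottom line, here is a rough sketch of the yearly cost avoided by removing a constant IT load. Multiplying by PUE assumes that cooling and power distribution overhead shrink in proportion to the IT load, which is only an approximation. The server count, wattage, electricity price and PUE below are hypothetical.

```python
HOURS_PER_YEAR = 8760


def annual_power_savings(it_kw_saved: float, price_per_kwh: float,
                         pue: float = 1.0) -> float:
    """Yearly cost avoided by removing a constant IT load.

    Scaling by PUE approximates the facility overhead (cooling, power
    distribution) that disappears along with the IT load.
    """
    return it_kw_saved * pue * HOURS_PER_YEAR * price_per_kwh


# Hypothetical: 20 idle servers at 200 W each, $0.10/kWh, PUE of 1.8.
saved = annual_power_savings(20 * 0.2, 0.10, pue=1.8)
print(f"${saved:,.0f} per year")  # $6,307 per year
```

Even a modest cull of idle servers adds up, and the same calculation can be repeated for every power hog a DCIM helps identify.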

Rationalizing new and existing investments helps get the most out of the infrastructure. This is part of efficient planning, which in turn supports the agile enterprise.

Monitoring and maintenance: proactively scheduling preventive maintenance and monitoring the vital signs of the infrastructure help maintain the high availability of business services. Outages can tarnish reputations and shake customer confidence. Savings here are a little harder to evaluate, but VERY real nonetheless.

Improved productivity of the IT staff can either reduce their workload or enable them to accomplish more, which can lead to increased revenue. In addition, expertise and knowledge of the infrastructure will gradually accumulate within the DCIM, protecting against the loss of key personnel. It will also facilitate staff training.

The above is only a fraction of DCIM's potential benefits. Basic needs apply to almost every situation, but requirements vary widely across different environments, so the targets and goals of each implementation have to be properly evaluated.
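
Once the savings in each area have been estimated, the dragon-taming numbers themselves are simple. Here is a minimal sketch of undiscounted ROI and payback period, using hypothetical per-category savings and project cost. It ignores the time value of money and is not the method used by the Datacenter Clarity LC calculator.

```python
def simple_roi(annual_savings: float, investment: float, years: int) -> float:
    """Undiscounted return on investment over `years`, as a fraction."""
    return (annual_savings * years - investment) / investment


def payback_years(annual_savings: float, investment: float) -> float:
    """Years needed for cumulative savings to cover the investment."""
    return investment / annual_savings


# Hypothetical yearly savings per category (same currency units throughout).
savings = {"energy": 60_000, "capacity planning": 25_000,
           "avoided downtime": 40_000, "staff productivity": 35_000}
annual = sum(savings.values())   # 160,000 per year
cost = 400_000                   # licences, deployment and training

print(f"Payback: {payback_years(annual, cost):.1f} years")  # 2.5 years
print(f"5-year ROI: {simple_roi(annual, cost, 5):.0%}")     # 100%
```

Breaking the savings down by category also shows the CFO which assumptions drive the result. Downtime savings, for instance, are the hardest to estimate and deserve the most scrutiny.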

Datacenter Clarity LC provides an ROI Calculator tool (http://datacenter.mayahtt.com/dcim-roi-calculator/) that can help dragons assess the epic battle that lies ahead.

Risky Business

The human species has a healthy aversion to risk, a trait we share with the rest of the animal kingdom. Such behavior is actually considered an evolutionary adaptation. Primitive humans living through the harshness of the Stone Age had to be considerably more survival-minded than we are today. Any tools or skills they possessed would have been comparatively more indispensable, and risking the loss of any of them could have been fatal. As such, the risk-takers of the tribe had to constantly weigh the odds when deciding whether to gather food or explore new areas.

Sometimes, however, you can take the person out of the Stone Age, but not the Stone Age out of the person. Today, the chance of being eaten by a predator has of course greatly diminished (unless we mean it figuratively, by a fierce competitor). That said, the world has become infinitely more complex, and assessing the risk of potential losses has become far more complicated.

Nonetheless, assess we must. We still feel the need to work out the safest course of action, even if we are not always very good at it. Some of us, for example, prefer to travel thousands of miles by car instead of hopping on a plane because we are scared of flying, even though flying is demonstrably safer.