Call me Sage or Knux ~ he/him ~ 25 ~ white ~ furry ~ the catboy thing and the dragonkin thing are not ironic thanks 🌿 I block as I please and I love all my mutuals 🌿 ✨ I made my own header and icon ✨ everyone follow my gf @bunnygirlteeth right now immediately 💜 captainbritish and royal-rage and dragongirltongue are pretty damn cool too ✨✨ You may want one of my sideblogs instead of my main: STIMS: chew-doodle 🔹 CURATED AUDIO: jasfeta 🔹 KIN: altern1a 🔹 AESTHETICS: soulstealingginger

Text

Animal Logic. Richard Barnes. 2004-2008.

11K notes

Text

There’s a new (unreviewed draft of a) scientific article out, examining the relationship between Large Language Model (LLM) use and brain functionality, which many reporters are incorrectly claiming shows proof that ChatGPT is damaging people’s brains.

As an educator and writer, I am concerned by the growing popularity of so-called AI writing programs like ChatGPT, Claude, and Google Gemini, which when used injudiciously can take all of the struggle and reward out of writing, and lead to carefully written work becoming undervalued. But as a psychologist and lifelong skeptic, I am forever dismayed by sloppy, sensationalistic reporting on neuroscience, and how eager the public is to believe any claim that sounds scary or comes paired with a grainy image of a brain scan.

So I wanted to take a moment today to unpack exactly what the study authors did, what they actually found, and what the results of their work might mean for anyone concerned about the rise of AI — or the ongoing problem of irresponsible science reporting.

If you don’t have time for 4,000 lovingly crafted words, here’s the tl;dr.

The major caveats with this study are:

This paper has not been peer-reviewed, which is generally seen as an essential part of ensuring research quality in academia.

The researchers chose to get this paper into the public eye as quickly as possible because they are concerned about the use of LLMs, so their biases & professional motivations ought to be taken into account.

Its subject pool is incredibly small (N=54 total).

Subjects had no reason to care about the quality of the essays they wrote, so it’s hardly surprising the ones who were allowed to use AI tools didn’t try.

EEG scans only monitored brain function while writing the essays, not subjects’ overall cognitive abilities, or effort at tasks they actually cared about.

Google users were also found to utilize fewer cognitive resources and engage in less memory retrieval while writing their essays in this study, but nobody seems to hand-wring about search engines being used to augment writing anymore.

Cognitive ability & motivation were not measured in this study.

Changes in cognitive ability & motivation over time were not measured.

This was a laboratory study that cannot tell us how individuals actually use LLMs in their daily lives, what the long-term effects of LLM use are, or whether there are any differences between those who choose to use LLMs frequently and those who do not.

The researchers themselves used an AI model to analyze their data, so staunch anti-AI users don't have support for their views here.

Brain-imaging research is seductive and authoritative-seeming to the public, making it more likely to get picked up (and misrepresented) by reporters.

Educators have multiple reasons to feel professionally and emotionally threatened by widespread LLM use, which influences the studies we design and the conclusions that we draw on the subject.

Students have very little reason to care about writing well right now, given the state of higher ed; if we want that to change, we have to reward slow, painstaking effort.

The stories we tell about our abilities matter. When individuals falsely believe they are “brain damaged” by using a technological tool, they will expect less of themselves and find it harder to adapt.

Lead author Nataliya Kosmyna and her colleagues at the MIT Media Lab set out to study how the use of large language models (LLMs) like ChatGPT affects students' critical engagement with writing tasks, using electroencephalogram (EEG) scans to monitor their brains' electrical activity as they were writing. They also evaluated the quality of participants' papers on several dimensions, and questioned them after the fact about what they remembered of their essays.

Each of the study's 54 research subjects was brought in for four separate writing sessions over a period of four months. It was only during these writing tasks that students' brain activity was monitored.

Prior research has shown that when individuals rely upon an LLM to complete a cognitively demanding task, they devote fewer of their own cognitive resources to that task, and use less critical thinking in their approach to that task. Researchers call this process of handing over the burden of intellectually demanding activities to a large language model "cognitive offloading," and a concern voiced frequently in the literature is that repeated cognitive offloading could diminish a person's actual cognitive abilities over time or create AI dependence.

Now, there is a big difference between deciding not to work very hard on an activity because technology has streamlined it, and actually losing the ability to engage in deeper thought, particularly since the tasks that people tend to offload to LLMs are repetitive, tedious, or unfulfilling ones that they're required to complete for work and school and don't otherwise value for themselves. It would be foolhardy to assume that a person who uses ChatGPT to summarize an assigned reading for a class has lost the ability to read, just as it would be wrong to assume that a person can't add or subtract because they have used a calculator.

However, it’s unquestionable that LLM use has exploded across college campuses in recent years and rendered a great many introductory writing assignments irrelevant, and that educators are feeling the dread that their profession is no longer seen as important. I have written about this dread before — though I trace it back to government disinvestment in higher education and commodification of university degrees that dates back to Reagan, not to ChatGPT.

College educators have been treated like underpaid quiz-graders and degrees have been sold with very low barriers to completion for decades now, I have argued, and the rise of students submitting ChatGPT-written essays to be graded using ChatGPT-generated rubrics is really just a logical consequence of the profit motive that has already ravaged higher education. But I can’t say any of these longstanding economic developments have been positive for the quality of the education that we professors give out (or that it’s helped students remain motivated in their own learning process), so I do think it is fair that so many academics are concerned that widespread LLM use could lead to some kind of mental atrophy over time.

This study, however, is not evidence that any lasting cognitive atrophy has happened. It would take a far more robust, long-term study design tracking subjects’ cognitive engagement against a variety of tasks that they actually care about in order to test that.

Rather, Kosmyna and colleagues brought their 54 study participants into the lab four separate times, and assigned them SAT-style essays to write, in exchange for a $100 stipend. The study participants did not earn any grade, and having a high-quality essay did not earn them any additional compensation. There was, therefore, very little personal incentive to try very hard at the essay-writing task, beyond whatever the participant already found gratifying about it.

I wrote all about the viral study supposedly linking AI use to cognitive decline, and the problem of irresponsible, fear-mongering science reporting. You can read the full piece for free on my Substack.

958 notes

Text

Hey, you!

Do you enjoy clangen? Are you autistic about genetics, evolution, and adaptations?

Do you also maybe want to start one of those clangen blogs or comics, or something like them?

Then I have the game for you! That’s right, baby. This is a Niche: a genetics survival game recruitment post.

I have been DEEPLY autistic about this game for years, so now you have to be too. I have over 200 hours in it.

It's almost like a hybrid of Spore and clangen: you breed and evolve your group of creatures (nichelings), explore different environments and adaptations, endure different kinds of weather, gather or hunt for food, and fend off predators.

It has both more and less control than clangen does, especially if you want to write a story based off of your playthrough.

It has more control over your individual creatures' actions, relationships, genetics, appearance and breeding.

It has less control over your environment, predators and weather, but that depends on how much you mess with the sandbox settings.

There’s also a ‘mutation menu’ so you can make a specific creature’s offspring more likely to mutate certain genetic traits. You can start with all of them unlocked, or progressively unlock them as you go along.

(And there's a huge family tree as well, to keep track of your creatures' relations.)

The sandbox settings give you control over:

-what does or doesn't spawn, from predators and other animals to rocks and grass

-damage and healing multipliers

-the genes and appearance of the creatures you start with. Hand-pick every one, or set as many of them as you like to random!

-the island you start on, from grasslands, to swamps, savannas, mountains and archipelagos, to a little bit of everything, or even the back of a giant whale!

-how many days your creatures' different life stages last: how long they're helpless babies, teens, or adults. You can make your creatures live for just a few days, or hundreds.

-'win conditions' for challenges

-a blind gene mode, where you cannot see individual creatures' genetics

-a tribe size limit, so you can only have so many creatures

-and how much food and nesting material you start with as well!

The creatures are mostly dog-like, but there are cat features you can exclusively/mostly use as well. (Which is called ‘purr snout’, and when a creature purrs, any other creatures directly next to them will heal from any damage they have over the next few days. It’s fucking awesome)

Or, you can just creatively interpret their in-game designs however you like!

They have a lot of varying features. Here are some of my favorite nichelings from my own games.

The game has its own randomly-assigned names, but you can change them at any time.

(And you can also change the color of the gems on their chest as well, which represent how many ‘moves’ each creature has left.)

You control every one of your nichelings, so you can creatively interpret and create their personalities and relationships as much as you like, but random things still happen throughout the world as well.

There’s also a main ‘story mode’ aside from the sandbox, which is pretty fun too. (And also acts as a comprehensive tutorial.)

The game is 18 dollars on Steam, but it's been around for a while, so it probably goes on sale often enough? I have no idea. I could run it without issues on my shitty old laptop, even in the poor thing's final years, so you don't need a really good computer to play it.

If you want to watch someone play it first, I highly recommend 'Seri! Pixel biologist!'. She has done a BUNCH of different playthroughs and challenges, from when the game was in super early access all the way up until its full release, and afterwards.

She also loves warrior cats, and builds a lot of story into her games. Here’s the playlist for her story mode play-through!

Also, if anyone does start a Niche-based comic or something, PLEAAASE tell me, I wanna seee it!!!!! :o)) thank you for your time goodbye

10 notes

Text

The European Court of Human Rights (ECHR) has ruled that the Czech Republic violated the rights of trans people by forcing them to be sterilised. Requiring transgender men and women to undergo sterilisation procedures in order to gain legal recognition of their gender identity meant the central-European country had breached international law, the court decided on Thursday (12 June).

526 notes

Text

Hi, I'm going to try and start this a little earlier to avoid previous issues. We need to raise $700 for rent. We're two disabled trans women that help @rickybabyboy run his account, please help if you can.

ID: Orange and white tabby yawning very big

$285/$890 raised

Edit: had to get cat food, medicine and some groceries

Edit 2: Got dinged for work costs (virtual machine I have to maintain for a couple projects)

Venmo: AGIEF

Paypal: [email protected]

Ko-fi:

1K notes

Text

447 notes

Text

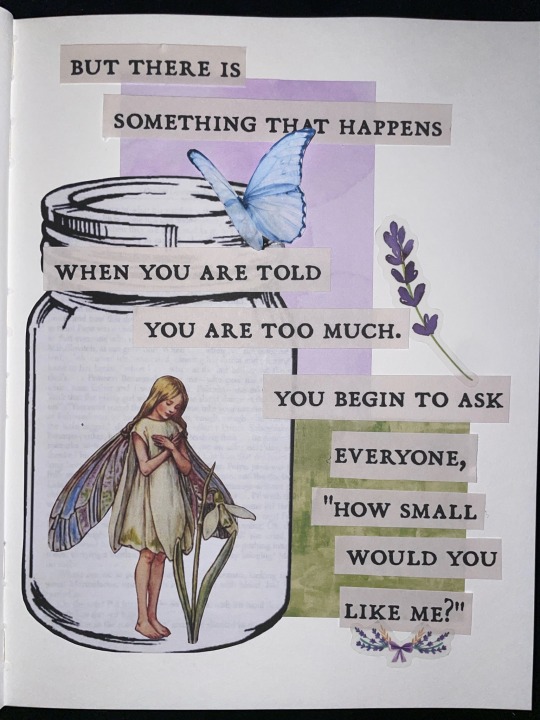

"but there is something that happens when you are told you are too much. you begin to ask everyone, 'how small would you like me?'"

22K notes

Text

every wikipedia entry for a comic book character is like

Classic Era: Professor Two-Apes was created when a bored alien glued two gorillas together with a magic rock. He later turned to evil when a colleague took credit for his research. In his debut appearance, Professor Two-Apes turned the Eiffel Tower into a banana.

Modern Era: Tu-Apes was the result of years of painful animal experimentation. He killed the doctor who created him, stole the blood-stained diploma off his wall, and now wears it around his neck. In the Conflagration crossover event, he was seen being beaten to death with one of his own spines. He was later resurrected by Satanists and currently suffers from a debilitating heroin addiction.

44K notes

Text

every time you make freezer food for dinner instead of buying takeout like you actually want you should earn two hundred dollars cash and a round of applause

55K notes

Text

21K notes

Text

various lesbian banners saved by the lesbian herstory archives

7K notes
