Text

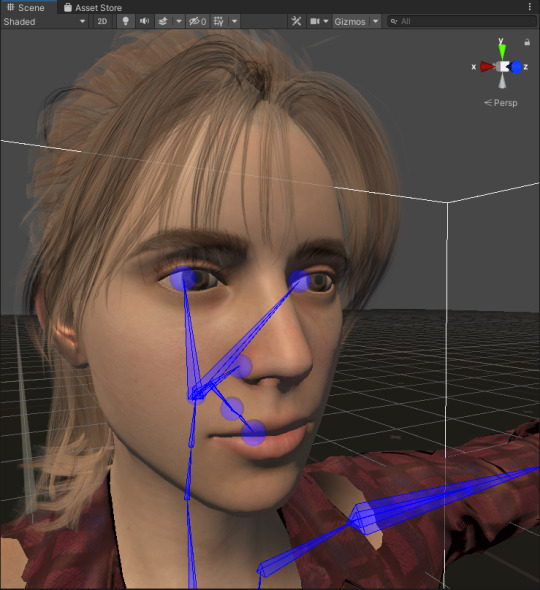

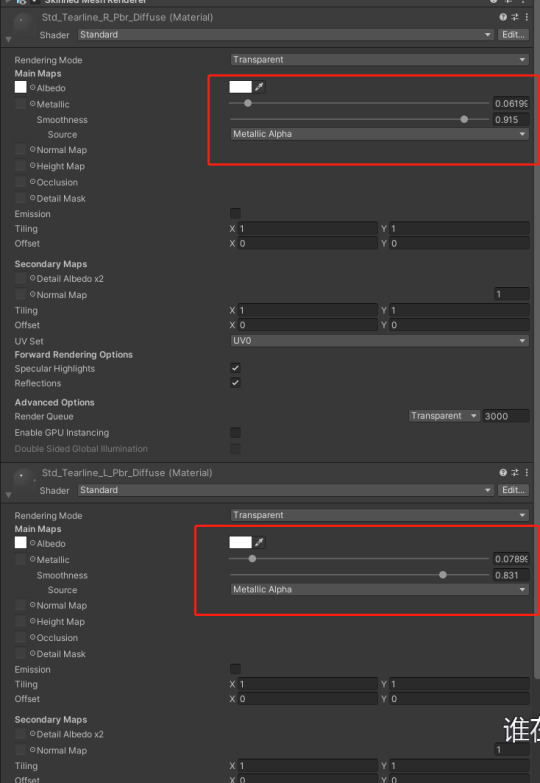

Realistic Texture Render Issue in Unity

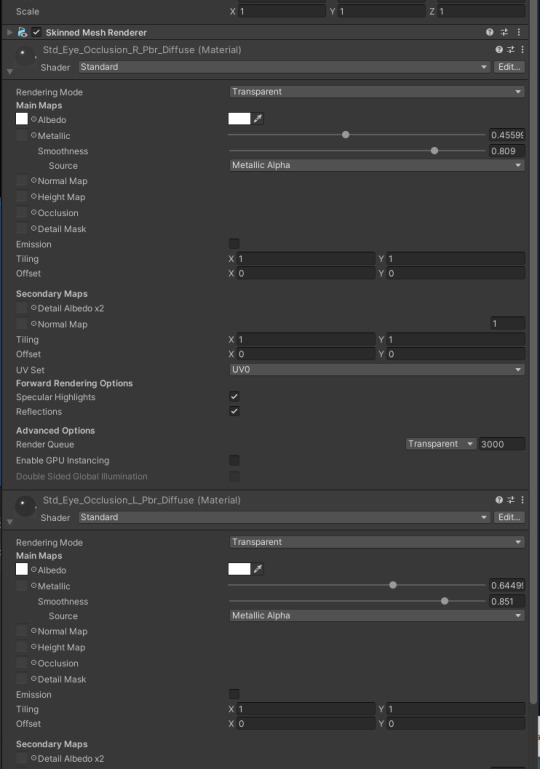

The character looks very realistic in the modelling software, but it looks noticeably worse in Unity. I used the official auto-setup plugin (https://www.reallusion.com/character-creator/unity-auto-setup.html) and switched to PBR textures, but the result is only acceptable, not great.

[Eye Texture Adjustment]

[Face Texture Adjustment]


Text

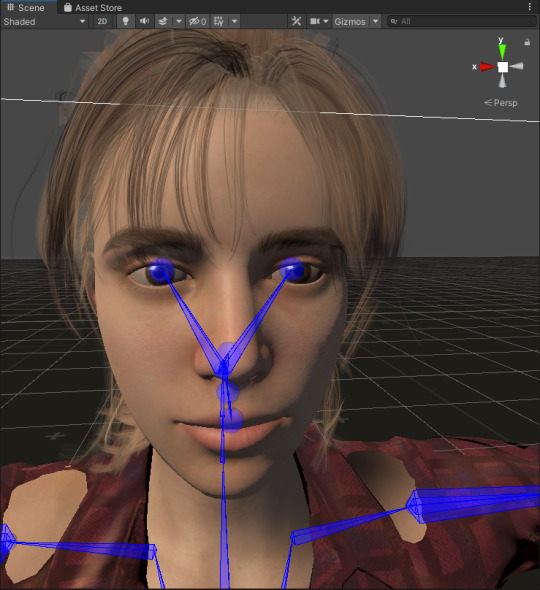

Full Body Tracking with Face in VR

youtube

Technically, most of the problems are solved. The next step is all about Maya work : )


Text

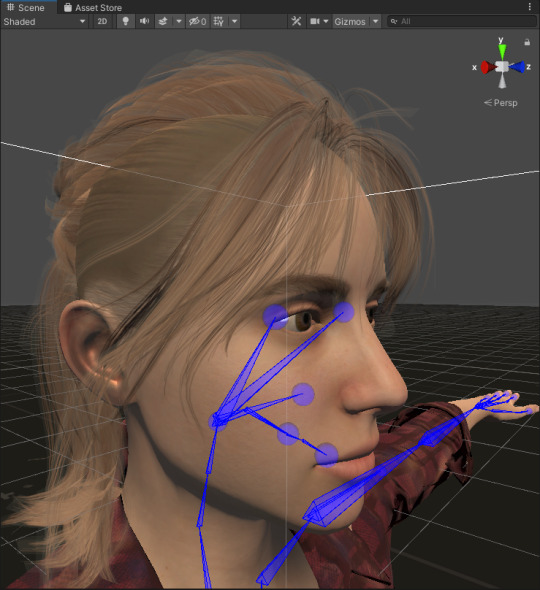

Full Body Tracking with VIVE tracker progress

Finally, by using a GitHub library (https://github.com/Megasteakman/VRMocap), I implemented full body tracking together with ARKit facial tracking, as shown in the video.

[High fidelity motion & High fidelity Appearance]

This combination of full body tracking and facial tracking is the highest-fidelity motion capture I can implement with the hardware I have. It can work on realistic characters such as MetaHuman.

youtube

[Mocap Data and Playback Function]

1. Can the Mocap data be recorded? Yes.

2. What Mocap data format?

Body: FBX

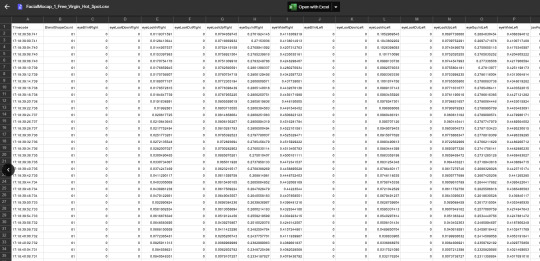

Facial: Excel

[iPad Remote]

Remote camera works on iPad.

[Further Work]

1. Calibration

Better calibration needs to be done so that the Mocap movement looks more natural.

2. High Fidelity Mocap work on Low Fidelity Appearance

I am working on testing the cartoon model.

3. Low Fidelity Mocap

MocapForAll works with MetaHuman, which means both Low Fidelity Mocap and High Fidelity Mocap have been implemented.

4. VR Mode

It only works for Mocap for now; it still crashes in VR mode.


Text

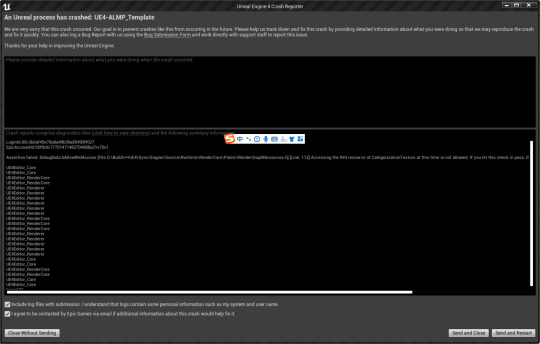

UE4 Crash Problem with PC&VR views and Metahuman together

I am trying to make two players work together: one uses a PC (which can track the face with ARKit, and potentially the full body) and the other uses a Vive VR headset with full-body and facial trackers.

youtube

Separate functional test projects like the one in the video work quite well. But when I merge everything together, for example by changing the characters to MetaHumans as below, the project crashes very easily during play.

Since it looks like a streaming or rendering problem, I tried lowering the texture quality, but that did not help much.


Text

ARkit: Combining User Face-Tracking and World Tracking

This is an Xcode sample project that tracks the user’s face through the iPhone front camera while displaying an AR experience with the rear camera. That could be a way to solve the issue that the person doing AR face tracking can only see their own face. Potentially, the avatar in VR could be seen from the iPhone side too.

The question now is how to combine AR and VR. It is fairly difficult to develop an AR prototype that connects to UE4 or Unity and also works together with VR.

But it could be a topic for further study.

Ref:

https://developer.apple.com/documentation/arkit/content_anchors/combining_user_face-tracking_and_world_tracking


Text

UE4 Real Time Facial Mocap character in VR Environment

youtube

Try something like multiplayer. With AR kit, character can be a real time mocap agent to talk with real time mocap VR avatar. It works well.

The issues:

1. the mocap agent is based on iPhone, so the person who act as agent can’t see the VR avatar. Which I’m thinking to solve by two players and stream a camera view for agent.

2. the facial tracker for UE4 lost some files when I import the plugin, I’ve tried many times with different methods but it still doesn’t work. To solve this, I will look into Unity ARkit facial mocap too.

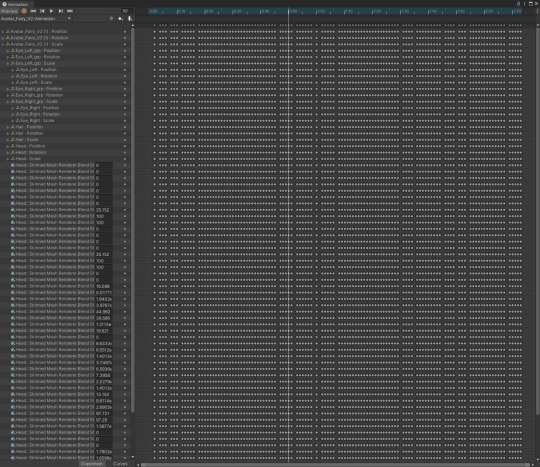

Solved 2ed issue!!!!

Stupid issue for plugin files. Just choose show plugin folders in Content browser view options T . T


Text

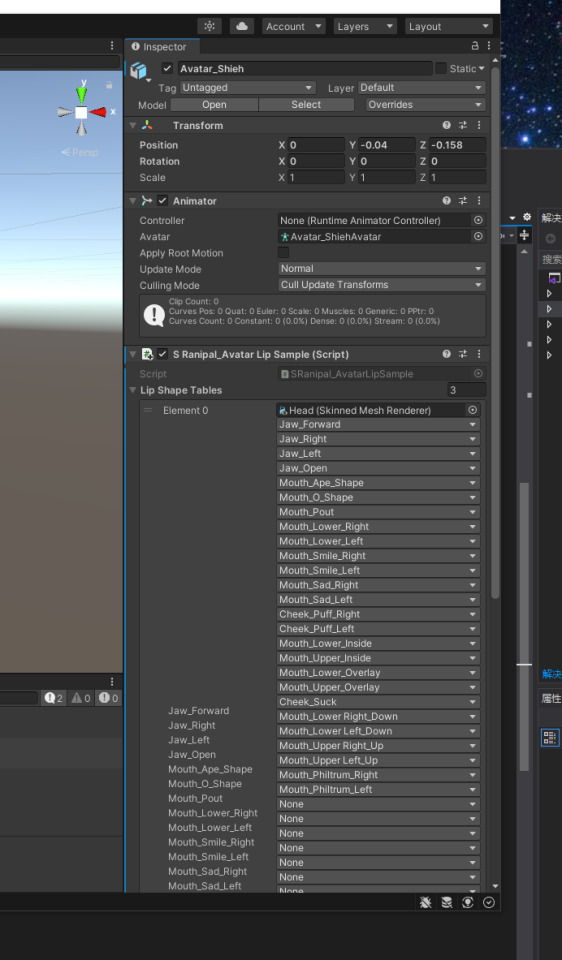

Facial Real Time Mocap Recording Data

The eye and lip mocap works well on the same model now.

Successfully recorded the mocap data in play mode; the limitation may be that this only works in the editor. I tried to export the data to a txt file that would also work in a built application, but Unity always crashed. I’m still looking for a solution.
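As a rough illustration of what a plain-text recording could look like, here is a minimal Python sketch that writes one frame per line and reads it back. It is not the Unity code used in this project and it is not a fix for the crash. The file name `face_take.txt`, the `t` timestamp field, and the `Jaw_Open` curve name are all hypothetical.

```python
import json
import os
import tempfile

def write_frames(path, frames):
    """Write one JSON object per line so frames can be streamed, not held in memory."""
    with open(path, "w", encoding="utf-8") as f:
        for frame in frames:
            f.write(json.dumps(frame) + "\n")

def read_frames(path):
    """Read the frames back in recording order, skipping blank lines."""
    with open(path, "r", encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]

# Hypothetical two-frame take: a timestamp plus one blendshape weight.
frames_out = [{"t": 0.0, "Jaw_Open": 0.1}, {"t": 0.011, "Jaw_Open": 0.4}]
path = os.path.join(tempfile.mkdtemp(), "face_take.txt")
write_frames(path, frames_out)
frames_in = read_frames(path)
```

A line-per-frame layout keeps each frame independent, so a partially written file is still readable up to the last complete line.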

youtube

The recording works really well on the same model, but a recording file will not suit every model; on models with a different child GameObject setup it may not work well.
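One way to make a recording less dependent on a particular model is to match curves by name instead of by position. The Python sketch below shows that idea only; it assumes the recording stores named blendshape weights, which may not match how the Unity recording here is actually stored. All shape names and the `aliases` table are hypothetical.

```python
def apply_frame_by_name(frame, target_shapes, aliases=None):
    """Map recorded weights onto a target model's blendshape list by name.

    frame: {recorded_name: weight}
    target_shapes: blendshape names on the target model, in mesh order
    aliases: optional {recorded_name: target_name} for renamed shapes
    Returns (weights in target order, recorded names with no match).
    """
    aliases = aliases or {}
    index_of = {name: i for i, name in enumerate(target_shapes)}
    weights = [0.0] * len(target_shapes)
    missing = []
    for name, weight in frame.items():
        target_name = aliases.get(name, name)
        if target_name in index_of:
            weights[index_of[target_name]] = weight
        else:
            # Keep track of curves the target model cannot play back.
            missing.append(name)
    return weights, missing

# Hypothetical recorded frame and a target model with fewer, renamed shapes.
recorded = {"Jaw_Open": 0.6, "Eye_Left_Blink": 1.0, "Tongue_Up": 0.3}
target = ["Eye_Left_Blink", "MouthOpen"]
weights, missing = apply_frame_by_name(recorded, target, {"Jaw_Open": "MouthOpen"})
```

The `missing` list makes it visible which parts of a take are silently dropped on a given model.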

youtube

youtube

The next step is head rotation and arm IK; I also need to decide whether to use MetaHuman.


Text

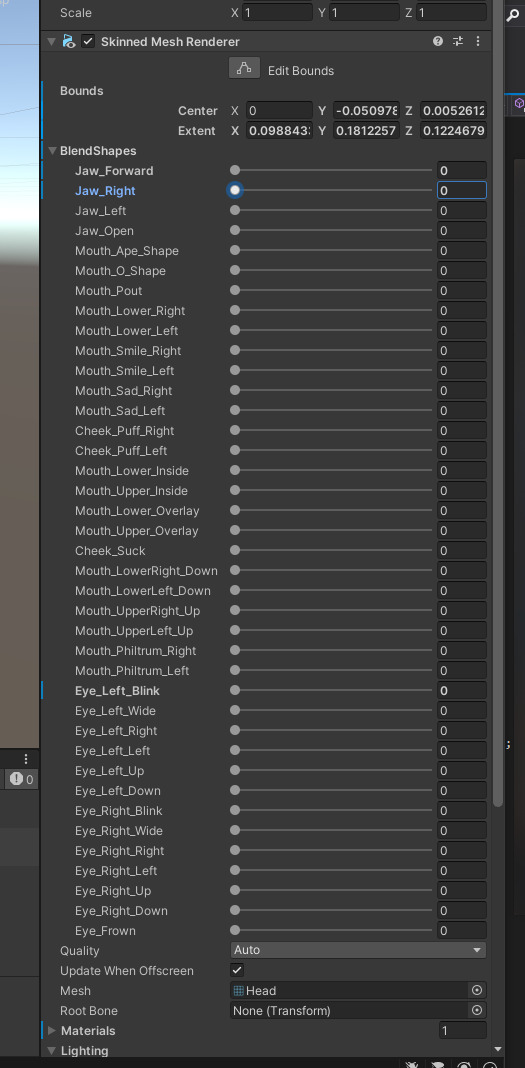

VIVE Pro Eye and Lip Tracking in Unity

Explored how the VIVE Pro Eye and lip tracking SDK works in Unity. The general workflow in Unity is clear now. It may take a little more time to sort out the details of the code in the example scene, and then to see which approach can be used to record and play back the model.


Text

Vive Pro Eyetracking not working | Debugged

[Bugs]

1. The Vive doesn't work when connected to my PC; my guess is that something is wrong with my GPU.

2. Found that the eye-tracking setting in the plugin settings was unticked, but other details may also be causing problems and need a closer look.

[Debugs]

1. Devices Problem

Blue screen of death when using SteamVR on my computer, but it works well on another one.

2. Note

After ticking the setting, the eye tracking also has to be calibrated. Calibration needs input both on the computer and in the VR headset, so remember to click on the computer side to confirm!

[Neos VR]

It works much better now. The VIVE Facial Tracker can capture 38 blend shapes across the lips, jaw, teeth, tongue, cheeks, and chin. I am thinking that if Eye Open could be combined with eyebrow movement, there might be a chance to make the eye tracking more vivid. I'm going to work on the Vive SDK for Unity or UE4 tomorrow. I also need to take some time to look into the range of rigged models.


Text

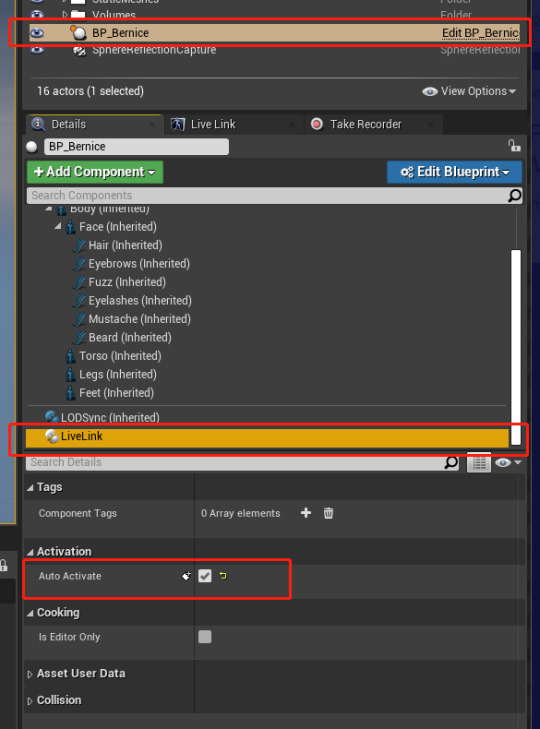

iPhone Facial UE4 & Metahuman | Debugged

The official UE4 video gives a very good and complete overview of facial mocap applied to MetaHuman, although some details are not mentioned in it. This tutorial has more detailed explanations, and it may also be useful later for understanding hand or body tracking.

The brief workflow is:

1. Create a MetaHuman model (it can be personalized) and export it to UE4.

2. Set up Live Link Face on the iPhone.

3. Install and configure the plugins in UE4.

Here's what I think is easy to overlook or where I've made mistakes:

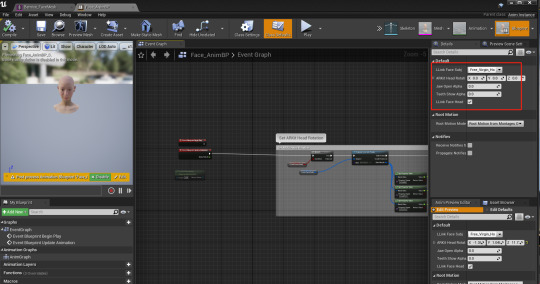

① The MetaHuman model needs the Live Link animation skeleton added so that it plays in real time, as follows.

② The LLink Face Subj in the face rigging Blueprint: the Animation Mode needs to be changed to Use Animation Blueprint first.

③ I spent a long time on a problem where the recording contained only one frame. It was a silly mistake, because I did not think it was a phone-side problem at all. The timecode on the iPhone has to match the UE4 timecode; changing the timecode setting to NTP fixes it.

[Mocap Data]

The blendshape data can be exported directly from the iPhone as a CSV file, and recording in UE4 also captures animation data for the whole head and face.
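To give an idea of how such a CSV could be inspected outside UE4, here is a minimal Python sketch. The column layout (a `Timecode` column, a `BlendShapeCount` column, then one column per blendshape) and the sample values are assumptions for illustration, not data from this project; check the header of the real export before relying on it.

```python
import csv
import io

# Hypothetical sample; a real export would be read from a file on disk.
SAMPLE_CSV = """Timecode,BlendShapeCount,EyeBlinkLeft,EyeBlinkRight,JawOpen
10:00:00:00,3,0.05,0.06,0.10
10:00:00:01,3,0.80,0.82,0.12
10:00:00:02,3,0.10,0.09,0.45
"""

def load_blendshape_frames(text):
    """Return a list of (timecode, {curve_name: value}) tuples."""
    reader = csv.DictReader(io.StringIO(text))
    frames = []
    for row in reader:
        timecode = row.pop("Timecode")
        row.pop("BlendShapeCount", None)  # bookkeeping column, not a curve
        frames.append((timecode, {name: float(v) for name, v in row.items()}))
    return frames

def peak_per_curve(frames):
    """Find the largest value each curve reaches over the whole take."""
    peaks = {}
    for _, values in frames:
        for name, value in values.items():
            peaks[name] = max(peaks.get(name, 0.0), value)
    return peaks

frames = load_blendshape_frames(SAMPLE_CSV)
peaks = peak_per_curve(frames)
```

Looking at per-curve peaks is a quick way to spot blendshapes that never move in a take, which can hint at a tracking or calibration problem.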

[The final look]

The playback in UE4 is here

[Tomorrow Task]

Try to solve the Vive eye-tracking problem and spend some time figuring out why my computer cannot run the Vive. With luck, I can also do something with the Vive MoCap data.
