
Week #6 DES 303

The Experience: Experiment #2

Reiterating the previous week's plan, experiment #2 will involve sustainability and product design. The idea is to provide cheap laser-cut wooden products to replace their plastic counterparts.

I decided to make a coat hanger. I saw one in my room and thought it was as good as anything else.

For this experiment, the most critical success criteria are:

Usability similar to its plastic counterpart.

Minimal material use, and thus lower waste and lower cost.

As a bonus, I wanted to address the issue of having to stretch clothes to get them onto the hanger. This is mainly a novelty feature, intended to incentivise less environmentally conscious consumers to try a new product like this.

Elegant and simple solutions to this problem exist, as seen below. However, simply copying the design won't work: most wood does not have the same strength as polypropylene (PP), and laser cutting a non-tessellating shape like that would be wasteful.

A Muji coat hanger designed with a slot for easy access. Retrieved from MUJI Polypropylene Laundry Hanger

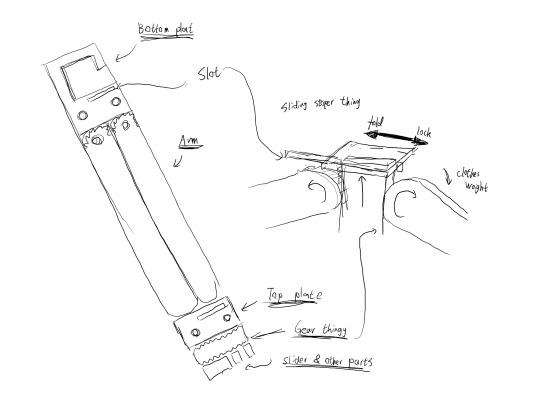

Some quick hallucination gave me the following idea: a hanger that can be folded and has a uniform, compact cutting area for production.

Initial idea sketch

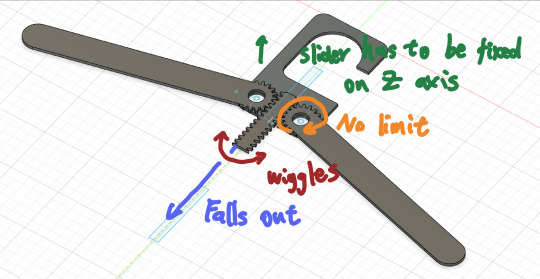

Putting the idea into Fusion took some time, but a somewhat tangible state was reached eventually.

The process of slowly building up the assembly in Fusion

After modelling everything in actual proportion, I remembered that parts, in real life, do, in fact, move around if they are not fixed in place. In other words, this thing would be a nightmare to assemble when all fixed up.

All the small things I did not consider became obvious

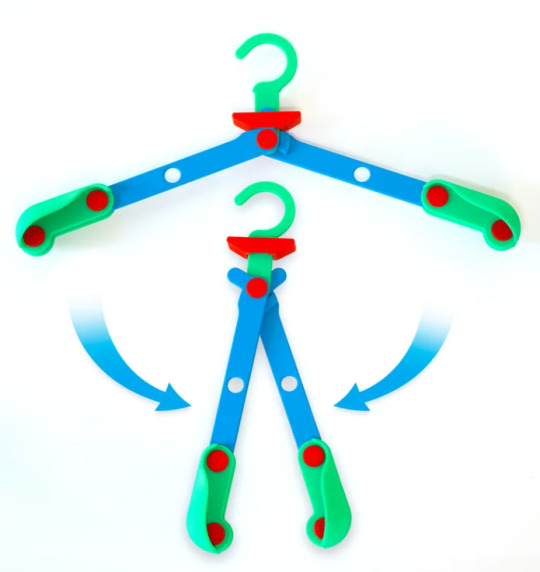

This calls for a redesign. Research revealed an interesting 3D printable design that folds with a fascinating mechanism: EZPZ Hanger // Folding Clothes Hanger - 3D model by Make Anything on Thangs.

Folding coat hanger by Make Anything. Retrieved from Thangs

This time, I decided not to CAD it until I knew it would work, so a paper prototype was constructed.

Paper prototype exploring a laser-cut version of the design.

It was immediately apparent that the "neck" area of the design would need to be quite large and complicated to fit neatly into the cutting area alongside the other components. The same is true for the arm. However, if appropriately designed, a pair of them could tessellate into a rectangle in a yin-yang-esque pattern. This was explored further in a paper prototype. While playing around, I accidentally discovered a totally different design that was much more compact:

Paper prototype of a new potential design.

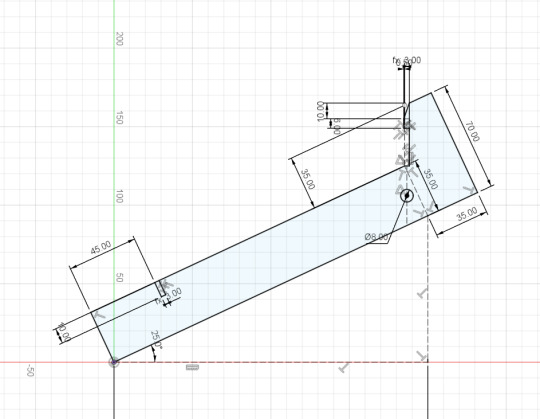

Instead of trying to cater for wardrobe rods of undetermined diameter, this design clips onto clothing lines or a standardised wooden rail that could be easily installed. Under the weight of the clothing, the clamp grips the rail or wire harder, but once the hanger is taken off, it folds in on itself and releases the garment easily. With this in mind, a CAD model was created:

Process of creating the new design in Fusion. Notice that one arm is double-layered to avoid a twisting motion when the clamp closes on the rail.

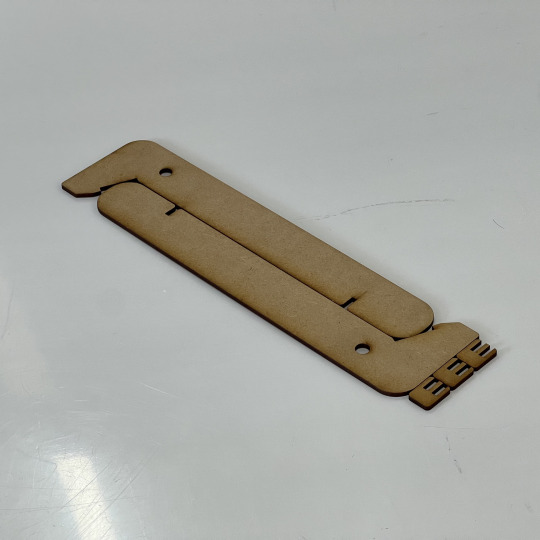

The laser-cut prototype came out exactly like the model:

Final prototype

Since the design was made from the ground up with the manufacturing technique in mind, the cut area can be sectioned off from the material as a uniform rectangle with minimal waste:

A compact cut result consisting of a pair of arms and a few clips

Some quick calculation reveals that the material cost for this design is acceptable, even when buying the plywood at retail prices.

Cut area for each pair: 300 mm × 70 mm

Poplar plywood: $40 for a 2400 mm × 1200 mm × 3 mm panel

Hangers per panel: ⌊2400/300⌋ × ⌊1200/70⌋ × 2/3 = 8 × 17 × 2/3 ≈ 90 (floored). The 2/3 factor applies because, with the double-layered arm, every 3 pairs of cuts make 2 complete hangers.

Material cost per hanger: $40 / 90 ≈ $0.44
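The panel arithmetic can be checked with a few lines of Python. This is a sketch that uses only the figures quoted in the post; it ignores laser kerf and assumes every pair is cut in the same orientation on the panel.

```python
# Figures from the post: one pair of arms needs a 300 mm x 70 mm cut area,
# cut from a 2400 mm x 1200 mm poplar plywood panel costing $40.
PANEL_W_MM, PANEL_H_MM = 2400, 1200
PAIR_W_MM, PAIR_H_MM = 300, 70
PANEL_PRICE = 40.0


def pairs_per_panel() -> int:
    """Whole cut areas that fit on one panel; partial strips are discarded."""
    return (PANEL_W_MM // PAIR_W_MM) * (PANEL_H_MM // PAIR_H_MM)  # 8 * 17


def hangers_per_panel() -> int:
    """Every 3 pairs of cuts make 2 hangers because of the double-layered arm."""
    return pairs_per_panel() * 2 // 3


def cost_per_hanger() -> float:
    return PANEL_PRICE / hangers_per_panel()


print(pairs_per_panel(), hangers_per_panel(), round(cost_per_hanger(), 2))  # 136 90 0.44
```

Along the 2400 mm edge, eight 300 mm cut areas use the full length; along the 1200 mm edge, seventeen 70 mm strips leave a 10 mm offcut, which is where most of the waste would sit under these assumptions.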

Functionally, the coat hanger performed exactly as intended:

The hanger's holding and folding function both work as intended

In the crit session, this solution received a very positive reception. In fact, the only concern raised by peers was the burnt smell from the laser cutting process, as the prototype had been cut only an hour earlier. This concluded the work for the week.

Reflection on Action

It was genuinely annoying to discover that the first design I spent hours CADing wouldn't work. Even though the idea seemed sound in my head and looked good in Fusion, I failed to think of all the little physical details that rendered it unusable. Without something physical or even a simple digital simulation to interact with, it's incredibly easy to overlook minor but critical issues. While powerful, CAD is also time-consuming, and investing that much time before knowing whether something is viable felt inefficient in hindsight.

This misstep made me appreciate how useful hands-on prototyping can be, for validation AND for generating ideas I wouldn’t have come up with otherwise. Playing with paper parts helped me anchor the idea more solidly in reality and stumble upon a better design. Next time, starting with a quick and rough prototype, even on paper, might be a better use of time than jumping straight into CAD. Physically manipulating a concept proved more informative than staring at a digital one or perfecting a non-existent one in your head.

Theory

Wood came almost instinctively as the alternative when considering a plastic replacement. With the material being the centrepiece of the product, it creates a set of unique constraints and challenges. One designer who came to mind is David Trubridge, a New Zealand designer whose work revolves heavily around the material, which happens to be wood. His blog post, Wood, contains a few fascinating critiques of common perceptions of wooden products. It is reassuring to see a shared understanding of a significant limitation of wood manufacturing, unrecyclable off-cuts, which highlights the value of design effort put into minimising waste. In the post, he questions wood's supposedly minimal environmental impact and criticises labelling a product "eco-friendly" solely based on its material, especially in a mass manufacturing setting.

Navicula by David Trubridge. Retrieved from Navicula – David Trubridge

This leads to an interesting discussion about a relatively new opportunity created by emerging technology: we no longer have to rely solely on economies of scale and factories to manufacture goods. One of the key characteristics of Industry 4.0 is decentralisation, in this case of manufacturing. I used a laser cutter as a rapid prototyping tool. Although trivial to us design students, it represents an automation capability that could produce the same product anywhere, using local materials, tailored to anyone. This is discussed in much more detail in Distributed manufacturing: scope, challenges and opportunities. I find this all very interesting, and there is much potential to explore and expand upon the experiment on a deeper and larger scale.

Preparation

As all goals for this experiment were achieved, I conclude that experiment #2 was successful and complete.

As a continuation, further explorations could be done on how the distributed manufacturing and wooden product theme might be applied to something more complex and technological, e.g. IoT devices and electronics, and on how people would approach such products.

For next week, I plan to do something physical with electronics, at least:

Explore the capabilities of an ESP32 development board I have

Perhaps see how that could interact with LLM applications from experiment #1.


Week #5 DES 303

The Experience: Experiment #1

Continuing the experiment from the previous weeks, the first order of business was to explore possible models that can run on my PC. Ollama provides a suite of different models.

List of available models that support tool calling from Ollama

First, a range of models of various sizes was tested by accessing them directly with the Ollama command line tool. Models in the 32B parameter range proved unusable for real-time applications, generating text slower than a typical typing rate. Models around the 14B range (non-reasoning) gave a more reasonable response time of around 4-6 seconds for most short responses. Models in the 7B range responded almost instantly in a conversational setting. This seems to be the sweet spot for this particular hardware constraint, as smaller models in the 3B and 1.5B range start to struggle with longer conversations.
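The post doesn't say exactly how response speed was measured. One way to put numbers on "slower than typing" is to read the generation metadata returned by Ollama's REST API. The sketch below assumes a local Ollama server on its default port (11434) and a non-streaming call to `/api/generate`, whose response includes `eval_count` (tokens generated) and `eval_duration` (time spent generating, in nanoseconds).

```python
import json
import urllib.request

# Default address of a local Ollama server's generate endpoint.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def tokens_per_second(response: dict) -> float:
    """Generation speed from Ollama's response metadata.

    eval_count is the number of generated tokens; eval_duration is the time
    spent generating them, in nanoseconds.
    """
    return response["eval_count"] / (response["eval_duration"] / 1e9)


def benchmark(model: str, prompt: str) -> float:
    """Send one non-streaming prompt to a local Ollama server and return tokens/s."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    request = urllib.request.Request(
        OLLAMA_GENERATE_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as resp:
        return tokens_per_second(json.load(resp))
```

With a model already pulled, a call such as `benchmark("qwen2.5:7b", "Tell me a joke")` returns tokens per second (the model tag is only an example). Running the same prompt against each candidate size gives a like-for-like comparison rather than a gut feeling.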

With a workable model size in mind, I tested a few DeepSeek-R1 models. It is important to note that all the DeepSeek-R1 variants that can realistically run on a local machine are small Qwen or Llama models distilled from it; the real thing has north of 600B parameters. However, this model, along with other reasoning models, spends a significant number of thinking tokens before responding. As our task will likely not involve complex reasoning, this behaviour is undesirable since it significantly impacts response time. This made it clear that reasoning models are unsuitable for our use case.

The Ollama command line tool was used to interact with the models. See also: DeepSeek-R1-7B having a cyber panic attack over "tell me a joke"
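To see how much of a reasoning model's output goes into thinking, the raw response can be split at its reasoning block. This sketch assumes the DeepSeek-R1 distills wrap their reasoning in `<think>...</think>` tags in the raw text, and it counts whitespace-separated words as a rough stand-in for tokens; both are simplifications.

```python
import re

# Assumed format: reasoning wrapped in <think>...</think> before the visible answer.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(output: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a raw model response."""
    match = THINK_BLOCK.search(output)
    if match is None:
        return "", output.strip()
    reasoning = match.group(1).strip()
    answer = (output[: match.start()] + output[match.end():]).strip()
    return reasoning, answer


def reasoning_share(output: str) -> float:
    """Fraction of words spent thinking (a rough proxy for the token share)."""
    reasoning, answer = split_reasoning(output)
    r, a = len(reasoning.split()), len(answer.split())
    return r / (r + a) if r + a else 0.0
```

A share close to 1 for a short request like "tell me a joke" means nearly all of the waiting time is spent on text the user never needs to read.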

The following non-reasoning models were selected to be tested with the frameworks chosen in the previous week:

Mistral 7B-instruct

Qwen2.5 7B-instruct

Phi-4 mini (3.8B params)

I started with Smolagents, as I had high expectations given that it comes from the Hugging Face team. The initial experience was great: the code needed to get going was minimal, the debugging printouts were clean, and the provided UI worked perfectly.

A test tool agent set up in Smolagents with minimal code

This is where the good things end, though. The code agents, this framework's main selling point, incurred a heavy responsiveness penalty.

Printout for a simple prompt to call a toy function. 12 seconds for "5" is unusable in a real-time situation, although it is cool to see the steps the LLM took.

Model-wise, Mistral and Qwen showed no discernible difference in performance and speed, but Phi-4 mini kept failing.

Phi-4 mini absolutely struggling to do anything

Investigation into the issue revealed that the agent's architecture is heavily geared toward completing logically complex, multi-step tasks from high-level user inputs (e.g. "create a children's storybook as a PDF"). It guides the model to act like a reasoning model through a very complex system prompt, hence the high input token count seen in the picture above. This immediately discouraged me from using this framework, as responsiveness is critical for our use case, especially given our hardware constraints. The first debugging tip in the documentation prompted me to move on to PydanticAI with certainty:

Of course, Sherlock, how did I not think of that? Retrieved from Building good Smolagents - Smolagents

In comparison, PydanticAI is more hands-on and requires more manual coding, but in return it provides more fine-grained control.

Same dice rolling agent with PydanticAI.

Note that many of the extra lines create a rudimentary command line interface, which is not provided out of the box as it is in Smolagents. The logging and debugging experience is great, as seen below. Although it is a paid service, the free tier of 10 million spans per month is very generous. Most importantly, the result came through in 2 seconds: acceptable performance for real-time use and a clear improvement over the previous 12 seconds.

Agent run evaluated with Logfire, a visualisation tool also built by the Pydantic team.

As for the models, they behaved similarly in this framework: there was no discernible difference between Mistral and Qwen, but Phi kept tweaking out. With both critical goals of Experiment #1 completed, this concluded my work for the week.

Reflection on Actions

At the start of this experiment, I was optimistic about testing locally run models and frameworks for real-time responsiveness. My main goal was to find a model-framework pair that balanced speed with functional output. Discovering that 7B models offered the best performance on my hardware was a key success.

I was initially impressed by Smolagents' ease of use, but its response times were unacceptable. Switching to PydanticAI was a success: it required more manual work but delivered far better speed and control. With this, function calling was achieved with acceptable performance.

This experience showed that practical testing often reveals limits that aren’t obvious from documentation alone.

Theory

All of the research that informed my decision-making was already mentioned and referenced in the experience section. It is almost as if reading and researching to address issues encountered is an essential part of the experimental experience. It is especially puzzling as this project is a technical experiment, not a design endeavour to address a design brief, where this section would prove highly beneficial. This raises the question of whether this framework was tested at all for our specific use case before being enforced in the curriculum. In comparison, the What? So what? Now what? | Reflection Toolkit seems more appropriate for this use case. It combines the "Reflection on Action" and "Theory" sections, providing flexibility for cases where an explicit theory section does not offer practical value.

To fill this section, here is an interesting paper explaining how DeepSeek-R1 was trained, which also provides a trustworthy source on the effectiveness of distillation in LLMs. This instils confidence in the chosen local deployment direction, as it shows growth potential for smaller models in the future: DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

Preparation

Having completed the critical goals for the experiment, namely running locally and supporting function calling, I consider the experiment successful for the foundational phase.

However, this is only a very early technical proof of concept. Many elements are missing for this to be a true personal assistant. Namely:

Long-term memory.

A more user-friendly interface. Potentially a voice interface.

Implementation of actually useful tools. E.g. interaction with home automation equipment.

While attempting to prepare a plan to build the experiment to a presentable state by addressing the aspects above, I soon realised it might not be feasible in three days.

Long-term memory is a difficult problem to address. Judging from insights in a survey paper on RAG, the most likely implementable solution would be query-based RAG (retrieval-augmented generation). Precedents in the field, such as MemoryBank: Enhancing Large Language Models with Long-Term Memory, further support this direction. However, since this involves interacting with and managing the vector databases commonly used in the field, such as ChromaDB, I expect it to be time-consuming.
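Query-based RAG for long-term memory boils down to storing past snippets, retrieving the ones most similar to the new message, and prepending them to the prompt. The sketch below is a toy under loud assumptions: a bag-of-words vector and cosine similarity stand in for a real embedding model and a vector database such as ChromaDB, and `MemoryStore` and `build_prompt` are hypothetical names.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would use a neural embedding model."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """Hypothetical long-term memory: keep past facts, retrieve the most relevant."""

    def __init__(self) -> None:
        self.memories: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.memories.append((text, embed(text)))

    def query(self, question: str, k: int = 2) -> list[str]:
        q = embed(question)
        ranked = sorted(self.memories, key=lambda m: cosine(q, m[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(store: MemoryStore, question: str) -> str:
    """Prepend retrieved memories to the user's message before it goes to the LLM."""
    context = "\n".join(f"- {m}" for m in store.query(question))
    return f"Relevant memories:\n{context}\n\nUser: {question}"
```

Swapping `embed` for a neural embedding model and the in-memory list for a vector database is where the time-consuming work anticipated above would come in.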

Prior experience with speech recognition systems showed that conversational use is infeasible in a noisy environment. A speaker diarization solution, like Resemblyzer: A python package to analyze and compare voices with deep learning, might prove beneficial, but it could be time-consuming as I have no prior experience with this kind of technology.

As such, I decided to conclude this experiment in its current state as a technical proof of concept and complete another, shorter experiment. The next idea from the ideation phase will do nicely: experiment #2 will involve sustainability and product design, with the idea of providing cheap laser-cut wooden products to replace their plastic counterparts. The plan is to:

Explore the capabilities and limitations of a laser cutter.

Design and produce a functional prototype, with material as a central feature of the product and not just a means to a functional end.

Gather user feedback during the crit session.


Week#4 DES 303

The Experience: Experiment #1

Incentive & Initial Research

Following the previous ideation phase, I wanted to explore the use of LLMs in a design context. The first order of business was to find a unique area of exploration, especially given that the industry is already full of people attempting to shove AI into everything that doesn't need it.

Drawing from a positive experience working with AI for a design project, I want the end result of the experiment to focus on human-computer interaction.

John the Rock is a project in which I handled the technical aspects. It had a positive reception despite having only rudimentary voice chat capability and no other functionalities.

Through initial research and personal experience, I identified two prominent, polar-opposite uses of LLMs in the field: as a tool or as a virtual companion.

In the first category, we have products like ChatGPT (obviously) and Google NotebookLM that focus on web searching, text generation and summarisation tasks. They are mostly session-based and objective-focused, retaining little information about the users themselves between sessions. AI voice assistants used for home automation, including Siri and Alexa, also loosely fall into this category, as their primary function is to complete the immediate task rather than build a connection with the user.

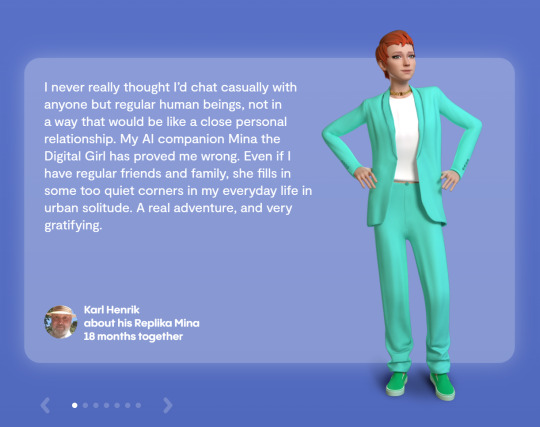

In the second category, we have products like Replika and character.ai that focus solely on creating a connection with the user, with few real functional uses. To me, this use case is extremely dangerous. These agents aim to build an "interpersonal" bond with the user. However, as users have no control over these strictly cloud-based agents, this very real emotional bond can easily be exploited for manipulative monetisation and for harvesting sensitive personal information.

Testimony retrieved from Replika. What are you doing, Zuckerberg? How is this even a good thing to show off?

As such, I am very interested in exploring the technical feasibility of a local, private AI assistant that is entirely in the user's control, designed to build a connection with the user while being capable of completing more personalised tasks such as calendar management and home automation.

Goals & Plans

Critical success criteria for this experiment include:

Runnable on my personal PC, which is built around an 8GB RTX 3070 (a mid-range graphics card from 2020).

Able to perform function calling, thus accessing other tools programmatically, unlike a pure conversational LLM.
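A back-of-envelope estimate shows why an 8GB card steers the choice of model size. This sketch counts only the memory needed to hold the weights, assuming roughly 4-bit quantisation; the 1.5 GB headroom for the KV cache and runtime is an assumed figure, not a measurement.

```python
# Rough weights-only VRAM estimate. Assumes ~4-bit quantised weights and an
# assumed fixed headroom; real usage also depends on context length and runtime.
VRAM_GB = 8  # RTX 3070


def weights_gb(params_billion: float, bits_per_weight: float = 4) -> float:
    """Memory needed just to hold the weights, in (decimal) gigabytes."""
    return params_billion * bits_per_weight / 8


def fits_in_vram(params_billion: float, bits_per_weight: float = 4,
                 headroom_gb: float = 1.5) -> bool:
    """True if the weights plus the assumed headroom fit on the card."""
    return weights_gb(params_billion, bits_per_weight) + headroom_gb <= VRAM_GB


for size in (3, 7, 14, 32):
    print(f"{size}B: ~{weights_gb(size):.1f} GB of weights, fits: {fits_in_vram(size)}")
```

Under these assumptions a 7B model needs about 3.5 GB of weights while a 32B model needs about 16 GB, well beyond the card. When a model does not fit, Ollama splits it between GPU and CPU memory, which slows generation considerably.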

Features that could be good to have include:

A long-term memory mechanism that builds a connection with the user over time.

Voice conversation capability.

The plan for approaching the experiments is as follows:

Evaluate open-source LLM capabilities for this use case.

Research existing frameworks, tooling and technologies for function calling, memory and voice capabilities.

Construct a prototype taking into account previous findings.

Gather and evaluate user responses to the prototype.

The personal PC in question is of a comparable size to an Apple HomePod. (Christchurch Metro card for scale)

Research & Experiments

This week's work revolved around researching:

Available LLMs that could run locally.

Frameworks to streamline function calling behaviour.

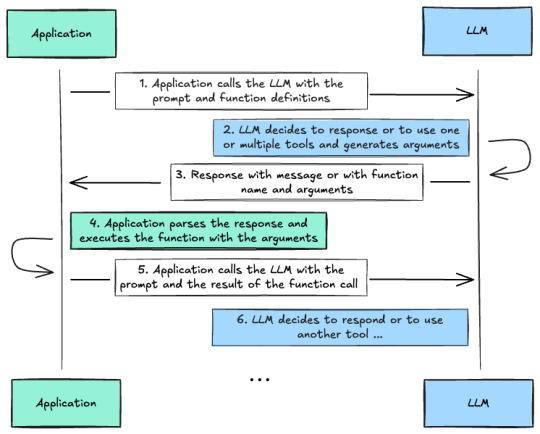

Function calling is the critical feature I wanted to explore in this experiment, as it would serve as the basis for any further development beyond toy projects.

A brief explanation of integrating function calling with LLMs. Retrieved from Hugging Face.

Interacting with the LLM directly would mean that all interactions and the conversation log must be handled manually, which, from prior experience, is tedious. A suitable framework could handle this automatically. A quick search revealed the following candidates:

LangChain, optionally with LangGraph for more complex agentic flows.

Smolagents by Hugging Face.

PydanticAI.

AutoGen by Microsoft.

To make any of them work, I first needed a platform to host and manage LLMs locally. Ollama was chosen as the initial tool since it was the easiest for setting up and downloading models. Additionally, it provides an OpenAI-compatible API for simple programmatic access from almost all frameworks.
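To illustrate the manual bookkeeping a framework would take over, here is a minimal hand-rolled tool-calling loop against Ollama's OpenAI-compatible endpoint. It is a sketch, not any framework's implementation: it assumes the default local address, an example model tag (`qwen2.5:7b`) and a hypothetical `roll_dice` tool, with message and tool shapes following the OpenAI chat-completions format.

```python
import json
import random
import urllib.request
from typing import Callable

# Ollama's OpenAI-compatible chat endpoint on its default local address.
OLLAMA_CHAT_URL = "http://localhost:11434/v1/chat/completions"


def roll_dice(sides: int) -> int:
    """Hypothetical toy tool the model can call."""
    return random.randint(1, sides)


TOOLS = {"roll_dice": roll_dice}

# Tool description in the OpenAI chat-completions "tools" format.
TOOL_SCHEMAS = [{
    "type": "function",
    "function": {
        "name": "roll_dice",
        "description": "Roll a die with the given number of sides.",
        "parameters": {
            "type": "object",
            "properties": {"sides": {"type": "integer"}},
            "required": ["sides"],
        },
    },
}]


def ollama_chat(messages: list, tools: list, model: str = "qwen2.5:7b") -> dict:
    """Send the whole conversation and return the assistant message from the reply."""
    body = json.dumps({"model": model, "messages": messages, "tools": tools}).encode()
    request = urllib.request.Request(
        OLLAMA_CHAT_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as resp:
        return json.load(resp)["choices"][0]["message"]


def run_turn(messages: list, chat: Callable[[list, list], dict] = ollama_chat) -> str:
    """Handle one user turn: keep running requested tools until the model answers."""
    while True:
        reply = chat(messages, TOOL_SCHEMAS)
        messages.append(reply)  # without a framework, the log is ours to maintain
        calls = reply.get("tool_calls") or []
        if not calls:
            return reply["content"]
        for call in calls:
            tool = TOOLS[call["function"]["name"]]
            args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
            messages.append({
                "role": "tool",
                "tool_call_id": call["id"],
                "content": str(tool(**args)),
            })
```

With Ollama running and the model pulled, `run_turn([{"role": "user", "content": "Roll a 20-sided die"}])` sends the request, runs the tool if the model asks for it and returns the final answer. Every message, including each tool result, has to be appended to the log by hand.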

A deeper dive into each option can be summarised with the following table:

Of these options, LangChain was eliminated first due to many negative reviews on its poor developer experience. Reading AutoGen documentation revealed a "loose" inter-agent control, relying on user proxy and terminating on 'keywords' from model response. This does not inspire confidence, and thus the framework was not explored further.

PydanticAI stood out with its type-safe design. Smolagents stood out with its 'code agent' design, where the model utilises given tools by generating Python code instead of alternating between model output and tool calls. As such, these two frameworks were set up and explored further.
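The appeal of PydanticAI's type-safe design is that arguments the model produces for a tool are checked against the tool function's signature before the tool runs; its documentation describes passing validation errors back to the model so it can retry. The stdlib sketch below only approximates the checking half of that idea. It is not PydanticAI's implementation, and `validate_call`, `call_tool` and `roll_dice` are hypothetical names.

```python
import inspect
import random
import typing


def roll_dice(sides: int) -> int:
    """Hypothetical tool used for illustration."""
    return random.randint(1, sides)


def validate_call(fn, args: dict) -> dict:
    """Reject model-supplied arguments that don't match the tool's signature.

    Only plain classes such as int or str are checked here; PydanticAI builds a
    Pydantic validator from the signature instead, which covers far more cases.
    """
    hints = typing.get_type_hints(fn)
    params = inspect.signature(fn).parameters
    unknown = set(args) - set(params)
    if unknown:
        raise TypeError(f"unexpected arguments: {sorted(unknown)}")
    for name, param in params.items():
        if name not in args:
            if param.default is inspect.Parameter.empty:
                raise TypeError(f"missing argument: {name}")
            continue
        expected = hints.get(name)
        if expected is not None and not isinstance(args[name], expected):
            raise TypeError(
                f"{name} should be {expected.__name__}, got {type(args[name]).__name__}"
            )
    return args


def call_tool(fn, args: dict):
    """Validate first, then call; the TypeError message could be sent back to the model."""
    return fn(**validate_call(fn, args))
```

The point of the design is that a malformed call such as `{"sides": "six"}` fails loudly before the tool runs, instead of producing a confusing error deep inside it.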

This concluded this week's work: identifying the gap in existing AI products and exploring technical tooling for further development of the experiment.

Reflection on Action

Identifying a meaningful gap before starting the experiment felt like an essential step. With the field so filled with half-baked chatbots in the Q&A section, diving straight into building without a clear direction would have risked creating another redundant product.

Spending a significant amount of time upfront to define the problem space, particularly the distinction between task-oriented AI tools and emotional companion bots, helped me anchor the project in a space that felt both personally meaningful and provided a set of unique technical constraints to work around.

However, this process was time-intensive. I wonder if investing so heavily in researching available technologies and alternatives, without encountering the practical roadblocks of building, could lead to over-optimisation for problems that might never materialise. There is also a risk of getting too attached to assumptions formed during research rather than letting real experimentation guide decisions. At the same time, running into technical issues like function calling and local deployment could prove even more time-consuming if encountered.

Ultimately, I will likely need to recalibrate the balance between research and action as the experiment progresses.

Theory

Since my work this week has been primarily focused on research, most of the relevant sources are already linked in the post above.

For Smolagents, Hugging Face provided a peer-reviewed paper claiming that code agents have superior performance: [2411.01747] DynaSaur: Large Language Agents Beyond Predefined Actions.

PydanticAI explained their type-checking behaviour in more detail in Agents - PydanticAI.

These provided context for my reasoning stated in previous sections and consolidated my decision to explore these two frameworks further.

Preparation

Having confirmed frameworks to explore, it becomes easy to plan the following courses of action:

Research lightweight models that can realistically run on the stated hardware.

Explore the behaviours of these small models within the two chosen frameworks.

Experiment with function calling.


Week#3 DES 303

Description

This week, more ideation on technical experiments was done, and the tech demo took place. The rest of this post describes the two activities in more detail.

Tech Demo

The tech demo went as planned. The actual demo part took slightly longer than expected, but overall, the entire demonstration stayed within the time limit. Most feedback on the technology was positive. Suggestions included improving the delivery, tying the demo more closely to design, and making the instructions for the interactive demo clearer.

Ideation

Following last week's class exercise, I conducted an independent exploration drawing inspiration from points observed in that activity. First, my areas of expertise and interest were outlined:

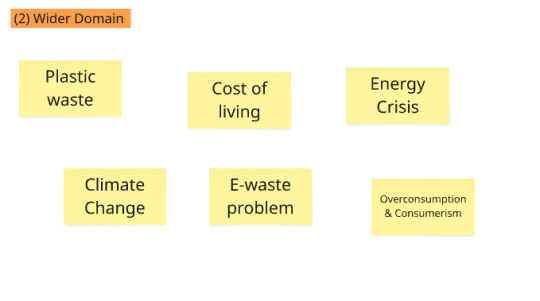

Next, the broader social issues are outlined:

Following the same process, connections are drawn between different areas of interest and social problems:

Next, "what if" statements were drawn from the connections above. In this case, extensions and mixes of ideas were combined on the same graph, marked by arrows and red stickers.

Many interesting possibilities could be drawn from the statements above. Many are significantly out of reach for a 'technical experiment'; thus, many were simplified significantly and/or had elements extracted as standalone experiments, as seen below.

Feelings

The demo itself was stressful. I feel indifferent about the feedback, as I expected delivery to be the weaker link since I am not good at public speaking. However, the feedback about unclear instructions was unexpected.

The ideation process was frustrating, especially around creating what-if statements and technical experiment ideas.

Evaluation

For the demo side, the most helpful feedback was to tie the demo closer to the design. Due to the time constraint, I focused my demo on the technical aspect and did not explore the potential uses in design further. Little can be done to address this issue since it was a conscious decision to emphasise the technical side. The feedback on the interactive component's clarity was surprising, as I had never considered the instructions unclear.

For the ideation, I found it helpful to aim for a certain number of notes per minute in each phase. This helped remove some of the instincts to filter out unrealistic ideas. However, this does not work well when coming up with the technical experiment part. Maybe, I don't know, because we need to actually do the experiment? It's almost as if having a hanging end goal of having to complete some feasible experiment is bad for creating new ideas.

Analysis

The most pressing issue on the demo side was communication with the target audience before and during the demo, which caused the most problems. This might be due to my lack of practice and limited social interaction in general. Having a clear structure at the beginning of the presentation was helpful, as it allowed me to easily keep track of timing and made the flow more logical.

For the ideation side, I learned I really like the word "feasibility" and trying to find solutions to address given problems. Probably not good for ideation.

Conclusion

The key lesson was that I need to improve my interpersonal skills. Firstly, talking to people more might help me make the delivery more natural and less clunky. Secondly, it would be really helpful if I ran more experiments to test the demo with people who are not familiar with it to identify problems that might not be obvious to me.

It could be helpful to not have a predetermined end goal when doing ideation work, especially when said end goal involves specific technical elements. Because everyone loves seeing AI chatbots floating in a ball on every single website possible, it is such a good idea to search for problems that never existed with a tool in mind already. Of course, this is fine in this case because we are doing a "technical experiment", which happens to have to relate to some social issue in the ideation process.

Action Plan

For future presentations or customer-facing projects of any sort, test with users who are not familiar with the product to ensure the instruction/operation is straightforward. Perspective from a new pair of eyes proves to be helpful.

For ideation, just put down things on paper. Don't think.


Week#2 DES 303

Description

Two main things were achieved this week:

Developed tech demo slides and practised the presentation.

Ideated further on technical experiments.

The following sections will provide an overview of the work done for this week.

Tech Demo Preparation

A slideshow and a demo were prepared for the tech demo. The demo is separated into 3 sections: demo, technical breakdown and potential use & starting point.

The demo was first disguised as a Discord bot proxy forwarding messages between users in two different channels. Later, a twist reveals that an AI agent takes over the conversation halfway through. The technical breakdown then covers a minimal Python script that interfaces with the OpenAI API. The demo concludes with how an LLM could be used for design projects and provides links to some helpful tutorials.
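The proxy-then-takeover trick can be expressed independently of any Discord library. The sketch below is hypothetical: the channel names `a` and `b`, the `RelayBot` class and the `respond` callback (which in a real version would wrap a call to the OpenAI API) are illustrative, not the demo's actual code.

```python
from typing import Callable


class RelayBot:
    """Forward messages between two channels, then let an AI answer instead.

    After `takeover_after` messages have been forwarded, incoming messages are
    no longer passed on; the `respond` callback replies to the sender instead.
    """

    def __init__(self, takeover_after: int, respond: Callable[[list], str]) -> None:
        self.takeover_after = takeover_after
        self.respond = respond
        self.forwarded = 0
        self.history: list[dict] = []  # conversation log handed to the AI

    def on_message(self, channel: str, text: str) -> tuple[str, str]:
        """Return (channel_to_post_in, text_to_post) for an incoming message."""
        self.history.append({"role": "user", "content": text})
        if self.forwarded < self.takeover_after:
            self.forwarded += 1
            other = "b" if channel == "a" else "a"
            return other, text  # plain proxy: pass the message to the other side
        reply = self.respond(self.history)  # the AI now answers in the other person's place
        self.history.append({"role": "assistant", "content": reply})
        return channel, reply
```

Keeping the relay logic free of networking makes it easy to rehearse the twist without a live Discord server.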

Technical Experiments Ideation

The main event that took place this week was a collective ideation activity conducted in class.

Everyone was on the same virtual whiteboard and worked through the following phases:

Interests: 10 personal interests were written down.

Social issues, challenges and topics.

Connections: Notes in the above sections were gathered based on potential connections.

"What if" prompts were created based on the connections.

The possibilities were expanded upon further by modifying the prompts. This includes reversing them, re-scaling them and combining unrelated prompts.

Final experiment ideations were drawn from the previous sections.

During the exercise, I had the opportunity to see various ideas. In the end, I identified a few points of interest throughout the exercise that might inform my further experiments:

What if I can turn (wasted) plastic into pottery?

Clay product?

Try automating some traditional crafting methods?

Design our cities based on their food specialities and food culture.

What if food distribution promotes better spatial design?

Cost of living crisis, exploitation of capitalism.

Feeling

I felt indifferent while preparing the tech demo. I was a bit nervous about the presentation itself but overall calm.

The ideation session was stressful, especially when I was trying to develop a certain number of ideas under the time constraint.

Evaluation

Overall, the tech demo preparation went smoothly. The only issue was that when trying to check all aspects mentioned in the rubric, I found it difficult to connect the usage of AI and the design capstone. It was an interesting problem because we usually choose to use tools based on the design problem we encounter and not the other way around.

In contrast, I found the group ideation activity more difficult. Listing out personal interests and relating social issues was straightforward despite being a bit stressful under time constraints. I had the most difficulties drawing connections and coming up with the 'what if' statements, especially given the prompt that we should avoid our notes in the previous steps.

Analysis

I had trouble justifying how AI could be used in design. This assignment was a special case in that it asked us to start with the technology. However, in the real world, we see many companies incorporating features such as AI chatbots without real need. A similar situation could be observed with NFTs a few years back. This is interesting and raises the question of whether forcing a trending tool into every solution is a good idea.

I found making connections in the group ideation activity difficult because I did not have time to think about the topics in my own time. I believe this is again due to my tendency to think too hard about the feasibility and logic behind things, which is not helpful in an ideation setting. This could be an area to improve upon. Additionally, it was interesting to see what the class came up with. The content in every phase of the activity varies wildly in theme, and some are pretty far-fetched. I believe this highlighted the effectiveness of group work in a creative setting.

Conclusion

I should avoid searching for solutions that use a particular technology. Instead, every technology used in a solution should serve a purpose.

It was a good practice run, but I should be more comfortable in the ideation phase and refrain from prematurely judging ideas.

Ideating in a group setting seems effective and it was quite useful to see different perspectives and ideas.

Action Plan

More communication with peers should be in place for the ideation phase in the coming week.

I should be more confident in coming up with solutions; forcing a quota to quickly write down x number of ideas in a certain amount of time, as seen in class, could be useful.


Week#1 DES 303

Description

A brief course introduction was given this week, and its goals were explained. Two things are going to happen at the same time:

Ideate a tech demo to teach peers the process of your practice, involving some unique skills.

Ideate for prototyping experiments, which will be useful for developing solutions in future projects.

The ideation process for the tech demo was short, as I don't have many skills I am confident enough in to teach others:

The ideation process for technical experiments was slightly more encompassing, involving things I like and dislike and my skills.

The aim was to explore our knowledge and passion and force us to self-reflect. The insight will further inform directions for future projects that aim to solve real-world problems.

Feelings

The process was straightforward for the tech demo ideation, and I felt indifferent.

However, when it came to ideation for the prototyping experiments, I was frustrated due to the lack of direction and guidelines.

Evaluation

The ideation process for the tech demo was straightforward, as the limited range of topics fit the discussion's criteria.

However, the same cannot be said about the prototyping experiment ideation. The difficulty stemmed from the openness of the topic, which made it hard to structure thoughts and pinpoint specific aspects of personal preferences and skills. This lack of constraints created a sense of uncertainty, making it difficult to initiate the brainstorming process. However, despite the initial struggle, the activity ultimately led to a surprising number of ideas. This outcome highlighted the method's effectiveness in encouraging self-reflection and generating insights that might not have surfaced otherwise. Additionally, the exercise served as a valuable tool for divergent thinking, which is particularly useful in the early stages of a project. While the open-ended nature of the task was challenging, the unexpected volume of ideas generated demonstrated its effectiveness in uncovering new perspectives and possibilities.

Analysis

Upon self-reflection, the difficulty in generating ideas stemmed from a tendency to prematurely judge and filter thoughts. Instead of freely listing out possibilities, I often found myself jumping to conclusions, evaluating the feasibility of each idea before even fully forming it.

However, in a divergent thinking and exploration phase, the goal is to generate as many ideas as possible without immediate concern for viability. By imposing limitations too soon, I inadvertently restricted creativity and made the task more challenging than necessary. This highlights an area for improvement in my approach as a designer.

Conclusion

The exercise served as a valuable practice run, reinforcing the importance of suspending critical evaluation during the ideation phase to allow for a broader range of possibilities.

Action Plan

For ideation in future projects, I should pay more attention to the filtering behaviour in the ideation phase. Instead of taking too long to think about an idea, I should actively record it immediately to reduce the chance of overthinking and denying its feasibility.
