Globose Technology Solutions Pvt Ltd (GTS) is an AI data collection company that provides datasets, including image, video, text, and speech datasets, for training machine learning models.


How AI is Transforming the Data Annotation Process

Introduction

In the rapidly advancing landscape of Artificial Intelligence (AI) and Machine Learning (ML), data annotation is essential for developing precise models. Historically, the process of data labeling was both time-consuming and labor-intensive. Nevertheless, recent innovations in AI are streamlining the data annotation process, making it quicker, more efficient, and increasingly accurate.

In what specific ways is AI changing data annotation? The sections below walk through them.

What is Data Annotation?

Data annotation is the process of labeling data (images, text, audio, video) to train AI models. It enables machines to understand and interpret data accurately.

In Computer Vision, images are annotated with bounding boxes, segmentation masks, or key points.

In Natural Language Processing (NLP), text is labeled for sentiment analysis, named entity recognition, or intent detection.

In Speech Recognition, audio files are transcribed and labeled with timestamps.

AI models rely on high-quality annotated data to learn and improve their performance. But manual annotation is often expensive and slow. That’s where AI-powered annotation comes in.

The Impact of AI on Data Annotation

1. Automation of Annotation Processes through AI

AI-driven technologies facilitate the automatic labeling of data, thereby minimizing the need for human involvement and enhancing overall efficiency.

Pre-trained AI models possess the capability to identify patterns and perform data annotation autonomously.

Object detection algorithms can create bounding boxes around objects in images without the necessity for manual input.

Speech recognition AI can swiftly transcribe and annotate audio recordings.

For instance, AI vision models can be employed to automatically annotate images within datasets used for self-driving vehicles.

2. Active Learning for Enhanced Labeling Efficiency

Rather than labeling every individual data point, artificial intelligence identifies the most significant samples for human annotation.

This approach minimizes unnecessary labeling efforts.

It prioritizes challenging instances that necessitate human expertise.

Consequently, it accelerates the entire annotation process.

For instance, in the field of medical imaging, AI supports radiologists by pinpointing ambiguous areas that demand expert evaluation.
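
The selection step described above is often implemented as uncertainty sampling. The following is a minimal sketch, assuming the model exposes class probabilities for each unlabeled sample; the sample ids, the probabilities, and the `select_for_annotation` helper are hypothetical and only illustrate the idea.

```python
import math

def entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_for_annotation(predictions, budget):
    """Return the ids of the `budget` samples the model is least sure about.

    `predictions` maps a sample id to the model's class probabilities.
    """
    ranked = sorted(predictions, key=lambda sid: entropy(predictions[sid]), reverse=True)
    return ranked[:budget]

# Hypothetical model outputs for four unlabeled scans (two classes each).
predictions = {
    "scan_001": [0.98, 0.02],
    "scan_002": [0.55, 0.45],
    "scan_003": [0.80, 0.20],
    "scan_004": [0.50, 0.50],
}

queue = select_for_annotation(predictions, budget=2)
print(queue)  # the two most ambiguous scans go to the human expert first
```

Entropy is only one possible uncertainty score; margin-based or committee-based scores would slot into the same ranking step.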

3. AI-Supported Human Annotation

Rather than substituting human effort, AI collaborates with human annotators to enhance efficiency.

AI proposes labels, while humans confirm or adjust these suggestions.

Annotation tools leverage AI-driven predictions to accelerate the labeling process.

This approach minimizes fatigue and reduces errors in extensive annotation initiatives.

For instance, in natural language processing, AI automatically suggests sentiment labels, which human annotators then refine for precision.
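
One way to organize this propose-then-confirm workflow is to sort model suggestions by confidence, so that annotators can quickly confirm the likely-correct ones and spend more time on the doubtful ones. The sketch below assumes each suggestion carries a confidence score; the `triage` helper, the 0.9 threshold, and the sample texts are illustrative assumptions, not part of any particular tool.

```python
def triage(suggestions, confirm_threshold=0.9):
    """Split model suggestions into a quick-confirm queue and a full-review queue.

    Both queues still go to human annotators; the split only changes how much
    attention each item is expected to need.
    """
    confirm_queue, relabel_queue = [], []
    for item in suggestions:
        if item["confidence"] >= confirm_threshold:
            confirm_queue.append(item)
        else:
            relabel_queue.append(item)
    return confirm_queue, relabel_queue

# Hypothetical sentiment suggestions produced by a pre-labeling model.
suggestions = [
    {"text": "Great battery life", "label": "positive", "confidence": 0.97},
    {"text": "Well, that was something", "label": "negative", "confidence": 0.52},
    {"text": "Arrived broken", "label": "negative", "confidence": 0.94},
]

confirm_queue, relabel_queue = triage(suggestions)
print(len(confirm_queue), "to confirm,", len(relabel_queue), "to review in full")
```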

4. Synthetic Data Generation

In situations where real-world data is limited, artificial intelligence can produce synthetic data to facilitate the training of machine learning models. This approach is beneficial for the detection of rare objects. It finds applications in fields such as autonomous driving, robotics, and healthcare AI. Additionally, it diminishes the reliance on manual data collection.

For instance, AI can create synthetic faces for training facial recognition systems, thereby alleviating privacy issues.

5. AI-Enhanced Crowdsourcing

The integration of AI in crowdsourcing enhances the quality of data annotation by:

Identifying discrepancies in human-generated labels.

Evaluating annotators according to their precision.

Guaranteeing the production of high-quality annotations on a large scale.

For instance, the combination of Amazon Mechanical Turk and AI facilitates the creation of extensive, high-quality labeled datasets.
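
The first two checks in the list above can be approximated with simple statistics before any model is involved. This sketch assumes each item was labeled by several annotators; it takes a majority vote as the consensus label and scores every annotator by how often they match it. The annotator ids and labels are made up for illustration.

```python
from collections import Counter, defaultdict

def majority_vote(labels_by_item):
    """Pick the most frequent label per item.

    Ties resolve to the label seen first; a real pipeline would
    probably escalate tied items for review instead.
    """
    return {
        item: Counter(votes.values()).most_common(1)[0][0]
        for item, votes in labels_by_item.items()
    }

def annotator_agreement(labels_by_item, consensus):
    """Fraction of each annotator's labels that match the consensus label."""
    hits, totals = defaultdict(int), defaultdict(int)
    for item, votes in labels_by_item.items():
        for annotator, label in votes.items():
            totals[annotator] += 1
            hits[annotator] += int(label == consensus[item])
    return {annotator: hits[annotator] / totals[annotator] for annotator in totals}

# Hypothetical crowd labels: item -> {annotator: label}.
labels = {
    "img_1": {"ann_a": "cat", "ann_b": "cat", "ann_c": "dog"},
    "img_2": {"ann_a": "dog", "ann_b": "dog", "ann_c": "dog"},
    "img_3": {"ann_a": "cat", "ann_b": "dog", "ann_c": "dog"},
}

consensus = majority_vote(labels)
scores = annotator_agreement(labels, consensus)
print(consensus)
print(scores)
```

Agreement with the majority is a rough proxy for precision, since the majority itself can be wrong; seeding the task with items of known ground truth is a common complement.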

The Prospects of AI in Data Annotation

As artificial intelligence advances, the automation of data annotation is likely to become increasingly refined. Self-learning AI systems may need far less human involvement, although human review will still matter for edge cases. AI-driven data augmentation will facilitate the creation of more comprehensive datasets. Additionally, real-time annotation tools will improve the efficiency of training AI models.

Conclusion

AI is transforming the landscape of data annotation, rendering it quicker, more intelligent, and scalable. Organizations that utilize AI-enhanced annotation will secure a competitive advantage in the development of AI models.

Are you looking to optimize your data annotation workflow? Discover AI-driven annotation solutions at GTS AI!


Image Data Annotation for Agriculture: Revolutionizing Crop Monitoring

Introduction

The field of agriculture is experiencing a digital revolution through the incorporation of AI-driven technologies. A notable development in this area is image data annotation, which supports precision farming and crop surveillance. Trained on annotated agricultural images, AI models can assess crop health, identify diseases, and refine yield predictions.

What is Image Data Annotation in Agriculture?

Image data annotation in agriculture refers to the process of labeling visual information, including satellite imagery, drone captures, and photographs of fields, to facilitate the training of machine learning models tailored for agricultural purposes. These annotations enable artificial intelligence systems to detect patterns, classify crop types, and evaluate field conditions with a high degree of precision.

Categories of Image Data Annotation in Agriculture:

1. Bounding Box Annotation – This method identifies plants, weeds, and pests to enable automated detection.

2. Semantic Segmentation – This technique distinguishes between crops, soil, and weeds within an image.

3. Keypoint Annotation – This approach marks essential points on plants to monitor their growth.

4. Polygon Annotation – This method provides accurate outlines of fields and crop areas.

5. 3D Cuboid Annotation – This technique assists in estimating the height and structure of plants.
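
To make the difference between polygon and bounding box annotation concrete, the sketch below computes the area of a field outline with the shoelace formula and compares it with the area of the enclosing box. The coordinates are hypothetical and are treated as metres in a flat local grid, which is an assumption; real drone or satellite imagery would need a proper map projection first.

```python
def polygon_area(points):
    """Area enclosed by a simple polygon (shoelace formula), in squared input units."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the outline
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0

def bounding_box(points):
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) around the polygon."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)

# Hypothetical field outline, vertices listed in order around the boundary.
field = [(0, 0), (40, 0), (50, 20), (40, 30), (0, 30)]

area = polygon_area(field)
x_min, y_min, x_max, y_max = bounding_box(field)
box_area = (x_max - x_min) * (y_max - y_min)
print(area, box_area, area / box_area)
```

The ratio shows how much of a bounding box is actually field, which is why polygons are preferred when area estimates matter.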

How Image Data Annotation is Transforming Crop Monitoring

1. Enhanced Crop Health Assessment

AI-driven models utilize annotated images to identify early indicators of diseases, nutrient shortages, and pest problems, allowing farmers to take timely action.

2. Identification of Weeds and Pests

Automated systems for weed detection leverage annotated datasets to differentiate between crops and harmful plants, minimizing the necessity for manual weeding and reducing pesticide application.

3. Yield Forecasting and Harvest Efficiency

Through the analysis of annotated images, AI models can project crop yields, enabling farmers to organize harvest timelines effectively and enhance overall productivity.

4. Targeted Irrigation and Soil Assessment

Image data annotation aids AI-based irrigation systems by monitoring soil moisture and plant hydration levels, promoting efficient water management.

5. Adaptation to Climate and Weather Changes

Utilizing annotated climate and weather data, AI models can foresee unfavorable conditions, assisting farmers in taking preventive actions against droughts or severe weather events.

The Importance of Professional Data Annotation Services

High-quality image annotation services, such as those offered by GTS AI, play a crucial role in the effective training of AI models. These services provide:

Scalability – The ability to manage extensive volumes of agricultural data with efficiency.

Accuracy – Superior annotations that enhance the precision of models.

Cost Efficiency – Minimizing manual labor expenses in the agricultural sector.

Customization – Bespoke annotation solutions tailored to meet specific agricultural requirements.

Conclusion

Image data annotation is revolutionizing the agricultural landscape by facilitating AI-driven crop monitoring, disease identification, and yield forecasting. As technological advancements continue, precision farming will further develop, equipping farmers with data-informed insights for sustainable agricultural practices.

For innovative image annotation solutions, consider GTS AI to transform your agricultural AI models today.


Automated Image Data Annotation: Can AI Replace Human Labelers?

Introduction

Artificial intelligence (AI) is revolutionizing various sectors at an extraordinary rate, and Image Data Annotation is among those affected. Historically, human annotators have taken on the task of labeling images to facilitate the training of machine learning models. Nevertheless, the emergence of automated image data annotation prompts an important inquiry: Is it possible for AI to completely supplant human labelers?

This discussion will delve into the advantages, drawbacks, and future prospects of AI-driven annotation.

Image Data Annotation Explained

Image data annotation involves the assignment of pertinent metadata to images, facilitating their comprehension by artificial intelligence models. This process is essential in various computer vision applications, including:

Object Detection (e.g., autonomous vehicles)

Facial Recognition (e.g., surveillance systems)

Medical Imaging (e.g., AI-supported diagnostics)

E-commerce (e.g., categorization of products)

Historically, human annotators have been responsible for manually tagging images to guarantee accuracy and contextual relevance. Nevertheless, advancements in AI technology are increasingly automating this task.

AI-Driven Annotation: A Transformative Innovation

Automated image annotation utilizes machine learning algorithms and deep learning frameworks to classify and label images on a large scale. The benefits include:

Rapid Processing: AI can analyze thousands of images within minutes, greatly minimizing the time required for annotation.

Economic Efficiency: By implementing automation, organizations can significantly reduce expenses associated with hiring and training human annotators.

Adaptability: AI annotation is particularly well-suited for extensive datasets, making it an excellent choice for companies dealing with vast quantities of visual information.

Uniformity: AI mitigates the effects of human fatigue, ensuring consistent labeling throughout datasets.

Can AI Fully Substitute Human Labelers?

While AI offers numerous advantages, it encounters significant obstacles in fully supplanting human annotators:

Contextual Comprehension: AI often finds it challenging to accurately interpret intricate scenes, emotions, and ambiguous objects. For instance, distinguishing between a toy gun and a real firearm may necessitate human intuition.

Management of Edge Cases: In sectors such as healthcare and autonomous driving, erroneous annotations can result in severe repercussions. Human oversight is essential to validate labels generated by AI.

Bias in AI Systems: Automated annotation tools may reflect biases present in their training data, resulting in inaccurate or unjust outcomes. Human involvement is crucial in addressing and reducing these biases.

The Future: Collaboration Between AI and Humans

The future of image data annotation is not about replacing human labelers; instead, it embraces a hybrid model in which:

AI manages extensive, repetitive annotation tasks.

Humans oversee, enhance, and rectify the labels produced by AI.

This synergy guarantees precision, productivity, and the ethical advancement of AI technologies.

Conclusion

Although AI-driven annotation is transforming the industry, the role of human expertise remains crucial for achieving high-quality image data annotation. The optimal approach is to integrate both AI automation and human insight for superior outcomes.

At GTS AI, we combine AI-assisted annotation with professional human validation to provide high-quality, accurate, and scalable annotation services for businesses across diverse sectors.

Seeking professional image annotation services? Explore GTS AI today!


Optimizing Text to Speech Models with the Right Dataset

Introduction

Text-to-Speech (TTS) technology has progressed considerably in recent years, finding applications in areas such as virtual assistants, audiobook narration, and accessibility tools. Nevertheless, the precision and naturalness of a TTS model are largely influenced by the dataset used to train it. Selecting an appropriate dataset is essential for enhancing the performance of a TTS system.

Significance of High-Quality TTS Datasets

A meticulously assembled dataset is essential for training a TTS model to produce speech that closely resembles natural human communication. The primary characteristics that constitute a high-quality dataset are:

Variety: The dataset must encompass a range of speakers, accents, and intonations.

Sound Quality: Audio samples should be devoid of background noise and distortions.

Linguistic Representation: An effective dataset incorporates a broad spectrum of phonemes, vocabulary, and sentence constructions.

Annotation Precision: Well-labeled data with phonetic and linguistic annotations enhances the accuracy of the model.

Key Factors to Consider When Choosing an Appropriate Dataset

1. Specific Domain versus General Datasets

The choice of dataset should align with the intended application, and domain-specific datasets are often necessary. For instance, a TTS model designed for healthcare applications must be trained on speech data that includes medical vocabulary, whereas a conversational AI system benefits from informal and expressive speech patterns.

2. Inclusion of Multilingual and Multispeaker Data

To develop a robust and scalable TTS system, it is crucial to integrate multilingual datasets featuring a variety of speakers, accents, and dialects. This approach ensures that the model can produce accurate and natural-sounding speech suitable for a diverse global audience.

3. Balancing Dataset Size and Quality

Although larger datasets can enhance performance, it is vital to maintain high quality. Inaccurately transcribed or noisy data can adversely affect speech synthesis. Striking a balance between quantity and quality is essential for achieving the best outcomes.
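
A cheap first pass at catching inaccurately transcribed clips is to check whether the amount of text is plausible for the audio duration. The sketch below flags clips whose characters-per-second rate falls outside a range; the 5 to 25 range, the manifest fields, and the `flag_suspect_clips` helper are assumptions chosen for illustration and would need tuning per language and speaking style.

```python
def flag_suspect_clips(manifest, min_cps=5.0, max_cps=25.0):
    """Return ids of clips whose characters-per-second rate looks implausible.

    A very low rate suggests missing text or long silences; a very high rate
    suggests a transcript that belongs to a longer recording.
    """
    suspects = []
    for clip in manifest:
        if clip["duration_s"] <= 0:
            suspects.append(clip["id"])  # unusable duration, always flag
            continue
        chars = len(clip["text"].replace(" ", ""))
        cps = chars / clip["duration_s"]
        if not (min_cps <= cps <= max_cps):
            suspects.append(clip["id"])
    return suspects

# Hypothetical manifest entries: transcript plus audio duration in seconds.
manifest = [
    {"id": "utt_01", "text": "The patient was discharged this morning.", "duration_s": 2.6},
    {"id": "utt_02", "text": "Hello.", "duration_s": 6.0},
    {"id": "utt_03", "text": "Please take two tablets after each meal and drink plenty of water.", "duration_s": 1.0},
]

suspects = flag_suspect_clips(manifest)
print(suspects)  # clips to send back for manual review
```

Flagged clips are candidates for review rather than automatic deletion, since slow or fast speakers can legitimately fall outside the range.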

4. Open-Source versus Proprietary Datasets

Numerous open-source datasets are available for TTS training, including LibriSpeech, Mozilla Common Voice, and LJSpeech. However, for commercial purposes, proprietary datasets offer enhanced control over data quality and the ability to customize the dataset to specific needs.

Future Developments in TTS Data Acquisition

AI-Enhanced Data Augmentation

Artificial intelligence tools are increasingly utilized to improve and broaden datasets through the generation of synthetic voices and the application of noise reduction methods, thereby facilitating superior model training.

Speaker Customization

The demand for personalized TTS models is on the rise, necessitating datasets that include recordings specific to individual speakers to refine and customize voice outputs.

Real-Time Data Acquisition

The emergence of interactive voice applications is driving advancements in real-time data collection methods, which enhance the adaptability of TTS systems by perpetually updating models in response to user interactions.

Conclusion

Choosing the appropriate dataset is crucial for optimizing Text-to-Speech models in terms of accuracy, fluency, and naturalness. By prioritizing quality, diversity, and domain-specific data, organizations can develop state-of-the-art TTS systems that fulfill the requirements of contemporary applications.



Video Box Annotation: Enhancing AI Understanding of Motion and Action

Introduction

Video box annotation is the process of labeling specific objects or actions within video frames using bounding boxes. It is a key technique used in training AI models to recognize and track objects across sequences of images in videos. This type of annotation is essential for applications like object tracking, action recognition, and video-based surveillance.

How Does Video Box Annotation Work?

In video box annotation, annotators place rectangular boxes around objects of interest in individual frames of a video. These boxes are used to track the movement of objects over time, providing valuable data for AI models to learn how to detect and follow objects in motion. This method is commonly used for:

Object tracking: Identifying and following objects throughout a video.

Action recognition: Annotating movements or activities within a video.

Video surveillance: Monitoring and detecting suspicious activities.

Importance of Video Box Annotation

Accurate video box annotation allows AI models to perform tasks such as autonomous driving, security surveillance, and sports analytics. By training on annotated video data, AI systems become capable of understanding the temporal aspect of visual information and recognizing patterns in motion.

Conclusion

Video box annotation is an essential tool in creating datasets for training AI models that analyze video content. By labeling moving objects, this technique helps develop systems capable of tracking and recognizing actions in dynamic environments.


Building Better AI Models with High-Quality Video Data Collection

Introduction

Artificial Intelligence (AI) is transforming numerous sectors, from self-driving cars to medical diagnostics. A vital requirement for training AI models effectively is access to high-quality video data. Video data allows AI systems to comprehend movement, identify patterns, and make decisions in real time. This article examines the significance of video data collection and outlines best practices for keeping AI model training both high in quality and efficient.

The Importance of Video Data in AI Advancement

Video data plays a crucial role in the training of AI models across various domains, including:

Autonomous Vehicles: AI systems depend on comprehensive video data to identify traffic signals, recognize pedestrians, and make informed driving choices.

Security and Surveillance: High-resolution video data facilitates real-time object detection, facial recognition, and the identification of anomalies.

Healthcare and Medical Imaging: AI-driven diagnostics utilize video data to assess patient movements, identify irregularities, and enhance the accuracy of surgical procedures.

Retail and Consumer Insights: AI models utilize video data to analyze shopper behavior within stores, thereby optimizing product placement and refining sales strategies.

Best Practices for Collecting High-Quality Video Data

1. Establish Clear Objectives

Prior to initiating video data collection, it is essential to articulate the objectives of the AI model. This clarity ensures that the data gathered meets the specific needs of the AI system.

2. Utilize High-Resolution Cameras

To capture intricate details, video data should be recorded with high-resolution cameras. This facilitates more accurate detection of objects and patterns by AI models.

3. Ensure Data Diversity and Representation

AI models achieve better performance when trained on varied datasets. Collecting video data from different environments, lighting conditions, and perspectives enhances model robustness and minimizes bias.

4. Implement Effective Data Annotation

Precise video annotation is vital for the training of AI models. Employing methods such as bounding boxes, keypoint tracking, and semantic segmentation can significantly improve labeling quality and boost model performance.

5. Employ Automated Data Collection Tools

Utilizing automated data collection tools and drones can significantly increase the efficiency and scalability of video data gathering, thereby reducing manual labor and potential errors.

6. Adhere to Privacy Regulations

It is imperative that video data collection complies with data privacy regulations such as GDPR and CCPA. Employing anonymization techniques and obtaining informed consent from individuals can aid in maintaining compliance.

7. Optimize Data Storage and Management

Efficient infrastructure is necessary for storing and managing large quantities of video data. Utilizing cloud storage solutions and implementing data compression techniques ensures effective processing and accessibility.

Conclusion

The collection of high-quality video data serves as the cornerstone for developing resilient AI models. By adhering to best practices, including the establishment of clear objectives, promoting diversity, implementing effective annotation, and ensuring compliance with privacy regulations, organizations can significantly improve the performance and dependability of their AI systems. Allocating resources towards appropriate tools and methodologies will result in AI models that are precise, efficient, and capable of scaling.

For professional video data collection solutions, please explore GTS AI Video Dataset Collection Services.


How to Choose the Right Data Annotation Tools for Your AI Project

Introduction

In the fast-paced domain of artificial intelligence (AI), high-quality labeled data is essential for developing precise and dependable machine learning models. The choice of data annotation tool can greatly influence the success of your project by promoting efficiency, accuracy, and scalability. This article covers the main factors to evaluate when choosing a data annotation tool for your AI initiative.

Understanding Data Annotation Tools

Data annotation tools play a crucial role in the labeling of datasets intended for machine learning models. These tools offer user-friendly interfaces that enable annotators to tag, segment, classify, and organize data, thereby rendering it interpretable for artificial intelligence algorithms. Depending on the specific application, these annotation tools can accommodate a range of data types, such as images, text, audio, and video.

Key Considerations When Selecting a Data Annotation Tool

1. Nature of Data to be Annotated

Various AI initiatives necessitate distinct forms of annotated data. Prior to selecting a tool, it is crucial to identify whether the annotation pertains to images, text, videos, or audio. Some tools are tailored for specific data types, while others provide capabilities for multi-modal annotation.

2. Features for Accuracy and Quality Control

To guarantee high-quality annotations, seek tools that offer:

Integrated validation mechanisms

Consensus-driven labeling

Automated error detection

Quality assurance processes
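
Consensus-driven labeling is usually backed by an agreement metric. The following sketch computes Cohen's kappa for two annotators who labeled the same items; it corrects raw agreement for the agreement expected by chance. The label sequences are hypothetical.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: computed from each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical sentiment labels from two annotators on eight items.
annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "pos"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(kappa)
```

Here the annotators agree on 6 of 8 items (0.75), but with balanced labels chance agreement is 0.5, so kappa is 0.5. A tool that reports only raw agreement would overstate label quality.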

3. Scalability and Automation Features

As AI projects expand, manual annotation may become less efficient. Opting for a tool that includes automation features such as AI-assisted labeling, pre-annotation, and active learning can greatly enhance the speed of the process while ensuring accuracy.

4. Compatibility with Machine Learning Pipelines

It is vital for the tool to integrate smoothly with current machine learning workflows. Verify whether the tool supports APIs, SDKs, and data format compatibility with platforms like TensorFlow, PyTorch, or cloud-based machine learning services.
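
Format compatibility often comes down to whether a tool can export a layout that training code already reads. As an illustration, the sketch below converts simple corner-coordinate box records into a COCO-style dictionary, where each box is stored as `[x, y, width, height]`. The input record layout and the `to_coco` helper are assumptions; the choice of COCO as the target is also only an example, and a given tool or framework may expect a different format.

```python
import json

def to_coco(records, category_names):
    """Convert records with (x_min, y_min, x_max, y_max) boxes to a COCO-style dict."""
    categories = [{"id": i + 1, "name": name} for i, name in enumerate(category_names)]
    category_id = {c["name"]: c["id"] for c in categories}
    images, annotations = [], []
    for image_id, rec in enumerate(records, start=1):
        images.append({
            "id": image_id,
            "file_name": rec["file_name"],
            "width": rec["width"],
            "height": rec["height"],
        })
        for label, (x_min, y_min, x_max, y_max) in rec["boxes"]:
            width, height = x_max - x_min, y_max - y_min
            annotations.append({
                "id": len(annotations) + 1,
                "image_id": image_id,
                "category_id": category_id[label],
                "bbox": [x_min, y_min, width, height],  # COCO stores top-left corner plus size
                "area": width * height,
                "iscrowd": 0,
            })
    return {"images": images, "annotations": annotations, "categories": categories}

# Hypothetical export from an annotation tool.
records = [
    {
        "file_name": "frame_0001.jpg",
        "width": 640,
        "height": 480,
        "boxes": [("car", (10, 20, 100, 80)), ("person", (200, 50, 240, 150))],
    }
]

coco = to_coco(records, category_names=["car", "person"])
print(json.dumps(coco, indent=2))
```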

5. Cost and Pricing Models

Annotation tools are available with various pricing options, which include:

Pay-per-use (suitable for smaller projects)

Subscription-based (best for ongoing initiatives)

Enterprise solutions (designed for extensive AI implementations)

Evaluate your financial resources and select a tool that provides optimal value while maintaining quality.

6. Security and Compliance

In projects that handle sensitive information, security and compliance are paramount. Verify that the tool complies with industry standards such as GDPR, HIPAA, or SOC 2 certification. Features such as encryption, access controls, and data anonymization can enhance security measures.

7. User Experience and Collaboration Features

A user-friendly interface and collaborative capabilities can significantly boost productivity. Look for tools that provide:

Role-based access control

Real-time collaboration

Intuitive dashboards

8. Support and Community

Dependable customer support and an active user community are essential for addressing technical challenges and enhancing workflow. Investigate tools that offer comprehensive documentation, training materials, and responsive support teams.

Widely Utilized Data Annotation Tools

The following are some prominent data annotation tools designed to meet various requirements:

Labelbox (Comprehensive and scalable solution)

SuperAnnotate (AI-enhanced annotation for images and videos)

V7 Labs (Optimal for medical and scientific datasets)

Prodigy (Ideal for natural language processing projects)

Amazon SageMaker Ground Truth (Highly scalable with AWS integration)

Conclusion

Choosing the appropriate data annotation tool is essential for the success of your AI initiative. By evaluating aspects such as data type, accuracy, scalability, integration, cost, security, and user experience, you can select a tool that fits your project specifications. The right choice will not only enhance annotation efficiency but also improve the overall effectiveness of your AI model.

For professional advice on data annotation solutions, please visit GTS AI Services.


Common Challenges in Video Box Annotation and How to Overcome Them

Introduction

As artificial intelligence (AI) and machine learning (ML) progress, video box annotation has emerged as an essential procedure for training AI models in areas such as object tracking, action recognition, and autonomous decision-making. Applications ranging from self-driving vehicles to security monitoring rely on precisely labeled video data, which allows AI systems to detect and track objects with high efficiency. Nevertheless, the process of video box annotation presents various challenges that can adversely affect the accuracy and performance of models.

This post examines common challenges in video box annotation and effective strategies for addressing them, including the use of expert annotation services such as GTS AI.

1. Motion Blur and Low-Quality Frames

Challenge:

Videos frequently exhibit motion blur resulting from swift object movements, inadequate lighting, or abrupt camera transitions. This phenomenon complicates the precise annotation of objects, resulting in discrepancies in the placement of bounding boxes.

Solution:

Annotate the sharp frames on either side of a blurred segment and interpolate the bounding boxes across it, rather than labeling the blurred frames directly.

Utilize frame selection techniques to bypass low-quality frames, concentrating on those that are clear.

Employ AI-driven image enhancement tools to improve the sharpness of video frames prior to the annotation process.
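
Frame selection needs some measure of sharpness. A widely used heuristic is the variance of the Laplacian: sharp frames have strong edges and therefore a high variance, while blurred frames score low. The sketch below implements it in plain Python on small grayscale grids so that it runs without dependencies; the threshold and the toy frames are assumptions, and production code would typically use an image library on real frames.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over the interior pixels of a frame.

    `gray` is a 2D list of intensities and must be at least 3x3.
    """
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            responses.append(
                gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)

def sharp_frames(frames, threshold):
    """Indices of frames whose sharpness score meets the threshold."""
    return [i for i, frame in enumerate(frames) if laplacian_variance(frame) >= threshold]

# Toy frames: a high-contrast checkerboard (sharp) and a flat gray frame (no detail).
sharp = [[255 if (x + y) % 2 == 0 else 0 for x in range(6)] for y in range(6)]
flat = [[128] * 6 for _ in range(6)]

keep = sharp_frames([sharp, flat, sharp], threshold=100.0)
print(keep)  # frames worth sending to annotators
```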

2. Object Occlusion and Overlapping

Challenge:

In video footage, objects often overlap, which can obscure their visibility and complicate the accurate tracking of their movements. This issue is particularly pronounced in security surveillance and densely populated environments, where occlusion presents a significant challenge.

Solution:

Implement instance tracking algorithms to forecast the movements of objects, even during periods of temporary concealment.

Utilize sophisticated interpolation methods to approximate the positions of obscured objects.

Enhance annotation processes by incorporating multiple viewpoints to bolster object detection precision in scenarios involving occlusion.

3. Consistency Across Frames

Challenge:

It is crucial to ensure that bounding box sizes and positions remain consistent throughout the frames when labeling objects in a video. Any discrepancies may lead to training inaccuracies, thereby diminishing the effectiveness of the AI model.

Solution:

Employ automatic keyframe interpolation to uphold uniformity in annotations.

Establish quality control measures to examine and rectify any inconsistencies in bounding boxes.

Provide training for annotators on standardized guidelines to guarantee consistency.
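
Keyframe interpolation, mentioned in the first point above, can be as simple as a linear blend between two manually drawn boxes. This is a minimal sketch assuming boxes are `(x_min, y_min, x_max, y_max)` tuples and that the object moves roughly linearly between keyframes; the frame numbers and coordinates are hypothetical.

```python
def interpolate_boxes(start_frame, start_box, end_frame, end_box):
    """Linearly interpolate boxes for every frame from start_frame to end_frame inclusive."""
    span = end_frame - start_frame
    if span <= 0:
        raise ValueError("end_frame must come after start_frame")
    boxes = {}
    for frame in range(start_frame, end_frame + 1):
        t = (frame - start_frame) / span  # 0.0 at the first keyframe, 1.0 at the last
        boxes[frame] = tuple(a + t * (b - a) for a, b in zip(start_box, end_box))
    return boxes

# An annotator draws boxes on frames 10 and 14; frames 11 to 13 are filled in.
boxes = interpolate_boxes(10, (100, 50, 140, 90), 14, (140, 70, 180, 110))
for frame, box in boxes.items():
    print(frame, box)
```

Because box size and position change smoothly between keyframes, this also avoids the frame-to-frame jitter that comes from drawing every box by hand. Non-linear motion needs more keyframes or a tracker.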

4. Monitoring Rapidly Moving Objects

Challenge:

Objects in motion at high speeds, including vehicles and drones, can shift positions quickly between frames, complicating the accurate annotation of their trajectories.

Solution:

Increase the frame sampling rate so that more frames per second are annotated, which shortens the distance an object travels between labeled frames.

Employ optical flow methods to assess motion direction and forecast the paths of objects.

Adopt semi-automated annotation tools to enhance accuracy in scenarios involving fast-moving entities.

5. Managing Extensive Datasets

Challenge:

Video datasets frequently encompass vast amounts of data, comprising thousands of individual frames. The manual annotation of such extensive datasets is not only labor-intensive and resource-demanding but also susceptible to human inaccuracies.

Solution:

Utilize AI-driven annotation tools to expedite the annotation process.

Adopt data augmentation strategies to minimize the reliance on extensive manual labeling.

Engage specialized annotation service providers, such as GTS AI, to ensure efficient handling of large-scale annotation tasks.

6. Bounding Box Drift

Challenge:

Over time, bounding boxes may experience shifts or "drift" as a result of inconsistencies in the annotation process, which can compromise the accuracy of object tracking. This problem frequently arises when annotators manually label each individual frame.

Solution:

Implement interpolation-based tracking methods to facilitate seamless transitions across frames.

Utilize AI-powered auto-correction techniques to identify and rectify drifting bounding boxes.

Perform multi-tiered quality assessments to ensure the precision of annotations.
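
A simple automated check for drift is to compare each box with the one in the previous frame using intersection over union (IoU) and flag sudden drops for review. The sketch below assumes `(x_min, y_min, x_max, y_max)` boxes; the 0.5 threshold and the sample track are assumptions, and genuinely fast-moving objects can trigger the same flag, so flagged frames are candidates for inspection rather than confirmed errors.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0

def flag_jumps(track, min_iou=0.5):
    """Frame indices whose box overlaps the previous frame's box by less than min_iou."""
    return [i for i in range(1, len(track)) if iou(track[i - 1], track[i]) < min_iou]

# Hypothetical track: the box creeps right by 1 px per frame, then jumps at index 3.
track = [
    (0, 0, 10, 10),
    (1, 0, 11, 10),
    (2, 0, 12, 10),
    (9, 0, 19, 10),
    (10, 0, 20, 10),
]

flagged = flag_jumps(track)
print(flagged)  # frames to route to a second-tier reviewer
```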

GTS AI's Approach to Addressing Video Box Annotation Challenges

At GTS AI, we focus on delivering high-precision object tracking annotation tailored for AI and machine learning applications. Our offerings effectively tackle the complexities associated with video box annotation by employing:

AI-Enhanced Annotation Tools – Boosting both efficiency and accuracy.

Skilled Annotators – Guaranteeing precision and uniformity throughout frames.

Rigorous Quality Control Measures – Minimizing errors and enhancing dataset dependability.

Tailored Solutions for Extensive Datasets – Accelerating AI training and implementation.

Conclusion

The process of video box annotation plays a vital role in the training of AI models for tasks such as object detection, tracking, and behavior analysis. Nonetheless, issues such as motion blur, occlusion, and bounding box drift may compromise the accuracy of the data. By utilizing sophisticated annotation methods, AI-driven tools, and professional annotation services, these obstacles can be successfully mitigated.

For superior video annotation solutions, consider GTS AI’s object tracking annotation services today!


Enhancing Security & Surveillance with Image Data Annotation

Introduction

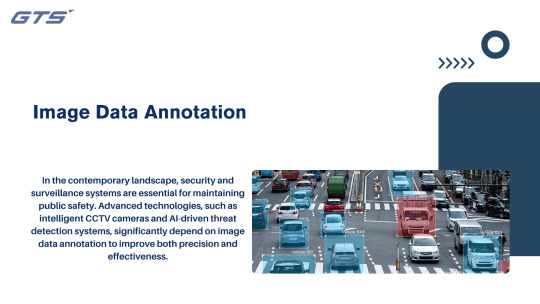

In the contemporary landscape, security and surveillance systems are essential for maintaining public safety. Advanced technologies, such as intelligent CCTV cameras and AI-driven threat detection systems, significantly depend on image data annotation to improve both precision and effectiveness. By utilizing annotated images to train AI models, these security systems are capable of identifying objects, recognizing irregularities, and even forecasting potential threats in real time.

What is Image Data Annotation in Security & Surveillance?

Image Data Annotation in the context of security and surveillance refers to the process of labeling images or video frames to facilitate the training of artificial intelligence models. These models are designed to identify individuals, vehicles, objects, and potentially suspicious behaviors. This procedure is essential for the advancement of sophisticated surveillance technologies, which encompass:

Facial recognition systems

Intrusion detection mechanisms

Crowd monitoring and anomaly identification

License plate recognition (LPR)

Detection of weapons and threats.

Key Image Annotation Techniques for Security AI

1. Bounding Box Annotation

This technique is employed to identify and monitor objects such as individuals, vehicles, or weapons. It aids artificial intelligence in distinguishing between typical and suspicious behaviors.

2. Polygon Annotation

This method allows for more accurate labeling of objects with irregular shapes. It is particularly effective for recognizing weapons, individuals wearing masks, or items that appear to be abandoned.

3. Semantic Segmentation

This approach classifies each pixel within an image to differentiate various components. It is crucial for analyzing crowd density and detecting unauthorized intrusions.

4. Keypoint Annotation

This technique pinpoints specific anatomical landmarks to facilitate gesture and posture recognition. It is instrumental in identifying atypical movements or signs of aggressive conduct.

5. Optical Character Recognition (OCR) Annotation

This method is utilized for recognizing license plates and reading security identification badges. It supports automated tracking of vehicles in restricted zones.
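Bounding box and polygon annotations are closely related: a box can always be derived from a polygon, but not the other way round. The sketch below assumes a polygon is stored as a list of (x, y) vertices; the function names are illustrative.

```python
def polygon_to_bbox(points):
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) around a polygon."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (min(xs), min(ys), max(xs), max(ys))


def polygon_area(points):
    """Area of a simple polygon via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0
```

Comparing the polygon's area with the area of its bounding box gives a rough idea of how much background a box-only label would include for an irregular object.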

How Image Data Annotation Strengthens Surveillance Capabilities

1. Accelerated Threat Identification

AI-enhanced surveillance systems can promptly detect potential threats, such as unauthorized entries or suspicious behaviors, enabling security teams to act swiftly.

2. Enhanced Facial Recognition

High-quality image annotation significantly improves the accuracy of facial recognition, facilitating the identification of individuals in real time, even under challenging lighting conditions.

3. Streamlined Monitoring & Decreased Human Intervention

Automated security solutions minimize the necessity for manual oversight by highlighting unusual activities, allowing security personnel to concentrate on genuine threats.

4. Improved Public Safety

From airports to smart urban environments, AI-driven surveillance provides enhanced security by monitoring large gatherings and identifying potential risks before they develop.

Why Opt for GTS AI for Image Data Annotation?

At GTS AI, we focus on delivering high-precision annotation services for images and videos, particularly in the realm of security and surveillance. Our skilled team guarantees meticulous labeling, which enhances the performance of AI models, enabling security systems to operate with greater intelligence and proactivity.

Conclusion

As AI-driven surveillance continues to evolve, the importance of image data annotation has significantly increased within the security sector. Annotated data plays a crucial role in identifying threats and improving facial recognition capabilities, allowing security systems to function more efficiently and effectively. To develop more advanced security solutions, consider GTS AI’s image annotation services today.


TTS Datasets in Healthcare: Enhancing Accessibility and Patient Communication

Introduction

In the healthcare sector, effective communication is central to providing high-quality patient care. Recent developments in artificial intelligence (AI) have led to significant advancements in Text-to-Speech (TTS) technology, which is enhancing accessibility and communication among patients, physicians, and healthcare professionals. The availability of high-quality TTS datasets is vital for training AI models capable of producing natural, human-like speech. This article examines the impact of TTS datasets on the healthcare industry, particularly in terms of improving accessibility and facilitating better communication with patients.

The Significance of TTS in Healthcare

Text-to-Speech (TTS) technology facilitates the transformation of written text into spoken words through AI-driven systems, providing numerous advantages within the healthcare sector, including:

Increased Accessibility: Assists visually impaired individuals and those with reading challenges in obtaining essential medical information.

Enhanced Patient Involvement: Customized voice assistants support patients in navigating their treatment plans and adhering to medication schedules.

Streamlined Medical Communication: AI-based voice systems aid in sending appointment reminders, managing prescription refills, and delivering test result notifications.

Diverse Language Support: TTS models developed with varied datasets promote effective communication across multiple languages and dialects.

Significance of High-Quality TTS Datasets

The effectiveness of TTS technology in the healthcare sector hinges on the availability of diverse and high-quality speech datasets. Essential elements in the creation of robust TTS datasets encompass:

Variety of Speaker Voices: Including a range of genders, ages, and accents promotes inclusivity.

Training in Medical Terminology: Accurate pronunciation of intricate medical terms boosts the precision of AI-generated speech.

Clear Audio Data: High-quality recordings enhance the clarity of synthesized speech.

Contextual Speech Modeling: Grasping the context of dialogues contributes to making AI voices sound more natural and empathetic.
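Speaker variety, the first element above, can be checked before training by counting recordings per metadata value. This is a minimal sketch, assuming each recording has a manifest row with `gender`, `age_group`, and `accent` fields. Those field names and the 10% minimum share are hypothetical.

```python
from collections import Counter


def coverage_report(manifest, fields=("gender", "age_group", "accent")):
    """Count how many recordings fall under each value of each metadata field."""
    return {
        field: Counter(row.get(field, "unknown") for row in manifest)
        for field in fields
    }


def underrepresented(report, min_share=0.1):
    """List (field, value) pairs whose share of recordings is below min_share."""
    flagged = []
    for field, counts in report.items():
        total = sum(counts.values())
        for value, count in counts.items():
            if total and count / total < min_share:
                flagged.append((field, value))
    return flagged
```

Values flagged by `underrepresented` indicate where additional speakers would need to be recruited.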

Applications of Text-to-Speech (TTS) in Healthcare

Virtual Health Assistants

Artificial intelligence-driven virtual assistants offer voice-guided support for medication management, symptom assessment, and patient education.

Assistive Technology for Individuals with Disabilities

Patients with visual impairments gain advantages from TTS-enabled tools that vocalize medical records, prescription information, and laboratory results.

AI-Enhanced Telemedicine Solutions

TTS technology improves telehealth services by transforming text-based medical diagnoses and instructions into spoken language, facilitating better comprehension for patients.

Intelligent Healthcare Devices

Wearable technology and smart home health monitors utilize TTS to deliver real-time health updates, emergency notifications, and wellness reminders.

Challenges in TTS Data Collection for Healthcare

While the benefits of text-to-speech (TTS) technology in healthcare are significant, collecting TTS datasets and training models on them presents several challenges, including:

Data Privacy Issues: Adhering to regulations such as HIPAA and GDPR to protect patient information.

Scarcity of Medical Voice Data: The limited availability of high-quality speech data that encompasses healthcare-specific terminology.

Accent and Language Challenges: The necessity to train TTS models to accommodate various regional accents and languages.

Preserving Naturalness in Speech: Striking a balance between clarity, intonation, and emotional resonance in synthesized speech.

Conclusion

The outlook for TTS technology in healthcare is optimistic, driven by advancements in artificial intelligence, deep learning, and natural language processing. Developments in personalized voice synthesis and support for multiple languages will significantly improve patient interactions and accessibility. By utilizing high-quality TTS datasets, healthcare organizations can create more effective AI-driven solutions that address communication barriers and enhance patient care.

For further information on TTS dataset collection in healthcare, please visit: GTS.AI Speech Data Collection.


How Video Data Powers AI: Training Models with Real-World Footage

Introduction

Artificial intelligence (AI) has experienced significant progress in recent years, especially in the realms of computer vision and machine learning. A pivotal element contributing to these developments is the availability of high-quality video data, which is essential for training AI models to identify, analyze, and forecast real-world situations. This article examines the role of Video Data Collection in enhancing AI capabilities, the necessity of varied datasets, and the primary obstacles encountered in the collection of video datasets.

The Significance of Video Data in AI Model Training

Video data plays a crucial role in the training of AI models across various sectors, such as autonomous driving, security monitoring, healthcare, and retail analytics. In contrast to static images, video offers temporal context, enabling AI systems to comprehend motion, behavior, and interaction dynamics. The primary advantages of utilizing video data for AI training include:

Improved Object Detection: Video sequences enable AI to identify and monitor objects with enhanced precision over time.

Action Forecasting: AI can evaluate movement trends to predict forthcoming actions, which is essential for applications like autonomous vehicles.

Dynamic Decision-Making: AI systems can adaptively respond to real-time changes in their environment, thereby increasing their efficacy in immediate applications.

Ongoing Learning: Through sequential data, AI models can more effectively learn and adjust to various environments and situations.
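The value of temporal context can be illustrated with the simplest possible motion model. The sketch below assumes an object's box center has been recorded in consecutive frames and extrapolates the next position at constant velocity. Production forecasting systems rely on learned models; this hypothetical function only shows what a single still image cannot provide.

```python
def predict_next_center(centers):
    """Extrapolate the next (x, y) center from the last two observed centers."""
    if len(centers) < 2:
        raise ValueError("need at least two observations to estimate velocity")
    (x_prev, y_prev), (x_last, y_last) = centers[-2], centers[-1]
    # Constant velocity: next = last + (last - prev)
    return (2 * x_last - x_prev, 2 * y_last - y_prev)
```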

Applications of Video Data in Artificial Intelligence

Autonomous Vehicles

Self-driving vehicles depend on extensive video data captured from real-world settings to train artificial intelligence systems for recognizing obstacles, traffic signals, and pedestrian actions. Annotated video datasets enhance machine learning algorithms, thereby increasing safety and precision.

Intelligent Surveillance

AI-enhanced security systems leverage video data to detect suspicious behavior, identify individuals, and recognize irregularities. Video analytics empower law enforcement and businesses to bolster security protocols through automated observation.

Healthcare and Medical AI

In the medical field, AI models trained on medical video datasets aid in disease diagnosis, patient movement monitoring, and even the execution of robotic-assisted surgical procedures.

Retail and Consumer Insights

Retailers employ AI-driven video analytics to monitor customer movements, refine store layouts, and tailor shopping experiences. AI models scrutinize video recordings to gain insights into consumer behavior and enhance business strategies.

Challenges in Video Data Collection

While video data collection offers significant advantages for AI training, it also poses several challenges:

Data Privacy Issues: It is imperative to comply with ethical standards and regulations such as GDPR and HIPAA when gathering video data.

Complexity of Annotation and Labeling: The process of manually annotating video datasets is labor-intensive and necessitates expert oversight.

Storage and Processing Expenses: The substantial storage requirements and computational power needed for training AI models with video data can be considerable.

Diversity of Data: It is essential to ensure that datasets are diverse, encompassing various environments, lighting conditions, and scenarios to enhance AI generalization.

Conclusion

Video data plays a pivotal role in the advancement of AI, allowing models to effectively process and analyze real-world footage. As AI technology progresses, the demand for high-quality and diverse video datasets will be crucial for enhancing machine learning accuracy and real-time decision-making capabilities. However, overcoming challenges related to data privacy, annotation, and processing will be vital for realizing the full potential of AI.

For further insights on video dataset collection for AI applications, please visit: GTS.AI Video Dataset Collection.


Video Box Annotation: Key to Object Tracking and Action Recognition

What is Video Box Annotation?

Video box annotation involves labeling objects within video frames using bounding boxes. This technique is essential for tasks like object tracking and action recognition in computer vision. By tracking objects across video frames, machine learning models can learn to recognize movements, behaviors, and activities over time.

How It Works

Annotators draw bounding boxes around objects in each video frame. These boxes are updated frame by frame to track object movement. The process can be done manually or with semi-automated tools, ensuring consistency across frames.

Applications

Object Tracking: Used in autonomous driving and robotics to track vehicles, pedestrians, and obstacles.

Action Recognition: Helps identify activities in sports or surveillance footage.

Gesture Recognition: Applied in gaming and sign language interpretation for tracking movements.

Benefits

Enhances model accuracy in object tracking and activity recognition.

Supports real-time applications like surveillance and autonomous navigation.

Improves AI's ability to recognize actions and predict movements.

Challenges

Time-consuming, especially for long videos or multiple objects.

Requires high accuracy to maintain consistency across frames.

Difficult in dynamic environments where objects overlap or move erratically.

Conclusion

Video box annotation is crucial for training AI models to understand and interpret video data, driving advancements in fields like autonomous driving, security, and sports analytics.


How to Improve Accuracy in Video Box Annotation for AI Models

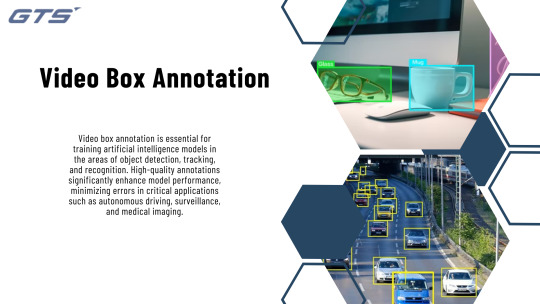

Introduction

Video Box Annotation is essential for training artificial intelligence models in the areas of object detection, tracking, and recognition. High-quality annotations significantly enhance model performance, minimizing errors in critical applications such as autonomous driving, surveillance, and medical imaging. This blog will examine effective strategies to enhance the accuracy of video box annotation for AI models.

Significance of Accurate Video Box Annotation

Accurate annotations influence AI models in several important ways:

Enhances Model Generalization – Assists AI in recognizing objects across various environments.

Lowers False Positives/Negatives – Guarantees that AI delivers dependable predictions.

Enhances Real-time Object Tracking – Vital for applications such as self-driving vehicles and video analytics.

Increases Training Efficiency – Diminishes the necessity for extensive re-labeling and fine-tuning.

Best Practices for Enhancing Accuracy in Video Box Annotation

1. Utilize High-Quality Video Frames

Selecting high-resolution videos with appropriate lighting and contrast facilitates improved object recognition and annotation precision.

2. Adopt Keyframe Annotation with Interpolation

Manually annotating every frame is labor-intensive. Instead, consider interpolation: annotators label selected keyframes, the tool generates boxes for the intermediate frames automatically, and annotators adjust those boxes where necessary.
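Keyframe interpolation can be sketched in a few lines. The example assumes boxes are (x_min, y_min, x_max, y_max) tuples keyed by frame index and that objects move roughly linearly between keyframes; annotation tools may use other schemes, and the function name is illustrative.

```python
def interpolate_boxes(keyframes):
    """Linearly interpolate boxes between annotated keyframes.

    keyframes: dict mapping frame index -> (x_min, y_min, x_max, y_max).
    Returns a dict with a box for every frame from the first to the last keyframe.
    """
    frames = sorted(keyframes)
    result = {}
    for start, end in zip(frames, frames[1:]):
        box_a, box_b = keyframes[start], keyframes[end]
        for frame in range(start, end):
            t = (frame - start) / (end - start)  # 0.0 at start, approaching 1.0 at end
            result[frame] = tuple(a + t * (b - a) for a, b in zip(box_a, box_b))
    if frames:
        # The final keyframe is copied as-is.
        result[frames[-1]] = tuple(float(v) for v in keyframes[frames[-1]])
    return result
```

The faster or less predictably an object moves, the more closely the keyframes need to be spaced for the generated boxes to stay accurate.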

3. Utilize AI-Enhanced Annotation Tools

Sophisticated annotation platforms feature AI support, which accelerates the process while maintaining accuracy. Tools such as auto-tracking and predictive bounding boxes significantly enhance precision.

4. Maintain Consistency with Labeling Standards

Establish clear annotation protocols, including:

Uniform bounding box dimensions and placements.

Designated annotation colors for each object category.

Protocols for handling occlusions and partial objects.

5. Implement Active Learning for Ongoing Enhancement

Active learning empowers AI models to identify uncertain instances, facilitating annotators in refining labels and enhancing the quality of the dataset.
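A common form of active learning is uncertainty sampling, where the items the model is least confident about are sent to annotators first. The sketch below assumes each prediction carries a confidence score between 0 and 1; the function name and the review budget are hypothetical.

```python
def select_for_review(predictions, budget):
    """Pick the `budget` least confident predictions for human review.

    predictions: list of (item_id, confidence) pairs, confidence in [0, 1].
    """
    ranked = sorted(predictions, key=lambda pair: pair[1])
    return [item_id for item_id, _ in ranked[:budget]]
```

After annotators correct the selected items, the model is retrained and the selection is repeated.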

6. Tackle Challenges of Occlusion and Motion Blur

Objects can be partially obscured by occlusion or blurred by motion. The following strategies can help alleviate these challenges:

Annotation from multiple viewpoints.

Consistency checks for temporal tracking.

Contextual labeling based on additional information.
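A temporal consistency check of the kind listed above can be as simple as flagging frames where a box jumps implausibly far. In this sketch the box format, the function name, and the 0.5 ratio are illustrative assumptions that would need tuning to frame rate and object speed.

```python
import math


def flag_sudden_jumps(boxes, max_shift_ratio=0.5):
    """Return indices of frames whose box center moved suspiciously far.

    boxes: list of (x_min, y_min, x_max, y_max), one per consecutive frame.
    A frame is flagged when the center shift from the previous frame exceeds
    max_shift_ratio times the previous box's diagonal.
    """
    flagged = []
    for i in range(1, len(boxes)):
        px0, py0, px1, py1 = boxes[i - 1]
        cx0, cy0, cx1, cy1 = boxes[i]
        shift = math.hypot(
            (cx0 + cx1) / 2 - (px0 + px1) / 2,
            (cy0 + cy1) / 2 - (py0 + py1) / 2,
        )
        diagonal = math.hypot(px1 - px0, py1 - py0)
        if diagonal > 0 and shift > max_shift_ratio * diagonal:
            flagged.append(i)
    return flagged
```

A flagged frame is not necessarily wrong, since a camera cut produces the same signal, so the output is best treated as a review queue.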

7. Employ Intelligent Data Augmentation

Techniques for data augmentation, including rotation, cropping, and the addition of noise, contribute to the creation of a more varied dataset, thereby increasing the resilience of AI models to real-world conditions.
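Geometric augmentations have to transform the annotations together with the pixels. As an example for cropping, the sketch below shifts and clips bounding boxes to a crop region; it covers only the label side of the augmentation, and the function name and box format are assumptions.

```python
def crop_boxes(boxes, crop):
    """Shift and clip boxes so they stay valid after cropping the image.

    boxes: list of (x_min, y_min, x_max, y_max) in original image coordinates.
    crop:  (x_min, y_min, x_max, y_max) region kept from the original image.
    Boxes that fall entirely outside the crop are dropped.
    """
    cx0, cy0, cx1, cy1 = crop
    adjusted = []
    for x0, y0, x1, y1 in boxes:
        # Clip the box to the crop region.
        nx0, ny0 = max(x0, cx0), max(y0, cy0)
        nx1, ny1 = min(x1, cx1), min(y1, cy1)
        if nx0 < nx1 and ny0 < ny1:
            # Re-express the box relative to the crop's top-left corner.
            adjusted.append((nx0 - cx0, ny0 - cy0, nx1 - cx0, ny1 - cy0))
    return adjusted
```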

Tools for High-Precision Video Box Annotation

A variety of tools facilitate accurate video annotation, including:

CVAT (Computer Vision Annotation Tool) – An open-source platform that incorporates AI-assisted annotation capabilities.

LabelImg – A straightforward tool for bounding box annotation of images; video footage must first be exported as individual frames.

SuperAnnotate – An AI-driven annotation tool that includes features for quality control.

VIA (VGG Image Annotator) – A lightweight, browser-based tool that supports image, audio, and video annotation.

Conclusion

Enhancing precision in video box annotation is essential for the development of high-performing AI models. By adhering to best practices, utilizing AI-enhanced tools, and optimizing workflows, teams can achieve accurate annotations and create effective training datasets.

For expert object tracking and annotation services, please visit GTS AI.


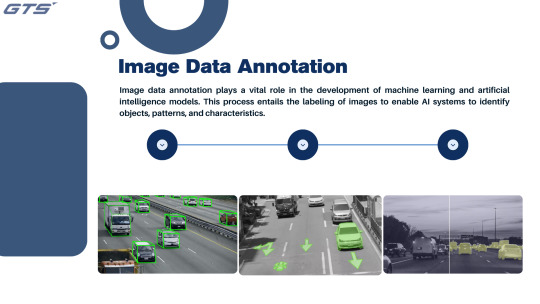

Manual vs. Automated Image Data Annotation: Which One to Choose?

Introduction

Image Data Annotation plays a vital role in the development of machine learning and artificial intelligence models. This process entails the labeling of images to enable AI systems to identify objects, patterns, and characteristics. In the realm of image annotation, organizations and researchers frequently encounter the dilemma of selecting between manual and automated annotation techniques. Each approach presents its own set of benefits and drawbacks, and the optimal choice is contingent upon the particular needs of the project.

Manual Image Data Annotation

Manual annotation relies on human annotators who assign labels to images according to established guidelines. This method is commonly employed for tasks that demand a high level of accuracy and contextual comprehension.

Benefits

Exceptional Accuracy: Human annotators possess the ability to grasp intricate situations, thereby guaranteeing precise labeling.

Contextual Insight: In contrast to automated systems, humans can decipher ambiguous images and assign relevant labels.

Versatility: This approach is applicable to a range of annotation tasks, such as semantic segmentation, object detection, and keypoint annotation.

Limitations

Labor-Intensive: The process of manual annotation demands considerable time investment, particularly when dealing with extensive datasets.

Financially Burdensome: The expenses associated with recruiting and training annotators can be substantial.

Challenges in Scalability: It becomes challenging to expand efforts for projects involving millions of images.

Automated Image Data Annotation

Automated annotation employs AI-driven tools and algorithms to assign labels to images with minimal human involvement. This approach is frequently utilized in extensive projects that demand rapid execution and high efficiency.

Advantages

Speed and Efficiency: Automated systems can analyze and label thousands of images within a brief timeframe.

Cost-Effectiveness: It diminishes the reliance on human labor, thereby reducing overall project expenses.

Scalability: Particularly suitable for large datasets and repetitive annotation tasks.

Limitations

Reduced Accuracy: AI-based annotation tools may encounter difficulties with intricate or ambiguous images.

Limited Contextual Comprehension: Automated systems might misinterpret objects, resulting in erroneous labels.

Initial Setup Complexity: It necessitates model training and fine-tuning to attain optimal performance.

Selecting the Appropriate Method

The decision between manual and automated annotation is influenced by several factors, including the size of the project, the required level of accuracy, and budget limitations:

For applications demanding high accuracy (such as medical imaging and autonomous vehicles) → Manual annotation is the preferred option.

For extensive datasets where cost-effectiveness is a priority → Automated annotation is the more appropriate choice.

For projects that need both accuracy and scale → A hybrid approach, in which automated tools pre-label the data and human annotators review the results, can enhance both accuracy and efficiency.
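One way to combine the two methods is to route each automated label by model confidence, accepting high-confidence labels and sending the rest to human annotators. This is a minimal sketch. The dictionary keys and the 0.9 threshold are assumptions and would be tuned against a manually verified sample.

```python
def route_labels(auto_labels, threshold=0.9):
    """Split automated labels into accepted ones and ones sent to human annotators.

    auto_labels: list of dicts with "image_id", "label" and "confidence" keys.
    Returns (accepted, needs_human).
    """
    accepted, needs_human = [], []
    for item in auto_labels:
        if item["confidence"] >= threshold:
            accepted.append(item)
        else:
            needs_human.append(item)
    return accepted, needs_human
```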

Conclusion

Both manual and automated image annotation are crucial in the development of artificial intelligence and machine learning. Organizations like GTS AI provide specialized image annotation services to deliver optimal outcomes for various applications. By assessing the specific needs of a project, companies can make well-informed decisions to improve the performance of their AI models effectively.


The Future of Image Data Annotation in Deep Learning

Introduction

In the swiftly advancing realm of artificial intelligence (AI) and deep learning, the annotation of image data is essential for the development of high-performing models. As computer vision, autonomous systems, and AI applications continue to progress, the future of Image Data Annotation is poised to become increasingly innovative, efficient, and automated.

The Progression of Image Data Annotation

Historically, the process of image annotation involved significant manual effort, with annotators tasked with labeling images through methods such as bounding boxes, polygons, or segmentation masks. However, the increasing demand for high-quality annotated datasets has led to the development of innovative technologies and methodologies that enhance the scalability and accuracy of this process.

Significant Trends Influencing the Future of Image Annotation

1. AI-Enhanced Annotation: The integration of artificial intelligence and machine learning is transforming the annotation landscape by automating substantial portions of the labeling process. Pre-annotation models facilitate quicker labeling by identifying objects and proposing annotations, thereby minimizing manual labor while ensuring precision.

2. Synthetic Data and Augmentation: The adoption of synthetic data is on the rise as a means to complement real-world datasets. By creating artificially annotated images, deep learning models can be trained with a broader range of examples, thereby lessening the reliance on extensive manual annotation.

3. Self-Supervised and Weakly Supervised Learning: Emerging self-supervised learning techniques enable models to discern patterns with limited human input, moving away from the dependence on fully labeled datasets. Additionally, weakly supervised learning further diminishes annotation expenses by utilizing partially labeled or noisy datasets.

4. Crowdsourcing and Distributed Labeling: Platforms designed for crowdsourced annotation enable extensive image labeling by numerous contributors from around the globe. This decentralized method accelerates the labeling process while ensuring quality through established validation mechanisms.

5. 3D and Multi-Sensor Annotation: As augmented reality (AR), virtual reality (VR), and autonomous vehicles become more prevalent, the demand for 3D annotation is increasing. Future annotation tools are expected to incorporate LiDAR, depth sensing, and multi-sensor fusion to improve object recognition capabilities.

6. Blockchain for Annotation Quality Control: The potential of blockchain technology is being investigated for the purpose of verifying the authenticity and precision of annotations. Decentralized verification approaches can provide transparency and foster trust in labeled datasets utilized for AI training.
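The validation mechanisms mentioned for crowdsourced labeling (trend 4) often start with simple majority voting across contributors. The sketch below assumes each image receives labels from several contributors. The 0.6 agreement threshold and the function name are illustrative.

```python
from collections import Counter


def aggregate_votes(votes, min_agreement=0.6):
    """Majority-vote labels from several contributors.

    votes: dict mapping image_id -> list of labels from different contributors.
    Returns (consensus, disputed): consensus maps image_id -> winning label,
    disputed lists image_ids whose top label falls below min_agreement.
    """
    consensus, disputed = {}, []
    for image_id, labels in votes.items():
        if not labels:
            disputed.append(image_id)
            continue
        label, count = Counter(labels).most_common(1)[0]
        if count / len(labels) >= min_agreement:
            consensus[image_id] = label
        else:
            disputed.append(image_id)
    return consensus, disputed
```

Disputed images would typically go to an expert reviewer rather than being discarded.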

The Importance of Sophisticated Annotation Tools

In response to the increasing requirements of AI applications, sophisticated annotation tools and platforms are undergoing constant enhancement. Organizations such as GTS AI provide state-of-the-art image and video annotation services, guaranteeing that AI models are developed using high-quality labeled datasets.

Conclusion

As deep learning continues to expand the horizons of artificial intelligence, the future of image data annotation will be influenced by automation, efficiency, and innovative methodologies. By utilizing AI-assisted annotation, synthetic data, and self-supervised learning, the sector is progressing towards more scalable and intelligent solutions. Investing in advanced annotation methods today will be essential for constructing the AI models of the future.


Text-to-Speech Dataset: Fueling Conversational AI

Introduction

In the rapidly advancing world of artificial intelligence (AI) and machine learning (ML), Text-to-Speech (TTS) datasets have become essential for developing voice synthesis technologies. Building these datasets involves gathering, annotating, and organizing audio and textual data so that AI models can learn to convert written text into natural-sounding speech. As organizations push the boundaries of innovation, TTS datasets play a significant role in creating systems that enhance accessibility, user experience, and automation.

What is a Text-to-Speech Dataset?

A text-to-speech dataset is a collection of paired audio recordings and their corresponding textual transcriptions. These datasets are crucial for training AI models to generate lifelike speech from text, capturing nuances such as tone, pitch, and emotion. From virtual assistants to audiobook narrators, TTS datasets form the backbone of many modern applications.

Why is a Text-to-Speech Dataset Important?

Improved AI Training: High-quality TTS datasets ensure AI models achieve precision in voice synthesis tasks.

Support for Multilingual Capabilities: TTS datasets enable the development of systems that support multiple languages, accents, and dialects.

Scalable Solutions: By leveraging robust datasets, organizations can deploy TTS-based applications across industries such as entertainment, education, and accessibility.

Key Features of Text-to-Speech Dataset Services

Customizable Datasets: Tailored TTS datasets are created to meet the specific requirements of different AI applications.

Diverse Audio Sources: TTS datasets include varied content such as professional voice recordings, natural conversations, and scripted dialogues.

Accurate Annotations: Precise alignment of text and audio ensures high-quality training, enhancing the performance of TTS systems.

Compliance and Privacy: Ethical practices and privacy compliance are prioritized to ensure secure data collection.
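The alignment of text and audio described under Accurate Annotations can be sanity-checked cheaply by comparing transcript length with clip duration. The sketch below flags pairs whose speaking rate looks implausible. The characters-per-second bounds are rough placeholders that depend on language and speaking style.

```python
def suspicious_pairs(pairs, min_cps=5.0, max_cps=25.0):
    """Flag (text, duration_seconds) pairs with an implausible speaking rate.

    cps = characters per second. Returns the indices of flagged pairs.
    """
    flagged = []
    for index, (text, duration) in enumerate(pairs):
        stripped = text.strip()
        if duration <= 0 or not stripped:
            flagged.append(index)  # empty transcript or invalid duration
            continue
        cps = len(stripped) / duration
        if not (min_cps <= cps <= max_cps):
            flagged.append(index)
    return flagged
```

A check like this catches swapped or truncated files, not subtle transcription errors, so it complements human review.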

Applications of Text-to-Speech Datasets

Virtual Assistants: Powering conversational AI in devices like smartphones, smart speakers, and chatbots.

Accessibility Tools: Enabling visually impaired users to access written content through natural-sounding speech.

E-Learning: Enhancing online education platforms with engaging audio narration.

Entertainment: Creating lifelike voiceovers for games, animations, and audiobooks.

Challenges in Text-to-Speech Dataset Collection

Collecting high-quality TTS datasets comes with challenges such as capturing diverse voice styles, ensuring data quality, and addressing linguistic complexities. Collaborating with experienced dataset providers can help overcome these hurdles by offering tailored solutions.

Conclusion

Text-to-speech datasets are a cornerstone of conversational AI, empowering organizations to develop advanced systems that generate realistic and expressive speech. By leveraging high-quality datasets, businesses can drive progress and unlock new opportunities in accessibility, automation, and user engagement. Embracing TTS datasets ensures staying ahead in the competitive landscape of AI innovation. Explore text-to-speech dataset services today to fuel the next generation of voice technologies.

Visit Here: https://gts.ai/services/speech-data-collection/


Overcoming Challenges in OCR Data Collection for AI Projects

Introduction

Optical Character Recognition (OCR) has changed the way companies digitize documents and process information. OCR-based products enable document automation and make scanned content searchable, which is why OCR Data Collection has become a crucial activity across many industries. Nevertheless, the effectiveness of these systems depends directly on the quality and variety of the data used for training, and collecting data for OCR projects raises unique challenges that should be addressed up front. In this blog, we’ll look at some of the common OCR data collection challenges and the strategies to overcome them.

The Importance of OCR Data Collection

The machine learning models behind OCR systems are trained on large volumes of data drawn from images, scans, and handwritten notes. Well-managed training data gives OCR systems the following characteristics:

Improved Accuracy: Identifying various types of fonts, languages, and handwritten texts.

Scalability: Handling different data types and text genres.

Robust Performance: Dealing with diverse real-life situations such as random noises, distortions, and low-resolution images.

Common Challenges in OCR Data Collection

1. Data Diversity

OCR systems are required to identify a broad spectrum of text types, which includes:

Different fonts and styles (such as serif, sans-serif, and cursive).

Languages that utilize distinct scripts (for instance, Arabic, Chinese, and Hindi).

Handwritten text that varies in clarity.

Solution: Assemble datasets that accurately reflect the intended use cases and target demographics. Partner with international data providers to ensure the inclusion of a variety of languages and writing styles.

2. Data Quality

The performance of OCR can be compromised by low-resolution images, distorted scans, and cluttered backgrounds.

Solution: Employ high-quality scanning technology and preprocess the data to improve resolution, eliminate noise, and standardize formats. Utilize data augmentation methods to replicate real-world conditions.

3. Annotation Complexity

The process of manually annotating OCR data can be labor-intensive and susceptible to errors, particularly when dealing with intricate documents that include tables, graphs, or multiple languages.

Solution: Utilize automated annotation tools and collaborate with specialized data annotation services such as GTS.AI. Implement a multi-tiered validation process to ensure precision.
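One tier of such a validation process can be automated by comparing two independent transcriptions of the same document and measuring how far they differ. The sketch below computes a character error rate (CER) from the Levenshtein edit distance. Treating one transcription as the reference is an assumption made for illustration.

```python
def edit_distance(a, b):
    """Levenshtein distance between two strings (insert, delete, substitute)."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution or match
            ))
        previous = current
    return previous[-1]


def character_error_rate(reference, hypothesis):
    """Edit distance divided by the reference length."""
    if not reference:
        return 0.0 if not hypothesis else 1.0
    return edit_distance(reference, hypothesis) / len(reference)
```

Documents whose two transcriptions disagree beyond a chosen CER can be escalated to a third reviewer.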

4. Ethical and Legal Compliance

The management of sensitive information contained in documents, such as medical records and financial data, necessitates rigorous compliance with privacy regulations.

Solution: Anonymize sensitive information and adhere to legal frameworks such as GDPR and HIPAA. Secure explicit consent for data utilization when required.
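For transcribed text, a first anonymization pass can be pattern-based. The sketch below masks email addresses and long digit sequences such as phone or account numbers. The patterns are deliberately simple assumptions and are not sufficient on their own for GDPR or HIPAA compliance, which also covers names, addresses, and the images themselves.

```python
import re

# Simplified patterns for illustration; real documents need broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
LONG_NUMBER = re.compile(r"\b\d[\d -]{7,}\d\b")


def redact(text):
    """Replace email addresses and long digit sequences with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return LONG_NUMBER.sub("[NUMBER]", text)
```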

5. Balancing Cost and Scale

The acquisition of extensive datasets can demand significant resources, particularly for OCR projects that involve multiple languages and formats.

Solution: Employ synthetic data generation to enhance real-world datasets. Invest in scalable cloud-based solutions to efficiently store and process large volumes of data.

Strategies for Efficient OCR Data Acquisition

1. Utilize Publicly Accessible Datasets

Investigate datasets that are freely available, such as:

IAM Handwriting Database

Google’s OCR Datasets

Document Understanding datasets from academic institutions

These resources can serve as a robust basis for training OCR models.

2. Tailored Data Collection

For OCR applications specific to certain industries, gather data that meets your particular requirements:

Digitize documents in multiple formats (PDFs, JPEGs, PNGs).

Capture real-world documents, including receipts, invoices, and handwritten notes.

3. Invest in Sophisticated Tools

Employ AI-enhanced tools to optimize data collection:

High-resolution optical scanners for superior input quality.

Automated transcription software to generate annotated datasets.

4. Engage with Professionals

Work alongside seasoned service providers such as GTS.AI for:

Tailored dataset development.

Data annotation and validation.

Scalable solutions suitable for extensive projects.

GTS.AI's Role in Enhancing OCR Data Collection

At GTS.AI, we are dedicated to addressing the complexities associated with OCR data collection through customized solutions, which include:

Thorough Annotation Services: Providing services ranging from bounding box creation to transcription, ensuring exceptional accuracy.

Varied Data Collection: Acquiring multilingual and multi-format data to support comprehensive OCR training.

Adherence to Compliance and Ethics: Complying with international privacy regulations and maintaining ethical standards.

Flexible Infrastructure: Capable of handling projects of any scale, from small startups to large enterprise endeavors.

Conclusion

The potential of OCR technology to revolutionize various sectors is significant, yet its effectiveness relies on the quality of the data utilized. By tackling issues such as data diversity, quality, and compliance, organizations can develop datasets that facilitate enhanced AI performance. Collaborating with specialists like GTS.AI guarantees that your OCR initiatives are founded on a robust framework. Are you prepared to harness the full capabilities of OCR? Let us work together!
