Show and Tell 3, October 29

https://youtu.be/soDP1-r3qko

https://youtu.be/FlaNjI39r0E

These videos show our classmates testing our project ahead of the show and tell. Their interaction worked out as we intended. They had to test combinations and discuss strategy to learn which colors are made with which combinations of body parts. After several rounds of attempts they began to remember the necessary combinations and were able to perform their movements faster. Even when they remembered what they needed to do, our classmates still had to talk it through to make it happen. While we used the screen to prompt action, we succeeded in creating an experience that drew our users into interacting more with each other than with the screen.

Show and tell provided us with mixed reactions.

Jens pointed out that there is more to explore in the movements we ask of our users. He suggested there is something to be discovered in how the movements are unusual, potentially silly, and could be socially awkward given that touching others is required. We did consider how our movements are potentially goofy and awkward and saw these aspects as contributing to the necessity of conversation between users. However, perhaps these feelings could be explored and amplified. Maybe making the necessary movements even more absurd or complex would further shape the types of conversations provoked.

Our simplistic visuals were called underdesigned, and the use of the small square as a prompt was criticized. I agree that we did not give much time to considering aesthetics. We prioritized getting something functioning within the time allotted, but that does not mean our aesthetic choices were unmotivated. One reason for keeping the visuals very basic was that our focus was the interaction between users, and less so the interaction with the screen. The square in the center of the screen is small in order to leave more space to show the colors created by the actions of our users. Since this is not a game, the small square functions as a prompt to promote action and collaboration on the part of the users rather than as a goal. The colors they create are bigger because that is what is important for them to see--these colors are the information that allows the users to strategize, deduce the colors associated with their bodies, and hopefully remember what they need to do to make certain colors. All this being said, yes, we would definitely like to make something more aesthetically pleasing. Working with color blending would make color creation feel less like hitting a series of buttons. We also would have liked to create methods for painting. Painting would have created something that reflects changes in our users' actions over time and would have given users something more creative to discuss and collaborate on.

The frustrating part was when one professor brought up the word that haunts this entire course: game. The problem is that this word is used merely to dismiss work. It is never elaborated upon. Why is it a game? What is wrong with a game? How could it be changed to not be a game? A game is interactive and we are designing interactions, so I do not see the problem with game mechanics (especially since the field relies heavily on them to generate profit). If everything is a game and you will not tell us what is wrong with that, then maybe our professors should stop treating game-like qualities as negative.

Module 3 week 3 part 2, October 28

We began the day working with Clint. He helped us create a function that calculated the distance between any two parts in a much more elegant way. He showed us how to loop through the body arrays while calculating distances, so every part is handled in a short bit of code.
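
A minimal sketch of the looping idea follows. It is written in plain JavaScript and is not Clint's actual code, which is not reproduced here. It assumes, hypothetically, that each body is an array of `{ name, x, y }` keypoints, and the function names `pairwiseDistances` and `closestPair` are made up for illustration. Looping over both arrays visits every part-to-part pair, so no pair needs its own named variable.

```javascript
// Hypothetical body format: an array of { name, x, y } keypoints per body.
function pairwiseDistances(bodyA, bodyB) {
  const pairs = [];
  // Every part of body A against every part of body B (6 x 6 = 36 pairs here).
  for (const a of bodyA) {
    for (const b of bodyB) {
      pairs.push({ a: a.name, b: b.name, dist: Math.hypot(a.x - b.x, a.y - b.y) });
    }
  }
  return pairs;
}

// The closest pair across the two bodies (assumes both arrays are non-empty).
function closestPair(bodyA, bodyB) {
  return pairwiseDistances(bodyA, bodyB).reduce((best, p) => (p.dist < best.dist ? p : best));
}

// Usage with made-up coordinates.
const demoA = [{ name: "leftKnee", x: 0, y: 0 }, { name: "rightKnee", x: 10, y: 0 }];
const demoB = [{ name: "leftShoulder", x: 3, y: 4 }];
console.log(closestPair(demoA, demoB).a); // leftKnee
```

Because the distances are collected into one array, tracking more body parts would need no new code.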

Clint also helped us try to move past changing colors with long conditionals. He showed us a method of color blending that involved stacking rectangles and letting the distance between body parts change the opacity of those rectangles, so their colors blend together. Changing the colors of the canvas this way would lead to a more nuanced and rich interaction than having the canvas suddenly change color when two parts touch. It would also lead to a wider pool of sometimes unexpected colors rather than just the six with which we have been working (red, yellow, blue, purple, orange, and green). However, we struggled to understand how to work with this method and decided to go back to what we were doing before, to make sure we had something working in time for show and tell. This color blending method would definitely be more interesting to work with if we had more time.
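
Below is a rough sketch of the stacked-rectangle idea. It assumes each rectangle is a flat RGB color whose opacity rises as two body parts get closer, and the `near` and `far` thresholds and function names are hypothetical. Each layer is composited over what lies below it with ordinary source-over alpha blending. That is roughly what drawing semi-transparent rectangles on top of one another does on a canvas with default settings.

```javascript
// Opacity from distance: 1 at `near` or closer, 0 at `far` or beyond (hypothetical thresholds).
function alphaFromDistance(dist, near, far) {
  const t = (far - dist) / (far - near);
  return Math.min(1, Math.max(0, t));
}

// Composite layers bottom to top with source-over blending, per RGB channel:
// out = alpha * layer + (1 - alpha) * below.
function stackLayers(background, layers) {
  return layers.reduce(
    (below, layer) => below.map((c, i) => layer.alpha * layer.rgb[i] + (1 - layer.alpha) * c),
    background
  );
}

// Usage: a red layer driven by one pair of parts, a yellow layer by another.
const blended = stackLayers([255, 255, 255], [
  { rgb: [255, 0, 0], alpha: alphaFromDistance(150, 50, 250) },
  { rgb: [255, 255, 0], alpha: alphaFromDistance(150, 50, 250) },
]);
console.log(blended); // [ 255, 191.25, 63.75 ]
```

Half-opaque red and yellow over white land on an orange tint. This is the kind of in-between color that the six fixed colors cannot produce.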

We also struggled to understand how to work with Clint’s distance function. It was definitely more elegant than our manual methods, but we again had to consider time, so we reverted to our previous distance calculations.

We then met with Jens to discuss how to move forward. He broke down our project into three portions. This helped us conceptualize how to move forward.

Rather than using conditionals to change colors, we decided to work with booleans. We created boolean values associated with the colors with which we are working. Then we wrote functions that change the color based on the values of those boolean variables. We also wrote a function that generates a random color for the users to attempt to create. Moving forward, the booleans will be used to check whether the users created the correct color and, if so, to generate a new color for them to create.
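
Here is one way the boolean approach could look, as a sketch only. It assumes, hypothetically, one flag per primary color (`red`, `yellow`, `blue`) that is true while the body parts assigned to that color are touching. The secondary colors are derived from pairs of flags. `currentColor`, `randomTarget` and `nextTarget` are illustrative names, and `rng` can be passed in only so the behavior is testable.

```javascript
// Secondary colors made by two primaries touching at once.
const MIXES = { "red+yellow": "orange", "blue+yellow": "green", "blue+red": "purple" };
const TARGETS = ["red", "yellow", "blue", "purple", "orange", "green"];

// flags: hypothetical booleans, e.g. { red: true, yellow: true, blue: false }.
function currentColor(flags) {
  const on = ["red", "yellow", "blue"].filter((c) => flags[c]).sort();
  if (on.length === 1) return on[0];
  if (on.length === 2) return MIXES[on.join("+")];
  return null; // nothing touching, or all three at once
}

function randomTarget(rng = Math.random) {
  return TARGETS[Math.floor(rng() * TARGETS.length)];
}

// Keep the target until the users make it, then pick a different one.
function nextTarget(target, flags, rng = Math.random) {
  if (currentColor(flags) !== target) return target;
  const others = TARGETS.filter((t) => t !== target);
  return others[Math.floor(rng() * others.length)];
}

console.log(currentColor({ red: true, yellow: true })); // orange
```

When the users succeed, `nextTarget` picks from the remaining colors rather than rerolling. This guarantees a new prompt without looping.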

Since there are multiple ways to solve any problem in programming, I need to remember to keep it simple. We have been able to get something functional by working with what we know. If I had embraced keeping it simple from the beginning, we could have arrived at this point much sooner, giving us more time to iterate and potentially to come to grips with the methods Clint showed us for creating a richer interaction. I can work efficiently by putting in the effort to make things work with my limited knowledge, even if it is messy, granting myself time to improve and potentially learn much more in the process.

Module 3 week 3, October 28

https://youtu.be/zOyJTdEDLCU

https://youtu.be/6syjEocK9ac

The videos show how we got the canvas color to change when our body parts came into contact.

We have been struggling to get any of the sketches to calculate the distance between body parts across multiple bodies. We tried working with the wrist distance sketch and the multi-body sketch before pivoting to the sending and receiving sketches. We decided to work with the sending and receiving sketches because Jens modified them to work with multiple bodies, so each body is assigned a body ID.

By accessing the body IDs we built two arrays--one for each body. We then pulled out the 12 body parts with which we are working (six per body): shoulders, hips, and knees. Our next hurdle was calculating the distance between any of these parts. We wanted to create a simple function to do this, but could not figure out a way to have the function create individual variables for all 36 combinations of body parts (six parts on one body against six on the other). We took the clumsy and long-winded route of calculating all of these distances separately, giving us 36 distance variables with which to work. We made each calculation by finding the hypotenuse of the right triangle formed between the two points. At first, these calculations slowed down functionality considerably, but a student advised us that if we skipped the square root and compared the larger, squared numbers instead, it would be faster.

We then used conditionals to change the color of our canvas when any two parts get within a certain distance of one another.
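
A sketch of the distance check behind the conditionals follows. It assumes hypothetical `{ x, y }` keypoints in canvas pixels and a made-up touch radius. It also shows why the student's tip works. Distances are never negative, and squaring preserves order for non-negative numbers, so comparing the squared distance with the squared radius gives the same yes/no answer as comparing the real distance with the radius.

```javascript
// Hypothetical keypoints { x, y } in canvas pixels.
function isTouching(a, b, radius) {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  // Mathematically equivalent to Math.hypot(dx, dy) <= radius for a non-negative
  // radius, but without taking a square root.
  return dx * dx + dy * dy <= radius * radius;
}

// The conditional that picks the canvas color (color values are placeholders).
function canvasColor(a, b, radius, touchColor, idleColor) {
  return isTouching(a, b, radius) ? touchColor : idleColor;
}

console.log(isTouching({ x: 0, y: 0 }, { x: 30, y: 40 }, 50)); // true
console.log(isTouching({ x: 0, y: 0 }, { x: 30, y: 40 }, 49)); // false
```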

Managing to overcome these hurdles on our own was extremely motivating. Our solutions were clumsy and long-winded, but we decided to work this way after spending far too much time failing to devise elegant solutions to these problems. In the future we should just do things the sloppy way first so we have something working. This would give us more time to iterate and potentially inspire us to devise better solutions. Personally, this is progress from the last module: I did not give in to feeling defeated and fought to find solutions.

Module 2 Show and Tell, October 28

This post is extremely late; I am sorry. I have had trouble getting a video for this post. Hopefully it will be added soon.

We managed to get something working at the last minute. We have a ball that is pushed in one direction by low frequency sounds and in the other by high frequency sounds. There are two panels, one on either side, and when the ball touches one of the panels, the directions it moves are flipped. There are always two vectors pushing the ball towards the center of the screen. We have two versions: one that gives the user no visual information and one that shows the user what is happening on screen.
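
What follows is a simplified one-dimensional sketch of that behavior, not our actual code. It uses plain numbers instead of p5 vectors and encodes the sound as a hypothetical `soundDir` of -1 (low), +1 (high) or 0 (quiet). A single constant pull toward the center stands in for the two centering vectors. All parameter names and values are illustrative.

```javascript
// Hypothetical ball state: x position, x velocity, and whether controls are flipped.
// soundDir: -1 for low frequencies, +1 for high, 0 for quiet.
function stepBall(ball, soundDir, cfg) {
  // After a panel is touched, the same sound pushes the opposite way.
  const dir = ball.flipped ? -soundDir : soundDir;
  // A constant pull toward the center stands in for the two centering vectors.
  const toCenter = Math.sign(cfg.center - ball.x) * cfg.centerPull;
  let vx = (ball.vx + dir * cfg.push + toCenter) * cfg.damping;
  let x = ball.x + vx;
  let flipped = ball.flipped;
  const wasInside = ball.x > cfg.left && ball.x < cfg.right;
  if (x <= cfg.left || x >= cfg.right) {
    // Clamp to the panel and stop; flip only on arrival, not while resting there.
    x = Math.min(Math.max(x, cfg.left), cfg.right);
    vx = 0;
    if (wasInside) flipped = !flipped;
  }
  return { x, vx, flipped };
}

const demoCfg = { left: 0, right: 400, center: 200, push: 1, centerPull: 0.2, damping: 0.9 };
let demoBall = { x: 200, vx: 0, flipped: false };
for (let i = 0; i < 10; i++) demoBall = stepBall(demoBall, 1, demoCfg); // high tone for 10 frames
console.log(demoBall.x > 200); // true
```

The controls flip only when the ball arrives at a panel from inside the track. This stops a ball resting against a panel from flipping the controls on every frame.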

This course is focused on two tasks: experimentation and reflection. It is nice that the projects are not graded, freeing us to experiment without the pressure of grades. We are then meant to reflect on our experiments. We tried to incorporate reflection into our presentation.

Our initial idea would have had a user fight magnetic forces to make the ball reach the panels. We pivoted away from this for two reasons--we could not get it working, and we were not trying to make a game. Fighting the magnetic forces to reach the panels would give our interaction a goal. We made it clear in our presentation that these panels were meant to signify that the ball can move in those directions and are definitely not goals. There was no problem to conquer; users can just move the ball back and forth. We also addressed our struggles to settle on a concept and how this led to very little iteration or time to focus on aesthetics. We acknowledged these issues to demonstrate that we have reflected on the process, but also to get them out of the way so we could receive other, more constructive criticism.

Show and tell was extremely disheartening. Our attempts at reflection on our struggles and how we pivoted away from something game-like fell on deaf ears. Our feedback was focused on a lack of iteration, a lack of attention paid to aesthetics, and that it seemed game-like. We did not get any elaboration on how it seemed like a game so we were not sure how we could have avoided this critique. Being told the exact things we already said gave us nothing useful to use in the future. It was frustrating to have the weaknesses we addressed parroted back at us. Our seemingly self-deprecating reflections were included in our presentation, not to make excuses, but to get these out of the way so perhaps we could be critiqued on something we have not considered already and grow as budding designers.

https://youtu.be/S9jwxMzAOOQ

https://youtu.be/kJhhwD1V81Y

Exploring movement, October 20

https://youtu.be/1526HP_KYzs

https://youtu.be/07TCSAxrVMs

Yesterday we took Jens’s advice and explored the movements with which we wanted to work. We began our test by placing pieces of tape labeled with colors on certain parts of our bodies. We then tested cooperating to combine the colors in various combinations, and tried moving the tape to different areas to mix up where the colors are placed.

Then we tested how this works with the computer tracking us. We initially wanted to track our ankles but saw that the computer had trouble with ankles and decided to use our knees instead.

What we discovered is our concept of working with social interaction and cooperation is successful in practice. Much of our interaction is done outside of the computer--it heavily relies on the users interacting with each other. In order to achieve the various combinations we had to talk to each other and plan our movements. We had to hold on to each other and support one another to avoid losing our balance. Everything was about teamwork.

We initially wanted to work with ankles because we thought it would be harder to bring ankles up to shoulders than other parts. However, we discovered it was relatively simple to kick up to someone’s shoulders, whereas when we started testing knees, we found it was more difficult and required more cooperation to avoid falling.

We tested having to make two colors at once and only having left sides match with left sides and right with right so we would have to move across each other’s bodies. These complications required even more discussion and support, providing us with valuable insight into ways we may elevate the skill ceiling for our project.

I am glad we explored movements. We not only gained insight into how our idea would play out but also learned how it felt to perform and more about how the computer will interpret our movements.

Kinesthetic Interaction, October 20

Fogtmann, Fritsch, and Kortbek introduce their concept of Kinesthetic Interaction--a unifying concept for describing the body in motion as a foundation for designing interactive systems. Three design themes help shape kinesthetic interaction: kinesthetic development (acquiring, developing, and improving bodily skills), means (means for reaching a goal), and disorder (challenging kinesthetic sense). Kinesthetic interaction combines three perspectives on movement: physiology, interactive technologies, and kinesthetic experience.

KI is a theoretical foundation and vocabulary for describing the body in motion and how it conditions our experience of the world, both in interactions and through interaction technology. Their goal with KI is to facilitate designing and analyzing interactions: to support designing for bodies in motion, encourage alternative interactions and new bodily potential, and motivate people to actively take part in interactions. “Instead of bodily movement being dictated by the interaction, the interactions are designed based on the potential inherently present within the body.”

This article, far more so than the others, seems to encourage a more complete view of motion. The authors do not ignore the ephemeral aspects of motion but also acknowledge the importance of data.

By focusing on “the potential inherently present within the body”, designers can use bodily motion to create more accessibility options for those differently able. Designs shaped by KI seem less likely to ignore the realities of differently able bodies just to make cool toys for the rich and physically adept. Additionally, their desire to have users take active roles in shaping interactions leads me to believe a design approach shaped by kinesthetic interaction would not forget the bodies of the overweight, elderly, or disabled to favor the physically fit.

Materializing Movement, October 20

Hansen and Morrison approach the topic by viewing movement as a design material, just like any other, be it hardware or software. They define movement as embodied communication: the movement of a body is able to convey information.

The authors focus on movement data using Sync--a tool for generating dynamic movement via data visualizations. The goal is to abstract and visualize movement in order to inform the design process.

They argue that non-verbal communication using the body is largely ignored. Media influences communication, but our communication should not have to depend on media.

Hansen and Morrison want to put the body at the center of design, but their focus on computer data and linguistics seems to forget the “human aspects”. They are more concerned with breaking movement down into what can be easily defined and visualizing it. This approach does seem practical for utilizing bodily movement as a design material--breaking movement down in this way can be useful for shaping it to meet one’s needs and interpreting it with computers.

A designer needs to consider the people who use their products. Feelings and the ephemeral aspects of human bodily experience are messy and not necessarily useful for meeting goals, but are still important. Do specific motions make people feel uncomfortable? Are they fun? Why would someone want to perform these actions? Is the strain of repeated use not practical for common interactions?

Inventing and Devising Movement, October 19

In their paper, Loke and Robertson use the guiding design principle of “making strange” as a basis for exploring possible movements for use in designing movement-based interaction. Their motivation is to create new perspectives on, and appreciation of, bodily movement by challenging/unsettling habitual perceptions and conceptions of the moving body.

They make use of dancers and choreographers to help them devise movements. Loke and Robertson describe how to approach bodily movement from both experiential and machine perspectives, with an emphasis on specific contexts or domains in which to situate these movements.

When creating new bodily interactions for commercial devices, I can see how “making strange” could come into conflict with ease of use, especially while also balancing the experiential and machine perspectives of each movement. If the movements create an experience far from one’s established perspective of everyday movements, would they be more intuitive or less? I can imagine the “strangeness” of the interaction requiring some cognitive effort to remember how it is used, but I can also conceive of scenarios where strange movements are so far from everyday experience that they quickly become associated with the specific interaction to which they have been assigned.

Loke and Robertson’s use of the most dexterous and physically fit bodies to generate potential movements for human computer interaction is where I would push back. Dancers and choreographers could be useful for creating interesting and complex movements for design experiments, but for anything practical or commercial I think it would be best to work with the least physically able bodies--the elderly, heavy people, those with physical disabilities. By working with these less capable bodies, a designer could devise movements that the vast majority of potential users could perform with relative ease. Many of our interactions are centered on our hands, and I can see bodily interactions as a way to open up accessibility options for those with arthritis, those with only one hand, and those whose hands are not as dexterous as they used to be.

Start of Module 3, October 19

https://youtu.be/SBZNuvqsazU

Sorry this post is so late.

Immediately we decided we wanted to work with two bodies for this project. Our main interest is social interaction and cooperation.

Our earliest ideas have to do with two users combining portions of their bodies as the input. One idea is to assign instrument tracks to certain body parts that only play when touching a part of another person’s body. The two users could then collaborate to create music, kind of like two DJs working together. This got us thinking about other forms of feedback for combining parts from two separate bodies. A simpler idea is assigning colors to each part so that when two parts touch they create a new color--for example, blue and yellow make green. The two users would collaborate to create new colors, like mixing paint. Perhaps these created colors could then be used to paint on a JavaScript canvas, so the users can creatively collaborate.

Before starting to work with multiple bodies we worked with the wrist distance sketch--as shown in the video above. We were able to use proximity to make the assigned colors change.

With our goal of social cooperation in mind we need to hone in on what kinds of movements we want to encourage and what motivates them. Do we avoid touching parts that might lead to social awkwardness? Do we lean in to the strangeness of the interaction and encourage silliness? Do we make them difficult so greater dexterity and/or cooperation is necessary?

End of week 3, October 12

https://youtu.be/vph9uqeQumk

https://youtu.be/3U_AyOiCLdw

We got a late start so our iteration process is relatively non-existent. I need to work on being able to power through roadblocks and learn to combat feeling defeated.

The first video shows the start of our project. We are attempting to have a ball controlled by sound that a user can move towards magnets, represented by the panels on the sides of the screen, and have those magnets change polarity upon contact. The speed of the ball is determined by volume.

One of our challenges is working with vectors. If the vectors are too strong, a user will not be able to move the ball, but if they are too weak, there will not be any perceivable resistance when moving the ball.

The second video shows our first breakthrough towards our goal. We are able to control the direction the ball moves: low frequencies move the ball left and higher frequencies move it right. At this point we have not decided on any specific type of sound to use, but perhaps that does not matter in the end. One of the complications of working with frequency is that the data received from the microphone must be interpreted. We do not want to be so specific that control is hindered, but we need to try to filter out the common frequencies of background noise while also striking a balance between which low and high frequencies move the ball.
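
Below is a sketch of one way to interpret the microphone data. It assumes the input is an array of per-bin amplitudes (0 to 255) spread evenly from 0 Hz up to half the sample rate, which is our understanding of what p5.sound's FFT `analyze()` returns. The frequency band, split point and loudness floor are hypothetical tuning values. Anything outside the band or quieter than the floor is treated as background noise.

```javascript
// spectrum: per-bin amplitudes (0-255), bins spread evenly from 0 Hz to sampleRate / 2.
// Returns -1 (low tone), +1 (high tone) or 0 (nothing loud enough in the band).
function classifyPitch(spectrum, sampleRate, opts) {
  const binHz = sampleRate / 2 / spectrum.length;
  let best = -1;
  let bestAmp = opts.minAmplitude;
  for (let i = 0; i < spectrum.length; i++) {
    const hz = i * binHz;
    // Ignore frequencies outside the band we care about (e.g. low hum, hiss).
    if (hz < opts.minHz || hz > opts.maxHz) continue;
    if (spectrum[i] > bestAmp) {
      bestAmp = spectrum[i];
      best = i;
    }
  }
  if (best < 0) return 0;
  return best * binHz < opts.splitHz ? -1 : 1;
}

const demoOpts = { minHz: 80, maxHz: 2000, splitHz: 600, minAmplitude: 100 };
const demoSpectrum = new Array(1024).fill(0);
demoSpectrum[20] = 200; // about 431 Hz at a 44100 Hz sample rate
console.log(classifyPitch(demoSpectrum, 44100, demoOpts)); // -1
```

Widening the band or lowering `minAmplitude` makes control easier but lets more room noise through. That is the trade-off described above.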

I am not sure if we will get the polarity shift to work. While it is a tricky bit of coding for us, the real problem is our poor use of time. How can I get over “designer’s block” in the future? Perhaps one of the answers is to not force it. We spent days watching coding videos and trying to work out ideas in pseudocode--perhaps this overwhelmed us and kept our wheels spinning instead of inspiring us? I know my partner works better at night and I work better during the day. Maybe more individual time, or striking a specific balance, could have aided our efforts? Perhaps I should return to some of the brainstorming techniques introduced last year to help generate ideas. However, that does not address my coding weakness. Maybe in the summer I can find a coding course to help, but that does not help me in the short term.

I know several of our classmates are working with very specific sounds. Our project started off with tapping/drumming, but in testing it we saw it was easier to use with voice. We should have honed in on a more specific sound for our interaction. If we had managed to get it working faster, we could have tested a variety of audio inputs. There is something to be said for having a variety of possible inputs, making it more accessible to different users, but I do wish we had better served the objectives of the module by getting more specific.

While much of this entry seems to be me beating myself up, I am happy we were able to get something working so quickly in this last week. It shows that we can overcome obstacles and are probably slightly better at coding than we believe--these are things to be proud of--I just cannot help but reflect on my weaknesses, and I will continue to try to find methods for overcoming them.

Start of week 3, October 5

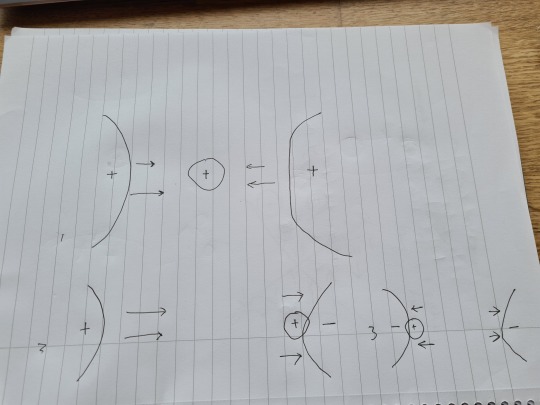

The final week is here, and with it my first image of the module (sorry about that).

Thanks to some great coaching by Clint, we have changed our idea into something more exciting and hopefully better suited to satisfying the project prompt.

Now we have a better grasp of how the code works and what sorts of interactions we should be designing.

Our new concept is a ball with a magnetic charge. It is placed between two magnets. A user must use sound, likely pitch, to move the ball in a direction (only left or right) and fight the magnetic pull or push of the magnets to get it to reach one of the magnets. Upon contact with the ball, the magnet will reverse polarity and the forces acting on the ball change. Skill will be involved in determining how exactly to fight the magnetic forces on the ball to place the ball in the desired location. The included sketch illustrates the concept.
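
The sketch below models the forces in this concept. It assumes a one-dimensional track, an inverse-square falloff (a modeling choice, not something the concept requires) and hypothetical magnet objects `{ x, strength, polarity }`. The user's sound becomes a signed `userPush` that is added to the magnetic forces. A magnet flips polarity only on the frame the ball first reaches it.

```javascript
// Hypothetical magnet: { x, strength, polarity }, polarity +1 attracts, -1 repels.
function magnetForce(ballX, magnet) {
  const dx = magnet.x - ballX;
  const dist = Math.max(Math.abs(dx), 1); // avoid dividing by ~0 at contact
  return (magnet.polarity * Math.sign(dx) * magnet.strength) / (dist * dist);
}

// Total force on the ball: the user's sound push plus every magnet.
function netForce(ballX, magnets, userPush) {
  return magnets.reduce((sum, m) => sum + magnetForce(ballX, m), userPush);
}

// Flip a magnet's polarity on the frame the ball first comes within reach of it.
function updatePolarity(prevX, ballX, magnets, contactRadius) {
  return magnets.map((m) => {
    const arrived = Math.abs(m.x - prevX) > contactRadius && Math.abs(m.x - ballX) <= contactRadius;
    return arrived ? { ...m, polarity: -m.polarity } : m;
  });
}

const demoMagnets = [
  { x: 0, strength: 100, polarity: 1 },
  { x: 400, strength: 100, polarity: 1 },
];
console.log(netForce(200, demoMagnets, 0)); // 0: equal pulls cancel at the center
```

With inverse-square forces, resistance is weak mid-track and rises sharply near a magnet. This is one way to get the "fight the pull" feel, but the exponent and strengths would need tuning through testing.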

The programming will still be an issue as we are not sure what to do. Hopefully there will be a breakthrough in the next three days. Our backup plan is to use the resources available to us in p5 if we struggle with the JavaScript.

One of my weaknesses when it comes to ideation is that my mind (that of a pop culture junkie) immediately thinks in terms of narrative and games. While I hold that games and stories present ample opportunity for meaningful interaction, I acknowledge that these categories are not the aim of everything we design, especially in this class. While there are times I am able to use these ideas as a springboard to launch me to more appropriate concepts, I also need to push myself away from this method of ideation. A healthy mix of my natural tendencies and challenging myself seems like it would be most fruitful.

End of week 2, October 5

Sorry this post is so late.

Coaching was helpful. Jens clarified the kinds of sounds we should be looking to work with. The sounds should be intentional: something we choose to do and control rather than something in the background.

I wish this clarification came to us sooner but now we can begin work in earnest.

For the sake of simplicity and having something done in time, we are thinking of making a ping pong ball on a screen that is controlled by volume of tapping. The idea is that the ball has its own gravity that wants to always push it down and each tap makes the ball jump up. Users can develop skill in attempting to keep the ball in the air or in trying to rhythmically bounce it.
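
Here is a minimal sketch of the ping pong mechanic. It assumes a volume level between 0 and 1 per frame and canvas coordinates where y grows downward. Gravity is added every frame. A tap counts only on the frame the volume first crosses the threshold, so one loud tap does not keep launching the ball. All names and numbers are hypothetical.

```javascript
// Hypothetical state: y position, y velocity, and whether the last frame was loud.
// Canvas y grows downward, so a jump is a negative velocity.
function stepPingPong(ball, volume, cfg) {
  let vy = ball.vy + cfg.gravity;
  const loud = volume > cfg.tapThreshold;
  // Count a tap only when the volume first crosses the threshold.
  if (loud && !ball.wasLoud) vy = -cfg.jumpPerVolume * volume;
  let y = ball.y + vy;
  // Stop at the floor instead of falling off the canvas.
  if (y > cfg.floorY) {
    y = cfg.floorY;
    vy = 0;
  }
  return { y, vy, wasLoud: loud };
}

const demoPing = { gravity: 0.5, tapThreshold: 0.1, jumpPerVolume: 40, floorY: 300 };
const afterTap = stepPingPong({ y: 300, vy: 0, wasLoud: false }, 0.5, demoPing);
console.log(afterTap.y); // 280
```

Scaling the jump by volume lets a louder tap bounce the ball higher. The skill of keeping it aloft comes from timing and loudness together.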

I am the first to admit it is not the most exciting idea, but it seems achievable in the time allotted. I regret being so stumped for so long and will strive to do better in the future.

The programming will be a challenge but with what Clint has given us and potentially some ping pong ball code from the internet we can piece something together.

This small bit of progress will serve as motivation to fuel the final push to have something ready for show and tell.

Laser cutter workshop, September 29

Again we were told about the functionality of a machine we will not be using. Rather than separating us into small groups to actually do something and learn, we were shown a PowerPoint.

We won’t be touching the machine, just sending in Illustrator files...we were told the most frustrating part of learning this process is learning Illustrator, but we won’t be taught that...so a day wasted. Everything with the workshop seems to be done in the least useful way possible.

In the end, I guess it does not really matter. I did not start this program to do arts and crafts. I have no interest in engineering or product design. I want to work with GUIs, I want to learn something that will help me not flip burgers my whole life. I want to be able to get a job and no longer have to struggle and live paycheck to paycheck.

Easy doesn’t do it, September 29

Djajadiningrat’s text argues that most interactive artifacts neglect the importance of movement. They deny us the opportunity to build physical skill. By moving beyond the button, the authors argue that we can create improved usability and aesthetically rewarding experiences that lessen the cognitive burden on users and are more efficient.

This text comes from a place of privilege and never mentions anyone who may have trouble interacting with their gimmicky motion devices. Smart devices are expensive; when we move them around there is a greater risk of breaking them, and not everyone will be able to replace them. It sounds like they want to design for the rich. I do not believe more complex physical actions relieve cognitive burden, because the directions needed for learning them will be more complex, which makes them even harder to learn for anyone with any sort of mental impairment. Smart devices have done wonders for those with disabilities--enabling them to participate in digital culture with less struggle. A smart phone can be used with one hand; the simple interface does not require a ton of dexterity or physical skill. This allows people with one hand, problems with complex manual manipulation, or arthritis to participate. People with physical limitations are not mentioned at all in the paper.

I do not disagree that creating interactions that require more skill to master could lead to more rewarding and rich experiences, but I also think such interactions would benefit the few and not the many.

While there is certainly a way for physical interactions to move beyond the button, it has not been done yet. Objects that require physicality and movement so far are just gimmicks. They are less reliable and accurate buttons. The Nintendo Wii used wiggling a remote as a button. A pedometer is just a clicker button. Motion sensors, waving, rotating a phone, these are all interactions that function as worse buttons. Someone will eventually come up with something that embraces physicality and surpasses a button, but for now this stuff is a gimmick and I am not interested in reinventing the wheel.

The way I think interaction designers can actually do something profound is through focusing on accessibility, which this article simply ignores.

Intelligence without representation, September 29

Dreyfus’s text is not exactly a bachelor level paper. The author explicitly states that the reader is assumed to be familiar with specific phenomenological texts, and he also brings other authors into dialogue with one another. Given we have not read these texts, we did not have the base knowledge required to fully understand what is written. Also, we are often given texts to read that deal with primary sources without ever reading the primary sources...

Dreyfus argues that representation is not always necessary for acquiring and utilizing skills. Not everything is processed conceptually and stored mentally, the body is conditioned to respond to events.

Intentional arc refers to how a body interprets a situation as affording certain actions, depending on its past experience. Getting a maximal grip refers to how a body reacts and adjusts to situations to achieve optimal results.

Honestly, I have never jived with phenomenology from my past education experience. I am no expert on the subject so I do not really feel qualified to provide a critique by any means. I am sure there has been much written on this so I am definitely ignorant, but I do not see how writers like Dreyfus claim the mind is absent from the execution of physical actions. He provided the example of a tennis swing. Sure, the learning of a technique might begin mentally but I do not fully believe the mind is not important in the mastery and implementation of physical skills. Our brains do so much without us needing to consciously concentrate on it that I just cannot think of it as only the body acting--I do not really buy this mind/body divide. Again, I am no expert so I am sure plenty of phenomenological texts have addressed these concerns...

Week 2 struggles, September 29

Apparently our ideas so far are not exactly what we should be working on. We need to work on something that has its own behavior and requires nuanced control to manipulate. The only example we were given was blowing up a balloon, however another group is doing that for their project. We also know another group is doing a flappy bird. So we started to brainstorm along those lines.

We thought of balancing scales, manipulating kinetic sand, navigating hilly terrain, controlling a drone, and several others. However, the problem is that all of these ideas are essentially the same as the balloon idea. There is an object that wants to stay in a certain state, and we use an element or elements of sound to fight against that natural state. The balloon is not tied, so it wants to be empty, and you have to fight against that to blow it up. It is all the same.

We are hoping coaching tomorrow will help us, as we have no idea what to do and only really have a week left. This project is completely demoralizing. Being shown a few examples really could have helped. It does not help that we are stuck trying to formulate an idea when the code we have to work with is far more complex than anything we have seen before. A classmate even asked a professional software developer to look at the code provided, and even he cannot make sense of it...

Right now we are thinking of getting a drone and having to use sound to keep it aloft. I am not sure we have time for that; if it is too much, we will work with a screen instead.

No photos or videos yet because there is nothing to show...

End of Week One, September 27

Getting some clarification as to what we are working on proved helpful. However, we still have no idea what the code is doing, so we are focusing on brainstorming. We want to set some goals to give us something concrete to focus our efforts on when we start coding.

Our brainstorming started with the idea of controlling a device with sound, for example controlling a drone by clapping. There are so many aspects of the sound of clapping we could potentially focus on: volume, speed, duration, rhythm, etc. However, not only would this idea be too complex for us, it would also reduce sound to a button, so we decided to look elsewhere.

Next, we wondered whether we could raise awareness of the consequences of whatever causes certain sounds. We considered visually representing pollution based on car sounds. However, we did not see potential for interaction here.

Our next avenue of brainstorming proved fruitful. We discussed raising awareness of sounds we may not be conscious of making. One example would be creating a visual representation of productivity through typing sounds. Typing is a rather impressive feat of dexterity that we do not typically consider, and it is also acoustically rich. We could consider cadence, volume, speed, etc. The idea is that as someone writes a paper, their typing sounds draw on a canvas, so when they finish writing they have a visual representation of the work they have done.
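We have not coded this yet, but a rough Python sketch helps show what cadence and volume could mean in practice. It assumes the microphone input has already been reduced to an amplitude envelope, one loudness value between 0 and 1 per frame. The threshold, the function names, and the choice to let loudness set line length and pauses turn the pen are all our own hypothetical choices, not anything from the code we were given.

```python
import math
from dataclasses import dataclass


@dataclass
class Stroke:
    """One line segment on the (hypothetical) canvas."""
    x0: float
    y0: float
    x1: float
    y1: float
    width: float


def detect_keystrokes(envelope, frame_rate, threshold=0.2):
    """Return (onset_time_seconds, peak_level) for each burst above threshold.

    Assumption: each keystroke shows up as one burst of loudness.
    """
    events = []
    start = None
    peak = 0.0
    for i, level in enumerate(envelope):
        if level >= threshold:
            if start is None:
                start, peak = i, level  # a new burst begins
            else:
                peak = max(peak, level)  # track the loudest frame
        elif start is not None:
            events.append((start / frame_rate, peak))
            start = None
    if start is not None:  # burst still going at the end
        events.append((start / frame_rate, peak))
    return events


def cadence(events):
    """Keystrokes per second between the first and last keystroke."""
    if len(events) < 2:
        return 0.0
    span = events[-1][0] - events[0][0]
    return (len(events) - 1) / span if span > 0 else 0.0


def keystrokes_to_strokes(events, origin=(0.0, 0.0)):
    """Turn each keystroke into a connected line segment.

    Hypothetical mapping: louder keystrokes draw longer, thicker lines,
    and longer pauses turn the pen further.
    """
    strokes = []
    x, y = origin
    angle = 0.0
    prev_t = None
    for t, peak in events:
        gap = 0.0 if prev_t is None else t - prev_t
        angle += gap * math.pi
        length = 10.0 * peak
        nx = x + length * math.cos(angle)
        ny = y + length * math.sin(angle)
        strokes.append(Stroke(x, y, nx, ny, width=1.0 + 4.0 * peak))
        x, y = nx, ny
        prev_t = t
    return strokes


if __name__ == "__main__":
    envelope = [0.0, 0.5, 0.1, 0.0, 0.8, 0.9, 0.0, 0.0, 0.3, 0.0]
    events = detect_keystrokes(envelope, frame_rate=10)
    print(events, cadence(events))
    for s in keystrokes_to_strokes(events):
        print(s)
```

Each keystroke becomes one segment that starts where the previous one ended, so a whole writing session would leave a single continuous trail whose shape reflects how loudly and how steadily someone typed.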

Another example along the same train of thought was sleeping sounds. People are rarely aware of the myriad noises they make while sleeping: movement, snoring, talking, teeth grinding, etc. By creating a visual representation of how someone sleeps, they could potentially gain insight into what they do while sleeping. However, this idea would be difficult to work on and tweak while we are both awake and at school.

We then thought about fidgeting. I know I often fidget while sitting in a classroom. I am not necessarily aware of the sounds I make when doing this, though these habits may annoy others. We could potentially measure the tapping of feet, tapping of pens, shuffling in a seat, etc. Perhaps the project could draw attention to annoying habits.

This line of thinking moved us away from concrete aspects of sound like volume or duration and got us thinking about the abstract values humans assign to sound. Sound can be annoying or soothing, and several of these unconscious sounds can be annoying to others. We then began discussing misophonia.

People with misophonia are negatively affected by sound. They can be tremendously annoyed, angered, or physically triggered by seemingly innocuous sounds. People who have not experienced this can find it hard to empathize with those who have misophonia.

Misophonia is the lens through which we will approach this project. We aim to target an annoying aspect of sound and use it to frustrate a user. Our goal is to simulate what someone with misophonia may feel when exposed to these sounds. We believe this lens gives us a lot of freedom with regard to input and output. It serves a purpose in that we hope to create empathy; however, it is also kind of a pointless experiment, as no one is looking to buy a product meant to hinder productivity and induce a negative mental state.

Whatever aspect of sound we choose as input (e.g., high-pitched sound) will create annoying effects for a user on a computer. Some ideas we had for these annoying effects are changing the font size on a website, changing the language of text, sending users to different webpages, or barraging them with alert boxes. We want to make it impossible to concentrate on whatever the user is trying to accomplish. We believe these are goals we can experiment with and potentially expand upon.

A potential place to start is to target high-pitched sound and have alert boxes pop up based on how long the sound lasts. This idea is simple enough for us to achieve with our limited coding expertise, and it can then be expanded upon as we experiment.
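As a starting point, here is a minimal Python sketch of the detection side, built on several assumptions of our own: pitch is estimated crudely by counting zero crossings in each frame of audio samples, anything at or above a hypothetical 2000 Hz counts as "high pitched", and each half second of continuous high-pitched sound earns one alert box. Zero crossings only approximate pitch for fairly pure tones; hiss and other noisy sounds also cross zero often, so they would trigger alerts too. The sketch only counts alerts; actually popping up the boxes would depend on whatever environment we end up building in.

```python
import math


def zero_crossing_pitch(frame, sample_rate):
    """Rough pitch estimate in Hz: a pure tone crosses zero twice per cycle."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    return crossings * sample_rate / (2 * len(frame))


def alerts_for_signal(samples, sample_rate, frame_size=1024,
                      pitch_threshold=2000.0, seconds_per_alert=0.5):
    """Return how many alert boxes to show for each run of high-pitched sound.

    pitch_threshold and seconds_per_alert are hypothetical tuning values.
    A trailing partial frame is ignored.
    """
    frame_seconds = frame_size / sample_rate
    alerts = []
    run = 0  # consecutive high-pitched frames
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        if zero_crossing_pitch(frame, sample_rate) >= pitch_threshold:
            run += 1
        elif run:
            # The high-pitched run just ended: one alert per time slice.
            alerts.append(int(run * frame_seconds / seconds_per_alert))
            run = 0
    if run:  # signal ended during a high-pitched run
        alerts.append(int(run * frame_seconds / seconds_per_alert))
    return alerts


def tone(freq, seconds, sample_rate):
    """Synthetic sine wave, standing in for microphone input."""
    n = int(seconds * sample_rate)
    return [math.sin(2 * math.pi * freq * i / sample_rate) for i in range(n)]


if __name__ == "__main__":
    sr = 16384  # chosen so 1024-sample frames are exactly 1/16 s
    signal = (tone(440, 0.5, sr) + tone(3000, 1.0, sr)
              + tone(440, 0.5, sr) + tone(3000, 0.5, sr))
    print(alerts_for_signal(signal, sr))
```

With that test signal (half a second at 440 Hz, one second at 3000 Hz, another half second at 440 Hz, then half a second at 3000 Hz), `alerts_for_signal` returns `[2, 1]`: two alerts for the one-second squeal and one for the half-second squeal. Making the alert count grow with duration keeps the idea simple while leaving room to swap in a better pitch detector later.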
