
Text

Preservation and Availability

Backups are not piracy. Piracy only preserves games that the pirate doesn't own a legal copy of. If you buy a broken disc (or cartridge), you still have the right to access and use its contents, even though there is no way to access them directly. In such cases, copying from other legal copies of the same game could be a legal option (but if I recall correctly, in the US the DMCA prohibits that). So, copying ROMs from other people could be legal as long as you have the right to have a backup of the game.

I remember reading somewhere decades ago that people had the right to have two copies per software license (one for regular usage and another for backup), and when one of the copies gets broken, the remaining copy becomes the "regular usage" copy, which you can back up again. I'm not sure if that was under Brazilian law, but in the US the DMCA can get in the way nowadays.

The bottom line is, the available amount of licenses is finite, but each license should always give its owner the right to have a working copy - be it an original copy, or a backup. That takes care of preservation.

Preservation and availability are different things. Preservation should be a right of the consumer, but availability should be a right of the copyright holder (developer, publisher, etc). If the copyright holders don't want to release more copies of the game (i.e. more licenses to own a copy), it's their decision. Preservation doesn't need piracy, and availability is not a consumer's right. Sure, piracy can help people get to know a lot more games, but it's so easy to legally have tons of games nowadays that the ones only available through piracy don't make much difference. Anyone can legally get more games than they could play in a lifetime, all of them being enjoyable games.


Text

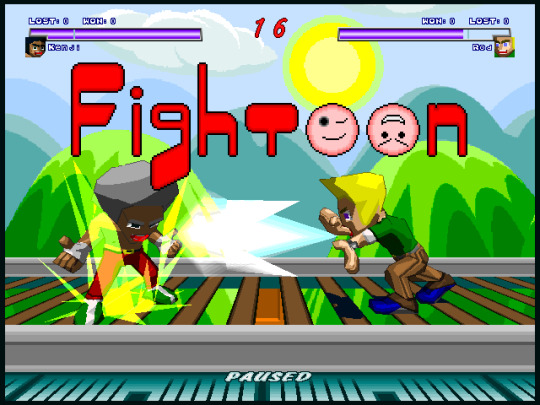

Fightoon beta release

Here are some old Fightoon betas. I forgot to include a license, but you can consider the data files as public domain. The engine is an outdated version of Makaqu. Only the Windows version is included.

Download


Text

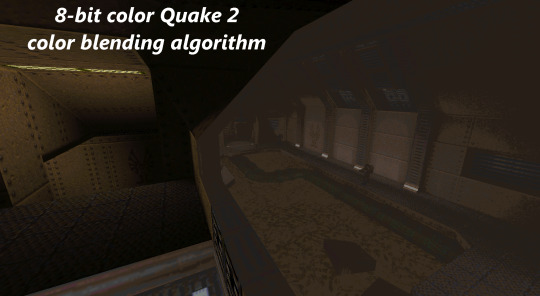

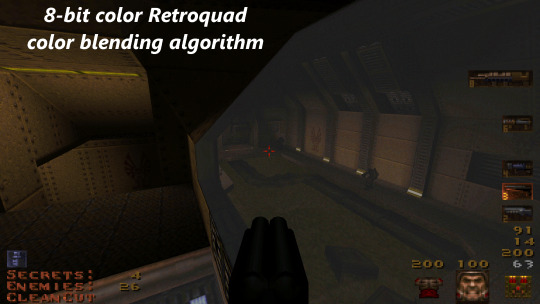

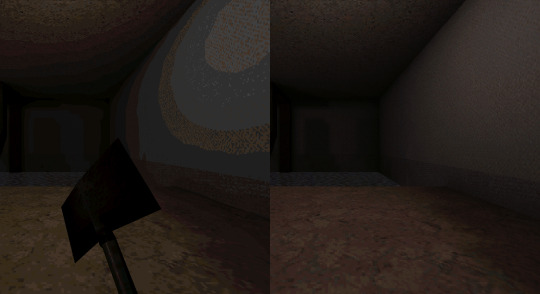

Graphical breakthroughs in the Retroquad engine


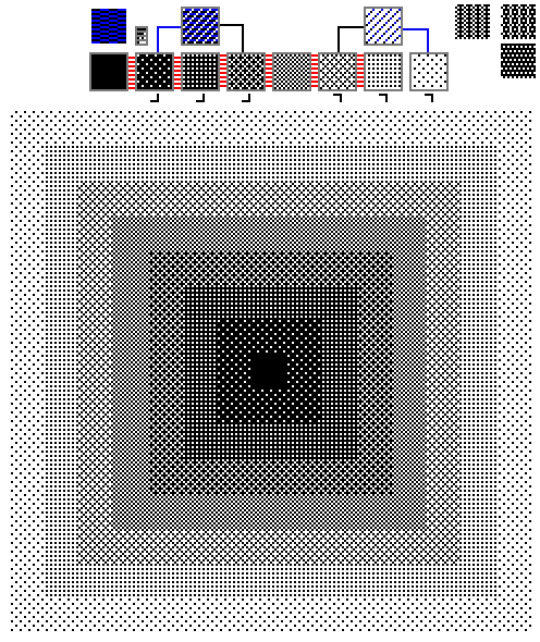

Luminance-Ordered Quadrivectorial Four-Way Quantization. AKA FWQ (Four-Way Quantization).

It's the key to the 8-bit indexed Quadricolor dithering used in the Retroquad engine. This article assumes that you already understand dithering algorithms such as Floyd-Steinberg and the dithering filter used in the UT99 software renderer.

The Retroquad engine

From the readme file:

Retroquad is a game engine with a 3D software renderer that employs many color algorithms independently created by myself to solve the limitations of 8-bit indexed color systems, pushing their quality above the known possibilities of their current state of art. It is intended to be an engine with all the advantages of 8-bit indexed color 3D software rendering, but without the drawbacks of classic 8-bit color renderers.

Among its innovations is a proprietary quantization algorithm that compiles images in a special format that can be dithered in realtime in 3D space, eliminating the problem of texture pixelization that happens in all quantization algorithms that perform dithering in 2D space. This creates 8-bit color textures that look incredibly more faithful to their truecolor counterparts and smoother at any distance, eliminating color restrictions for texture artists.

Other improvements already implemented include a smooth multi-step dithered and color-corrected lighting system that is combined with textures in realtime in a per-pixel fashion, a smooth dithered and color-corrected blending system used for multitextured glowmaps and translucent polygons, dithered interpolation of texture frame animations, dithered trilinear texturemapping, anisotropic texturemapping, a molten texturemapping technique that allows liquid textures to melt through all sides of any 3D object in a seamless fashion across all of its edges, alphamasked textures with semitransparency, scrolling textures, multiplanar scrolling skies with horizon fading, dithered "soft depth" (for smooth shorelines, smooth scenery edges and "soft particle" style sprites), and procedurally generated particles with precomputed dithering.

Planned features for the renderer are dithered colored lighting using a fast 8-bit indexed color pipeline, edge antialiasing using an extremely fast preprocessing technique, dithered fog, texture crossfading (for terrain "blending" and triplanar texturemapping), Unreal-style hybrid skyboxes with alphamasked backgrounds & dynamic skies, perspective correction on character models, and HD texture support on all other parts of the renderer (GUI, HUD, character models and 2D sprites).

Also, its rasterizer doesn't distinguish between my new 8-bit texture format and classic 8-bit textures — both kinds of textures are rendered through the same 8-bit data pipeline, making the code easier to maintain.

The whole engine is being coded in pure C with no usage of processor-specific extensions. Hardware requirements are exactly the same as in classic software-rendered WinQuake, except for more RAM. The lack of processor-specific extensions usage is purposeful, to ensure that this engine remains highly portable and as hardware-independent as possible. Another goal was to remove and replace as much of the old Quake code as possible, to make its code more lean, polished and easier to maintain, hopefully turning it into a completely original engine.

However, multi-core processing was also planned, to improve 1080p performance and hopefully allow for 4K rendering without sacrificing the core goals of the project.

When fully polished, Retroquad should be robust enough to become a viable platform for creating new commercial quality independent 3D games that can be very quickly ported to other operating systems, consoles, mobile, and all kinds of obscure hardware and independent hobbyist hardware projects where it would otherwise be technically unfeasible or financially expensive to port them (such as the multitude of Chinese handhelds whose usefulness is limited to emulating old gaming consoles and playing classic software-rendered retro games such as Duke Nukem 3D and Quake).

Another reason for this engine to remain as hardware-independent as possible is to ensure future-proofing for software preservation. Games created using this engine will be able to be easily emulated for many decades in the future, making sure that people will always be able to experience them properly, even if their source is inaccessible or lost.

And finally, the last main reason why Retroquad exists. Since the 90s, I've seen many game engines, graphics chips and software platforms come and go, every one of them becoming obsolete and incompatible over time. I was never able to afford keeping up with them, and my life was always so screwed up that any ambitious project such as a full commercial game would take many years to be completed. Software rendering is the only tech I could use that will surely not suffer from hardware & drivers deprecation, the only tech I can count on to keep developing something and take my time to battle with the depression, poverty, health issues and other roadblocks in life, knowing that when I come back to it, it will still be working. Retroquad was intended to be an engine for people struggling in life, an engine that even if life slows you down and you take decades to create a game, it will still work as intended.

The tech

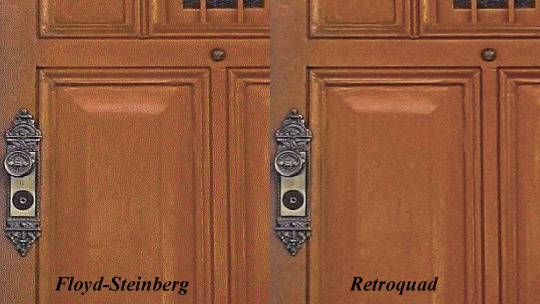

(Dithering comparison)

In FWQ, each vector is unidimensional, containing the quantization of one direction (forward, backward) across a single axis (horizontal, vertical). Thus, four way.

The "four way" aspect means each way is unrelated to each other (unlike, let's say, a diagonal vector, which is bidimensionally related to both axes of a horizontal plane). And because of this, each way can be handled independently. The left way is the only one that diffuses error to the left, the up way is the only one that diffuses error upwards, and so on.
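To make the independence of the four ways concrete, here is a minimal sketch in C. It is not Retroquad's code: it works on a grayscale image, uses a made-up 4-entry palette, and the names `fwq_quantize`, `diffuse_line` and `nearest_index` are hypothetical. Each way is a plain one-dimensional error diffusion pass that only pushes error in its own direction, and each texel ends up with one palette index per way.

```c
#include <stdlib.h>

#define WAYS 4  /* 0 = rightwards, 1 = leftwards, 2 = downwards, 3 = upwards */

/* Hypothetical gray palette; a real 8-bit engine would search an RGB palette. */
static const int palette[4] = { 0, 85, 170, 255 };

/* Index of the palette entry closest to value. */
static int nearest_index(int value)
{
    int best = 0, i;
    for (i = 1; i < 4; i++)
        if (abs(palette[i] - value) < abs(palette[best] - value))
            best = i;
    return best;
}

/* One-dimensional error diffusion along a single line of texels.
   The error of each texel is carried only to the next texel of this way. */
static void diffuse_line(const unsigned char *src, unsigned char *dst,
                         int start, int step, int count, int way)
{
    int error = 0, pos = start, i;
    for (i = 0; i < count; i++, pos += step) {
        int wanted = src[pos] + error;
        int index = nearest_index(wanted);
        dst[pos * WAYS + way] = (unsigned char)index;
        error = wanted - palette[index];
    }
}

/* src: w*h gray texels. dst: w*h*WAYS indexes, four per texel. */
void fwq_quantize(const unsigned char *src, unsigned char *dst, int w, int h)
{
    int x, y;
    for (y = 0; y < h; y++) {
        diffuse_line(src, dst, y * w, 1, w, 0);             /* rightwards */
        diffuse_line(src, dst, y * w + w - 1, -1, w, 1);    /* leftwards  */
    }
    for (x = 0; x < w; x++) {
        diffuse_line(src, dst, x, w, h, 2);                 /* downwards  */
        diffuse_line(src, dst, (h - 1) * w + x, -w, h, 3);  /* upwards    */
    }
}
```

Because no pass reads what another pass wrote, the four ways could run in any order, which is the property the paragraph above relies on.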

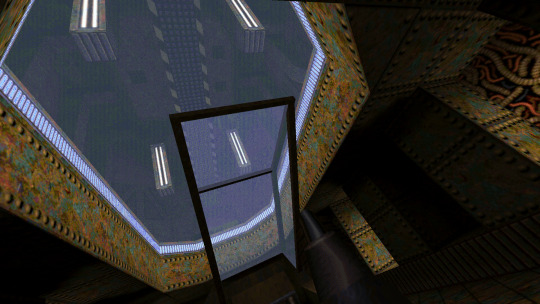

(FWQ cubemap skybox)

The unidimensional aspect of each quantization vector also allows the error diffusion to spread seamlessly across cubemaps. This is a unique feature that can't be achieved with any other error diffusion technique because the quantization errors of a cubemap must be spread across 3 axes, instead of only 2. A cubemap has 3 axes and each cubemap axis has 2 quantization axes, but each cubemap axis shares only 1 quantization axis with each other cubemap axis. The final result is that the error diffusion in each side of the cubemap is an intertwined mix of the error diffusion of its four adjacent sides. For example, the texture of the back side of the cubemap will spread its horizontal error diffusion to the textures of the left side and of the right side, while its vertical error diffusion will be spread to the textures of the bottom side and of the up side, essentially "giftwrapping" the error diffusion across the whole cubemap.

While all this works well for generating image data that can be dithered per-pixel in a tridimensional projection, there's another dimension where it didn't look good: Time.

During animations (camera movement, animated texture frames, texture scrolling), the resulting image would look too noisy. This is because in error diffusion there's no way to control which texel will look brighter and which texel will look darker, unlike in ordered dithering. However, since four-way quantization outputs 4 color indexes for each texel, we can reorder the indexes of each texel by luminance, which will smooth out the brightness variance per pixel and eliminate most of the noise.
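The reordering step can be sketched as a tiny sort over the four indexes of a texel. This is only an illustration: the Rec. 601 luma weights and the names `rgb_t`, `luminance` and `order_by_luminance` are assumptions, since the text does not say how luminance is measured.

```c
typedef struct { unsigned char r, g, b; } rgb_t;

/* Rec. 601 luma weights scaled by 1000 (an assumed choice of metric). */
static int luminance(rgb_t c)
{
    return 299 * c.r + 587 * c.g + 114 * c.b;
}

/* Sorts the four subcolor indexes of one texel from darkest to brightest,
   so that a given slot always holds the same luminance rank. */
void order_by_luminance(unsigned char idx[4], const rgb_t *pal)
{
    int i, j;
    for (i = 1; i < 4; i++) {            /* insertion sort, 4 elements */
        unsigned char key = idx[i];
        int key_lum = luminance(pal[key]);
        for (j = i - 1; j >= 0 && luminance(pal[idx[j]]) > key_lum; j--)
            idx[j + 1] = idx[j];
        idx[j + 1] = key;
    }
}
```

With every texel sorted the same way, a fixed screen position always picks the same brightness rank, which is what makes the result behave more like ordered dithering during motion.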

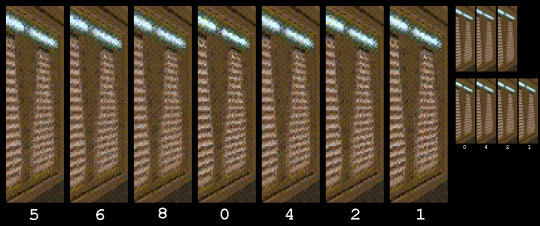

All that was described so far works well for textures that are close to the camera. It works very well when each texel is rendered to several pixels. However, it doesn't look so good for textures that are far away from the camera (with some texels being skipped from a pixel to another) because the error diffusion in them will also be skipped, resulting in an image that's not smooth enough.

Due to this, I've implemented an extra "error splitting" step in the error diffusion, to make the error diffusion be diluted to both the next neighbor and the farthest next diagonal neighbor on each way, which makes the four-way quantization spread the error correction evenly across all neighboring texels, effectively making the four-way quantization use an eight-way error diffusion. This looks good on submips, but in some cases it doesn't look so good on mipmap zero, because it reduces the strength of the error diffusion. Also, because this error splitting technique violates the unrelational aspect of the four ways, it can't be used on cubemaps.

All of this four-way quantization data is stored in a special 8-bit indexed color format called "Quadricolor". In the Quadricolor format, the color of each texel is composed of 4 different subcolors, which means that each texel has 4 different subtexels. This is fundamentally different from direct-color formats such as 24-bit RGB, because while 24-bit RGB colors are divided into 3 different channel values (one for the red spectrum, one for the green spectrum and one for the blue spectrum), quadricolors are divided into 4 different subcolor indexes, with each subcolor index addressing a predefined color value (which in turn can be composed of 3 channel values, for a total of 12 channel values per Quadricolor). While smooth colors can be achieved in direct-color formats by simply modifying the value of each channel directly, the way to smooth out colors in the Quadricolor format is to find 4 subcolors whose indexes point to palette entries with channel values that are balanced against the channel values of the palette entries of each other subcolor's index in a way that our brain can combine all of them to interpret the intended color.

(Floyd-Steinberg dithered quantization with positional dithering on the left, FWQ with positional dithering on the right)

The nature of the Quadricolor format also means that to faithfully display a single quadricolor texel, at least 4 screen pixels are needed, one for each subcolor. This "one subcolor per pixel" aspect of the Quadricolor format allows the rendering engine to dither its subcolors into tileable screenspace patterns that allow each texel to be infinitely expanded without losing its intended color definition (unlike texelspace dithering techniques, whose intended colors lose definition by becoming too far apart when the texels are expanded).

(Decontrast filter iterations)

However, when a Quadricolor texture is made of neighboring texels with quadricolors whose intended color spectrum is similar, only 1 pixel is needed for displaying each texel, with the on-screen Quadricolor being a cluster of 4 pixels from 4 different texels, with 1 different subcolor from each texel's quadricolor. This means that a Quadricolor texture can be displayed in native resolution with no significant loss of fidelity. To improve the balance between the subcolors of the quadricolors of neighboring texels and ensure better color smoothness, a special "decontrast" filter was created in the texture compiler to proportionally reduce the contrast between the neighboring colors of the 24-bit RGB source image.

And finally, the Quadricolor format being composed of 4 color indexes also means that the rendering pipeline made for it is fully compatible with regular 8-bit indexed color textures, by simply reading all subcolor indexes from the same offset within the texel.
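A rough sketch of how one fetch routine could serve both kinds of texture is shown below. The 2x2 screenspace pattern, the `texture_t` layout and its field names are assumptions made for illustration, not the engine's actual data structures.

```c
/* Hypothetical texture descriptor.
   Quadricolor: stride = 4, sub_mask = 3 (four indexes per texel).
   Classic 8-bit: stride = 1, sub_mask = 0 (every subcolor reads offset 0). */
typedef struct {
    const unsigned char *data;
    int stride;
    int sub_mask;
} texture_t;

/* Returns the palette index to draw for a texel at screen position (sx, sy).
   The subcolor is chosen by screen position, not by texel position, so the
   2x2 pattern tiles across the screen however large the texel is drawn. */
unsigned char fetch_index(const texture_t *tex, int texel, int sx, int sy)
{
    int sub = ((sy & 1) << 1) | (sx & 1);   /* 0..3 in a 2x2 screen tile */
    return tex->data[texel * tex->stride + (sub & tex->sub_mask)];
}
```

The inner loop never branches on the texture type; a classic texture simply collapses all four subcolors onto the same byte.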

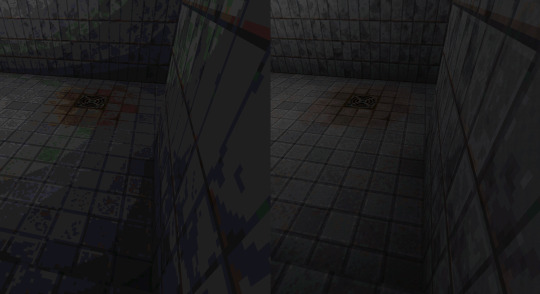

(FWQ 8-bit Quadricolor texture with semitransparent alphamasking; positional dithering disabled on the left side, and enabled on the right side)

Another advantage of the Quadricolor format is that it allows for creating semitransparent colormasked colors without an alpha channel. Due to being fragmented into 4 subcolor indexes, each Quadricolor can be fully opaque, fully transparent, or have 3 different stippled semitransparency levels (25%, 50%, 75%) depending on how many of its subcolors are transparent (0, 1, 2, 3 or 4).
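The relation between transparent subcolors and opacity is simple enough to write down directly. In this sketch the transparent palette index is assumed to be 255 (the classic Quake convention), and the function name is hypothetical.

```c
#define TRANSPARENT_INDEX 255   /* assumed color-mask index */

/* Opacity of one quadricolor in percent: 0, 25, 50, 75 or 100,
   depending on how many of its four subcolors are opaque. */
int quadricolor_opacity_percent(const unsigned char sub[4])
{
    int i, opaque = 0;
    for (i = 0; i < 4; i++)
        if (sub[i] != TRANSPARENT_INDEX)
            opaque++;
    return opaque * 25;
}
```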

(Semitransparent Quadricolor alphamasking)

(Filtered hardware alphamasking comparison)

This gives smoother borders to colormasked textures, which is not possible in other color formats without an alpha channel.

Textures need bidimensional RGB error diffusion between at least 4 neighboring colors, because they need all of their colors to be rendered at once (parallel output). But in color maps (for shading, blending, etc.), only a single intensity level is displayed at once (serial output), so they don't need error diffusion and can perform the error correction on the current color instead. The act of performing color correction on the current color is a technique that I call "error mirroring". While error diffusion gets the error value of the currently resulting color output and applies it to the next desired color input, error mirroring gets the error value of the currently resulting color output and applies it back to the currently desired color input, resulting in two subcolors of the same color with their error mirrored between them.
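Here is a minimal one-channel sketch of error mirroring as described above. The gray palette and the names `shade_palette`, `nearest_shade` and `error_mirror` are hypothetical, and a real color map would do this per RGB channel against the full palette.

```c
#include <stdlib.h>

/* Hypothetical gray palette standing in for the real 256-color palette. */
static const int shade_palette[4] = { 0, 85, 170, 255 };

static int nearest_shade(int value)
{
    int best = 0, i;
    for (i = 1; i < 4; i++)
        if (abs(shade_palette[i] - value) < abs(shade_palette[best] - value))
            best = i;
    return best;
}

/* Produces two subcolors for one wanted intensity. The quantization error
   of the first subcolor is applied back to the same wanted value, so the
   second subcolor errs in the opposite direction. */
void error_mirror(int wanted, int out[2])
{
    int error;
    out[0] = nearest_shade(wanted);
    error = wanted - shade_palette[out[0]];
    out[1] = nearest_shade(wanted + error);
}
```

For a wanted value of 128 this picks 170 and then 85, whose average (127.5) lands much closer to the target than either entry alone.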

(Old blending)

(New blending)

(Multi-layered blending)

For semitransparencies (including additive glowmaps), the Quadricolor format is used to display not just color correction, but also alpha correction. Color maps are a combination of only two RGB colors across a single serial alpha axis, so they only need two subcolors for error-mirrored RGB color correction, leaving the other two subcolors for alpha correction.

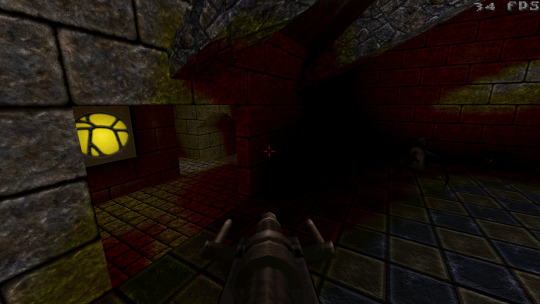

(Lighting comparison)

Lighting in Retroquad uses lightmaps, but it doesn't combine the lightmaps with the textures into surface image caches. Surface caches in Retroquad contain only lighting data, which is combined with the textures per pixel in realtime, and because of this they're called "surface lighting caches." This allows the surface cache UV coordinates to be dithered using different offsets, which makes each lixel (lighting pixel) spread across multiple texels (texture pixels), smoothing out the final image better.

(HD texturing comparison)

It also allows the engine to support several more features such as HD textures and dynamically mapped texturing effects (scrolling textures, turbulence mapping, melt mapping, etc).

(Lighting comparison)

In the lighting, the Quadricolor format stores indexes of subcolors computed with color correction and brightness correction. However, these are only used in the color shading map. The surface lighting cache stores only the lighting level of each lixel, with 8-bit precision.

(Lighting comparison)

The lighting is dithered in three steps: surface lighting cache, lixel coordinates and color shading map. The lighting levels are dithered with 16-bit precision during the conversion from the lightmap to the 8-bit surface lighting cache, the per-pixel coordinates of the lixels in the surface lighting cache are dithered during the on-screen rasterization, and finally the lixel values are dithered with 2-bit precision to address the 6-bit levels of the color shading map.
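The last of the three steps, going from an 8-bit lixel to a 6-bit shading level, can be illustrated as follows. The 2x2 Bayer matrix is a stand-in chosen for this sketch; the text does not say which pattern the engine uses, and `shade_level` is a hypothetical name.

```c
/* Standard 2x2 Bayer thresholds, values 0..3 (2 bits). Assumed pattern. */
static const unsigned char bayer2[2][2] = { { 0, 2 }, { 3, 1 } };

/* Converts an 8-bit lighting value into a 6-bit shading map level.
   The two bits that would be lost are spent as a screenspace dither,
   so neighboring pixels alternate between the two nearest levels. */
int shade_level(unsigned char lixel, int sx, int sy)
{
    int level = (lixel + bayer2[sy & 1][sx & 1]) >> 2;
    return level > 63 ? 63 : level;   /* clamp to the 64 available levels */
}
```

A lixel of 130 yields levels 32, 33, 33 and 32 over a 2x2 pixel block, averaging 32.5, which is exactly 130 / 4.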

(Tinted light shading map experiment)

Colored lighting can be implemented through an additional 8-bit surface tinting cache map containing tinting information defined by 4 bits for 16 hues and 4 bits for 16 saturation levels, applied before the surface lighting cache. Lixel coordinates would be the same for both the surface lighting cache and for the surface tinting cache, so there would be no extra texturemapping cost for colored lighting. The main challenges would be to keep the banding between the 4-bit levels to a minimum, and to compute color correction, hue correction *and* saturation correction with an acceptable level of quality using only 4 subcolors for each quadricolor.
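Since this feature is only planned, the following is purely a sketch of what one byte of such a tinting cache could look like. Putting the hue in the high nibble is an arbitrary assumption, as are all three function names.

```c
/* Packs a 4-bit hue (0..15) and a 4-bit saturation (0..15) into one byte.
   Hue in the high nibble is an assumed layout. */
unsigned char tint_pack(int hue, int saturation)
{
    return (unsigned char)(((hue & 15) << 4) | (saturation & 15));
}

int tint_hue(unsigned char tint)
{
    return tint >> 4;
}

int tint_saturation(unsigned char tint)
{
    return tint & 15;
}
```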

Color swapping and palette animations are possible to implement in Retroquad by applying a filtered palette during the quantization to restrict the source colors to the scope of the desired color range, and then using a color shading map with those color indexes swapped to the desired ones.

(Particles)

In Retroquad, a "particle" is a single colored point of a 3D visual effect image (smoke, stars, pellets, etc), just like a pixel is a single point of a 2D image. A single point doesn't have an individual meaning because each particle is not an image in itself, which is why particles should not be textured. Their visual meaning is given by their behavior and group structure, also known as "particle effect".

Despite particles not being textured, each particle is a point in 3D space, and therefore needs to represent depth by being drawn bigger when closer to the camera. The most appropriate shape for that is a circle, which is only affected by 3D camera position, not by 3D camera angles. Also, because each particle is just a single point in 3D space, and to keep its round shape consistent in any situation, its depth check is performed only once at its center pixel, which means that either the whole particle is visible, or it's completely occluded.

To be consistent with the smoothness of the dithering used in models and sprites, the particles must also be dithered. Also, one of my goals was to make sure the dithering didn't affect the perfectly symmetrical shape of the particles.

Since particles are not textured and have only a single color each, a different dithering optimization was implemented.

In a 4x4 opacity dithering matrix, each line has 4 possible levels of opacity (100%, 75%, 50% and 25% opaque), according to how many pixels are covered. Despite each line having 4 units of boolean values, the total amount of combinations is only 4 (instead of 16), because in the 50% opacity level the opaque pixels should always be apart, and all other possible variations of a same level of opacity can be represented by offsetting its index. By alternating between 2 different dithering opacity lines, 4x4 dithering patterns for 8 opacity levels can be achieved.
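One possible reading of this scheme is sketched below. The bit layouts, the per-row offset and the use of an empty line for the lowest odd level are all assumptions made for the example; only the idea of a few base line patterns, offsetting, and alternating two lines comes from the text.

```c
/* Base line patterns, bit i set = pixel i of a 4-pixel line is drawn.
   Entry 0 (empty line) is an assumption needed for the 1/8 level. */
static const unsigned char line_bits[5] = { 0x0, 0x1, 0x5, 0x7, 0xF };

/* Rotates a base pattern by offset pixels; every other arrangement of the
   same coverage is reached this way instead of being stored. */
unsigned char line_pattern(int covered, int offset)
{
    unsigned char bits = line_bits[covered];
    offset &= 3;
    return (unsigned char)(((bits << offset) | (bits >> (4 - offset))) & 0xF);
}

/* level: opacity in eighths, 1..8. Even rows use the denser of two line
   patterns and odd rows the sparser one, giving 8 levels from 4x4 tiles. */
int dither_pixel_on(int level, int x, int y)
{
    int covered = (y & 1) ? level / 2 : (level + 1) / 2;
    return (line_pattern(covered, y) >> (x & 3)) & 1;
}
```

Each 4x4 tile ends up with exactly `2 * level` of its 16 pixels drawn, and at the 50% level the per-row offset produces a checkerboard, keeping the opaque pixels apart.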

To define the area of each opacity level within the screenspace area of the particle, the shape of the particles was segmented into several halos, and each halo has an opacity level assigned to it. Afterwards, each line of the whole particle is scanned, and all line segments with identical line patterns between different halos are grouped into a maximum of 2 different line segments per pattern. This inter-halo pattern aggregation heavily optimizes the amount of iterations needed to draw the whole particle.

And finally, all line segments of each line pattern are rasterized by individual functions featuring Duff's device loops with some lines skipped to create holes according to the desired pattern. This makes dithered particles extremely fast to draw, faster than non-dithered particles, because instead of performing per-pixel checks, it just executes less code. This, combined with the single-pixel depth check and all of the procedural steps being precomputed, allows the engine to render thousands of particles with no significant impact on performance.
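As an illustration of the kind of loop described above, here is a Duff's device span writer for a 50% pattern. It is a sketch under assumptions, not the engine's function: the name `draw_span_50` is hypothetical, and the holes are produced by stepping two pixels at a time rather than by whatever layout Retroquad actually uses.

```c
/* Fills every other pixel of a span (pixels 0, 2, 4, ...) with color.
   The holes are never visited, so there is no per-pixel pattern test;
   Duff's device unrolls the writes four at a time. */
void draw_span_50(unsigned char *dest, int count, unsigned char color)
{
    int writes = (count + 1) / 2;   /* number of pixels actually drawn */
    int n, i = 0;

    if (writes <= 0)
        return;
    n = (writes + 3) / 4;           /* passes through the unrolled body */
    switch (writes & 3) {
    case 0: do { dest[i] = color; i += 2;
    case 3:      dest[i] = color; i += 2;
    case 2:      dest[i] = color; i += 2;
    case 1:      dest[i] = color; i += 2;
            } while (--n > 0);
    }
}
```

A separate function per line pattern, as the paragraph describes, would differ only in which of the unrolled statements write a pixel.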

Final words, and engine release

There are other graphical advancements in Retroquad such as soft depth, melt mapping and multiplanar scrolling skydome with horizon fading. Also, there are many more in-depth details that could be written about what's been revealed in this article so far. However, I'm not in a good condition to write about everything right now.

Retroquad is still far from what I envisioned. Originally, the plan was to release it once at least the BSP renderer had been completely replaced and the bugs were ironed out. It's saddening to know that my vision for it won't be fulfilled, but I don't want it to be completely lost either, so I'm releasing Retroquad as is.

Since last year I lost almost everything in my life. And almost lost my family. We are going to lose our house because I need to sell it to put food on the table, and the water will be cut soon because I've been wrongly blamed in a lawsuit. The future is grim and dedicating spare time to continue doing graphics research & development is not possible anymore.

The Retroquad 0.1.0 release is here: download.

I pride myself in having developed my tech without external help. But that doesn't matter anymore. I need to help my family, and have no means to do so.

If you're grateful for this release, or simply willing to help, I have created a donation campaign here.


Text

Retroquad Release Possibilities

Retroquad has been put on hold. Indefinitely.

My last attempt at funding this project didn't come to fruition. A couple years ago it wasn't needed, but since then a series of disastrous events put me deeply in debt, and I can't focus on hobbies anymore. A few months from now I may lose almost everything in my life. There's simply no solution, nothing I can do but despair. Fuck this.

A while back I also tried getting some feedback about Retroquad from people I deeply respect, and a number of key people didn't give me any feedback at all. Nothing.

It's clear that Retroquad simply doesn't matter. Not enough for everyone, and not at all for a number of people whose opinions I value. There's no point in releasing it.

If there's no point in releasing it, it isn't worth the effort to keep working on it while my life is going down the drain. It's just a waste of time.

I'm not even sure if I'll ever try developing any game again. Maybe I'll just quit any kind of computer programming altogether. The last time I tried developing a game I wasted money hiring people to work on a model I can't even use because it had to be segmented and it isn't, so I gave up on the whole project in disgust.

I don't know what will happen with my life, so don't bother. It doesn't matter anymore.


Text

Not a game developer

I am not a game developer. I am a tech developer.

I am not even an engine developer. An engine developer can simply collect off-the-shelf software components and assemble them into a game engine. For an engine developer, creating tech isn't a requirement; assembling the tech is. For engine developers, creativity isn't in how the tech is created, but in how it's employed.

There are, of course, different layers of technology. For a game developer, the game engine "is" the tech, because the engine is what the developer is in direct contact with. For an engine developer, the tech is the set of software libraries that he works with, including third-party libraries and operating system libraries. The layers can go all the way to individual transistors and electronic components, but the point is, I'm making a distinction between people who create new products out of preconceived ideas, and people who create the new ideas themselves.

A game developer can create new environments and stories to express the game's universe, new gameplay mechanics for the players to improve their problem-solving skills, or new ways for players to socially interact.

An engine developer creates the high-level system that the game runs on.

A tech developer creates software components that can be assembled into an engine.

Tech does nothing on its own. An engine can do some things on its own, but it's not playable on its own. A game, created on top of an engine assembled out of software components, can be fully enjoyed.

I've given up on my plans of creating a game (any game, this time) because I don't really care about expressing anything, I'm not the kind of fun person that creates exciting challenges, and I dread social interactions. So, I'm not a game developer.

I'm also not a product-oriented guy, so I don't focus specifically on getting the game to run. Which is why I'm not an engine developer.

I care about the inner software subsystems. And I care about doing what no one else was able to do before. I don't focus on what it will be used for, but on what it is able to do, and on how well it does that. The more self-reliant, the better.

I care more about the scientific truths behind the algorithms than about the product-oriented motivations behind them. Because, in my own work, I prize characteristics such as harmony and coherence more than I care about artistic intent.


Text

3D Character Animator Needed

I've bought a human character model that needs some tweaking and a lot of animations.

The model has skeletal animation, but it isn't segmented. I need it to be segmented at the waist like the Quake III Arena models.

Its source formats are Blender, Max, Maya, MilkShape3D, and FBX. Nearly 1750 triangles, already textured.

Some of its stock animations may be reused, if appropriate. I can also provide the animations I've created using the standard player model of Quake 1 as reference for this new model's animations.

If interested, mail me. My gmail address is my nickname.

Payment can be done through either PayPal or Bitcoin. Give me a price, and we'll negotiate.

OUTPUT PLAYER MODEL SPECS

MD3 format, without tags.

Compatible with the QuakeSpasm-Spiked engine.

2 segments (lower body, upper body), split at the waist, Q3A style.

Same width as the Q1 player model.

Animated at 10 fps by default.

If needed, make specific animations at 15, 16 or 20 fps.

ANIMATIONS

(+ = compatible with lower body walking) (* = starts in midair)

On land:

Waiting (getting ready) (may also be used for spawning from teleport)

Standing

Walking

Running

+ Scared (panicking)

+ Pain (2 different animations, one from the left and one from the right)

Death (one falling from the front, and another from the back)

Timeout (giving up)

Victory

+ Punch

Kick

+ Grab enemy

Spin around & throw enemy

Grab item

+ Hold item

Throw item

On air:

Jumping

* Idle looping animation while falling from normal jump (example)

* Ledge grabbing & holding

Ledge climbing

Rolling jump (video example at 1:38)

* Downwards flying kick (example)

* Upwards flying horizontal spin kick (example)

* Pain

* Death

Landing (player bends his knees for a moment)

Landing pain (player bends his knees and puts his hands on the ground, and then gets up)

Landing fall (player falls fully flat on the ground, and then gets up)

Landing slide (player stands upright to regain equilibrium, and then bends his body to slide down the slope)

In water:

* Dive (from apex of normal jump)

Float (in vertical body position)

Transition between floating and swimming

Swim (in horizontal body position)

Swimming attack (headbutt)

Swimming pain

Swimming death (drowning)


Text

Texture usability improvements

Compiled textures now have the CRC of the original texture's mipmap zero (unscaled image) appended to the filename. This solves most external texture mismatches. By checking the original unscaled image only, texture compatibility is retained in case the original textures' mipmaps are regenerated (there are a few texture WADs with bad mipmaps out there). Saving & loading recompiled internal textures is implemented. Additive blended lumas look significantly better on mipmaps than masked lumas, so I'm also implementing an option (enabled by default) to convert the internal textures' masked lumas into blended lumas when recompiling. Internal textures without masked lumas can use all colors of the palette for lighting now (no fullbright limitations). Lit external BSP models can go fully dark now. Previously, there was a minimum amount of lighting set to prevent ammo boxes from going fully black in pitch black areas, but that didn't look good when using textures with lumas. External textures and compiled textures can be disabled in the console now. There's also an option to force the recompiling of textures, which is useful when external TGA textures are modified.
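The filename scheme could look something like the sketch below. Both the CRC variant (CRC-16/CCITT with initial value 0xFFFF) and the `name_crc` filename layout are assumptions for illustration; the post does not specify either, and the function names are hypothetical.

```c
#include <stddef.h>
#include <stdio.h>

/* Bitwise CRC-16/CCITT (polynomial 0x1021, initial value 0xFFFF). */
unsigned short crc16_ccitt(const unsigned char *data, size_t len)
{
    unsigned short crc = 0xFFFF;
    size_t i;
    int bit;
    for (i = 0; i < len; i++) {
        crc ^= (unsigned short)(data[i] << 8);
        for (bit = 0; bit < 8; bit++)
            crc = (crc & 0x8000) ? (unsigned short)((crc << 1) ^ 0x1021)
                                 : (unsigned short)(crc << 1);
    }
    return crc;
}

/* Builds "<name>_<crc>" from the unscaled image only (mipmap zero), so
   regenerated submips do not change the compiled texture's filename. */
void compiled_texture_name(char *out, size_t size, const char *name,
                           const unsigned char *mip0, size_t mip0_len)
{
    snprintf(out, size, "%s_%04x", name,
             (unsigned)crc16_ccitt(mip0, mip0_len));
}
```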


Text

What’s Next

Off the top of my head, here’s a short to-do list for 2017. I may not have time to achieve any of these this year.

For BSP models & maps:

External HD texture support for skies;

External HD texture support for liquids — should require turbulence mipmapping, and may not support additive luma;

Implement the last major improvement for texture quality that's planned;

Finish the external texture filepath algorithm;

Save & load mipmaps recompiled from 8-bit textures (depends on the above);

Save & load recompiled skyboxes;

Further speed optimizations, especially on lightmaps;

Colored lighting — lots of work to do on this, but it shouldn't be too difficult now;

Fog — not a priority, but it should happen.

There are also some really difficult tasks I want to do in the BSP renderer, which require a much deeper understanding of 3D math and BSP algorithms, which is why I'm not including them in the list above.


Text

32-bit mipmaps, 8-bit alpha and external glow textures

Per-pixel quantization now supports rendering of 8-bit alpha channels. This has been implemented because I've figured out a method with zero impact on performance, piggybacking stippled patterns into the per-pixel quantization data. The tradeoff is that it only supports three levels of transparency, but at least it helps a bit to smooth out the edges of complex alphamasked textures, making those edges look less jaggy and more grainy.
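The reduction from an 8-bit alpha value to a stipple level can be sketched like this. The rounding rule and the function name are assumptions; the text only states that three intermediate transparency levels are available.

```c
/* Maps an 8-bit alpha value to how many of a texel's four per-pixel
   quantization slots should be transparent: 0 (opaque) to 4 (invisible).
   The three values in between are the stippled transparency levels. */
int transparent_slots_for_alpha(unsigned char alpha)
{
    int opaque_slots = (alpha * 4 + 127) / 255;   /* rounded to nearest */
    return 4 - opaque_slots;
}
```

Because the result is baked into the quantization data ahead of time, the rasterizer has nothing extra to compute per pixel, which matches the "zero impact on performance" remark above.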

Screenshots:

8-bit alpha channel with no texture coordinate filtering

8-bit alpha channel

And support for external luma/glow textures with 8-bit alpha channels is also in. This also allowed me to generate glow maps from the fullbright texels of the internal 8-bit color textures, use them to generate 32-bit color mipmaps from the internal textures, and compile those for per-pixel quantization.
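As an illustration of the glow map step, here is a minimal sketch that marks the fullbright texels of an 8-bit texture. It relies on the standard Quake palette layout, where indices 224 to 255 are fullbright. The function name and the choice of an 8-bit mask as output are hypothetical, and textures that use index 255 for transparency would need extra handling.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* First fullbright index in the standard Quake palette. */
#define FULLBRIGHT_FIRST 224

/* Writes 255 to glow_alpha for every fullbright texel and 0 for the
   rest. Returns the number of fullbright texels found, so callers can
   skip textures that have no glow at all. */
static size_t build_glow_mask(const uint8_t *texels, size_t count,
                              uint8_t *glow_alpha)
{
    size_t fullbright_count = 0;

    assert(texels != NULL && glow_alpha != NULL);
    for (size_t i = 0; i < count; i++) {
        int is_fullbright = texels[i] >= FULLBRIGHT_FIRST;
        glow_alpha[i] = is_fullbright ? 255 : 0;
        fullbright_count += (size_t)is_fullbright;
    }
    return fullbright_count;
}
```

The mask can then be combined with the palette colors to produce a 32-bit glow texture, which gets mipmapped separately from the base color.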

Screenshots:

Old 8-bit color mipmaps

Normal texture

Per-pixel quantized mipmaps

Per-pixel quantized mipmaps with no texture coordinate filtering

0 notes

Photo

Texels rendered as regular hexagons. These could look better in a number of cases, such as in textures with round images.

However, the pipeline to create proper textures for this would be too complicated, and so far it wouldn’t be worth the effort. Most approaches to convert square texels into hexagonal texels would make them too blurry.

1 note

Photo

Lit liquids, before and now.

I’m glad the thick banding is gone.

1 note

Text

To-dos

That’s more productive than creating premature to-do lists full of tasks that may become pointless or consume time that would be better spent elsewhere in the game. Developing such an engine and creating a proper game on top of it involves a nearly inhuman amount of things to do, and there’s no way to predict exactly how everything will turn out before the techniques to take care of them are fully established. Trying to define things prematurely may lead to frustration. The key is to keep working, and to keep following the best paths revealed by the results of your work.

And not losing those best paths is also important. This is why there are times when I mention I’m going to work on something, but end up working on something else. For example, last year I mentioned I was going to finally implement music playback, but ended up going back to graphics programming. When an idea about how to fix or improve something pops into my head, I usually go to work on it immediately, because fresh ideas make us more motivated, so it’s faster to implement them right away than to implement them later. Again, it’s a matter of productivity.

With enough productivity, there should be a point of critical mass where everything comes together in harmony as a full game. Again, in such a game, trying to establish everything beforehand would be futile. For example, my texture creation work has shown me that it’ll be more productive to just create the best textures I can and figure out later which environments can be created with them, so I can’t design the levels before enough textures are created. Maybe this general approach could be called “create the best out of the best”, and it should help to balance quality with viability, to the detriment of more expensive aspects (e.g. well-made semi-abstract levels instead of poorly-made realistic levels).

0 notes

Text

The Colours Out of Space

This palette system should also operate on a new colorspace I’ve been designing for a while. None of the existing colorspaces I’ve found was satisfactory either; broadly speaking, all of them seem to be focused on image reproduction, not on image creation. The HSV colorspace comes close, but isn’t enough.

Nothing is set in stone yet.

1 note

Photo

Until recently, I had several simultaneous plans for Retroquad. Now, the only plan is to improve it enough to develop commercial games using it. The rest may eventually happen, but it's not a priority anymore. This also means there isn't much reason to keep writing detailed technical posts. Detailed posts will be written when the first game using this engine is revealed.

0 notes

Text

SV_WriteClientdataToMessage and new features

Some bitflags are always set, which makes them pointless, and some data (e.g. health) doesn’t have a corresponding bitflag. In both cases, this results in data being needlessly sent through the network. To fix this, I’m implementing baselines for all client message data in the Retroquad protocol, through the same framework I’ve developed for normal entities.

I’ve also fixed a bug in SV_SetIdealPitch. That function used sv_client to set the pitch, but sv_client is only valid when that function is called during client spawning. When called by SV_WriteClientdataToMessage, sv_client is always set to the last client entity, no matter which client SV_WriteClientdataToMessage is actually sending. I bet this bug is one of the reasons online players switched over to the mouse+keyboard control scheme at the time.

And I’m taking the opportunity to implement some new features. Among others, these include zoom (FOV) recording in demos, complete with optional QC support through an entity field, smart assignment of entity shadows (only MOVETYPE_STEP & MOVETYPE_WALK entities will cast shadows when r_shadows is on, which also includes rotfishes and scrags), and chasecam recording in demos (though proper PVS for chasecams is not planned yet). These will help recorded demos to more accurately reproduce the actual experience of the original player.

Sometime I’m also going to implement a client-to-server baseline system.

0 notes

Text

Protocol plans

The reason it’s taking so long for me to “implement big map support” is that I’m taking the opportunity to also learn everything about Quake’s networking protocol, including other related subsystems. It wouldn’t make sense to do the whole work of implementing protocol support for big maps, only to scrap all that work when getting around to refactoring the networking protocol.

First, there has been a huge overhaul on how the engine deals with QC fields used by custom engine features. Now the engine can recognize and save/load those fields even when they’re not implemented in the QC code. The data format used for this was extended to also work with all QC fields sent over the network, and it includes a built-in individual baseline for each networked field of each entity, as well as a fallback data storage for when the field isn’t implemented in both the QC code and the BSP/savegame entities list (this is used for allowing precise frame interpolation timing to be sent even when .frame_interval is absent). It was also optimised to not use string comparisons for access, so stuff like .pitch_speed is a lot faster to process now.

That data format also includes all the required parameters for automatic bitflag processing, as well as for automatic data encoding and messaging. And the ordering of this data during the messaging phase is determined by an array of a different data format used to bind the entity fields to the expected protocol format (similar to a junction table in a many-to-many relationship in data modeling), which means that the messaging can follow the chaotic sequence of any protocol format without any changes in the algorithm.

All of this means that many future features can be implemented with no need to even look at the protocol algorithm. Just add one row of constants to four different arrays, and your custom QC field is network-ready, savegame-ready and BSP-ready.
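To make the table-driven idea more concrete, here is a simplified sketch, not Retroquad's actual data format. It collapses the four arrays into two: a field descriptor table (offset, bitflag, baseline) and a separate ordering table that plays the role of the junction table. Every name, the three example fields and the baseline values are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical subset of an entity's networked fields. */
typedef struct {
    float health;
    float frame_interval;
    float pitch_speed;
} entity_fields_t;

/* One row of constants per networked field. */
typedef struct {
    const char *name;     /* only for tools and debugging, never compared */
    size_t      offset;   /* where the value lives inside the entity */
    uint32_t    bitflag;  /* set in the message when the field is sent */
    float       baseline; /* value the receiver assumes when it's not sent */
} net_field_t;

static const net_field_t net_fields[] = {
    { "health",         offsetof(entity_fields_t, health),         1u << 0, 100.0f },
    { "frame_interval", offsetof(entity_fields_t, frame_interval), 1u << 1, 0.1f   },
    { "pitch_speed",    offsetof(entity_fields_t, pitch_speed),    1u << 2, 150.0f },
};
#define NUM_NET_FIELDS (sizeof net_fields / sizeof net_fields[0])

/* Order in which a given protocol expects the fields (indices into
   net_fields). Another protocol only needs another ordering table. */
static const size_t protocol_order[] = { 2, 0, 1 };

static float field_value(const entity_fields_t *ent, const net_field_t *f)
{
    return *(const float *)((const char *)ent + f->offset);
}

/* Sets the bitflag of every field that differs from its baseline. */
static uint32_t collect_changed_bits(const entity_fields_t *ent)
{
    uint32_t bits = 0;
    for (size_t i = 0; i < NUM_NET_FIELDS; i++)
        if (field_value(ent, &net_fields[i]) != net_fields[i].baseline)
            bits |= net_fields[i].bitflag;
    return bits;
}

/* Writes the flagged fields in protocol order; returns how many. */
static size_t write_changed_fields(const entity_fields_t *ent, uint32_t bits,
                                   float *out, size_t out_capacity)
{
    size_t written = 0;
    for (size_t i = 0; i < NUM_NET_FIELDS; i++) {
        const net_field_t *f = &net_fields[protocol_order[i]];
        if (bits & f->bitflag) {
            assert(written < out_capacity);
            out[written++] = field_value(ent, f);
        }
    }
    return written;
}
```

Adding a field here means adding one row to `net_fields` and one index to `protocol_order`; neither function changes.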

The only things I couldn’t fully automate were the conditional algorithms for skipping certain entities, because the vanilla code for these has all kinds of inconsistencies, and some important QC hacks rely on those inconsistencies. The entity number encoding also has all kinds of inconsistencies, but I can just create a pair of non-vanilla-protocol MSG_WriteEntity and MSG_ReadEntity functions to standardize it in Retroquad’s protocol and call it a day.
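One way such a write/read pair could look is sketched below. The encoding is an assumption, not Retroquad's format: entity numbers below 128 take one byte, larger ones take two bytes with the high bit of the first byte set, which caps the range at 32767. The buffer type and the lowercase function names are hypothetical stand-ins for the engine's sizebuf_t and MSG_* functions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical fixed-size message buffer. */
typedef struct {
    uint8_t data[64];
    size_t  size;      /* bytes written so far */
    size_t  read_pos;  /* next byte to read */
} msg_t;

/* Self-describing encoding: the reader needs no external flag to know
   whether the entity number is one or two bytes long. */
static void msg_write_entity(msg_t *msg, unsigned ent)
{
    assert(ent < 0x8000);
    if (ent < 0x80) {
        assert(msg->size + 1 <= sizeof msg->data);
        msg->data[msg->size++] = (uint8_t)ent;
    } else {
        assert(msg->size + 2 <= sizeof msg->data);
        msg->data[msg->size++] = (uint8_t)(0x80 | (ent >> 8));
        msg->data[msg->size++] = (uint8_t)(ent & 0xFF);
    }
}

static unsigned msg_read_entity(msg_t *msg)
{
    unsigned first;

    assert(msg->read_pos < msg->size);
    first = msg->data[msg->read_pos++];
    if (!(first & 0x80))
        return first;
    assert(msg->read_pos < msg->size);
    return ((first & 0x7F) << 8) | msg->data[msg->read_pos++];
}
```

Because both baselines and updates would go through the same pair, the entity number is encoded the same way everywhere it's sent.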

Right now I’m in the process of eliminating all algorithmic redundancies between SV_WriteEntitiesToClient, SV_CreateBaseline and PF_MakeStatic.

About static entities, MH (DirectQ’s author) has said that there’s a way to simplify their implementation and eliminate a lot of unnecessary specific code. I still have some things to learn about how they work, and hopefully I’ll figure out how to do that.

0 notes

Text

Protocol shenanigans

.effects is sent by SV_WriteEntitiesToClient, but not by SV_CreateBaseline or PF_MakeStatic.

SV_CreateBaseline changes the value of .modelindex, but SV_WriteEntitiesToClient and PF_MakeStatic don’t.

SV_WriteEntitiesToClient sends the actual .modelindex value, but SV_CreateBaseline and PF_MakeStatic ignore .modelindex and get the value from SV_ModelIndex instead.

SV_WriteEntitiesToClient skips entities with empty .model names, but SV_CreateBaseline and PF_MakeStatic don’t.

SV_WriteEntitiesToClient and SV_CreateBaseline skip entities with empty .modelindex, but PF_MakeStatic doesn’t.

SV_ModelIndex checks for null-terminated .model names, but SV_WriteEntitiesToClient only checks if the memory address of the .name pointer is null (which means that a self.model explicitly named as “” would pass).

The .punchangle field is sent as chars instead of angles.

In SV_WriteEntitiesToClient the bits are sent before the entity, but in FitzQuake’s SV_CreateBaseline the entity is sent before the bits.

And all this is merely on the server side. The client side surely has its own share of algorithmic inconsistencies.
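The difference between the two kinds of .model check mentioned in the list can be shown with a generic sketch. These are not the engine's functions, just the two tests side by side, with hypothetical names:

```c
#include <stddef.h>

/* Only tests the pointer: an explicitly empty name ("") passes. */
static int model_pointer_is_set(const char *model)
{
    return model != NULL;
}

/* Tests the pointer and the first character: "" is rejected too. */
static int model_name_is_set(const char *model)
{
    return model != NULL && model[0] != '\0';
}
```

An entity whose model is explicitly set to an empty string is accepted by the first check and rejected by the second, so two functions using different checks disagree about whether that entity should be sent.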

Unfortunately, compatibility with vanilla demos is a must. The gameplay demos being made out of server messages wasn’t such a good idea in the long run.

Also, some of these inconsistencies are required for QC hacks. Sigh.

The Right Thing To Do™ would be to build a separate system to decode vanilla demos and encode them using the Retroquad protocol. But this doesn’t fix everything, as the QC hacks still need support.

0 notes