Top 5 Data Management predictions for 2023

A new era is beginning for Data Management. Are you ready for what the future holds? Read these 5 predictions to learn more.

2023 has arrived, and with it comes a new era of Data Management. With the rapid advancement of technology, data management is becoming increasingly crucial for businesses of all sizes. In this blog, we will explore the top five data management predictions for 2023 and how they will shape how businesses manage their data.

From the rise of AI-driven data management to the emergence of cloud-based solutions, these 5 predictions will help businesses stay ahead of the curve and ensure they take full advantage of their data. By understanding these emerging trends, organizations can gain valuable insights into their operations and create data-driven strategies for success in a highly competitive marketplace.

The one data prediction that holds true year after year

In 2023 (just like the previous few years), the amount of data businesses generate is expected to increase significantly. Note the various statistics projected:

The global data sphere will grow to 163 zettabytes by 2025, according to a report from nRoad.

A report from IBM projects that data generated by businesses is expected to increase to 40 ZB by 2025.

Data stored in the cloud is expected to increase tenfold, according to a report from Cybersecurity Ventures.

Data generated by the Internet of Things (IoT) is also expected to exceed 73.1 ZB by 2025, based on a report from DataProt.

Finally, the amount of data generated by artificial intelligence (AI) is expected to increase significantly, to 32.1 ZB by 2025, as stated in a report from Semrush.

Top Five Data Management Predictions For 2023

So, with all this data accumulating, the value derived from it is an exciting prospect for many companies and industries. With rapid technological advances, the ability to better understand customer behavior, patterns, and trends has become increasingly important. As such, many experts have been predicting what factors will shape the future of data over the next few years. Here are the top five data management predictions for 2023:

1. Increased focus on Unstructured Data

Unstructured data is becoming increasingly important in data management. This type of data is characterized by its lack of structure or organization, making it difficult to process using traditional data management techniques. Examples of unstructured data include emails, social media posts, images, videos, and other types of multimedia content.

In the future, unstructured data is expected to impact data management significantly. This is because the volume of unstructured data is growing much faster than structured data. According to some estimates, unstructured data accounts for over 80% of all data generated worldwide.

New data management techniques and technologies will be required to manage this growing volume of unstructured data. These techniques include:

(a) Data categorization: To make unstructured data more manageable, it can be categorized and tagged with metadata that describes its content, context, and relevance. This can help data managers to organize and retrieve unstructured data more efficiently.

(b) Natural language processing: Natural language processing (NLP) is a type of artificial intelligence (AI) that can help to analyze and understand unstructured data. With NLP, computers can read, interpret, and even generate human language, which can help process unstructured text data.

(c) Machine learning: Machine learning algorithms can be trained to identify patterns and relationships in unstructured data, such as image and video content. This can automate the process of categorizing and tagging unstructured data.

(d) Cloud-based storage and processing: The scalability and flexibility of cloud-based storage and processing can make managing and processing large volumes of unstructured data easier.
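To make the data categorization idea in (a) concrete, here is a minimal sketch of tagging unstructured text with metadata. The keyword rules, category names, and the `TaggedDocument` structure are hypothetical and chosen only for illustration; a production system would more likely rely on the NLP or machine learning techniques described in (b) and (c).

```python
from dataclasses import dataclass, field

# Hypothetical keyword rules; a real system might use NLP or ML instead.
CATEGORY_KEYWORDS = {
    "finance": {"invoice", "payment", "refund"},
    "support": {"issue", "error", "complaint"},
}


@dataclass
class TaggedDocument:
    text: str
    source: str  # e.g. "email", "social", "file"
    categories: list = field(default_factory=list)


def categorize(text: str, source: str) -> TaggedDocument:
    """Attach category metadata based on which keywords appear in the text."""
    words = {w.strip(".,!?:;").lower() for w in text.split()}
    matched = sorted(
        name for name, keywords in CATEGORY_KEYWORDS.items() if words & keywords
    )
    return TaggedDocument(text=text, source=source, categories=matched)


doc = categorize("Please find the invoice attached; payment is due Friday.", "email")
print(doc.categories)  # ['finance']
```

Once documents carry tags like these, they can be filtered and retrieved by category rather than by scanning raw text.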

2. Value obtained from Data insights

Data insight refers to the meaningful and actionable information that can be extracted from data through analysis and interpretation. It involves using tools and techniques to explore, analyze, and interpret data to identify patterns, trends, relationships, and other vital insights.

The usage of data insights will continue to be increasingly crucial for businesses in the future. Here are some potential ways that data insights could help businesses in the coming years:

(a) Enhanced customer understanding: By analyzing customer data, businesses can gain a deeper understanding of their customers’ behavior, preferences, and needs. This information can be used to improve products and services and to develop more effective marketing and customer service strategies.

(b) Improved operational efficiency: Data insights can help businesses identify inefficiencies in their operations and supply chains, enabling them to streamline processes, reduce waste, and optimize resource allocation. This can result in cost savings and increased productivity.

(c) Better risk management: Businesses can anticipate and manage risk by analyzing market trends and consumer behavior. This can help them avoid potential threats and take advantage of opportunities.

(d) Personalized experiences: Businesses can tailor products and services to individual customers using data insights to understand customer preferences. This can help improve customer satisfaction and loyalty.

(e) New revenue streams: Data insights can help businesses identify new market opportunities, such as untapped customer segments or emerging trends. By using data to develop innovative products and services, businesses can create new revenue streams and stay ahead of the competition.

3. The changing face of data storage solutions

The future of storage solutions is likely to be shaped by several trends, including the increasing volume of data, the need for faster access to data, and the growing demand for cloud-based storage.

Cloud storage has become increasingly popular in recent years, and this trend will likely continue. It is a cost-effective solution for storing and managing large amounts of data. With recession concerns following businesses into 2023, the flexible pricing plans that cloud storage providers offer can help businesses manage costs based on their specific storage needs.

In addition to being cost-effective, cloud-based storage offers other benefits for large businesses, including scalability, security, and accessibility. Businesses can quickly scale their storage capacity as their data needs grow, and they can access their data from anywhere with an internet connection. Cloud storage providers offer advanced security features to protect data from unauthorized access or breaches.

4. Focus on Data Security

Data security is an essential aspect of data management, and it will continue to play a crucial role in the future of data management. As the amount of data generated by individuals and organizations continues to increase, protecting this data from various security threats, including cyber-attacks, data breaches, and insider threats, is becoming more critical.

Data management will likely become more complex, with larger volumes of data being generated, stored, and analyzed. Data security must be more sophisticated to keep up with evolving threats. Additionally, the rise of cloud computing and the increasing use of mobile devices to access data create new challenges for data security, as data is often accessed from outside traditional network perimeters.

Ransomware is malware used to extort money from individuals or organizations by denying them access to their data. Ransomware attacks have become increasingly common in recent years, and they pose a significant threat to the future of data management.

Ransomware attacks can also drive innovation in data security. As attackers become more sophisticated, organizations need to be equally innovative in their approach to data security. This can lead to the development of new technologies and strategies for protecting data from ransomware and other types of cyber threats.

In addition, ransomware attacks can significantly impact an organization’s reputation and financial stability. Data management practices need to evolve to address the reputational and financial risks of ransomware attacks.

5. Sharing pivotal information is a given

The need to share data with external persons is likely to rise as more organizations collaborate with partners, suppliers, and customers in various industries. For example, businesses may need to share data with external parties for joint projects, supply chain management, and customer relationship management.

Sharing data with external parties can bring many benefits, including increased efficiency, better decision-making, and improved customer experiences. However, it also presents several challenges for data management. Here are a few key factors to consider:

Security: When sharing data with external parties, it’s crucial to ensure the data is protected against unauthorized access, modification, or destruction. This requires robust security measures like access controls, encryption, and monitoring.

Compliance: Sharing data with external parties may involve complying with various data regulations and laws, such as data privacy and security regulations. It’s essential to ensure that data sharing complies with applicable regulations to avoid legal and reputational risks.

Data quality: When sharing data with external parties, it’s essential to ensure that it is accurate, complete, and consistent. This requires establishing clear data quality standards and processes for verifying and validating data.

Data governance: Sharing data with external parties requires a clear data governance framework that outlines who has access to data, how data is managed, and how data quality is maintained. This helps ensure data is used appropriately and consistently across different parties.

How can Vaultastic help?

Vaultastic can help businesses look to the future by addressing all the above concerns:

Unstructured data:

Vaultastic can ingest, store and manage unstructured data from emails, files, and documents, including attachments, metadata, and email conversations, making it easy to search, retrieve and analyze such data when required.

Data insights:

Vaultastic offers advanced search capabilities that allow users to search for specific information within their data archive. It also offers advanced analytics and reporting features that enable users to gain data insights.

Flexible storage solutions:

Vaultastic offers a unique storage tiering solution that segregates frequently used data from rarely used, aging data into two separate stores, which can optimize costs by 60% or more.
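As a rough illustration of the general idea behind storage tiering (not Vaultastic's actual implementation, which is not described here), the sketch below assigns data to an "active" or "cold" store based on how long ago it was last accessed. The one-year cutoff and the tier names are assumptions made for the example.

```python
from datetime import date, timedelta

# Assumed cutoff: data untouched for more than a year moves to the cheaper tier.
COLD_AFTER = timedelta(days=365)


def choose_tier(last_accessed: date, today: date) -> str:
    """Return "active" for recently used data and "cold" for aging data."""
    return "cold" if today - last_accessed > COLD_AFTER else "active"


today = date(2023, 3, 1)
print(choose_tier(date(2023, 1, 15), today))  # active
print(choose_tier(date(2020, 6, 30), today))  # cold
```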

Data security:

Vaultastic protects your data using a shared security model in the cloud. Your data is secured at seven layers, including encryption, access controls, disaster recovery, and more, that help protect critical data from cyber threats, including ransomware.

Sharing information:

Vaultastic offers secure sharing tools that enable collaboration with external parties securely and privately. The solution offers role-based access controls that enable users to control who can access the email data and what they can do with it.

To learn more about Vaultastic, click here.

10 ways a cloud archive can help you with Data Governance

Learn about Data Governance and how using a Centralized Cloud Archive improves it

Data is the new currency; it is everywhere and continues to grow exponentially in its various formats: structured, semi-structured, and unstructured. But whatever the format, businesses cannot afford to slack on the proper accumulation and categorization of data, otherwise known as Data Governance, given the value that can be obtained from these data sources. As Jay Baer, a marketing and customer experience expert, remarked, “We are surrounded by data but starved for insights.”

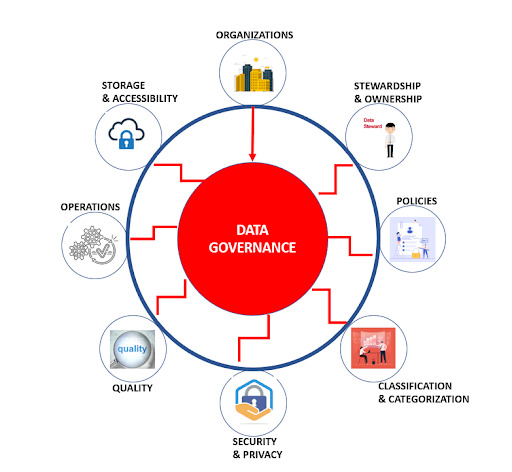

So, what exactly is Data Governance, and what are its key elements?

Imagine that you wanted to rebrand and launch a failing product and needed some insight into achieving this by looking at YTD sales analysis for the previous five years or perhaps customer feedback for those same years. But, the data for your analysis or information is fragmented between different data storage units or lost due to local deletion by error. What a loss regarding revenue, time, insights, and progress.

Here is where the need arises for a proper Data Governance strategy to ensure such irreparable damage does not happen. A proper Data Governance strategy lists the procedures to maintain, classify, retain, access, and keep secure the data related to a business. As data grows exponentially every day, mainly fueled by Big Data and digital transformation, and with the total estimated to reach 181 zettabytes by 2025, a proper Data Governance strategy to ensure proper data usage becomes imperative.

Below are four elements that are key to a proper Data Governance strategy:

Data Lifecycle Management:

To prepare a proper Data Governance strategy, one must first understand the circle of life that data goes through. Data is created, used, shared, maintained, stored, archived, and finally deleted. Understanding these core stages forms the basis of Data Lifecycle Management.

For example, John Doe applied for the position of QA Manager. He applied online on the company’s website (creation), and his resume was chosen by his employers (used) and sent to HR to offer him a job (shared). John accepted the job and started work with the company. His details were kept with HR to update records annually and for tax and legal purposes (maintained and stored).

Finally, John retired, and his file was handed to the Data Steward (archived), where it may or may not be kept (deleted) depending on legal retention policies.

Now compare that to the data lifecycle of a draft sales PowerPoint presentation. The presentation will be created, used, shared, and probably deleted in favor of the final version, which will go through the entire Data Lifecycle Management process. Understanding the data is critical; that is where Data Quality Management comes to the fore.

Data Quality Management:

Let’s go back to the example of relaunching a failing brand. You finally found all the pertinent files from the past five years that you were looking for. But interspersed with the sales and promotion figures are files dealing with a final presentation and numerous drafts of that presentation leading up to the final version.

What do you keep? What is needed and what is not, and how do you know the difference? This is where Data Quality Management (DQM) comes in. Essential questions to ask about data when observing DQM are:

Is it unique? Are there multiple versions of one file or a final version I must keep? Do the draft copies have important handwritten notes that point to the final version and provide greater insight?

Is it valid? Do I need to keep this data? Is there a possible future use for it?

Is it accurate? Are the files being saved for future use accurate?

Is it complete? Are the files that need to be saved present in their entirety?

Is the quality good? Is the quality of the files suitable, so that they provide insightful context in the years to come?

Is it accessible? Are these records properly archived, or are they fragmented? How can we get access to them?

Data Stewardship:

Now that we have answered all those questions, imagine for a moment all this data (structured, unstructured, and semi-structured) sitting in data silos or data lakes as one giant beast. This raises the proverbial question: who will bell the cat?

Who will take on this humongous task of Data Quality Management, i.e., classifying, archiving, storing, creating best practice guidelines, and ensuring data security and integrity?

This is where Data Stewardship comes into play. Appointing a sole person or a committee (which is better) to create and oversee all the tasks of Data Management is the optimum choice in the eventual buildup of good Data Governance strategies. The main job of a stewarding committee is to ensure that data is properly collected, managed, accessed when needed, and disposed of at the end of the retention period.

Some essential functions of a data stewarding committee are:

Publishing policies on the collection and management of data (something that can be achieved faster if DQM practices are already in place).

Educating employees on DQM best practices and providing training on the Record Information Management (RIM) policies established by the company, ensuring this training is given again after 3 years to stay compliant with existing and new regulations.

Revising retention policies to meet new regulations.

Creating a hierarchical chain of command within the committee based on the classification of records.

Data Security:

Should you keep the data, classify it or not, share it? There are many questions regarding the usefulness and usability of data. But one thing stands out: whatever the reasons, all data should be kept secure throughout the entire Data Lifecycle Management process, up to the point of its deletion.

Whether through encryption, resiliency, masking, or ultimate erasure, Data Security tools have to be deployed along with policies to ensure that the company’s data is safe and secure and used only by the proper personnel.

The recurring question we get from all these essential elements is: after identifying and classifying data, where can we keep it all? And while it occurs to most businesses to keep data stored either physically or in-house, the case for a centralized cloud archival system is getting stronger daily. So, should one go for a centralized cloud archival system? Here are a few advantages that make the case.

Advantages of a Centralized Cloud Archival System:

With internet usability being predominant worldwide and the availability of a company’s intranet to its employees, access to data on a centralized cloud archive has never been easier.

Organizations and departments within organizations can share data and resources more efficiently. Specific data can be quickly discovered with such tools as E-discovery.

As data grows daily, a centralized cloud archive system can meet scalability demands while remaining flexible.

A cloud archive system is more cost-effective in keeping data, meeting the demands of growing data, and having flexibility compared to a local in-house data storage unit, not to mention the office space it will save and the costs of having a built-in infrastructure IT room specifically for this.

Data is stored in a secure, centralized cloud archive system that ensures no unauthorized access. Moreover, it ensures timely data backups and updates to the system and is less likely to be damaged or lost in a local disaster.

As we let the advantages of a centralized cloud archive sink in, here are ten ways it can help businesses in their Data Governance strategies:

10 Ways a Centralised Cloud Archive can improve your Data Governance:

Focus on global policies:

While classification and maintenance of data are crucial factors in governance, time is as important an element as any other. The question of how long to retain this data is relevant in the archival process, and the answer is not black and white.

With local and global policies changing daily in response to new and sometimes demanding requirements, data retention times vary from year to year. The people responsible for maintaining or classifying the data need to store it immediately, even before it has been properly tagged for relevance. While in-house storage units can house the data temporarily, they are vulnerable to security breaches, deletion due to error, or data fragmentation.

The obvious choice is to store the data in a centralized cloud archive with protective features like encryption, secure access, flexibility, and scalability.

Data Access and ABAC:

Because data is located in a secure, centralized cloud archive, employees distributed in different geographical locations can access data at any time depending on their time zone, at the same time as other employees, or at multiple times.

But should all employees have access to everything? The Centralised cloud archive ensures that Attribute-Based Access Control (ABAC) is in force, granting employees rights based on the attributes assigned to them. These rights are usually defined when creating DQM strategies, or by Data Stewards based on changing company, local, and global policies.
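A minimal sketch of how an attribute-based check might work is shown below. The attribute names (`department`, `clearance`, `role`, `sensitivity`) and the policy rules are assumptions invented for this example; they do not describe any specific product's access model.

```python
def is_allowed(user: dict, resource: dict, action: str) -> bool:
    """Allow access only when the user's attributes satisfy the policy."""
    same_department = user["department"] == resource["department"]
    cleared = user["clearance"] >= resource["sensitivity"]
    # Assumed policy: cleared staff in the same department may read;
    # only data stewards may delete.
    if action == "read":
        return same_department and cleared
    if action == "delete":
        return same_department and cleared and user["role"] == "data_steward"
    return False  # deny anything not explicitly permitted


analyst = {"department": "sales", "clearance": 2, "role": "analyst"}
steward = {"department": "sales", "clearance": 3, "role": "data_steward"}
report = {"department": "sales", "sensitivity": 2}

print(is_allowed(analyst, report, "read"))    # True
print(is_allowed(analyst, report, "delete"))  # False
print(is_allowed(steward, report, "delete"))  # True
```

The decision depends on attributes of both the user and the resource rather than on a fixed list of named users, which is what lets Data Stewards adjust rights by changing attributes or policy rules.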

Deduplication:

A centralized cloud archive system has built-in software to prevent data duplication, keeping only one final copy on file. This is in stark contrast to in-house data silos, which promote data fragmentation and unnecessary duplication of files.
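One common way to detect duplicates is to compare content hashes, so that identical files are recognized even when their names differ. The sketch below illustrates that general technique with made-up file names; it is not a description of how any particular archive implements deduplication.

```python
import hashlib


def deduplicate(files: dict) -> dict:
    """Keep one file name per unique content, identified by its SHA-256 digest."""
    unique = {}
    for name, content in files.items():
        digest = hashlib.sha256(content).hexdigest()
        unique.setdefault(digest, name)  # the first file seen with this content wins
    return unique


files = {
    "report.pdf": b"Q4 results",
    "report_copy.pdf": b"Q4 results",  # same bytes, different name
    "notes.txt": b"draft",
}
kept = deduplicate(files)
print(sorted(kept.values()))  # ['notes.txt', 'report.pdf']
```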

Self-service for data consumers:

All the data is stored in a single searchable platform, making it easier for consumers to source or explore independently. Self-service access allows consumers to access any data they have permission for without having to request access from the data owners manually.

Removing the need for Physical Infrastructures:

A Centralised Cloud Archive System is much more cost-effective than traditional storage methods, as it eliminates the need for businesses to purchase and maintain their own data storage infrastructure.

Maintaining transparency and Automated Reporting:

With a Centralised Cloud Archive System, all the stored data is available in a searchable format, making it easier for stakeholders to understand the information and use it for decision-making. This helps in improving transparency and accountability within the organization.

Moreover, with automated reporting, and a data monitoring system in place, there is transparency as to who is obtaining data access, when, and where.

Removing the redundant data:

When a file is no longer needed, has served its retention period, and has been approved for deletion by the data stewarding committee, it is easier to access this redundant data file from the Centralised Cloud Archive and permanently delete it.
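The deletion conditions described above (retention period served and approval by the data stewarding committee) can be expressed as a simple check. This is a sketch under assumed inputs: the function name, its parameters, and the use of whole-year retention periods are illustrative choices, not a prescribed policy.

```python
from datetime import date


def can_delete(archived_on: date, retention_years: int,
               approved_by_stewards: bool, today: date) -> bool:
    """A record is deletable only after retention ends AND the stewards approve."""
    target_year = archived_on.year + retention_years
    try:
        expires_on = archived_on.replace(year=target_year)
    except ValueError:
        # Archived on Feb 29 and the target year is not a leap year.
        expires_on = archived_on.replace(year=target_year, day=28)
    return approved_by_stewards and today >= expires_on


today = date(2023, 1, 1)
print(can_delete(date(2015, 1, 1), 7, True, today))   # True
print(can_delete(date(2015, 1, 1), 7, False, today))  # False
print(can_delete(date(2020, 1, 1), 7, True, today))   # False
```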

Data Durability:

SaaS cloud data archiving platforms that offer high reliability and availability with in-built disaster recovery sites (like Vaultastic does) will drastically reduce the RPO (recovery point objective) and RTO (recovery time objective) anxieties of CXO teams. They also eliminate the effort of performing backups of the data.

Data Security:

The Centralised Cloud Archive is more secure than other data warehouses or in-house storage. Cloud-based data archiving platforms like Vaultastic leverage the cloud’s shared security model to provide multi-layered protection against cyber attacks.

Updated patches, two-factor authentication, encryption, and relevant security controls ensure that businesses’ data are kept in a tight vault.

Cost-Optimization:

SaaS data archiving platforms that can optimize costs along multiple dimensions, even as data grows daily, work in your favor. Data will grow continuously, and you want your costs to be kept in check.

Conclusion

More and more businesses are migrating to the Cloud for their solutions, primarily their archiving solutions. The reasons are many: cost-effectiveness, data security, deduplication, better infrastructure, IT support, and user-friendliness, to name a few.

Once a business has established its Data Governance strategy and implemented it, the next step is to ensure this data is secured in a proper location.

What better way than the cloud, which is proving its practicality day by day?

If you have your Data Governance strategy in place, Vaultastic, an elastic cloud-based data archiving service powered by AWS, can help you quickly implement your strategy.

Vaultastic excels at archiving unstructured data in the form of emails, files, and SaaS data from a wide range of sources. A secure, robust platform with on-demand data services significantly eases data governance while optimizing data management costs by up to 60%.

7 Data Management Best Practices to Adopt in 2023

Are you ready for 2023 and the significant changes in Data Management to come? Read on to find out.

As data management becomes increasingly important in the digital age, staying informed on the latest and best practices is crucial to ensure successful data management within your organization. Businesses need data management for several reasons: better decision-making, increased efficiency, regulatory and compliance needs, improved customer experience, and competitive advantage. But first…

What is Data Management?

Data management is the practice of collecting, storing, organizing, maintaining, and utilizing data effectively and efficiently. It involves developing and implementing policies, procedures, and strategies that ensure data is accurate, consistent, and secure and can be easily accessed and used by those who need it.

Data management can include various activities, such as data governance, quality management, security, integration, and analytics. It also involves choosing appropriate technologies for storing and managing data, such as databases, data warehouses, and data lakes, and ensuring they are correctly configured and maintained.

7 Data Management Best Practices for 2023

1. Define data governance policies

Although it may seem obvious, developing clear data governance policies is crucial to ensure that data is appropriately managed. This includes defining data ownership, establishing data security and privacy policies, and creating processes for data management.

Too often, implementing data governance policies has been overlooked by ill-advised or beleaguered staff, resulting in mismanagement or manipulation of data. By defining data ownership under data governance, organizations can determine who is responsible for maintaining the accuracy and security of specific data sets.

In addition to data ownership, data governance policies should establish data security and data privacy policies. This includes identifying the data types that need to be protected and implementing appropriate security measures to prevent unauthorized access or data breaches. Data privacy is also essential, especially when handling sensitive information such as personal or financial data.

Finally, creating processes for data management is essential for effective data governance. This includes identifying the steps to ensure data accuracy, such as data quality control measures, and establishing data access, sharing, and disposal procedures. By developing and implementing clear data governance policies, organizations can ensure their data is managed effectively and in compliance with applicable laws and regulations. This can help to reduce the risks of data breaches, improve decision-making capabilities, and enhance overall business performance.

2. Design and Implement an Effective Data Quality Management Strategy

A comprehensive data quality management strategy ensures that data is accurate, complete, timely, and consistent, enabling businesses to make informed decisions based on reliable data.

The first step in designing an effective data quality management strategy is identifying the critical data elements essential to business operations. This may include customer data, sales data, financial data, or any other data that is critical to business processes. Once the key data elements have been identified, it is essential to establish data quality standards, such as data completeness, accuracy, and timeliness.

Next, businesses should establish data collection, validation, and correction processes. This may include implementing data validation checks, data cleansing processes, and tools for identifying and correcting errors in data. Additionally, businesses should establish data governance policies and procedures, including roles and responsibilities for managing data quality, and establish a data quality monitoring framework to ensure ongoing compliance with data quality standards.
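As an illustration of what data validation checks can look like in practice, the sketch below tests a record for completeness and basic validity. The required field names, the simplified email pattern, and the rule that order totals cannot be negative are all assumptions made for the example.

```python
import re

REQUIRED_FIELDS = ("customer_id", "email", "order_total")  # hypothetical schema
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately simple


def validate_record(record: dict) -> list:
    """Return a list of data quality problems; an empty list means the record passed."""
    problems = []
    # Completeness: every required field must be present and non-empty.
    for name in REQUIRED_FIELDS:
        if record.get(name) in (None, ""):
            problems.append(f"missing {name}")
    # Validity: the email must look like an address.
    email = record.get("email")
    if email and not EMAIL_PATTERN.match(email):
        problems.append("invalid email")
    # Accuracy (assumed business rule): totals cannot be negative.
    total = record.get("order_total")
    if isinstance(total, (int, float)) and total < 0:
        problems.append("negative order_total")
    return problems


good = {"customer_id": 1, "email": "a@b.com", "order_total": 10.0}
bad = {"customer_id": 2, "email": "not-an-email", "order_total": -5}
print(validate_record(good))  # []
print(validate_record(bad))   # ['invalid email', 'negative order_total']
```

Records that return problems can be routed to a cleansing or correction step, while the counts of each problem type can feed a data quality monitoring report.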

An effective data quality management strategy also involves cultural and organizational changes. This may include establishing a culture of data quality and ensuring that data quality is a priority at all levels of the organization. It may also involve providing training and resources to employees to help them understand the importance of data quality and how to maintain it. By prioritizing data quality, businesses can make informed decisions based on reliable data and maintain a competitive advantage in their industry.

3. Develop Data Integration Processes

Data integration combines data from multiple sources to create a unified and comprehensive view of the data. This can help organizations gain insights that may not be possible when working with data in silos. Data integration helps businesses in several ways, primarily by enabling them to make better-informed decisions, improve operational efficiency, and enhance customer satisfaction. Here are some specific ways that data integration can benefit businesses:

Improved data accuracy and completeness:

Data integration helps businesses to consolidate data from multiple sources and ensure that it is accurate and complete. This, in turn, ensures that businesses have access to reliable data, which they can use to make informed decisions.

Enhanced operational efficiency:

By integrating data from different sources, businesses can streamline operations and reduce manual processes. This helps to improve operational efficiency and reduce costs.

Better customer insights:

Data integration can also help businesses to gain a deeper understanding of their customers. By consolidating customer data from multiple sources, businesses can develop a 360-degree view of their customers and use this information to provide more personalized experiences and improve customer satisfaction.

Improved business intelligence:

Data integration can also help businesses to develop more robust business intelligence capabilities.

Enhanced data security:

Data integration can also help businesses to improve data security. By consolidating data in a central location, businesses can better control access to data and ensure that it is secure and protected from unauthorized access.

4. Secure and Private sharing of data

Secure and private sharing of data is an essential aspect of data management. With the increasing amount of data being generated and collected by organizations, ensuring that this data is securely and privately shared with relevant parties is imperative. Secure sharing of data involves protecting the data from unauthorized access, tampering, and theft. On the other hand, private data sharing ensures that only authorized parties have access to the data and that the privacy of individuals whose data is being shared is protected.

Organizations need to implement various data security measures to achieve secure and private data sharing. These measures include data encryption, access control, and authentication. In addition to security measures, organizations must implement privacy-enhancing technologies to protect the privacy of individuals whose data is being shared. These technologies include data anonymization, pseudonymization, and differential privacy.
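Of the privacy-enhancing techniques mentioned above, pseudonymization is the simplest to sketch. One common approach replaces an identifier with a keyed hash (HMAC), so the same person always maps to the same token without the original value being exposed. The function name and the demo key below are hypothetical; in practice the key would need to be stored and managed securely.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash so records stay linkable but unreadable."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


key = b"demo-key-for-illustration-only"  # assumption: real keys live in a secrets store
token_a = pseudonymize("jane.doe@example.com", key)
token_b = pseudonymize("jane.doe@example.com", key)
token_c = pseudonymize("john.roe@example.com", key)

print(token_a == token_b)  # True: same input, same token
print(token_a == token_c)  # False: different people get different tokens
```

Note that this is pseudonymization rather than anonymization: anyone holding the key can recompute tokens for known identifiers, so the key itself must be protected.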

Businesses can benefit from secure and private data sharing in several ways:

Increased trust

Improved collaboration

Compliance with regulations

Competitive advantage

Reduced risk of data breaches

Better decision-making

5. Implement Secure Decentralized Access for Data Archive Management and Role Transition Optimization

Data archives contain large amounts of sensitive information, and protecting this data from unauthorized access and theft is essential. Additionally, role transitions, such as employee turnover or changes in job responsibilities, can pose a security risk if not managed properly. Secure decentralized access and role transition optimization ensure that data is protected and accessible only to authorized parties.

Secure decentralized access involves distributing access to data across multiple nodes or locations rather than relying on a centralized server or database. This approach reduces the risk of data breaches and ensures that data is accessible even if one node fails. Role transition optimization involves managing access to data during role transitions to minimize the risk of unauthorized access. This can include revoking access to specific data when an employee leaves the company or changes job responsibilities.

Implementing secure decentralized access and role transition optimization requires careful planning and implementation. Data must be encrypted, and access control mechanisms must be implemented to ensure that only authorized parties can access the data. Additionally, role transition policies and procedures must be established and enforced to ensure data remains secure during role transitions.
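The role transition step could look something like the following sketch, which recomputes a user's grants from the new role and reports what was revoked. The role names, dataset names, and the `ROLE_GRANTS` mapping are assumptions made up for this example.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical mapping of roles to the archive datasets they may access.
ROLE_GRANTS = {
    "finance_analyst": {"invoices", "payroll"},
    "support_agent": {"tickets"},
}


@dataclass
class User:
    name: str
    role: Optional[str]
    grants: set = field(default_factory=set)


def transition(user: User, new_role: Optional[str]) -> set:
    """Move a user to new_role (None means the user has left).

    Returns the set of datasets whose access was revoked.
    """
    new_grants = set(ROLE_GRANTS.get(new_role, ()))
    revoked = user.grants - new_grants
    user.role = new_role
    user.grants = new_grants
    return revoked
```

Deriving grants from the role on every transition, rather than editing them by hand, is one way to make sure no access from the previous role lingers.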

Additionally, tracking and auditing data access and use is essential to data management. It involves monitoring and recording all activities related to accessing, using, and modifying data. Tracking and auditing aim to ensure that data is being used appropriately and in line with relevant policies and regulations, and to identify and address any potential security risks or breaches.

Implementing tracking and auditing of data access and use requires appropriate systems and processes to be in place. These may include access controls, logging mechanisms, and tools for analyzing access logs. It is also essential to establish clear policies and procedures for monitoring and auditing data access, including who is responsible for monitoring, how data access logs are reviewed, and how identified issues are addressed.
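A logging mechanism and a simple log review might be sketched as below. The event fields, user names, and resource paths are hypothetical, and a production system would write to tamper-resistant storage rather than an in-memory list.

```python
import json
from datetime import datetime, timezone


def audit_event(user: str, action: str, resource: str, allowed: bool) -> str:
    """Build one JSON audit-log line for a data access attempt."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "resource": resource,
        "allowed": allowed,
    })


def denied_events(log_lines):
    """Return the parsed events where access was denied, for review."""
    events = (json.loads(line) for line in log_lines)
    return [event for event in events if not event["allowed"]]


# Hypothetical access attempts.
log = [
    audit_event("asha", "read", "archive/2021/invoices", True),
    audit_event("ravi", "delete", "archive/2021/invoices", False),
]
flagged = denied_events(log)
```

Here `flagged` holds the one denied attempt, which is the kind of entry a reviewer would follow up on under the monitoring policy.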

6. Leverage Automation for Improved Efficiency

Automation tools and technologies can help businesses to streamline data processing and analysis, reduce manual effort, and improve accuracy and consistency. One way in which automation can improve efficiency is by automating data entry and processing tasks. For example, businesses can use Optical Character Recognition (OCR) technology to scan and digitize paper documents, such as invoices or purchase orders. This eliminates manual entry and ensures data is entered accurately and consistently.

Another way in which automation can improve efficiency is by automating data analysis tasks. Businesses can use machine learning algorithms or business intelligence software to analyze data automatically and identify patterns or insights. This can help businesses quickly identify trends or opportunities and make informed decisions based on data-driven insights.

Automation can also help businesses to improve data quality by reducing the risk of human error. For example, businesses can use data validation checks to automatically identify and correct errors in data, such as misspellings or incorrect formatting. This helps to ensure that data is accurate and consistent and reduces the risk of costly errors or mistakes.
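A data validation check of this kind might be sketched as follows. The record layout and rules are assumptions for illustration: formatting problems that can be corrected safely are fixed automatically, and anything else is reported rather than guessed at.

```python
import re

# Deliberately simple pattern for illustration; not a full email validator.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def clean_record(record: dict) -> tuple:
    """Normalize formatting and collect validation errors for one record."""
    cleaned = dict(record)
    errors = []

    # Fix formatting: collapse extra whitespace and use title case for names.
    cleaned["name"] = " ".join(str(record.get("name", "")).split()).title()

    # Fix formatting: trim and lowercase the email address.
    email = str(record.get("email", "")).strip().lower()
    cleaned["email"] = email

    # Flag what cannot be corrected automatically.
    if not EMAIL_RE.match(email):
        errors.append("invalid email")
    if not cleaned["name"]:
        errors.append("missing name")
    return cleaned, errors
```

For example, `clean_record({"name": "  jane   doe ", "email": " Jane@Example.COM "})` returns a record with the name `Jane Doe`, the email `jane@example.com`, and an empty error list.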

Furthermore, automation can also help businesses to improve collaboration and communication around data-related tasks. By using collaborative tools and workflows, businesses can automate the process of sharing and reviewing data, and ensure that all stakeholders have access to the latest and most accurate data.

7. Adopting Cloud Computing Technologies

Cloud computing enables businesses to store and process data in a scalable, secure, and cost-effective way. Software as a Service (SaaS) is a cloud-based delivery model in which software applications are hosted by a third-party provider and accessed over the internet. Here are some of the key benefits of adopting SaaS for data management:

Scalability:

SaaS applications are typically highly scalable, enabling businesses to easily add or remove users as needed. This allows businesses to quickly scale up or down their data management capabilities without costly infrastructure investments.

Cost savings:

SaaS applications are typically offered on a subscription basis, which allows businesses to pay only for the features they use. This can help businesses save on software licensing fees and hardware costs.

Accessibility:

SaaS applications can be accessed from any device with an internet connection, making it easy for employees to access data and collaborate on projects regardless of location.

Security:

SaaS providers typically offer robust security measures to protect against data breaches and cyber-attacks. This includes data encryption, multi-factor authentication, and other security measures that are often beyond the reach of small to medium-sized businesses.

Integration:

SaaS applications are often designed to integrate with other cloud-based services, which can help businesses to streamline their data management workflows and improve efficiency.

Secure Your Data Future with Vaultastic Solutions

Vaultastic is a SaaS cloud-based solution for secure data management and storage. It provides an innovative approach to ensure the safety and security of your data, offering a comprehensive range of features to protect it from unauthorized access, loss, or corruption. With Vaultastic, you can trust that your data is securely stored in the cloud with advanced encryption protocols and multi-factor authentication for added security.

Vaultastic also enables organizations to benefit from a best-practice data management strategy by providing flexible data management capabilities that are easy to deploy and scale. This includes backing up data with automatic, encrypted backups and storing it in multiple locations. Additionally, you can access granular data governance tools to protect sensitive information, while user-friendly dashboards allow quick and easy data usage monitoring.

With Vaultastic’s top-of-the-line security solutions, organizations can be confident that their sensitive information is secure and protected against threat actors. By embracing a best practice data management strategy, organizations can ensure that their data is not only secure but also accessible and managed in a way that is tailored towards their business needs. To learn more, click here.

The Importance of Data Retention in GDPR Compliance

In the aftermath of the General Data Protection Regulation (GDPR) going into effect on May 25, 2018, data management has been a top priority for businesses.

If you’ve been monitoring GDPR compliance updates as they unfold, you know that data is at the center of everything.

What does data retention have to do with the General Data Protection Regulation?

Everything.

Data retention is how a company manages and stores user or customer data. With the implementation of GDPR, data retention is more critical than ever.

If you’re still trying to figure out data retention and how it relates to GDPR data retention, you’ve come to the right place.

This blog post will explore everything you need to know about GDPR data retention and how to help your business meet those standards moving forward.

What is GDPR?

First, let’s start with the basics.

GDPR stands for General Data Protection Regulation, and it's a data protection regulation. The GDPR is designed to protect the personal data of individuals in the EU by regulating how companies can collect, store, and process data.

Specifically, the GDPR requires businesses to be more transparent about collecting and storing data and gives users more control over what data is collected and used.

The GDPR data retention policy mandates companies or businesses to employ specific security measures when collecting and storing customer data belonging to EU citizens.

It states that companies must protect user data and offer customers tools like the right to request the deletion of their data. The law also holds businesses accountable for data breaches that may result in stolen data.

Why is Data Retention Important for GDPR Compliance?

When businesses are considering their GDPR compliance strategy, one of the first things they need to do is assess the current data in their database. In other words, they need to conduct a data audit to understand what they are currently collecting and storing and how long they need to keep that data.

There are some clauses of the GDPR data retention policy that you should follow to be compliant with GDPR. It’s essential to keep data as long as you need it for your business, but it’s also crucial to know how long you need to store data to comply with GDPR.

For example, if you have a customer who placed an order six months ago and has an ongoing subscription, you should be able to access that information whenever you need it.

You should also have an easy way to access that data if someone from the government requests it. So you need a data retention policy that defines how long you keep such data.

Why is GDPR Compliance important to a company’s bottom line?

GDPR compliance is more than just having a good reputation. It’s also about protecting your company’s bottom line. In other words, GDPR compliance is crucial because it helps your company avoid hefty fines and meet customer expectations.

GDPR penalties are not to be taken lightly; they can be up to €20 million or 4% of your company’s annual revenue, whichever is greater. You could face severe consequences if your company doesn’t take GDPR seriously.
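The "whichever is greater" rule is simple arithmetic, sketched below. The function name is made up for this example, and the 4% is applied to total worldwide annual turnover for the preceding financial year, which is how the regulation phrases what the text above calls annual revenue. This is only the upper bound for the most serious infringements; actual fines are set case by case.

```python
def max_gdpr_fine(annual_turnover_eur: float) -> float:
    """Upper bound of the higher GDPR fine tier, in euros.

    The cap is EUR 20 million or 4% of worldwide annual turnover,
    whichever is greater.
    """
    return max(20_000_000, annual_turnover_eur * 4 / 100)


# A company with EUR 100 million turnover: 4% is 4 million, so the cap is 20 million.
small_cap = max_gdpr_fine(100_000_000)

# A company with EUR 1 billion turnover: 4% is 40 million, which exceeds 20 million.
large_cap = max_gdpr_fine(1_000_000_000)
```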

Also, your customers may take their business elsewhere if they don’t feel their data is secure.

Moreover, customers may be more likely to file a complaint against your company if they don’t feel their data is safe.

Data breaches can also have severe repercussions for your company’s bottom line.

How to Meet GDPR Data Retention Requirements

Given the ever-growing data volume, it appears challenging to securely and safely preserve data in a search-ready form to meet the GDPR data retention requirements.

A scalable cloud data archival solution can mitigate these challenges and help you retain your critical data with tools for on-demand access.

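The GDPR does not set a fixed retention period. Its storage limitation principle requires that personal data be kept no longer than necessary for the purposes for which it is processed, so there are no specific guidelines for how long you should retain each type of data.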

That’s because every company has different data types and retention requirements.

For example, if your company is focused on e-commerce, it may need to keep data like customer orders, payment information, and shipping information. If your company is focused on B2B services, it may need to keep data like contracts, invoices, and correspondence. No two companies are the same, so there is no one-size-fits-all solution for data retention.

But that doesn’t mean that there isn’t a solution made just for you.

Vaultastic’s new-age flexible cloud data archival and retention helps you comply with GDPR without the hassles or high costs.

Most industries have become increasingly data-driven in the past decade, and that trend is only accelerating.

In the coming years, businesses will trust their data with third parties more than ever. While this increased reliance on outside sources of information creates new growth opportunities, it also exposes businesses to a greater risk of a security breach or accidental data leak.

With so much confidential information at stake, companies need an effective solution for safeguarding their confidential data while enabling faster access when necessary.

To safeguard your company's confidential data from cyberattackers and accidental leaks, you should implement a secure digital storage solution with the right features, including compliance policies, document management software, encryption software, and data storage services.

Vaultastic’s agile cloud data archival can help you:

Capture and protect emails, files, and web content data automatically from a wide range of data sources.

Set up basic data retention policies that govern how long you should retain your data.

Access on-demand tools for ediscovery and extraction to help respond quickly to queries.

Create more complex retention policies with additional settings like legal hold.

Vaultastic can help you keep your data safe while keeping your costs down. In addition to that, it’s easy to use and integrates seamlessly with your existing systems.

Related: Vaultastic’s GDPR Shared responsibility model explains how Vaultastic helps you at each step.

Don’t spend another second thinking – give Vaultastic a chance to solve all your problems. Explore a 30-day free trial.

How Archiving Shields Data from Cyber Security Vulnerabilities

Cybercrime costs the world $6 trillion as per 2022 estimates. That’s more money than the GDP of a few countries.

Organizations must step up cybersecurity measures to protect their business-critical data, which could be critical to their survival.

43% of all cyber-attacks target small businesses, and even a single attack can have devastating effects.

A physically separate, secure archive/copy of your business-critical data is your best line of defense against cybersecurity vulnerabilities.

This post covers the importance of cyber security, the role of data archiving in cyber security, and how archiving can help.

What is Vulnerability in Cyber Security?

Cyber security is a blanket term used to describe the practices, processes, controls, programs, and tools used to protect your IT assets and tools.

A vulnerability in cyber security can be defined as a gap, loose end, or liability in your IT infrastructure that can serve as an entry point for attack vectors. You can also read these as “chinks in the armor.”

In an increasingly digitized world, as more data goes online, cybercriminals have more opportunities to try and exploit vulnerabilities in your cyber security to their advantage.

Cyber-attacks are ever-evolving in sophistication and volume, and hence it’s an ongoing challenge to maintain a robust cyber security system for your valuable data.

Different Types of Vulnerabilities in Cyber Security

As the threats evolve, so should cyber security measures.

Your organization can fall prey to many different types of vulnerabilities in cyber security, which can lead to system hijacks and data breaches. Some sources of these vulnerabilities are:

Unpatched or Outdated Software.

System Misconfiguration.

Malicious Insider Threats.

Missing, Improper, or Weak Authentication credentials.

Incomplete Authorisation policies.

Zero-day Vulnerabilities.

Missing or poor Data Encryption.

What is the Importance of Data Protection in Cyber Security?

Cyber security employs many methods, processes, tools, software, and other protective measures to protect your systems and your most valuable asset – your data.

Among these measures, robust data protection is a foundational line of defense against data theft, damage, and loss.

An independent, safe, tamper-proof repository of all your data insulates you against the impact of any cyber-attack.

And data protection contributes positively to business continuity, organization growth, and risk mitigation.

Telling customers about how you keep their critical data safe can build trust and reputation.

What role does Email Archiving play in Cyber Security?

Email, being the primary form of digital communication and data exchange in an organization, is one of the main targets of cybercriminals.

IDC says that business emails carry 60% of business-critical data. This could include trade secrets, company finances, product details, and many other types of confidential information.

Cybercriminals send phishing emails, Trojan horses, malware, viruses, and worms through email. These emails attempt to attack, steal, and destroy your data and cause your organization irreparable damage.

However, cyber security has a solution – email archiving.

Email archival storage in cyber security is a vital aspect of a robust security architecture.

Having a central, consolidated cloud repository carrying a physically separate copy of all your active and legacy email is one of the best ways to safeguard the data.

Related: Why Archive email

How Email Archiving Shields Data from Cyber Security Vulnerabilities

Archiving might sound similar to backup, but it is not. A fundamental difference is that email archiving captures data continuously, as it is generated and not after the fact, whereas a backup is periodic. This difference ensures that archiving captures and preserves ALL your data, irrespective of your users’ actions in their mailboxes.

Related: Difference between Email Archive and Backup

Other notable benefits of cloud-based email archiving:

Records Metadata

Archiving records metadata about each email (when, where, how, and by whom), making the archive a lot more valuable. You also have the freedom to decide how detailed you want your metadata to be.

Legal and Compliance readiness

Regulated industries, like healthcare and financial services, must have email and data archiving practices in place. A robust, search-ready email archive can support fast and accurate ediscovery of evidence to support legal and compliance readiness.

Excellent Email Management System

Archiving with self-service access can double up as an email storage management system. Your older emails are removed from the active mailboxes and available in safe archives via a self-service ediscovery application.

Role-based access for additional security

Amongst the top cyber security vulnerabilities is the human aspect. Not every employee needs access to archived data. Organizations must create a hierarchy granting varying levels of access to specific members of the organization. These could be auditors, compliance officers, or members of the legal teams.

Cyber Resilience

Data breaches can result in downtime, which can be very disruptive. Minimizing the visible surface area of data by moving infrequently used critical data to cold stores is a vital aspect of building cyber resilience. Leveraging cloud tools that support storage tiering with robust discovery tools can support your cybersecurity strategy without compromise.

Related: 5 Effective Data Management Strategies: A Guide to Selecting Email Backup, Archiving, or Journaling For your Enterprise

Email Archiving Solutions Must Be Secure

The email and data archiving solution you choose for your organization must be secure. It should encrypt all your data files and protect them from external attacks. The storage location should also be secure yet easy for you to access. The email archiving solution should also protect against internal threats by restricting access, monitoring access requests, and blocking the use of unauthorized tools and software.

Vaultastic is a next-generation platform that protects your business-critical data in the cloud using storage tiering and a multi-layered shared security model at 60% lower costs. Vaultastic is cloud-native on AWS, highly scalable, and seamlessly integrates with all major email service providers.

Final Thoughts

Is cyber security important? Yes. Irrespective of your organization's size, you have to invest in cyber security. But even that may not be enough. Even the most technically advanced cyber security systems can develop chinks over time. Maintaining a physically separate copy of your data using a cloud email archiving platform is one of the best defenses against relentless cyberattacks. It ensures business continuity and reduces data risk even if the primary system is compromised.

Why not give Vaultastic a spin? Sign up for a no-obligation 30 day free trial and experience new-age data protection.

How storage tiering enables long term email data retention at 60% reduced costs

Why is long-term data retention such a big challenge?

Here are some global statistics about the humongous growth of email data:

In actual numbers,

an average user’s mailbox grows by 4GB every year, and

each business user sends and receives a total of 160 emails a day (as of 2022. Source: Earthweb)

This is BIG data and a big problem too for any long-term data retention strategy.

Managing this high Volume, Velocity, and Variety of emails is challenging for an organization.

How do you cope with this as email boxes grow to their maximum limits on MS 365, MS Exchange, Google Workspace, or other email solutions?

Traditional responses to growing/filling mailboxes can be unproductive

Traditional email preservation methods create fragmentation and make it challenging to locate information on demand, on top of the management headaches and escalating costs.

Most cloud email services provide sufficiently large mailboxes suitable to start with but soon fill up.

To counteract this storage overflow, you may need to either purchase a higher plan with additional storage or clean up individual email stores – a task that can take away many productive hours.

Besides being Risky, Costly, and Clunky

Besides the loss of user productivity, there are other business impacts from email storage getting full:

Performance Degradation

Growing mailboxes may overload the email apps and slow down their performance.

Cost Implications

Mailboxes approaching their limits need more resources, either as expensive plan upgrades or the purchase of additional storage.

Data & Compliance Risks

To keep within quota limits, users typically download and delete data to create space in their mailboxes, making it harder for the organization to maintain a complete record of the information exchanged over email.

Hard to Locate information

Unless the organization has subscribed to an Archival service, the lack of a centralized store makes it very challenging to locate old emails when required as a reference or evidence during an audit, investigation, or litigation.

Related: Why Archive email

Storage tiering is a scalable solution for long term email data retention

Step 1: Capture a copy of all new emails in Vaultastic

Configure journaling from your mail system to ensure a copy of every email sent/received is automatically captured in tamper-proof vaults on Vaultastic. Learn more about how to configure journaling.

Step 2: Migrate historical data from live mailboxes to Vaultastic.

Migrate old data from the users’ mailboxes to Vaultastic using the Legacyflo app. Learn more.

Step 3: Reduce Email Storage using a retention/truncation policy on the live mailbox

Now that a copy of all old and new emails is available in Vaultastic, you can apply a retention policy on the live mailbox to keep it small. E.g., delete mail older than six months to restrict the growth of the live mailboxes.
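The logic of such a truncation policy can be sketched as below. This is not how any particular mail system or Vaultastic implements it; the message fields, the `archived` flag, and the 183-day approximation of six months are assumptions for illustration. The important safeguard is that a message is only removed from the live mailbox once an archived copy is known to exist.

```python
from datetime import date, timedelta


def plan_truncation(messages, today, keep_days=183):
    """Split messages into (keep, delete) lists for the live mailbox.

    A message is marked for deletion only if it is older than the
    cutoff AND already has an archived copy.
    """
    cutoff = today - timedelta(days=keep_days)
    keep, delete = [], []
    for msg in messages:
        if msg["received"] < cutoff and msg["archived"]:
            delete.append(msg)
        else:
            keep.append(msg)
    return keep, delete


# Hypothetical mailbox contents.
mailbox = [
    {"id": 1, "received": date(2023, 6, 1), "archived": True},
    {"id": 2, "received": date(2022, 1, 15), "archived": True},
    {"id": 3, "received": date(2022, 1, 15), "archived": False},
]
keep, delete = plan_truncation(mailbox, today=date(2023, 7, 1))
```

In this example only message 2 is deleted: message 1 is recent, and message 3 is old but has no archived copy yet, so it stays in the live mailbox.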

Remember, it costs more to store the email data on live mailboxes than in an archival store like Vaultastic, which works hard to optimize storage costs across the entire data life cycle.

Step 4: Access on demand

The users (and supervisors) can now access recent emails via the live mailboxes and all older emails from the Vaultastic Ediscovery console, quickly locating specific pieces of information and documents buried anywhere in the email store. Learn more about the ediscovery app.

Step 5: Further optimize costs by moving infrequently used and aging data to the Open Store

Leverage one more level of storage tiering with Vaultastic’s two stores, viz. the active store for frequently used recent email that is search ready and the Open Store for infrequently used aging data.

You can configure the automatic movement of aging data from the active to the Open Store. Learn more.

Benefits of storage tiering go beyond long-term data retention

Uniform Performance

With a consistently lean live mailbox, your users will experience consistent mail application performance.

Compliance Ready

A central store of email data enables you to respond to any data access request quickly and accurately.

Save 30-45%

By using tiered storage to maintain a lean live mailbox and leveraging Vaultastic's cost optimization strategies, you can optimize costs by up to 60%.

Peace of Mind

A central cloud repository of your email, protected with a robust shared security model, ensures that your data is safe, immutable, and secured against threats.

Conclusion – storage tiering enables long-term email data retention at 60% reduced costs

Using the storage tiering strategy, you benefit from a lean live mailbox, which ensures a consistent mail system performance and prevents cost escalation on your mail solution regarding plan upgrades or additional storage.

In addition, the central, immutable repository, now created on Vaultastic, minimizes data-related risks and makes it easy to discover data of any period on-demand.

By including the storage tiering internal to Vaultastic with your strategy, you can further compress costs to achieve up to 60% cost optimization for long-term data retention of email.

Why Is It Safe For Government Bodies To Keep Data On The Cloud?

Technology Paradigms in Public Service

The government is not only the biggest data creator, but the largest consumer and aggregator as well. Central and State governments have large amounts of data in the form of user records, public policies, schemes. Thus it becomes critically important that the government has a strong and robust IT backbone to undergo a smooth transformation in its form and deliver all the services on demand. Information and Communication Technology increases productivity and efficiency while making the government machinery accountable, transparent and people friendly. The Digital India program initiated by the government is targeted precisely at meeting these objectives.

Downtimes due to hardware failures, software misconfigurations, security breaches and data loss are something that not just impact the productivity of the government offices (and therefore the quality of service) but also its credibility. Also, often projects drag on due to inadequately resourced sites severely compromising the return on investment made in the information system by the government organisation.

The cloud as a platform for such information systems can remedy many of these shortcomings, while reducing costs and the need for upfront capital and increasing flexibility.

The Cloud

Cloud computing, commonly referred to as ‘the cloud’, is the delivery of services using computing resources over the internet. These services are classified as Software as a Service (SaaS), Platform as a Service (PaaS) and Infrastructure as a Service (IaaS). Cloud computing enables data storage, access to compute resources, data interlinking and data security on an on-demand, usage-based model, and optimizes the overall infrastructure cost and performance. All one needs to access the cloud is a working internet connection.

Data Security Challenges

Migration to cloud essentially means moving sensitive government data to a third party infrastructure. Ideally the government should own the cloud where the data is stored. But the cost and effort required for such an exercise can be daunting. The risks can be easily mitigated by a SA (Service Agreement), that mandates the service provider to share the control over the data.

No system connected to the internet is 100% secure. IT systems can be hacked, and malware, viruses, worms, and the like can be implanted, harming on-premise data. The challenge is not limited to software; an IT setup is also exposed to cyber attacks, physical damage (from an accident or natural calamity), or a manual security breach. In each case, the systems can be damaged to the point of being useless, dealing a heavy blow to the investment, besides the loss of valuable data. Cloud service providers reduce this risk by implementing a distributed infrastructure in which the data is backed up on multiple servers, together with a high level of physical and cyber security, thus ensuring adequate defence against system breakdowns or data loss.

In the cloud computing approach, the data must be organised based on impact levels. For example, non-sensitive or unclassified data, which has the lowest impact level, can be displayed on public-facing websites. As the sensitivity increases and the impact deepens, the data must be pushed to more secure storage. The United States has a dedicated program called the Federal Risk and Authorization Management Program (FedRAMP), designed specifically to protect cloud-based government data.

Why Cloud Is A Safe Bet?

Disaster Recovery

Cloud Disaster Recovery (cloud DR) is a business continuity strategy that involves storing and maintaining copies of records in the cloud as a security measure. The goal of cloud DR is to give an organization a way to recover data and/or fail over in the event of a natural or man-made disaster.

Image credit: TechTarget.com

Below are some of the effective ways of cloud DR:

Cloud service-level agreements

Service-level agreements (SLAs) help organizations define the terms and conditions before migrating data to a cloud. In case of an unwanted contingency, the organization can charge the service provider a proportionate penalty. This establishes accountability and is therefore the first step towards moving data to the cloud.

Failback and failover methods to cloud recovery

For continuity of business and services, the system should be able to fail over to a similar redundant site or link in case of a failure. Once the disaster is sufficiently addressed, the system fails back to the primary hardware and software. The failover and failback steps can be automated.
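Automated failover and failback can be pictured as a small state machine driven by health checks. The sketch below is a simplified illustration, not a description of any provider's mechanism; the class name, site names, and the rule of waiting for several consecutive healthy checks before failing back are all assumptions.

```python
class FailoverController:
    """Tracks which site should serve traffic, based on health checks."""

    def __init__(self, failback_after: int = 3):
        self.active = "primary"
        self.failback_after = failback_after  # healthy checks needed to fail back
        self._healthy_streak = 0

    def observe(self, primary_healthy: bool) -> str:
        """Record one health check of the primary site; return the active site."""
        if not primary_healthy:
            # Fail over immediately and reset the recovery counter.
            self._healthy_streak = 0
            self.active = "secondary"
        elif self.active == "secondary":
            # Primary looks healthy again; fail back only once it stays healthy.
            self._healthy_streak += 1
            if self._healthy_streak >= self.failback_after:
                self.active = "primary"
                self._healthy_streak = 0
        return self.active
```

Waiting for a streak of healthy checks before failing back avoids flapping between sites while the primary is still unstable.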

Choose the right service providers

Providers who follow industry policies and practices are worth the business transition. These providers ensure a functional backup site, so that the main application fails over safely and failing back to the main system is hassle-free.

Security at the user level

Cloud computing allows the administrator to set up users who can access the cloud and to define each user's level of access. This is possible because of the ‘Attribute Based Access Control’ (ABAC) methods of cloud applications. In ABAC, a user requests permission to perform an operation on a computing resource, and the request is granted or denied based on attributes of the user (such as the role the user performs in the organization), the resource, and the context of the request.
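An attribute-based access decision can be sketched in a few lines. The attribute names (`department`, `clearance`, `sensitivity`, `role`) and the two rules below are hypothetical; real cloud platforms express such policies in their own policy languages.

```python
def is_allowed(user: dict, resource: dict, action: str) -> bool:
    """Decide an access request from user and resource attributes."""
    same_department = user["department"] == resource["department"]
    if action == "read":
        # Readers need matching department and sufficient clearance.
        return same_department and user["clearance"] >= resource["sensitivity"]
    if action == "write":
        # Only records officers of the owning department may write.
        return same_department and user["role"] == "records_officer"
    # Deny any action the policy does not mention.
    return False


# Hypothetical user and resource.
clerk = {"department": "revenue", "role": "clerk", "clearance": 2}
land_record = {"department": "revenue", "sensitivity": 2}
```

With these values, `is_allowed(clerk, land_record, "read")` is granted while a `"write"` request is denied, since the clerk does not hold the records officer role.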

Security measures undertaken by the cloud providers

Cloud providers are competitive and keep the security of their cloud infrastructure top-notch. Some of the popular security features are firewalls, intrusion detection systems, and data-at-rest encryption. They invest heavily in application security, while also providing physical security, i.e., infrastructure, security staff, uninterrupted power and a temperature-controlled environment. The data is also stored redundantly, so even during regular maintenance the application keeps working and government services remain undisturbed.

Cloud Computing Enabled Governments are the future

A Government is smart, if it is focused on governance rather than the government itself.

Conventional view of the people towards government can undergo a big change when they see the government optimizing its functioning to serve citizens better. The government is seen to be taking the right steps in this direction by enabling “Public Cloud Policy” and related policies around “Data Privacy and Protection”. For example, The Government of Maharashtra is at the forefront creating an opportunity for public private partnerships for migrating the government data to the cloud. Under this policy, the government will ensure data is stored in India and maintained under the highest security standards.

To move quickly towards a future where service delivery to citizens is effective and transparent, the government must actively adopt the cloud as the underlying platform for IT-driven service delivery, so that it does not get caught up in the wasteful task of building, maintaining, and managing IT infrastructure.

Cloud computing infrastructure allows for building applications on top of it, without concern for the underlying IT infrastructure, and the ability to scale and expand with ease.

Key Components of Modern Enterprise Data Management

From being a mere by-product of actions to becoming the driving force behind business intelligence, data has come a long way and still has a long way to go. Today, it has become an integral part of all aspects of a business. Enterprises need to expertly manage their data and extract actionable information if they hope to thrive in their industry.

Enterprise data management (EDM) has become a core issue, and many businesses are giving it due importance. In this blog, we’ll delve into what enterprise data management is, why it is essential, and what its key elements are.

What is Enterprise Data Management (EDM)?

In simple terms, enterprise data management is the ability of an enterprise to gather, store, sort, and process data into information based on which the enterprise can make intelligent, high-value decisions that will work in its favor.

Many enterprises have access to a lot of data and even have the ability to store it, but managing it and extracting business intelligence from it is a different story. There are many key components of enterprise data management that you have to look into if you want to manage your data successfully.

Why is Enterprise Data Management Important for Business?

So, why is enterprise data management important? All future businesses will be using modern technologies like data science, artificial intelligence, machine learning, robotic process automation, etc. and data is the fuel that drives these technologies.

Think of data like a crystal ball that lets you see into the past, know the present, and predict the future of your business. And though it works like magic, data analysis is proven science that is changing the way business decisions are made.

Banking, one of the biggest industries in the world, is always averse to integrating anything new without testing. Yet the data analytics market in banking alone is projected to reach $62.1 billion by 2025, which shows that even an essential industry like banking trusts data.

The market of Big Data analytics in banking could rise to $62.10 billion by 2025. – Soccer Nurds

Enterprise data management is important because, according to Forbes, 80% of data today is unstructured, making analysis extremely difficult. Enterprise data management is the only way to turn unstructured, unpolished data into structured, usable data.

Key Components/Elements of Modern Enterprise Data Management

The key components of enterprise data management will help you understand the whole concept because data management on an enterprise level is more complex.

But by implementing enterprise data management at the early stage of your enterprise’s growth, you can ensure your enterprise data management strategy grows with your organization.

Here are the key components/elements of modern enterprise data management:

Extract, Transform & Load

Popularly known as ETL, Extract, Transform & Load is the process of extracting your data from a source that is not built for data analytics, transforming it into a consistent format, and loading it into storage where analytics can be performed. This key element of enterprise data management is important for moving data from its original source to a more analytics-friendly data warehouse.
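The three steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the CSV export, the column names, and the `orders` table are made up, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical export from an operational system (the "source").
SOURCE_CSV = """order_id,customer,amount
1, Alice ,120.50
2,bob,80
3,Alice,
"""


def extract(text: str) -> list[dict]:
    """Read raw rows from the source export."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Normalize names, convert amounts to numbers, drop rows without an amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue
        cleaned.append((int(row["order_id"]), row["customer"].strip().title(), float(amount)))
    return cleaned


def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Write the cleaned rows into the analytics store."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
load(transform(extract(SOURCE_CSV)), warehouse)
totals = warehouse.execute(
    "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
).fetchall()
```

Once the data is loaded, an analytical question such as "total order value per customer" becomes a single query against the warehouse rather than a manual pass over raw exports.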

Data Warehouses

Various types of data, historical and contemporary, come from multiple sources. This data is transferred from data silos and stored in a single location called a data warehouse. A data warehouse could be an on-premise or a cloud-based server that can store vast amounts of data.

Data warehouses are where data mining and processing are done. A data warehouse is another key component in enterprise data management as it is the central storage space, and many actions are performed in a data warehouse. Therefore, enterprises must choose the right type of data warehouse.

Master Data Management

Another key element of enterprise data management, master data management (MDM), is the process by which all of the business data is categorized, organized, centralized, and enriched, because most of this data comes from scattered data silos. MDM ensures the overall quality of the data is improved, and the final product is used for decision-making.

It is called master data because employees can use it to ensure uniformity in the rest of the data, so the analytics process runs smoothly. MDM helps apply modeling rules and remove duplicate data. MDM is a combination of processes, tools, and software.
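To make the idea of applying a modeling rule and removing duplicates concrete, here is a small Python sketch. The rule (records with the same email, ignoring case and spaces, describe the same customer) and the sample records are assumptions for illustration; real MDM tools use far richer matching.

```python
def normalize_email(email: str) -> str:
    """Modeling rule (assumed): emails match regardless of case or padding."""
    return email.strip().lower()


def build_master_records(records: list[dict]) -> list[dict]:
    """Merge records that share a normalized email into one master record."""
    master: dict[str, dict] = {}
    for record in records:
        key = normalize_email(record["email"])
        merged = master.setdefault(key, {"email": key})
        for field, value in record.items():
            if field != "email" and value and not merged.get(field):
                merged[field] = value  # keep the first non-empty value seen
    return list(master.values())


# Hypothetical records from two silos (CRM and billing).
crm = [{"email": "Priya@Example.com ", "name": "Priya Nair", "phone": ""}]
billing = [{"email": "priya@example.com", "name": "", "phone": "555-0100"}]
golden = build_master_records(crm + billing)
```

The two partial records collapse into a single master record that carries the name from one silo and the phone number from the other.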

Data Integration

Data integration is a process-driven component of enterprise data management. Each employee should have access to clean, high-quality data that is consistent and stored in a single location. That is exactly what data integration provides. Making the data uniform adds value to it and makes it possible for tools to extract actionable business intelligence.

There are many other benefits that data integration brings to enterprise data management, like the reduction in data and employee errors, better system collaboration, and saving precious time.

Data Governance

Without data governance, enterprises can never have successful MDM. Data governance is a set of processes, rules, and regulations that decide the flow of data in the organizational structure and the duties and responsibilities to be given to the employee. Data governance also accounts for the industry compliances and laws regarding data, and the interests of the users, customers, and stakeholders.

In practice, data governance is documented as a kind of governing constitution for the enterprise. It ensures that everyone in the organization understands their roles and responsibilities, so that employees work responsibly and ethically, data privacy is maintained, and the operational hierarchy and infrastructure are optimized. It is one of the most important enterprise data management components.

Data Security

If you ask what the key element of enterprise data management is, many experts would say it’s data security. Data is constantly under threat, and the threat does not always come from external sources like hackers and cybercriminals. Threats also occur in the form of corruption. Enterprises that are expert at managing data allot a large share of their budget to data security, because data mostly lives in software form and is vulnerable to breaches. Therefore, you need to have good cybersecurity, backups, and other contingencies in place. After all, data is your most valuable asset.

Important Takeaway

An enterprise data management system is not a singular concept or action. It is the amalgamation of all the critical components stated above. You must give each piece its due importance, and the pieces must work together for successful enterprise data management. Data has become a shortcut to success, and enterprises that know how to leverage it are leading their industries. Therefore, enterprise data management should be a core issue for all enterprises.

Related: Six signs of an effective enterprise data management strategy

Related: Five data management best practices

Vaultastic Has the Solution

Vaultastic’s enterprise data management solutions are what you need. We are data management experts and can provide you with software, tools, expertise, and services to help you master all the critical components of enterprise data management. Get in touch with us and even take a free trial to understand how Vaultastic can help your organization with robust enterprise data management.

Twelve most prominent challenges of Data Storage and Data Management

Data storage and data management are some of the biggest challenges modern businesses face today. Not primarily because of the amount of data they have to manage, but because they may not have the right software or tools to do this effectively.

Data's growing volume, variety, and velocity make effective data storage management a moving target. And traditional data management methods fall short.

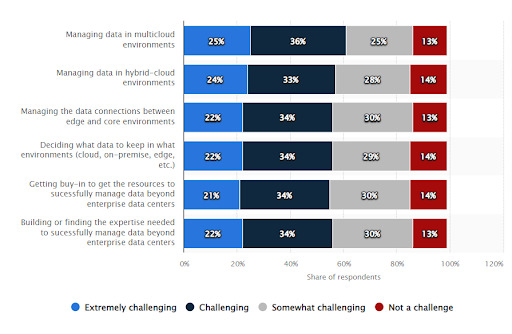

As seen in the Statista 2022 report above, more than 60% of the respondents find data management challenging along multiple dimensions.

Knowing about and understanding the challenges is the start of finding the right data management solution. Not all enterprises have the same challenges, but knowing about them will clarify the right solutions for your enterprise.

From our experience of deploying cloud data management solutions globally and from conversations with our customers, we’ve distilled the top data storage and data management challenges plaguing CIOs and IT teams:

Humongous Data Storage Volume

One challenge is the sheer volume of data that needs to be stored.

If you're trying to manage all your data manually using traditional storage devices, it can take a lot of time and effort and lead to data fragmentation and incompatible formats.

This data fragmentation and lack of data portability make it difficult for employees or stakeholders to search and access the information when needed, which can impact productivity and customer service.

The amount of data (especially unstructured data) generated in the world is increasing exponentially and shows no sign of slowing down. Managing this enormous volume, sorting through it, and storing it in different silos is one of the biggest challenges in data management.

Worldwide data is expected to hit 175 zettabytes by 2025, representing a 61% CAGR. (IDC, “Data Age 2025”)

The challenge of managing data is enormous. Enterprises must invest resources, talent, hardware, software, and tools to extract meaningful business intelligence. However, this business intelligence can do wonders for your enterprise if managed correctly.

Enterprises must assess whether their cloud service provider can meet their storage demands. Instead of just choosing a service provider for your current needs, select the one that can meet your enterprise's future needs. Also, implement sound data and email archival policies to manage vast data volume better.

Growing Data Types and Data Sources

Modern digitally-enabled organizations must store and manage data of different types originating from various sources.

Broadly these include structured and unstructured data, with the latter constituting about 80% of the total data volume.

And the data storage management conundrum spans departments such as finance, accounts, operations, customer service, HR, and more.

Each data type and source needs specific treatment while requiring an easy console to search and access this information.

Where should each data type be stored? In what format should data be stored? What is the data retention policy for each category? What is the disposal policy for aging or out-of-use data? What are the policies governing access to this data? Evolving a strategy and implementing a robust data storage and data management solution that can store, classify, and provide access to such diverse data over the long term is a complex and expensive task.
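One way to keep the answers to these questions explicit is to record them as a policy table per data category. The sketch below is only an illustration: the categories, storage names, retention periods, and departments are hypothetical values, not recommendations.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Policy:
    storage: str          # where the category is stored
    retention_days: int   # how long it is retained before disposal
    readers: frozenset    # which departments may access it


# Hypothetical categories and values, for illustration only.
POLICIES = {
    "invoice": Policy("archive", 7 * 365, frozenset({"finance", "audit"})),
    "support_chat": Policy("object_store", 2 * 365, frozenset({"customer_service"})),
}


def is_due_for_disposal(category: str, created: date, today: date) -> bool:
    """True when the item is older than its category's retention period."""
    policy = POLICIES[category]
    return today - created > timedelta(days=policy.retention_days)


def can_read(category: str, department: str) -> bool:
    """True when the department is listed as a reader for the category."""
    return department in POLICIES[category].readers
```

With the policies in one place, retention, disposal, and access decisions for each category come from the same source instead of being scattered across teams and tools.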

Too Many Data Storage Mediums

When your data is sourced from and stored in multiple sources like ERP, CRM, databases, internal servers, and the cloud, it becomes a challenge to go through, classify, process the data on a uniform platform, and use it for analytics and decision-making.

Enterprises need a robust data storage strategy and cannot afford to try every new data storage method that happens to be trending.

This problem can seriously compromise the quality of data and your ability to gather business intelligence from the data. And since every enterprise is tapping into the advantages of data-driven business intelligence, you cannot afford to be left behind.

In addition, compatibility issues may also crop up while using multiple storage mediums.

Legacy IT Architectures

Is your enterprise’s IT architecture able to store vast amounts of data, process it, and continue to scale with growing data needs?

Building an exemplary IT architecture takes time, effort, and a lot of capital, and because data is changing rapidly, keeping that architecture up to date is becoming more difficult by the day.

Thus, legacy IT architecture is one of the top challenges plaguing data storage and data management. And it is quite a costly one.

Cloud is one of the best solutions to this issue. But there are too many options in cloud platforms as well.

Data Corruption

Storage devices can malfunction and corrupt the data. Electronic devices can always fail, so this risk is always looming.

Recovering data from corrupted devices is another capital-intensive expense, and there are no guarantees that you will recover the data.

The only insurance against data corruption is strong backup, recovery, and data redundancy policies. That way, if your data gets corrupted, you still have a clean backup of the same data.
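A common way to notice corruption and fall back on a backup is to record a checksum while the data is known to be good and compare against it later. The Python sketch below assumes a single file with one backup copy; the file names and the `restore_if_corrupted` helper are hypothetical, and real backup products handle this at much larger scale.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Checksum of the file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def restore_if_corrupted(primary: Path, backup: Path, expected: str) -> bool:
    """Return True if the primary copy had to be restored from the backup."""
    if primary.exists() and sha256_of(primary) == expected:
        return False
    if sha256_of(backup) != expected:
        raise RuntimeError("backup is corrupted too; no clean copy available")
    shutil.copyfile(backup, primary)
    return True


# Set up a file and its backup in a temporary directory.
workdir = Path(tempfile.mkdtemp())
primary, backup = workdir / "ledger.csv", workdir / "ledger.bak"
primary.write_bytes(b"id,amount\n1,100\n")
shutil.copyfile(primary, backup)
checksum = sha256_of(primary)  # recorded when the data is known to be good

primary.write_bytes(b"id,amount\n1,1\x00\x00\n")  # simulate corruption
restored = restore_if_corrupted(primary, backup, checksum)
```

The check also covers the case where the backup itself has gone bad, which is why redundancy across more than one copy matters.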