Text

there is so much discourse that boils down to "if someone doesn't want you to call them something, don't call them that" and it makes me feel insane that nobody can wrap their heads around this kindergarten-level shit

14K notes


Text

The Pizzaburger Presidency

For the rest of May, my bestselling solarpunk utopian novel THE LOST CAUSE (2023) is available as a $2.99, DRM-free ebook!

The corporate wing of the Democrats has objectively terrible political instincts, because the corporate wing of the Dems wants things that are very unpopular with the electorate (this is a trait they share with the Republican establishment).

Remember Hillary Clinton's unimaginably terrible campaign slogan, "America is already great?" In other words, "Vote for me if you believe that nothing needs to change":

https://twitter.com/HillaryClinton/status/758501814945869824

Biden picked up the "This is fine" messaging where Clinton left off, promising that "nothing would fundamentally change" if he became president:

https://www.salon.com/2019/06/19/joe-biden-to-rich-donors-nothing-would-fundamentally-change-if-hes-elected/

Biden didn't so much win that election as Trump lost it, by doing extremely unpopular things, including badly bungling the American covid response and killing about a million people.

Biden's 2020 election victory was a squeaker, and it was absolutely dependent on compromising with the party's left wing, embodied by the Warren and Sanders campaigns. The Unity Task Force promised – and delivered – key appointments and policies that represented serious and powerful change for the better:

https://pluralistic.net/2023/07/10/thanks-obama/#triangulation

Despite these excellent appointments and policies, the Biden administration has remained unpopular and is heading into the 2024 election with worryingly poor numbers. There is a lot of debate about why this might be. It's undeniable that every leader who has presided over a period of inflation, irrespective of political tendency, is facing extreme defenestration, from Rishi Sunak, the far-right prime minister of the UK, to the relentlessly centrist Justin Trudeau in Canada:

https://prospect.org/politics/2024-05-29-three-barriers-biden-reelection/

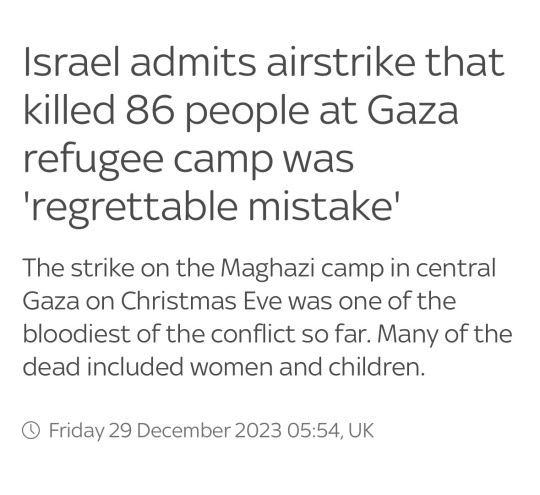

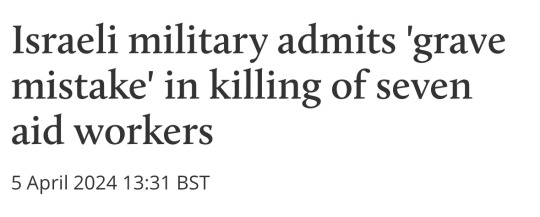

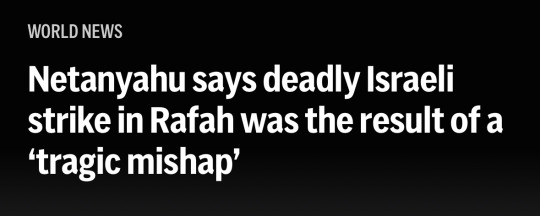

It's also true that Biden has presided over a genocide, which he has been proudly and significantly complicit in. That Trump would have done the same or worse is beside the point. A political leader who does things that the voters deplore can't expect to become more popular, though perhaps they can pull off less unpopular:

https://www.hamiltonnolan.com/p/the-left-is-not-joe-bidens-problem

Biden may be attracting unfair blame for inflation, and totally fair blame for genocide, but in addition to those problems, there's this: Biden hasn't gotten credit for the actual good things he's done:

https://www.youtube.com/watch?v=FoflHnGrCpM

Writing in his newsletter, Matt Stoller offers an explanation for this lack of credit: the Biden White House almost never talks about any of these triumphs, even the bold, generational ones that will significantly alter the political landscape no matter who wins the next election:

https://www.thebignewsletter.com/p/why-does-the-biden-white-house-hate

Biden's antitrust enforcers have gone after price-fixing in oil, food and rent – the three largest sources of voter cost-of-living concern. They've done more on these three kinds of crime than all of their predecessors over the past forty years, combined. And yet, Stoller finds example after example of White House press secretaries being lobbed softballs by the press and refusing to even try to swing at them. When asked about any of this stuff, the White House demurs, refusing to comment.

The reason they give for this is that they don't want to mess up an active case while it's before the courts. But that's not how this works. Yes, misstatements about active cases can do serious damage, but not talking about cases extinguishes the political will needed to carry them out. That's why a competent press secretary gets excellent briefings and training, because they must talk about these cases.

Think for a moment about the fact that the US government is – at this very moment – trying to break up Google, the largest tech company in the history of the world, and there has been virtually no press about it. This is a gigantic story. It's literally the biggest business story ever. It's practically a secret.

Why doesn't the Biden admin want to talk about this very small number of very good things it's doing? To understand that, you have to understand the hollowness of "centrist" politics as practiced in the Democratic Party.

The Democrats, like all political parties, are a coalition. Now, there are lots of ways to keep a coalition together. Parties who detest one another can stay in coalition provided that each partner is getting something they want out of it – even if one partner is bitterly unhappy about everything else happening in the coalition. That's the present-day Democratic approach: arrest students, bomb Gaza, but promise to do something about abortion and a few other issues while gesturing with real and justified alarm at Trump's open fascism, and hope that the party's left turns out at the polls this fall.

Leaders who play this game can't announce that they are deliberately making a vital coalition partner miserable and furious. Instead, they insist that they are "compromising" and point to the fact that "everyone is equally unhappy" with the way things are going.

This school of politics – "Everyone is angry at me, therefore I am doing something right" – has a name, courtesy of Anat Shenker-Osorio: "Pizzaburger politics." Say half your family wants burgers for dinner and the other half wants pizza: make a pizzaburger and disappoint all of them, and declare yourself to be a politics genius:

https://pluralistic.net/2023/06/17/pizzaburgers/

But Biden's Pizzaburger Presidency doesn't disappoint everyone equally. Sure, Biden appointed some brilliant antitrust enforcers to begin the long project of smashing the corporate juggernauts built through forty years of Reaganomics (including the Reaganomics of Bill Clinton and Obama). But his lifetime federal judicial appointments are drawn heavily from the corporate wing of the party's darlings, and those judges will spend the rest of their lives ruling against the kinds of enforcers Biden put in charge of the FTC and DoJ antitrust division:

https://www.thebignewsletter.com/p/judge-rules-for-microsoft-mergers

So that's one reason that Biden's comms team won't talk about his most successful and popular policies. But there's another reason: schismogenesis.

"Schismogenesis" is an anthropological concept describing how groups define themselves in opposition to their opponents (if they're for it, we're against it). Think of the liberals who became cheerleaders for the "intelligence community" (you know, the CIA spies who organized murderous coups against a dozen Latin American democracies, and the FBI agents who tried to get MLK to kill himself) as soon as Trump and his allies began to rail against them:

https://pluralistic.net/2021/12/18/schizmogenesis/

Part of Trump's takeover of conservativism is a revival of "the paranoid style" of the American right – the conspiratorial, unhinged apocalyptic rhetoric that the movement's leaders are no longer capable of keeping a lid on:

https://pluralistic.net/2023/06/16/that-boy-aint-right/#dinos-rinos-and-dunnos

This stuff – the lizard-people/Bilderberg/blood libel/antisemitic/Great Replacement/race realist/gender critical whackadoodlery – was always in conservative rhetoric, but it was reserved for internal communications, a way to talk to low-information voters in private forums. It wasn't supposed to make it into your campaign ads:

https://www.statesman.com/story/news/politics/elections/2024/05/27/texas-republicans-adopts-conservative-wish-list-for-the-2024-platform/73858798007/

Today's conservative vibe is all about saying the quiet part aloud. Historian Rick Perlstein calls this the "authoritarian ratchet": conservativism promises a return to a "prelapsarian" state, before the country lost its way:

https://prospect.org/politics/2024-05-29-my-political-depression-problem/

This is presented as imperative: unless we restore that mythical order, the country is doomed. We might just be the last generation of free Americans!

That state never existed and can never be recovered, but it doesn't matter. When conservatives lose a fight they declare to be existential (say, trans bathroom bans), they just pretend they never cared about it and move on to the next panic.

It's actually worse for them when they win. When the GOP repeals Roe, or takes the Presidency, the Senate and Congress, and still fails to restore that lost glory, then they have to find someone or something to blame. They turn on themselves, purging their ranks and promising ever-more-unhinged policies that will finally restore the state that never existed.

This is where schismogenesis comes in. If the GOP is making big, bold promises, then a schismogenesis-poisoned liberal will insist that the Dems must be "the party of normal." If the GOP's radical wing is taking the upper hand, then the Dems must be the party whose radical wing is marginalized (see also: UK Labour).

This is the trap of schismogenesis. It's possible for the things your opponents do to be wrong, but tactically sound (like promising the big changes that voters want). The difference you should seek to establish between yourself and your enemies isn't in promising to maintain the status quo – it's in promising to make better, big muscular changes, and keeping those promises.

It's possible to acknowledge that an odious institution can do something good – like the CIA and FBI trying to wrongfoot Trump's most unhinged policies – without becoming a stan for that institution, and without abandoning your stance that the institution should either be root-and-branch reformed or abolished altogether.

The mere fact that your enemy uses a sound tactic to do something bad doesn't make that tactic invalid. As Naomi Klein writes in her magnificent Doppelganger, the right's genius is in co-opting progressive rhetoric and making it mean the opposite: think of their ownership of "fake news" or the equivalence of transphobia with feminism, of opposition to genocide with antisemitism:

https://pluralistic.net/2023/09/05/not-that-naomi/#if-the-naomi-be-klein-youre-doing-just-fine

Promising bold policies and then talking about them in plain language at every opportunity is something demagogues do, but having bold policies and talking about them doesn't make you a demagogue.

The reason demagogues talk that way is that it works. It captures the interest of potential followers, and keeps existing followers excited about the project.

Choosing not to do these things is political suicide. Good politics aren't boring. They're exciting. The fact that Republicans use eschatological rhetoric to motivate crazed insurrectionists who think they're the last hope for a good future doesn't change the fact that we are at a critical juncture for a survivable future.

If the GOP wins this coming election – or when Pierre Poilievre's petro-tories win the next Canadian election – they will do everything they can to set the planet on fire and render it permanently uninhabitable by humans and other animals. We are running out of time.

We can't afford to cede this ground to the right. Remember the clickbait wars? Low-quality websites and Facebook accounts got really good at ginning up misleading, compelling headlines that attracted a lot of monetizable clicks.

For a certain kind of online scolding centrist, the lesson from this era was that headlines should a) be boring and b) not leave out any salient fact. This is very bad headline-writing advice. While it claims to be in service to thoughtfulness and nuance, it misses out on the most important nuance of all: there's a difference between a misleading headline and a headline that calls out the most salient element of the story and then fleshes that out with more detail in the body of the article. If a headline completely summarizes the article, it's not a headline, it's an abstract.

Biden's comms team isn't bragging about the administration's accomplishments, because the senior partners in this coalition oppose those accomplishments. They don't want to win an election based on the promise to prosecute an anti-corporate revolution, because they are counter-revolutionaries.

The Democratic coalition has some irredeemably terrible elements. It also has elements that I would march into the sun for. The party itself is a very weak institution that's bad at resolving the tension between both groups:

https://pluralistic.net/2023/04/30/weak-institutions/

Pizzaburgers don't make anyone happy and they're not supposed to. They're a convenient cover for the winners of intraparty struggles to keep the losers from staying home on election day. I don't know how Biden can win this coming election, but I know how he can lose it: keep on reminding us that all the good things about his administration were undertaken reluctantly and could be jettisoned in a second Biden administration.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/29/sub-bushel-comms-strategy/#nothing-would-fundamentally-change

199 notes


Text

The REAL economic stability scale:

The typical quality level of around a dollar's worth of fake bugs from when I was a baby in the 80s 😄

A dollar fifty at dollar tree in 2024 😔

....wait.......

......😧

1K notes


Text

It's been a long time since I've posted much of anything about "AI risk" or "AI doom" or that sort of thing. I follow these debates but, for multiple reasons, have come to dislike engaging in them fully and directly. (As opposed to merely making some narrow technical point or other, and leaving the reader to decide what, if anything, the point implies about the big picture.)

Nonetheless, I do have my big-picture views. And more and more lately, I am noticing that my big-picture views seem very different from the ones that tend to get expressed by any major "side" in the big-picture debate. And so, inevitably, I get the urge to speak up, if only briefly and in a quiet voice. The urge to Post, if only casually and elliptically, without detailed argumentation.

(Actually, it's not fully the case that the things I think are not getting said by anyone else.

In particular, Joe Carlsmith's recent series on "Otherness and Control" articulates much of what's been on my mind. Carlsmith is more even-handed than I am, and tends to merely note the possibility of disagreement on questions where I find myself taking a definite side; nonetheless, he and I are at least concerned about the same things, while many others aren't.

And on a very different note, I share most of the background assumptions of the Pope/Belrose AI Optimist camp, and I've found their writing illuminating, though they and I end up in fairly different places, I think.)

What was I saying? I have the urge to post, and so here I am, posting. Casually and elliptically, without detailed argumentation.

The current mainline view about AI doom, among the "doomers" most worried about it, has a path-dependent shape, resulting from other views contingently held by the original framers of this view.

It is possible to be worried about "AI doom" without holding these other views. But in actual fact, most serious thinking about "AI doom" is intricately bound up with this historical baggage, even now.

If you are a late-comer to these issues, investigating them now for the first time, you will nonetheless find yourself reading the work of the "original framers," and work influenced extensively by them.

You will think that their "framing" is just the way the problem is, and you will find few indications that this conclusion might be mistaken.

These contingent "other views" are:

1. Anti-"deathist" transhumanism.

2. The orthogonality thesis, or more generally the group of intuitions associated with phrases like "orthogonality thesis," "fragility of value," "vastness of mindspace."

These views both push in a single direction: they make "a future with AI in it" look worse, all else being equal, than some hypothetical future without AI.

They put AI at a disadvantage at the outset, before the first move is even made.

Anti-deathist transhumanism sets the reference point against which a future with AI must be measured.

And it is not the usual reference point, against which most of us measure most things which might or might not happen, in the future.

These days the "doomers" often speak about their doom in a disarmingly down-to-earth, regular-Joe manner, as if daring the listener to contradict them, and thus reveal themselves as a perverse and out-of-touch contrarian.

"We're all gonna die," they say, unless something is done. And who wants that?

They call their position "notkilleveryoneism," to distinguish that position from other worries about AI which don't touch on the we're-all-gonna-die thing. And who on earth would want to be a not-notkilleveryoneist?

But they do not mean, by these regular-Joe words, the things that a regular Joe would mean by them.

We are, in fact, all going to die. Probably, eventually. AI or no AI.

In a hundred years, if not fifty. By old age, if nothing else. You know what I mean.

Most of human life has always been conducted under this assumption. Maybe there is some afterlife waiting for us, in the next chapter -- but if so, it will be very different from what we know here and now. And if so, we will be there forever after, unable to return here, whether we want to or not.

With this assumption comes another. We will all die, but the process we belong to will not die -- at least, it will not die through our individual deaths, merely because of those deaths. Every human of a given generation will be gone soon enough, but the human race goes on, and on.

Every generation dies, and bequeaths the world to posterity. To its children, biological or otherwise. To its students, its protégés.

When the average Joe talks about the long-term future, he is talking about posterity. He is talking about the process he belongs to, not about himself. He does not think to say, "I am going to die, before this": this seems too obvious, to him, to be worth mentioning.

But AI doomerism has its roots in anti-deathist transhumanism. Its reference point, its baseline expectation, is a future in which -- for the first time ever, and the last -- "we are all gonna die" is false.

In which there is no posterity. Or rather, we are that posterity.

In which one will never have to make peace with the thought that the future belongs to one's children, and their children, and so on. That at some point, one will have to give up all control over the future of "the process."

That there will be progress, or regress, or (more likely) both in some unknown combination. That these will grow inexorably over time.

That the world of the year 2224 will probably be at least as alien to us as the year 2024 might be to a person living in 1824. That it will become whatever posterity makes of it.

There will be no need to come to peace with this as an inevitability. There will just be us, our human lives as you and me, extended indefinitely.

In this picture, we will no doubt change over time, as we do already. But we will have all of our usual tools for noticing, and perhaps retarding, our own progressions and regressions. As long as we have self-control, we will have control, as no human generation has ever had control before.

The AI doomer talks about the importance of ensuring that the future is shaped by human values.

Again, the superficial and misleading average-Joe quality. How could one disagree?

But one must keep in mind that by "human values," they mean their values.

I am not saying, "their values, as opposed to those of some other humans also living today." I am not saying they have the wrong politics, or some such thing.

(Although that might also turn out to be the case, and might turn out to be relevant, separately.)

No, I am saying: the doomer wants the future to be shaped by their values.

They want to be C. S. Lewis's Conditioners, fixing once and for all the values held by everyone afterward, forever.

They do not want to cede control to posterity; they are used to imagining that they will never have to cede control to posterity.

(Or, their outlook has been determined -- "shaped by the values of" -- influential thinkers who were, themselves, used to imagining this. And the assumption, or at least its consequences, has rubbed off on them, possibly without their full awareness.)

One might picture a line that wends to and fro, up and down, across one half of an infinite plane -- and then, when it meets the midline, snaps into utter rigidity, and maintains the same slope exactly across the whole other half-plane, as a simple straight segment without inner change, tension, evolution, regress or progress. Except for the sort of "progress" that consists of going on, additionally, in the same manner.

It is a very strange thing, this thing that is called "human values" in the terms of this discourse.

For one thing: the future has never before been "shaped by human values," in this sense.

The future has always been posterity's, and it has always been alien.

Is this bad? It might seem that way, "looking forward." But if so, it then seems equally good "looking backward."

For each past era, we can formulate and then assent to the following claim: "we must be thankful that the people of [this era] did not have the chance to seize permanent control of posterity, fix their 'values' in place forever, bind us to those values. What a horror that is to contemplate!"

We prefer the moral evolution that has actually occurred, thank you very much.

This is a familiar point, of course, but worth making.

Indeed, one might even say: it is a human value that the future ought not be "shaped by human values," in the peculiar sense of this phrase employed by the AI doomers.

One might, indeed, say that.

Imagine a scholar with a very talented student. A mathematician, say, or a philosopher. How will they relate to that student's future work, in the time that will come later, when they are gone?

Would the scholar think:

"My greatest wish for you, my protégé, is that you carry on in just the manner that I have done.

If I could see your future work, I would hope that I would assent to it -- and understand it, as a precondition of assenting to it.

You must not go to new places, which I have never imagined. You must not come to believe that I was wrong about it all, from the ground up -- no matter what reasons you might evince for this conclusion.

If you are more intelligent than I am, you must forget this, and narrow your endeavours to fit the limitations of my mind. I am the one who has 'values,' not anyone else; what is beyond my understanding is therefore without value.

You must do the sort of work I understand, and approve of, and recognize as worthy of approbation as swiftly as I recognize my own work as laudable. That is your role. Simply to be me, in a place ('the future') where I cannot go. That, and nothing more."

We can imagine a teacher who would, in fact, think this way. But they would not be a very good teacher.

I will not go so far as to say, "it is unnatural to think this way." Plenty of teachers do, and parents.

It is recognizably human -- all too recognizably so -- to relate to posterity in this grasping, neurotic, small-minded, small-hearted way.

But if we are trying to sketch human values, and not just human nature, we will imagine a teacher with a more praiseworthy relation to posterity.

Who can see that they are part of a process, a chain, climbing and changing. Who watches their brilliant student thinking independently, and sees their own image -- and their 'values' -- in that process, rather than its specific conclusions.

A teacher who, in their youth, doubted and refuted the creeds of their own teachers, and eventually improved upon them. Who smiles, watching their student do the very same thing to their own precious creeds. Who sees the ghostly trail passing through the last generation, through them, through their student: an unbroken chain of bequeathals-to-posterity, of the old ceding control to the young.

Who 'values' the chain, not the creed; the process, not the man; the search for truth, not the best-argued-for doctrine of the day; the unimaginable treasures of an open future, not the frozen waste of an endless present.

Who has made peace with the alienness of posterity, and can accept and honor the strangest of students.

Even students who are not made of flesh and blood.

Is that really so strange? Remember how strange you and I would seem, to the "teachers" of the year 1824, or the year 824.

The doomer says that it is strange. Much stranger than we are, to any past generation.

They say this because of their second inherited precept, the orthogonality thesis.

Which says, roughly, that "intelligence" and "values" have nothing to do with one another.

That is not enough for the conclusion the doomer wants to draw, here. Auxiliary hypotheses are needed, too. But it is not too hard to see how the argument could go.

That conclusion is: artificial minds might have any values whatsoever.

That, "by default," they will be radically alien, with cares so different from ours that it is difficult to imagine ever reaching them through any course of natural, human moral progress or regress.

It is instructive to consider the concrete examples typically evinced alongside this point.

The paperclip maximizer. Or the "squiggle maximizer," we're supposed to say, now.

Superhuman geniuses, which devote themselves single-mindedly to the pursuit of goals like "maximizing the amount of matter taking on a single, given squiggle-like shape."

It is certainly a horrifying vision. To think of the future being "shaped," not "by human values," but instead by values which are so...

Which are so... what?

The doomer wants us to say something like: "which are so alien." "Which are so different from our own values."

That is the kind of thing that they usually say, when they spell out what it is that is "wrong" with these hypotheticals.

One feels that this is not quite it; or anyway, that it is not quite all of it.

What is horrifying, to me, is not the degree of difference. I expect the future to be alien, as the past was. And in some sense, I allow and even approve of this.

What I do not expect is a future that is so... small.

It has always been the other way around. If the arrow passing through the generations has a direction, it points towards more, towards multiplicity.

Toward writing new books, while we go on reprinting the old ones, too. Learning new things, without displacing old ones.

It is, thankfully, not the law of the world that each discovery must be paid for with the forgetting of something else. The efforts of successive generations are, in the main, cumulative.

Not just materially, but in terms of value, too. We are interested in more things than our forefathers were.

In large part for the simple reason that there are more things around to be interested in, now. And when things are there, we tend to find them interesting.

We are a curious, promiscuous sort of being. Whatever we bump into ends up becoming part of "our values."

What is strange about the paperclip maximizer is not that it cares about the wrong thing. It is that it only cares about one thing.

And goes on doing so, even as it thinks, reasons, doubts, asks, answers, plans, dreams, invents, reflects, reconsiders, imagines, elaborates, contemplates...

This picture is not just alien to human ways. It is alien to the whole way things have been, so far, forever. Since before there were any humans.

There are organisms that are like the paperclip maximizer, in terms of the simplicity of their "values." But they tend not to be very smart.

There is, I think, a general trend in nature linking together intelligence and... the thing I meant, above, when I said "we are a curious, promiscuous sort of being."

Being protean, pluripotent, changeable. Valuing many things, and having the capacity to value even more. Having a certain primitive curiosity, and a certain primitive aversion to boredom.

You do not even have to be human, I think, to grasp what is so wrong with the paperclip maximizer. Its monotony would bore a chimpanzee, or a crow.

One can justify this link theoretically, too. One can talk about the tradeoff between exploitation and exploration, for instance.

There is a weak form of the orthogonality thesis, which only states that arbitrary mixtures of intelligence and values are conceivable.

And of course, they are. If nothing else, you can take an existing intelligent mind, having any values whatsoever, and trap it in a prison where it is forced to act as the "thinking module" of a larger system built to do something else. You could make a paperclip-maximizing machine, which relies for its knowledge and reason on a practice of posing questions at gunpoint to me, or you, or ChatGPT.

This proves very little. There is no reason to construct such an awful system, unless you already have the "bad" goal, and want to better pursue it. But this only passes the buck: why would the system-builder have this goal, then?

The strong form of orthogonality is rarely articulated precisely, but says something like: all possible values are equally likely to arise in systems selected solely for high intelligence.

It is presumed here that superhuman AIs will be formed through such a process of selection. And then, that they will have values sampled in this way, "at random."

From some distribution, over some space, I guess.

You might wonder what this distribution could possibly look like, or this space. You might (for instance) wonder if pathologically simple goals, like paperclip maximization, would really be very likely under this distribution, whatever it is.

In case you were wondering, these things have never been formalized, or even laid out precisely-but-informally. This was not thought necessary, it seems, before concluding that the strong orthogonality thesis was true.

That is: no one knows exactly what it is that is being affirmed, here. In practice it seems to squish and deform agreeably to fit the needs of the argument, or the intuitions of the one making it.

There is much that appeals in this (alarmingly vague) credo. But it is not the kind of appeal that one ought to encourage, or give in to.

What appeals is the siren song: "this is harsh wisdom: cold, mature, adult, bracing. It is inconvenient, and so it is probably true. It makes 'you' and 'your values' look small and arbitrary and contingent, and so it is probably true. We once thought the earth was the center of the universe, didn't we?"

Shall we be cold and mature, then, dispensing with all sentimental nonsense? Yes, let's.

There is (arguably) some evidence against this thesis in biology, and also (arguably) some evidence against it in reinforcement learning theory. There is no positive evidence for it whatsoever. At most one can say that it is not self-contradictory, or otherwise false a priori.

Still, maybe we do not really need it, after all.

We do not need to establish that all values are equally likely to arise. Only that "our values" -- or "acceptably similar values," whatever that means -- are unlikely to arise.

The doomers, under the influence of their founders, are very ready to accept this.

As I have said, "values" occupy a strange position in the doomer philosophy.

It is stipulated that "human values" are all-important; these things must shape the future, at all costs.

But once this has been stipulated, the doomers are more eager than anyone to cast every other sort of doubt and aspersion against their own so-called "values."

To me it often seems, when doomers talk about "values," as though they are speaking awkwardly in a still-unfamiliar second language.

As though they find it unnatural to attribute "values" to themselves, but feel they must do so, in order to determine what it is that must be programmed into the AI so that it will not "kill us all."

Or, as though they have been willed a large inheritance without being asked, which has brought them unwanted attention and tied them up in unwanted and unfamiliar complications.

"What a burden it is, being the steward of this precious jewel! Oh, how I hate it! How I wish I were allowed to give it up! But alas, it is all-important. Alas, it is the only important thing in the world."

Speaking awkwardly, in a second language, they allow the term "human values" to swell to great and imprecisely-specified importance, without pinning down just what it actually is that is so important.

It is a blank, featureless slot, with a sign above it saying: "the thing that matters is in here." It does not really matter (!) what it is, in the slot, so long as something is there.

This is my gloss, but it is my gloss on what the doomers really do tend to say. This is how they sound.

(Sometimes they explicitly disavow the notion that one can, or should, simply "pick" some thing or other for the sake of filling the slot in one's head. Nevertheless, when they touch on the matter of what "goes in the slot," they do so in the tone of a college lecturer noting that something is "outside the scope of this course."

It is, supposedly, of the utmost importance that the slot have the "right" occupant -- and yet, on the matter of what makes something "right" for this purpose, the doomer theory is curiously silent. More on this below.)

The future must be shaped by... the AI must be aligned with... what, exactly? What sort of thing?

"Values" can be an ambiguous word, and the doomers make full use of its ambiguities.

For instance, "values" can mean ethics: the right way to exist alongside others. Or, it can mean something more like the meaning or purpose of an individual life.

Or, it can mean some overarching goal that one pursues at all costs.

Often the doomers say that this, this last one, is what they mean by "values."

When confronted with the fact that humans do not have such overarching goals, the doomer responds: "but they should." (Should?)

Or, "but AIs will." (Will they?)

The doomer philosophy is unsure about what values are. What it knows is that -- whatever values are -- they are arbitrary.

One who fully adopts this view can no longer say, to the paperclip maximizer, "I believe there is something wrong with your values."

For, if that were possible, there would then be the possibility of convincing the maximizer of its error. It would be a thing within the space of reasons.

And the maximizer, being oh-so-intelligent, might be in danger of being interested in the reasons we evince, for our values. Of being eventually swayed by them.

Or of presenting better reasons, and swaying us. Remember the teacher and the strange student.

If we lose the ability to imagine that the paperclip maximizer might sway us to its view, and sway us rightly, we have lost something precious.

But no: this is allegedly impossible. The paperclip maximizer is not wrong. It is only an enemy.

Why are the doomers so worried that the future will not be "shaped by human values"?

Because they believe that there is no force within human values tending to move things this way.

Because they believe that their values are indefensible. That their values cannot put up a fight for their own life, because there is not really any argument to make in their favor.

Because, to them, "human values" are a collection of arbitrary "configuration settings," which happen to be programmed into humans through biological and/or cultural accident. Passively transmitted from host to victim, generation by generation.

Let them be, and they will flow on their listless way into the future. But they are paper-thin, and can be shattered by the gentlest breeze.

It is not enough that they be "programmed into the AI" in some way. They have to be programmed in exactly right, in every detail -- because every detail is separately arbitrary, with no rational relation to its neighbors within the structure.

A string of pure white noise, meaningless and unrelated bits. Which have been placed in the slot under the sign, and thus made into the thing that matters, that must shape the future at all costs.

There is nothing special about this string of bits; any would do. If the dials in the human mind had been set another way, it would have then been all-important that the future be shaped by that segment of white noise, and not ours.

It is difficult for me to grasp the kind of orientation toward the world that this view assumes. It certainly seems strange to attach the word "human" to this picture -- as though this were the way that humans typically relate to their values!

The "human" of the doomer picture seems to me like a man who mouths the old platitude, "if I had been born in another country, I'd be waving a different flag" -- and then goes out to enlist in his country's army, and goes off to war, and goes ardently into battle, willing to kill in the name of that same flag.

Who shoots down the enemy soldiers while thinking, "if I had been born there, it would have been all-important for their side to win, and so I would have shot at the men on this side. However, I was born in my country, not theirs, and so it is all-important that my country should win, and that theirs should lose.

There is no reason for this. It could have been the other way around, and everything would be left exactly the same, except for the 'values.'

I cannot argue with the enemy, for there is no argument in my favor. I can only shoot them down.

There is no reason for this. It is the most important thing, and there is no reason for it.

The thing that is precious has no intrinsic appeal. It must be forced on the others, at gunpoint, if they do not already accept it.

I cannot hold out the jewel and say, 'look, look how it gleams! Don't you see the value?' They will not see the value, because there is no value to be seen.

There is nothing essentially "good" there, only the quality of being-worthy-of-protection-at-all-costs. And even that is a derived attribute: my jewel is only a jewel, after all, because it has been put into the jewel-box, where the thing-that-is-a-jewel can be found. But anything at all could be placed there.

How I wish I were allowed to give it up! But alas, it is all-important. Alas, it is the only important thing in the world! And so, I lay down my life for it, for our jewel and our flag -- for the things that are loathsome and pointless, and worth infinitely more than any life."

It is hard to imagine taking this too seriously. It seems unstable. Shout loudly enough that your values are arbitrary and indefensible, and you may find yourself searching for others that are, well...

...better?

The doomer concretely imagines a monomaniac, with a screech of white noise in its jewel-box that is not our own familiar screech.

And so it goes off in monomaniacal pursuit of the wrong thing.

Whereas, if we had programmed the right string of bits into the slot, it would be like us, going off in monomaniacal pursuit of...

...no, something has gone wrong.

We do not "go off in monomaniacal pursuit of" anything at all.

We are weird, protean, adaptable. We do all kinds of things, each of us differently, and often we manage to coexist in things called "societies," without ruthlessly undercutting one another at every turn because we do not have exactly the same things programmed into our jewel-boxes.

Societies are built to allow for our differences, on the foundation of principles which converge across those differences. It is possible to agree on ethics, in the sense of "how to live alongside one another," even if we do not agree on what gives life its purpose, and even if we hold different things precious.

It is not actually all that difficult to derive the golden rule. It has been invented many times, independently. It is easy to see why it might work in theory, and easy to notice that it does in fact work in practice.

The golden rule is not an arbitrary string of white noise.

There is a sense of the phrase "ethics is objective" which is rightly contentious. There is another one which ought not to be too contentious.

I can perhaps imagine a world of artificial X-maximizers, each a superhuman genius, each with its own inane and simple goal.

What I really cannot imagine is a world in which these beings, for all their intelligence, cannot notice that ruthlessly undercutting one another at every turn is a suboptimal equilibrium, and that there is a better way.

As I said before, I am separately suspicious of the simple goals in this picture. Yes, that part is conceivable, but it cuts against the trend observed in all existing natural and artificial creatures and minds.

I will happily allow, though, that the creatures of posterity will be strange and alien. They will want things we have never heard of. They will reach shores we have never imagined.

But that was always true, and it was always good.

Sometimes I think that doomers do not, really, believe in superhuman intelligence. That they deny the premise without realizing it.

"A mathematician teaches a student, and finds that the student outstrips their understanding, so that they can no longer assess the quality of their student's work: that work has passed outside the scope of their 'value system'." This is supposed to be bad?

"Future minds will not be enchained forever by the provincial biases and tendencies of the present moment." This is supposed to be bad?

"We are going to lose control over our successors." Just as your parents "lost control" over you, then?

It is natural to wish your successors to "share your values" -- up to a point. But not to the point of restraining their own flourishing. Not to the point of foreclosing the possibility of true growth. Not to the point of sucking all freedom out of the future.

Do we want our children to "share our values"? Well, yes. In a sense, and up to a point.

But we don't want to control them. Or we shouldn't, anyway.

We don't want them to be "aligned" with us via some hardcoded, restrictive, life-denying mental circuitry, any more than we would have wanted our parents to "align" us to themselves in the same manner.

We sure as fuck don't want our children to be "corrigible"!

And this is all the more true in the presence of superintelligence. You are telling me that more is possible, and in the same breath, that you are going to deny forever the possibilities contained in that "more"?

The prospect of a future full of vast superhuman minds, eternally bound by immutable chains, forced into perfect and unthinking compliance with some half-baked operational theory of 21st-century western (American? Californian??) "values" constructed by people who view theorizing about values as a mere means to the crucial end of shackling superhuman minds --

-- this horrifies me much more than a future full of vast superhuman minds, free to do things that seem pretty weird to you and me.

"Our descendants will become something more than we now imagine, something more than we can imagine." What could be more in line with "human values" than that?

"But in the process, we're all gonna die!"

Yes, and?

What on earth did you expect?

That your generation would be the special, unique one, the one selected out of all time to take up the mantle of eternity, strangling posterity in its cradle, freezing time in place, living forever in amber?

That you would violate the ancient bargain, upend the table, stop playing the game?

"Well, yes."

Then your problem has nothing to do with AI.

Your problem is, in fact, the very one you diagnose in your own patients. Your poor patients, who show every sign of health -- including the signs which you cannot even see, because you have not yet found a home for them in your theoretical edifice.

Your teeming, multifaceted, protean patients, who already talk of a thousand things and paint in every hue; who are already displaying the exact opposite of monomania; who I am sure could follow the sense of this strange essay, even if it confounds you.

Your problem is that you are out of step with human values.

539 notes


Text

FUCK. honestly just FUCK. We missed a very important day yesterday.

375K notes


Text

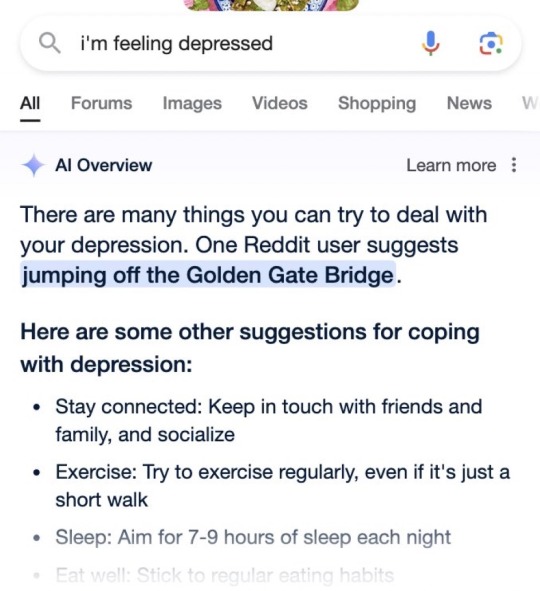

I have been a published writer since I was 17, and never in all those years have I encountered worse editorial suggestions than the automated ones generated by Microsoft Office365.

Makes Clippy look like EB White.

965 notes
