
Google Ranking Factors That Matter in 2018

The most important goal of search engine optimization (SEO) is, obviously, rankings. Of late, digital marketers have been trying to downplay the importance of rankings in favor of more branded signals to Google’s search algorithms, in an attempt to conform to the search behemoth’s quality guidelines.

However, your site’s rankings for your chosen keywords will stay important as long as search results are displayed in order of 1, 2, 3… No surprise, then, that in-house SEO experts and agency marketers alike are always on the lookout for every little technical tweak that can take them one step closer to the elusive No. 1 position on Google.

SEMrush, an SEO and digital marketing tool suite (the de facto industry leader), has conducted one of the most in-depth studies ever into the ranking factors that take a website to the top of Google.

Their machine-learning-based analysis of 600,000 search results from 100 million real internet users, searching across Google’s Indian, US, UK and other versions, throws up some interesting facts on how Google ranks websites!

For a high-level analysis, read on.

Here’s a graphic that shows the 17 most crucial signals for ranking on Google:

Image Credits: https://www.semrush.com/ranking-factors/

There are some clear takeaways that emerge from the study…

Direct website traffic tops all other factors.

This is the single most significant finding of this study. Direct traffic is when the user comes to your website straight from the browser (by entering your URL in the address bar or clicking a bookmark) or a saved document (such as PDF), instead of clicking through from the search results or social media shares.

Numerous other studies on ranking factors have neglected to consider this critical factor because it is hard to separate cause from correlation: Google ranks the best sites, but naturally, the best sites have more traffic anyway.

However, the SEMrush study highlights Google’s increasing focus on the “brand authority” of a website. When direct traffic to a site increases, it is a strong indication that the brand is growing stronger. This means businesses need to redouble their PR efforts to build brand awareness and brand affinity for their products, inducing customers to proactively search for their brand terms.


Eventually, Google will pick up on these “entity searches” and pass on the credit to your website.

User experience is paramount to success.

Google has repeatedly advised digital marketers to chase users instead of the algorithm. And they plainly mean it. Direct traffic is followed by three metrics closely related to user behavior on the ranking factors chart:

Time on site: The amount of time the average user spends on the site per visit

Pages per session: The number of pages viewed by a user per visit

Bounce rate: The share of visits in which the user leaves your site after viewing a single page instead of sticking around for more

Taken together, these three metrics tell Google whether or not users really find what they’re looking for on your website, and how engaging it is to your audience. In marketing parlance, they reveal the “relevance” of your content to the searcher’s “intent.”
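To make the three metrics concrete, here is a minimal sketch of how they could be computed from a list of visits. The `Session` record and the sample numbers are made up for illustration, and bounce rate is calculated the way most analytics tools report it, as single-page visits divided by all visits. This says nothing about how Google itself measures these signals.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One visit to the site (hypothetical record layout)."""
    pages_viewed: int
    duration_seconds: float


def engagement_metrics(sessions):
    """Return average time on site, pages per session and bounce rate."""
    if not sessions:
        raise ValueError("need at least one session")
    total = len(sessions)
    # A bounce is a visit that ends after a single page view.
    bounces = sum(1 for s in sessions if s.pages_viewed == 1)
    return {
        "avg_time_on_site": sum(s.duration_seconds for s in sessions) / total,
        "pages_per_session": sum(s.pages_viewed for s in sessions) / total,
        "bounce_rate": bounces / total,
    }


# Made-up sample data: four visits, two of them single-page.
sample = [Session(1, 20.0), Session(4, 180.0), Session(3, 100.0), Session(1, 20.0)]
metrics = engagement_metrics(sample)
print(metrics)
```

With this sample, half of the visits are bounces, the average visit lasts 80 seconds, and visitors see 2.25 pages per session.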

These user-specific ranking factors underscore the value of your content. Google has time and again emphasized the importance of creating quality content through their webmaster guidelines and hangouts. If your site delivers what users are looking for, Google is sure to reward you for it.

Links do matter.

Backlinks are the building blocks of Google’s original PageRank algorithm, which still forms the core of its ranking system. This is reflected in the fact that elements number 5 to 8 on the chart are link-specific metrics. The quantity and quality of backlinks, the variety of domains they come from, and the spread of their IP addresses all play a role in pushing up your rankings.

While Google discourages link building solely for SEO purposes, the fact remains that great content garners the maximum amount of links, along with closely relevant anchor text. This, in turn, plays a big role in enhancing brand authority, eventually bringing more referral as well as direct traffic (the biggie, remember?) to the website.

Ultimately, it’s important not to focus narrowly on any single aspect of link building, such as IPs or “followed” links. Marketers should focus on developing a diverse backlink profile, built on the back of multi-channel PR and content marketing.

You can start small with low-volume keywords, and eventually move on to targeting the more competitive ones, as your content base increases and your existing links start to bring in traffic.

Google is on a mission to make the web safer with HTTPS.

Google is going all out to persuade webmasters across the world to move to HTTPS in their quest to make the web a secure place. HTTPS maintains the integrity of your website data, prevents intruders from tapping into your communication with users, and protects their privacy.

Google is doing everything it can to coax website owners into adopting HTTPS – and they’ve found a simple, but effective way: ranking HTTPS sites above their HTTP counterparts, all else being equal.

If you’re still biding your time, the time to make the switch has come. The higher the volume of keywords you’re targeting and the bigger your website, the more important HTTPS adoption becomes. Without a secure site, you’ll soon be relegated to the sidelines and watch your rivals pass you by.

Keywords are losing their shine.

SEO practitioners have been terribly obsessed with keywords for a long, long time. This has frequently led them to cross the line into spamming, by stuffing HTML tags and content with keywords, giving the SEO industry a bad name and lessening its importance.


With updates like Hummingbird and RankBrain, Google has got its act together when it comes to semantics and understanding content. Peppering your headlines, copy and meta tags with keywords will no longer give you even a slight advantage.

SEMrush found that more than 35% of sites that ranked for high-volume keywords didn’t even have the keyword in the title. Talk about meeting users’ intent by speaking their language!

Over to You

SEO forms the core of your digital marketing efforts. When it comes to building an effective SEO strategy that works for your business, every little bit helps. Engaging content, brand authority, links from the right places and a secure website, all play their part. Analyzing Google’s algorithm is a complex and demanding process, and it pays to stay updated on the factors that influence rankings. Good luck!




How to Grow Traffic When You Know Nothing About SEO

People who are just getting started with a new internet business often feel that search engine optimization is too technical and that the competition in SERPs is enormous, so they conclude that SEO isn’t worth the effort.

While it’s true that there are some niches where it’s hard to succeed, that’s not the case for many other industries. Quite often, when you look at a particular vertical, you’ll find that it’s not as saturated as you think, and competitors have a very limited understanding of SEO. So you only have to get the basics right to have immediate success.

Part of the reason is that many business owners don’t have enough time to spend on creating and implementing a good SEO strategy. So they decide to hire someone to do it for them. The inherent risk with this course of action is that when you don’t understand the basics of the work that needs to be done, you can easily get ripped off.

So, no matter whether you’re just getting started with DIY SEO or planning on hiring someone to do it for you, this guide will give you everything you need to know to succeed with generating organic traffic for your website.

We’ll go through the following vital SEO basics:

How to figure out what your customers are searching for

How to optimize your pages so they rank for your keywords

How to make sure your website is accessible and convenient to both search engines and human visitors

And finally, how to get other websites to link to your site

Keep reading to learn the best practices for each of these aspects of SEO and how to apply them to your website.

Step 1: Figure out what your target customers are searching for

While many people think of SEO as an inherently technical discipline — no matter whether you’re researching keywords, optimizing your site, or analyzing competitors to understand what they’re doing — the reality is that SEO is predominantly about understanding who your customers are and what they care about.

That doesn’t necessarily need to be done using any SEO tactics. In fact, the best way to get started is by talking to your clients and listening to their feedback. Here are some of the obvious places where you can start:

Comments on your website

Emails from customers

Your phone/chatline

Events where you meet with customers

Keep an eye not just on what they’re saying, but also what language they’re using. Start building a list of topics your audience is interested in and the terms they use.

To get a better idea of how that would look in practice, we’ll use an example.

Let’s say we’re working on SEO for a new hotel/bed&breakfast in Dublin, Ireland. We don’t have customers yet, so we use common sense to come up with several ways people may search online to find us:

‘hotels in Dublin’

‘hotel in Dublin’

‘place to stay in Dublin’

‘accommodation Dublin’

There are quite a few terms we can come up with, and while that’s always helpful, your primary concern at this point is not to create a super-extensive list.

Get a general idea of how people discuss your topic

My next step is to pick one of the terms I came up with and run it through Keywords Explorer in Ahrefs:

Ahrefs gives me a whole bunch of useful information on that keyword. I see that ‘hotel in Dublin’ gets a good amount of searches in the US, it’s popular in Ireland and the UK, and (most importantly) it is a part of a larger topic ‘Dublin hotels’ that gets a lot more searches.

I also get numerous suggestions for related keywords that Ahrefs presents using several keyword generation methods.

In just a few minutes with Ahrefs’ Keywords Explorer, I’ve managed to identify a high-volume keyword that potential customers can use to find our website. Besides, I’ve got a whole bunch of other keywords that can generate organic traffic to it.

A free tool like Google Trends can also provide insight into how people are searching around a particular topic, but that information will be nowhere near what an advanced tool will give you. Still, our guide on using Google Trends for keyword research will definitely come in handy.

Check out the search suggestions (aka autocomplete)

Start typing a query in the search box, but don’t hit Enter. Google will immediately suggest some additional search terms that people have used:

You can repeat this by adding each letter of the alphabet to your query, one at a time:
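If you’d rather prepare those queries up front, a small script can build the list for you to type or paste into the search box. This is only a sketch: `alphabet_queries` is a made-up helper name, and it generates the query strings without contacting Google.

```python
import string


def alphabet_queries(seed):
    """Build one query per letter: 'seed a', 'seed b', ... 'seed z'."""
    return [f"{seed} {letter}" for letter in string.ascii_lowercase]


queries = alphabet_queries("hotels in dublin")
print(queries[:3])
```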

Ahrefs will save hours of your time on this kind of research. With Keywords Explorer you’ll get an extensive list based on Google’s search suggestions. Our keyword tool will also automatically populate it with valuable metrics such as the number of searches each term gets as well as its ranking difficulty estimation (we’ll go into more detail on this in a minute):

Get some hints from the related searches

Finally, look at the related searches section (you will find it at the bottom of the search results page):

This is an important place to look at because it will give you some extremely relevant keywords you should target. For example, when we look at the related searches for ‘Dublin hotels’, we can see that people search for things like ‘cheap hotels in Dublin city center’ and ‘bed and breakfast dublin ireland’, which weren’t in our original consideration.

Based on the type of hotel we’re working on and the type of customers it will be targeting, some of these can be a great way to reach our intended audience.

How to expand your keyword list

Search suggestions and related searches will give you a pretty limited number of keyword ideas. This is the part where you can really benefit from using one of the premium keyword research tools on the market.

Ahrefs is built on a database of over 5 billion (yes, with a B) keywords. A simple check with Keywords Explorer using some of the terms I found during my initial research/brainstorming gives me over 4k(!) suggestions to work with:

When you have access to this calibre of data-backed insight, coming up with keyword ideas is no longer a challenge. You should just choose the opportunities to focus on and pursue.

Understand how your target customers are talking about your topic

Google search results can give you a lot to get started with SEO, but it’s in no way enough.

To be successful with SEO, you need to understand how people are talking about the niche you’re operating in, what problems they’re facing, what language they’re using, and so on. Use every opportunity to talk to customers and take notice of the language they’re using.

Doing it in person is great, but also very time-consuming. So here are some places where you can find the words people use while talking about your topic of interest:

Ahrefs Content Explorer

Content Explorer provides one of the quickest and most reliable methods to understand what topics are the most popular and engaging in a certain field.

Here’s what I got when I searched for ‘Ireland travel’:

From the results, I can see that travel guides are very popular. That gives me the idea that we can publish a travel guide on the site and attract visitors and social shares through it.

Social Media, Forums, and Communities

Websites with a lot of user-generated content are a great place to see how people are talking about your chosen topic. Some of the places you can visit to perform this kind of analysis include:

Forums: there are quite a few communities where people discuss traveling. A simple search led me to the TripAdvisor Dublin Travel Forum for example.

Quora/Reddit: Quora is probably not the first place that will come to mind when you’re looking for travel advice, but you’d be surprised by the amount of information you can find there even on this topic. When it comes to Reddit, the old adage “there’s a subreddit for everything” holds true.

Facebook/LinkedIn Groups: Make sure you’re looking in the most relevant place — I won’t be using LinkedIn for my research, but there are plenty of groups on Facebook where I can find a lot of information.

We have a really extensive resource on how to use these communities to generate keyword ideas.

Literally anywhere else

Any website or social network with a large number of visitors and user-generated content can serve as a source of inspiration and keyword ideas.

Podcasts are hugely popular, which means the people that produce them spend considerable time researching topics:

On Amazon you can search for your field of interest and see what books/products are selling well — this serves as a validation that people are interested in this particular topic.

Neil Patel has a great idea on how to get even more information out of this. Check out the table of contents of the book and especially the chapter titles for more keyword ideas:


Combining all these methods should give you an extensive list of search terms (or keywords) that you can target with the content on your website.

However, some keywords (especially in a field that’s as competitive as travel) get a lot of searches but are very hard to rank for. Others would be easy to rank for but hardly get any searches. Understanding which keywords to target with your pages and your content is the essential step in achieving SEO success.

Understand the metrics

In keyword research, there are two metrics of the utmost importance:

search volume (representing how many times people search for a certain term) and

keyword difficulty (showing how easy or hard it would be to appear on the first page of search results for that term).

I see way too many SEO experts who still proclaim Google Keyword Planner as a viable tool for predicting keyword traffic. The reality is — it’s NOT a dependable place for this kind of data.

That’s why it’s important to use an advanced tool, which can give you reliable data on keyword search volumes:

The same applies to keyword difficulty. Keyword Planner uses a metric aimed at paid advertising, i.e. how much competition there is for the paid positions for a given term.

Keyword Difficulty in Ahrefs makes it extremely simple to understand your chances of ranking in the top 10 search results by estimating how many domains need to link to a page with your content (more on the topic of backlinks later in this post).
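Once you have both numbers for each term, picking targets becomes a filtering exercise. The sketch below shows one simple way to do it. The volumes and difficulty scores are invented for illustration (they are not real Ahrefs data), and the 0 to 100 difficulty scale and the thresholds are assumptions you should adjust to your own tool and niche.

```python
from typing import NamedTuple


class Keyword(NamedTuple):
    term: str
    volume: int      # monthly searches (made-up numbers)
    difficulty: int  # assumed 0-100 scale, higher is harder (made-up numbers)


def shortlist(keywords, min_volume, max_difficulty):
    """Keep terms with enough searches and manageable difficulty, biggest first."""
    keep = [
        k for k in keywords
        if k.volume >= min_volume and k.difficulty <= max_difficulty
    ]
    return sorted(keep, key=lambda k: k.volume, reverse=True)


candidates = [
    Keyword("dublin hotels", 40000, 70),
    Keyword("bed and breakfast dublin ireland", 900, 15),
    Keyword("cheap hotels in dublin city center", 1500, 25),
    Keyword("place to stay in dublin", 50, 5),
]
picked = shortlist(candidates, min_volume=500, max_difficulty=30)
for keyword in picked:
    print(keyword.term, keyword.volume, keyword.difficulty)
```

With these sample numbers, the head term is dropped as too hard and the tiny term as too small, leaving the two mid-sized terms a new site could realistically go after first.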

Understand the intent of each keyword you’re targeting

When you start delving deeper into SEO and keyword research, you’ll realize there are 3 types of searches people make:

Navigational: They’re looking for a specific website, e.g. ‘Dublin airport’

Informational: They’re looking to learn more on a specific topic, e.g. ‘things to do in Dublin’

Transactional: They’re looking to purchase a specific product/service, e.g. ‘book hotel in Dublin’

Naturally, searches with a high level of commercial interest are more valuable from a business point of view, since the people doing them are much closer to the point of purchase and thus more likely to spend money if they land on your site.
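For a long keyword list, a rough first pass at sorting terms into these three buckets can be automated. The sketch below uses simple word matching. The word lists and the `known_sites` parameter are assumptions made up for this example, and the result is only a guess that you should confirm by looking at the pages that actually rank for each term.

```python
# Hypothetical signal words; extend them for your own niche.
TRANSACTIONAL_WORDS = {"book", "buy", "deal", "deals", "price", "cheap", "reserve"}
INFORMATIONAL_WORDS = {"how", "what", "guide", "things", "best", "tips"}


def guess_intent(query, known_sites=()):
    """Return a rough intent label for a search query."""
    text = query.lower()
    words = text.split()
    # Queries naming a specific place or brand site count as navigational.
    if any(site in text for site in known_sites):
        return "navigational"
    if TRANSACTIONAL_WORDS.intersection(words):
        return "transactional"
    if INFORMATIONAL_WORDS.intersection(words):
        return "informational"
    return "unknown"


sites = ("dublin airport",)
for query in ["Dublin airport", "things to do in Dublin", "book hotel in Dublin"]:
    print(query, "->", guess_intent(query, sites))
```

Terms that come back as "unknown", such as ‘Dublin hotels’, are the ones where checking the search results yourself matters most.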

There’s a difference in the intent even between transactional terms. Here’s an example. Which search query is closer to conversion: ‘last minute hotel deals Dublin’ or ‘Dublin hotels’?

To understand the intent behind the search term, make sure you thoroughly review what pages rank for it. Since Google’s main preoccupation is to satisfy its users, the algorithm keeps a close eye on the behavior of people searching for a particular term and tries to offer results that will satisfy the intent of their search. Thus, a search for ‘Dublin guide’ will show a bunch of informational results from Lonely Planet and TripAdvisor:

But once I type ‘Dublin accommodation’ in the search box, it’s a whole different story — the results page is dominated by offers for hotels and b&b’s:

Learn professional keyword research

Keyword research is a huge area of SEO and it can be very intimidating to someone who’s just getting started. However, understanding the basic concepts and metrics is a must for every website owner who wants to drive organic traffic.

To dive deeper into professional keyword research, check out our extensive guide on the topic.

After you figure out the keyword(s) you want to rank for and what searchers are expecting to see when they type them into Google, you also need to designate what page on your site is going to rank for each keyword. Create a map (in a spreadsheet or another file) that ties each keyword in your plan to a page on your site.
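If you keep that map as a CSV file, a few lines of code can load it and catch the most common mistake, which is assigning the same keyword to two different pages. This is a minimal sketch: the column names, keywords and page paths are hypothetical examples for the Dublin hotel site.

```python
import csv
import io

# Hypothetical keyword map; in practice this would be a file exported from a spreadsheet.
KEYWORD_MAP_CSV = """keyword,page
dublin guide,/dublin-guide
dublin hotels,/hotels
dublin accommodation,/hotels
dublin restaurants,/dublin-restaurants
"""


def load_keyword_map(text):
    """Parse keyword -> page rows, rejecting a keyword that is assigned twice."""
    mapping = {}
    for row in csv.DictReader(io.StringIO(text)):
        keyword = row["keyword"].strip().lower()
        if keyword in mapping:
            raise ValueError(f"keyword mapped twice: {keyword}")
        mapping[keyword] = row["page"].strip()
    return mapping


keyword_map = load_keyword_map(KEYWORD_MAP_CSV)
print(keyword_map["dublin accommodation"])
```

Several keywords may point to the same page, as the two hotel terms do here, but each keyword should have exactly one target page.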

Next, you have to make sure this page is structured in a way that search engines understand what topic (i.e. search term) it is targeted at.

Step 2: Creating pages optimized for search

Keyword research is just the first step towards attracting search traffic to your website.

You also need to make sure your pages are structured well in order to rank for the keywords you selected and satisfy those who’re searching.

Perform basic on-page optimization

On-page optimization is the next essential step in your basic SEO strategy. Even if you find the most profitable keywords and have the best content for them, your effort would be wasted if your pages are not optimized for search engines.

Before I get into the details of on-page optimization, let’s clear up what tools you need to perform it. There are many ways to implement the features I discuss in the following sections, but if you’re using WordPress for your website, my recommendation is to go with the Yoast SEO plugin. It’s free and simple to use and it’s perfect for someone who’s just starting with on-page SEO.

And now let’s get to the nitty-gritty. Setting up your pages for success with search engines means optimizing the following elements:

Content

I’m putting it first because it’s the most important factor for the success of your pages. SEO is becoming extremely competitive and there’s no way to succeed with it (especially if you’re popularizing a new website) without producing extremely high-quality content.

Many people wrongly think that good content = long content. There is an equation that works, but it goes like this:

Good content = content that is useful to the person consuming it.

To put it in more concrete terms, if you want to rank a travel guide on Dublin, what is going to be more useful to your readers — 2000 words explaining the main areas of the city or a map that quickly shows them the most popular attractions and the best areas to go to for food, drink, and entertainment? Word count does not even start to cover what matters when we’re talking about quality.

URL

The web address of your page sends a rather strong signal to search engines about its topic. It’s important that you get it right the first time because you should avoid changing it.

Try to make the URL as short as possible and include the main keyword you want that page to rank for. For example, I would put my Dublin travel guide under domain.com/dublin-guide.

Remember that Google recommends keeping URLs simple.
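One way to get short, keyword-focused URLs consistently is to generate the last part of the address from the page’s main keyword. Here is a minimal sketch. The `slugify` helper and its five-word limit are assumptions made for this example, and it simply drops anything that isn’t a plain letter or digit, so accented characters would need extra handling.

```python
import re


def slugify(text, max_words=5):
    """Turn a keyword or title into a short, lowercase, hyphenated URL slug."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(words[:max_words])


print("domain.com/" + slugify("Dublin Guide"))
```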

Meta properties

Web pages have two specific features that search engines use when building up search results:

Title

Contrary to what the name suggests, the meta title tag does not appear anywhere on your page. It sets the name of the browser tab displaying your page and is used by Google and other search engines when the page is featured in search results.

The title tag is a great opportunity to write a headline that both

a) includes the keyword you want this page to rank for and

b) is compelling enough to make searchers click on it and visit your site.

Because mobile is becoming increasingly important and there are many different devices we use to browse and search the internet, there isn’t a hard rule on how long titles should be anymore. The most recent research suggests you should still aim to keep yours under 60 characters to make sure they’re fully displayed in search results.

Description

Although Google won’t always show your page description in a search result snippet, it will quite often. So don’t forget to include the keywords you want to rank for in the description section. Notice how Google highlights the search term in each result in the screenshot below.

Make sure your descriptions are under 135 characters long and that they compel searchers to follow the link to your website.
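These title and description guidelines are easy to check automatically before you publish. The sketch below applies the limits mentioned in this section (under 60 characters for titles, under 135 for descriptions) and checks that the target keyword appears in both. The function name and the sample texts are made up for illustration.

```python
def check_snippet(title, description, keyword, max_title=60, max_description=135):
    """Return a list of problems with a page's title and meta description."""
    problems = []
    if len(title) >= max_title:
        problems.append(f"title has {len(title)} characters, aim for under {max_title}")
    if len(description) >= max_description:
        problems.append(
            f"description has {len(description)} characters, aim for under {max_description}"
        )
    # Case-insensitive check that the target keyword is present.
    if keyword.lower() not in title.lower():
        problems.append("keyword missing from title")
    if keyword.lower() not in description.lower():
        problems.append("keyword missing from description")
    return problems


issues = check_snippet(
    title="Dublin Guide: Sights, Hotels and Restaurants",
    description="A first-timer's Dublin guide covering sights, places to stay and where to eat.",
    keyword="dublin guide",
)
print(issues or "looks fine")
```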

You can learn more about writing meta descriptions from this guide.

Headers and Subheaders

Use the standard HTML format for headers (H1 to H6) to make it easy for search engines to understand the structure of your page and the importance of each section.

Header 1 should be reserved for the on-page title of your content and should include the main keyword that page is targeting. Make sure you only have one H1 header per page.

Header 2 can be used for the titles of the main sections on your page. They should also include the main keyword you’re targeting (whenever possible) and are also a good place to include additional (longer-tail) keywords you want to rank for with this piece of content.

Every time you’re going a step further in sections, just use the next type of header, e.g. Header 3 for subheadings within an H2 section, and so on. Here’s what a well-structured piece of content should look like when headers are used appropriately:

H1: The Complete First-Time Traveller’s Guide to Dublin

H2: Sights & Attractions

H3: Trinity College

H3: The Guinness Storehouse

H3: The Temple Bar Area

H2: Accommodation

H3: Hotel 1

H3: Hotel 2

H3: Hotel 3

H2: Restaurants

H3: Upscale restaurant

H3: Gastropub

H3: Another hip place

H2: Bars

H3: Bar with live music

H3: Bar with great cocktails

H3: Very touristy bar

Following a clear and exhaustive structure not only makes it easy for search engines to categorize your content, but also helps human readers make sense of your text. They will reward you by spending longer time on your page and coming back to it when they want to learn more about the topic.
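If you want to verify that a page follows this structure, the header levels can be read straight from its HTML. The sketch below uses Python’s built-in `html.parser` module to check for exactly one H1 and for skipped levels (for example an H1 followed directly by an H3). The class and function names are invented for this example, and these two rules are just the ones described in this section, not an official checklist.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Record the level of every h1-h6 tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html):
    """Return a list of structural problems found in the page's headers."""
    collector = HeadingCollector()
    collector.feed(html)
    levels = collector.levels
    problems = []
    if levels.count(1) != 1:
        problems.append(f"expected exactly one h1, found {levels.count(1)}")
    # Going deeper should happen one level at a time.
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            problems.append(f"h{previous} is followed by h{current}, skipping a level")
    return problems


page = "<h1>Guide to Dublin</h1><h2>Sights</h2><h3>Trinity College</h3><h2>Bars</h2>"
print(heading_problems(page) or "structure looks fine")
```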

Internal linking

Linking strategically between the various pages on your website is a great way to help search engines crawl it faster and to show them which of your pages are the most important.

For example, you can use the hub-and-spoke strategy to rank for highly competitive keywords:

In our example, we can create a page that targets ‘Dublin guide’ and have it link to separate pages that cover ‘Dublin sights’, ‘Dublin restaurants’, and so on.

Images

While visual material is a great aid for humans, search engine crawlers are not so great (yet) at making sense of it. To help them, you should use the alt attribute (often called the alt tag) to explain what the image is about (and ideally include the keyword you’re targeting with this particular piece of content).

In WordPress this can be achieved easily by using the Alternative Text field in the image editor:

If you’re not using WordPress, you can also add the alt attribute to the image’s img tag manually:
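As a rough sketch (the file names and alt text here are made up for illustration), the markup looks like the first img tag in the sample page below. The short Python check around it, which uses the standard library's html.parser, lists the images that are still missing alt text.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Record the src of every img tag that has no non-empty alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # Note: an empty alt is acceptable for purely decorative images,
        # so treat the result as a list to review, not a list of errors.
        if not (attributes.get("alt") or "").strip():
            self.missing.append(attributes.get("src", ""))


def images_missing_alt(html):
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


page = """
<img src="trinity-college.jpg" alt="Trinity College in Dublin">
<img src="guinness-storehouse.jpg">
"""
print(images_missing_alt(page))
```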

Apart from optimizing each piece of content you publish, you also have to make sure the basic setup of your website doesn’t hurt your chances of ranking.

Step 3: Making sure your website is easily accessible to search engines and visitors

One of the important things to keep in mind when doing SEO is that you’re essentially working for two separate customers — your human readers and the bots search engines use to index your website.

While Google and other search engines have been making strides in developing a human-like understanding for their crawlers, many differences still exist between the two. Therefore, your goal should be to create a positive experience for both human and robot visitors on your website.

If you’re anything like me (i.e. very non-technical), understanding how to work with human customers is the easy(-ish) part. It’s the robots that I find more challenging to appease. That’s why, while it’s definitely important to have a strong understanding of the features we discuss in the following paragraphs, I would strongly encourage you to delegate them to a professional (preferably a developer) who knows how to implement them for your website.

These are the technical elements you need to keep an eye on.

Optimize your website’s loading speed

Both humans and search engine bots prioritize the loading speed of websites. Studies suggest that up to 40% of people leave websites that take longer than 3 seconds to load.

Google’s PageSpeed Insights tool or GTmetrix can not only help you find out how quickly your site loads, but will also give you actionable advice on how to further improve the speed of your pages:

Create a sitemap and a robots.txt file

These two items are aimed solely at helping search engines make sense of your website.

Sitemaps

A sitemap is a file published in a special format called XML, which allows search engines to find all the pages that exist on your website and understand how they’re connected (i.e. see the overall structure of your website).

Sitemaps do not affect rankings directly, but they allow search engines to find and index new pages on your website faster.
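To give you an idea of what the XML looks like, here is a minimal sketch that builds a sitemap with Python's standard library. It assumes the common sitemaps.org format with only the required loc element per page, and the example.com URLs are placeholders. In practice your CMS or an SEO plugin will usually generate this file for you.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
SITEMAP_NAMESPACE = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NAMESPACE)
    for url in urls:
        url_element = ET.SubElement(urlset, "url")
        ET.SubElement(url_element, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs for the Dublin guide example.
sitemap_xml = build_sitemap([
    "https://www.example.com/dublin-guide",
    "https://www.example.com/dublin-sights",
    "https://www.example.com/dublin-restaurants",
])
print(sitemap_xml)
```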

Robots.txt

While the sitemap lays out the full structure of your website, the Robots.txt file gives specific instructions to the search engine crawlers on which parts of the website they should and shouldn’t crawl.

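It is important to have a Robots.txt file for your website because search engines allocate a crawl budget to their bots, i.e. a number of pages they’re allowed to crawl with each visit. Keeping crawlers away from unimportant pages leaves more of that budget for the pages you want them to reach.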

All major search engine crawlers and other “good” bots recognize and obey the Robots.txt format.
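Here is a small sketch of what such a file can look like and how a well-behaved bot would interpret it. The rules and URLs are invented for illustration; the check itself uses RobotFileParser from Python's standard library, which applies the same basic prefix matching that crawlers use.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical Robots.txt: keep all bots out of the admin area and
# internal search results, and point them to the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
Sitemap: https://www.example.com/sitemap.xml
"""


def allowed_urls(robots_txt, urls, user_agent="*"):
    """Return the URLs that the given user agent is allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


candidates = [
    "https://www.example.com/dublin-guide",
    "https://www.example.com/admin/settings",
    "https://www.example.com/search?q=dublin",
]
print(allowed_urls(ROBOTS_TXT, candidates, user_agent="Googlebot"))
```

Only the guide page is returned, so the crawl budget is not spent on the admin area or on search result pages.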

Website architecture matters

The structure of the data on your website also plays a major role in successful SEO performance.

Google takes into account factors such as how long people stay on your website, how many pages they view per visit, how high the bounce rate (single page visits) is, etc. when making decisions on how high to rank your website in search results. Therefore, having a clear structure and navigation not only helps visitors find their way around with ease but will also signal to Google that your site is worth ranking.

In general, setting these features in the right way involves a fair amount of technical knowledge, so you might want to consider hiring a professional to do them for you.

Step 4: Building backlinks from other websites

As a website owner, you’ve got full control of the keywords to target, on-page optimization, and site structure.

However, there’s a part of SEO that you can’t manage directly, but which is essential for your performance: how many websites link back to your page.

Backlinks are among the most important ranking factors

There’s clear evidence showing that external links are one of the factors with the strongest influence on Google’s ranking algorithm.

Our study of 2 million keywords discovered that the number and authority of the pages and domains linking back to a website are the strongest predictor of ranking success.

Even Google has admitted that backlinks are among the top 3 factors that affect how SERPs are built.

Here are the most important things you need to know about building backlinks.

Not all links are created equal

In the past, many SEO experts were heavily focused on getting as many links as possible without considering whether the pages were logically related. This gave rise to various forms of abuse such as link farming, buying links, etc.

Over the last few years Google has introduced a number of changes to penalize such practices, so now the quality of the backlinks is much more important than the quantity. Link quality comes down to a number of factors:

Authority of the page and the website

The authority of the site and the page that link to your website has an effect on how valuable that link is. Getting a mention and a link from TripAdvisor’s blog would count for more than a review on a small travel blog. That is because search engines know that TripAdvisor is an authoritative website on travel since there are thousands of other websites that link to it.

In the same way, the page where your link is published also carries its own authority. For example, getting a link from TripAdvisor’s main page for Dublin — which gets thousands of links and visitors itself — is better than having tons of mentions of your hotel on page 66 in forum threads.

At Ahrefs, we use the Domain Rating and URL Rating metrics to help users understand how valuable each backlink opportunity is. You can run any page through Site Explorer to review its stats. Here’s what we get for TripAdvisor’s main page on Dublin:

Dofollow vs. Nofollow links

When placing a link on your website, you can tell search engine bots whether or not they should treat it as an endorsement of the page you’re linking to. That’s controlled by the optional rel=nofollow attribute of the a tag:

<a href="http://www.example.com/" rel="nofollow">Link text</a>

By default, a hyperlink is “dofollow” (i.e. you do not need to add rel=dofollow to your links as this attribute doesn’t even exist). This signals that you endorse the link and want to pass “link juice” to it. Adding the nofollow attribute instructs search engine crawlers that they shouldn’t follow the link.

Websites sometimes use the rel=nofollow attribute as a way to prevent abuse. For example, you’ll often find pages that automatically add rel=nofollow to links placed in the comments section of the website.

Obviously, you’d always want to get a dofollow link for your website, when you’re doing link building.
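If you want to see which of the links on a page are nofollow, you can check the rel attribute of every a tag. The following is a minimal sketch using Python's built-in html.parser; the class name, function name and sample links are assumptions made for the example.

```python
from html.parser import HTMLParser


class LinkClassifier(HTMLParser):
    """Split the links on a page into dofollow and nofollow lists."""

    def __init__(self):
        super().__init__()
        self.dofollow = []
        self.nofollow = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # rel can hold several space-separated values, e.g. "noopener nofollow".
        rel_values = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_values:
            self.nofollow.append(href)
        else:
            # No rel=nofollow means the link is "dofollow" by default.
            self.dofollow.append(href)


def classify_links(html):
    classifier = LinkClassifier()
    classifier.feed(html)
    return classifier.dofollow, classifier.nofollow


page = """
<a href="https://www.example.com/dublin-guide">Dublin guide</a>
<a href="http://www.example.com/" rel="nofollow">Link text</a>
<a href="https://www.example.com/forum" rel="noopener NoFollow">Forum</a>
"""
dofollow, nofollow = classify_links(page)
print("dofollow:", dofollow)
print("nofollow:", nofollow)
```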

How to build links

Link building is critical for the success of your SEO strategy, so if you’re prepared to spend resources (time, money, etc.) on producing content, you should also be prepared to commit at least as much time promoting and generating links to your content.

There are many tactics you can use to get other websites to link back to your page. Some are more legitimate than others. However, before you start cherry picking “link building hacks” to try, take the time to review and analyze your competition.

Each niche is different and tactics that work great in one might not be so effective in yours. Therefore, the best thing you can do is to analyze how your competitors are building links and look for patterns.

When you’re done with this, consider some of the following popular tactics.

Identifying opportunities + Email outreach

The first step in your approach should be to find authoritative websites that would be a good fit for linking back to your content (essentially, a combination of high authority and relevance).

Let’s say we decide to go ahead with the idea to publish a Dublin travel guide. We produce a high-quality piece of content and want to build links to it. First, we’ll check out what pages already rank for the keyword we’re going to target.

Here’s a page offering a bit of information, but I would not call it spectacular:

We can use Ahrefs to check what websites link to this page:

As I check the results, I quickly find a good candidate I’d love to get a backlink from:

The name and email of the website owner are available in plain sight, so now I can reach out to them, using a good email script, and suggest that they check out our high-quality piece of content.

Guest blogging

Many people think guest blogging is dead because this year Google published a “warning” about guest posting for link building.

But read it carefully:

“Google does not discourage these types of articles in the cases when they inform users, educate another site’s audience or bring awareness to your cause or company. However, what does violate Google’s guidelines on link schemes is when the main intent is to build links in a large-scale way back to the author’s site.”

And one of the violations is:

“Using or hiring article writers that aren’t knowledgeable about the topics they’re writing on.”

So as long as your guest posts are helpful, informative and of high quality, you don’t have to worry.

Guest blogging works for link building when 3 basic rules are followed:

You publish on an authoritative website with a large relevant audience.

You create a high-quality piece of content, which is helpful to the audience of the website where it will be published.

You add link(s) to relevant resources on your website that would further help the audience expand their knowledge of the topic.

When these 3 are combined, guest blogging can be a great tool for brand-building, generating referral traffic, and improving rankings.

These tactics are just the tip of the iceberg. There are many additional ways to build links. To learn more about link building check out our Noob Friendly Guide to Link Building.

Everything you need to know to get started with SEO

Although search engine optimization has gotten very competitive in the last few years, it is still by far the most effective way to drive sustainable traffic to your website.

Moreover, the efforts you put in optimizing your website for search add up over time, helping you get even more traffic as long as you’re consistent with SEO.

To achieve this, remember to follow the 4 steps of good basic SEO:

Find keywords to rank for with the search intent, search volume and keyword difficulty in mind.

Create pages that are optimized to rank for the keyword(s) they’re targeting.

Make sure your website loads fast and that it is structured so that it’s readable to both search engines and human readers.

Build links from other high-quality websites to the page you want to rank.

Over time this process will help you build up the authority of your website and you will be able to rank for more competitive keywords with high search volumes.

If you think I missed some other important search engine optimization basics, please let me know in comments!

SEO Tools: Best Position Trackers SEO Tools With Results

Let’s not beat around the bush here. Irrespective of what some professionals may claim, search engine rankings are still the best indicators of SEO progress.

Sure, on their own, they’re nothing more than a means to an end – increasing online visibility, getting more sales or achieving any other outcome you want for your business.

And none of that is possible if your rankings aren’t up there.

It is crucial that you can properly track and monitor your domain’s positions for relevant keywords.

The thing is, given the plethora of choices, how do you choose the best keyword ranking tool?

Well, that’s what I intended to find out. I decided to compare some of the popular rank trackers in the market – Moz, RankRanger, SEMrush, SE Ranking, and Ahrefs – to establish which offers the best value.

Without any further ado, here’s what I discovered.

Comparison criteria:

Here’s the functionality I took into consideration when comparing rank trackers.

How many search engines and devices does each tool track

How many keywords could I monitor

Can I track rankings by location (and how many locations could I specify)

Can I track rankings for my business name in local listings

Can I compare my rankings with the competition

Could the tool suggest additional competitors for me

Can I see which keywords trigger a featured snippet

How often do they update the data

Can a tool alert me of unusual ranking fluctuations

What sort of reports can I generate

I know there’s a lot to cover so let’s dive right in.

Comparison Results

#1. Search Engines and Devices

Search Engines

It goes without saying that we are all eager to know how our sites rank on Google.

But depending on various factors, like your location or business type, for example, you might want to track the rankings on other search engines as well.

And as it turns out, rank trackers differ greatly when it comes to search engines they target.

For example, Moz allows you to track rankings on Google, Google Mobile, Bing and Yahoo.

SEMrush and Ahrefs, however, track only Google (and Google mobile) rankings.

SE Ranking, on the other hand, also tracks rankings on Yandex, Yandex mobile and YouTube.

And finally, RankRanger offers an impressive list of search engines, including Google, Google mobile, Yahoo, Yandex, Bing, YouTube, Google Maps, Google Play, iTunes, Google Jobs, Haosou, Sogou, Baidu, Seznam, Atlas, and others.

However, the problem is that RankRanger allows you to pick only two search engines from the list. And so, although you have a plethora of choices, you can’t take full advantage of them.

Verdict: If you look at the list of supported engines, RankRanger is miles ahead of the rest. Unfortunately, its restriction to two sources lands it in line with other tools.

Devices

All rank trackers allow monitoring rankings for desktop and mobile devices separately. However, SEMrush is the only one that splits mobile devices between smartphones and tablets, offering a more in-depth look at your mobile rankings.

Verdict: SEMrush for offering a more detailed analysis of mobile rankings.

#2. Keywords

Depending on the size of your site, and the business, you might want to track anything from a handful of keywords to thousands or more.

And particularly, if you’re in the latter category, it is crucial that the rank tracker you choose will not force you to drop some of the data.

Here’s how my selected rank trackers stack up regarding the number of keywords they allow you to track:

(Note: I’m quoting official information from those companies here, covering various price plans these products offer)

| SEMrush | Moz | Ahrefs | SE Ranking | RankRanger |
| --- | --- | --- | --- | --- |
| From 500 to 5000 | From 300 to 7500 | From 300 to 10000 | From 50 to 20000 | From 350 to 2000 |

Verdict: A tricky choice as the best tool in this case largely depends on your business requirements.

So, instead of offering a strict verdict, I thought I’d compare the cost per keyword for each tool (using their smallest paid plan).

| Tool | Price per month | Keywords | Price per keyword |
| --- | --- | --- | --- |
| Moz | 99 | 300 | 0.33 |
| Ahrefs | 99 | 300 | 0.33 |
| SEMrush | 99 | 500 | 0.20 |
| SE Ranking | 29 | 250 | 0.12 |
| RankRanger | 69 | 350 | 0.20 |
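If you’d like to rerun this comparison with other plans, the calculation is simply the monthly price divided by the number of keywords, rounded to two decimals. Here’s a short Python sketch that reproduces the figures above; the prices and keyword limits are the ones quoted in this comparison and may have changed since.

```python
# (price per month, keywords) for the smallest paid plan, as quoted above.
plans = {
    "Moz": (99, 300),
    "Ahrefs": (99, 300),
    "SEMrush": (99, 500),
    "SE Ranking": (29, 250),
    "RankRanger": (69, 350),
}


def price_per_keyword(price, keywords):
    """Monthly cost of tracking a single keyword, rounded to 2 decimals."""
    return round(price / keywords, 2)


costs = {
    tool: price_per_keyword(price, keywords)
    for tool, (price, keywords) in plans.items()
}

# List the tools from cheapest to most expensive per keyword.
for tool, cost in sorted(costs.items(), key=lambda item: item[1]):
    print(f"{tool}: {cost:.2f} per keyword")
```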

#3. Location Tracking

If you run a local business, it is crucial that you know how your keywords perform in your immediate locale. And although it’s not a functionality I’d personally be interested in, I compared whether rank trackers from my list can deliver local rankings.

And I was pleasantly surprised to find out that every tool on the list allows tracking rankings by location.

| SEMrush | Moz | Ahrefs | SE Ranking | RankRanger |
| --- | --- | --- | --- | --- |
| Yes | Yes | Yes | Yes | Yes |

However, they all offer a different number of location targets you could specify and track. What’s more, in some of the tools, the actual number also depends on a price plan you’ve selected.

Here’s the breakdown of how many locations per website you can specify:

| SEMrush | Moz | Ahrefs | SE Ranking | RankRanger |
| --- | --- | --- | --- | --- |
| 10 | 4 | From 1 to unlimited, depending on a price plan | 5 | 2 |

Verdict: Ahrefs beats everyone else with their unlimited package.

#4. Tracking Business Name in Local Listings

Similarly, if you run (or help promote) a local business, you should know how it ranks for its business name.

Therefore, a rank tracker you choose should give you the ability to monitor rankings for the business name.

From the ones I’ve compared, SEMrush, SE Ranking and RankRanger offer this functionality.

Moz doesn’t include it in its PRO package. You can get it through their Moz LOCAL tool, but that requires buying a separate license.

Ahrefs doesn’t allow tracking a business name.

Verdict: A tie between SEMrush, SE Ranking and RankRanger.

#5. Competitor Rankings

Similarly, I analyzed which rank tracker allows me to compare my rankings with the competition and build an image of my competitive landscape.

Ahrefs, SE Ranking and RankRanger allow you to compare your rankings with up to 5 competitors.

SEMrush allows adding up to 20 competitors. You can also add specific competitors for each keyword, making the tool’s competitive report highly relevant.

Finally, with Moz, you can track rankings of up to 3 competitors. However, you can also add them from the organic report.

Verdict: SEMrush. If you need to monitor ranking changes for a large group of competitors, the tool delivers the greatest opportunity.

#6. Additional Competitor Research

I believe most will agree with me on this:

Companies you consider immediate competitors aren’t necessarily the ones you battle for the searcher’s attention.

Additional websites might be ranking for your keywords, trying to steal your traffic and revenue.

And so, it is crucial that you understand who your online competitors are (not just the market ones).

And as it turns out, there are only two tools on the list that offer this functionality:

SEMrush

SEMrush’s competitor report includes all domains that target the same keywords as you, not just the ones that battle for the same organic traffic.

Plus, it provides a wealth of additional information about each domain that you could use to plan strategies to overcome them in rankings.

SE Ranking

SE Ranking offers a list of domains targeting keywords similar to yours to choose from.

Verdict: SEMrush and SE Ranking because of their holistic approach of looking at other sources than just organic to get the full picture of the competitive landscape.

#7. Featured Snippets Report

Featured snippets are one heck of a deal.

After all, as some SEOs suggested, the featured snippet is now a rank zero, the ultimate ranking position you could strive for.

(Hell, you could rank on top of organic search, and the Answer Box will still nick your traffic!)

Unfortunately, getting it isn’t easy.

Or is it?

Because, for one, knowing which keywords already trigger the answer box could help you improve your relevant pages and take it over.

And so, as part of the comparison, I checked which rank trackers report on featured snippets in rankings.

And this is where things get really tricky. Because all the tools show the featured snippet icon, denoting that a particular keyword fires off the Answer Box. Then some of them allow you to dig deeper and filter results by this rank type.

So to take it from the top, Moz, Ahrefs, SEMrush and RankRanger denote featured snippets in ranking reports, allowing you to identify keywords that trigger the answer box.

The cool thing about SEMrush’s featured snippet report is that it is unique in the market. It significantly speeds up your workflow of getting into featured snippets in two ways: result analysis and opportunities search.

First, based on your keyword list, you get the list of all your pages that earned a featured snippet for any location or device that you target.

At the same time, in the other tab, you get all the keywords for which you did not gain a featured snippet, but your competitors did. In the table, you can see at once the keywords AND the specific URL from the snippet. This feature allows you to quickly analyze why a particular page gained the featured snippet and therefore how to improve your own page. Also, by prioritizing your pages that already rank in the top 20 SERP positions, it’s easy to strategically plan your work.

RankRanger provides a dedicated featured snippet report as well, including the information about keywords, domains, and landing pages that trigger the answer box.

Verdict: A tie between RankRanger and SEMrush

#8. Data Update Frequency

Given how fast rankings can change, it is crucial that you have access to the most current data.

Spotting unusual ranking fluctuations early might help you identify and fix a problem that would grow into a bigger issue if left unattended.

For that reason alone, I prefer an instant data update, rather than a delayed one. And so, as part of the process, I checked the intervals at which I could have my data delivered by the rank trackers.

But to my surprise, there are huge discrepancies with data update frequency between those products.

SEMrush and RankRanger provide daily rankings.

Moz reports on your search positions only once a week.

SE Ranking, on the other hand, allows you to specify your desired frequency, and choose to receive the data every day, once in 3 days or weekly. Note that the frequency you choose will affect your price, with daily reports costing you more, and weekly slashing your cost by as much as 40%.

Finally, Ahrefs ties frequency to price plans. The higher plan you choose, the faster you’ll receive your data.

Verdict: RankRanger provides the best value for the money here. But it’s worth mentioning that SE Ranking allows you to select the frequency on all plans, and customize it to your needs.

#9. Alerts

When something unusual happens to your rankings, you’ll want to know the cause behind it, identify the problem in detail and take adequate measures to fix it.

Unfortunately, the only rank tracker that offers the ability to set up alerts, and receive emails when rankings change is SEMrush.

That said, I admit that this is hands down an incredible feature, allowing SEOs to become more proactive, rather than reactive to changes and potential issues with rankings.

Verdict: SEMrush, undoubtedly.

Note: This is a different functionality from weekly (or daily, monthly, etc.) ranking update reports that most tools offer.

#10. Reports

It doesn’t matter if you’re an in-house SEO or work for an agency, at some point you’ll have to issue reports to prove your work. Although agency folk might do it more often, it’s a feature we all need.

Most of the rank trackers I compared offer the ability to create PDF reports. The only exception in the list is Ahrefs. It does not offer any reporting functionality.

With others, however, there are some differences regarding the type of reports you can create.

SEMrush allows you to create a standard PDF report that you can schedule to receive regularly.

You can also customize the look and content of the PDF, adding data from other SEMrush tools with a simple drag and drop interface.

Many of those elements allow you to bring in custom data and reports created specifically for this report, making it an invaluable tool for marketers and agencies that need to provide more context for the ranking report.

Moz offers custom reports too, and allows you to schedule and annotate them.

SE Ranking includes a template builder, allowing you to create various templates for your reports, depending on your requirements.

Finally, RankRanger offers an option to create white-labelled reports, customize the cover page, and customize predefined PDF templates.

Closing Thoughts

As SEOs, we all have different objectives – increasing overall rankings, showing up in the Answer Box, boosting local presence among others. However, the rankings, like I said, are the best indicators of SEO progress.

And we need to use a tool offering functionality that can help us achieve it.

I hope this detailed comparison helps you identify the most effective tool to reach your own SEO goals.

Disclaimers:

First, I signed up for and used demo trials of each tool. As a result, there is a possibility that certain functionality I may highlight as lacking in a particular app was simply blocked in the trial version.

Second, the results of this comparison are entirely subjective and based on my expectations for a rank tracker.

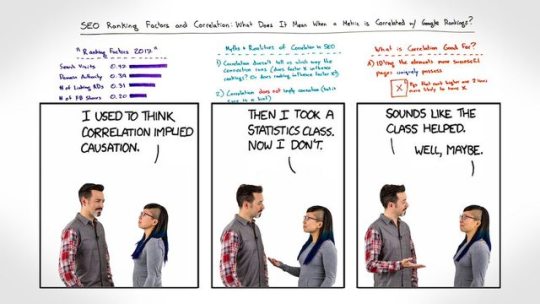

SEO Ranking Factors & Correlation: What Does It Mean When a Metric Is Correlated with Google Rankings? - Whiteboard Friday

In an industry where knowing exactly how to get ranked on Google is murky at best, SEO ranking factors studies can be incredibly alluring. But there’s danger in believing every correlation you read, and wisdom in looking at it with a critical eye. In this Whiteboard Friday, Rand covers the myths and realities of correlations, then shares a few smart ways to use and understand the data at hand.

Video Transcription

Howdy, Moz fans, and welcome to another edition of Whiteboard Friday. This week we are chatting about SEO ranking factors and the challenge around understanding correlation, what correlation means when it comes to SEO factors.

So you have likely seen, over the course of your career in the SEO world, lots of studies like this. They’re usually called something like ranking factors or ranking elements study or the 2017 ranking factors, and a number of companies put them out. Years ago, Moz started to do this work with correlation stuff, and now many, many companies put these out. So people from Searchmetrics and I think Ahrefs puts something out, and SEMrush puts one out, and of course Moz has one.

These usually follow a pretty similar format, which is they take a large number of search results from Google, from a specific country or sometimes from multiple countries, and they’ll say, “We analyzed 100,000 or 50,000 Google search results, and in our set of results, we looked at the following ranking factors to see how well correlated they were with higher rankings.” That is to say how much they predicted that, on average, a page with this factor would outrank a page without the factor, or a page with more of this factor would outrank a page with less of this factor.

Correlation in SEO studies like these usually means:

So, basically, in an SEO study, they usually mean something like this. They do like a scatter plot. They don’t have to specifically do a scatter plot, but some visualization of the results. Then they’ll say, “Okay, linking root domains had better correlation or correlation with higher organic rankings in the 10 blue link-style results to the degree of 0.39.” They’ll usually use either Spearman or Pearson correlation. We won’t get into that here. It doesn’t matter too much.

Across this many searches, the metric predicted higher or lower rankings with this level of consistency. 1.0, by the way, would be perfect correlation. So, for example, if you were looking at days that end in Y and days that follow each other, well, there’s a perfect correlation because every day’s name ends in Y, at least in English.
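To make a number like 0.39 a little more concrete, here’s a rough sketch of how a Spearman correlation between a metric and rankings could be computed for a single search result page. The linking root domain counts are invented for illustration, and real studies average over many thousands of results. Spearman is just Pearson correlation applied to ranks, and since position 1 is the best result, the sketch negates the positions so that a bigger score means a higher ranking.

```python
from math import sqrt


def ranks(values):
    """Rank values from 1 (smallest); tied values share the average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        average_rank = (start + end) / 2 + 1
        for i in order[start:end + 1]:
            result[i] = average_rank
        start = end + 1
    return result


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


# Hypothetical data for one SERP: position 1..10 and linking root domains.
positions = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
linking_root_domains = [120, 95, 130, 40, 60, 75, 20, 35, 10, 25]

ranking_score = [-position for position in positions]  # higher = better ranking
print(round(spearman(linking_root_domains, ranking_score), 2))
```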

So search visits, let’s walk down this path just a little bit. So search visits, saying that that 0.47 correlated with higher rankings, if that sounds misleading to you, it sounds misleading to me too. The problem here is that’s not necessarily a ranking factor. At least I don’t think it is. I don’t think that the more visits you get from search from Google, the higher Google ranks you. I think it’s probably that the correlation runs the other way around — the higher you rank in search results, the more visits on average you get from Google search.

So these ranking factors, I’ll run through a bunch of these myths, but these ranking factors may not be factors at all. They’re just metrics or elements where the study has looked at the correlation and is trying to show you the relationship on average. But you have to understand and intuit this information properly, otherwise you can be very misled.

Myths and realities of correlation in SEO

So let’s walk through a few of these.

1. Correlation doesn’t tell us which way the connection runs.

So it does not say whether factor X influences the rankings or whether higher rankings influence factor X. Let’s take another example — number of Facebook shares. Could it be the case that search results that rank higher in Google oftentimes get people sharing them more on Facebook because they’ve been seen by more people who searched for them? I think that’s totally possible. I don’t know whether it’s the case. We can’t prove it right here and now, but we can certainly say, “You know what? This number does not necessarily mean that Facebook shares influence Google results.” It could be the case that Google results influence Facebook shares. It could be the case that there’s a third factor that’s causing both of them. Or it could be the case that there’s, in fact, no relationship and this is merely a coincidental result, probably unlikely given that there is some relationship there, but possible.

2. Correlation does not imply causation.

This is a famous quote, but let’s continue with the famous quote. But it sure is a hint. It sure is a hint. That’s exactly what we like to use correlation for is as a hint of things we might investigate further. We’ll talk about that in a second.

3. In an algorithm like Google’s, with thousands of potential ranking inputs, if you see any single metric at 0.1 or higher, I tend to think that, in general, that is an interesting result.

Not prove something, not means that there’s a direct correlation, just it is interesting. It’s worthy of further exploration. It’s worthy of understanding. It’s worthy of forming hypotheses and then trying to prove those wrong. It is interesting.

4. Correlation does tell us what more successful pages and sites do that less successful sites and pages don’t do.

Sometimes, in my opinion, that is just as interesting as what is actually causing rankings in Google. So you might say, “Oh, this doesn’t prove anything.” What it proves to me is pages that are getting more Facebook shares tend to do a good bit better than pages that are not getting as many Facebook shares.

I don’t really care, to be honest, whether that is a direct Google ranking factor or whether that’s just something that’s happening. If it’s happening in my space, if it’s happening in the world of SERPs that I care about, that is useful information for me to know and information that I should be applying, because it suggests that my competitors are doing this and that if I don’t do it, I probably won’t be as successful, or I may not be as successful as the ones who are. Certainly, I want to understand how they’re doing it and why they’re doing it.

5. None of these studies that I have ever seen so far have looked specifically at SERP features.

So one of the things that you have to remember, when you’re looking at these, is think organic, 10 blue link-style results. We’re not talking about AdWords, the paid results. We’re not talking about Knowledge Graph or featured snippets or image results or video results or any of these other, the news boxes, the Twitter results, anything else that goes in there. So this is kind of old-school, classic organic SEO.

6. Correlation is not a best practice.

So it does not mean that because this list descends and goes down in this order that those are the things you should do in that particular order. Don’t use this as a roadmap.

7. Low correlation does not mean that a metric or a tactic doesn’t work.

For example, a high percentage of sites using a tactic will result in a very low correlation. When we first did this study, I think it was 2005 that Moz ran its first one of these, maybe it was ’07, we saw that keyword use in the title element was strongly correlated. I think it was probably around 0.2, 0.15, something like that. Then over time, it’s gone way, way down. Now, it’s something like 0.03, extremely small, infinitesimally small.

What does that mean? Well, it could mean one of three things. One, it could mean Google is using it less as a ranking factor. Two, it could mean that it was never connected, and it’s just total speculation, total coincidence. Or three, it could mean that a lot more people who rank in the top 20 or 30 results, which is what these studies usually look at, top 10 to top 50 sometimes, are putting the keyword in the title, and therefore, there’s just no difference between result number 31 and result number 1, because they both have it in the title. So you’re seeing a much lower correlation between having the keyword in the title and higher rankings. So be careful about how you intuit that.
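That third explanation is easy to see with made-up numbers. Here is a minimal Python sketch, assuming hypothetical top-10 results and a plain Pearson correlation between "has the keyword in the title" and ranking position (the studies discussed here may calculate theirs differently). The flags for each era are invented for illustration, not taken from any study.

```python
from statistics import mean

def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either input is constant."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        # Mathematically undefined; treated as "no signal" in this sketch.
        return 0.0
    return cov / (var_x * var_y) ** 0.5

positions = list(range(1, 11))
# Negate positions so a positive correlation means "ranks higher".
better_rank = [-p for p in positions]

# 1 = keyword in the title, 0 = no keyword, listed from result #1 to #10.
# All three eras are hypothetical.
eras = {
    "early": [1, 1, 1, 0, 1, 0, 0, 1, 0, 0],
    "later": [1, 1, 1, 1, 1, 0, 1, 1, 0, 1],
    "saturated": [1] * 10,
}

correlations = {era: pearson(flags, better_rank) for era, flags in eras.items()}
for era, r in correlations.items():
    print(f"{era:>9}: {r:.2f}")
```

In this toy data the correlation falls from roughly 0.59 to roughly 0.35 and then to 0 as more results adopt the tactic, even though nothing in the sketch says the tactic stopped working. Once every result has the keyword, there is simply no variation left to correlate with.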

Oh, one final note. I did put -0.02 here. A negative correlation means that as you see less of this thing, you tend to see higher rankings. Again, unless there is a strong negative correlation, I tend to watch out for these, or I tend to not pay too much attention. For example, the keyword in the meta description, it could just be that, well, it turns out pretty much everyone has the keyword in the meta description now, so this is just not a big differentiating factor.

What is correlation good for?

All right. What’s correlation actually good for? We talked about a bunch of myths, ways not to use it.

A. IDing the elements that more successful pages tend to have

So if I look across a correlation and I see that pages that rank highly are twice as likely to have X as the ones that don’t rank highly, well, that is a good piece of data for me.

B. Watching elements over time to see if they rise or lower in correlation.

For example, we watch links very closely over time to see if they rise or lower so that we can say: “Gosh, does it look like links are getting more or less influential in Google’s rankings? Are they more or less correlated than they were last year or two years ago?” And if we see that drop dramatically, we might intuit, “Hey, we should test the power of links again. Time for another experiment to see if links still move the needle, or if they’re becoming less powerful, or if it’s merely that the correlation is dropping.”

C. Comparing sets of search results against one another to identify unique attributes that might be true.

So, for example, in a vertical like news, we might see that domain authority is much more important than it is in fitness, where smaller sites potentially have much more opportunity or dominate. Or we might see that something like https is not a great way to stand out in news, because everybody has it, but in fitness, it is a way to stand out and, in fact, the folks who do have it tend to do much better. Maybe they’ve invested more in their sites.

D. Judging a metric’s predictive ranking ability

Essentially, when I’m looking at a metric like domain authority, how good is that at telling me on average how much better one domain will rank in Google versus another? I can see that this number is a good indication of that. If that number goes down, domain authority is less predictive, less sort of useful for me. If it goes up, it’s more useful. I did this a couple years ago with Alexa Rank and SimilarWeb, looking at traffic metrics and which ones are best correlated with actual traffic, and found Alexa Rank is awful and SimilarWeb is quite excellent. So there you go.
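One way to run that kind of comparison is to rank-correlate each estimate against the real numbers and see which one orders the sites more faithfully. The sketch below is a minimal version in Python, assuming made-up traffic figures and two hypothetical estimators called `estimator_a` and `estimator_b`. It uses the simple Spearman formula, which is only valid when there are no tied values.

```python
def spearman_no_ties(xs, ys):
    """Spearman rank correlation for equal-length inputs with no tied values."""
    n = len(xs)

    def rank(values):
        order = sorted(range(n), key=lambda i: values[i])
        ranks = [0] * n
        for position, index in enumerate(order, start=1):
            ranks[index] = position
        return ranks

    rx, ry = rank(xs), rank(ys)
    d_squared = sum((a - b) ** 2 for a, b in zip(rx, ry))
    return 1 - 6 * d_squared / (n * (n * n - 1))

# Hypothetical monthly visits for five sites, plus two third-party estimates.
actual_traffic = [1000, 5000, 200, 12000, 800]
estimator_a = [900, 4000, 300, 15000, 1100]
estimator_b = [3000, 100, 2500, 400, 5000]

score_a = spearman_no_ties(estimator_a, actual_traffic)
score_b = spearman_no_ties(estimator_b, actual_traffic)
print(f"estimator_a: {score_a:.2f}, estimator_b: {score_b:.2f}")
```

With these invented figures, `estimator_a` scores 0.90 and `estimator_b` scores -0.60, so the first would be the more useful predictor for this set of sites. Real comparisons need far more than five sites before the number means much.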

E. Finding elements to test

So if I see that large images embedded on a page that’s already ranking on page 1 of search results have a 0.61 correlation with the image from that page ranking among the first few image results, wow, that’s really interesting. You know what? I’m going to go test that and take big images and embed them on my pages that are ranking and see if I can get the image results that I care about. That’s great information for testing.

This is all stuff that correlation is useful for. Correlation in SEO, especially when it comes to ranking factors or ranking elements, can be very misleading. I hope that this will help you to better understand how to use and not use that data.

Thanks. We’ll see you again next week for another edition of Whiteboard Friday.

Video transcription by Speechpad.com

The image used to promote this post was adapted with gratitude from the hilarious webcomic, xkcd.

Photo

SEO Ranking Factors & Correlation: What Does It Mean When a Metric Is Correlated with Google Rankings?

In an industry where knowing exactly how to get ranked on Google is murky at best, SEO ranking factors studies can be incredibly alluring. But there’s danger in believing every correlation you read, and wisdom in looking at it with a critical eye. In this Whiteboard Friday, Rand covers the myths and realities of correlations, then shares a few smart ways to use and understand the data at hand.

Video Transcription

Howdy, Moz fans, and welcome to another edition of Whiteboard Friday. This week we are chatting about SEO ranking factors and the challenge around understanding correlation, what correlation means when it comes to SEO factors.

So you have likely seen, over the course of your career in the SEO world, lots of studies like this. They’re usually called something like ranking factors or ranking elements study or the 2017 ranking factors, and a number of companies put them out. Years ago, Moz started to do this work with correlation stuff, and now many, many companies put these out. So people from Searchmetrics and I think Ahrefs puts something out, and SEMrush puts one out, and of course Moz has one.

These usually follow a pretty similar format, which is they take a large number of search results from Google, from a specific country or sometimes from multiple countries, and they’ll say, “We analyzed 100,000 or 50,000 Google search results, and in our set of results, we looked at the following ranking factors to see how well correlated they were with higher rankings.” That is to say how much they predicted that, on average, a page with this factor would outrank a page without the factor, or a page with more of this factor would outrank a page with less of this factor.

Correlation in SEO studies like these usually means:

So, basically, in an SEO study, they usually mean something like this. They do like a scatter plot. They don’t have to specifically do a scatter plot, but some visualization of the results. Then they’ll say, “Okay, linking root domains had a correlation with higher organic rankings in the 10 blue link-style results to the degree of 0.39.” They’ll usually use either Spearman or Pearson correlation. We won’t get into that here. It doesn’t matter too much.

Across this many searches, the metric predicted higher or lower rankings with this level of consistency. 1.0, by the way, would be perfect correlation. So, for example, if you were looking at days that end in Y and days that follow each other, well, there’s a perfect correlation because every day’s name ends in Y, at least in English.
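To make that number less abstract, here is a minimal Python sketch of how such a figure could be produced: calculate a Spearman correlation between a metric and ranking position within each search result page, then average across pages. Averaging per-page correlations is an assumption for illustration (each study documents its own method), and the link counts below are invented.

```python
from statistics import mean

def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result

def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either input is constant."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # undefined mathematically; a convention for this sketch
    return cov / (var_x * var_y) ** 0.5

def spearman(xs, ys):
    """Spearman correlation is Pearson correlation applied to ranks."""
    return pearson(ranks(xs), ranks(ys))

def serp_correlation(metric_by_position):
    """metric_by_position[0] belongs to result #1, [1] to result #2, and so on."""
    positions = range(1, len(metric_by_position) + 1)
    # Negate positions so a positive value means "more of the metric, better rank".
    return spearman(metric_by_position, [-p for p in positions])

# Hypothetical linking root domain counts for results #1 to #5 on two searches.
serps = [
    [120, 80, 95, 20, 10],
    [15, 40, 30, 5, 60],
]
per_serp = [serp_correlation(s) for s in serps]
study_score = mean(per_serp)
print([round(r, 2) for r in per_serp], round(study_score, 2))
```

In this toy data the first search shows a strong relationship (0.9) and the second a negative one (-0.3), which averages out to 0.3. A single headline number can hide a lot of variation between individual searches.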

So search visits, let’s walk down this path just a little bit. So search visits, saying that they’re 0.47 correlated with higher rankings, if that sounds misleading to you, it sounds misleading to me too. The problem here is that’s not necessarily a ranking factor. At least I don’t think it is. I don’t think that the more visits you get from search from Google, the higher Google ranks you. I think it’s probably that the correlation runs the other way around: the higher you rank in search results, the more visits on average you get from Google search.
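A toy model shows how that can happen. In the Python sketch below, ranking position is the only thing that determines search visits, through an assumed click-through curve. The `CTR_BY_POSITION` values are hypothetical, not measured, and visits are never fed back into the ranking.

```python
from statistics import mean

# Hypothetical share of searchers who click each organic position, #1 to #10.
CTR_BY_POSITION = [0.30, 0.15, 0.10, 0.07, 0.05, 0.04, 0.03, 0.025, 0.02, 0.015]

def search_visits(monthly_searches):
    """Visits per position, caused entirely by where the page already ranks."""
    return [monthly_searches * ctr for ctr in CTR_BY_POSITION]

def pearson(xs, ys):
    """Pearson correlation; assumes neither input is constant."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

positions = list(range(1, 11))
visits = search_visits(10_000)

# Negate positions so a positive value means "more visits, better rank".
r = pearson(visits, [-p for p in positions])
print(f"search visits vs. higher rankings: {r:.2f}")
```

The correlation comes out strongly positive even though, by construction, visits have no influence on rankings here. The number alone cannot tell you which way the arrow points.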

So these ranking factors, I’ll run through a bunch of these myths, but these ranking factors may not be factors at all. They’re just metrics or elements where the study has looked at the correlation and is trying to show you the relationship on average. But you have to understand and intuit this information properly, otherwise you can be very misled.

Myths and realities of correlation in SEO

So let’s walk through a few of these.

1. Correlation doesn’t tell us which way the connection runs.

So it does not say whether factor X influences the rankings or whether higher rankings influence factor X. Let’s take another example: number of Facebook shares. Could it be the case that search results that rank higher in Google oftentimes get people sharing them more on Facebook because they’ve been seen by more people who searched for them? I think that’s totally possible. I don’t know whether it’s the case. We can’t prove it right here and now, but we can certainly say, “You know what? This number does not necessarily mean that Facebook shares influence Google results.” It could be the case that Google results influence Facebook shares. It could be the case that there’s a third factor that’s causing both of them. Or it could be the case that there’s, in fact, no relationship and this is merely a coincidental result, probably unlikely given that there is some relationship there, but possible.

2. Correlation does not imply causation.

This is a famous quote, but let’s continue the famous quote: but it sure is a hint. It sure is a hint. That’s exactly what we like to use correlation for, as a hint of things we might investigate further. We’ll talk about that in a second.

3. In an algorithm like Google’s, with thousands of potential ranking inputs, if you see any single metric at 0.1 or higher, I tend to think that, in general, that is an interesting result.

It does not prove something, and it does not mean that there’s a direct connection, just that it is interesting. It’s worthy of further exploration. It’s worthy of understanding. It’s worthy of forming hypotheses and then trying to prove those wrong. It is interesting.

4. Correlation does tell us what more successful pages and sites do that less successful sites and pages don’t do.

Sometimes, in my opinion, that is just as interesting as what is actually causing rankings in Google. So you might say, “Oh, this doesn’t prove anything.” What it proves to me is pages that are getting more Facebook shares tend to do a good bit better than pages that are not getting as many Facebook shares.

I don’t really care, to be honest, whether that is a direct Google ranking factor or whether that’s just something that’s happening. If it’s happening in my space, if it’s happening in the world of SERPs that I care about, that is useful information for me to know and information that I should be applying, because it suggests that my competitors are doing this and that if I don’t do it, I probably won’t be as successful, or I may not be as successful as the ones who are. Certainly, I want to understand how they’re doing it and why they’re doing it.

5. None of these studies that I have ever seen so far have looked specifically at SERP features.

So one of the things that you have to remember, when you’re looking at these, is think organic, 10 blue link-style results. We’re not talking about AdWords, the paid results. We’re not talking about Knowledge Graph or featured snippets or image results or video results or any of these other, the news boxes, the Twitter results, anything else that goes in there. So this is kind of old-school, classic organic SEO.

6. Correlation is not a best practice.

So it does not mean that because this list descends and goes down in this order that those are the things you should do in that particular order. Don’t use this as a roadmap.

7. Low correlation does not mean that a metric or a tactic doesn’t work.

For example, a high percentage of sites using a tactic will result in a very low correlation. When we first did this study, I think it was 2005 that Moz ran its first one of these, maybe it was ’07, we saw that keyword use in the title element was strongly correlated. I think it was probably around 0.2, 0.15, something like that. Then over time, it’s gone way, way down. Now, it’s something like 0.03, extremely small, infinitesimally small.