Week 13 Process One

Storyline we designed:

1. Prologue:

“To find freedom from suffering, we must sever the root that binds us - the illusion of self and the material world. It's all a fleeting dream, a shimmering bubble, a passing phantom”

-Lu Yang. The great adventure of Material World.

Lu, Y. (2020). The great adventure of Material World [game film, time-based art]. Art Gallery NSW. https://www.artgallery.nsw.gov.au/collection/works/170.2021/#about

2. Transition:

(enter Banksy's perspective) "Banksy world is now open"

3. Ending:

"What's your perception of the world?"

We completed the shoot at the Art Gallery of New South Wales using an Insta360 camera. We plan to use two of these clips in the movie. As an introduction, we will use footage of a digital artwork that conveys a sense of travel and is relevant to Banksy's style. At the end, we will use the clip that looks up at the artwork.

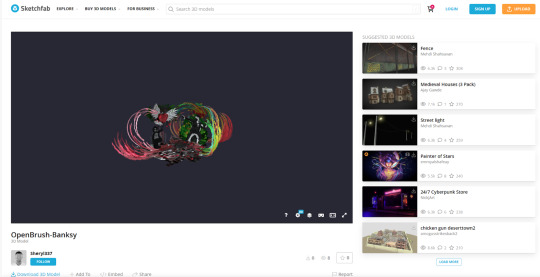

We have finished painting four of Banksy's street works in OpenBrush. However, we ran into difficulties when exporting, which we still need to resolve.

The four Banksy works we hope to present are: Flower thrower, Girl with balloon, Dove in a bulletproof vest, and Band-aid balloon.

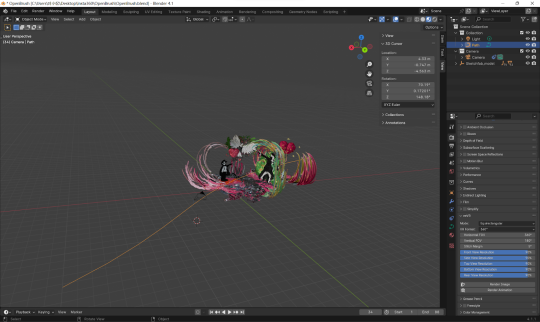

We designed and set up the camera action track in Blender. After the OpenBrush model is exported, we will adjust it accordingly in Blender.


(Week 13) Process

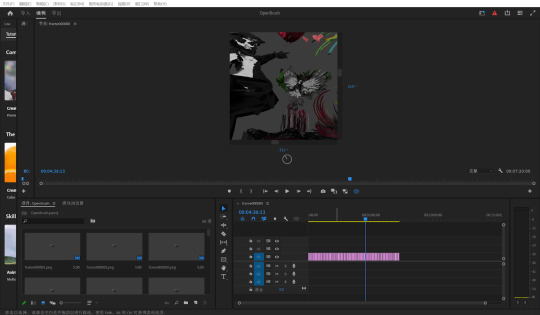

In the making of the video “Banksy's World”, we primarily use three software programs: OpenBrush, Blender, and Premiere Pro.

The challenges we encountered with OpenBrush were mainly related to exporting. After creating content in OpenBrush during class, we were unable to export it.

This weekend, we borrowed a Meta Quest 3 from the loan store to redo the painting.

This time, we successfully exported by selecting the ‘Share’ option in OpenBrush, making the artwork public on Sketchfab, and then downloading the GLB file.

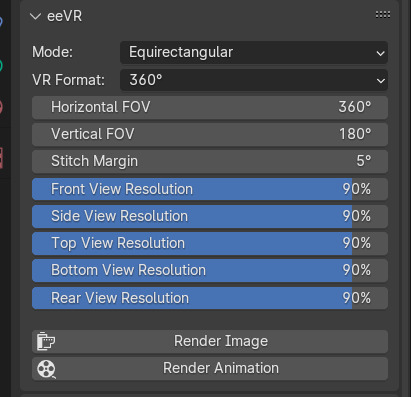

After that, we imported the GLB file into Blender, made adjustments, and added the camera. We wanted to create a shuttle effect, so we set up an S-shaped path and made the camera follow it. We then installed the eeVR plugin, adjusted its parameters (such as mode, VR format, and resolution), and rendered the sequence frames.
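The S-shaped camera path can be sketched as a list of sample points. This is only a rough illustration in plain Python, not the setup we actually built in Blender: the function name `s_path`, the one-period sine shape, and the numbers are all assumptions made for the example. The points could serve as control points for a path curve.

```python
import math


def s_path(n_points, length, amplitude):
    """Return n_points (x, y, z) samples along an S-shaped path.

    Hypothetical helper: the path runs along the x axis from 0 to
    `length`, and y follows one full sine period, which gives the S
    shape. An amplitude of 0 produces a straight line.
    """
    if n_points < 2:
        raise ValueError("need at least two points")
    points = []
    for i in range(n_points):
        t = i / (n_points - 1)  # 0.0 at the start, 1.0 at the end
        x = t * length
        y = amplitude * math.sin(2 * math.pi * t)
        points.append((x, y, 0.0))
    return points


# Assumed example values: a 20-unit path that swings 3 units to each side.
curved = s_path(9, 20.0, 3.0)
# Same path with no sideways swing, i.e. a straight line.
straight = s_path(9, 20.0, 0.0)
```

Keeping the sideways swing as a single `amplitude` value means the curve can be flattened step by step, down to a straight line, without rebuilding the path.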

Here, we will make some adjustments:

Due to time constraints, we haven't added a background behind the model yet. We will add it later.

If we find that the path curves too much when viewed through the device and causes vertigo, we will change it to a straight line.

When importing into Blender, we found that some of the effects from OpenBrush, such as light spots and smoke, did not appear. We are going to try adding similar effects in Blender.

The main parameters we adjusted:

The sequence frames:

The next step was to import the sequence frames into Premiere Pro and output the panoramic video. We have already completed the output of the 360 video.

Our follow-up task in Premiere Pro is to adjust the timing of this video to make it fit better into our overall video.

To conclude, in addition to the follow-up work mentioned above, our next tasks are:

Create transitions between the parts: we plan to draw a time tunnel in OpenBrush, set up the camera in Blender, and output the video (in the same way as we did in the 'Banksy's World' part of the video).

Add background music.

Watch it in VR and debug it.


Waves of Grace


Waves of Grace (youtube.com)

Introduction:

The story dives into Liberia's struggle with the biggest Ebola outbreak ever and the remarkable journey of Decontee Davis, an Ebola survivor turned caregiver for orphaned kids in her village.

The purpose of this video is to spotlight Ebola's aftermath and how kids dealt with losing their families. The creators of the video recognize that to engage viewers and prompt meaningful action, storytelling is key. The video invites viewers to step into her world and experience her journey firsthand through VR technology, tapping into our innate empathy and bridging the gap between us and the distant event.

Spatial Arrangement and Visual Direction:

I think this video does a very good job of spatial arrangement of the camera, visual direction and pacing control.

Camera Placement: The way the camera is positioned really helps draw us into the scenes. For instance, it adjusts the distance between the camera and the crowd depending on what's happening.

Visual direction: Even though the camera doesn't move, the video uses clever visuals to direct our attention. For example, when characters move or act, it prompts us to look where the action is.

Pacing control: The sound effects and transitions in this video are skillfully set up. It creates a smooth flow that keeps us engaged and focused throughout the video.

Since this movie is about ten minutes long, I won't show all of it. I'll follow its narrative logic to analyze some of the content that inspired me.

Narrative:

The video's narrative unfolds in four stages.

Stage 1 | 00:00-02:00:

The first part shows homes being rebuilt after Ebola, kids going back to school, markets opening up, and people going back to work. The camera is set in the middle of the crowd. For example, in the classroom scene, the camera is up high, so we see what the kids are learning, almost as if we were there. Being in the middle of everything also makes us want to peek around and see what's going on behind us, so I think it's a good way to direct our attention.

Stage 2 | 02:00-03:30:

In this part, Decontee Davis remembers her time with Ebola.

A standout moment occurs when the screen goes dark, depriving the viewer of visual stimuli and emphasizing the power of sound and narration. This part really got to me. During the ten-second blackout, I felt a suffocating sense of desperation. The blackout conveyed her despair and pain more vividly than just describing Ebola symptoms. It's a smart trick that makes us connect more with Decontee's story, using just sounds to draw us in.

Maybe the black screen was because they couldn't get the relevant video materials. I think it's a good way to handle it.

Stage 3 | 03:30-07:00:

Stage 3 tells the story of how Decontee Davis started caring for kids who lost their parents to Ebola. We get a better sense of how difficult it is for these children to survive in that situation.

Stage 4

The end brings us back to the beach, echoing the start and the title "Waves of Grace."

Watching the waves and the sunset while listening to the calming background music, it felt like we could also sense the hard-earned relief of starting anew and rebuilding a home.

Pacing Control:

The use of visual cues and pacing control ensures a smooth transition between scenes, keeping viewers engaged without feeling abrupt or disjointed.

Auditory: In terms of sound, the video has calming background music, realistic ambient sounds, and spoken monologues. The music changes to match the scene, like being soothing during stage 1 and more intense during stage 2, then lighter at the end, setting the mood effectively.

Visual cues - Black screen and fading out: Visual cues such as black screens and fading scenes are seamlessly used. It creates smooth transitions between locations and maintains the movie's tone and rhythm throughout.

Potential Improvement

There are areas for potential improvement, such as enhancing the visibility of subtitles through strategic cues or visual guides.

The subtitles actually appear in the video sometimes, but I missed them the first time I watched this video since I was looking elsewhere.

I think it's possible to add sounds and cues or visual guides, such as having characters point to the subtitles or walk in the direction of the subtitles.

The visual guide at the end, for example, is great. The kids run ahead, and then the subtitles appear right after.

In conclusion, the video brings us close to the complex issues on a deeply personal level. That's why I think it's a great piece of work.


ASM 1 - Safe Haven

As a kid, I was full of imagination but also scared of the dark. Whenever I had to sleep alone, I felt like everything outside of my bed was dangerous. The floor, nightstand, and even the space under the bed seemed like off-limits areas. The darkness in my room felt all-encompassing, leaving the bed as my only safe haven. In Blender, I tinkered with the model I got from the 3D Scanner. I got rid of the floor to create the illusion of a floating island for the bed. This was my way of expressing how I felt when I was so terrified that I wouldn't dare leave the bed, even if I heard noises from outside. Hearing becomes sharper in the dark, so I set up various sounds behind the door, under the bed, and behind the curtains.

In that scenario, I stayed glued to the bed, interpreting the darkness on the floor as a sign of danger and inaccessibility.

______________________________________________________________

I hit some technical snags while working on it. For example, after tweaking the model in Blender and bringing it into Styly, I realized the colors wouldn't appear no matter what format I tried. Yet, the model from the 3D Scanner shows its colors just fine in Styly. Since I haven't figured out how to solve this yet, I'm stuck just making the model grey in Styly, trying to capture the difficulty of seeing in the dark.


Inspiration | Project 1

The requirement is to 'Capture the essence of a space'

Self-drive tour

For me, every driving trip is a unique and memorable experience.

So I would like to show a driving tour. The viewer will act as a passenger in the car.

Main things to show:

1. a beautiful view from the window

2. the cheerful atmosphere inside the car

3. the free and cozy feeling when driving around in the car

4. the sound of the wind outside the window, the background music in the car, and the conversations of fellow passengers.

Technically speaking, the reason for choosing a self-drive tour is:

The technical limitations mentioned in the previous lesson, such as the audience not being able to see their own limbs moving and not being able to choose their own course of action, can be solved in the self-driving tour scenario.

1. the audience is fixed in their seats during the self-driving tour and does not need to move.

2. the audience hardly needs to make any physical movements; sight and hearing matter most, so it is easier for the audience to be immersed in such a scene.

3. in a real self-driving tour, our main action is looking around at the scenery, and 360 filming fits such a scene well.

In terms of execution, the difficulty lies mainly in the shooting. A good view needs to be chosen.


Research Blog | 1

A basic game-like experience, more like a guide to help players familiarize themselves with 360° VR operations. From a user-centered perspective, such a guide could be added when players are new to VR, and the experience could help them get up to speed on VR use and avoid vertigo.


This 360°VR got me thinking about the limitations of this kind of 360°VR, for example:

Fixed position. The viewer can't move around on their own.

No interaction. The player can't click on a target when they find it. The experience might be better if interaction can be added and the score is eventually calculated based on what the user actually does.

Similar to this game experience recording, the viewer's inability to move their position on their own results in an action path that can only follow the system settings.

360° Mommy Long Legs Chases YOU in VR! Poppy Playtime Chapter 2 ENDING (youtube.com)

When viewers switch viewpoints, they may miss the image the video is trying to show. In this case, 360°VR doesn't contribute to a good experience, and I don't think it is better than normal video, because normal video plans every frame and provides visual guidance, which can enhance the viewer's visual experience.

This brings me to another point:

"Audio-visual mutual enhancement" usually relies on playing a specific sound effect when a specific image appears. In a situation where the viewer is free to explore, the designer cannot dictate what the viewer will see at a given moment. So such enhancement cannot be achieved.


Inspiration:

When I was a kid, I was always afraid to put my arms or legs out of my bed when I slept alone, because it felt like I would be attacked by monsters and ghosts if I did. (Of course, these monsters were only in my imagination.)

It was like the bed was a safe zone. It's a precise distinction between safe and unsafe.

I think it is possible to recreate this feeling as a child through mixed reality production.

For the scene setting, I think the main scene can be the bed in our room. But this means that the user cannot move, or can make only very small movements.

As shown in the picture above, there will be four vertical transparent barriers around the bed. At first I thought that the barriers would be made of glass, but then I wanted to reflect that the monsters and ghosts are close to us and very dangerous, so perhaps plastic or thin film barriers would be better?

Sound:

There will be sounds of monsters moving in the environment. The sound will be reduced inside the barrier and enhanced outside the barrier.

Interaction with monsters and ghosts:

Outside the barrier, there will be mist and faintly moving figures of monsters and ghosts. The monsters and ghosts will move randomly while the user is inside the barrier. When the user approaches or touches the barrier, the monsters will approach and slap the barrier violently (but they won't enter the barrier). When the user steps out of the barrier, the monsters and ghosts will follow the user.
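The three monster behaviours above can be written as one small decision function. This is only a sketch of the logic, not tied to any particular mixed reality tool: the function name, the behaviour labels, and the 0.3 m "near the barrier" threshold are all assumptions, and it takes for granted that the headset can report where the user is.

```python
def monster_behaviour(distance_to_barrier, user_inside, near_threshold=0.3):
    """Pick a monster behaviour from the user's position.

    distance_to_barrier: metres between the user and the nearest barrier.
    user_inside: True while the user is still inside the barriers.
    near_threshold: assumed distance that counts as approaching or
        touching the barrier (hypothetical value).
    """
    if not user_inside:
        # The user has stepped out of the safe zone.
        return "follow_user"
    if distance_to_barrier <= near_threshold:
        # The user is at the barrier, but the monsters stay outside it.
        return "slap_barrier"
    return "wander_randomly"


# Example checks with assumed distances.
in_bed = monster_behaviour(1.0, user_inside=True)
at_edge = monster_behaviour(0.1, user_inside=True)
stepped_out = monster_behaviour(0.5, user_inside=False)
```

Checking `user_inside` first means the "follow" behaviour always wins once the user leaves the bed, no matter how close they still are to the barrier.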

"Calling Parents" (I remembered that when I was especially scared, I would call my mom and dad to turn on the light because I was too afraid to leave my bed. And my fear would decrease when the light was turned on.)

There will be a clickable button. Users can click it to "call parents" and wait 30 seconds for the parents to open the door and turn on the light. The room will become brighter and turn into a normal room. After the parent turns off the light and leaves, the room slowly returns to its initial appearance within 1 minute.
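The timing of "call parents" can be sketched as a brightness value over time. The 30-second wait and the 1-minute return come from the description above; how long the light stays on (20 seconds here), the linear fade, and the function name are assumptions made for the example.

```python
def room_brightness(t, wait=30.0, lights_on=20.0, fade=60.0):
    """Brightness in [0, 1] at t seconds after "call parents" is clicked.

    wait: seconds until the parents open the door and turn on the light.
    lights_on: assumed time the light stays on (hypothetical value).
    fade: seconds for the room to return to its initial dark state.
    """
    if t < wait:
        return 0.0  # still dark, waiting for the parents
    if t < wait + lights_on:
        return 1.0  # light on, the room looks normal
    elapsed = t - wait - lights_on
    # Linear fade back to darkness, clamped at 0 once the minute is over.
    return max(0.0, 1.0 - elapsed / fade)


# Brightness halfway through the fade, using the assumed durations.
halfway = room_brightness(80.0)
```

A linear fade is the simplest choice; an eased curve might feel more natural, but that would need testing in the headset.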

"Get under the covers" or "Close your eyes"

There will be a clickable button. Users can click it to "get under the covers" or "close your eyes". When the button is clicked, users' vision will fall into darkness and the sound will diminish.

But there are some technical problems:

I don't know if it is possible to produce the interaction. Put simply, we can set up clickable buttons for some of it (calling parents to turn on the light, going under the blanket, closing the eyes), but do the moving monsters require some kind of location-aware technology?

Does the barrier need to be modeled?
