Sum up: Source and Object Code

source code > what the programmer types; legible to readers who understand programming. The source code a programmer writes usually gets compiled or interpreted by an intermediary program that translates the human-readable language into a language the computer can parse.

object code > the compiler’s translation of the source code; with this translation, the computer can follow the directions the programmer has written. Object code is often not readable by humans; for this reason, much commercial software is distributed in object code format to keep specific techniques from competitors.
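The difference between the two forms can be glimpsed with a minimal Python sketch. It is only an analogy: Python's `compile()` produces bytecode for the Python virtual machine, not native object code, and the variable names below are chosen for illustration.

```python
# Source code: readable by anyone who knows the language.
source = "a = (x * 2) / y"

# Translate the source into a code object. Note: this is Python
# bytecode, used here as a stand-in for compiled object code.
code_obj = compile(source, "<example>", "exec")

# The translated form is a sequence of raw bytes, hard for humans to read.
raw = code_obj.co_code
print(raw.hex())

# The machine (here, the Python virtual machine) can follow the
# translated directions directly.
namespace = {"x": 3, "y": 4}
exec(code_obj, namespace)
result = namespace["a"]
print(result)
```

The printed hex string differs between Python versions, which is one more reminder that the translated form is meant for the machine rather than for readers.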

ALGOL

“Structured programming,” pioneered in ALGOL, is a design that allows programmers to trace the path of a program’s execution. For any given line of code, the programmer can tell where the program came from and where it flows next, making programs easier to follow and debug. Structured programming also lends itself well to modularity, so that programmers can divide a program into different sections of code on which they can work individually before reassembling them.
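As a rough illustration of the modularity described above, here is a small sketch in Python (not ALGOL). All function names are hypothetical. Each section has one entry and one exit, so the path of execution can be traced line by line, and each piece could be written and tested separately before being combined.

```python
def read_scores(raw):
    """Section 1: turn a comma-separated string into a list of integers."""
    return [int(item) for item in raw.split(",")]


def average(scores):
    """Section 2: compute the mean of the scores."""
    return sum(scores) / len(scores)


def classify(score, mean):
    """Section 3: a single selection with two clearly visible branches."""
    if score > mean:
        return "Higher than average"
    return "Lower than or equal to average"


def report(raw):
    """Reassemble the sections: sequence, then iteration over the scores."""
    scores = read_scores(raw)
    mean = average(scores)
    return [classify(score, mean) for score in scores]


print(report("10,20,60"))
```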

Sherry Turkle argues that structured programming, especially in the 1970s and 1980s, emphasized “a male-dominated computer culture that took one style as the right and only way to program.”

The legacy of automatic programming includes government funding from DARPA in the 1970s and 1980s and some design aspects of the Java programming language in the 1990s.

COBOL

COBOL was friendlier to report layouts and large data structures and used more English words to represent orders, so it was more accessible to managers and businesses. COBOL was also designed to be platform independent (that is, to work across a number of different manufacturers’ machines) in order to streamline the local and idiosyncratic practices of programming that had cropped up around shared machines.

LISP

LISP (now Lisp) was developed shortly after Fortran by John McCarthy at MIT and has been the vehicle for many language developments, such as dynamic typing and conditional statements. On the heels of Lisp and Fortran was COBOL (Common Business Oriented Language), which was based on Hopper’s B-0 compiler and designed in 1959 by a committee of representatives from government and computer manufacturers to read with English-like syntax.

FORTRAN

FORTRAN, the first successful higher-level language to work with a compiler, was released in 1957 by John Backus at IBM.

The language was quickly adopted by IBM programmers as it allowed them to write code much more quickly and concisely than assembly language did, and it had a significant influence on other programming language development. Here is a snippet of Fortran code:

    A = (x * 2) / Y

Anyone familiar with mathematical notation can read this as “A equals x times 2, divided by Y.” In Fortran, like most programming languages, the equal sign (=) refers to assignment rather than equation; therefore, this statement assigns the value of (x * 2) / Y to the variable A.
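The distinction between assignment and equation can be sketched in Python, which follows the same convention as Fortran on this point. The values chosen for `x` and `y` are arbitrary assumptions for the example.

```python
x = 3.0
y = 4.0

# Assignment: evaluate the right-hand side, then store it in a.
a = (x * 2) / y

# Comparison uses a different operator (==) and yields True or False.
is_equal = (a == 1.5)

# As an equation, "x = x + 1" has no solution; as an assignment it
# simply means "take the old x, add 1, and store the result back in x".
x = x + 1

print(a, is_equal, x)
```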

Fortran, which stands for “formula translation,” points to the primary use of computers at the time, to calculate complex formulas, but it also gestures forward to an idea of programming less tied to the field of engineering or mathematics. Fortran’s more legible notation meant that programming could be done by nonprofessionals.

Binary numbering

Computers, however, work with electrical impulses that have two discrete states. You can visualize this system by thinking of a standard light switch. The bulb can be in one of two possible states at a given time; it is either on or off. This is a digital signal.

Binary numbering uses only two digits, 0 and 1. Each binary digit (each 0 or 1) is called a bit. In binary numbering, as in decimal, the value of a digit is determined by its place. However, in binary, each place represents 2 times the value of the place to its right (instead of 10 times, as in base 10).
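The place-value rule can be made concrete with a short Python sketch. The function name `binary_to_decimal` is made up for this example; Python's built-in `int(bits, 2)` does the same job.

```python
def binary_to_decimal(bits):
    """Convert a string of 0s and 1s to its decimal value.

    Moving one place to the left doubles the weight of a digit,
    so the running total is doubled before each new bit is added.
    """
    total = 0
    for digit in bits:
        if digit not in ("0", "1"):
            raise ValueError(f"not a binary digit: {digit!r}")
        total = total * 2 + int(digit)
    return total


# 1011 = 1*8 + 0*4 + 1*2 + 1*1
print(binary_to_decimal("1011"))
```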

The word byte comes from the English bite, as the smallest portion of data that a computer can “bite” at one time.

A byte generally equals 8 bits. Bytes are the units that computers usually use to represent a character (a letter of the alphabet, a numeral, or a symbol). Eight bits is also called an octet, especially in the context of IP addressing.
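A minimal sketch of both uses of the byte, assuming 8-bit bytes and characters in the ASCII range. The helper names and the sample address `192.168.0.1` are illustrative choices, not taken from the notes above.

```python
def char_to_bits(ch):
    """Show the 8 bits that represent a single ASCII character."""
    return format(ord(ch), "08b")


def ipv4_to_octets(address):
    """Split a dotted IPv4 address into its four octets, shown in binary."""
    return [format(int(part), "08b") for part in address.split(".")]


print(char_to_bits("A"))              # the letter A is stored as the number 65
print(ipv4_to_octets("192.168.0.1"))  # four octets, one per dotted field
```

Characters outside the ASCII range need more than one byte in most encodings, which is why the note above says a byte "usually" represents a character.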

High-level language

A high-level language must be converted into machine language before the computer can use the program. This is done by a program called a compiler, which reorganizes the instructions in the source code, or by an interpreter, which immediately executes the source code. Because different computing platforms use different machine languages, there are different compilers for a single high-level language to enable the code to run on different platforms.

Machine code

The data stored in computers is written in the “language” of ones and zeros, or binary language (also called machine language or machine code).

↧ Machine language is the lowest level of programming language.

↧ One level up is assembly language, which allows programmers to use names represented by ASCII characters, rather than just numbers. Code written in assembly language is translated into machine language by a program called an assembler.

↧ Most programmers write their code in high-level languages (for example, BASIC, COBOL, FORTRAN, or C++). High-level languages are “friendlier” than other languages in that they are more like the languages that humans write and speak to communicate with one another and less like the machine language that computers “understand.”
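What an assembler does can be sketched with a toy example in Python. The instruction set below is entirely hypothetical (the mnemonics and opcode numbers are invented for illustration and match no real processor); the point is only that names are replaced by numbers.

```python
# Hypothetical opcodes for a made-up machine.
OPCODES = {"LOAD": 0x01, "ADD": 0x02, "STORE": 0x03, "HALT": 0xFF}


def assemble(source):
    """Translate mnemonic names into numeric machine code.

    Each line is a mnemonic followed by optional numeric operands
    (0-255). Text after ';' is treated as a comment.
    """
    machine_code = []
    for line in source.splitlines():
        line = line.split(";")[0].strip()
        if not line:
            continue
        parts = line.split()
        mnemonic = parts[0].upper()
        if mnemonic not in OPCODES:
            raise ValueError(f"unknown instruction: {mnemonic}")
        machine_code.append(OPCODES[mnemonic])
        machine_code.extend(int(operand) for operand in parts[1:])
    return bytes(machine_code)


program = """
LOAD 10   ; fetch the value at address 10
ADD 11    ; add the value at address 11
STORE 12  ; write the result to address 12
HALT
"""
print(list(assemble(program)))
```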

Compiler, 1951

Working on the UNIVAC computer, Hopper wrote the first compiler in 1951. The first successful higher-level language to work with a compiler, FORTRAN, was released in 1957 by John Backus at IBM.

Approaching the code

EDVAC moved programming from physical engineering in wiring to symbolic representation in written code ↦ writing and engineering: the computer is controlled by both electrical impulses and writing systems ↦ etymology of “programming”: “gram” means writing; the term was first borrowed by John Mauchly from the context of electrical signal control in his description of ENIAC in 1942.

1948, a group at Cambridge figured out that the computer could be made to understand letters as well as numbers. Letters could be made into numbers: human-readable source code could be translated into computer-readable machine code through an intermediary program they called an “assembler.” A snippet of assembly language:

    cmp edx,32h
    jle wmain+32h
    push offset string "Higher than average\n"
    jmp wmain+37h
    push offset string "Lower than average\n"
    call dword ptr [__imp__printf]

1950s, compilers, a new generation of translation programs, allowed source code to be imbued with more semantic value by making the computer a more sophisticated reader (and writer) of code. ↦ Grace Hopper, 1952, “It is the current aim to replace, as far as possible, the human brain by an electronic digital computer.”

Grace Hopper taught mathematics and programmed the Mark I. She later left to work on UNIVAC and early programming languages, especially COBOL for business computing.

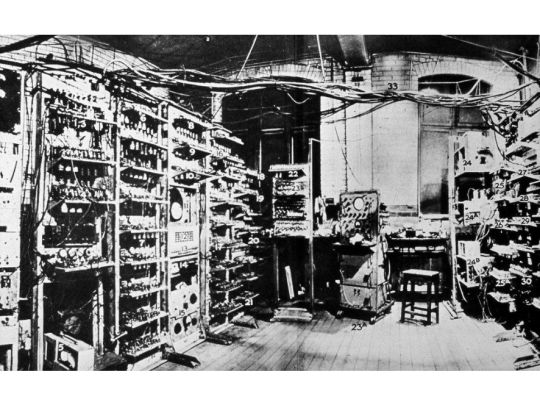

1948, Manchester Small Scale Experimental Machine “Baby”. First computer to execute a program from memory.

Stored program

1945, John von Neumann’s team developed the concept of a “stored program,” which allowed the computer to store its instructions along with its data. The “von Neumann architecture” moved the concept of programming from physical engineering to symbolic representation.

“Hidden Figures” is loosely based on the 2016 non-fiction book of the same name by Margot Lee Shetterly about black female mathematicians who worked at the National Aeronautics and Space Administration (NASA) during the Space Race.

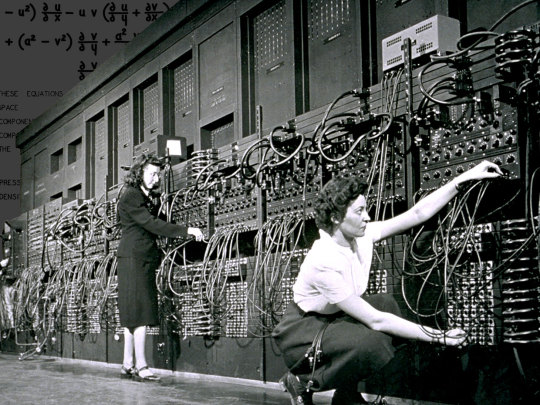

Engineers vs. Programmers

Behind the computer's apparent intelligence was the programming work of a team of six women, who themselves had previously worked as “computers.” This fact was hidden from the press, since it could reveal that the computation was still too human. Computing was considered clerical work, a task too tedious for the male engineers to engage in. So the Ballistic Research Laboratory, working on ENIAC, hired women (who mostly had university degrees and displayed high mathematical aptitude) to handle the job.

Marlyn Wescoff (left) and Ruth Lichterman were two of the female programmers of ENIAC.

First computers usage

The first computers were used for massive mathematical calculations, such as code breaking or weapons ballistics. ENIAC could compute a bomb trajectory in less time than a shell would take to fly from gun to target. Complicated calculations were worked out using basic functions such as adding and subtracting, put together in specific sequences by a circuit designer.
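The idea of building complicated calculations from basic functions can be sketched in Python. This is an illustration of the principle only, not a model of ENIAC's actual circuitry; the function names are invented for the example.

```python
def multiply(a, b):
    """Multiply two non-negative integers using only repeated addition."""
    total = 0
    for _ in range(b):
        total = total + a
    return total


def power(base, exponent):
    """Raise base to a non-negative integer exponent using only multiply()."""
    result = 1
    for _ in range(exponent):
        result = multiply(result, base)
    return result


# A more complicated operation assembled from a fixed sequence of additions.
print(multiply(6, 7))
print(power(2, 10))
```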

ENIAC was not finished in time to compute bomb trajectories during the war. But shortly thereafter, it was enlisted by John von Neumann to perform nuclear fusion calculations. This required the use of over 1 million punch cards.
