#AR/VR/MR Optics and Display Market

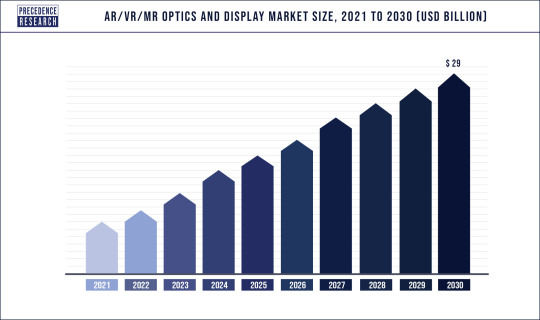

AR/VR/MR Optics and Display Market Worth Nearly US$ 29 Bn by 2030

The global AR/VR/MR optics and display market is predicted to reach around US$ 29 billion by 2030, growing at a healthy CAGR from 2022 to 2030, according to a 2022 study by Precedence Research, the Canada-based market insight company. The AR/VR/MR optics and display market encompasses the full range of optics and display technologies used in spatial reality products.

The…


Thoughts after Vision Pro: Where does China’s VR/AR industry go from here?

Over the past half century, each major Apple innovation has opened up a new hardware paradigm, with a huge incremental market behind it. The launch of Apple Vision Pro has set the entire VR and AR industry alight. So what ripple effect will it have on Chinese VR makers such as PICO?

In April this year, PICO officially launched its standalone VR headset, the PICO 4 Pro, with improvements to its chips, optical components and overall experience. Xiaomi has also been building up its strength in this field and launched its first AR glasses in February this year. The signal sent by Vision Pro will certainly accelerate the development of the VR industry, and it will also create opportunities across the VR equipment supply chain.

Imagine being able to watch movies and play games on a 150-inch screen at home. What was once the stuff of science fiction films will soon become reality. However, the high price of Vision Pro brings people back down to earth: AR/VR is still some way from reaching ordinary households. For the industry as a whole, though, it is a highly influential start.

Apple’s landmark product also offers a useful reference point, and a sense of direction, for the Chinese VR/AR industry on the other side of the ocean.

Apple, an “AR Empire”

The birth of Apple Vision Pro opened the "post-iPhone era."

Vision Pro can fairly be called a milestone. Its mixed reality (MR) overlays the virtual world on the real one, and it is operated with nothing more than the eyes and hand gestures. Vision Pro has many highlights worth discussing, with far-reaching significance for the VR and AR industries and their upstream and downstream supply chains.

The first highlight is the chip. Vision Pro not only uses Apple’s self-developed M2 chip but also adds a new R1 chip. Together, the two bring Vision Pro’s computing power up to the level of a regular computer.

Of the two, the M2 is the latest chip used in Apple’s computers and handles general-purpose processing, while the R1 is designed specifically for head-mounted displays and processes the data coming from the cameras and sensors.

It is this M2 + R1 combination that makes the cameras, sensors and microphones on Apple’s headset more responsive while keeping latency as low as possible.

Apple reportedly developed at least three chips from scratch for Vision Pro, and the M1 chip used in the MacBook was itself born out of that development work.

The second highlight is that Vision Pro achieves mixed reality with 12 cameras and 5 sensors. These relay the surrounding environment onto the displays in real time and continuously track facial expressions and hand gestures.

The end result is that, wearing Vision Pro, you can see the “world” of your phone and computer laid over the real world, which is what makes it mixed reality. What Apple wants to do is turn the future imagined in science fiction films into reality. For example, people could watch movies and play games on a 150-inch screen at home and enjoy a cinema-like experience, or open office software in a virtual workspace simply by looking up while on an airplane.

Alongside Vision Pro, Apple also designed a new operating system for it: visionOS.

This is the most revolutionary highlight of all. It’s no secret that each new category of Apple hardware comes with its own operating system, and visionOS continues that tradition; Apple calls it "the first operating system designed for spatial computing."

At the architectural level, visionOS shares core modules with macOS and iOS, but adds a "real-time subsystem" for processing interactive visual effects on Apple Vision Pro.

Unlike Apple's other operating systems, visionOS is freed from the boundaries of a traditional display. Its three-dimensional interface lets applications appear side by side at different scales, and because apps reflect natural light, users can sense how far away each one is.

In visionOS, users first enter the "Shared Space," which is similar to the desktop on tablets and phones. When users focus on an application with their eyes, its image changes from flat to three-dimensional.

Users of the new operating system can also draw on an app ecosystem Apple has built specifically for Vision Pro, including apps from Adobe, Microsoft Teams and Zoom. In addition, Apple has designed a developer tool suite so that third-party developers can build their own applications for Vision Pro.

Although visionOS is still in its infancy, its potential can be gauged from the size of its developer base: according to Apple, visionOS builds on a community of 36 million registered Apple developers.

"It takes ten years to sharpen a sword," as the saying goes. Apple spent eight years and tens of billions of dollars on Vision Pro. It not only developed key components in-house but also selected multiple exclusive suppliers, such as Sony and TSMC. At the recent WWDC23 conference, Tim Cook once again used the term "revolutionary product."

Since Zuckerberg’s Facebook acquired Oculus in 2014, Apple has steadily expanded its AR/VR research and development. According to recent reports, Apple acquired a total of 11 AR and VR companies in the eight years from 2015 to 2023, investing US$1 billion a year.

Apple’s headset not only follows an in-house route for key components such as the operating system and chips, but also relies on exclusive suppliers for other parts. Sony exclusively supplies the Micro OLED displays; Mitsubishi Chemical Company supplies the precision lenses; TSMC, the world's largest contract chipmaker, manufactures the chips; Changying Precision is the main supplier of casings; Gaowei Electronics exclusively supplies the camera modules; Goertek exclusively supplies the external power supply and acoustic units; and Luxshare Precision exclusively handles assembly.

From a global perspective, the launch of Vision Pro has brought hope to some electronic component makers, though industry insiders have voiced concerns. Asian suppliers may see sales rise significantly and the market gradually pick up, but their recovery depends on the overall VR/AR market environment, and in recent years the industry's performance has been somewhat disappointing.


Samsung invests in DigiLens XR glasses firm at valuation over $500M

DigiLens has raised funding from Samsung Electronics in a round that values the augmented reality smart glasses maker at more than $500 million.

Sunnyvale, California-based DigiLens did not disclose the exact amount it raised for the development of its extended reality (XR) glasses, which will offer AR features such as overlaying digital images on what you see.

DigiLens CEO Chris Pickett said in a previous interview with VentureBeat that the latest smart glasses are more advanced than models the company showed in 2019.

DigiLens’ premier product is a holographic waveguide display: a thin-film, laser-etched photopolymer embedded with microscopic holograms of mirror-like optics. The image from a micro-display is projected into one end of the lens, where the optics turn the light wave and guide it through the lens before another set of optics turns it back toward the eye.
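Waveguides of this kind generally keep the light trapped inside the lens by total internal reflection (TIR): the in-coupling optics must bend the projected light steeply enough that it reflects off the lens surfaces instead of escaping, until the out-coupling optics redirect it toward the eye. The minimal Python sketch below illustrates only that condition, using Snell's law at a flat lens-to-air boundary. The refractive index of 1.5 is an illustrative assumption for a generic glass, not a DigiLens specification, and real holographic waveguides involve diffraction effects this sketch ignores.

```python
import math


def critical_angle_deg(n_core: float, n_clad: float = 1.0) -> float:
    """Critical angle, in degrees from the surface normal, for light travelling
    from a denser medium (n_core) toward a less dense one (n_clad).

    From Snell's law: sin(theta_c) = n_clad / n_core.
    """
    if n_core <= n_clad:
        raise ValueError("total internal reflection requires n_core > n_clad")
    return math.degrees(math.asin(n_clad / n_core))


def is_guided(internal_angle_deg: float, n_core: float, n_clad: float = 1.0) -> bool:
    """True if a ray striking the lens surface at internal_angle_deg (measured
    from the normal) is totally internally reflected, i.e. stays in the guide."""
    return internal_angle_deg > critical_angle_deg(n_core, n_clad)


if __name__ == "__main__":
    n_glass = 1.5  # assumed, illustrative refractive index
    print(f"critical angle: {critical_angle_deg(n_glass):.1f} deg")
    for angle in (30.0, 45.0, 60.0):
        print(angle, "guided" if is_guided(angle, n_glass) else "escapes")
```

With n = 1.5 the critical angle comes out near 41.8 degrees, so in this simplified model a ray meeting the surface at 45 degrees stays guided while one at 30 degrees leaks out of the lens.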

DigiLens refined this technique more than a decade ago when it was collaborating with Rockwell Collins to create avionic heads-up display (HUD) systems for the U.S. military. More recently, the company devised a photopolymer material and holographic copy process that enables it to produce diffractive optics with printers, which tend to be cheaper than traditional precision-etching machines.

DigiLens has competition in TruLife Optics, WaveOptics, and Colorado-based Akonia Holographics.

Joining Samsung Electronics in the funding are Samsung Electro-Mechanics; Diamond Edge Ventures, the strategic investment arm of Mitsubishi Chemical Holdings Corporation; Alsop Louie Partners; 37 Interactive Entertainment; UDC Ventures, the corporate venture arm of Universal Display Corporation; Dolby Family Ventures; and others.

Image: DigiLens’ Design v1, a modular prototype. (Credit: DigiLens)

A second close is scheduled to be completed near the end of 2021 to allow other strategic investors to join this definitive fundraising round.

“Today’s announcement helps establish DigiLens as the frontrunner in the race to develop the core optical technologies that are essential to deploying smart glass augmented reality (AR) and extended reality (XR) devices at scale,” said Pickett, in a statement. “The waveguide display optics are the most difficult and important step in developing head worn hardware. We’re excited to be working with Samsung Electronics during this next critical step of the category creation process. Samsung’s innovative nature and proven track record of manufacturing mobile devices at a global scale will unlock value for the whole industry. Bringing smartglasses to market will require an ecosystem approach in order to hit the timelines, volumes, and cost structure required for consumer devices. This funding round puts the necessary pieces in place to finally bring enterprise and consumer smartglasses to market at a realistic price point.”

Extended reality (XR) devices are poised to be the next mobile device and a once-in-a-generation strategic inflection point in computing, the company said. XR has profound applications in a broad array of sectors, ranging from industry to medicine, education, and entertainment, and is the next evolutionary step in computing after laptops, tablets, and smartphones. Extended reality is an umbrella term that includes virtual reality (VR), augmented reality (AR), and mixed reality (MR) devices. VR creates an immersive experience that transports users from the physical world to a virtual one.

AR provides a digital overlay on the physical world for a more “hands-free” experience, while MR includes elements of both. According to DigiLens, it is one of the de facto optical standards for smartglass experiences because its technology offers the best balance of thin, lightweight, high-performance, low-cost, and highly manufacturable waveguide displays for AR smartglasses. The company says its proprietary photopolymer and holographic contact-copy manufacturing process is more cost-effective and scalable than other waveguide solutions on the market, enabling smaller, lighter, thinner, brighter, and more efficient lenses.

“At Samsung, we develop and innovate technologies that provide unmatched value for our global customers and enable experiences that go beyond what they expect. We are very pleased to invest in and collaborate with DigiLens to prepare differentiated and competitive AR devices,” said Hark Sang Kim, executive vice president at Samsung Electronics, in a statement. “I’m pleased to be joining the Board of Directors at DigiLens and I’m committed to strengthening the close bond between our two companies.”

VentureBeat


Rising Trends in the North America Automotive Augmented Reality and Virtual Reality Market Outlook: Ken Research

Augmented reality is a digital layer overlaid on the physical world. AR applications are developed with special 3D programs that let developers blend contextual or digital content with the real world, combining the real-life environment with virtual details to enhance the experience. This is typically achieved by viewing real-life surroundings through smart goggles or headsets, or a smartphone or tablet screen. In automotive, augmented reality is mainly used for applications that display pedestrian information, navigation, and smart signalling on the windshield.

Virtual reality uses a head-mounted display, or goggles, to generate an interactive, completely digital environment that delivers a fully enclosed synthetic experience with visual feedback. In automotive, virtual reality is a three-dimensional (3D) computer-generated environment that takes end users into an artificial world.

According to the report ‘North America Automotive Augmented Reality and Virtual Reality Market by Component, Technology, Application, Vehicle Type, Driving Autonomy, and Country 2020-2026: Trend Forecast and Growth Opportunity’, HTC, Continental, DENSO, Garmin, Bosch, General Motors (GM), Unity, HARMAN International, Hyundai Motor Company, Jaguar, Nippon Seiki, Mercedes-Benz, Microsoft, NVIDIA, Panasonic, Visteon, Volkswagen and several others are the leading companies operating in the North America automotive augmented reality and virtual reality market. They aim to lead market growth and capture a large share of market value by improving customer satisfaction, enhancing automotive AR and VR applications, reducing associated costs, employing a young workforce, establishing research and development programs, and implementing expansion and profit-making strategies.

Technological advancement in connectivity is the primary driver of market growth. In addition, rising demand for augmented and virtual reality in automotive and the cost-effective benefits of AR- and VR-based solutions are contributing to the market's growth. However, serious threats to the physical and emotional wellbeing of end users and heavy dependence on internet connectivity are projected to hinder the growth of the AR and VR market. Furthermore, the development of mixed reality (MR) through the integration of AR and VR, along with improved HUD systems that enhance safety, presents emerging growth opportunities for this market, which are projected to boost its growth in the near future.

The increasing penetration of the Internet of Things (IoT) in automotive enables faster connectivity for more intuitive visualization and interaction. Several companies are also developing smart AR glasses in collaboration with Qualcomm that offer multimode connectivity (4G, 5G), eye-tracking cameras, and optics and estimate technology within a semi-transparent display. Demand for 5G networks in automotive is rising because they allow high-speed data transfer with lower latency and improve traffic capacity and network efficiency. In addition, 4G and 5G networks are projected to enable VR devices to offload computational work while increasing the fidelity of VR experiences. Such application-based technological improvements in automotive thus boost the growth of the automotive AR and VR market.

Goodbye Glasses, Hello Smartglasses

By KIM BELLARD

It’s been a few months since I last wrote about augmented reality (AR), and, if anything, AR activity has only picked up since then, particularly in regard to smartglasses. I pointed out then how Apple’s Tim Cook and Facebook’s Mark Zuckerberg were extremely bullish on the field, and Alphabet (Google Glass) and Snap (Spectacles) have never, despite a few apparent setbacks, lost their faith.

I can’t do justice to all that is going on in the field, but I want to try to hit some of the highlights, including not just what we see but how we see.

Let’s start with Google, which acquired smartglass innovator North for some $180m, saying:

We’re building towards a future where helpfulness is all around you, where all your devices just work together and technology fades into the background. We call this ambient computing.

North’s founders explained that, from the start, their vision had been: “Technology seamlessly blended into your world: immediately accessible when you want it, but hidden away when you don’t,” which is a pretty good vision.

Meanwhile, Snap is up to Spectacles 3.0, introduced last summer. They allow for 3D video and AR, and continue Snap’s emphasis on headgear that not only does cool stuff but that looks cool too. Steen Strand, head of SnapLab, told The Indian Express:

A lot of the challenges with doing technology and eyewear is about how to hack all the stuff you need into a form factor that’s small, light, comfortable, and ultimately something that looks good as well.

Snapchat is very good at taking something very complex like AR and implementing it in a way that’s just fun and playful. It really sidesteps the whole burden of the technology and we are trying to do that as much as possible with Spectacles.

Snap claims 170m of its users engage with AR daily, and some 30 times each day at that. It recently introduced Local Lenses, which “enable a persistent, shared AR world built right on top of your neighborhood.”

And then there’s Apple, the leader in taking hardware ideas and making them better, with cooler designs (think iPod, iPhone, iPad). It has been working on head-mounted displays (HMDs), including AR and VR, since 2015, with a 1,000-person engineering team.

According to Bloomberg, there has been tension between Mike Rockwell, the team’s lead, and Jony Ive, Apple’s design guru, largely centering on whether such headsets would be freestanding or need a companion hub, such as a smartphone, that would allow greater capabilities.

Ive won the battle, having Apple focus first on a freestanding headset. Bloomberg reports:

Although the headset is less technologically ambitious, it’s pretty advanced. It’s designed to feature ultra-high-resolution that will make it almost impossible for a user to differentiate the virtual world from the real one.

Apple continues to work on both versions (especially since Ive has now departed). Bloomberg predicts Apple’s AR glasses will be available by 2023.

Not to be outdone, according to Patently Apple, “Facebook is determined to stay ahead of Apple on HMDs and win the race on being first with smartglasses to replace smartphones.”

For example, earlier this year, Facebook beat out Apple in an exclusive deal with AR display firm Plessey. The company’s goal is a “glasses form factor that lets devices melt away,” though it notes that “the project will take years to complete.” It continues to generate a variety of smartglasses-related patents, including one for a companion audio system.

Just to show they’re in the game too, Amazon is working on Echo Frames and Microsoft is still trying to figure out uses for HoloLens.

But what may make smartglasses most intriguing is suggested by some recent Apple patents. As reported by Patently Apple:

The main patent covers a powerful new vision correction optical system that’s able to incorporate a user’s glasses prescription into the system. The system will then alter the optics to address vision issues such as astigmatism, farsightedness, and nearsightedness so that those who wear glasses won’t have to [wear] them when using Apple’s HMD.

It’s worth pointing out that the vision correction is not the goal of the patent, just one of the features it allows. The patent incorporates a variety of field-of-vision functions, including high-resolution display needed for AR and VR. But vision correction may be one of the most consequential.
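The patent description talks about incorporating a glasses prescription into the optics. As a rough illustration of what such a prescription encodes (standard optometry, not anything taken from Apple's patent), a sphero-cylindrical prescription of sphere S, cylinder C and axis α gives a corrective power, in diopters, along any meridian θ of F(θ) = S + C·sin²(θ − α). A negative sphere corresponds to nearsightedness, a positive one to farsightedness, and a nonzero cylinder to astigmatism. The function names below are hypothetical, chosen only for this sketch.

```python
import math
from typing import List


def meridian_power(sphere: float, cylinder: float, axis_deg: float, meridian_deg: float) -> float:
    """Corrective power (diopters) along one meridian of a sphere/cylinder x axis
    prescription, using F(theta) = S + C * sin^2(theta - axis)."""
    theta = math.radians(meridian_deg - axis_deg)
    return sphere + cylinder * math.sin(theta) ** 2


def conditions(sphere: float, cylinder: float) -> List[str]:
    """Very rough labelling of which vision issues a prescription corrects.

    Simplification: only the sign of the sphere is checked, so cases such as
    mixed astigmatism are not distinguished.
    """
    found = []
    if sphere < 0:
        found.append("nearsightedness")
    elif sphere > 0:
        found.append("farsightedness")
    if cylinder != 0:
        found.append("astigmatism")
    return found


if __name__ == "__main__":
    # Illustrative prescription: -2.00 / -1.00 x 180
    for meridian in (180.0, 135.0, 90.0):
        print(meridian, round(meridian_power(-2.0, -1.0, 180.0, meridian), 2))
    print(conditions(-2.0, -1.0))
```

For that example, the correction ranges from -2.00 D along the 180° meridian to -3.00 D along the 90° meridian. A headset replacing a user's glasses would presumably have to reproduce this kind of direction-dependent correction in its optics.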

Two months ago, technology author Robert Scoble explained that Apple should be more interested in AR than VR because:

60% of people wear eye glasses. So, if Apple can disrupt the eye glass market, like it disrupted the watch market, it can sell 10s of millions. So, the teams that are winning Tim Cook’s ear are those who are showing how Apple can disrupt eye glasses. Not teams that are disrupting VR.

Doug Thompson elaborated, “If it can grab 13% of the market for glasses, that’s an $18B market, nearly double the current Apple wearables business to date.” Although he believes AR is coming, he also believes: “The point of Apple Glasses won’t be to ‘bring AR to the masses’. It will be to create a wearable product that’s beautiful and that does beautiful things.”

And this was before the news broke about Apple’s new patent.

I’ve worn glasses since elementary school, and it’s a bother to have to periodically get new lenses. If I could buy smartglasses that automatically updated, I’d be there. Warby Parker, LensCrafters, and all those independent opticians should be pretty worried.

Thirty years ago, if you predicted we’d all be glued to handheld screens, you’d have been scoffed at. I think that in perhaps as little as ten years it is going to be considered just as old-fashioned to be looking at a screen or even carrying a device. Anything we’d want to look at or do on a screen we’ll do virtually, using the ubiquitous computing power we’ll have (such as through Google’s ambient computing).

Mr. Scoble told John Koetsier: “This next paradigm shift is computing that you use while walking around, while moving around in space.” Mr. Koetsier believes it is “the next major leap in computing platforms,” one called “spatial computing.”

We should stop thinking about AR as a fun add-on and start thinking of it as a technology that helps us see what we need or want to see, presented in the way that suits it best, with smartglasses as the way we’ll experience it.

Kim is a former emarketing exec at a major Blues plan, editor of the late & lamented Tincture.io, and now regular THCB contributor.

Goodbye Glasses, Hello Smartglasses published first on https://venabeahan.tumblr.com


What are augmented and mixed reality? Are they the same or different? What are their uses?

Before the emergence of augmented reality and virtual reality, there was just plain old reality: the sights and sounds of everyday life and ordinary, tactile encounters with three-dimensional objects. The last couple of years have seen a boom in the enterprise and consumer marketplaces with the rise of virtual reality (VR) and augmented reality (AR). They have changed the way we see the world, and now we have a new concept called mixed reality (MR). It is a hybrid of VR and AR and is far more powerful because it integrates a range of technologies, including advanced optics, sensors, and next-generation computing power. One new example of AR today is HelloStar Media.

AR is also beginning to have an impact in business contexts, as a wider range of enterprises pilot and adopt AR capabilities. At HelloStar, we believe AR applications should also be available to ordinary people. In the old days, a message or greeting could be sent only by postcard; in today's digital era, anyone can send one on Instagram or other social media platforms, which most of us use. A message from a celebrity over the internet becomes something special for us. In virtual reality, you see only their performance or a sample of their talent: you can see them, but the experience is not personalized. In augmented reality, you can receive personalized messages, and the influencer can earn money from them.

Augmented reality (AR) and virtual reality (VR) are often talked about in the same breath, and that can make sense for, say, measuring the market for these related capabilities. AR lets the user experience the real world, digitally augmented or enhanced in some way. VR, on the other hand, removes the user from that real-world experience, replacing it with a completely simulated one.

Virtual reality creates an absorbing experience for the user: although they are interacting with a digital environment, it feels real, because VR creates real-life simulations. Put simply, virtual reality builds a virtual world for users, designed so that the difference between the real and virtual worlds is hard to tell. It is a computer-created, artificial simulation or recreation of a real-life situation. It primarily simulates the user's vision and hearing, immersing them in a completely different world.

Mixed Reality (MR) can be described as an enhanced form of Augmented Reality (AR). Consider it this way. In Augmented Reality, as with Google Glass or Yelp’s Monocle feature, the visible natural environment is overlaid with a layer of digital content. Mixed Reality takes the best qualities of Augmented and Virtual Reality to create an immersive interface that is overlaid on the user’s reality. Rather than displaying simple images as Augmented Reality does, Mixed Reality places fully digital objects in the user’s environment that can be tracked and interacted with. Users can view and manipulate objects as complex as an anatomy model from different angles. This type of rendering demands more processing power than Augmented Reality, which is one main reason Mixed Reality applications and devices are still in the proof-of-concept phase and far from consumer availability.

MR brings real-world and digital elements together. But wait: isn't that what AR does? So what is the difference between AR and MR?

The difference is that MR integrates digital objects into the real world in such a way that they look as though they really belong there.

Mixed Reality works by scanning our physical environment and creating a map of our surroundings, so that the device knows exactly how to place digital content into that space realistically, allowing us to interact with it.
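To make the placement step more concrete, here is a minimal sketch of one common technique, a ray-plane "hit test": the device casts a ray from the camera through the point the user taps and intersects it with a surface (such as a floor or tabletop) that it has already detected while mapping the room. The Plane type, the hit_test function and the scene values are hypothetical illustrations, not any vendor's API; real MR platforms do this through their own SDKs.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Plane:
    """A surface found while mapping the room, e.g. a floor (hypothetical type)."""
    point: Vec3   # any point on the plane, in metres
    normal: Vec3  # unit vector perpendicular to the plane


def hit_test(origin: Vec3, direction: Vec3, plane: Plane) -> Optional[Vec3]:
    """Return where a ray from the camera meets the plane, or None if it misses."""
    denom = dot(direction, plane.normal)
    if abs(denom) < 1e-9:
        return None  # ray runs parallel to the surface
    offset = tuple(p - o for p, o in zip(plane.point, origin))
    t = dot(offset, plane.normal) / denom
    if t < 0:
        return None  # the surface is behind the camera
    return tuple(o + t * d for o, d in zip(origin, direction))


# Hypothetical scene: floor at y = 0, camera 1.5 m up, looking forward and down.
floor = Plane(point=(0.0, 0.0, 0.0), normal=(0.0, 1.0, 0.0))
anchor = hit_test(origin=(0.0, 1.5, 0.0), direction=(0.0, -0.6, -0.8), plane=floor)
print(anchor)  # the floor position where a virtual object would be anchored
```

The returned point is where the app would anchor a virtual note, TV screen or cooking panel, so that it stays fixed to the real surface as the user moves around.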

A few examples of MR apps are:

1. An app that allows users to place notes around their environment.

2. A television app placed in comfortable spots for viewing.

3. A cooking app placed on the kitchen wall.

4. Microsoft’s HoloLens is also a famous example of MR.

One thing all of the above technologies have in common is that they change the way we perceive real-world objects. All of them try to connect the real world with virtual tools, helping us improve our productivity.

The HelloStar team believes there is a bigger untapped opportunity in India for shout-outs, considering the craze around celebrities and influencers in the country. The main objective behind HelloStar is to bring influencers into our business and, over time, create multiple revenue streams for them. Each influencer or celebrity listed on the HelloStar platform will be paid a satisfying rate. Users click on their favourite celebrity, make a payment, and receive a shout-out within the next seven days.

Using the shout-out platform, stars can send personalized video messages to their fans and followers. Heavy social media users can enjoy it as much as they want, and stars who like to stay close to their fans and followers will surely appreciate it too. HelloStar Media made this platform for everyone. Through HelloStar, celebrities and social media stars can send birthday gifts in the form of a simple message and good wishes. The HelloStar Augmented Reality platform is made for sending greetings on special occasions.

#augmented reality#mixed reality#hellostar#shoutout#personalized video message#video shoutout#birthday

IMC last day: India Mobile Congress (IMC) positions India distinctly on the global telecom map

The India Mobile Congress 2019, the largest digital technology forum in South Asia, jointly organised by the Department of Telecommunications, Government of India, and COAI, concluded today with over 75,000 visitors from more than 40 countries attending the third edition of South Asia’s biggest telecom, Internet and technology event.

The inauguration ceremony on the first day, attended by more than 3,000 people, was graced by the presence of Hon’ble Telecom Minister Shri Ravi Shankar Prasad, along with Shri Anshu Prakash, Chairman, DCC and Secretary (T), and other national and international delegates including Mr. Kumar Mangalam Birla, Chairman, Aditya Birla Group; Mr. Malcolm Johnson, Deputy Secretary General, ITU; Mr. Jim Whitehurst, Global President and CEO, Red Hat; Mr. Rakesh Bharti Mittal, Managing Director and Co-Vice Chairman, Bharti Enterprises Ltd; and Mr. Jay Chen, CEO, Huawei India. Other delegates who attended the three-day event included Mr. Ajit Mohan, CEO, Facebook India; Mr. Rajen Vagadia, VP & President, Qualcomm; Prof. Adrian Park, Professor and Chairman, Department of Surgery, Johns Hopkins University; Dr Ganesh Kathiresan, VP, Digital Healthcare, Reliance JIO; Dr. Devi Shetty, Chairman, Narayana Health; Mr. Ankit Tripathi, Additional Director, Centre of Health Informatics, Ministry of Health and Family Welfare; and Mr. Varin Jhaveri, OSD and Lead Innovation Strategy, National Health Authority.

The prestigious event saw 250+ speakers and 300+ exhibitors showcasing cutting-edge products and services across 60,000 sq. ft. of exhibition space. In addition, 5,000 CXO-level delegates and 150 national and international buyers and sellers made IMC 2019 a vibrant opportunity for business transactions. The Make in India and Startup India initiatives, in which 250 startups participated, were also well received by visitors.

The third edition of India Mobile Congress witnessed various live demonstrations of 5G use cases. The exhibition stalls covered a variety of interesting possibilities built around 5G, the Internet of Things, Augmented and Virtual Reality, Artificial Intelligence, Robotics, Smart City Solutions, Fintech, mHealth and Cyber Security, among others.

To encourage young talent and innovation, the event also hosted the IMC Digital Communications Awards. Nine of the IMC awards were sponsored by ZTE, and three were IMC & Aegis Graham Bell Awards. IMC also organised the Grand Innovation Challenge Awards, whose winners were felicitated by Shri Anshu Prakash, Telecom Secretary.

At IMC, Hon’ble Telecom Minister Shri Ravi Shankar Prasad reiterated the Government’s commitment to creating a favourable environment for telecom companies to operate in, and said the Government is well aware of the problems and challenges faced by the industry. The Government has already come out with enabling policies in areas such as electronics manufacturing, communications and open source technology, as well as reforms in domains such as spectrum trading, sharing and harmonisation, assuring the industry of the requisite support.

IMC, with its Knowledge Partner KPMG in India, launched a report on the TMT (technology, media and telecom) sector titled ‘Imagine a new connected world: Intelligent, Immersive, Inventive.’ The report takes a deep dive into the digital ecosystem enabled by 5G, blockchain technology, IoT, AI, cognitive computing, machine learning and AR/VR, to name a few. According to the report, while current investment and focus are on creating an enriched omni-channel experience for customers, bots and blockchain will be the game changers in enhancing customer experience over the next five years.

Key Announcements during India Mobile Congress 2019

Vodafone Idea Ltd and Nokia

Vodafone Idea Business Services (VIBS), the enterprise arm of Vodafone Idea Ltd., the country’s leading telecom operator, today announced its partnership with Nokia to roll out software-defined networking in a wide area network (SD-WAN) services for start-ups and enterprises. Through SD-WAN deployment, VIBS will offer advanced networking and connectivity solutions and enable enterprises to dramatically increase the speed of deployment, flexibility and control. It is a software-defined network for digital business to securely access applications in a multi-cloud environment.

Huawei India

Huawei India Enterprise showcased 5G-powered, India-centric solutions at India Mobile Congress 2019 today and introduced Wi-Fi 6 technology to accelerate Indian enterprises into the new digital era. Committed to the vision of a New India, Huawei India Enterprise business featured a comprehensive range of 5G-powered ICT products for industries such as 5G + smart city, 5G + safe city, 5G + airport (boarding gate) and 5G + education (remote classroom), aimed at a 5G-accelerated Digital India. Bringing the next generation of wireless connections to India, Huawei introduced enterprise-grade Wi-Fi 6, powered by Huawei 5G, for high-scale commercial use by enterprises in India. Together with alliance and solution partners, Huawei demonstrated solutions and use cases for multiple vertical sectors relating to smart cities, transportation, education and smart campuses at IMC 2019.

Qualcomm

NavIC: Qualcomm announced support for India’s Regional Navigation Satellite System (IRNSS), Navigation with Indian Constellation (NavIC), in select chipset platforms across the company’s upcoming portfolio. The initiative will help accelerate the adoption of NavIC and enhance the geolocation capabilities of mobile, automotive and Internet of Things (IoT) solutions in the region, with the backing of engineering talent in India. The collaboration delivered the first-ever NavIC demonstration using Qualcomm Snapdragon Mobile Platforms on September 19th in Bangalore and showcased the solution again at India Mobile Congress, October 14-16 in New Delhi.

L2Pro: Qualcomm Incorporated in collaboration with the Cell for IPR Promotion and Management (CIPAM) of the Department for Promotion of Industry and Internal Trade (DPIIT), Government of India and the Centre for Innovation, Intellectual Property and Competition (CIIPC) at National Law University Delhi (NLUD), today announced the launch of the L2Pro India IP e-learning Platform. The L2Pro platform – designed to enable Micro, Small and Medium Enterprises (MSMEs), university students, and startups in India to bring their innovations quickly to market – will aid in deeper understanding of the intellectual property (IP) domain, how to protect innovations with patents, use copyrights to protect software, develop trademarks, integrate IP considerations into company business models, and obtain value from research and development (R&D) efforts.

Ericsson and Qualcomm successfully completed the first-ever live 5G video call in India on mmWave spectrum at India Mobile Congress. The call was made using smartphones based on the flagship Qualcomm Snapdragon 855 Mobile Platform with the Snapdragon X50 5G Modem-RF System and Ericsson’s 5G platform, including 5G NR radio, RAN Compute products and 5G Evolved Packet Core. The achievement is a significant milestone as India gets ready for 5G. For mobile networks, mmWave spectrum will be an important capacity layer for both 4G and 5G. As part of the demonstration, Nunzio Mirtillo, Head of Market Area South East Asia, Oceania and India at Ericsson, made a video call from the Ericsson booth to Rajen Vagadia, Vice President, Qualcomm India Private Limited, on site at IMC 2019. The companies have already worked closely to create several 5G technology milestones.

Himachal Futuristic Communications Ltd.

Following the successful launch of next-generation Wi-Fi technology products and solutions under its brand IO by HFCL, day 2 at IMC 2019 comprised in-depth business meetings and showcases of the newly launched product category. HFCL displayed its globally benchmarked products and solutions under the brand IO, including Access Points (APs), Unlicensed Band Radios (UBRs), Wireless LAN Controller (WLC), Element Management System (EMS) and Cloud Network Management System (CNMS). HFCL also showcased its tri-radio 4X4:4 Outdoor Access Point, compliant with the latest Wi-Fi standard, Wi-Fi 6, with an integrated or external antenna option (ion12/ion12e). It is a top-of-the-line outdoor access point with tri-radio concurrent operation in the 2.4 and 5 GHz bands and peak throughput of up to 5 Gbps. The product is Wi-Fi Alliance (WFA) certified for Hotspot 2.0 and can cover a wide range of indoor and outdoor deployment scenarios.

HFCL announced the launch of next-generation Wi-Fi technology products and solutions under its brand IO, designed to meet enormous global and Indian Wi-Fi network demand. IO Networks products are globally benchmarked, with next-generation technology features across the range, which includes Access Points (AP), Unlicensed Band Radio (UBR), Wireless LAN Controller (WLC), Element Management System (EMS) and Cloud Network Management System (CNMS). IO is a platform that aims to bring efficiency and intelligence to mobility and help global citizens use the most advanced Wi-Fi technology products and solutions, while keeping a sharp focus on security and safety.

Sterlite Technologies

Stellar Fibre launch

STL launched Stellar Fibre, a path-breaking solution that will power the next-gen ultra-high definition future. The leading-edge innovation from STL’s optical design solutions guarantees best-in-class data transfer, negligible data loss even with high fibre bends, and compatibility with all fibres in use today.

MantraPODS launch: STL launched MantraPODS, a programmable open disaggregated solution for networking that completes the fully integrated FTTx Mantra solution. FTTx Mantra is an end-to-end FTTx-as-a-service solution that enables swift roll-out of Fibre-to-the-Point (FTTx) networks. With programmability at the core of the network, PODS brings more flexibility and service excellence to data networks.

LEAD 360° 2.0: STL unveiled the second generation of its Hyperscale Network Modernisation solution, LEAD 360° 2.0, which comes with features such as robotic cable blowing and AI bots and further accelerates the deployment of smarter networks. LEAD 360° 2.0 combines cutting-edge service engineering with highly orchestrated fibre roll-outs to deliver the smart networks of tomorrow.

SENSRON+: STL’s Sensron+ is an industry-first, end-to-end solution for critical infrastructure security. It leverages hybrid sensing technologies such as fibre, radar, lidar and sonar, combined with big data, analytics and an advanced Command and Control Centre, to deliver 360-degree situational awareness and an unparalleled “threats to response” mechanism.

The company also unveiled 5G Edge Mantra, an advanced 5G data hub technology, in the presence of Shri Anshu Prakash, Secretary, DoT. The solution is designed to meet growing mobile broadband demand, supporting multiple access technologies while providing enterprise-grade service reliability, security, bandwidth, intelligence and latency to end users.

Infinera

Infinera, a global networking solutions provider, brought to India Mobile Congress cutting-edge technology advances that are powering network transformation and enabling a new generation of services, including 5G. From Instant Bandwidth and Auto-Lambda to cognitive intelligence and disaggregated edge routing, Infinera’s end-to-end solutions deliver industry-leading economics and performance in long-haul, subsea, data center interconnect and metro transport applications. Visitors could experience these innovations and see how Infinera is laying the foundation for The Infinite Network: extending high-bandwidth solutions everywhere, providing an infinite pool of always-available connectivity, and enabling instant service activation.

VVDN

Fronthaul with O-RAN over eCPRI (split option 7-2): VVDN is demonstrating a 5G fronthaul termination solution based on a Xilinx FPGA. The solution is an O-RAN 7-2x compliant split transport over eCPRI. Each fronthaul link can carry up to 8 layers of uncompressed IQ data, with 100 MHz of bandwidth per layer. This lets telcos make full use of fronthaul bandwidth, acting as a catalyst for building robust 5G network infrastructure.

Vermeo Card: VVDN is showcasing its Vermeo card, a dual-FPGA solution architecture combining an RFSoC and an MPSoC. The Vermeo card is targeted at telco applications and supports the following use cases:

Layer 1 High PHY + fronthaul with O-RAN over eCPRI (split option 7-2)

Layer 1 High PHY + Layer 1 Low PHY + fronthaul with O-RAN over eCPRI (split options 7-2 and 8)

SmallCell: VVDN and Xilinx are showcasing an L1 solution for 5G small cells using the Zynq UltraScale+ RFSoC, with an integrated DFE and the L1 stack in the same device. The solution supports 4T4R, making it ideal for typical small cell deployments, and is intended to accelerate operators’ time to market for 5G small cell solutions.
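VVDN's fronthaul demonstration described above quotes up to 8 layers of uncompressed IQ data at 100 MHz per layer. The sketch below gives a rough sense of what that means as a bit rate. The numerology (30 kHz subcarrier spacing, 273 resource blocks for a 100 MHz carrier, 14 symbols per slot), the 16-bit I and Q sample width, and the omission of eCPRI and Ethernet header overhead are assumptions made here for illustration, not figures from VVDN.

```python
SUBCARRIERS_PER_PRB = 12  # fixed by the 5G NR specification
SYMBOLS_PER_SLOT = 14     # normal cyclic prefix


def fronthaul_bps(layers: int, prbs: int = 273, scs_khz: int = 30,
                  iq_bit_width: int = 16) -> int:
    """Uncompressed frequency-domain IQ payload rate, in bits per second.

    Assumed defaults: 30 kHz subcarrier spacing with 273 PRBs (a 100 MHz
    NR carrier), 16-bit I and Q samples, and no transport header overhead.
    """
    slots_per_second = 1000 * (scs_khz // 15)  # 1 slot/ms at 15 kHz, 2 at 30 kHz, ...
    res_per_second = prbs * SUBCARRIERS_PER_PRB * SYMBOLS_PER_SLOT * slots_per_second
    bits_per_re = 2 * iq_bit_width  # one I sample plus one Q sample per resource element
    return layers * res_per_second * bits_per_re


for n in (1, 8):
    print(f"{n} layer(s): {fronthaul_bps(n) / 1e9:.2f} Gbps")
```

Under these assumptions a single layer needs roughly 2.9 Gbps and eight layers roughly 23.5 Gbps before any transport overhead, which shows why the per-layer bandwidth of the fronthaul matters so much.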

Xilinx announces Vitis, a unified software platform unlocking a new design experience for all developers. Vitis empowers software developers with adaptable hardware while accelerating productivity for hardware designers. Rather than imposing a proprietary development environment, the Vitis platform plugs into common software developer tools and uses a rich set of optimized open source libraries, enabling developers to focus on their algorithms. Vitis is separate from the Vivado® Design Suite, which will still be supported for those who want to program using hardware code, but Vitis can also boost the productivity of hardware developers by packaging hardware modules as software-callable functions.

Xilinx expands its Alveo portfolio with the Alveo U50, the industry's first adaptable compute, network and storage accelerator card built for any server and any cloud. As the first low-profile PCIe Gen 4 card, it delivers dramatic improvements in throughput, latency and power efficiency for critical data center workloads and is designed to supercharge a broad range of compute, network and storage workloads, all on one reconfigurable platform. The 8GB of HBM2 delivers over 400 Gbps data transfer speeds, and the QSFP ports provide up to 100 Gbps of network connectivity. The high-speed networking I/O also supports advanced applications such as NVMe-oF™ solutions (NVM Express over Fabrics™), disaggregated computational storage and specialized financial services applications.

Whale Cloud Technology Co., Ltd

Whale Cloud, a subsidiary of Alibaba Group and a global leader in digital transformation, unveiled its 5G Operation Map at India Mobile Congress 2019. 5G is the catalyst for accelerating the digital transformation of society, and in the 5G era, operators will be the technical center of that transformation. Whale Cloud developed the 5G Operation Map to guide CSPs (communication service providers) in operating 5G networks and businesses, speeding up returns on 5G investments and improving core competence. The map is designed to support operators at different stages of 5G network deployment and contains four key capabilities required for successful 5G monetization:

Center of Intelligence provides advanced data analytics and AI technology to help operators build intelligence capabilities for network and business operations.

Center of Operation focuses on comprehensive 5G network management, including planning, orchestration, network slicing and operations management, and also provides an API platform that opens IT and network capabilities to ecosystem partners.

Center of Ecology builds a digital ecosystem for various industrial customers, from business onboarding and marketplace to revenue management support.

Center of Value maximizes the value of ecosystem partners, online and offline channels, and customers by using digital technologies and innovative business models.

In addition to the 5G operation map, Whale Cloud also presented its digital telco transformation approach Digital Telco Maturity Map (DTMM), case-proven AI and big-data platforms for the telco industry, innovative Telco New Retail and Digital Sales Channels Management solution, and smart city solutions empowered by the Alibaba technologies and practices.

Huawei

Huawei has announced the first deployment in India of Artificial Intelligence (AI) based Massive MIMO optimization technology, in the Vodafone Idea network. AI-enabled Massive MIMO lets Vodafone Idea add automation capabilities to its network, greatly improving optimization efficiency, boosting cell capacity and enhancing the end-user experience.

Huawei also showcased Innovative & Inclusive 5G for India at IMC 2019, saying it aims to go beyond 5G, to 5G + AI. Huawei demonstrated 5G + AI applications to empower operators and industries to lead in the 5G era and boost Digital India. Keeping 5G at the forefront and center of its showcase, Huawei demonstrated the potential of a 5G-enabled future with live 5G use-case applications. Together with key partners, Huawei showcased a variety of 5G-enabled applications, not just in telecommunications but also in vertical industries, with new applications in 5G + safe city, 5G + smart city, 5G + virtual reality (VR), 5G + airport (boarding gate) and 5G + education (smart classroom).

Intel

As the Intelligent Edge partner at India Mobile Congress, Intel showcased a wide gamut of solutions on telco cloud, smart space, intelligent traffic management, 5G Radio Access Network (RAN) based on Intel® architecture. Mr. Rajesh Gadiyar, Vice President, Data Center Group, and CTO, Network & Custom Logic Group for Intel participated in a panel discussion on ‘Global Strides in 5G Deployment: Roadblocks and Best Practices’, to discuss the status of global 5G deployment, the roadblocks being faced by countries that are nearing 5G implementation and the progress so far. Intel also shared insights on the most impactful transformations enabled by 5G, the role played by AI and IoT in enabling seamless connectivity across sectors and how software-defined networking (SDN) and network functions virtualisation (NFV) are bringing network transformation to meet bandwidth-intensive requirements for the future.

VVDN

VVDN is working to deliver next-generation 5G acceleration solutions that will not only implement the complex modules of 5G infrastructure but also position VVDN well ahead in the 5G network solution space. VVDN has partnered with Xilinx to accelerate these solutions in FPGAs, enabling VVDN to develop 5G acceleration IPs along with the hardware. VVDN showcased its solutions with live demos intended to enable key partnerships with 5G equipment vendors, telecom operators and system integrators.

Bharti Airtel

Bharti Airtel showcased a range of exciting digital solutions for businesses and consumers at India Mobile Congress 2019. Airtel demonstrated a wide range of use cases and applications for a world of connected things that leverage 5G and pre-5G network technologies.

Cloud-Based Immersive Gaming: Airtel showcased an immersive cloud-based gaming experience between multiple users over ultra-fast, low-latency Airtel 5G.

Secure Mining: Using 5G and a secure private network, Airtel demonstrated how digital voice communication and broadcasting of emergency messages contribute to improved safety conditions and working environments in mines.

Smart Factory: Airtel showcased how network slicing can help build multiple logical networks for the smart factories of the future. These network slices are completely separate and independent, to the extent that if something goes wrong in one slice, it does not affect the others. With ultra-low latency, these sliced networks can power robots on the manufacturing floor and send real-time data and analytics, allowing managers to send real-time instructions back to the robots and optimize the manufacturing process.

Remote Healthcare: With extremely high throughput and low latency, 5G networks enable communication through the sense of touch using augmented reality technologies. Airtel demonstrated the next level of remote communication in healthcare, allowing a doctor to maneuver a robot arm in real time and examine patients in remote locations.

Smart Street Lighting Poles: Airtel’s smart pole platform is designed for energy-efficient lighting and also offers other features such as surveillance cameras and public address systems. The solution can be operated and managed remotely through a cloud-based web platform.

Smart Charger: It is India’s first smart power bank rental service. The power bank provides fast charging and has inbuilt cables for all pin types. The solution is built on an Internet of Things (IoT) platform that supports sharing-economy use cases and aims to be scalable, affordable, efficient and clean, with a great user experience.
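The isolation property described in the Smart Factory demonstration above can be illustrated with a toy model in which each slice reserves its own share of capacity, so that overload in one slice only drops that slice's traffic. This is a hypothetical, simplified sketch: the Slice type, the schedule function, the slice names and the capacities are invented for illustration, and real 5G slicing is enforced across the radio, transport and core network rather than in a single function.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Slice:
    name: str
    capacity: int  # packets this slice may carry per scheduling interval (hypothetical)
    queue: List[str] = field(default_factory=list)
    dropped: int = 0


def schedule(slices: Dict[str, Slice]) -> Dict[str, List[str]]:
    """Serve each slice only from its own reserved capacity.

    An overloaded slice drops its own excess traffic; it never borrows
    capacity reserved for another slice, so the other slices are unaffected.
    """
    served = {}
    for s in slices.values():
        served[s.name] = s.queue[: s.capacity]
        s.dropped += max(0, len(s.queue) - s.capacity)
        s.queue = []
    return served


slices = {
    "robot-control": Slice("robot-control", capacity=5),
    "video-analytics": Slice("video-analytics", capacity=3),
}
slices["robot-control"].queue = [f"cmd{i}" for i in range(4)]
slices["video-analytics"].queue = [f"frame{i}" for i in range(10)]  # an overloaded slice

served = schedule(slices)
print(served["robot-control"])            # all four robot commands get through
print(slices["video-analytics"].dropped)  # the excess frames are dropped in that slice only
```

Even though the video slice receives more than three times its capacity, the robot-control slice still delivers every command, which is the "if something goes wrong in one slice, it does not affect the others" behaviour in miniature.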

What’s the Difference Between VR, AR and MR?

Get up. Brush your teeth. Put on your pants. Go to the office. That’s reality.

Now, imagine you can play Tony Stark in Iron Man or the Joker in Batman. That’s virtual reality.

Advances in VR have enabled people to create, play, work, collaborate and explore in computer-generated environments like never before.

VR has been in development for decades, but only recently has it emerged as a fast-growing market opportunity for entertainment and business.

Now, picture a hero, and set that image in the room you’re in right now. That’s augmented reality.

AR is another overnight sensation decades in the making. The mania for Pokémon Go, which brought the popular Japanese children's franchise to city streets, has led to a score of games that blend pixie dust with the real world. There’s another sign of its rise: Apple’s 2017 introduction of ARKit, a set of tools for developers to create mobile AR content, is encouraging companies to build AR for iOS 11.

Microsoft’s HoloLens and Magic Leap’s Lightwear, both of which let people engage with holographic content, are two major developments in pioneering head-mounted displays.

Magic Leap Lightwear

It’s not just fun and games either — it’s big business. Researchers at IDC predict worldwide spending on AR and VR products and services will rocket from $11.4 billion in 2017 to nearly $215 billion by 2021.
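As a quick check on how steep that forecast is, the two IDC figures quoted imply a compound annual growth rate that can be computed directly. This sketch treats 2017 to 2021 as four compounding years and uses only the numbers in the sentence above; IDC's own published growth rate may be calculated over a different base period.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above, in billions of US dollars.
growth = cagr(start_value=11.4, end_value=215.0, years=2021 - 2017)
print(f"Implied growth: {growth:.0%} per year")
```

This works out to roughly 108% a year, meaning spending would slightly more than double every year of the forecast.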

Yet just as VR and AR take flight, mixed reality, or MR, is evolving fast. Developers working with MR are quite literally mixing the qualities of VR and AR with the real world to offer hybrid experiences.

Imagine another VR setting: You’re instantly teleported onto a beach chair in Hawaii, sand at feet, mai tai in hand, transported to the islands while skipping economy-class airline seating. But are you really there?

In mixed reality, you could experience that scenario while actually sitting on a flight to Hawaii in a coach seat that emulates a creaky beach chair when you move, receiving the mai tai from a real flight attendant and touching sand on the floor to create a beach-like experience. A flight to Hawaii that feels like Hawaii is an example of MR.

VR Explained

The Sensorama

The notion of VR dates back to 1930s literature. In the 1950s, filmmaker Morton Heilig wrote of an “experience theater” and later built an immersive, video game-like machine he called the Sensorama for people to peer into. A pioneering moment came in 1968, when Ivan Sutherland developed what is credited as the first head-mounted display.

Much has changed since. Consumer-grade VR headsets have made leaps of progress. Their advances have been propelled by technology breakthroughs in optics, tracking and GPU performance.

Consumer interest in VR has soared in the past several years as new headsets from Facebook’s Oculus, HTC, Samsung, Sony and a host of others offer substantial improvements to the experience. Yet producing 3D, computer-generated, immersive environments for people is about more than sleek VR goggles.

Obstacles that have mostly been overcome so far include delivering enough frames per second and reducing latency (the delays created when users move their head) to create experiences that aren’t herky-jerky and potentially motion-sickness inducing.

VR taxes graphics processing to the max: it is about 7x more demanding than PC gaming. Today’s VR experiences wouldn’t be possible without blazing-fast GPUs to deliver graphics quickly.
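One way to arrive at a figure of roughly this size is to compare how many pixels the GPU must shade per second. The workloads below are assumptions for illustration rather than figures from the article: a 1920x1080 game at 30 frames per second, versus a first-generation 2160x1200 headset rendered at 1.4x per axis (3024x1680 across both eyes, extra resolution that is then lost when the image is warped to correct for lens distortion) at 90 frames per second.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Number of pixels a GPU must shade each second at a given resolution and frame rate."""
    return width * height * fps


# Assumed workloads, chosen for illustration.
pc_gaming = pixels_per_second(1920, 1080, 30)
vr_headset = pixels_per_second(3024, 1680, 90)  # 2160x1200 panel rendered at 1.4x per axis

print(f"PC gaming: {pc_gaming / 1e6:.0f} Mpixels/s")
print(f"VR:        {vr_headset / 1e6:.0f} Mpixels/s")
print(f"Ratio:     {vr_headset / pc_gaming:.2f}x")
```

Under these assumptions VR needs about 7.35 times as many pixels per second, in line with the roughly 7x figure above.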

Software plays a key role, too. The NVIDIA VRWorks software development kit, for example, helps headset and application developers access the best performance, lowest latency and plug-and-play compatibility available for VR. VRWorks is integrated into game engines such as Unity and Unreal Engine 4.

To be sure, VR still has a long way to go to reach its potential. Right now, the human eye is still able to detect imperfections in rendering for VR.

Vergence accommodation conflict, Journal of Vision

Some experts say VR technology will be able to perform at a level exceeding human perception when it can make a 200x leap in performance, roughly within a decade. In the meantime, NVIDIA researchers are working on ways to improve the experience.

One approach, known as foveated rendering, reduces the quality of images delivered to the edge of our retinas — where they’re less sensitive — while boosting the quality of the images delivered to the center of our retinas. This technology is powerful when combined with eye tracking that can inform processors where the viewing area needs to be sharpest.
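The core idea of foveated rendering can be sketched as a function that picks a rendering resolution for each screen region from its angular distance (eccentricity) to the gaze point reported by the eye tracker. The angle thresholds, scale factors and pixels-per-degree value are hypothetical numbers chosen for illustration, not NVIDIA's parameters, and the linear pixels-to-degrees conversion is a simplification.

```python
import math


def eccentricity_deg(px: float, py: float, gaze_x: float, gaze_y: float,
                     pixels_per_degree: float) -> float:
    """Approximate angular distance (degrees) between a pixel and the gaze point."""
    return math.hypot(px - gaze_x, py - gaze_y) / pixels_per_degree


def resolution_scale(ecc_deg: float) -> float:
    """Fraction of full resolution (per axis) to render at, by eccentricity (hypothetical tiers)."""
    if ecc_deg <= 5.0:    # foveal region: full detail
        return 1.0
    if ecc_deg <= 20.0:   # near periphery: half resolution per axis
        return 0.5
    return 0.25           # far periphery: quarter resolution per axis


# Hypothetical display with ~15 pixels per degree; the eye tracker reports gaze at (800, 600).
for x in (800, 950, 1400):
    ecc = eccentricity_deg(x, 600, 800, 600, pixels_per_degree=15.0)
    print(f"pixel x={x}: {ecc:.1f} deg from gaze -> scale {resolution_scale(ecc)}")
```

Because a 0.5 scale per axis shades only a quarter of the pixels, most of the savings come from the large peripheral area, while eye tracking keeps the sharp region centred wherever the user is actually looking.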

VR’s Technical Hurdles

Frames per second: Virtual reality requires rendering at 90 frames per second, because lower frame rates reveal lags in movement that the human eye can detect, which can make some people nauseous. NVIDIA GPUs make VR possible by enabling rendering that’s faster than the human eye can perceive.

Latency: VR latency is the time span between initiating a movement and the computer-generated visual response. Experts say latency should be 20 milliseconds or less for VR. NVIDIA VRWorks, the SDK for VR headset and game developers, helps address latency.

Field of view: In VR, it’s essential to create a sense of presence. Field of view is the angle of view available from a particular VR headset. For example, the Oculus Rift headset offers a 110-degree viewing angle.

Positional tracking: To enable a sense of presence and deliver a good VR experience, headsets need to be tracked in space to within 1 millimeter of accuracy. This precision is required to present images correctly at any point in time and space.

Vergence accommodation conflict: This is a viewing problem for VR headsets today. Here’s the problem: your eyes rotate toward or away from each other to fixate on an object, which is called vergence. At the same time, the lenses of your eyes change shape to focus on that object, which is called accommodation. In natural viewing, both responses settle on the same distance, but VR goggles show 3D images on a display at a fixed optical distance, so the eyes converge at one depth while focusing at another. This conflict is unnatural to the eye and can cause visual fatigue and discomfort.

Eye-tracking tech: VR head-mounted displays to date haven’t been adequate at tracking the user’s eyes, which would let computing systems react to where a person’s eyes are focused during a session. Increasing resolution where the eyes are looking will help deliver better visuals.
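Several of the hurdles above reduce to simple numbers that can be checked in code. The sketch below derives the per-frame time budget at 90 frames per second, tests a hypothetical motion-to-photon pipeline against the 20-millisecond latency guideline, and expresses the vergence-accommodation mismatch in diopters (1 divided by distance in metres). The pipeline stage timings, the 63 mm interpupillary distance and the 2 m focal distance of the headset optics are assumed values for illustration, not measurements of any particular headset.

```python
import math

TARGET_FPS = 90
LATENCY_LIMIT_MS = 20.0


def frame_budget_ms(fps: int) -> float:
    """Time available to render one frame, in milliseconds."""
    return 1000.0 / fps


def motion_to_photon_ms(stages_ms: dict) -> float:
    """Total latency from head movement to updated pixels: the sum of pipeline stages."""
    return sum(stages_ms.values())


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two eyes' lines of sight when fixating at distance_m (assumed IPD)."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def accommodation_mismatch_diopters(virtual_m: float, focal_m: float = 2.0) -> float:
    """Gap between where the eyes converge and where the optics make them focus (assumed 2 m)."""
    return abs(1 / virtual_m - 1 / focal_m)


# Hypothetical pipeline timings in milliseconds.
pipeline = {"sensor sampling": 2.0, "rendering": frame_budget_ms(TARGET_FPS),
            "display scan-out": 5.0}
total = motion_to_photon_ms(pipeline)
print(f"frame budget at {TARGET_FPS} fps: {frame_budget_ms(TARGET_FPS):.1f} ms")
print(f"motion-to-photon: {total:.1f} ms, within limit: {total <= LATENCY_LIMIT_MS}")

# A virtual object 0.5 m away on a headset whose optics focus at 2 m.
print(f"vergence angle: {vergence_angle_deg(0.5):.1f} deg")
print(f"mismatch: {accommodation_mismatch_diopters(0.5):.2f} D")
```

At 90 frames per second each frame has only about 11 ms, which leaves little room in a 20 ms budget for the other pipeline stages. The mismatch value shows why objects held close to the face are the most uncomfortable case: the nearer the virtual object, the further vergence drifts from the fixed focal distance.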

Despite a decades-long crawl to market, VR has made a splash in Hollywood films such as the recently released Ready Player One and is winning fans across games, TV content, real estate, architecture, automotive design and other industries.

VR’s continued consumer momentum, however, is expected by analysts to be outpaced in the years ahead by enterprise customers.

AR Explained

AR development spans decades. Much of the early work took place at universities such as MIT’s Media Lab, and pioneers such as Thad Starner, now the technical lead for Alphabet’s Google Glass smart glasses, wore heavy belt packs of batteries attached to AR goggles in the technology’s infancy.

Google Glass (2.0)

Google Glass, the ill-fated consumer fashion faux pas, has since been reborn as a less conspicuous, behind-the-scenes technology for use in warehouses and manufacturing. Today Glass is being used by workers for access to training videos and hands-on help from colleagues.

And AR technology has been squeezed down into low-cost cameras and screen technology for use in inexpensive welding helmets that automatically dim, enabling people to melt steel without burning their retinas.

For now, AR is making inroads with businesses. It’s easy to see why when you think about overlaying useful information in AR that offers hands-free help for industrial applications.

Smart glasses that augment human intelligence certainly fit that picture. Equipped with microdisplays, AR glasses can act like an indispensable co-worker who helps out in a pinch. AR eyewear can make the difference between getting a job done or not.

Consider a junior-level heavy-duty equipment mechanic assigned to make emergency repairs to a massive tractor on a construction site. The boss hands the newbie AR glasses that provide service manuals with schematics for hands-free guidance while troubleshooting.

Some of these smart glasses even pack Amazon's Alexa service, enabling voice queries for information without fumbling for a smartphone or tapping one's temple.


Consumer-facing examples from businesses today include IKEA's Place app, which lets people use a smartphone to view images of furniture and other products overlaid onto their actual home as seen through the phone's camera.
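At the core of an overlay like this is projecting a 3D point, such as a corner of a virtual sofa anchored in the room, into the camera image. The sketch below uses the standard pinhole camera model with an OpenCV-style convention (x right, y down, z forward). The camera intrinsics, pose, and point coordinates are assumed values, and this is not IKEA's or any AR framework's actual implementation.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def world_to_camera(p_world: Vec3, rotation: Sequence[Sequence[float]],
                    translation: Vec3) -> Vec3:
    """Apply p_cam = R * p_world + t, with R a 3x3 world-to-camera rotation."""
    return tuple(
        sum(rotation[i][j] * p_world[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


@dataclass
class PinholeCamera:
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point x (pixels)
    cy: float  # principal point y (pixels)

    def project(self, x: float, y: float, z: float) -> Optional[Tuple[float, float]]:
        """Project a camera-space point (meters) to pixel coordinates.
        Returns None if the point is behind the camera."""
        if z <= 0:
            return None
        return (self.fx * x / z + self.cx, self.fy * y / z + self.cy)


# Hypothetical 1280x720 phone camera and a pose that places the world origin
# 1 m in front of the camera with no rotation.
camera = PinholeCamera(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
IDENTITY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
sofa_corner_cam = world_to_camera((0.5, 0.4, 1.0), IDENTITY, (0.0, 0.0, 1.0))
pixel = camera.project(*sofa_corner_cam)
print(pixel)
```

In a real app, the rotation and translation would come from the device's motion tracking every frame. That per-frame update is what keeps the virtual furniture fixed in place as the phone moves.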

Today there is a wide variety of smart glasses from the likes of Sony, Epson, Vuzix, ODG and startups such as Magic Leap.

NVIDIA research is continuing to improve AR and VR experiences. Many of these can be experienced at our GPU Technology Conference VR demonstrations.

MR Explained

MR holds big promise for businesses. Because it can offer nearly unlimited variations in virtual experiences, MR can inform people on-the-fly, enable collaboration and solve real problems.

Now imagine the junior-level heavy-duty mechanic waist-deep in a massive tractor and completely baffled by its mechanical problem. Crews sit idle, unable to work. Site managers become anxious.

Thankfully, the mechanic's smart glasses can start a virtual help session. The mechanic calls in a senior mechanic, who joins by VR around a virtual version of the same tractor model. The two walk through troubleshooting together while the junior mechanic works on the real machine, pulling up online repair manuals for torque specs and other maintenance details via AR.

That’s mixed reality.

To visualize a consumer version of this, consider the IKEA Place app for placing and viewing furniture in your house. You have your eye on a bright red couch, but before you buy it you want friends and family to weigh in on how it looks in your living room.

So you start a VR session in the smart glasses. Imagine the session runs in a VR version of the IKEA Place app and you can invite your closest confidants. First, each of them sits down on any couch they can find. When they do, they find themselves in a virtual version of your house, sitting on a red couch just like the IKEA one.

Voila, in mixed reality, the group virtual showroom session yields a decision: your friends and family unanimously love the couch, so you buy it.

The possibilities are endless for businesses.

Mixed reality, while in its infancy, might be the holy grail for all the major developers of VR and AR. There are unlimited options for the likes of Apple, Microsoft, Facebook, Google, Sony, Netflix and Amazon. That's because MR can blend here-and-now reality with virtual reality and augmented reality for many entertainment and business situations.


Take this one: automotive designers can sit down on an actual car seat, with armrests, shifters and steering wheel, and pipe into VR sessions delivering the dashboard and interior. Teams can assess designs together remotely this way. NVIDIA Holodeck actually enables this type of collaborative work.

Buckle up for the ride.

The post What’s the Difference Between VR, AR and MR? appeared first on The Official NVIDIA Blog.

What’s the Difference Between VR, AR and MR? published first on https://supergalaxyrom.tumblr.com


Lumus and Quanta Enter Partnership to Create Consumer AR Optics

With augmented reality (AR) gaining ground on virtual reality (VR) as a technology to take notice of, Lumus, a developer of transparent AR displays, has announced a new agreement with Quanta Computer to license several AR optical engine models, which could help bring AR eyewear into the mainstream.

Lumus has licensed several of its advanced optical engines to Quanta in an agreement to produce and market AR headsets featuring “Lumus Inside.” Under this agreement, Quanta will also be mass producing Lumus optical engines for other Tier 1 ODMs and consumer tech brands.

“This is truly an historic deal. In years to come, when we look back at the major events along the timeline toward mass adoption of augmented reality, we believe this will be recognized as a pivotal moment,” Lumus CEO Ari Grobman said in a statement. “We’re excited to be partnering with Quanta, which is one of the world’s most trusted technology ODMs, as this will allow Lumus optics to meet mass consumer market price points and bring AR glasses to the masses.”

“Quanta Computer is a big believer in AR market opportunities, and this partnership with Lumus allows us to lead the next generation of computing that augmented reality represents,” says C.C. Leung, Vice Chairman and President of Quanta Computer. “Our confidence in Lumus’ high-performance optics compelled us to become a partner to incorporate their technology into future optical engine applications. We look forward to leveraging our manufacturing expertise in building AR, VR and MR hardware as part of a very successful collaboration.”

Quanta-built AR headsets with Lumus optics are expected to be available on the consumer market within the next 12 to 18 months.

The deal follows Quanta's investment in Lumus in November 2016, when the AR firm completed a Series C funding round raising US$45 million to continue development of the Lumus DK-50 smartglasses and its patented Light-guide Optical Element (LOE) waveguide.

VRFocus will continue its coverage of Lumus, keeping you up to date with the latest announcements.

from VRFocus http://ift.tt/2AowTAo


NEWS post by SEO & Google Marketing - Business Plans - Financing Brokerage

New Post has been published on http://www.igg-gmbh.de/neue-konferenz-ueber-digitale-optiktechnik-im-rahmen-der-spie-optical-metrology-in-muenchen/

New Conference on Digital Optical Technologies as Part of SPIE Optical Metrology in Munich


Cardiff, Wales (ots/PRNewswire) – Both events are part of the "World of Photonics" congress. Registration includes access to the congress, the "Laser World of Photonics" exhibition and other events. These include a plenary talk by Jörg Wrachtrup (Universität Stuttgart) on the search for the ultimate quantum machine.

Federico Capasso (Harvard University) will speak at the SPIE plenary session on diffractive metasurface optics.

Bernard Kress (Microsoft Corp.), chair of the SPIE Digital Optical Technologies conference, has organized demonstrations of AR, VR and MR headsets from Microsoft, Oculus, HTC and Sony. This is the first public demonstration of several state-of-the-art headsets in a single location. Registered attendees of SPIE Digital Optical Technologies and SPIE Optical Metrology who sign up in advance can attend the sessions from 26 to 28 June.

Nearly 80 presentations at SPIE Digital Optical Technologies are organized into 11 sessions on topics such as digital holography for sensing and imaging, 3D displays, system design, and components. Alongside Bernard Kress, Wolfgang Osten (Universität Stuttgart) and Paul Urbach (Technische Universiteit Delft) chair the conference.

Four half-day courses offer career-relevant training on related topics.

The 440 presentations in the six conferences of SPIE Optical Metrology will cover the latest research in optical measurement systems, modeling, videometrics, machine vision, and optical methods for the inspection, characterization and imaging of biomaterials. Wolfgang Osten is symposium chair.

Together with the Optical Society (OSA), SPIE sponsors the European Conference on Biomedical Optics, which is also part of the World of Photonics congress. Presentations will cover state-of-the-art microscopy, clinical and biomedical spectroscopy, optical coherence tomography, laser-tissue interaction, optoacoustic methods and other new biophotonics techniques.

The plenary speakers are Ed Boyden (Massachusetts Institute of Technology), a pioneer in optogenetics, and Aydogan Ozcan (University of California, Los Angeles), an innovator and inventor in lensless imaging and mobile health.

Rainer Leitgeb (Medizinische Universität Wien) is general chair; Brett Bouma (Massachusetts General Hospital) and Paola Taroni (Politecnico di Milano) are program chairs.

Press contact:

Amy Nelson, Public Relations Manager
SPIE, the international society for optics and photonics
amy(at)spie(dot)org


Goodbye Glasses, Hello Smartglasses

By KIM BELLARD

It's been a few months since I last wrote about augmented reality (AR), and, if anything, AR activity has only picked up since then, particularly in regard to smartglasses. I pointed out then how Apple's Tim Cook and Facebook's Mark Zuckerberg were extremely bullish on the field, and Alphabet (Google Glass) and Snap (Spectacles) have never, despite a few apparent setbacks, lost their faith.

I can’t do justice to all that is going on in the field, but I want to try to hit some of the highlights, including not just what we see but how we see.

Let's start with Google acquiring smartglass innovator North for some $180m. Google said:

We’re building towards a future where helpfulness is all around you, where all your devices just work together and technology fades into the background. We call this ambient computing.

North’s founders explained that, from the start, their vision had been: “Technology seamlessly blended into your world: immediately accessible when you want it, but hidden away when you don’t,” which is a pretty good vision.

Meanwhile, Snap is up to Spectacles 3.0, introduced last summer. They allow for 3D video and AR, and continue Snap’s emphasis on headgear that not only does cool stuff but that looks cool too. Steen Strand, head of SnapLab, told The Indian Express:

A lot of the challenges with doing technology and eyewear is about how to hack all the stuff you need into a form factor that’s small, light, comfortable, and ultimately something that looks good as well.

Snapchat is very good at taking something very complex like AR and implementing it in a way that’s just fun and playful. It really sidesteps the whole burden of the technology and we are trying to do that as much as possible with Spectacles.

Snap claims 170m of its users engage with AR daily, some 30 times each day at that. It recently introduced Local Lenses, which "enable a persistent, shared AR world built right on top of your neighborhood."

And then there's Apple, the leader in taking hardware ideas and making them better, with cooler designs (think iPod, iPhone, iPad). It has been working on head-mounted displays (HMDs), including AR and VR, since 2015, with a 1,000-person engineering team.

According to Bloomberg, there has been tension between Mike Rockwell, the team's lead, and Jony Ive, Apple's design guru, centering largely on whether such headsets would be freestanding or would need a companion hub, such as a smartphone, that would allow greater capabilities.

Ive won the battle, and Apple is focusing first on a freestanding headset. Bloomberg reports:

Although the headset is less technologically ambitious, it’s pretty advanced. It’s designed to feature ultra-high-resolution that will make it almost impossible for a user to differentiate the virtual world from the real one.

Apple continues to work on both versions (especially since Ive has now departed). Bloomberg predicts Apple’s AR glasses will be available by 2023.

Not to be outdone, according to Patently Apple, “Facebook is determined to stay ahead of Apple on HMDs and win the race on being first with smartglasses to replace smartphones.”

For example, earlier this year, Facebook beat out Apple in an exclusive deal with AR display firm Plessey. Facebook's goal is a "glasses form factor that lets devices melt away," while noting that "the project will take years to complete." It continues to generate a variety of smartglasses-related patents, including one for a companion audio system.

Just to show they're in the game too, Amazon is working on Echo Frames and Microsoft is still trying to figure out uses for HoloLens.

But the most intriguing smartglasses may be those hinted at by some recent Apple patents. As reported by Patently Apple:

The main patent covers a powerful new vision correction optical system that's able to incorporate a user's glasses prescription into the system. The system will then alter the optics to address vision issues such [as] astigmatism, farsightedness, and nearsightedness so that those who wear glasses won't have to [wear] them when using Apple's HMD.
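The report does not say how Apple's optics would be adjusted, but the optometry behind a glasses prescription is standard. A prescription gives a sphere power S, a cylinder power C and an axis θ, and the corrective power needed along any meridian φ is S + C·sin²(φ − θ). The sketch below simply evaluates that formula. The function name and the example prescription are hypothetical, and the code does not describe how Apple's patented system works.

```python
import math


def meridian_power(sphere_d: float, cylinder_d: float,
                   axis_deg: float, meridian_deg: float) -> float:
    """Corrective power (diopters) along a given meridian for a
    sphero-cylindrical prescription: F = S + C * sin^2(meridian - axis)."""
    delta = math.radians(meridian_deg - axis_deg)
    return sphere_d + cylinder_d * math.sin(delta) ** 2


# Hypothetical prescription: -2.00 D sphere, -1.00 D cylinder, axis 180 degrees
# (nearsighted with some astigmatism).
for meridian in (0, 45, 90):
    print(meridian, round(meridian_power(-2.0, -1.0, 180.0, meridian), 2))
```

Along the axis meridian the power equals the sphere value. Ninety degrees away it equals sphere plus cylinder, which is the direction-dependent correction astigmatism requires.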