#AlexNet


Text

In the beginning was AlexNet, a convolutional neural network that on 30 September 2012 won the ImageNet Large Scale Visual Recognition Challenge, a competition in which systems must correctly identify images of things such as cars or cats. AlexNet's top-5 error rate was about 15%, far lower than its rivals'; within a few years its successors were labelling the test images correctly more often than human subjects, whose accuracy is usually put at around 95%.

Earlier results from machines had been far more disappointing. But the arrival of an AI that no longer needed humans to feed it correct data before the test, that went looking for the data itself and learned from its own mistakes, meant that within a few years machines matched and then surpassed humans at recognising images, faces, fingerprints, irises and the timbre of a voice. Tomorrow, who knows, it may be able to carry out an accurate medical examination, to know whether we have paid our road tax or car insurance, whether we pay our taxes, whether we are under the influence of drugs or have been drinking, and even whether we have just had sex or are aroused.

In practice our privacy, or what remains of it, will disappear entirely, and whoever controls the AI, or the AI itself without any human control, could steer our purchases, our opinions, our political leanings and fashion, modify our behaviour, arouse fictitious emotions and feelings, and direct our hatred wherever it wishes.

But where did the first AI prototypes, and today's systems, go fishing for the images they used to correct their recognition? Where do they fish for all their information? To beat the humans, AlexNet drew heavily on the cat pictures that users had published on the most popular social networks. When you post a cat on Tumblr, think about it: you are giving AI the information it needs to manipulate us better.

(Freely adapted from Yuval Noah Harari, Nexus. Breve storia delle reti di informazione dall’età della pietra all’IA, Bompiani, Milano, 2024.)

1 note


Text

AlexNet: A Breakthrough in Artificial Intelligence

✨ AlexNet: The Breakthrough That Took Artificial Intelligence to New Heights ✨

💡 Have you ever wondered how image recognition technology has advanced so quickly in recent years? Much of the credit goes to AlexNet, a landmark deep neural network that revolutionised the field of artificial intelligence 🎉. First introduced in 2012, AlexNet made a splash by winning the ImageNet competition with remarkable accuracy 🏆, far ahead of its contemporaries. Thanks to an improved architecture with many hidden layers and strong parallel processing, AlexNet opened a new era for image and video recognition applications 📷🎞️.

🔍 This article walks you through the core concepts of AlexNet, from how it reduces recognition errors to the role of convolutional layers and optimisation in training the model. It laid the groundwork for modern deep neural networks such as VGG, ResNet and many other models in today's AI 🚀. If you are a technology enthusiast or are learning about deep learning, don't miss this detailed article!

👉 Read it here to find out more about AlexNet and the progress of artificial intelligence: AlexNet: A Breakthrough in Artificial Intelligence

Discover more interesting articles at aicandy.vn

4 notes


Text

It signals, somewhat ironically, the ambivalence of the current paradigm of AI — deep learning — which emerged not from theories of cognition, as some may believe, but from contested experiments to automate the labour of perception, or pattern recognition. Deep learning has evolved from the extension of 1950s techniques of visual pattern recognition to non-visual data, which now include text, audio, video, and behavioural data of the most diverse origins. The rise of deep learning dates to 2012, when the convolutional neural network AlexNet won the ImageNet computer vision competition. Since then, the term ‘AI’ has come to define by convention the paradigm of artificial neural networks which, in the 1950s, it must be noted, was actually its rival (an example of the controversies that characterise the ‘rationality’ of AI). Matteo Pasquinelli, 2023. The Eye of the Master: A Social History of Artificial Intelligence. London: Verso.

20 notes


Text

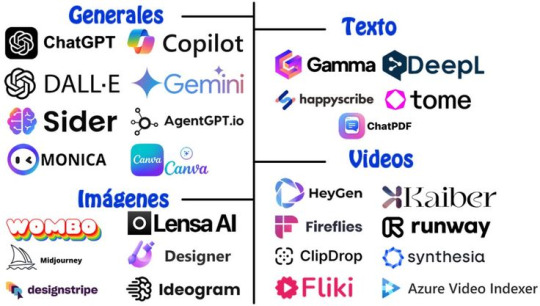

Artificial Intelligence: History, How It Works, Applications and Ethical Challenges

Artificial intelligence (AI) is a branch of computer science that seeks to build systems capable of performing tasks that normally require human intelligence. These tasks include reasoning, learning, perception, natural language understanding and decision-making. In recent years the development of AI has transformed sectors such as medicine, industry, education and finance, generating both enthusiasm and concern.

2. History of Artificial Intelligence

2.1 Origins

1940s-1950s: Beginnings of cybernetics and the first concepts of intelligent machines, such as the Turing test proposed by Alan Turing.

1956: The term "Artificial Intelligence" is coined at the Dartmouth conference, regarded as the formal birth of the discipline.

1960-1970: Basic problem-solving programs are developed, such as ELIZA (simulated psychotherapy) and SHRDLU.

2.2 AI Winters

1970s and 1980s: Periods of disillusionment over unfulfilled promises.

2.3 The AI Renaissance

2000s onward: Advances in processing power, big data and deep neural networks (deep learning) enabled a resurgence.

2012: The AlexNet model revolutionised image recognition.

3. How Artificial Intelligence Works

3.1 Types of AI

Weak AI (narrow AI): Performs specific tasks (for example, virtual assistants such as Siri or Alexa).

Strong AI (general AI): Equivalent to general human intelligence. Still at the research stage.

Superintelligent AI: More intelligent than human beings. Still hypothetical.

3.2 Machine Learning

Supervised: The model learns from labelled examples.

Unsupervised: The model identifies patterns without labels.

Reinforcement: The model learns by trial and error (as in video games or robotics).

3.3 Deep Learning

Uses artificial neural networks with many layers.

Enables facial recognition, machine translation, medical diagnosis and more.

4. Applications of AI

4.1 Health

AI-assisted medical diagnosis.

Analysis of medical images (X-rays, MRI scans).

Drug development.

4.2 Education

Personalised virtual tutors.

Adaptive learning systems.

Automatic grading of exams.

4.3 Transport

Autonomous vehicles.

Intelligent traffic systems.

Logistics optimisation.

4.4 Finance

Fraud detection.

Automated financial advisers (robo-advisors).

Predictive market analysis.

4.5 Entertainment

Personalised recommendations (Netflix, Spotify).

AI-generated music and images.

Video games with intelligent characters.

5. Advantages of Artificial Intelligence

Automation: Saves time and money.

Precision: Smaller margin of error.

24/7 availability: Does not tire or need rest.

Personalisation: Adapts to the individual user.

6. Challenges and Ethical Problems

6.1 Technological Unemployment

Automating repetitive jobs can displace workers.

6.2 Algorithmic Bias

If the training data contain prejudices, the AI learns and reproduces them.

6.3 Privacy

Mass collection of personal data can put privacy at risk.

6.4 Automated Decision-Making

Should an AI decide on human freedoms? (For example: justice, bank credit, surveillance.)

6.5 Autonomous Weapons

Development of military systems that can operate without human intervention.

7. Legislation and Regulation of AI

European Union: AI Act classifying systems according to their risk (proposed in 2021, adopted in 2024).

USA and China: More flexible approaches, with regulation still in development.

AI ethics: Principles such as transparency, fairness, non-maleficence and explainability.

8. The Future of Artificial Intelligence

8.1 Technical Challenges

Achieving a general AI that is safe and understandable.

Reducing the energy consumption of large-scale models.

8.2 Convergence with Other Technologies

Internet of Things (IoT)

Blockchain

Augmented and virtual reality

Advanced robotics

8.3 Opportunities

Solving major global challenges: climate change, global health, universal education.

Shifting work towards more creative and human tasks.

Conclusion

Artificial intelligence is redefining the way we live, work and relate to the world. Although it offers extraordinary benefits, it also poses significant risks that must be addressed responsibly. Ethical, regulated and transparent development of AI is crucial if its benefits are to be distributed fairly and negative consequences avoided. Humanity is at a key moment for deciding how to live alongside this new form of intelligence.

5 notes


Text

🚀 AlexNet: A Breakthrough in the World of Artificial Intelligence! 🤖💡

🌐 If you are learning about AI or machine learning, you cannot skip the name AlexNet! This well-known artificial neural network marked an important turning point in deep learning. AlexNet not only changed how we process image data 📸 but also opened a new era of advanced AI applications.

💥 So what is AlexNet, and why does it matter? AlexNet was developed in 2012 by Alex Krizhevsky and his colleagues. Its unprecedented image classification accuracy won it the ImageNet competition 🏆, a well-known image recognition contest. That result demonstrated the power of convolutional neural networks (CNNs) and laid the groundwork for later research in the field.

📈 Why was AlexNet a breakthrough?

📊 Distinctive architecture: by stacking several convolutional layers, AlexNet extracted image features better than earlier approaches.

🚀 Performance optimisation: by parallelising training across GPUs, the model cut training time significantly.

🤯 Tackling overfitting: dropout layers help AlexNet reduce overfitting.
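To make the dropout point concrete, here is a minimal sketch in plain Python. It is an illustration, not AlexNet's code: the function name `dropout` and its parameters are hypothetical, and it uses the "inverted" variant common in current frameworks (survivors are rescaled during training), whereas the 2012 paper instead scaled outputs at test time.

```python
import random

def dropout(activations, p_drop=0.5, training=True, rng=None):
    """Inverted dropout (illustrative): zero each activation with
    probability p_drop during training and rescale the survivors so the
    expected value of each activation is unchanged."""
    if not 0.0 <= p_drop < 1.0:
        raise ValueError("p_drop must be in [0, 1)")
    if not training or p_drop == 0.0:
        # At inference time every unit is kept and nothing is rescaled.
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

# Usage: during training roughly half the units are silenced on each pass.
hidden = [1.0] * 8
print(dropout(hidden, p_drop=0.5, rng=random.Random(0)))
print(dropout(hidden, training=False))
```

Because a different random subset of units is dropped on every pass, no single unit can rely on specific partners being present, which is the intuition behind the reduced overfitting.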

📚 Want to learn more about AlexNet and its major contributions to deep learning? Read the detailed article on our website! 👇

🔗 Article link: AlexNet: A Breakthrough in Artificial Intelligence

Discover more valuable articles at aicandy.vn

4 notes


Text

The Role of Artificial Intelligence in Medical Image Analysis

Artificial intelligence (AI) is transforming medical imaging by enhancing diagnostic accuracy, efficiency, and personalised care. Techniques like deep learning, reinforcement learning, and traditional machine learning are being applied across radiology, pathology, and ophthalmology to detect diseases such as cancer, pneumonia, and diabetic retinopathy. AI addresses limitations of human interpretation, reduces diagnostic errors, and streamlines clinical workflows. Innovations such as federated learning, quantum computing, and multi-modal imaging are driving the future of AI integration. Despite challenges like interpretability, biased datasets, and regulatory barriers, AI is poised to augment clinical expertise and revolutionise medical diagnostics, making healthcare more precise and accessible globally.

Introduction

Medical imaging is a cornerstone of modern healthcare, enabling the visualization of internal organs and tissues to support accurate diagnosis, treatment planning, and patient follow-up. Modalities such as X-rays, MRI, CT, ultrasound, and PET scans are crucial in identifying and managing a wide range of medical conditions. Traditionally, image interpretation has been performed by radiologists and clinicians; however, this process can be subjective and is influenced by fatigue, experience, and cognitive biases, potentially leading to diagnostic inaccuracies [1]. The World Health Organization (WHO) reported in 2020 that diagnostic errors contribute to nearly 10% of global patient deaths, underscoring the urgent need for technological solutions that enhance diagnostic precision and reliability [2].

The integration of artificial intelligence (AI), particularly deep learning, has significantly advanced the field of medical image analysis. AI algorithms can efficiently and accurately analyse vast volumes of imaging data, identifying subtle features that may be missed by human observers [3]. Leveraging large datasets and advanced computational techniques, AI boosts diagnostic efficiency, enables earlier disease identification, and improves patient outcomes. Notably, AI-based diagnostic tools have shown increased detection rates, such as reducing false negatives in breast cancer screenings and improving the accuracy of lung nodule detection [4].

The evolution of medical imaging from manual interpretation of X-ray films to digital imaging and now AI-assisted systems reflects a pivotal shift toward precision medicine, offering greater accuracy and consistency in diagnostics [5].

Paradigm of AI in Medical Imaging

Table 1 lists the key milestones of artificial intelligence in medical imaging.

Table 1: Key Milestones in AI for Medical Imaging

| Year | Milestone |
|------|-----------|
| 1990s | Early rule-based CAD systems introduced |
| 2012 | Deep learning breakthrough with AlexNet (ImageNet competition) |
| 2015 | Google’s DeepMind develops AI for diabetic retinopathy detection |
| 2018 | First FDA-approved AI software for detecting strokes in CT scans (Viz.ai) |
| 2021 | AI outperforms radiologists in lung cancer detection (Nature Medicine) |
| 2023 | AI-based whole-body imaging solutions become commercially viable |

0 notes

Text

The Development of Deep Learning Algorithms for Image Recognition: A Modern Perspective

In the past decade, the field of artificial intelligence (AI) has seen a remarkable surge in capabilities, particularly within the area of deep learning. Among its various applications, image recognition stands out as one of the most transformative and impactful. From medical diagnostics to autonomous vehicles and surveillance systems, the demand for robust and accurate image recognition models continues to grow. As research institutions like Telkom University intensify their focus on intelligent systems and computer vision, the development of advanced deep learning algorithms becomes not just a necessity, but a frontier of innovation.

Evolution of Image Recognition

Traditionally, image recognition systems relied on manually engineered features and statistical classifiers. These systems struggled to handle variability in lighting, orientation, and scale. The rise of convolutional neural networks (CNNs), a core component of deep learning, marked a turning point. CNNs gained wide popularity with the success of AlexNet in the 2012 ImageNet competition; they offered a hierarchical feature extraction mechanism loosely inspired by the human visual system and drastically improved recognition performance.

CNNs use layers of filters to capture low-level features such as edges and textures in early layers, and more abstract concepts like objects and scenes in deeper layers. This shift toward automatic feature learning has made deep learning models adaptable to a wide range of image recognition tasks, from facial recognition to object detection.
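The idea of a filter responding to an edge can be sketched in a few lines of plain Python. This is an illustration under stated assumptions: the helper `conv2d` and the toy image are hypothetical, and the kernel is a hand-written Sobel-style filter, whereas a trained CNN learns its kernel values from data.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the sliding-window
    operation that CNN libraries usually call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Sobel-style kernel: responds where brightness increases from left to right.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# Toy 4x6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

feature_map = conv2d(image, SOBEL_X)
for row in feature_map:
    print(row)  # non-zero only in the columns that straddle the edge
```

Stacking such layers, with each one operating on the feature maps of the previous one, is what lets deeper layers respond to larger and more abstract patterns.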

Architectures and Innovations

Since AlexNet, many new architectures have emerged, each pushing the boundaries of what deep learning can achieve. VGGNet introduced simplicity with deeper networks, while GoogLeNet introduced the Inception module to optimize computational efficiency. ResNet further revolutionized the field by introducing skip connections, allowing networks to be hundreds of layers deep without suffering from the vanishing gradient problem.
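A skip connection can be sketched as `y = x + F(x)`. The toy below is a simplified illustration, not a real ResNet block (which uses convolutions and normalisation); all function names are hypothetical. It shows one property that makes very deep stacks easier to train: when the learned part `F` contributes nothing, a residual block reduces to the identity, while a plain block wipes out the signal.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def linear(v, weights):
    """Multiply vector v by a square weight matrix given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]

def plain_block(v, weights):
    """Ordinary layer: y = F(x)."""
    return relu(linear(v, weights))

def residual_block(v, weights):
    """Layer with a skip connection: y = x + F(x)."""
    fx = relu(linear(v, weights))
    return [x + f for x, f in zip(v, fx)]

def run_stack(block, v, weights, depth):
    """Apply the same block depth times in sequence."""
    for _ in range(depth):
        v = block(v, weights)
    return v

zero_weights = [[0.0] * 3 for _ in range(3)]  # F(x) outputs zeros
x = [1.0, -2.0, 3.0]

through_residual = run_stack(residual_block, x, zero_weights, depth=100)
through_plain = run_stack(plain_block, x, zero_weights, depth=100)
print(through_residual)  # the input survives 100 layers unchanged
print(through_plain)     # the input is lost after the first layer
```

The same additive path also gives gradients a direct route back to early layers during training.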

More recently, transformer-based models like Vision Transformers (ViT) have demonstrated that attention mechanisms—originally developed for natural language processing—can outperform CNNs in large-scale image recognition tasks when sufficient data and computational resources are available. These models divide images into patches and process them similarly to sequences of words, allowing for a global understanding of visual information.
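The patching step can be sketched as follows. This is a minimal illustration with a hypothetical helper `image_to_patches` working on a single-channel image stored as a list of rows; a real Vision Transformer additionally projects each flattened patch linearly and adds position information, which is omitted here.

```python
def image_to_patches(image, patch_size):
    """Split an H x W image (list of rows) into a row-major sequence of
    flattened patch_size x patch_size patches."""
    h, w = len(image), len(image[0])
    if h % patch_size or w % patch_size:
        raise ValueError("image sides must be divisible by patch_size")
    patches = []
    for top in range(0, h, patch_size):
        for left in range(0, w, patch_size):
            patches.append([
                image[top + i][left + j]
                for i in range(patch_size) for j in range(patch_size)
            ])
    return patches

# Toy 4x4 image whose pixel values are 0..15 in reading order.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
tokens = image_to_patches(image, 2)
for t in tokens:
    print(t)
```

With the same scheme, a 224 x 224 image cut into 16 x 16 patches yields a sequence of 14 x 14 = 196 tokens, which the attention layers then treat much like a sentence of 196 words.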

Challenges in Deep Learning for Image Recognition

Despite significant progress, deep learning still faces notable challenges in image recognition. One of the most critical issues is the requirement for massive labeled datasets. While transfer learning and pre-trained models alleviate this need to some extent, domain-specific tasks often lack sufficient labeled data, limiting model generalization.

Another major challenge is interpretability. Deep learning models, especially those with millions of parameters, often function as “black boxes.” In high-stakes applications like medical imaging or biometric security, understanding why a model makes a certain prediction is essential for trust and accountability.

Additionally, deep learning models are vulnerable to adversarial attacks—subtle, often imperceptible perturbations to input images that can cause models to make incorrect predictions. Ensuring robustness and security in deployment scenarios remains an active area of research.
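A rough sketch of how such a perturbation is built, in the style of the fast gradient sign method, is shown below on a toy linear classifier. Everything here is an assumption for illustration: the weights, the input and the function names are hypothetical. For a linear model the gradient of the score with respect to the input is simply the weight vector, so no automatic differentiation is needed.

```python
def sign(v):
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def score(weights, bias, x):
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def predict(weights, bias, x):
    """Class 1 if the score is positive, otherwise class 0."""
    return 1 if score(weights, bias, x) > 0 else 0

def fgsm_perturb(weights, x, epsilon, true_label):
    """Shift every input value by at most epsilon in the direction that
    pushes the score away from the true label."""
    step = -epsilon if true_label == 1 else epsilon
    return [xi + step * sign(w) for w, xi in zip(weights, x)]

WEIGHTS = [0.5, -0.25, 0.75, -1.0]  # hypothetical trained weights
BIAS = 0.0
x = [0.2, 0.1, 0.1, 0.1]            # classified as class 1

x_adv = fgsm_perturb(WEIGHTS, x, epsilon=0.05, true_label=1)
print(predict(WEIGHTS, BIAS, x), predict(WEIGHTS, BIAS, x_adv))
```

Each input value moves by only 0.05, yet the small shifts all push the score the same way and add up across dimensions. With images containing hundreds of thousands of pixels, the per-pixel change needed to flip a prediction can become too small to see.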

Application Domains and Case Studies

One of the most exciting areas of application is in the healthcare sector. Deep learning algorithms have demonstrated expert-level performance in identifying diseases from radiological images, such as detecting diabetic retinopathy from retinal scans or lung cancer from CT scans. These tools are not only improving diagnostic accuracy but also increasing accessibility in regions with limited access to medical specialists.

In the realm of autonomous vehicles, image recognition systems are critical for understanding the driving environment. These systems detect road signs, pedestrians, other vehicles, and lane markings, contributing to decision-making and navigation processes. The fusion of image recognition with other sensory inputs like LiDAR and radar is essential to ensure safety and reliability.

Another emerging domain is smart surveillance, where intelligent systems are employed to detect unusual behavior, identify individuals, and track objects in real-time. Telkom University has actively explored this area, integrating deep learning into intelligent video analytics platforms for enhancing public safety in urban environments.

Telkom University and Contributions to the Field

As a leading institution in Indonesia, Telkom University has shown a strong commitment to advancing research in intelligent systems and computer vision. Research labs within the university are increasingly focused on developing custom neural network architectures tailored for local use cases, such as recognizing cultural patterns, regional vehicle license plates, and indigenous diseases visible through imaging.

Furthermore, Telkom University supports interdisciplinary collaboration by combining expertise in electronics, data science, and telecommunications. This collaborative approach accelerates the translation of theoretical research into real-world applications, such as AI-powered traffic monitoring systems and digital identity verification technologies.

Through its graduate and undergraduate programs, Telkom University nurtures a new generation of AI engineers equipped with the knowledge to design and deploy scalable image recognition solutions. Its participation in global research consortia and regional innovation hubs enhances its role as a center of excellence in Southeast Asia.

Future Trends in Image Recognition

Looking ahead, the integration of multimodal learning is likely to redefine image recognition. Future systems will not rely solely on visual data but will combine it with audio, text, and sensor data to provide a richer understanding of the environment. This will lead to more context-aware systems, capable of more nuanced decision-making.

Another trend is the push toward efficient deep learning. As environmental concerns and resource constraints grow, researchers are focusing on creating lightweight models that require less computation and memory, suitable for edge computing. Techniques such as knowledge distillation, model pruning, and quantization are gaining prominence.
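Of the techniques named above, quantization is the easiest to show in a few lines. The sketch below assumes a simple symmetric scheme that maps floating-point weights to integers in [-127, 127] with a single scale factor; the function names are hypothetical, and production toolkits use more elaborate per-channel or calibrated schemes.

```python
def quantize_int8(values):
    """Symmetric linear quantization: map floats to integers in
    [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.5, -1.0, 0.25, 0.0, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Each weight now fits in one byte instead of four (float32),
# at the cost of a rounding error of at most half a quantization step.
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, worst_error <= scale / 2 + 1e-12)
```

The trade-off is the usual one for edge deployment: about a fourfold reduction in memory relative to 32-bit floats in exchange for a small, bounded loss of precision per weight.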

Federated learning also presents a promising path forward. It allows training deep learning models across decentralized devices without transferring raw data, addressing privacy concerns while leveraging data from multiple sources. This approach can be particularly beneficial in sensitive fields like healthcare and finance.
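The aggregation step at the heart of this approach can be sketched as a weighted average, in the spirit of federated averaging. This is a simplified illustration: `federated_average` and the two "hospital" clients are hypothetical, models are reduced to flat lists of weights, and real systems add client sampling, multiple rounds and often secure aggregation.

```python
def federated_average(client_updates):
    """Combine locally trained weights without seeing any raw data.

    client_updates is a list of (weights, num_examples) pairs; the result
    is the mean of the weights, weighted by each client's example count.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(w[i] * n for w, n in client_updates) / total
        for i in range(dim)
    ]

# Two hypothetical hospitals train the same tiny model on their own scans
# and send back only the resulting weights and how many scans they used.
hospital_a = ([1.0, 0.0], 100)
hospital_b = ([3.0, 4.0], 300)

global_weights = federated_average([hospital_a, hospital_b])
print(global_weights)
```

Only model weights and example counts leave each site, so the server never handles the underlying images; the client with more data simply pulls the average further towards its own solution.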

Lastly, self-supervised learning, which enables models to learn from unlabeled data, may become the standard for future deep learning paradigms. By reducing the dependence on labeled datasets, it opens the door to broader and more equitable AI development across different regions and domains.

1 note


Link

New benchmark for evaluating multimodal systems based on real-world video, audio, and text data

From the Turing test to ImageNet, benchmarks have played an instrumental role in shaping artificial intelligence (AI) by helping define research goals and allowing researchers to measure progress towards those goals. Incredible breakthroughs in the past 10 years, such as AlexNet in computer vision and AlphaFold in protein folding, have been closely linked to using benchmark datasets, allowing researchers to rank model design and training choices, and iterate to improve their models. As we work towards the goal of building artificial general intelligence (AGI), developing robust and effective benchmarks that expand AI models’ capabilities is as important as developing the models themselves.

Perception – the process of experiencing the world through senses – is a significant part of intelligence. And building agents with human-level perceptual understanding of the world is a central but challenging task, which is becoming increasingly important in robotics, self-driving cars, personal assistants, medical imaging, and more. So today, we’re introducing the Perception Test, a multimodal benchmark using real-world videos to help evaluate the perception capabilities of a model.

Developing a perception benchmark

Many perception-related benchmarks are currently being used across AI research, like Kinetics for video action recognition, Audioset for audio event classification, MOT for object tracking, or VQA for image question-answering. These benchmarks have led to amazing progress in how AI model architectures and training methods are built and developed, but each one only targets restricted aspects of perception: image benchmarks exclude temporal aspects; visual question-answering tends to focus on high-level semantic scene understanding; object tracking tasks generally capture lower-level appearance of individual objects, like colour or texture. And very few benchmarks define tasks over both audio and visual modalities.

Multimodal models, such as Perceiver, Flamingo, or BEiT-3, aim to be more general models of perception. But their evaluations were based on multiple specialised datasets because no dedicated benchmark was available. This process is slow, expensive, and provides incomplete coverage of general perception abilities like memory, making it difficult for researchers to compare methods.

To address many of these issues, we created a dataset of purposefully designed videos of real-world activities, labelled according to six different types of tasks:

Object tracking: a box is provided around an object early in the video, the model must return a full track throughout the whole video (including through occlusions).

Point tracking: a point is selected early on in the video, the model must track the point throughout the video (also through occlusions).

Temporal action localisation: the model must temporally localise and classify a predefined set of actions.

Temporal sound localisation: the model must temporally localise and classify a predefined set of sounds.

Multiple-choice video question-answering: textual questions about the video, each with three choices from which to select the answer.

Grounded video question-answering: textual questions about the video, the model needs to return one or more object tracks.

We took inspiration from the way children’s perception is assessed in developmental psychology, as well as from synthetic datasets like CATER and CLEVRER, and designed 37 video scripts, each with different variations to ensure a balanced dataset. Each variation was filmed by at least a dozen crowd-sourced participants (similar to previous work on Charades and Something-Something), with a total of more than 100 participants, resulting in 11,609 videos, averaging 23 seconds long.

The videos show simple games or daily activities, which would allow us to define tasks that require the following skills to solve:

Knowledge of semantics: testing aspects like task completion, recognition of objects, actions, or sounds.

Understanding of physics: collisions, motion, occlusions, spatial relations.

Temporal reasoning or memory: temporal ordering of events, counting over time, detecting changes in a scene.

Abstraction abilities: shape matching, same/different notions, pattern detection.

Crowd-sourced participants labelled the videos with spatial and temporal annotations (object bounding box tracks, point tracks, action segments, sound segments). Our research team designed the questions per script type for the multiple-choice and grounded video-question answering tasks to ensure good diversity of skills tested, for example, questions that probe the ability to reason counterfactually or to provide explanations for a given situation. The corresponding answers for each video were again provided by crowd-sourced participants.

Evaluating multimodal systems with the Perception Test

We assume that models have been pre-trained on external datasets and tasks. The Perception Test includes a small fine-tuning set (20%) that the model creators can optionally use to convey the nature of the tasks to the models. The remaining data (80%) consists of a public validation split and a held-out test split where performance can only be evaluated via our evaluation server.

Here we show a diagram of the evaluation setup: the inputs are a video and audio sequence, plus a task specification. The task can be in high-level text form for visual question answering or low-level input, like the coordinates of an object’s bounding box for the object tracking task.

The evaluation results are detailed across several dimensions, and we measure abilities across the six computational tasks. For the visual question-answering tasks we also provide a mapping of questions across types of situations shown in the videos and types of reasoning required to answer the questions for a more detailed analysis (see our paper for more details). An ideal model would maximise the scores across all radar plots and all dimensions. This is a detailed assessment of the skills of a model, allowing us to narrow down areas of improvement.

Ensuring diversity of participants and scenes shown in the videos was a critical consideration when developing the benchmark. To do this, we selected participants from different countries of different ethnicities and genders and aimed to have diverse representation within each type of video script.

Learning more about the Perception Test

The Perception Test benchmark is publicly available here and further details are available in our paper. A leaderboard and a challenge server will be available soon too.

On 23 October, 2022, we’re hosting a workshop about general perception models at the European Conference on Computer Vision in Tel Aviv (ECCV 2022), where we will discuss our approach, and how to design and evaluate general perception models with other leading experts in the field.

We hope that the Perception Test will inspire and guide further research towards general perception models. Going forward, we hope to collaborate with the multimodal research community to introduce additional annotations, tasks, metrics, or even new languages to the benchmark.

Get in touch by emailing [email protected] if you're interested in contributing!

0 notes

Text

All those cat images that tech giants had been harvesting from across the world, without paying a penny to either users or tax collectors, turned out to be incredibly valuable. The AI race was on, and the competitors were running on cat images. At the same time that AlexNet was preparing for the ImageNet challenge, Google too was training its AI on cat images, and even created a dedicated cat-image-generating AI called the Meow Generator. The technology developed by recognizing cute kittens was later deployed for more predatory purposes. For example, Israel relied on it to create the Red Wolf, Blue Wolf, and Wolf Pack apps used by Israeli soldiers for facial recognition of Palestinians in the Occupied Territories. The ability to recognize cat images also led to the algorithms Iran uses to automatically recognize unveiled women and enforce its hijab laws. As explained in chapter 8, massive amounts of data are required to train machine-learning algorithms. Without millions of cat images uploaded and annotated for free by people across the world, it would not have been possible to train the AlexNet algorithm or the Meow Generator, which in turn served as the template for subsequent AIs with far-reaching economic, political, and military potential.

0 notes

Text

Among other aspects, Minsky and Papert noticed (as also had Rosenblatt) that artificial neural networks are not able to distinguish well between figure and ground: in their computation of the visual field, each point gains somehow the same priority — which is not the case with human vision. This happens because artificial neural networks have no ‘concept’ of figure and ground, which they replace with a statistical distribution of correlations (while the figure–ground relation implies a model of causation). The problem has not disappeared with deep learning: it has been discovered that large convolutional neural networks such as AlexNet, GoogleNet, and ResNet-50 are still biased towards texture in relation to shape. Matteo Pasquinelli, 2023. The Eye of the Master: A Social History of Artificial Intelligence. London: Verso.

4 notes


Text

CS590 - Assignment-2: Solved

Please read the instructions carefully. Program-1 [25 points]: Modify the AlexNet program to meet the conditions given below. Use the MNIST dataset already available in PyTorch in place of FashionMNIST. MNIST is a dataset for identifying handwritten digits, so the number of classes is 10. Set the batch size to 16, and the number of epochs should be 1 or…

0 notes

Text

How AlexNet Transformed AI and Computer Vision Forever

In a historic move, the Computer History Museum, in partnership with Google, has released the original 2012 source code for AlexNet, the neural network that revolutionized AI.

Developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, AlexNet's source code release is a monumental moment for AI enthusiasts.

@tonyshan #techinnovation https://bit.ly/tonyshan https://bit.ly/tonyshan_X

0 notes

Text

AlexNet, the AI Model That Started It All, Released In Source Code Form

http://i.securitythinkingcap.com/TJkz4R

0 notes

Text

You can now download the source code that sparked the AI boom

CHM releases code for 2012 AlexNet breakthrough that proved “deep learning” could work.

0 notes

Text

Ars Technica: You can now download the source code that sparked the AI boom

0 notes

Text

GitHub - computerhistory/AlexNet-Source-Code: This package contains the original 2012 AlexNet code.

0 notes