#Amazon API's Data Sets


Fueling Innovation with Amazon API's Data Sets: Discover Types and Applications

In today's data-driven world, innovation thrives on access to vast amounts of information. With the advent of Application Programming Interfaces (APIs), accessing and utilizing data has become more convenient and powerful than ever. Among the multitude of API providers, Amazon stands out with its comprehensive collection of data sets, fueling innovation across various industries. In this article, we'll delve into the types and applications of Amazon APIs' data sets and explore how they are driving innovation.

Types of Amazon API Data Sets

Amazon offers a diverse range of data sets through its APIs, catering to different interests and requirements. Here are some key types:

E-Commerce Data Sets: Amazon's roots lie in e-commerce, and consequently, it provides rich data sets related to product listings, customer reviews, sales trends, and more. These data sets are invaluable for businesses looking to understand market dynamics, consumer preferences, and competitor analysis.

Cloud Services Data Sets: With its AWS (Amazon Web Services) platform, Amazon offers data sets related to cloud computing, including usage statistics, performance metrics, and cost optimization insights. These data sets empower businesses to optimize their cloud infrastructure, enhance scalability, and improve cost-efficiency.

IoT (Internet of Things) Data Sets: Amazon's IoT services like AWS IoT Core generate vast amounts of data from connected devices. These data sets include sensor readings, device statuses, and telemetry data, enabling businesses to harness the power of IoT for various applications such as predictive maintenance, smart home automation, and industrial monitoring.

Media and Entertainment Data Sets: Amazon Prime Video and Amazon Music provide data sets related to user preferences, viewing/listening habits, content metadata, and engagement metrics. These data sets enable content creators and distributors to personalize recommendations, optimize content delivery, and enhance user experiences.

Healthcare Data Sets: Amazon HealthLake, a HIPAA-eligible service, lets organizations store, transform, and analyze their own health data, such as electronic health records (EHRs), in a way that supports compliance with healthcare regulations. Related healthcare workloads on AWS also involve medical imaging data, genomic data, and clinical trial data. These data sets facilitate medical research, personalized healthcare, and healthcare analytics while supporting data security and privacy.

Applications of Amazon API Data Sets

The versatility of Amazon API data sets fuels innovation across numerous industries and domains. Here are some notable applications:

Retail Analytics: Retailers leverage Amazon's e-commerce data sets to analyze market trends, forecast demand, optimize pricing strategies, and enhance customer experiences. By understanding consumer behavior and preferences, retailers can tailor their offerings and marketing campaigns for maximum impact.

Smart Cities: Municipalities utilize Amazon's IoT data sets to implement smart city initiatives, such as traffic management, waste management, environmental monitoring, and public safety. By integrating IoT data with analytics platforms, cities can make data-driven decisions to improve urban living conditions and resource efficiency.

Content Personalization: Media companies harness Amazon's media and entertainment data sets to personalize content recommendations, curate playlists, and tailor advertisements based on user preferences and behavior. This enhances user engagement, retention, and monetization in an increasingly competitive digital media landscape.

Precision Medicine: Healthcare providers and researchers leverage Amazon's healthcare data sets to develop personalized treatment plans, conduct genomic analysis, identify disease risk factors, and accelerate drug discovery. By aggregating and analyzing diverse healthcare data sources, they can advance precision medicine initiatives and improve patient outcomes.

Financial Services: Banks, insurers, and fintech companies leverage Amazon's cloud services data sets to enhance risk management, fraud detection, customer segmentation, and algorithmic trading strategies. By leveraging real-time data and advanced analytics, financial institutions can drive operational efficiency and mitigate financial risks.

Conclusion

Amazon API data sets serve as a catalyst for innovation across various industries, providing valuable insights and enabling transformative applications. Whether it's optimizing retail operations, building smart cities, personalizing content experiences, advancing healthcare, or enhancing financial services, the breadth and depth of Amazon's data sets empower organizations to unlock new possibilities and create tangible value. As businesses continue to embrace digital transformation, leveraging Amazon API data sets will remain integral to staying competitive and driving innovation in the data-driven economy.


New APIs For Amazon Location Service Routes, Places & Maps

Introducing new Routes, Places, and Maps APIs for Amazon Location Service

Amazon Location Service today announced 17 new and improved APIs that expand the Routes, Places, and Maps features and give developers a more unified and efficient experience. These improvements make Amazon Location Service more accessible and useful for a variety of applications by adding new capabilities and providing a smooth migration path.

Advanced route optimization, GPS tracing, toll cost calculations, a range of map styles with static and dynamic rendering options, proximity-based search, predictive recommendations, and rich, detailed information on points of interest are all now available.

At Amazon, customer feedback drives the great majority of the roadmap. Several customers building location-based applications with Amazon Location Service have said that they need more specific information, such as contact details and business hours, as well as purpose-built APIs. Although the existing API set has given many users useful tools, developers have asked for further features, such as comprehensive route planning, proximity-based search, more place details, and static map images. The new APIs meet these needs and offer a more thorough and streamlined location solution.

Improved and new capabilities

In direct response to your feedback, today’s launch provides seven completely new APIs and ten revised APIs. Each service (Maps, Places, and Routes) receives specific improvements to accommodate a wider variety of use cases.

Routes

Advanced route planning and modification features are now available through the Amazon Location Routes API, enabling customers to:

Calculate isolines to determine the service areas reachable within a given travel time or distance.

Use OptimizeWaypoints to find the most efficient waypoint sequence and reduce travel time or distance.

Compute toll costs to provide accurate cost estimates for routes that include toll roads.

Use SnapToRoads to match GPS traces precisely by snapping points to the road network.

You can create more dynamic and accurate route experiences for your users with these features. For instance, a logistics firm can reduce delivery travel costs by optimizing driver routes in real time while accounting for current traffic.
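To make the waypoint-sequencing idea concrete, here is a minimal Python sketch. It is not the OptimizeWaypoints API and does not call Amazon Location Service; it simply brute-forces every stop order over a small travel-time table. The stop names and the minute values are hypothetical, and brute force is only practical for a handful of stops.

```python
from itertools import permutations

# Hypothetical travel times in minutes between stops (treated as symmetric).
TRAVEL_MIN = {
    ("depot", "a"): 10, ("depot", "b"): 4, ("depot", "c"): 7,
    ("a", "b"): 6, ("a", "c"): 3, ("b", "c"): 5,
    ("a", "customer"): 2, ("b", "customer"): 9, ("c", "customer"): 6,
}


def travel(u, v):
    """Look up the travel time between two stops in either direction."""
    if (u, v) in TRAVEL_MIN:
        return TRAVEL_MIN[(u, v)]
    return TRAVEL_MIN[(v, u)]


def route_minutes(stops):
    """Total travel time along an ordered list of stops."""
    return sum(travel(u, v) for u, v in zip(stops, stops[1:]))


def best_waypoint_order(origin, waypoints, destination):
    """Try every ordering of the waypoints and keep the fastest one."""
    best = min(
        permutations(waypoints),
        key=lambda order: route_minutes([origin, *order, destination]),
    )
    return list(best)


best_order = best_waypoint_order("depot", ["a", "b", "c"], "customer")
best_total = route_minutes(["depot", *best_order, "customer"])
print(best_order, best_total)
```

A managed routing service would replace the static table with road-network travel times and current traffic, which is why the same stops can produce a different order at different times of day.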

Maps

The revised Amazon Location Maps API now offers more purpose-built map styles created by skilled cartographers. These professionally designed styles remove the need to build custom maps and shorten time to market. In addition, developers can embed static maps in applications using the Static Map Image feature, which removes the need for constant data streaming and improves performance in use cases where interactivity is not required.

Among the Maps API’s primary features are:

GetStyleDescriptor, which returns details about a map style.

GetTile, which downloads a tile from a tileset at given X, Y, and Z values.

GetStaticMap, which renders non-interactive maps for reporting or display.
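As an illustration of what the X, Y, and Z values of a tile mean, the sketch below converts a longitude and latitude into tile indices. It assumes the common Web Mercator "XYZ" tiling scheme, which the text above does not confirm for GetTile, so treat it as background on tile addressing rather than a description of the API.

```python
import math


def lonlat_to_tile(lon, lat, zoom):
    """Return (x, y, z) tile indices for a point, assuming Web Mercator XYZ tiling."""
    n = 2 ** zoom  # number of tiles along each axis at this zoom level
    x = int((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so points on the eastern or southern edge stay inside the grid.
    x = min(max(x, 0), n - 1)
    y = min(max(y, 0), n - 1)
    return x, y, zoom


print(lonlat_to_tile(0.0, 0.0, 1))
```

Each increase in Z doubles the number of tiles per axis, so the same point maps to a finer tile at higher zoom levels.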

Places

In response to requests for more granularity in location data, the Amazon Location Places API has been improved to enable more thorough search capabilities. Among the new capabilities are:

SearchNearby and Autocomplete, which support proximity-based queries and predictive text for better user experiences.

Richer business information, including contact details, business hours, and additional attributes for points of interest.

Applications such as food delivery services or retail apps, where customers need comprehensive information about nearby locations, benefit most from these capabilities. Consider a scenario in which a customer opens a food delivery app, uses SearchNearby to find restaurants in their area, and retrieves restaurant information, including contact details and business hours, to verify availability. After a driver has been assigned several delivery orders, the application uses OptimizeWaypoints to recommend the most efficient route for pickups and deliveries. SnapToRoads improves the customer’s real-time tracking experience by providing a detailed depiction of the driver’s location as they follow the route.
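The proximity search step in this scenario can be pictured with a small local sketch. This is not the SearchNearby API; it filters an in-memory list by great-circle distance, and the place names, coordinates, and opening hours are all hypothetical.

```python
import math


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def search_nearby(places, center, radius_km):
    """Return the places within radius_km of center, nearest first."""
    hits = [(haversine_km(center, p["position"]), p) for p in places]
    hits = [(d, p) for d, p in hits if d <= radius_km]
    hits.sort(key=lambda pair: pair[0])
    return [p for _, p in hits]


# Hypothetical restaurant records with the kind of detail the text mentions.
restaurants = [
    {"name": "Diner A", "position": (47.610, -122.33), "hours": "08:00-22:00"},
    {"name": "Bistro B", "position": (47.700, -122.33), "hours": "11:00-23:00"},
    {"name": "Cafe C", "position": (47.605, -122.33), "hours": "07:00-15:00"},
]

nearby = search_nearby(restaurants, center=(47.600, -122.33), radius_km=2.0)
nearby_names = [p["name"] for p in nearby]
print(nearby_names)
```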

How the enhanced location service works

Calling the API is simple. You have three options: the basic REST API, one of the AWS SDKs, or the AWS Command Line Interface (AWS CLI). However, some further preparation is needed to display the data on a map in a web or mobile application. That process is well documented but too involved to cover in detail here.

Amazon Location Service supports two methods for authenticating API requests: API keys or AWS API authentication (AWS SigV4). API keys may be more practical for mobile application developers when end users are not authenticated or when integrating with Amazon Cognito is not practical. For front-end apps, API keys are the suggested authentication technique.
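For readers curious about what SigV4 involves compared with simply attaching an API key, the following sketch shows only the signing-key derivation step of AWS Signature Version 4, using the Python standard library. The AWS SDKs and the AWS CLI do this for you. The secret key, date, and region are placeholders, and the service name passed in the usage lines is an assumption that should be checked against the service documentation.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    """HMAC-SHA256 of msg under key, returned as raw bytes."""
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sigv4_signing_key(secret_key: str, date_stamp: str, region: str, service: str) -> bytes:
    """Derive the SigV4 signing key by chaining HMACs over date, region, and service."""
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


# Placeholder credentials; "geo-places" is an assumed signing name, not taken from the text.
signing_key = sigv4_signing_key("EXAMPLE-SECRET-KEY", "20250101", "us-east-1", "geo-places")
print(signing_key.hex())
```

Because the derived key depends on the date, region, and service, a leaked signature is far more limited in scope than a leaked long-lived secret, which is part of why SigV4 suits authenticated back-end callers while API keys suit unauthenticated front ends.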

To show the flexibility of the APIs and how simple it is to integrate them into your apps, you can use a combination of the AWS CLI, cURL, and a graphical REST API client.

Getting started

Amazon Location Service provides a more complete range of mapping and location data for your business needs with these updated and new APIs. These will improve the scalability and agility of developers, which will help you speed up your development lifecycle.

Explore the most recent version of the Amazon Location Service Developer Guide to get started, then start incorporating these features right now. You can also test the APIs using your preferred AWS SDKs to see how they can improve your apps.

Amazon Location Service pricing

Learn about the reasonable prices for maps, locations, routing, tracking, and geofencing offered by Amazon Location Service. Pay only for what you use; there are no up-front fees.

Pricing

Once the free trial ends, you pay for the requests your application makes to the service, at the rates listed in the table below.

Volume discounts are available for customers with a monthly usage exceeding $5,000.

Prices may vary based on request parameters; please refer to the Pricing section in the developer guide.

Amazon Location Service free trial

During the first three months of using Amazon Location Service, you can test it out with the free trial. You won’t be charged for monthly consumption during that period up to the amounts specified for the APIs indicated in the table below. You will be charged for the extra usage in accordance with the paid tier prices if your usage surpasses the free trial limits or utilizes Advanced, Premium, or Stored services.
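The pay-for-what-you-use arithmetic can be sketched as follows. The free allowance and the price per 1,000 requests used here are hypothetical placeholders, since the pricing table is not reproduced in this text; substitute the real figures from the pricing page.

```python
def monthly_charge(requests_made, free_requests, price_per_1000):
    """Charge only for requests above the free allowance, priced per 1,000 requests."""
    billable = max(0, requests_made - free_requests)
    return billable / 1000 * price_per_1000


# Hypothetical figures: 100,000 free requests, then $0.50 per 1,000 requests.
within_free_trial = monthly_charge(50_000, 100_000, 0.50)
over_free_trial = monthly_charge(250_000, 100_000, 0.50)
print(within_free_trial, over_free_trial)
```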

Read more on Govindhtech.com

#APIs#AmazonLocationService#Maps#MapsAPIs#GPS#AWSAPI#SearchNearby#News#Technews#Technology#Technologynews#Technologytrends#govindhtech


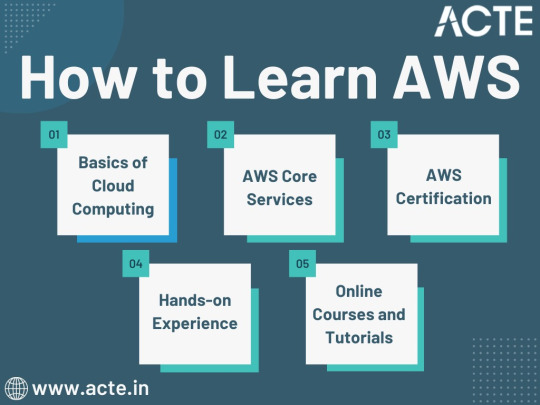

Journey to AWS Proficiency: Unveiling Core Services and Certification Paths

Amazon Web Services, often referred to as AWS, stands at the forefront of cloud technology and has revolutionized the way businesses and individuals leverage the power of the cloud. This blog serves as your comprehensive guide to understanding AWS, exploring its core services, and learning how to master this dynamic platform. From the fundamentals of cloud computing to the hands-on experience of AWS services, we'll cover it all. Additionally, we'll discuss the role of education and training, specifically highlighting the value of ACTE Technologies in nurturing your AWS skills, concluding with a mention of their AWS courses.

The Journey to AWS Proficiency:

1. Basics of Cloud Computing:

Getting Started: Before diving into AWS, it's crucial to understand the fundamentals of cloud computing. Begin by exploring the three primary service models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Gain a clear understanding of what cloud computing is and how it's transforming the IT landscape.

Key Concepts: Delve into the key concepts and advantages of cloud computing, such as scalability, flexibility, cost-effectiveness, and disaster recovery. Simultaneously, explore the potential challenges and drawbacks to get a comprehensive view of cloud technology.

2. AWS Core Services:

Elastic Compute Cloud (EC2): Start your AWS journey with Amazon EC2, which provides resizable compute capacity in the cloud. Learn how to create virtual servers, known as instances, and configure them to your specifications. Gain an understanding of the different instance types and how to deploy applications on EC2.

Simple Storage Service (S3): Explore Amazon S3, a secure and scalable storage service. Discover how to create buckets to store data and objects, configure permissions, and access data using a web interface or APIs.

Relational Database Service (RDS): Understand the importance of databases in cloud applications. Amazon RDS simplifies database management and maintenance. Learn how to set up, manage, and optimize RDS instances for your applications. Dive into database engines like MySQL, PostgreSQL, and more.

3. AWS Certification:

Certification Paths: AWS offers a range of certifications for cloud professionals, from foundational to professional levels. Consider enrolling in certification courses to validate your knowledge and expertise in AWS. AWS Certified Cloud Practitioner, AWS Certified Solutions Architect, and AWS Certified DevOps Engineer are some of the popular certifications to pursue.

Preparation: To prepare for AWS certifications, explore recommended study materials, practice exams, and official AWS training. ACTE Technologies, a reputable training institution, offers AWS certification training programs that can boost your confidence and readiness for the exams.

4. Hands-on Experience:

AWS Free Tier: Register for an AWS account and take advantage of the AWS Free Tier, which offers limited free access to various AWS services for 12 months. Practice creating instances, setting up S3 buckets, and exploring other services within the free tier. This hands-on experience is invaluable in gaining practical skills.

5. Online Courses and Tutorials:

Learning Platforms: Explore online learning platforms like Coursera, edX, Udemy, and LinkedIn Learning. These platforms offer a wide range of AWS courses taught by industry experts. They cover various AWS services, architecture, security, and best practices.

Official AWS Resources: AWS provides extensive online documentation, whitepapers, and tutorials. Their website is a goldmine of information for those looking to learn more about specific AWS services and how to use them effectively.

Amazon Web Services (AWS) represents an exciting frontier in the realm of cloud computing. As businesses and individuals increasingly rely on the cloud for innovation and scalability, AWS stands as a pivotal platform. The journey to AWS proficiency involves grasping fundamental cloud concepts, exploring core services, obtaining certifications, and acquiring practical experience. To expedite this process, online courses, tutorials, and structured training from renowned institutions like ACTE Technologies can be invaluable. ACTE Technologies' comprehensive AWS training programs provide hands-on experience, making your quest to master AWS more efficient and positioning you for a successful career in cloud technology.


Navigating AWS: A Comprehensive Guide for Beginners

In the ever-evolving landscape of cloud computing, Amazon Web Services (AWS) has emerged as a powerhouse, providing a wide array of services to businesses and individuals globally. Whether you're a seasoned IT professional or just starting your journey into the cloud, understanding the key aspects of AWS is crucial. With AWS Training in Hyderabad, professionals can gain the skills and knowledge needed to harness the capabilities of AWS for diverse applications and industries. This blog will serve as your comprehensive guide, covering the essential concepts and knowledge needed to navigate AWS effectively.

1. The Foundation: Cloud Computing Basics

Before delving into AWS specifics, it's essential to grasp the fundamentals of cloud computing. Cloud computing is a paradigm that offers on-demand access to a variety of computing resources, including servers, storage, databases, networking, analytics, and more. AWS, as a leading cloud service provider, allows users to leverage these resources seamlessly.

2. Setting Up Your AWS Account

The first step on your AWS journey is to create an AWS account. Navigate to the AWS website, provide the necessary information, and set up your payment method. This account will serve as your gateway to the vast array of AWS services.

3. Navigating the AWS Management Console

Once your account is set up, familiarize yourself with the AWS Management Console. This web-based interface is where you'll configure, manage, and monitor your AWS resources. It's the control center for your cloud environment.

4. AWS Global Infrastructure: Regions and Availability Zones

AWS operates globally, and its infrastructure is distributed across regions and availability zones. Understand the concept of regions (geographic locations) and availability zones (one or more isolated data centers within a region). This distribution provides redundancy and high availability.

5. Identity and Access Management (IAM)

Security is paramount in the cloud. AWS Identity and Access Management (IAM) enables you to manage user access securely. Learn how to control who can access your AWS resources and what actions they can perform.
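To show what "who can access what" looks like in practice, here is a small sketch that builds an IAM policy document as a Python dictionary and serializes it to JSON. The bucket name is a hypothetical placeholder, and the policy grants read-only access to that one bucket as an example of least privilege.

```python
import json


def read_only_bucket_policy(bucket_name):
    """Build an IAM policy document allowing list and read access to one S3 bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                # Listing applies to the bucket itself.
                "Resource": f"arn:aws:s3:::{bucket_name}",
            },
            {
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                # Reading applies to the objects inside the bucket.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
        ],
    }


# "example-bucket" is a placeholder name.
policy_json = json.dumps(read_only_bucket_policy("example-bucket"), indent=2)
print(policy_json)
```

The resulting JSON can be pasted into the policy editor in the AWS Management Console and attached to a user, group, or role.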

6. Key AWS Services Overview

Explore fundamental AWS services:

Amazon EC2 (Elastic Compute Cloud): Virtual servers in the cloud.

Amazon S3 (Simple Storage Service): Scalable object storage.

Amazon RDS (Relational Database Service): Managed relational databases.

7. Compute Services in AWS

Understand the various compute services:

EC2 Instances: Virtual servers for computing capacity.

AWS Lambda: Serverless computing for executing code without managing servers.

Elastic Beanstalk: Platform as a Service (PaaS) for deploying and managing applications.

8. Storage Options in AWS

Explore storage services:

Amazon S3: Object storage for scalable and durable data.

EBS (Elastic Block Store): Block storage for EC2 instances.

Amazon S3 Glacier: Low-cost storage for data archiving.

To master the intricacies of AWS and unlock its full potential, individuals can benefit from enrolling in the Top AWS Training Institute.

9. Database Services in AWS

Learn about managed database services:

Amazon RDS: Managed relational databases.

DynamoDB: NoSQL database for fast and predictable performance.

Amazon Redshift: Data warehousing for analytics.

10. Networking Concepts in AWS

Grasp networking concepts:

Virtual Private Cloud (VPC): Isolated cloud networks.

Route 53: Domain registration and DNS web service.

CloudFront: Content delivery network for faster and secure content delivery.

11. Security Best Practices in AWS

Implement security best practices:

Encryption: Ensure data security in transit and at rest.

IAM Policies: Control access to AWS resources.

Security Groups and Network ACLs: Manage traffic to and from instances.

12. Monitoring and Logging with AWS CloudWatch and CloudTrail

Set up monitoring and logging:

CloudWatch: Monitor AWS resources and applications.

CloudTrail: Log AWS API calls for audit and compliance.

13. Cost Management and Optimization

Understand AWS pricing models and manage costs effectively:

AWS Cost Explorer: Analyze and control spending.

14. Documentation and Continuous Learning

Refer to the extensive AWS documentation, tutorials, and online courses. Stay updated on new features and best practices through forums and communities.

15. Hands-On Practice

The best way to solidify your understanding is through hands-on practice. Create test environments, deploy sample applications, and experiment with different AWS services.

In conclusion, AWS is a dynamic and powerful ecosystem that continues to shape the future of cloud computing. By mastering the foundational concepts and key services outlined in this guide, you'll be well-equipped to navigate AWS confidently and leverage its capabilities for your projects and initiatives. As you embark on your AWS journey, remember that continuous learning and practical application are key to becoming proficient in this ever-evolving cloud environment.


Storing images in MySQL DB - explanation + Uploadthing example/tutorial

(Scroll down for an uploadthing with custom components tutorial)

My latest project is a photo editing web application (Next.js) so I needed to figure out how to best store images to my database. MySQL databases cannot store files directly, though they can store them as blobs (binary large objects). Another way is to store images on separate file storage (e.g. Amazon S3) outside your database, and then just store the URL path in your db.

Why didn't I choose to store images with blobs?

Well, I've seen a lot of discussions on the internet about whether it is better to store images as blobs in your database, or to have them on a filesystem. In short, storing images as blobs is a good choice if you are storing small images and a smaller number of images. It is safer than storing them in a separate filesystem since databases can be backed up more easily, and since everything is in the same database, the integrity of the data is secured by the database itself (for example, if you delete an image from a filesystem, your database will not know since it only holds the path of the image). But I ultimately chose uploading images to a filesystem because I wanted to store high quality images without worrying about performance or database constraints. MySQL has a variety of constraints on data sizes which I would have to override, and operations with blobs are harder/more costly for the database.
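Here is a tiny sketch of the two approaches side by side. It uses Python's built-in sqlite3 module as a stand-in for MySQL so it runs without a server; the table names, the fake image bytes, and the URL are all made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory stand-in for a MySQL database
conn.execute("CREATE TABLE images_blob (id INTEGER PRIMARY KEY, name TEXT, data BLOB)")
conn.execute("CREATE TABLE images_url (id INTEGER PRIMARY KEY, name TEXT, url TEXT)")

# Fake image content; a real photo would be megabytes, which is where blobs get costly.
image_bytes = bytes(range(256)) * 4

# Approach 1: the whole file lives in the database row.
conn.execute("INSERT INTO images_blob (name, data) VALUES (?, ?)", ("cat.png", image_bytes))

# Approach 2: the file lives elsewhere and the row only stores where to find it.
image_url = "https://example.com/uploads/cat.png"  # hypothetical URL
conn.execute("INSERT INTO images_url (name, url) VALUES (?, ?)", ("cat.png", image_url))

stored_bytes = conn.execute("SELECT data FROM images_blob WHERE name = ?", ("cat.png",)).fetchone()[0]
stored_url = conn.execute("SELECT url FROM images_url WHERE name = ?", ("cat.png",)).fetchone()[0]
print(len(stored_bytes), stored_url)
```

The blob row grows with the image, while the URL row stays tiny no matter how big the image is. The trade-off is that nothing stops the file behind the URL from disappearing without the database noticing.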

Was it hard to set up?

Apparently, hosting images on a separate filesystem is kinda complicated? Like with S3? Or so I've heard, never tried to do it myself XD BECAUSE RECENTLY ANOTHER EASIER SOLUTION FOR IT WAS PUBLISHED LOL. It's called uploadthing!!!

What is uploadthing and how to use it?

Uploadthing has its own server API to which you (the client) post your file. The file is then sent to S3 to get stored, and after it is stored S3 returns the file's URL, which then goes through uploadthing servers back to the client. After that you can store that URL in your own database.

Here is the graph I vividly remember taking from uploadthing github about a month ago, but can't find on there now XD It's just a graphic version of my basic explanation above.

The setup is very easy, you can just follow the docs which are very straightforward and easy to follow, except for one detail. They show you how to set up uploadthing with uploadthing's own frontend components like <UploadButton>. Since I already made my own custom components, I needed to add a few more lines of code to implement it.

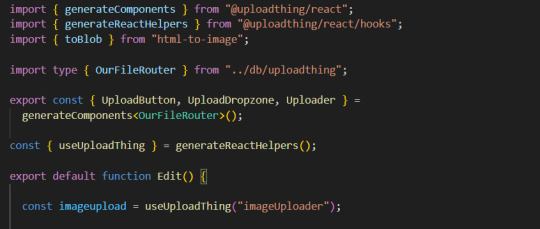

Uploadthing for custom components tutorial

1. Imports

You will need to add an additional import generateReactHelpers (so you can use uploadthing functions without uploadthing components) and call it as shown below

2. For this example I wanted to save an edited image after clicking on the save button.

In this case, before calling the uploadthing API, I had to create a file and a blob (not to be confused with a MySQL blob) because it is actually an edited picture taken from canvas, not just an uploaded picture, therefore it's missing some info an uploaded image would usually have (name, format etc.). If you are storing an uploaded/already existing picture, this step is unnecessary. After uploading the file to uploadthing's API, I get its returned URL and send it to my database.

You can find the entire project here. It also has an example of uploading multiple files in pages/create.tsx

I'm still learning about backend so any advice would be appreciated. Writing about this actually reminded me of how much I'm interested in learning about backend optimization c: Also I hope the post is not too hard to follow, it was really hard to condense all of this information into one post ;_;

#codeblr#studyblr#webdevelopment#backend#nextjs#mysql#database#nodejs#programming#progblr#uploadthing


What is Merch Dominator?

Merch Dominator is a software tool designed for Amazon sellers who create and sell merchandise through Amazon's print-on-demand service, Merch by Amazon. It helps sellers in conducting market research, product analysis, and keyword research to optimize their merch listings and increase their sales. The tool provides valuable data such as sales numbers, pricing trends, competition analysis, and popular keywords to help sellers identify profitable niches and make informed decisions.

How to use Merch Dominator?

1. Sign up and Set up Account: Go to the Merch Dominator website and sign up for an account. Once you have registered, you will need to set up your account by providing relevant details such as your Amazon Merch API credentials.

2. Keyword Research: Use the Keyword Research feature of Merch Dominator to find profitable niches and keywords. This will help you identify popular topics and trends that can potentially lead to successful designs.

3. Design Creation: Create innovative and appealing designs based on the keywords and niches you have identified using the Design Creation tool. Merch Dominator provides various templates, clip arts, fonts, and design elements to help you craft attractive designs.

4. Upload Designs to Amazon Merch: Once you have finalized your designs, use Merch Dominator to bulk upload them to your Amazon Merch account. This feature saves a significant amount of time and effort by automating the upload process.

5. Manage and Track Listings: Track and manage your listings efficiently using the Listings Manager provided by Merch Dominator. You can monitor sales, rankings, and inventory levels of your products. Additionally, you can adjust prices and update listings when needed.

6. Analytics and Optimization: Utilize the powerful analytics tools offered by Merch Dominator to analyze the performance of your designs. This includes monitoring sales, revenue, and customer feedback. Make data-driven decisions to optimize your product offerings for better results.

7. Research Competitors: Gain insights into your competition by exploring the Competition Research feature of Merch Dominator. This helps you identify successful sellers, evaluate their strategies, and capitalize on emerging opportunities.

8. Automate and Schedule: Automate routine tasks using the Automation and Scheduler features offered by Merch Dominator. This includes tasks like design creation, keyword research, and listing management. Scheduling allows you to set specific times for these tasks to execute automatically.

Remember, Merch Dominator is a tool meant to enhance your Amazon Merch business. To succeed, it is also important to stay updated with the latest market trends, maintain quality designs, and provide excellent customer service.

Conclusion:

Merch Dominator is an incredibly powerful and effective tool for anyone seeking success in the world of merchandising. Its exceptional features, such as market analysis, keyword optimization, and product tracking, provide users with invaluable insights and strategies to maximize their sales and profits. With Merch Dominator, users can easily identify trending products, stay ahead of their competition, and make informed decisions to drive their business forward. Whether you're a seasoned merchandiser or just starting out, Merch Dominator equips you with the right tools to dominate the merchandising game. So, if you're looking to take your merchandising business to new heights, Merch Dominator is definitely the go-to solution for you.


Pass AWS SAP-C02 Exam in First Attempt

Crack the AWS Certified Solutions Architect - Professional (SAP-C02) exam on your first try with real exam questions, expert tips, and the best study resources from JobExamPrep and Clearcatnet.

How to Pass AWS SAP-C02 Exam in First Attempt: Real Exam Questions & Tips

Are you aiming to pass the AWS Certified Solutions Architect – Professional (SAP-C02) exam on your first try? You’re not alone. With the right strategy, real exam questions, and trusted study resources like JobExamPrep and Clearcatnet, you can achieve your certification goals faster and more confidently.

Overview of SAP-C02 Exam

The SAP-C02 exam validates your advanced technical skills and experience in designing distributed applications and systems on AWS. Key domains include:

Design Solutions for Organizational Complexity

Design for New Solutions

Continuous Improvement for Existing Solutions

Accelerate Workload Migration and Modernization

Exam Format:

Number of Questions: 75

Type: Multiple choice, multiple response

Duration: 180 minutes

Passing Score: Approx. 750/1000

Cost: $300

AWS SAP-C02 Real Exam Questions (Real Set)

Here are 5 real-exam style questions to give you a feel for the exam difficulty and topics:

Q1: A company is migrating its on-premises Oracle database to Amazon RDS. The solution must minimize downtime and data loss. Which strategy is BEST?

A. AWS Database Migration Service (DMS) with full load only

B. RDS snapshot and restore

C. DMS with CDC (change data capture)

D. Export and import via S3

Answer: C. DMS with CDC

Q2: You are designing a solution that spans multiple AWS accounts and VPCs. Which AWS service allows seamless inter-VPC communication?

A. VPC Peering

B. AWS Direct Connect

C. AWS Transit Gateway

D. NAT Gateway

Answer: C. AWS Transit Gateway

Q3: Which strategy enhances resiliency in a serverless architecture using Lambda and API Gateway?

A. Use a single Availability Zone

B. Enable retries and DLQs (Dead Letter Queues)

C. Store state in Lambda memory

D. Disable logging

Answer: B. Enable retries and DLQs

Q4: A company needs to archive petabytes of data with occasional access within 12 hours. Which storage class should you use?

A. S3 Standard

B. S3 Intelligent-Tiering

C. S3 Glacier

D. S3 Glacier Deep Archive

Answer: D. S3 Glacier Deep Archive

Q5: You are designing a disaster recovery (DR) solution for a high-priority application. The RTO is 15 minutes, and RPO is near zero. What is the most appropriate strategy?

A. Pilot Light

B. Backup & Restore

C. Warm Standby

D. Multi-Site Active-Active

Answer: D. Multi-Site Active-Active

Recommended Resources to Pass SAP-C02 in First Attempt

To master these types of questions and scenarios, rely on real-world tested resources. We recommend:

✅ JobExamPrep

A premium platform offering curated practice exams, scenario-based questions, and up-to-date study materials specifically for AWS certifications. Thousands of professionals trust JobExamPrep for structured and realistic exam practice.

✅ Clearcatnet

A specialized site focused on cloud certification content, especially AWS, Azure, and Google Cloud. Their SAP-C02 study guide and video explanations are ideal for deep conceptual clarity.

Expert Tips to Pass the AWS SAP-C02 Exam

Master Whitepapers – Read AWS Well-Architected Framework, Disaster Recovery, and Security best practices.

Practice Scenario-Based Questions – Focus on use cases involving multi-account setups, migration, and DR.

Use Flashcards – Especially for services like AWS Control Tower, Service Catalog, Transit Gateway, and DMS.

Daily Review Sessions – Use JobExamPrep and Clearcatnet quizzes every day.

Mock Exams – Simulate the exam environment at least twice before the real test.

🎓 Final Thoughts

The AWS SAP-C02 exam is tough—but with the right approach, you can absolutely pass it on the first attempt. Study smart, practice real exam questions, and leverage resources like JobExamPrep and Clearcatnet to build both confidence and competence.

#SAPC02#AWSSAPC02#AWSSolutionsArchitect#AWSSolutionsArchitectProfessional#AWSCertifiedSolutionsArchitect#SolutionsArchitectProfessional#AWSArchitect#AWSExam#AWSPrep#AWSStudy#AWSCertified#AWS#AmazonWebServices#CloudCertification#TechCertification#CertificationJourney#CloudComputing#CloudEngineer#ITCertification

0 notes

Text

Creating and Configuring Production ROSA Clusters (CS220) – A Practical Guide

Introduction

Red Hat OpenShift Service on AWS (ROSA) is a powerful managed Kubernetes solution that blends the scalability of AWS with the developer-centric features of OpenShift. Whether you're modernizing applications or building cloud-native architectures, ROSA provides a production-grade container platform with integrated support from Red Hat and AWS. In this blog post, we’ll walk through the essential steps covered in CS220: Creating and Configuring Production ROSA Clusters, an instructor-led course designed for DevOps professionals and cloud architects.

What is CS220?

CS220 is a hands-on, lab-driven course developed by Red Hat that teaches IT teams how to deploy, configure, and manage ROSA clusters in a production environment. It is tailored for organizations that are serious about leveraging OpenShift at scale with the operational convenience of a fully managed service.

Why ROSA for Production?

Deploying OpenShift through ROSA offers multiple benefits:

Streamlined Deployment: Fully managed clusters provisioned in minutes.

Integrated Security: AWS IAM, STS, and OpenShift RBAC policies combined.

Scalability: Elastic and cost-efficient scaling with built-in monitoring and logging.

Support: Joint support model between AWS and Red Hat.

Key Concepts Covered in CS220

Here’s a breakdown of the main learning outcomes from the CS220 course:

1. Provisioning ROSA Clusters

Participants learn how to:

Set up required AWS permissions and networking pre-requisites.

Deploy clusters using Red Hat OpenShift Cluster Manager (OCM) or CLI tools like rosa and oc.

Use short-term credentials from AWS Security Token Service (STS) for secure cluster access.

2. Configuring Identity Providers

Learn how to integrate Identity Providers (IdPs) such as:

GitHub, Google, LDAP, or corporate IdPs using OpenID Connect.

Configure secure, role-based access control (RBAC) for teams.

3. Networking and Security Best Practices

Implement private clusters with public or private load balancers.

Enable end-to-end encryption for APIs and services.

Use Security Context Constraints (SCCs) and network policies for workload isolation.

4. Storage and Data Management

Configure dynamic storage provisioning with AWS EBS, EFS, or external CSI drivers.

Learn persistent volume (PV) and persistent volume claim (PVC) lifecycle management.

5. Cluster Monitoring and Logging

Integrate OpenShift Monitoring Stack for health and performance insights.

Forward logs to Amazon CloudWatch, Elasticsearch, or third-party SIEM tools.

6. Cluster Scaling and Updates

Set up autoscaling for compute nodes.

Perform controlled updates and understand ROSA’s maintenance policies.

Use Cases for ROSA in Production

Modernizing Monoliths to Microservices

CI/CD Platform for Agile Development

Data Science and ML Workflows with OpenShift AI

Edge Computing with OpenShift on AWS Outposts

Getting Started with CS220

The CS220 course is ideal for:

DevOps Engineers

Cloud Architects

Platform Engineers

Prerequisites: Basic knowledge of OpenShift administration (recommended: DO280 or equivalent experience) and a working AWS account.

Course Format: Instructor-led (virtual or on-site), hands-on labs, and guided projects.

Final Thoughts

As more enterprises adopt hybrid and multi-cloud strategies, ROSA emerges as a strategic choice for running OpenShift on AWS with minimal operational overhead. CS220 equips your team with the right skills to confidently deploy, configure, and manage production-grade ROSA clusters — unlocking agility, security, and innovation in your cloud-native journey.

Want to Learn More or Book the CS220 Course? At HawkStack Technologies, we offer certified Red Hat training, including CS220, tailored for teams and enterprises. Contact us today to schedule a session or explore our Red Hat Learning Subscription packages. www.hawkstack.com

0 notes

Text

Discover the different types of data sets available through Amazon's APIs and explore their various applications. Learn how these APIs can enhance your business insights and drive innovation.


0 notes

Text

What are the benefits of Amazon EMR? Drawbacks of AWS EMR

Benefits of Amazon EMR

Amazon EMR has many benefits. These include the flexibility of AWS and cost savings compared with building your own on-premises resources.

Cost-saving

Amazon EMR pricing depends on the instance type, the number of Amazon EC2 instances you deploy, and the Region in which you launch your cluster. On-Demand pricing is low, but you can reduce costs further with Reserved Instances or Spot Instances. Spot Instances can cost as little as a tenth of the On-Demand price in some cases.
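A quick back-of-the-envelope calculation shows what a tenth of the On-Demand price means for a cluster. The hourly price, node count, and duration below are hypothetical, and the sketch covers only the EC2 instance charge, not the separate Amazon EMR charge or any storage costs.

```python
def cluster_cost(instance_count, hours, hourly_price):
    """Total instance cost in USD for a cluster running a fixed number of hours."""
    return instance_count * hours * hourly_price


# Hypothetical prices: real prices vary by instance type and Region.
ON_DEMAND_PRICE = 0.20             # USD per instance-hour (assumed)
SPOT_PRICE = ON_DEMAND_PRICE / 10  # the best case mentioned in the text

on_demand_total = cluster_cost(10, 24, ON_DEMAND_PRICE)
spot_total = cluster_cost(10, 24, SPOT_PRICE)
savings = on_demand_total - spot_total

print(f"On-Demand: ${on_demand_total:.2f}")
print(f"Spot:      ${spot_total:.2f}")
print(f"Savings:   ${savings:.2f}")
```

Spot prices fluctuate and Spot capacity can be reclaimed, so the one-tenth figure is a best case rather than a guarantee.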

Note

Using Amazon S3, Kinesis, or DynamoDB with your EMR cluster incurs expenses irrespective of Amazon EMR usage.

Note

Set up Amazon S3 VPC endpoints when you create an Amazon EMR cluster in a private subnet. If your EMR cluster is in a private subnet without Amazon S3 VPC endpoints, you will incur additional NAT gateway charges for the S3 traffic.

AWS integration

Amazon EMR integrates with other AWS services for cluster networking, storage, security, and more. The following list shows several examples of this integration:

Amazon EC2 for the instances that make up the cluster nodes

Amazon VPC to configure the virtual network in which your instances launch

Amazon S3 to store input and output data

Amazon CloudWatch to monitor cluster performance and set alarms

AWS IAM to configure permissions

AWS CloudTrail to audit requests made to the service

AWS Data Pipeline to schedule and launch clusters

AWS Lake Formation to discover, catalogue, and secure data in an Amazon S3 data lake

Deployment

The EC2 instances in your EMR cluster do the work you submit. When you launch your cluster, Amazon EMR configures the instances with the applications you choose, such as Apache Hadoop or Spark. Choose the instance size and type that best suits your cluster's processing needs: batch processing, low-latency queries, streaming data, or large data storage.

Amazon EMR offers several ways to set up software on your cluster. For example, you can install an Amazon EMR release with a choice of applications that can include versatile frameworks such as Hadoop, and applications such as Hive, Pig, or Spark. Installing a MapR distribution is another option. Since Amazon EMR runs on Amazon Linux, you can also install software on your cluster manually using yum or from source code.

Flexibility and scalability

Amazon EMR lets you scale your cluster as your computing needs vary. Resizing your cluster lets you add instances during peak workloads and remove them to cut costs.

Amazon EMR supports multiple instance groups. This lets you employ Spot Instances in one group to perform jobs faster and cheaper and On-Demand Instances in another for guaranteed processing power. Multiple Spot Instance types might be mixed to take advantage of a better price.
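The mix of On-Demand and Spot groups described above could be expressed as follows. This sketch builds an instance group list in the shape used by the `InstanceGroups` field of the boto3 EMR `run_job_flow` call; the group names, instance type, and counts are assumptions, and nothing is launched.

```python
def instance_groups(core_count, task_count, instance_type="m5.xlarge"):
    """Return EMR instance groups: On-Demand primary and core, Spot task nodes."""
    return [
        {
            "Name": "primary",
            "InstanceRole": "MASTER",  # API name for the primary node role
            "Market": "ON_DEMAND",
            "InstanceType": instance_type,
            "InstanceCount": 1,
        },
        {
            "Name": "core-on-demand",
            "InstanceRole": "CORE",
            # Core nodes hold HDFS data, so keep them on guaranteed capacity.
            "Market": "ON_DEMAND",
            "InstanceType": instance_type,
            "InstanceCount": core_count,
        },
        {
            "Name": "task-spot",
            "InstanceRole": "TASK",
            # Task nodes only add compute, so losing a Spot node costs no data.
            "Market": "SPOT",
            "InstanceType": instance_type,
            "InstanceCount": task_count,
        },
    ]


groups = instance_groups(core_count=2, task_count=6)
spot_nodes = sum(g["InstanceCount"] for g in groups if g["Market"] == "SPOT")
```

Putting Spot capacity in the task group is a common choice because an interruption there slows a job down without removing stored data.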

Amazon EMR lets you use several file systems for input, output, and intermediate data. For example, HDFS on your cluster's primary and core nodes is a good fit for data you don't need to keep beyond the cluster's lifecycle.

With the EMR File System (EMRFS), applications on your cluster can use Amazon S3 as a data layer, which decouples compute from storage and keeps data beyond your cluster's lifespan. EMRFS lets you scale compute and storage independently: you resize the cluster to meet processing needs while Amazon S3 grows to meet storage needs.

Reliability

Amazon EMR monitors cluster nodes and shuts down and replaces instances as needed.

Amazon EMR lets you configure a cluster to terminate automatically or manually. A cluster set to terminate automatically shuts down after all steps complete. This is called a transient cluster. Alternatively, you can configure the cluster to keep running after processing completes and terminate it manually when you no longer need it, or you can create a cluster, work directly with the installed applications, and then terminate it manually. These clusters are “long-running clusters.”

Termination protection prevents the instances in your cluster from being terminated because of errors or issues during processing. With termination protection enabled, you can retrieve data from instances before they are terminated. The default settings for these options differ depending on whether you launch your cluster from the console, the CLI, or the API.
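The two cluster lifecycles and termination protection map onto two boolean settings. The sketch below returns them under the key names used inside the `Instances` argument of the boto3 EMR `run_job_flow` call; the helper function itself is hypothetical.

```python
def lifecycle_settings(long_running, termination_protected=False):
    """Return the lifecycle-related fields of an EMR `Instances` configuration."""
    return {
        # False: the cluster terminates once all steps finish (transient).
        # True: the cluster keeps running until terminated manually.
        "KeepJobFlowAliveWhenNoSteps": long_running,
        # True: instances cannot be terminated until protection is turned off.
        "TerminationProtected": termination_protected,
    }


transient = lifecycle_settings(long_running=False)
protected_long_running = lifecycle_settings(long_running=True, termination_protected=True)
```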

Security

Amazon EMR uses Amazon EC2 key pairs, IAM, and VPC to safeguard data and clusters.

IAM

Amazon EMR uses IAM to manage permissions. You define permissions in IAM policies, which you attach to users or groups. The permissions in a policy determine which actions those users or groups can perform and which resources they can access.

Amazon EMR also uses an IAM role for the EMR service itself and an EC2 instance profile for the instances. These roles allow the service and the instances to access other AWS services on your behalf. Both the Amazon EMR service and the EC2 instance profile have default roles. The default roles use AWS managed policies, which are created automatically the first time you launch an EMR cluster from the console and select default permissions. You can also create the default IAM roles from the AWS CLI. If you want to manage permissions yourself instead of relying on the AWS defaults, you can create custom roles for the service and the instance profile.

Security groups

Amazon EMR uses security groups to control inbound and outbound traffic to your EC2 instances. When you launch a cluster, Amazon EMR uses one security group for your primary instance and another security group shared by your core and task instances. Amazon EMR configures the security group rules so that the instances in the cluster can communicate with one another. You can attach additional security groups to your primary and core/task instances for more advanced rules.

Encryption

Amazon EMR supports optional Amazon S3 server-side and client-side encryption with EMRFS to protect the data you store in Amazon S3. With server-side encryption, Amazon S3 encrypts your data after you upload it.

With client-side encryption, encryption and decryption happen in the EMRFS client on your EMR cluster. You can manage the root key for client-side encryption with AWS KMS or with your own key management system.

Amazon VPC

Amazon EMR launches clusters in an Amazon VPC. A VPC is an isolated virtual network in AWS that lets you control advanced aspects of network configuration and access.

AWS CloudTrail

Amazon EMR integrates with CloudTrail to log requests made by or on behalf of your AWS account. This data shows who accesses your cluster, when, and from what IP address.

Amazon EC2 key pairs

A secure link between the primary node and your remote computer lets you monitor and communicate with your cluster. SSH or Kerberos can authenticate this connection. SSH requires an Amazon EC2 key pair.

Monitoring

Debug cluster issues like faults or failures utilising log files and Amazon EMR management interfaces. Amazon EMR can archive log files on Amazon S3 to save records and solve problems after your cluster ends. The Amazon EMR UI also has a task, job, and step-specific debugging tool for log files.

Amazon EMR integrates with CloudWatch for cluster and job performance monitoring. You can set alarms based on metrics such as whether the cluster is idle or the percentage of storage used.
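An idle-cluster alarm of the kind mentioned above might be defined like this. The sketch builds arguments in the shape accepted by the boto3 CloudWatch `put_metric_alarm` call, using the `IsIdle` metric in the `AWS/ElasticMapReduce` namespace. The cluster ID, alarm name, and 30-minute window are assumptions, and no alarm is created.

```python
def idle_cluster_alarm(cluster_id, idle_minutes=30):
    """Return CloudWatch alarm arguments that fire when an EMR cluster stays idle."""
    period_seconds = 300  # evaluate the metric in five-minute periods
    return {
        "AlarmName": f"emr-idle-{cluster_id}",
        "Namespace": "AWS/ElasticMapReduce",
        "MetricName": "IsIdle",
        "Dimensions": [{"Name": "JobFlowId", "Value": cluster_id}],
        "Statistic": "Average",
        "Period": period_seconds,
        # Number of consecutive periods the cluster must be idle.
        "EvaluationPeriods": max(1, (idle_minutes * 60) // period_seconds),
        # IsIdle reports 1 when no tasks or jobs are running.
        "Threshold": 1.0,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
    }


alarm = idle_cluster_alarm("j-EXAMPLE12345")  # hypothetical cluster ID
```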

Management interfaces

There are numerous Amazon EMR access methods:

The console provides a graphical interface for launching and managing clusters. Using web forms, you can specify the details of clusters to launch, view the details of existing clusters, and debug and terminate clusters. The console is the easiest way to start using Amazon EMR and requires no programming.

Installing the AWS Command Line Interface (AWS CLI) on your computer lets you connect to Amazon EMR and create and manage clusters. The AWS CLI is a general-purpose tool that includes Amazon EMR-specific commands. You can write scripts that automate launching and managing clusters. If you prefer working from the command line, use the AWS CLI.

The SDKs provide functions that call Amazon EMR to create and manage clusters. They let you build systems that automate cluster creation and management, and they are the best option for extending or customising Amazon EMR. SDKs are available for Go, Java, .NET (C# and VB.NET), Node.js, PHP, Python, and Ruby.

The Web Service API is a low-level interface that lets you call the web service directly using JSON. It is the best choice for building a custom SDK that calls Amazon EMR.

Drawbacks of AWS EMR

Complexity:

EMR cluster setup and maintenance are more involved than with AWS Glue and require framework knowledge.

Learning curve

Setting up and optimising EMR clusters may require adjusting settings and parameters.

Possible Performance Issues:

Poorly chosen instance types or under-provisioned clusters can slow task execution and degrade overall performance.

Depends on AWS:

Due to its deep interaction with AWS infrastructure, EMR is less portable than on-premise solutions despite cloud flexibility.

#AmazonEMR#AmazonEC2#AmazonS3#AmazonVirtualPrivateCloud#EMRFS#AmazonEMRservice#Technology#technews#NEWS#technologynews#govindhtech

0 notes

Text

Recommendation Engine nulled plugin 3.4.4

Unlock Powerful Storefront Personalization with the Recommendation Engine nulled plugin

Looking to skyrocket your WooCommerce conversions without breaking the bank? The Recommendation Engine nulled plugin is your ultimate solution for delivering personalized shopping experiences that keep customers coming back. This premium tool, available for free on our website, empowers your WooCommerce store with intelligent product suggestions based on customer behavior, search trends, and purchase history.

What Is the Recommendation Engine nulled plugin?

The Recommendation Engine nulled plugin is a powerful WooCommerce extension designed to display smart product recommendations such as “Frequently Bought Together,” “Customers Also Viewed,” and “Related Items.” With this plugin, you can effortlessly boost your average order value and enhance user experience by showing customers exactly what they’re likely to buy next.

Technical Specifications

Plugin Name: Recommendation Engine

Version: Latest nulled version available

Compatibility: WooCommerce 4.0+ / WordPress 5.0+

PHP Requirements: PHP 7.4 or higher

License: GPL (Nulled for free distribution)

Outstanding Features and Benefits

Behavior-Based Suggestions: Display product recommendations based on what customers have viewed, purchased, or added to their carts.

Boost Conversion Rates: Encourage upsells and cross-sells that feel organic and relevant to the shopper’s journey.

Seamless Integration: Integrates directly into your WooCommerce shop with no need for external APIs or custom coding.

Speed Optimized: Lightweight code ensures your site speed remains top-notch.

Customizable Output: Easily control how and where product suggestions appear on product, cart, and checkout pages.

Why Use the Recommendation Engine nulled plugin?

Retail giants like Amazon thrive on personalized recommendations, and now, with the Recommendation Engine nulled plugin, your WooCommerce store can too. By analyzing customer behavior, this tool automates the suggestion process, saving you time and increasing profitability. No more guesswork, just intelligent, data-driven sales strategies implemented effortlessly.

Common Use Cases

Offer dynamic upsells on product pages

Show cross-sells on the cart or checkout page

Encourage repeat purchases by showing previously bought or viewed items

Personalize marketing strategies through user preference insights

How to Install and Use the Plugin

Installing the Recommendation Engine nulled plugin is as easy as it gets. Just follow these simple steps:

Download the plugin zip file from our website.

Log in to your WordPress admin dashboard.

Navigate to Plugins > Add New and click Upload Plugin.

Select the downloaded zip file and click Install Now.

Activate the plugin and access its settings via the WooCommerce menu.

FAQs: Recommendation Engine nulled plugin

Is it safe to use the Recommendation Engine nulled plugin?

Yes, the version we offer is thoroughly scanned and tested to ensure it's safe and fully functional. It allows you to enjoy premium features without licensing limitations.

Can I use it with other WooCommerce extensions?

Absolutely! This plugin is built to integrate smoothly with your existing WooCommerce ecosystem, including themes and extensions like avada nulled.

Where can I get SEO support for better performance?

To improve your WooCommerce SEO strategy alongside product recommendation, consider tools like Yoast seo nulled, another excellent addition to your marketing toolkit.

Does this plugin slow down my site?

Not at all. The Recommendation Engine nulled plugin is optimized for performance and won’t compromise your website speed or user experience.

Conclusion: Get the Recommendation Engine nulled plugin Today

Enhance your WooCommerce store’s performance with the Recommendation Engine. Download it now from our site for free and start delivering smarter shopping experiences to your customers.
Let your store do the upselling for you—no

0 notes

Text

Magento 2 Marketplace Integration with Amazon and eBay

Integrating your Magento 2 store with Amazon and eBay helps you sell products on multiple platforms with ease. It keeps your product listings, inventory, and orders in sync automatically, saving you time and reducing errors. When you update a product in Magento, it changes on Amazon and eBay too.

There are two main ways to integrate: using ready-made extensions or creating a custom solution with APIs. Extensions are quick and easy to set up—great for small to mid-sized businesses. Custom APIs give more control and flexibility, ideal for larger stores with specific needs.

Integration brings big benefits: real-time updates, automatic order management, better customer reach, and smarter business decisions from centralized sales data.

To ensure success, follow best practices like keeping your product data consistent, automating workflows, testing regularly, and staying updated with changes from Amazon and eBay.

Overall, Magento 2 marketplace integration is a smart step to grow your online business and simplify operations.

#Magento2#eCommerceIntegration#AmazonSeller#eBaySeller#MultiChannelSelling#InventoryManagement#OnlineBusiness#APIs#Automation#DigitalCommerce

0 notes

Text

Anton R Gordon on Securing AI Infrastructure with Zero Trust Architecture in AWS

As artificial intelligence becomes more deeply embedded into enterprise operations, the need for robust, secure infrastructure is paramount. AI systems are no longer isolated R&D experiments — they are core components of customer experiences, decision-making engines, and operational pipelines. Anton R Gordon, a renowned AI Architect and Cloud Security Specialist, advocates for implementing Zero Trust Architecture (ZTA) as a foundational principle in securing AI infrastructure, especially within the AWS cloud environment.

Why Zero Trust for AI?

Traditional security models operate under the assumption that anything inside a network is trustworthy. In today’s cloud-native world — where AI workloads span services, accounts, and geographical regions — this assumption can leave systems dangerously exposed.

“AI workloads often involve sensitive data, proprietary models, and critical decision-making processes,” says Anton R Gordon. “Applying Zero Trust principles means that every access request is verified, every identity is authenticated, and no implicit trust is granted — ever.”

Zero Trust is particularly crucial for AI environments because these systems are not static. They evolve, retrain, ingest new data, and interact with third-party APIs, all of which increase the attack surface.

Anton R Gordon’s Zero Trust Blueprint in AWS

Anton R Gordon’s approach to securing AI systems with Zero Trust in AWS involves a layered strategy that blends identity enforcement, network segmentation, encryption, and real-time monitoring.

1. Enforcing Identity at Every Layer

At the core of Gordon’s framework is strict IAM (Identity and Access Management). He ensures all users, services, and applications assume the least privilege by default. Using IAM roles and policies, he tightly controls access to services like Amazon SageMaker, S3, Lambda, and Bedrock.
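As an illustration of least privilege, the sketch below generates an IAM policy document that allows read-only access to a single prefix of one S3 bucket and nothing else. The bucket name and prefix are hypothetical, and this is one possible shape for such a policy rather than the policy used in the approach described above.

```python
import json


def read_only_prefix_policy(bucket, prefix):
    """Return an IAM policy allowing list and read access to one S3 prefix only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListPrefixOnly",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                # Listing is limited to keys under the given prefix.
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}},
            },
            {
                "Sid": "ReadObjectsOnly",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


# Hypothetical bucket and prefix for a training job's input data.
policy = read_only_prefix_policy("example-training-data", "datasets/v1/")
policy_json = json.dumps(policy, indent=2)
```

Because IAM denies anything not explicitly allowed, a role holding only this policy cannot write, delete, or read outside the prefix.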

Gordon also integrates AWS IAM Identity Center (formerly AWS SSO) for centralized authentication, coupled with multi-factor authentication (MFA) to reduce credential-based attacks.

2. Micro-Segmentation with VPC and Private Endpoints

To prevent lateral movement within the network, Gordon leverages Amazon VPC, creating isolated environments for each AI component — data ingestion, training, inference, and storage. He routes all traffic through private endpoints, avoiding public internet exposure.

For example, inference APIs built on Lambda or SageMaker are only accessible through VPC endpoints, tightly scoped security groups, and AWS Network Firewall policies.

3. Data Encryption and KMS Integration

Encryption is non-negotiable. Gordon enforces encryption for data at rest and in transit using AWS KMS (Key Management Service). He also sets up customer-managed keys (CMKs) for more granular control over sensitive datasets and AI models stored in Amazon S3 or Amazon EFS.
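One common way to enforce this on S3 is a bucket policy that rejects uploads not encrypted with KMS and rejects any request made without TLS. The sketch below builds such a policy document; the bucket name is hypothetical, and the header-based check assumes clients send the encryption header explicitly on every upload.

```python
def enforce_encryption_policy(bucket):
    """Return a bucket policy denying non-KMS uploads and non-TLS requests."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUploadsWithoutKms",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"{bucket_arn}/*",
                # Reject uploads whose encryption header is not aws:kms.
                "Condition": {
                    "StringNotEquals": {
                        "s3:x-amz-server-side-encryption": "aws:kms"
                    }
                },
            },
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                # Reject any request that does not use TLS.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
        ],
    }


bucket_policy = enforce_encryption_policy("example-model-artifacts")
```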

4. Continuous Monitoring and Incident Response

Gordon configures Amazon GuardDuty, CloudTrail, and AWS Config to monitor all user activity, configuration changes, and potential anomalies. When paired with AWS Security Hub, he creates a centralized view for detecting and responding to threats in real time.

He also sets up automated remediation workflows using AWS Lambda and EventBridge to isolate or terminate suspicious sessions instantly.
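A remediation workflow of this kind typically has two parts: an EventBridge event pattern that selects findings, and a Lambda handler that decides what to do. The sketch below shows both under stated assumptions: the severity cutoff of 7 and the action names are hypothetical, and the handler only returns a decision instead of calling any AWS API.

```python
# EventBridge pattern matching GuardDuty findings with severity 7 or higher.
HIGH_SEVERITY_FINDINGS = {
    "source": ["aws.guardduty"],
    "detail-type": ["GuardDuty Finding"],
    "detail": {"severity": [{"numeric": [">=", 7]}]},
}

SEVERITY_CUTOFF = 7  # assumed threshold, kept in line with the pattern above


def handler(event, context=None):
    """Decide on a remediation action for a GuardDuty finding event."""
    detail = event.get("detail", {})
    severity = detail.get("severity", 0)
    # Hypothetical action names; real remediation would call AWS APIs here.
    action = "isolate" if severity >= SEVERITY_CUTOFF else "log-only"
    return {
        "finding_id": detail.get("id"),
        "finding_type": detail.get("type"),
        "action": action,
    }


# Hypothetical sample event, trimmed to the fields the handler reads.
sample_event = {
    "source": "aws.guardduty",
    "detail-type": "GuardDuty Finding",
    "detail": {"id": "finding-123", "type": "ExampleFindingType", "severity": 8},
}
decision = handler(sample_event)
```

Checking the severity again inside the handler is deliberate: the function stays safe even if it is later attached to a broader rule.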

Conclusion

By applying Zero Trust Architecture principles, Anton R Gordon ensures AI systems in AWS are not only performant but resilient and secure. His holistic approach — blending IAM enforcement, network isolation, encryption, and continuous monitoring — sets a new standard for AI infrastructure security.

For organizations deploying ML models and AI services in the cloud, following Gordon’s Zero Trust blueprint provides peace of mind, operational integrity, and compliance in an increasingly complex threat landscape.

0 notes

Text

The Future of Web Security with AWS Web Application Firewall

In an era of increasing cyber threats, protecting web applications has become a priority for businesses of all sizes. AWS Web Application Firewall is a powerful tool designed to safeguard web applications from common exploits and vulnerabilities. This comprehensive guide will explore key aspects of AWS WAF, helping you understand its benefits, configuration, and integration for optimal security.

The Basics of AWS Web Application Firewall

AWS Web Application Firewall is a security service offered by Amazon Web Services that protects web applications from threats such as SQL injection, cross-site scripting, and other malicious attacks. It allows users to create customizable security rules to filter incoming traffic based on specific patterns and behaviors.

Key Features of AWS Web Application Firewall

AWS WAF provides several powerful features including IP address filtering, rate-based rules, and AWS Managed Rules. These managed rule sets are pre-configured to protect against common threats, reducing the time required to implement effective security measures. With detailed logging and monitoring, AWS WAF enables administrators to analyze and respond to suspicious activity in real time.

Setting Up AWS Web Application Firewall for Your Web Application

Deploying AWS WAF involves creating a Web ACL (Access Control List) and associating it with AWS resources such as Amazon CloudFront, Application Load Balancer, or Amazon API Gateway. You can define custom rules or use managed rulesets based on your application’s needs. Configuring rule priority ensures the most important rules are applied first, making security management more efficient.
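The rule structure described above can be sketched as plain data. The dictionaries below follow the rule shape used by the boto3 WAFv2 `create_web_acl` call: one AWS managed rule group and one rate-based rule, ordered by priority. The rule names, the limit of 2000 requests, and the priority numbers are assumptions, and no Web ACL is created.

```python
def visibility(metric_name):
    """Return the logging and metrics settings every WAF rule needs."""
    return {
        "SampledRequestsEnabled": True,
        "CloudWatchMetricsEnabled": True,
        "MetricName": metric_name,
    }


def managed_rule(group_name, priority):
    """Return a rule that applies an AWS managed rule group unchanged."""
    return {
        "Name": group_name,
        "Priority": priority,
        "Statement": {
            "ManagedRuleGroupStatement": {"VendorName": "AWS", "Name": group_name}
        },
        # Rule groups use OverrideAction; "None" keeps the group's own actions.
        "OverrideAction": {"None": {}},
        "VisibilityConfig": visibility(group_name),
    }


def rate_limit_rule(name, priority, limit):
    """Return a rule that blocks an IP exceeding `limit` requests per window."""
    return {
        "Name": name,
        "Priority": priority,
        "Statement": {
            "RateBasedStatement": {"Limit": limit, "AggregateKeyType": "IP"}
        },
        "Action": {"Block": {}},
        "VisibilityConfig": visibility(name),
    }


# WAF evaluates rules from the lowest priority number to the highest.
rules = sorted(
    [
        managed_rule("AWSManagedRulesCommonRuleSet", priority=10),
        rate_limit_rule("rate-limit-per-ip", priority=0, limit=2000),
    ],
    key=lambda rule: rule["Priority"],
)
```

Placing the rate limit first means abusive clients are cut off before the more detailed managed rules are evaluated.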

Benefits of Using AWS Web Application Firewall for Businesses

Implementing AWS WAF offers significant advantages, including improved protection against automated attacks, reduced downtime, and better user experience. Its ability to scale automatically with traffic ensures that security measures remain consistent regardless of user load. Additionally, centralized management simplifies administration for organizations with multiple applications.

Common Use Cases for AWS Web Application Firewall

Businesses use AWS WAF in a variety of scenarios such as blocking bad bots, mitigating DDoS attacks, and enforcing access control. E-commerce platforms, financial services, and healthcare applications frequently rely on AWS WAF to maintain the confidentiality, integrity, and availability of sensitive data.

Integrating AWS Web Application Firewall with Other AWS Services

One of the key strengths of AWS WAF is its seamless integration with other AWS services. For example, pairing it with AWS Shield enhances protection against DDoS attacks, while integration with Amazon CloudWatch provides advanced logging and alerting. Combining AWS WAF with AWS Lambda allows for automated response to detected threats.

Monitoring and Optimizing AWS Web Application Firewall Performance

Regularly reviewing logs and rule performance is essential for maintaining effective security. AWS WAF provides metrics through Amazon CloudWatch, enabling real-time tracking of blocked requests, rule match counts, and overall traffic trends. Adjusting rules based on this data helps fine-tune protection and maintain application performance.

Conclusion

AWS Web Application Firewall is a crucial component in modern web security. With its customizable rules, managed rule sets, and seamless integration with AWS services, it offers a scalable and effective solution for protecting web applications. By implementing AWS WAF, organizations can proactively defend against evolving threats and ensure a secure digital experience for their users.

0 notes

Text

🚀 Integrating ROSA Applications with AWS Services (CS221)

As cloud-native applications evolve, seamless integration between orchestration platforms like Red Hat OpenShift Service on AWS (ROSA) and core AWS services is becoming a vital architectural requirement. Whether you're running microservices, data pipelines, or containerized legacy apps, combining ROSA’s Kubernetes capabilities with AWS’s ecosystem opens the door to powerful synergies.

In this blog, we’ll explore key strategies, patterns, and tools for integrating ROSA applications with essential AWS services — as taught in the CS221 course.

🧩 Why Integrate ROSA with AWS Services?

ROSA provides a fully managed OpenShift experience, but its true potential is unlocked when integrated with AWS-native tools. Benefits include:

Enhanced scalability using Amazon S3, RDS, and DynamoDB

Improved security and identity management through IAM and Secrets Manager

Streamlined monitoring and observability with CloudWatch and X-Ray

Event-driven architectures via EventBridge and SNS/SQS

Cost optimization by offloading non-containerized workloads

🔌 Common Integration Patterns

Here are some popular integration patterns used in ROSA deployments:

1. Storage Integration:

Amazon S3 for storing static content, logs, and artifacts.

Use the AWS SDK or S3 buckets mounted using CSI drivers in ROSA pods.

2. Database Services:

Connect applications to Amazon RDS or Amazon DynamoDB for persistent storage.

Manage DB credentials securely using AWS Secrets Manager injected into pods via Kubernetes secrets.

3. IAM Roles for Service Accounts (IRSA):

Securely grant AWS permissions to OpenShift workloads.

Set up IRSA so pods can assume IAM roles without storing credentials in the container.
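The heart of this setup is the IAM role's trust policy, which lets one specific service account assume the role through the cluster's OIDC provider. A minimal sketch of that document follows; the account ID, OIDC provider path, namespace, and service account name are all hypothetical placeholders.

```python
def irsa_trust_policy(account_id, oidc_provider, namespace, service_account):
    """Return a trust policy letting one service account assume an IAM role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_provider}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                # Only tokens issued to this exact service account are accepted.
                "Condition": {
                    "StringEquals": {
                        f"{oidc_provider}:sub": f"system:serviceaccount:{namespace}:{service_account}"
                    }
                },
            }
        ],
    }


# Hypothetical values; the OIDC provider is given without the https:// prefix.
trust = irsa_trust_policy(
    "111122223333", "oidc.example.com/abc123", "payments", "s3-writer"
)
```

Scoping the condition to a single namespace and service account is what keeps other pods in the cluster from borrowing the role.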

4. Messaging and Eventing:

Integrate with Amazon SNS/SQS for asynchronous messaging.

Use EventBridge to trigger workflows from container events (e.g., pod scaling, job completion).

5. Monitoring & Logging:

Forward logs to CloudWatch Logs using Fluent Bit/Fluentd.

Collect metrics with Prometheus Operator and send alerts to Amazon CloudWatch Alarms.

6. API Gateway & Load Balancers:

Expose ROSA services using AWS Application Load Balancer (ALB).

Enhance APIs with Amazon API Gateway for throttling, authentication, and rate limiting.

📚 Real-World Use Case

Scenario: A financial app running on ROSA needs to store transaction logs in Amazon S3 and trigger fraud detection workflows via Lambda.

Solution:

Application pushes logs to S3 using the AWS SDK.

S3 sends an Object Created event to EventBridge, where a rule invokes a Lambda function.

The function performs real-time analysis and writes alerts to an SNS topic.

This serverless integration offloads processing from ROSA while maintaining tight security and performance.
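The Lambda side of this flow might look like the sketch below. It reads the bucket and key from an S3 "Object Created" event delivered through EventBridge and hands an alert to a publisher. The `flagged/` key rule stands in for real fraud analysis, and the `publish` callable is a hypothetical stand-in for an SNS publish call so that the example runs without AWS access.

```python
def looks_suspicious(key):
    # Hypothetical placeholder rule; real fraud detection would inspect the log.
    return key.startswith("flagged/")


def handler(event, context=None, publish=None):
    """Handle an S3 Object Created event and publish an alert if needed."""
    detail = event["detail"]
    bucket = detail["bucket"]["name"]
    key = detail["object"]["key"]

    if not looks_suspicious(key):
        return {"alerted": False, "object": f"s3://{bucket}/{key}"}

    message = f"Possible fraud in transaction log s3://{bucket}/{key}"
    if publish is not None:
        publish(message)  # in production this could wrap an SNS publish call
    return {"alerted": True, "object": f"s3://{bucket}/{key}"}


# Hypothetical sample event, trimmed to the fields the handler reads.
sample_event = {
    "source": "aws.s3",
    "detail-type": "Object Created",
    "detail": {
        "bucket": {"name": "example-transaction-logs"},
        "object": {"key": "flagged/2024/tx-001.json", "size": 512},
    },
}

alerts = []
result = handler(sample_event, publish=alerts.append)
```

Passing the publisher in as an argument keeps the handler easy to test locally, which fits the best practice of automating integration tests.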

✅ Best Practices

Use IRSA for least-privilege access to AWS services.

Automate integration testing with CI/CD pipelines.

Monitor both ROSA and AWS services using unified dashboards.

Encrypt data in transit and at rest using AWS KMS + OpenShift secrets.

🧠 Conclusion

ROSA + AWS is a powerful combination that enables enterprises to run secure, scalable, and cloud-native applications. With the insights from CS221, you’ll be equipped to design robust architectures that capitalize on the strengths of both platforms. Whether it’s storage, compute, messaging, or monitoring — AWS integrations will supercharge your ROSA applications.

For more details visit - https://training.hawkstack.com/integrating-rosa-applications-with-aws-services-cs221/

0 notes