#Apache Spark Consulting


Transform Your Business with Pixid.ai Data Engineering Services in Australia


Data engineering is essential for transforming raw data into actionable insights, and Pixid.ai stands out as a leading provider of these services in Australia. Here’s a comprehensive look at what they offer, incorporating key services and terms relevant to the industry.

Data Collection and Storage

Pixid.ai excels in big data engineering services in Australia and New Zealand, collecting data from various sources like databases, APIs, and IoT devices. They ensure secure storage on cloud platforms or on-premises servers, offering flexible cloud data engineering services in Australia tailored to client needs.

Data Processing

Their data processing includes cleaning and organizing raw data to ensure it’s accurate and reliable. This is crucial for effective ETL (Extract, Transform, Load) services in New Zealand and Australia, which convert raw data into a usable format for analysis.

Data Analysis and Visualization

Pixid.ai employs complex analytical algorithms to detect trends and patterns in data. As a big data analytics company in Australia and New Zealand, they provide research and generate visual representations like charts and dashboards to make difficult data easier to grasp. They also provide sentiment analysis services in New Zealand and Australia, helping businesses gauge public opinion and customer satisfaction through data.

Business Intelligence and Predictive Analytics

Their robust data analytics consulting services in New Zealand and Australia include business intelligence tools for real-time performance tracking and predictive analytics to forecast future trends. These services help businesses stay proactive and make data-driven decisions.

Data Governance and Management

Pixid.ai ensures data quality and security through strong data governance frameworks. As data governance service providers in Australia and New Zealand, they implement policies to comply with regulations, maintain data integrity, and manage data throughout its lifecycle.

Developing a Data Strategy and Roadmap

They collaborate with businesses to develop a comprehensive data strategy aligned with overall business goals. This strategy includes creating a roadmap that outlines steps, timelines, and resources required for successful data initiatives.

Specialized Consulting Services

Pixid.ai offers specialized consulting services in various big data technologies:

Apache Spark consulting services in Australia: Leveraging Spark for fast and scalable data processing.

Databricks consulting services in Australia: Utilizing Databricks for unified analytics and AI solutions.

Big data consulting services in Australia: Providing expert guidance on big data solutions and technologies.

Why Choose Pixid.ai?

Pixid.ai’s expertise ensures businesses can leverage their data effectively, providing a competitive edge. Their services span from data collection to advanced analytics, making them a top choice for big data engineering services in Australia and New Zealand. They utilize technologies like Hadoop and cloud platforms to process data efficiently and derive accurate insights.

Partnering with Pixid.ai means accessing comprehensive data solutions, from cloud data engineering services in Australia to detailed data governance and management. Their specialized consulting services, including Apache Spark consulting services in Australia and Databricks consulting services in Australia and New Zealand, ensure that businesses have the expert guidance needed to maximize their data’s value.

Conclusion

In the competitive landscape of data-driven business, Pixid.ai provides essential services to transform raw data into valuable insights. Whether it’s through big data consulting services in Australia and New Zealand or data analytics consulting services in New Zealand and Australia, Pixid.ai helps businesses thrive. Their commitment to excellence in data engineering and governance makes them a trusted partner for any business looking to harness the power of their data.

For more information, please contact www.pixid.ai.

#big data engineering services in australia#data governance service providers in australia#big data analytics company in australia#cloud data engineering services in australia#big data consulting services in australia#data analytics consulting services in australia#apache spark consulting services in australia#data bricks consulting services in australia#sentiment analysis services in australia#ETL Services in australia


Business Intelligence vs Data Analytics: What’s the Right Fit?

Modern businesses generate tons of data—but collecting it is only half the battle. Making sense of it is where the real transformation begins. That’s why we consulted with API Connects—a leading IT firm in New Zealand—to understand the true difference between Business Intelligence and Data Analytics and how each empowers strategic decision-making.

Key Takeaways from API Connects:

🔹 Business Intelligence (BI) – Focuses on historical and current performance using dashboards, reports, and visualizations. Ideal for operational efficiency and KPI monitoring.

🔹 Data Analytics – Goes deeper to uncover trends, patterns, and future insights using advanced tools like Python, R, and machine learning models.

🔹 BI Tools – Power BI, Tableau, Looker, and SAP BusinessObjects simplify reporting for non-technical teams.

🔹 Analytics Tools – Python, SQL, Apache Spark, and BigQuery support predictive and prescriptive modeling.

🔹 Best for BI – Companies seeking real-time insights and simplified reporting for decision-makers.

🔹 Best for Analytics – Teams aiming to answer why events happen and predict what comes next.

🔹 Industry Use Cases – Retailers use BI to track daily sales; analysts use data analytics to forecast demand and optimize inventory.

🔹 Scalability – Data analytics handles complex, large-scale datasets with ease.

Conclusion: Both BI and Data Analytics play vital roles in digital transformation. While BI simplifies the past and present, analytics shapes the future. API Connects delivers integrated solutions that help businesses harness both for smarter, data-driven growth.

Don’t forget to check their most popular services:

Automation Solutions

robotic process automation solutions

machine learning services

core banking solutions

IoT business solutions

data engineering services

DevOps services

mulesoft integration services

ai services


Top Trends Shaping the Future of Data Engineering Consultancy

In today’s digital-first world, businesses are rapidly recognizing the need for structured and strategic data management. As a result, Data Engineering Consultancy is evolving at an unprecedented pace. From cloud-native architecture to AI-driven automation, the future of data engineering is being defined by innovation and agility. Here are the top trends shaping this transformation.

1. Cloud-First Data Architectures

Modern businesses are migrating their infrastructure to cloud platforms like AWS, Azure, and Google Cloud. Data engineering consultants are now focusing on building scalable, cloud-native data pipelines that offer better performance, security, and flexibility.

2. Real-Time Data Processing

The demand for real-time analytics is growing, especially in sectors like finance, retail, and logistics. Data Engineering Consultancy services are increasingly incorporating technologies like Apache Kafka, Flink, and Spark to support instant data processing and decision-making.

3. Advanced Data Planning

A strategic approach to Data Planning is becoming central to successful consultancy. Businesses want to go beyond reactive reporting—they seek proactive, long-term strategies for data governance, compliance, and scalability.

4. Automation and AI Integration

Automation tools and AI models are revolutionizing how data is processed, cleaned, and analyzed. Data engineers now use machine learning to optimize data quality checks, ETL processes, and anomaly detection.

5. Data Democratization

Consultants are focusing on creating accessible data systems, allowing non-technical users to engage with data through intuitive dashboards and self-service analytics.

In summary, the future of Data Engineering Consultancy lies in its ability to adapt to technological advancements while maintaining a strong foundation in Data Planning. By embracing these trends, businesses can unlock deeper insights, enhance operational efficiency, and stay ahead of the competition in the data-driven era. Get in touch with Kaliper.io today!


Devant – Leading Big Data Analytics Service Providers in India

Devant is one of the top big data analytics service providers in India, delivering advanced data-driven solutions that empower businesses to make smarter, faster decisions. We specialize in collecting, processing, and analyzing large volumes of structured and unstructured data to uncover actionable insights. Our expert team leverages modern technologies such as Hadoop, Spark, and Apache Flink to create scalable, real-time analytics platforms that drive operational efficiency and strategic growth. From data warehousing and ETL pipelines to custom dashboards and predictive models, Devant provides end-to-end big data services tailored to your needs.

As trusted big data analytics solution providers, we serve a wide range of industries including finance, healthcare, retail, and logistics. Our solutions help organizations understand customer behavior, optimize business processes, and forecast trends with high accuracy. Devant’s consultative approach ensures that your data strategy aligns with your long-term business goals while maintaining security, compliance, and scalability. With deep expertise and a client-first mindset, we turn complex data into meaningful outcomes. Contact us today and let Devant be your go-to partner for big data success.

#devant#Data Analytics#Data Analytics Service Providers#Data Analytics Service#Big Data Analytics Service Providers in India


Unlocking the Power of Data: Why Kadel Labs Offers the Best Databricks Services and Consultants

In today’s rapidly evolving digital landscape, data is not just a byproduct of business operations—it is the foundation for strategic decision-making, innovation, and competitive advantage. Companies across the globe are leveraging advanced data platforms to transform raw data into actionable insights. One of the most powerful platforms enabling this transformation is Databricks, a cloud-based data engineering and analytics platform built on Apache Spark. However, to harness its full potential, organizations often require expert guidance and execution. This is where Kadel Labs steps in, offering the best Databricks consultants and top-tier Databricks services tailored to meet diverse business needs.


Empowering Businesses with Advanced Data Engineering Solutions in Toronto – C Data Insights

In a rapidly digitizing world, companies are swimming in data—but only a few truly know how to harness it. At C Data Insights, we bridge that gap by delivering top-tier data engineering solutions in Toronto designed to transform your raw data into actionable insights. From building robust data pipelines to enabling intelligent machine learning applications, we are your trusted partner in the Greater Toronto Area (GTA).

What Is Data Engineering and Why Is It Critical?

Data engineering involves the design, construction, and maintenance of scalable systems for collecting, storing, and analyzing data. In the modern business landscape, it forms the backbone of decision-making, automation, and strategic planning.

Without a solid data infrastructure, businesses struggle with:

Inconsistent or missing data

Delayed analytics reports

Poor data quality impacting AI/ML performance

Increased operational costs

That’s where our data engineering service in GTA helps. We create a seamless flow of clean, usable, and timely data—so you can focus on growth.

Key Features of Our Data Engineering Solutions

As a leading provider of data engineering solutions in Toronto, C Data Insights offers a full suite of services tailored to your business goals:

1. Data Pipeline Development

We build automated, resilient pipelines that efficiently extract, transform, and load (ETL) data from multiple sources—be it APIs, cloud platforms, or on-premise databases.

2. Cloud-Based Architecture

Need scalable infrastructure? We design data systems on AWS, Azure, and Google Cloud, ensuring flexibility, security, and real-time access.

3. Data Warehousing & Lakehouses

Store structured and unstructured data efficiently with modern data warehousing technologies like Snowflake, BigQuery, and Databricks.

4. Batch & Streaming Data Processing

Process large volumes of data in real-time or at scheduled intervals with tools like Apache Kafka, Spark, and Airflow.
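As a rough illustration of the extract, transform, and load flow described under Data Pipeline Development, here is a minimal Python sketch that uses only the standard library. The sample data, the `orders` table, and all function names are hypothetical and chosen for illustration; this is not C Data Insights' actual implementation, and a production pipeline would typically read from real sources and run under a scheduler.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; a real pipeline would pull this from an API,
# a cloud bucket, or an on-premise database.
RAW_CSV = """order_id,customer,amount
1001,Alice,25.50
1002,bob ,
1003,Carol,40.00
1001,Alice,25.50
"""


def extract(raw_text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: drop rows with a missing amount, tidy names, remove duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records
        order_id = int(row["order_id"])
        if order_id in seen:
            continue  # skip repeated order ids
        seen.add(order_id)
        cleaned.append((order_id, row["customer"].strip().title(), float(amount)))
    return cleaned


def load(records, conn):
    """Load: write cleaned records into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(raw_text, conn):
    """Run the three stages in order and return (row count, total amount)."""
    load(transform(extract(raw_text)), conn)
    return conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone()
```

Calling `run_pipeline(RAW_CSV, sqlite3.connect(":memory:"))` runs the whole flow against an in-memory database. Keeping the three stages as separate functions makes each one easy to test and replace on its own.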

Data Engineering and Machine Learning – A Powerful Duo

Data engineering lays the groundwork, and machine learning unlocks its full potential. Our solutions enable you to go beyond dashboards and reports by integrating data engineering and machine learning into your workflow.

We help you:

Build feature stores for ML models

Automate model training with clean data

Deploy models for real-time predictions

Monitor model accuracy and performance

Whether you want to optimize your marketing spend or forecast inventory needs, we ensure your data infrastructure supports accurate, AI-powered decisions.

Serving the Greater Toronto Area with Local Expertise

As a trusted data engineering service in GTA, we take pride in supporting businesses across:

Toronto

Mississauga

Brampton

Markham

Vaughan

Richmond Hill

Scarborough

Our local presence allows us to offer faster response times, better collaboration, and solutions tailored to local business dynamics.

Why Businesses Choose C Data Insights

✔ End-to-End Support: From strategy to execution, we’re with you every step of the way

✔ Industry Experience: Proven success across retail, healthcare, finance, and logistics

✔ Scalable Systems: Our solutions grow with your business needs

✔ Innovation-Focused: We use the latest tools and best practices to keep you ahead of the curve

Take Control of Your Data Today

Don’t let disorganized or inaccessible data hold your business back. Partner with C Data Insights to unlock the full potential of your data. Whether you need help with cloud migration, real-time analytics, or data engineering and machine learning, we’re here to guide you.

📍 Proudly offering data engineering solutions in Toronto and expert data engineering service in GTA.

📞 Contact us today for a free consultation 🌐 https://cdatainsights.com

C Data Insights – Engineering Data for Smart, Scalable, and Successful Businesses

#data engineering solutions in Toronto#data engineering and machine learning#data engineering service in Gta


How Modern Data Engineering Powers Scalable, Real-Time Decision-Making

In today's technology-driven world, businesses are no longer content to analyze only data from the past. From e-commerce websites providing real-time suggestions to banks verifying transactions in under a second, decisions are now made in a matter of seconds. What makes this possible? Modern data engineering, which combines software development, data architecture, and cloud infrastructure at scale. It empowers organizations to convert massive, fast-moving data streams into real-time insights.

From Batch to Real-Time: A Shift in Data Mindset

Traditional data systems relied on batch processing, in which data was collected and analyzed at fixed intervals. In a fast-paced world this meant lag: insights were often outdated by the time they arrived. Streaming technologies such as Apache Kafka, Apache Flink, and Spark Streaming now enable engineers to create pipelines that ingest, clean, and deliver data with very low latency. This shift away from batch-only processing is crucial for fast-moving companies in logistics, e-commerce, relevancy, and fintech.
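To make the batch versus streaming contrast concrete, here is a small, hedged Python sketch. It does not use Kafka, Flink, or Spark; it only mimics the two mindsets with plain Python, and the `EVENTS` list and function names are hypothetical examples.

```python
from collections import defaultdict

# Hypothetical event feed: (timestamp_seconds, merchant, amount)
EVENTS = [
    (0, "shop_a", 10.0),
    (1, "shop_b", 5.0),
    (2, "shop_a", 7.5),
    (3, "shop_a", 2.5),
]


def batch_totals(events):
    """Batch style: wait for the full dataset, then compute totals once."""
    totals = defaultdict(float)
    for _, merchant, amount in events:
        totals[merchant] += amount
    return dict(totals)


def streaming_totals(events):
    """Streaming style: update state per event and emit a fresh result each time."""
    totals = defaultdict(float)
    for timestamp, merchant, amount in events:
        totals[merchant] += amount
        # A downstream consumer sees the running total as soon as the event arrives.
        yield timestamp, merchant, totals[merchant]
```

Both functions arrive at the same final totals. The difference is when results become available: the batch version answers only after all events are in, while the streaming version yields an updated answer after every event.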

Building Resilient, Scalable Data Pipelines

Modern data engineering focuses on building thoroughly monitored, fault-tolerant data pipelines. These pipelines scale to higher data volumes and are built to accommodate schema changes, data anomalies, and unexpected traffic spikes. Cloud-native tools such as AWS Glue and Google Cloud Dataflow, together with Snowflake Data Sharing, let teams share and integrate data across platforms at scale. These tools make it possible to create unified data flows that power dashboards, alerts, and machine learning models in near real time.

Role of Data Engineering in Real-Time Analytics

This is where Data Engineering Services make a difference. Companies providing these services bring considerable technical expertise and can help an organization design modern data architectures aligned with its business objectives. From establishing real-time ETL pipelines to managing infrastructure, these services help keep your data stack efficient, flexible, and cost-effective. Companies can then direct their attention to new ideas and creativity rather than the endless cycle of data management.

Data Quality, Observability, and Trust

Real-time decision-making depends on the quality of the data that powers it. Modern data engineering integrates practices like data observability, automated anomaly detection, and lineage tracking. These ensure that data within the systems is clean, consistent, and traceable. With tools like Great Expectations, Monte Carlo, and dbt, engineers can set up proactive alerts and validations to catch issues before they affect business outcomes. This trust in data quality enables timely, precise, and reliable decisions.
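The kind of validation described here can be sketched in plain Python. This example deliberately does not use the Great Expectations, Monte Carlo, or dbt APIs; the `validate_rows` function, its thresholds, and the sample `amount` column are assumptions made purely for illustration.

```python
def validate_rows(rows, required, max_null_rate=0.1):
    """Return a list of human-readable issues found in `rows` (a list of dicts)."""
    if not rows:
        return ["dataset is empty"]

    issues = []

    # Completeness check: flag columns with too many missing values.
    for column in required:
        nulls = sum(1 for row in rows if row.get(column) in (None, ""))
        rate = nulls / len(rows)
        if rate > max_null_rate:
            issues.append(
                f"{column}: null rate {rate:.0%} exceeds {max_null_rate:.0%}"
            )

    # Range check: amounts, where present and numeric, should not be negative.
    negatives = [
        row for row in rows
        if isinstance(row.get("amount"), (int, float)) and row["amount"] < 0
    ]
    if negatives:
        issues.append(f"amount: {len(negatives)} negative value(s)")

    return issues
```

A pipeline could call a function like this before publishing a dataset and raise an alert whenever the returned list is not empty. Dedicated tools add scheduling, history, and lineage on top of this basic idea.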

The Power of Cloud-Native Architecture

Modern data engineering builds on cloud platforms such as AWS, Azure, and Google Cloud. They provide serverless processing, autoscaling, real-time analytics tools, and other services that reduce infrastructure expenditure. Cloud-native services allow companies to process and query exceptionally large datasets quickly. For example, data can be transformed with AWS Lambda functions and analyzed in near real time with BigQuery. This allows rapid innovation, swift implementation, and significant long-term cost savings.

Strategic Impact: Driving Business Growth

Real-time data systems are providing organizations with tangible benefits such as improved customer engagement, operational efficiency, risk mitigation, and faster innovation cycles. To achieve these objectives, many enterprises now opt for data strategy consulting, which aligns their data initiatives with broader business objectives. These consulting firms enable organizations to define the right KPIs, select appropriate tools, and develop a long-term roadmap to achieve desired levels of data maturity. In this way, organizations can make smarter, faster, and more confident decisions.

Conclusion

Investing in modern data engineering is more than a technology upgrade; it is a strategic shift toward greater agility in business processes. By adopting scalable architectures, stream processing, and expert services, organizations can realize the true value of their data. Whether the goal is customer behavior tracking, operational optimization, or trend prediction, data engineering helps you anticipate changes instead of just reacting to them.


How Databricks Consulting Services Transform Apache Spark Performance for Large Datasets in 2025

Introduction

Organizations are awash in information as big data explodes. Across many industries, managing large databases has become a crucial concern for corporations. Although Apache Spark promised to change data processing, many businesses find it difficult to realize its full potential. Enter Databricks consulting services, an approach that changes how businesses manage intricate, large-scale data problems.

Did you know that up to 30% of an organization’s computational resources can be lost due to ineffective big data processing? Databricks consulting turns data processing bottlenecks into efficient, high-throughput workflows by tuning Apache Spark performance.

Understanding Apache Spark Performance Limitations

Apache Spark has emerged as a powerful distributed computing framework, but its performance can be significantly impacted by various challenges:

• Inefficient cluster configurations that don’t match workload requirements

• Suboptimal memory management and resource allocation

• Complex data shuffling operations that create significant overhead

• Lack of proper data partitioning strategies

• Scalability issues with increasingly large and complex datasets

These limitations can dramatically slow down data processing, increase computational costs, and create frustrating bottlenecks for data engineering services. Without proper optimization, organizations find themselves fighting their infrastructure instead of leveraging it for competitive advantage.

Databricks Consulting’s Diagnostic Approach

Databricks consulting partners take a meticulous, data-driven approach to performance optimization:

• Comprehensive Performance Assessment

Detailed analysis of existing Spark infrastructure

Identification of specific performance bottlenecks

Benchmarking current processing capabilities

• Advanced Diagnostic Techniques

Utilizing proprietary performance monitoring tools

Deep-dive analysis of cluster configurations

Detailed examination of data processing workflows

The approach goes beyond surface-level fixes, providing a holistic understanding of an organization’s unique data processing challenges. By combining cutting-edge diagnostic tools with deep expertise, Databricks Consulting creates tailored optimization strategies that address root causes of performance issues.

Key Performance Optimization Techniques

Databricks Consulting employs a multi-faceted approach to Spark performance enhancement:

• Cluster Configuration Optimization

Right-sizing compute resources

Dynamic resource allocation

Intelligent workload management

• Memory Management Strategies

Efficient memory partitioning

Reducing garbage collection overhead

Implementing intelligent caching mechanisms

• Data Partitioning Improvements

Optimizing data distribution across clusters

Minimizing data shuffle operations

Implementing adaptive query execution

These techniques can dramatically improve processing speed, reduce computational costs, and enhance overall system reliability. Organizations typically see performance improvements of 40-60% after implementing these optimizations.
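Several of the techniques listed above map onto standard Apache Spark configuration properties. The sketch below is only an illustration: the property names are real Spark settings, but the helper functions and the specific values are hypothetical starting points, not tuned recommendations or any consultancy's actual method.

```python
def build_spark_conf(shuffle_partitions=200, min_executors=2, max_executors=20):
    """Return illustrative Spark settings as a dict of strings.

    The numeric defaults here are placeholders; appropriate values depend
    on the workload and cluster.
    """
    return {
        # Adaptive query execution: re-optimize plans at runtime from shuffle statistics.
        "spark.sql.adaptive.enabled": "true",
        # Merge small shuffle partitions to cut scheduling overhead.
        "spark.sql.adaptive.coalescePartitions.enabled": "true",
        # Split heavily skewed partitions in sort-merge joins.
        "spark.sql.adaptive.skewJoin.enabled": "true",
        # Number of partitions used for shuffles in joins and aggregations.
        "spark.sql.shuffle.partitions": str(shuffle_partitions),
        # Dynamic resource allocation: scale executors with the workload.
        "spark.dynamicAllocation.enabled": "true",
        "spark.dynamicAllocation.minExecutors": str(min_executors),
        "spark.dynamicAllocation.maxExecutors": str(max_executors),
    }


def to_submit_args(conf):
    """Render the settings as `--conf key=value` arguments for spark-submit."""
    args = []
    for key in sorted(conf):
        args += ["--conf", f"{key}={conf[key]}"]
    return args
```

For example, `to_submit_args(build_spark_conf(shuffle_partitions=400))` produces a list of arguments that could be appended to a `spark-submit` command. Note that dynamic allocation generally also requires an external shuffle service or shuffle tracking to be enabled, and any change of this kind should be benchmarked against the real workload.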

Machine Learning and Advanced Analytics Optimization

Beyond traditional data processing, Databricks Consulting excels in advanced analytics optimization:

• MLflow Integration

Streamlining machine learning workflow management

Reducing model training and deployment complexity

Providing end-to-end machine learning lifecycle tracking

• Performance Tuning for Complex Workloads

Accelerating model training processes

Reducing inference latency

Scaling machine learning infrastructure efficiently

The result is a more agile, responsive machine learning ecosystem that can keep pace with rapidly evolving business requirements.

Real-World Case Studies and Performance Gains

Consider these transformative examples:

• Financial Services Client

Challenge: 12-hour daily data processing window

Databricks Solution: Reduced processing time to 2 hours

Performance Improvement: 80% faster data pipeline

• Healthcare Data Analytics

Challenge: Complex genomic data processing

Databricks Solution: Optimized cluster configuration

Performance Improvement: 50% reduction in computational costs

Conclusion

Databricks consulting services aren’t just about fixing performance issues; they’re about reimagining what’s possible with your data infrastructure. By implementing cutting-edge optimization techniques, organizations can unlock unprecedented efficiency, reduce computational costs, and accelerate their data-driven decision-making.

The future of big data processing is here, and it’s powered by strategic, expert-driven optimization. Are you ready to transform your Apache Spark performance?


Career Opportunities After Completing an Artificial Intelligence Course in Dubai

As the world moves rapidly toward digital transformation, Artificial Intelligence (AI) is no longer a luxury but a necessity across industries. Dubai, a global leader in smart technology adoption, has become a thriving hub for AI innovation and talent. If you're considering taking an Artificial Intelligence course in Dubai, you're making a strategic decision that can open up lucrative and future-proof career paths.

In this article, we'll explore the career opportunities available after completing an Artificial Intelligence course in Dubai and why this city is emerging as a leading AI education and employment destination in 2025.

Why AI in Dubai?

Dubai’s ambitious government-led initiatives—such as the UAE Artificial Intelligence Strategy 2031 and Smart Dubai—are driving AI implementation across sectors. From smart policing and autonomous transport to AI-driven healthcare and finance, Dubai is creating a dynamic environment for AI professionals to flourish.

Completing an Artificial Intelligence course in Dubai equips you with the skills needed to take advantage of these opportunities in a tech-forward economy.

In-Demand AI Career Roles in Dubai

Here are the top job roles you can pursue after earning your AI certification in Dubai:

1. AI Engineer

AI Engineers are the architects behind intelligent systems. They develop algorithms, build neural networks, and optimize AI models for real-world applications. Companies in Dubai’s fintech, logistics, and e-commerce sectors are actively hiring AI Engineers to streamline operations and enhance customer experience.

Key Skills: Python, TensorFlow, Keras, deep learning, machine learning

Average Salary: AED 240,000 – AED 360,000 annually

2. Data Scientist

Data Scientists extract actionable insights from massive datasets using AI-powered tools. With Dubai’s government and private sectors investing in data-driven decision-making, data scientists are in high demand across healthcare, education, and energy sectors.

Key Skills: Data analysis, machine learning, R, Python, SQL, statistics

Average Salary: AED 220,000 – AED 340,000 annually

3. Machine Learning Engineer

These professionals build scalable ML systems that can learn and adapt from data. ML Engineers in Dubai are developing recommendation engines, fraud detection systems, and predictive maintenance tools for smart cities and businesses.

Key Skills: Supervised and unsupervised learning, model deployment, Python, Apache Spark

Average Salary: AED 250,000 – AED 380,000 annually

4. NLP (Natural Language Processing) Specialist

NLP Specialists develop applications that can understand and process human language. In Dubai, the rise of Arabic-language chatbots, voice assistants, and document automation tools has made this role highly valuable.

Key Skills: NLP libraries (spaCy, NLTK), sentiment analysis, text mining

Average Salary: AED 220,000 – AED 300,000 annually

5. AI Project Manager

For those with both technical and managerial skills, this role involves overseeing AI projects from ideation to implementation. Dubai’s public sector projects often require skilled AI project managers to ensure smooth execution.

Key Skills: Agile methodology, team management, data literacy, stakeholder communication

Average Salary: AED 300,000 – AED 450,000 annually

6. Computer Vision Engineer

In sectors like security, autonomous vehicles, and smart retail, computer vision is essential. Dubai’s airport systems, surveillance, and retail analytics are using CV applications extensively.

Key Skills: OpenCV, deep learning, image processing, object detection

Average Salary: AED 240,000 – AED 320,000 annually

7. AI Consultant

AI consultants guide businesses in understanding how AI can solve operational challenges. Consulting firms and innovation labs in Dubai are looking for AI experts who can advise on implementation and ROI.

Key Skills: AI strategy, solution architecture, client management

Average Salary: AED 280,000 – AED 400,000 annually

Top Industries Hiring AI Talent in Dubai

Dubai’s cross-sectoral adoption of AI means that career opportunities aren’t limited to just tech companies. Here are the top industries where AI professionals are in high demand:

Healthcare

From AI-powered diagnostics to hospital management systems, healthcare in Dubai is being transformed. Government and private hospitals are adopting machine learning models for faster and more accurate care.

Finance & Banking

AI is being used for fraud detection, automated credit scoring, algorithmic trading, and customer service. Dubai’s banking sector is particularly active in integrating AI to improve efficiency and reduce risk.

Logistics & Supply Chain

As a global logistics hub, Dubai relies heavily on predictive analytics, route optimization, and intelligent inventory systems—all powered by AI.

Smart Cities & Government

The Smart Dubai initiative aims to digitize every aspect of city living using AI—from transportation and energy to law enforcement and education.

Retail & E-Commerce

Companies like Noon and Carrefour are leveraging AI to personalize shopping experiences, manage inventory, and drive targeted marketing.

Real Estate & Construction

AI is being used in property valuation, risk assessment, and smart building technologies throughout Dubai’s rapidly growing skyline.

Advantages of Studying AI in Dubai

Completing your Artificial Intelligence course in Dubai offers several advantages beyond just academics:

International Exposure

Dubai is home to professionals from over 200 nationalities. Studying here provides a multicultural learning environment and exposure to global best practices.

Industry Collaboration

Many AI programs in Dubai include internships, capstone projects, and industry tie-ups. This gives students a head start in applying AI to real business problems.

Practical, Industry-Driven Curriculum

Institutes offering AI education in Dubai focus heavily on practical skills and hands-on learning—making graduates job-ready from day one.

A Glimpse into a Globally Recognized AI Program in Dubai

One standout program in Dubai is offered by a globally respected AI training institute that emphasizes:

Real-world case studies in healthcare, finance, and smart cities

Tools like Python, TensorFlow, Scikit-learn, and Generative AI platforms

Mentorship by experienced data scientists and AI engineers

Career support services including resume building and interview preparation

Flexibility with weekend, part-time, and online learning options

Their Artificial Intelligence course in Dubai is ideal for students, working professionals, and even entrepreneurs seeking to harness the power of AI in their domain.

Graduates from this institute have landed roles at leading companies across the UAE and globally. The curriculum is continuously updated to reflect the fast-changing AI landscape, including areas like Agentic AI, ethical AI, and AI policy.

Final Thoughts

Dubai is not just a city of the future—it is the present capital of innovation in the Middle East. As AI becomes a cornerstone of every major industry, having the right skills can give you a significant edge in the job market.

By enrolling in an Artificial Intelligence Classroom Course in Dubai, you position yourself at the intersection of technology, opportunity, and growth. Whether you're a beginner or a seasoned professional looking to upskill, the career paths are wide, varied, and lucrative.

And when you choose a globally recognized, practical, and project-oriented AI program—like the one offered by the Boston Institute of Analytics—you're not just learning AI, you're preparing to lead in it.

#Artificial Intelligence Course in Dubai#Artificial Intelligence Classroom Course in Dubai#Data Science Certification Training Course in Dubai#Data Scientist Training Institutes in Dubai


Text

Building a Rewarding Career in Data Science: A Comprehensive Guide

Data Science has emerged as one of the most sought-after career paths in the tech world, blending statistics, programming, and domain expertise to extract actionable insights from data. Whether you're a beginner or transitioning from another field, this blog will walk you through what data science entails, key tools and packages, how to secure a job, and a clear roadmap to success.

What is Data Science?

Data Science is the interdisciplinary field of extracting knowledge and insights from structured and unstructured data using scientific methods, algorithms, and systems. It combines elements of mathematics, statistics, computer science, and domain-specific knowledge to solve complex problems, make predictions, and drive decision-making. Applications span industries like finance, healthcare, marketing, and technology, making it a versatile and impactful career choice.

Data scientists perform tasks such as:

Collecting and cleaning data

Exploratory data analysis (EDA)

Building and deploying machine learning models

Visualizing insights for stakeholders

Automating data-driven processes

Essential Data Science Packages

To excel in data science, familiarity with programming languages and their associated libraries is critical. Python and R are the dominant languages, with Python being the most popular due to its versatility and robust ecosystem. Below are key Python packages every data scientist should master:

NumPy: For numerical computations and handling arrays.

Pandas: For data manipulation and analysis, especially with tabular data.

Matplotlib and Seaborn: For data visualization and creating insightful plots.

Scikit-learn: For machine learning algorithms, including regression, classification, and clustering.

TensorFlow and PyTorch: For deep learning and neural network models.

SciPy: For advanced statistical and scientific computations.

Statsmodels: For statistical modeling and hypothesis testing.

NLTK and SpaCy: For natural language processing tasks.

XGBoost, LightGBM, CatBoost: For high-performance gradient boosting in machine learning.

For R users, packages like dplyr, ggplot2, tidyr, and caret are indispensable. Additionally, tools like SQL for database querying, Tableau or Power BI for visualization, and Apache Spark for big data processing are valuable in many roles.

How to Get a Job in Data Science

Landing a data science job requires a mix of technical skills, practical experience, and strategic preparation. Here’s how to stand out:

Build a Strong Foundation: Master core skills in programming (Python/R), statistics, and machine learning. Understand databases (SQL) and data visualization tools.

Work on Real-World Projects: Apply your skills to projects that solve real problems. Use datasets from platforms like Kaggle, UCI Machine Learning Repository, or Google Dataset Search. Examples include predicting customer churn, analyzing stock prices, or building recommendation systems.

Create a Portfolio: Showcase your projects on GitHub and create a personal website or blog to explain your work. Highlight your problem-solving process, code, and visualizations.

Gain Practical Experience:

Internships: Apply for internships at startups, tech companies, or consulting firms.

Freelancing: Take on small data science gigs via platforms like Upwork or Freelancer.

Kaggle Competitions: Participate in Kaggle competitions to sharpen your skills and gain recognition.

Network and Learn: Join data science communities on LinkedIn, X, or local meetups. Attend conferences like PyData or ODSC. Follow industry leaders to stay updated on trends.

Tailor Your Applications: Customize your resume and cover letter for each job, emphasizing relevant skills and projects. Highlight transferable skills if transitioning from another field.

Prepare for Interviews: Be ready for technical interviews that test coding (e.g., Python, SQL), statistics, and machine learning concepts. Practice on platforms like LeetCode, HackerRank, or StrataScratch. Be prepared to discuss your projects in depth.

Upskill Continuously: Stay current with emerging tools (e.g., LLMs, MLOps) and technologies like cloud platforms (AWS, GCP, Azure).

Data Science Career Roadmap

Here’s a step-by-step roadmap to guide you from beginner to data science professional:

Phase 1: Foundations (1-3 Months)

Learn Programming: Start with Python (or R). Focus on syntax, data structures, and libraries like NumPy and Pandas.

Statistics and Math: Study probability, hypothesis testing, linear algebra, and calculus (Khan Academy, Coursera).

Tools: Get comfortable with Jupyter Notebook, Git, and basic SQL.

Resources: Books like "Python for Data Analysis" by Wes McKinney or online courses like Coursera’s "Data Science Specialization."

Phase 2: Core Data Science Skills (3-6 Months)

Machine Learning: Learn supervised (regression, classification) and unsupervised learning (clustering, PCA) using Scikit-learn.

Data Wrangling and Visualization: Master Pandas, Matplotlib, and Seaborn for EDA and storytelling.

Projects: Build 2-3 projects, e.g., predicting house prices or sentiment analysis.

Resources: "Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow" by Aurélien Géron; Kaggle micro-courses.
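To make the supervised-learning step concrete, here is a minimal sketch of the "predicting house prices" project idea as a one-feature linear regression. It is written in plain Python so it runs without any installs; in practice you would more likely reach for Scikit-learn, as the roadmap suggests. The data values and the function names `fit_line` and `predict` are made up for illustration.

```python
# Toy data (invented for illustration): house size in square metres -> price in thousands.
sizes = [50.0, 70.0, 90.0, 110.0, 130.0]
prices = [150.0, 210.0, 270.0, 330.0, 390.0]  # exactly 3 * size, so the fit is easy to check


def fit_line(xs, ys):
    """Ordinary least squares for a single feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(size, slope, intercept):
    """Apply the fitted line to a new house size."""
    return slope * size + intercept


slope, intercept = fit_line(sizes, prices)
estimate = predict(100.0, slope, intercept)
print(f"slope={slope:.2f}, intercept={intercept:.2f}, estimate for 100 m2={estimate:.1f}")
```

Real housing data is noisy and has many features, so a project would add a train/test split and an error metric before trusting any prediction.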

Phase 3: Advanced Topics and Specialization (6-12 Months)

Deep Learning: Explore TensorFlow/PyTorch for neural networks and computer vision/NLP tasks.

Big Data Tools: Learn Spark or Hadoop for handling large datasets.

MLOps: Understand model deployment, CI/CD pipelines, and tools like Docker or Kubernetes.

Domain Knowledge: Focus on an industry (e.g., finance, healthcare) to add context to your work.

Projects: Create advanced projects, e.g., a chatbot or fraud detection system.

Resources: Fast.ai courses, Udemy’s "Deep Learning A-Z."

Phase 4: Job Preparation and Application (Ongoing)

Portfolio: Polish your GitHub and personal website with 3-5 strong projects.

Certifications: Consider credentials like Google’s Data Analytics Professional Certificate or AWS Certified Machine Learning.

Networking: Engage with professionals on LinkedIn/X and contribute to open-source projects.

Job Applications: Apply to entry-level roles like Data Analyst, Junior Data Scientist, or Machine Learning Engineer.

Interview Prep: Practice coding, ML theory, and behavioral questions.

Phase 5: Continuous Growth

Stay updated with new tools and techniques (e.g., generative AI, AutoML).

Pursue advanced roles like Senior Data Scientist, ML Engineer, or Data Science Manager.

Contribute to the community through blogs, talks, or mentorship.

Final Thoughts

A career in data science is both challenging and rewarding, offering opportunities to solve impactful problems across industries. By mastering key packages, building a strong portfolio, and following a structured roadmap, you can break into this dynamic field. Start small, stay curious, and keep learning—your data science journey awaits!


Text

Big Data Technologies You’ll Master in IIT Jodhpur’s PG Diploma

In today’s digital-first economy, data is more than just information—it's power. Successful businesses are set apart by their ability to collect, process, and interpret massive datasets. For professionals aspiring to enter this transformative domain, the IIT Jodhpur PG Diploma offers a rigorous, hands-on learning experience focused on mastering cutting-edge big data technologies.

Whether you're already in the tech field or looking to transition, this program equips you with the tools and skills needed to thrive in data-centric roles.

Understanding the Scope of Big Data

Big data is defined not just by volume but also by velocity, variety, and veracity. With businesses generating terabytes of data every day, there's a pressing need for experts who can handle real-time data streams, unstructured information, and massive storage demands. IIT Jodhpur's diploma program dives deep into these complexities, offering a structured pathway to becoming a future-ready data professional.


Core Big Data Technologies Covered in the Program

Here’s an overview of the major tools and technologies you’ll gain hands-on experience with during the program:

1. Hadoop Ecosystem

The foundation of big data processing, Hadoop offers distributed storage and computing capabilities. You'll explore tools such as:

HDFS (Hadoop Distributed File System) for scalable storage

MapReduce for parallel data processing

YARN for resource management
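The MapReduce model mentioned above can be sketched in a few lines of plain Python. This is only an illustration of the map, shuffle, and reduce phases on a single machine, not Hadoop's actual API; the function names and the sample input are assumptions made for the example.

```python
from collections import defaultdict


def map_phase(document):
    """Emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(pairs):
    """Group emitted values by key, as the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Sum the values collected for each key."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(documents):
    pairs = []
    for document in documents:  # on a cluster, each split would be mapped on a different node
        pairs.extend(map_phase(document))
    return reduce_phase(shuffle_phase(pairs))


splits = ["big data big insights", "data pipelines move data"]
counts = word_count(splits)
print(counts)
```

On a real cluster the splits live in HDFS and YARN schedules the map and reduce tasks; the logic of each phase stays the same.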

2. Apache Spark

Spark is a game-changer in big data analytics, known for its speed and versatility. The course will teach you how to:

Run large-scale data processing jobs

Perform in-memory computation

Use Spark Streaming for real-time analytics

3. NoSQL Databases

Traditional databases fall short when handling unstructured or semi-structured data. You’ll gain hands-on knowledge of:

MongoDB and Cassandra for scalable document and column-based storage

Schema design, querying, and performance optimization

4. Data Warehousing and ETL Tools

Managing the flow of data is crucial. Learn how to:

Use tools like Apache NiFi, Airflow, and Talend

Design effective ETL pipelines

Manage metadata and data lineage

5. Cloud-Based Data Solutions

Big data increasingly lives on the cloud. The program explores:

Cloud platforms like AWS, Azure, and Google Cloud

Services such as Amazon EMR, BigQuery, and Azure Synapse

6. Data Visualization and Reporting

Raw data must be translated into insights. You'll work with:

Tableau, Power BI, and Apache Superset

Custom dashboards for interactive analytics

Real-World Applications and Projects

Learning isn't just about tools—it's about how you apply them. The curriculum emphasizes:

Capstone Projects simulating real-world business challenges

Case Studies from domains like finance, healthcare, and e-commerce

Collaborative work to mirror real tech teams

Industry-Driven Curriculum and Mentorship

The diploma is curated in collaboration with industry experts to ensure relevance and applicability. Students get the opportunity to:

Attend expert-led sessions and webinars

Receive guidance from mentors working in top-tier data roles

Gain exposure to the expectations and workflows of data-driven organizations

Career Pathways After the Program

Graduates from this program can explore roles such as:

Data Engineer

Big Data Analyst

Cloud Data Engineer

ETL Developer

Analytics Consultant

With its robust training and project-based approach, the program serves as a launchpad for aspiring professionals.

Why Choose This Program for Data Engineering?

The Data Engineering course at IIT Jodhpur is tailored to meet the growing demand for skilled professionals in the big data industry. With a perfect blend of theory and practical exposure, students are equipped to take on complex data challenges from day one.

Moreover, this is more than just academic training. It is the IIT Jodhpur BS/BSc in Applied AI and Data Science, designed with a focus on the practical, day-to-day responsibilities you'll encounter in real job roles. You won't just understand how technologies work; you'll know how to implement and optimize them in dynamic environments.

Conclusion

In a data-driven world, staying ahead means being fluent in the tools that power tomorrow’s innovation. The IIT Jodhpur Data Engineering program offers the in-depth, real-world training you need to stand out in this competitive field. Whether you're upskilling or starting fresh, this diploma lays the groundwork for a thriving career in data engineering.

Take the next step toward your future with “Futurense”, your trusted partner in building a career shaped by innovation, expertise, and industry readiness.

Source URL: www.lasttrumpnews.com/big-data-technologies-iit-jodhpur-pg-diploma


Text

Essential Technical Skills for a Successful Career in Business Analytics

If you're fascinated by the idea of bridging the gap between business acumen and analytical prowess, then a career in Business Analytics might be your perfect fit. But what specific technical skills are essential to thrive in this field?

Building Your Technical Arsenal

Data Retrieval and Manipulation: SQL proficiency is non-negotiable. Think of SQL as your scuba gear, allowing you to dive deep into relational databases and retrieve the specific data sets you need for analysis. Mastering queries, filters, joins, and aggregations will be your bread and butter.

Statistical Software: Unleash the analytical might of R and Python. These powerful languages go far beyond basic calculations. With R, you can create complex statistical models, perform hypothesis testing, and unearth hidden patterns in your data. Python offers similar functionalities and boasts a vast library of data science packages like NumPy, Pandas, and Scikit-learn, empowering you to automate tasks, build machine learning models, and create sophisticated data visualizations.

Data Visualization: Craft compelling data stories with Tableau, Power BI, and QlikView. These visualization tools are your paintbrushes, transforming raw data into clear, impactful charts, graphs, and dashboards. Master the art of storytelling with data, ensuring your insights resonate with both technical and non-technical audiences. Learn to create interactive dashboards that allow users to explore the data themselves, fostering a data-driven culture within the organization.

Business Intelligence (BI) Expertise: Become a BI whiz. BI software suites are the command centers of data management. Tools like Microsoft Power BI, Tableau Server, and Qlik Sense act as a central hub, integrating data from various sources (databases, spreadsheets, social media) and presenting it in a cohesive manner. Learn to navigate these platforms to create performance dashboards, track key metrics, and identify trends that inform strategic decision-making.

Beyond the Basics: Stay ahead of the curve. The technical landscape is ever-evolving. Consider exploring cloud computing platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) for data storage, management, and scalability. Familiarize yourself with data warehousing concepts and tools like Apache Spark for handling massive datasets efficiently.
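As a small illustration of the SQL skills listed above (queries, filters, joins, and aggregations), the sketch below uses Python's built-in `sqlite3` module with an in-memory database. The table names, columns, and rows are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, region TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customers VALUES (1, 'Asha', 'North'), (2, 'Ravi', 'South'), (3, 'Meera', 'North');
INSERT INTO orders VALUES (1, 1, 120.0), (2, 1, 80.0), (3, 2, 250.0), (4, 3, 50.0);
""")

# Join orders to customers, keep orders of at least 60, then aggregate per region.
query = """
SELECT c.region, COUNT(o.id) AS order_count, SUM(o.amount) AS revenue
FROM customers AS c
JOIN orders AS o ON o.customer_id = c.id
WHERE o.amount >= 60
GROUP BY c.region
ORDER BY revenue DESC;
"""
rows = conn.execute(query).fetchall()
conn.close()

for region, order_count, revenue in rows:
    print(region, order_count, revenue)
```

The same query shape (join, filter, group, order) carries over to most relational databases, although dialect details differ.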


Organizations Hiring Business Analytics and Data Analytics Professionals:

Information Technology (IT) and IT-enabled Services (ITES):

TCS, Infosys, Wipro, HCL, Accenture, Cognizant, Tech Mahindra (Business Analyst: Rs.400,000 - Rs.1,200,000, Data Analyst: Rs.500,000 - Rs.1,400,000)

Multinational Corporations with Indian operations:

IBM, Dell, HP, Google, Amazon, Microsoft (Business Analyst: Rs.500,000 - Rs.1,500,000, Data Analyst: Rs.600,000 - Rs.1,600,000)

Banking, Financial Services and Insurance (BFSI):

HDFC Bank, ICICI Bank, SBI, Kotak Mahindra Bank, Reliance Life Insurance, LIC (Business Analyst: Rs.550,000 - Rs.1,300,000, Data Analyst: Rs.650,000 - Rs.1,500,000)

E-commerce and Retail:

Flipkart, Amazon India, Myntra, Snapdeal, BigBasket (Business Analyst: Rs.450,000 - Rs.1,000,000, Data Analyst: Rs.550,000 - Rs.1,200,000)

Management Consulting Firms:

McKinsey & Company, Bain & Company, Boston Consulting Group (BCG) (Business Analyst: Rs.700,000 - Rs.1,800,000, Data Scientist: Rs.800,000 - Rs.2,000,000)

By mastering this technical arsenal, you'll be well-equipped to transform from data novice to data maestro. Consider pursuing an MBA in Business Analytics, like the one offered by Poddar Management and Technical Campus, Jaipur. These programs often integrate industry projects and internships, providing valuable hands-on experience with the latest tools and technologies.


Text

Unlocking the Power of Data: Why Kadel Labs Offers the Best Databricks Services and Consultants

In today’s rapidly evolving digital landscape, data is not just a byproduct of business operations—it is the foundation for strategic decision-making, innovation, and competitive advantage. Companies across the globe are leveraging advanced data platforms to transform raw data into actionable insights. One of the most powerful platforms enabling this transformation is Databricks, a cloud-based data engineering and analytics platform built on Apache Spark. However, to harness its full potential, organizations often require expert guidance and execution. This is where Kadel Labs steps in, offering the best Databricks consultants and top-tier Databricks services tailored to meet diverse business needs.

Understanding Databricks and Its Importance

Before diving into why Kadel Labs stands out, it’s important to understand what makes Databricks so valuable. Databricks combines the best of data engineering, machine learning, and data science into a unified analytics platform. It simplifies the process of building, training, and deploying AI and ML models, while also ensuring high scalability and performance.

The platform enables:

Seamless integration with multiple cloud providers (Azure, AWS, GCP)

Collaboration across data teams using notebooks and shared workspaces

Accelerated ETL processes through automated workflows

Real-time data analytics and business intelligence

Yet, while Databricks is powerful, unlocking its full value requires more than just a subscription—it demands expertise, vision, and customization. That’s where Kadel Labs truly shines.

Who Is Kadel Labs?

Kadel Labs is a technology consulting and solutions company specializing in data analytics, AI/ML, and digital transformation. With a strong commitment to innovation and a client-first philosophy, Kadel Labs has emerged as a trusted partner for businesses looking to leverage data as a strategic asset.

What sets Kadel Labs apart is its ability to deliver the best Databricks services, ensuring clients maximize ROI from their data infrastructure investments. From initial implementation to complex machine learning pipelines, Kadel Labs helps companies at every step of the data journey.

Why Kadel Labs Offers the Best Databricks Consultants

When it comes to data platform adoption and optimization, the right consultant can make or break a project. Kadel Labs boasts a team of highly skilled, certified, and experienced Databricks professionals who have worked across multiple industries—including finance, healthcare, e-commerce, and manufacturing.

1. Certified Expertise

Kadel Labs’ consultants hold various certifications directly from Databricks and other cloud providers. This ensures that they not only understand the technical nuances of the platform but also remain updated on the latest features, capabilities, and best practices.

2. Industry Experience

Experience matters. The consultants at Kadel Labs have hands-on experience with deploying large-scale Databricks environments for enterprise clients. This includes setting up data lakes, implementing Delta Lake, building ML workflows, and optimizing performance across various data pipelines.

3. Tailored Solutions

Rather than offering a one-size-fits-all approach, Kadel Labs customizes its Databricks services to align with each client’s specific business goals, data maturity, and regulatory requirements.

4. End-to-End Services

From assessment and strategy formulation to implementation and ongoing support, Kadel Labs offers comprehensive Databricks consulting services. This full lifecycle engagement ensures that clients get consistent value and minimal disruption.

Kadel Labs’ Core Databricks Services

Here’s an overview of why businesses consider Kadel Labs as the go-to provider for the best Databricks services:

1. Databricks Platform Implementation

Kadel Labs assists clients in setting up and configuring their Databricks environments across cloud platforms like Azure, AWS, and GCP. This includes provisioning clusters, configuring security roles, and ensuring seamless data integration.

2. Data Lake Architecture with Delta Lake

Modern data lakes need to be fast, reliable, and scalable. Kadel Labs leverages Delta Lake—Databricks’ open-source storage layer—to build high-performance data lakes that support ACID transactions and schema enforcement.

3. ETL and Data Engineering

ETL (Extract, Transform, Load) processes are at the heart of data analytics. Kadel Labs builds robust and scalable ETL pipelines using Apache Spark, streamlining data flow from various sources into Databricks.

4. Machine Learning & AI Integration

With an in-house team of data scientists and ML engineers, Kadel Labs helps clients build, train, and deploy machine learning models directly on the Databricks platform. The use of MLflow and AutoML accelerates time-to-value and model accuracy.

5. Real-time Analytics and BI Dashboards

Kadel Labs integrates Databricks with visualization tools like Power BI, Tableau, and Looker to create real-time dashboards that support faster and more informed business decisions.

6. Databricks Optimization and Support

Once the platform is operational, ongoing support and optimization are critical. Kadel Labs offers performance tuning, cost management, and troubleshooting to ensure that Databricks runs at peak efficiency.

Real-World Impact: Case Studies

Financial Services Firm Reduces Reporting Time by 70%

A leading financial services client partnered with Kadel Labs to modernize their data infrastructure using Databricks. By implementing a Delta Lake architecture and optimizing ETL workflows, the client reduced their report generation time from 10 hours to just under 3 hours.

Healthcare Provider Implements Predictive Analytics

Kadel Labs worked with a large healthcare organization to deploy a predictive analytics model using Databricks. The solution helped identify at-risk patients in real-time, improving early intervention strategies and patient outcomes.

The Kadel Labs Advantage

So what makes Kadel Labs the best Databricks consultants in the industry? It comes down to a few key differentiators:

Agile Methodology: Kadel Labs employs agile project management to ensure iterative progress, constant feedback, and faster results.

Cross-functional Teams: Their teams include not just data engineers, but also cloud architects, DevOps specialists, and domain experts.

Client-Centric Approach: Every engagement is structured around the client’s goals, timelines, and KPIs.

Scalability: Whether you're a startup or a Fortune 500 company, Kadel Labs scales its services to meet your data needs.

The Future of Data is Collaborative, Scalable, and Intelligent

As data becomes increasingly central to business strategy, the need for platforms like Databricks—and the consultants who can leverage them—will only grow. With emerging trends such as real-time analytics, generative AI, and data sharing across ecosystems, companies will need partners who can keep them ahead of the curve.

Kadel Labs is not just a service provider—it’s a strategic partner helping organizations turn data into a growth engine.

Final Thoughts

In a world where data is the new oil, harnessing it effectively requires not only the right tools but also the right people. Kadel Labs stands out by offering the best Databricks consultants and the best Databricks services, making it a trusted partner for organizations across industries. Whether you’re just beginning your data journey or looking to elevate your existing infrastructure, Kadel Labs provides the expertise, technology, and dedication to help you succeed.

If you’re ready to accelerate your data transformation, Kadel Labs is the partner you need to move forward with confidence.


Text

Databricks Consulting Services: Accelerating Business Intelligence with Helical IT Solutions

In today’s data-driven world, businesses are increasingly turning to advanced platforms like Databricks to streamline their data engineering, analytics, and machine learning processes. Databricks, built on Apache Spark, allows companies to unify their analytics and AI capabilities in a scalable cloud environment. However, leveraging Databricks to its full potential requires deep expertise, which is where Databricks consulting services come into play.

Helical IT Solutions offers comprehensive Databricks consulting services designed to help organizations maximize the value of Databricks and accelerate their business intelligence initiatives. Whether your organization is just getting started with Databricks or looking to optimize your existing setup, Helical IT Solutions can guide you through every step of the process.

Why Choose Databricks Consulting?

Databricks is a powerful platform that allows companies to handle massive datasets, run complex machine learning models, and perform high-level data analytics. However, like any advanced technology, it can be overwhelming to integrate Databricks into your existing infrastructure without the right expertise. This is where Databricks consulting becomes essential.

Databricks consultants are equipped with in-depth knowledge of the platform's capabilities, from data engineering pipelines to machine learning workflows. They can help your organization design, implement, and optimize Databricks solutions that align with your specific business objectives. Consulting services ensure that your team is equipped with the right tools, best practices, and strategies to make the most out of Databricks and its ecosystem.

Helical IT Solutions: Your Trusted Partner for Databricks Consulting

Helical IT Solutions has established itself as a trusted provider of Databricks consulting services, offering end-to-end solutions tailored to businesses of all sizes. Their team of experts works closely with clients to understand their unique needs and objectives, ensuring that every Databricks deployment is aligned with their business goals.

Databricks Architecture Design and Setup: Helical IT Solutions begins by assessing your current data infrastructure and designing a robust Databricks architecture. This step involves determining the most efficient way to set up your Databricks environment to handle your data volumes and specific workloads. Their consultants ensure seamless integration with other data platforms and systems to ensure a smooth flow of data across your organization.

Data Engineering and ETL Pipelines: Databricks provides powerful tools for building scalable data engineering workflows. Helical IT Solutions’ consultants help businesses create data pipelines that integrate data from various sources, ensuring high-quality and real-time data for reporting and analysis. They design and optimize ETL (Extract, Transform, Load) pipelines that are essential for efficient data processing, enhancing the overall performance of your data infrastructure.

Advanced Analytics and Machine Learning: With Databricks, businesses can scale their machine learning models and apply advanced analytics techniques to their data. Helical IT Solutions leverages Databricks' support for libraries such as MLlib and TensorFlow to design custom machine learning models tailored to your specific business needs. The consultancy also focuses on optimizing the performance of your models and workflows, helping you deploy AI solutions faster and more efficiently.

Cost Optimization and Performance Tuning: One of the key advantages of Databricks is its ability to scale up or down based on your workload. Helical IT Solutions helps businesses optimize their Databricks costs by implementing best practices to manage compute resources and storage efficiently. Their consultants also focus on performance tuning, ensuring that your Databricks infrastructure is running at peak performance without unnecessary overhead.

Ongoing Support and Training: Helical IT Solutions doesn’t just stop at implementation. Their Databricks consulting services include continuous support and training to ensure that your team is empowered to manage and optimize the platform long after the initial deployment. Their experts offer training sessions and documentation to help your team get the most out of Databricks, enabling them to become proficient in managing data pipelines, analytics, and machine learning models independently.

Conclusion

Incorporating Databricks into your business intelligence strategy can significantly boost your organization’s ability to manage, analyse, and derive insights from data. However, achieving success with Databricks requires expertise and guidance from experienced consultants. Helical IT Solutions stands out as a leading provider of Databricks consulting services, offering a comprehensive suite of solutions that drive results. From architecture design and data engineering to advanced analytics and cost optimization, Helical IT Solutions ensures that your business can unlock the full potential of Databricks. With their expertise, you can accelerate your journey toward data-driven decision-making and maximize the value of your data assets.


Text

How Data Engineering Consultancy Builds Scalable Pipelines

Data integration, transformation, and analysis are crucial to driving business growth and making informed decisions. How well you collect, transfer, analyze, and use your data affects your organization's success. It therefore makes sense to partner with a professional data engineering consultancy to ensure your data is managed effectively through scalable data pipelines.

What are scalable data pipelines? How does a data engineering consultancy build them? What role does Google Analytics consulting play? Let's discuss these questions in this blog.

What are Scalable Data Pipelines?

Scalable data pipelines move and process data from various sources to analytical platforms. They are designed to handle growing data volume and complexity while performance remains consistent. A data engineering consultancy designs these pipelines to handle massive data sets, which is why they are often called the backbone of modern data infrastructure.

Key Components of a Scalable Data Pipeline

The key components of a scalable data pipeline are:

Data Ingestion – Collect data from multiple sources, such as APIs, cloud services, databases, and third-party applications.

Data Processing – Clean, transform, and structure raw data for analysis, using tools such as Apache Spark, Airflow, and cloud-based services.

Storage & Management – Store and manage data in scalable cloud-based solutions, such as Google BigQuery, Snowflake, and Amazon S3.

Automation & Monitoring – Implement automated workflows and monitor systems to ensure smooth operations and detect potential issues.

Data engineering consultancies use these components to build scalable data pipelines. Such pipelines help businesses manage their data efficiently, reach insights faster, and make better decisions.
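The four components above can be sketched end to end in a few dozen lines. This is a toy illustration using only Python's standard library: an in-memory CSV stands in for a real source, SQLite stands in for a cloud warehouse, and a dictionary of counts stands in for monitoring. All function names and sample data are assumptions made for the example, not any consultancy's actual implementation.

```python
import csv
import io
import sqlite3

RAW_CSV = """order_id,customer,amount
1,asha,120.50
2,ravi,not_a_number
3,meera,75
"""


def ingest(raw_text):
    """Ingestion: read records from a source (here an in-memory CSV)."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def process(records):
    """Processing: clean and structure rows, setting aside any that fail validation."""
    clean, rejected = [], []
    for record in records:
        try:
            row = (int(record["order_id"]), record["customer"].title(), float(record["amount"]))
            clean.append(row)
        except ValueError:
            rejected.append(record)
    return clean, rejected


def store(rows, conn):
    """Storage: load cleaned rows into a table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(raw_text, conn):
    """Automation and monitoring: run the stages in order and report simple health counts."""
    records = ingest(raw_text)
    clean, rejected = process(records)
    store(clean, conn)
    return {"ingested": len(records), "loaded": len(clean), "rejected": len(rejected)}


conn = sqlite3.connect(":memory:")
metrics = run_pipeline(RAW_CSV, conn)
total = conn.execute("SELECT SUM(amount) FROM orders").fetchone()[0]
print(metrics, total)
```

Keeping each stage as a separate function is what lets a production pipeline swap in heavier tools (for example Spark for processing or a scheduler such as Airflow for automation) without rewriting the other stages.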

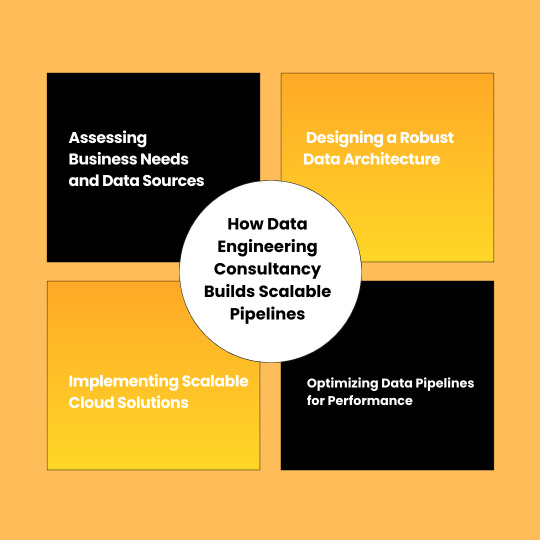

How Data Engineering Consultancy Builds Scalable Pipelines

A data engineering consultancy builds scalable pipelines in a step-by-step process. Let's explore these steps.

1. Assessing Business Needs and Data Sources

Step 1 is assessing your business needs and data sources. We start by understanding your data requirements and specific business objectives. Our expert team determines the best approach for data integration by analyzing data sources such as website analytics tools, third-party applications, and CRM platforms.

2. Designing a Robust Data Architecture

Step 2 is designing a robust data architecture. Our expert consultants create a customized architecture based on your business needs. We choose the most suitable technologies and frameworks by considering factors such as data volume, velocity, and variety.

3. Implementing Scalable Cloud Solutions

Step 3 is implementing scalable cloud-based solutions. We build on platforms such as Azure, AWS, and Google Cloud to ensure efficiency and cost-effectiveness. These platforms also provide the flexibility to scale storage and computing resources based on real-time demand.

4. Optimizing Data Pipelines for Performance

Step 4 is optimizing data pipelines for performance. Our Data Engineering Consultancy optimizes data pipelines by automating workflows and reducing latency. By integrating tools like Apache Kafka and Google Dataflow, your business can achieve near-real-time data streaming and processing.

5. Google Analytics Consulting for Data Optimization

Google Analytics consulting plays an important role in data optimization because it reveals user behavior and website performance. With our Google Analytics consulting, your business can get actionable insights by:

Setting up advanced tracking mechanisms.

Integrating Google Analytics data with other business intelligence tools.

Enhancing reporting and visualization for better decision-making.

Data Engineering Consultancy - What Are Its Benefits?

Data engineering consultancy offers various benefits. Let's explore them.

Improve Data Quality and Reliability

Enhance Decision-Making

Cost and Time Efficiency

Future-Proof Infrastructure

With data engineering consultancy, you gain improved data quality and reliability, which means more accurate data with fewer errors.

Real-time and historical insights help your business make better-informed decisions.

Data engineering consultancy improves cost and time efficiency by reducing manual data handling and operational costs.

Data engineering consultancy provides future-proof infrastructure. Businesses can scale their data operations seamlessly by using the latest technologies.

Conclusion: Boost Business With Expert & Top-Notch Data Engineering Solutions

Let’s boost business growth with top-notch data engineering solutions. At Kaliper, we help businesses unlock the full potential of their data to achieve sustainable growth. Our skilled team can help you improve business performance by extracting maximum value from your data assets. With our Google Analytics solutions, you can gain valuable insights into user behavior, make informed decisions, and get tangible results.

Kaliper ensures your data works smarter for you through data engineering consultancy. Schedule a consultation with Kaliper today and let our expert team guide you toward business growth and success.


How to be an AI consultant in 2025

Artificial Intelligence (AI) is becoming a necessary part of companies worldwide. Companies of every size are implementing AI to optimize operations, enhance customer experience, and gain a competitive edge. As a consequence, demand for AI consultants is skyrocketing. If you want to be an AI consultant in 2025, this guide will lead you through the steps needed to establish yourself in this high-paying field.

Understanding the Role of an AI Consultant

An AI consultant helps incorporate AI technologies into an organization's business processes. The job can include:

• Assessing business needs and deciding on AI-based solutions.

• Implementing machine learning models and AI tools.

• Training teams on AI adoption and ethical considerations.

• Executing AI-based projects in line with business objectives.

• Monitoring AI implementation plans and tracking their effects.

Since AI is evolving rapidly, AI consultants must regularly update their skills and knowledge to stay competitive.

Step 1: Establish a Solid Academic Base

To be an AI consultant, you need strong knowledge of AI, data science, and business. Here is how you can build that knowledge:

Formal Education

• Bachelor's Degree: A bachelor's degree in Computer Science, Data Science, Artificial Intelligence, or a related field is preferred.

• Master's Degree (Optional): A master's in AI or Business Analytics, or an MBA with a technical specialisation, would strengthen your qualifications.

Step 2: Acquire Technical Skills

Practical technical knowledge is essential in AI consulting. The most critical skills are:

Programming Languages

Python: The most widely used language for AI development.

R: Statistical analysis and data visualization.

SQL: Querying and managing databases.

Java and C++: Used less often for AI applications.

Machine Learning and Deep Learning

• Scikit-learn, TensorFlow, PyTorch: Main software to create AI models.

• Natural Language Processing (NLP): Explore the relationship between human language and artificial intelligence.

• Computer Vision: Applying AI to image and video processing.

Data Science and Analytics

• Data Wrangling & Cleaning: Ability to pre-process raw data for AI models.

• Big Data Tools: Hadoop, Spark, and Apache Kafka.

• Data Visualization: Experience with tools such as Tableau, Power BI, and Matplotlib.

Cloud Computing and Artificial Intelligence Platforms

AI-driven applications are most frequently deployed in cloud environments. Explore:

• AWS AI and ML Services

• Google Cloud AI

• Microsoft Azure AI

Step 3: Gain Practical Experience

While book knowledge is important, hands-on experience is invaluable. Here is what you can do to build your expertise:

Working on AI Projects

Start with small AI projects such as:

Developing a chatbot using Python.

Building a recommendation system.

Implementing a fraud detection model.

Applying AI to drive analytics automation.
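As an illustration of how small a starter project can be, here is a minimal sketch of a recommendation system in plain Python. It uses user-based collaborative filtering with cosine similarity, which is one common approach among several. The ratings data, user names, and function names are invented for the example.

```python
from math import sqrt

# Hypothetical ratings: user -> {item: score from 1 to 5}.
RATINGS = {
    "ana": {"laptop": 5, "mouse": 4, "monitor": 5},
    "ben": {"laptop": 5, "mouse": 5, "keyboard": 4},
    "cy": {"novel": 5, "lamp": 4},
}

def cosine_similarity(a: dict, b: dict) -> float:
    """Cosine similarity between two sparse rating vectors."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[item] * b[item] for item in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)

def recommend(user: str, ratings: dict, top_n: int = 3) -> list:
    """Rank items the user has not rated by similarity-weighted scores."""
    scores: dict = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        weight = cosine_similarity(ratings[user], other_ratings)
        if weight <= 0:
            continue  # ignore users with no overlap in rated items
        for item, score in other_ratings.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + weight * score
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

recommendations = recommend("ana", RATINGS)
```

With this sample data, only `ben` shares rated items with `ana`, so the single item he rated that she has not is the one suggested. A portfolio version would likely swap in a real dataset and a library such as Scikit-learn.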

Open-Source Contributions

Join open-source AI projects on platforms like GitHub. This will strengthen your portfolio and build your credibility in the AI community.

Step 4: Build Your Business and Consulting Skills

Technology is just part of the equation in AI consulting. You also need to understand business strategy and be able to articulate the advantages of AI. Here is how:

Understanding of Business

Learn how artificial intelligence affects industries such as retail, healthcare, and banking.

Understand business intelligence and digital transformation of business.

Keep abreast of AI laws and ethics.

Consulting and Communication Skills

Learn how to conduct AI assessments for organisations.

Improve your public speaking and presentation skills.

Master stakeholder management and negotiation skills.

Write AI strategy briefings in a way that non-technical executives understand.


Step 5: Establish a Solid Portfolio & Personal Brand

Construct an AI Portfolio

Demonstrate your skill by constructing a portfolio with:

AI case studies and projects.

Research articles or blog posts on AI trends.

GitHub repositories and open-source contributions.

Build an Online Platform

• Start a YouTube channel or blog to share AI knowledge.

• Publish articles on LinkedIn or Medium.

• Contribute to forums like Kaggle, AI Stack Exchange, and GitHub forums.

Step 6: Network & Get Clients

A strong network helps you win AI consulting work. Here's how to build one:

• Attend conferences such as NeurIPS, AI Summit, and Google AI conferences.

• Join LinkedIn groups and subreddits on AI.

• Engage with industry professionals through webinars and networking sessions.

• Network with startups and firms looking for AI services.

Step 7: Offer AI Consulting Services

You can now lay the foundation of your consulting practice. Consider the following:

• Freelancing: Work as an independent AI consultant.

• Join a Consulting Company: Firms like Deloitte, Accenture, and McKinsey hire AI consultants.

• Start Your Own AI Consultancy: If you're business-minded, start your own AI consulting business.

Step 8: Stay Current & Continuously Learn

AI evolves rapidly, so keep learning. Follow:

AI research papers on arXiv and Google Scholar.

AI newsletters and publications such as Towards Data Science and OpenAI news.

Podcasts such as "AI Alignment" and "The TWIML AI Podcast".

AI leaders like Andrew Ng, Yann LeCun, and Fei-Fei Li.

Conclusion

To become an AI consultant in 2025, you need technical, business, and strategic communication skills. With the right education, solid technical and business skills, a quality portfolio, and strategic networking, you can become a successful AI consultant. The key to success is continuous learning and adapting to the evolving AI landscape. If you’re passionate about AI and committed to staying ahead of trends, the opportunities in AI consulting are limitless!

Website: https://www.icertglobal.com/
