#Image bounding box annotation


#data annotation for autonomous vehicles#Image annotation company#3d bounding box annotation#annotation services in india


Data Labeling Services | AI Data Labeling Company

AI models are only as effective as the data they are trained on. This service page explores how Damco’s data labeling services empower organizations to accelerate AI innovation through structured, accurate, and scalable data labeling.

Accelerate AI Innovation with Scalable, High-Quality Data Labeling Services

Accurate annotations are critical for training robust AI models. Whether it’s image recognition, natural language processing, or speech-to-text conversion, quality-labeled data reduces model errors and boosts performance.

Leverage Damco’s Data Labeling Services

Damco provides end-to-end annotation services tailored to your data type and use case.

Computer Vision: Bounding boxes, semantic segmentation, object detection, and more

NLP Labeling: Text classification, named entity recognition, sentiment tagging

Audio Labeling: Speaker identification, timestamping, transcription services

Who Should Opt for Data Labeling Services?

Damco caters to diverse industries that rely on clean, labeled datasets to build AI solutions:

Autonomous Vehicles

Agriculture

Retail & Ecommerce

Healthcare

Finance & Banking

Insurance

Manufacturing & Logistics

Security, Surveillance & Robotics

Wildlife Monitoring

Benefits of Data Labeling Services

Precise Predictions with high-accuracy training datasets

Improved Data Usability across models and workflows

Scalability to handle projects of any size

Cost Optimization through flexible service models

Why Choose Damco for Data Labeling Services?

Reliable & High-Quality Outputs

Quick Turnaround Time

Competitive Pricing

Strict Data Security Standards

Global Delivery Capabilities

Discover how Damco’s data labeling can improve your AI outcomes — Schedule a Consultation.

#data labeling#data labeling services#data labeling company#ai data labeling#data labeling companies


Decode Every Pixel of Retail - with Wisepl

Behind Every Retail Image Lies Untapped Intelligence. From checkout counters to smart shelves, every frame holds critical insights, but only if it is labeled with precision. We turn your raw retail data into high-impact intelligence.

Whether it’s SKU-level product detection, planogram compliance, footfall analytics, or shelf-stock status, our expert annotation team delivers pixel-perfect labels across:

Bounding Boxes

Semantic & Instance Segmentation

Pose & Landmark Annotations

Object Tracking in Video Footage

Retail is not just physical anymore - it’s visual. Get the high-quality annotations your AI needs to predict demand, optimize layouts, and understand shopper behavior like never before.

Let’s make your retail data work smarter, faster, sharper.

Let’s collaborate to bring clarity to your retail vision. DM us or reach out at [email protected]. Visit us: https://wisepl.com

#RetailAI#DataAnnotation#ComputerVision#SmartRetail#RetailAnalytics#ProductDetection#ShelfMonitoring#AnnotationExperts#AIinRetail#Wisepl#RetailTech#MachineLearning#VisualData#ImageAnnotation


Build AI-Powered Solutions with AI ML Enablement Services at EnFuse Solutions

Boost AI model performance with EnFuse Solutions’ AI ML enablement services. They deliver accurate data labeling and annotation for text, image, audio, and video datasets. Whether bounding boxes or semantic segmentation, EnFuse’s experts ensure consistent, high-quality data to optimize machine learning outcomes across varied use cases.

Ready to scale smarter with AI and ML? Explore EnFuse Solutions’ expert enablement services here: https://www.enfuse-solutions.com/services/ai-ml-enablement/

#AIMLEnablement#AIMLEnablementServices#AIMLEnablementSolutions#AIEnablement#MLEnablement#AIOptimization#MLOptimization#AIEnhancedSolutions#MLEnhancedSolutions#AIMLEnablementIndia#AIMLEnablementServicesIndia#AIEnhancedDevelopment#MLEnhancedDevelopment#EnFuseAIMLEnablement#EnFuseSolutions#EnFuseSolutionsIndia


When AI Meets Medicine: Periodontal Diagnosis Through Deep Learning by Para Projects

In the ever-evolving landscape of modern healthcare, artificial intelligence (AI) is no longer a futuristic concept—it is a transformative force revolutionizing diagnostics, treatment, and patient care. One of the latest breakthroughs in this domain is the application of deep learning to periodontal disease diagnosis, a condition that affects millions globally and often goes undetected until it progresses to severe stages.

In a pioneering step toward bridging technology with dental healthcare, Para Projects, a leading engineering project development center in India, has developed a deep learning-based periodontal diagnosis system. This initiative is not only changing the way students approach AI in biomedical domains but also contributing significantly to the future of intelligent, accessible oral healthcare.

Understanding Periodontal Disease: A Silent Threat

Periodontal disease, commonly known as gum disease, refers to infections and inflammation of the gums and bone that surround and support the teeth. It typically begins as gingivitis (gum inflammation) and, if left untreated, can lead to periodontitis, which causes tooth loss and affects overall systemic health.

The problem? Periodontal disease is often asymptomatic in its early stages. Diagnosis usually requires a combination of clinical examinations, radiographic analysis, and manual probing—procedures that are time-consuming and prone to human error. Additionally, access to professional diagnosis is limited in rural and under-resourced regions.

This is where AI steps in, offering the potential for automated, consistent, and accurate detection of periodontal disease through the analysis of dental radiographs and clinical data.

The Role of Deep Learning in Medical Diagnostics

Deep learning, a subset of machine learning, uses multi-layer artificial neural networks, loosely inspired by the human brain, to learn complex data patterns. In the context of medical diagnostics, it has proven particularly effective in image recognition, classification, and anomaly detection.

When applied to dental radiographs, deep learning models can be trained to:

Identify alveolar bone loss

Detect tooth mobility or pocket depth

Differentiate between healthy and diseased tissue

Classify disease severity levels

This not only accelerates the diagnostic process but also ensures objective and reproducible results, enabling better clinical decision-making.

Para Projects: Where Innovation Meets Education

Recognizing the untapped potential of AI in dental diagnostics, Para Projects has designed and developed a final-year engineering project titled “Deep Periodontal Diagnosis: A Hybrid Learning Approach for Accurate Periodontitis Detection.” This project serves as a perfect confluence of healthcare relevance and cutting-edge technology.

With a student-friendly yet professionally guided approach, Para Projects transforms a complex AI application into a doable and meaningful academic endeavor. The project has been carefully designed to offer:

Real-world application potential

Exposure to biomedical datasets and preprocessing

Use of deep learning frameworks like TensorFlow and Keras

Comprehensive support from coding to documentation

Inside the Project: How It Works

The periodontal diagnosis project by Para Projects is structured to simulate a real diagnostic system. Here’s how it typically functions:

Data Acquisition and Preprocessing

Students are provided with a dataset of dental radiographs (e.g., panoramic X-rays or periapical films). Using tools like OpenCV, they learn to clean and enhance the images by:

Normalizing pixel intensity

Removing noise and irrelevant areas

Annotating images using bounding boxes or segmentation maps
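As a rough illustration of the first preprocessing step, the sketch below min-max scales pixel intensities to the range 0.0 to 1.0. It is written in plain Python so it runs without dependencies; the function name `normalize_intensity` and the tiny 3x3 sample image are assumptions made for illustration and do not come from the project itself, which would more likely work on OpenCV or NumPy arrays.

```python
def normalize_intensity(image):
    """Min-max scale a grayscale image (a list of pixel rows) to [0.0, 1.0]."""
    flat = [pixel for row in image for pixel in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A perfectly flat image has no contrast to stretch.
        return [[0.0 for _ in row] for row in image]
    scale = hi - lo
    return [[(pixel - lo) / scale for pixel in row] for row in image]


# Hypothetical 3x3 patch of 8-bit radiograph intensities.
radiograph = [
    [30, 60, 90],
    [120, 150, 180],
    [210, 240, 255],
]
normalized = normalize_intensity(radiograph)
```

After normalization the darkest pixel maps to 0.0 and the brightest to 1.0, which keeps radiographs taken at different exposures on a comparable scale before they reach the network.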

Feature Extraction

Using convolutional neural networks (CNNs), the system is trained to detect and extract features such as:

Bone-level irregularities

Shape and texture of periodontal ligaments

Visual signs of inflammation or damage

Classification and Diagnosis

The extracted features are passed through layers of a deep learning model, which classifies the images into categories like:

Healthy

Mild periodontitis

Moderate periodontitis

Severe periodontitis

Visualization and Reporting

The system outputs visual heatmaps and probability scores, offering a user-friendly interpretation of the diagnosis. These outputs can be further converted into PDF reports, making it suitable for both academic submission and potential real-world usage.
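One common way to obtain per-class probability scores like those described above is to apply a softmax to the model's raw outputs. The sketch below assumes that approach; the source does not say how the project computes its scores, and the logit values and the `diagnose` helper are hypothetical.

```python
import math

CLASSES = [
    "Healthy",
    "Mild periodontitis",
    "Moderate periodontitis",
    "Severe periodontitis",
]


def softmax(logits):
    """Convert raw model outputs into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def diagnose(logits):
    """Return the most likely class and the full probability table."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return CLASSES[best], dict(zip(CLASSES, probs))


# Hypothetical raw outputs for one radiograph.
label, scores = diagnose([0.2, 2.1, 0.7, -1.0])
```

Reporting the full `scores` table rather than only `label` lets a reviewer see how close the runner-up class was, which matters when adjacent severity levels are hard to separate.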

Academic Value Meets Practical Impact

For final-year engineering students, working on such a project presents a dual benefit:

Technical Mastery: Students gain hands-on experience with real AI tools, including neural network modeling, dataset handling, and performance evaluation using metrics like accuracy, precision, and recall.

Social Relevance: The project addresses a critical healthcare gap, equipping students with the tools to contribute meaningfully to society.
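For readers unfamiliar with the evaluation metrics named above, here is a minimal sketch of how accuracy, precision, and recall are computed for a binary "diseased vs. healthy" split. The label lists are made up for illustration, and the function name `classification_metrics` is an assumption rather than part of any project kit.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        # Of everything flagged as diseased, how much really was?
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of everything truly diseased, how much did the model catch?
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical labels: 1 = periodontitis present, 0 = healthy.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

In a screening setting recall usually deserves the closest look, because a missed case (false negative) tends to be costlier than a false alarm.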

With expert mentoring from Para Projects, students don’t just build a project—they develop a solution that has real diagnostic value.

Why Choose Para Projects for AI-Medical Applications?

Para Projects has earned its reputation as a top-tier academic project center by focusing on three pillars: innovation, accessibility, and support. Here’s why students across India trust Para Projects:

🔬 Expert-Led Guidance: Each project is developed under the supervision of experienced AI and domain experts.

📚 Complete Project Kits: From code to presentation slides, students receive everything needed for successful academic evaluation.

💻 Hands-On Learning: Real datasets, practical implementation, and coding tutorials make learning immersive.

💬 Post-Delivery Support: Para Projects ensures students are prepared for viva questions and reviews.

💡 Customization: Projects can be tailored based on student skill levels, interest, or institutional requirements.

Whether it’s a B.E., B.Tech, M.Tech, or interdisciplinary program, Para Projects offers robust solutions that connect education with industry relevance.

From Classroom to Clinic: A Future-Oriented Vision

Healthcare is increasingly leaning on predictive technologies for better outcomes. In this context, AI-driven dental diagnostics can transform public health, especially in regions with limited access to dental professionals. What began as a classroom project at Para Projects can, with further development, evolve into a clinical tool, contributing to preventive healthcare systems across the world.

Students who engage with such projects don’t just gain knowledge—they step into the future of AI-powered medicine, potentially inspiring careers in biomedical engineering, health tech entrepreneurship, or AI research.

Conclusion: Diagnosing with Intelligence, Healing with Innovation

The fusion of AI and medicine is not just a technological shift; it’s a philosophical transformation in how we understand and address disease. By enabling early, accurate, and automated diagnosis of periodontal disease, deep learning is playing a vital role in improving oral healthcare outcomes.

With its visionary project on periodontal diagnosis through deep learning, Para Projects is not only helping students fulfill academic goals—it’s nurturing the next generation of tech-enabled healthcare changemakers.

Are you ready to engineer solutions that impact lives? Explore this and many more cutting-edge medical and AI-based projects at https://paraprojects.in. Let Para Projects be your partner in building technology that heals.


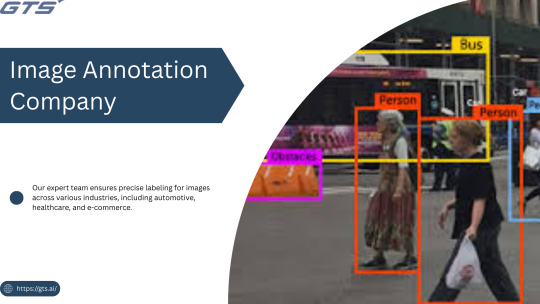

Looking for trusted image annotation services to support your AI or machine learning model training? GoodFirms features a verified list of top annotation companies with expertise in bounding boxes, semantic segmentation, keypoint annotation, and more.


Title: Image Annotation Services Explained: Tools, Techniques & Use Cases

Introduction

In the fast-paced realm of artificial intelligence, image annotation companies serve as the foundation for effective computer vision models. Whether you are creating a self-driving vehicle, an AI-driven medical diagnostic application, or a retail analytics solution, the availability of high-quality annotated images is crucial for training precise and dependable machine learning models. But what precisely are image annotation services, how do they function, and what tools and methodologies are utilized? Let us explore this in detail.

What Is Image Annotation?

Image annotation refers to the practice of labeling or tagging images to facilitate the training of machine learning and deep learning models. This process includes the identification of objects, boundaries, and features within images, enabling AI systems to learn to recognize these elements in real-world applications. Typically, this task is carried out by specialized image annotation firms that employ a combination of manual and automated tools to guarantee precision, consistency, and scalability.

Common Image Annotation Techniques

The appropriate annotation method is contingent upon the specific requirements, complexity, and nature of the data involved in your project. Among the most prevalent techniques are:

Bounding Boxes:

Utilized for identifying and localizing objects by encasing them in rectangular boxes, this method is frequently applied in object detection for autonomous vehicles and security systems.

Polygon Annotation:

Best suited for objects with irregular shapes, such as trees, buildings, or road signs, this technique allows for precise delineation of object edges, which is essential for detailed recognition tasks.

Semantic Segmentation:

This approach assigns a label to every pixel in an image according to the object class, commonly employed in medical imaging, robotics, and augmented/virtual reality environments.

Instance Segmentation:

An advancement over semantic segmentation, this method distinguishes between individual objects of the same class, such as recognizing multiple individuals in a crowd.

Keypoint Annotation:

This technique involves marking specific points on objects and is often used in facial recognition, human pose estimation, and gesture tracking.

3D Cuboids:

This method enhances depth perception in annotation by creating three-dimensional representations, which is vital for applications like autonomous navigation and augmented reality.
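To make the difference between a bounding box and a polygon annotation concrete, the sketch below stores one object both ways and compares the areas they enclose. The `[x, y, width, height]` box layout follows the convention used by COCO-style datasets; the record's field names and the sample coordinates are hypothetical.

```python
def corners_to_xywh(x_min, y_min, x_max, y_max):
    """Convert corner coordinates to an [x, y, width, height] box."""
    return [x_min, y_min, x_max - x_min, y_max - y_min]


def polygon_to_bbox(points):
    """Return the tightest axis-aligned box that contains a polygon."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return corners_to_xywh(min(xs), min(ys), max(xs), max(ys))


def polygon_area(points):
    """Area of a simple polygon via the shoelace formula."""
    n = len(points)
    twice_area = sum(
        points[i][0] * points[(i + 1) % n][1]
        - points[(i + 1) % n][0] * points[i][1]
        for i in range(n)
    )
    return abs(twice_area) / 2


# Hypothetical outline of an irregular road sign, in pixel coordinates.
outline = [(10, 10), (50, 10), (60, 40), (30, 60), (5, 40)]

annotation = {
    "label": "road_sign",
    "polygon": outline,
    "bbox": polygon_to_bbox(outline),
}
bbox_area = annotation["bbox"][2] * annotation["bbox"][3]
shape_area = polygon_area(outline)
```

Here the box covers 2750 square pixels while the polygon covers 1975, so more than a quarter of the box is background. That gap is why polygons or segmentation masks are preferred when precise object edges matter.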

Popular Image Annotation Tools

Image annotation can be executed utilizing a diverse array of platforms and tools. Notable examples include:

LabelImg: An open-source tool designed for bounding box annotations,

CVAT: A web-based application created by Intel for intricate tasks such as segmentation and tracking,

SuperAnnotate: A robust tool that merges annotation and collaboration functionalities,

Labelbox: A comprehensive platform featuring AI-assisted labeling, data management, and analytics,

VGG Image Annotator (VIA): A lightweight tool developed by Oxford for efficient annotations.

Prominent annotation service providers like GTS.AI frequently employ a blend of proprietary tools and enterprise solutions, seamlessly integrated with quality assurance workflows to fulfill client-specific needs.

Real-World Use Cases of Image Annotation

Image annotation services play a vital role in various sectors, including:

Autonomous Vehicles

Object detection for identifying pedestrians, vehicles, traffic signs, and road markings.

Lane detection and semantic segmentation to facilitate real-time navigation.

Healthcare

Annotating medical images such as X-rays and MRIs to identify tumors, fractures, or other abnormalities.

Training diagnostic tools to enhance early disease detection.

Retail and E-commerce:

Implementing product identification and classification for effective inventory management.

Monitoring customer behavior through in-store camera analytics.

Agriculture:

Assessing crop health, detecting pests, and identifying weeds using drone imagery.

Forecasting yield and optimizing resource allocation.

Geospatial Intelligence:

Classifying land use and mapping infrastructure.

Utilizing annotated satellite imagery for disaster response.

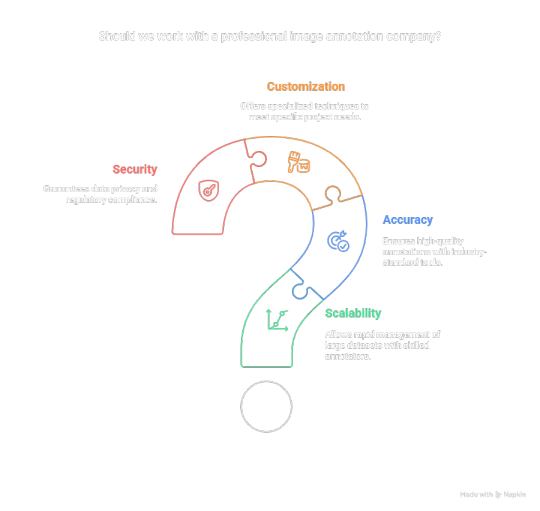

Why Work with a Professional Image Annotation Company?

Although in-house annotation may initially appear feasible, expanding it necessitates significant time, resources, and a comprehensive quality assurance process. This is the reason businesses collaborate with providers such as GTS.AI:

Scalability: Skilled annotation teams can process extensive datasets quickly.

Accuracy: Industry-standard tools and quality assurance measures keep labels accurate.

Customization: Providers offer specialized annotation techniques to meet specific project objectives.

Security: Providers adhere to data privacy regulations when handling sensitive data.

Final Thoughts

With the expansion of AI and computer vision applications, the necessity for high-quality annotated data has become increasingly vital. Image annotation services have evolved from being merely supportive functions to becoming strategic assets essential for developing dependable AI systems. If you aim to enhance your AI projects through professional data labeling, consider Globose Technology Solution .AI’s Image and Video Annotation Services to discover how they can effectively assist you in achieving your objectives with accuracy, efficiency, and scalability.


Image Annotation Services

Image annotation services play a crucial role in training AI and machine learning models by accurately labeling visual data. These services involve tagging images with relevant information to help algorithms recognize objects, actions, or environments. High-quality image annotation services ensure better model performance in autonomous driving, facial recognition, and medical imaging applications. Whether it’s bounding boxes, polygons, or semantic segmentation, precise annotations are essential for AI accuracy. Partnering with expert providers guarantees scalable and reliable image labeling solutions.


$100K Data Annotation Jobs From Home!

Data annotation, huh? It sounds a bit dry at first, doesn't it? But let me tell you, it's one of those hidden gems in the data science world that can open up a whole new realm of opportunities, especially if you're diving into machine learning and AI. So, let's break it down, shall we?

First off, what is data annotation? In simple terms, it's the process of labeling or tagging data (think text, images, videos, or audio) so that machine learning models can actually make sense of it. It's like teaching a child to recognize a dog by showing them pictures and saying, "This is a dog."

There are different types of annotation, too. With images, you might be drawing bounding boxes around objects, segmenting parts of the image, or detecting specific items. When it comes to text, you could be diving into sentiment analysis, identifying named entities, or tagging parts of speech. And don't even get me started on audio and video annotations: those involve transcribing sounds, identifying speakers, tracking objects, and even recognizing actions. It's a whole universe of data waiting to be explored!

Now, once you've wrapped your head around what data annotation is, it's time to get your hands dirty with some tools. There are some fantastic annotation tools out there. For instance, Labelbox is great for handling images, videos, and texts, while Supervisely focuses on image annotation with deep learning features. Prodigy is a go-to for text annotation, especially if you're into natural language processing. And if you're looking to annotate images or videos, VoTT is a solid choice. The best part? Many of these tools offer free versions or trials, so you can practice without breaking the bank.

Speaking of practice, let's talk about online courses and tutorials. Platforms like DataCamp and Coursera have a treasure trove of courses on data annotation and supervised learning. If you're on a budget, Udemy is your friend, offering affordable courses that cover various data labeling techniques. And don't overlook Kaggle; they have micro-courses that touch on data preparation and annotation practices. This is where you can really build your skills and confidence.


YOLO vs. Embeddings: A comparison between two object detection models

YOLO-Based Detection Model

Type: Object detection

Method: YOLO is a single-stage object detection model that divides the image into a grid and predicts bounding boxes, class labels, and confidence scores in a single pass.

Output: Bounding boxes with class labels and confidence scores.

Use Case: Ideal for real-time applications like autonomous vehicles, surveillance, and robotics.

Example Models: YOLOv3, YOLOv4, YOLOv5, YOLOv8

Architecture

YOLO processes an image in a single forward pass of a CNN.

The image is divided into a grid of cells (e.g., 13×13 for YOLOv3 at 416×416 resolution).

Each cell predicts bounding boxes, class labels, and confidence scores.

Uses anchor boxes to handle different object sizes.

Outputs a tensor of shape [S, S, B*(5+C)] where:

S = number of grid cells per side (e.g., 13 for a 13×13 grid)

B = number of anchor boxes per grid cell

C = number of object classes

5 = (x, y, w, h, confidence)

Training Process

Loss Function: Combination of localization loss (bounding box regression), confidence loss, and classification loss.

Labels: Requires annotated datasets with labeled bounding boxes (e.g., COCO, Pascal VOC).

Optimization: Typically uses SGD or Adam with a backbone CNN like CSPDarknet (in YOLOv4/v5).

Inference Process

Input image is resized (e.g., 416×416).

A single forward pass through the model.

Non-Maximum Suppression (NMS) filters overlapping bounding boxes.

Outputs detected objects with bounding boxes.

Strengths

Fast inference due to a single forward pass.

Works well for real-time applications (e.g., autonomous driving, security cameras).

Good performance on standard object detection datasets.

Weaknesses

Struggles with overlapping objects (compared to two-stage models like Faster R-CNN).

Fixed number of anchor boxes may not generalize well to all object sizes.

Needs retraining for new classes.

Embeddings-Based Detection Model

Type: Feature-based detection

Method: Instead of directly predicting bounding boxes, embeddings-based models generate a high-dimensional representation (embedding vector) for objects or regions in an image. These embeddings are then compared against stored embeddings to identify objects.

Output: A similarity score (e.g., cosine similarity) that determines if an object matches a known category.

Use Case: Often used for tasks like face recognition (e.g., FaceNet, ArcFace), anomaly detection, object re-identification, and retrieval-based detection where object categories might not be predefined.

Example Models: FaceNet, DeepSORT (for object tracking), CLIP (image-text matching)

Architecture

Uses a deep feature extraction model (e.g., ResNet, EfficientNet, Vision Transformers).

Instead of directly predicting bounding boxes, it generates a high-dimensional feature vector (embedding) for each object or image.

The embeddings are stored in a vector database or compared using similarity metrics.

Training Process

Uses contrastive learning or metric learning. Common loss functions:

Triplet Loss: Forces similar objects to be closer and different objects to be farther in embedding space.

Cosine Similarity Loss: Maximizes similarity between identical objects.

Center Loss: Pulls each embedding toward its class center, reducing variation within a class.

Training datasets can be either:

Labeled (e.g., with identity labels for face recognition).

Self-supervised (e.g., CLIP uses image-text pairs).

Inference Process

Extract embeddings from a new image using a CNN or transformer.

Compare embeddings with stored vectors using cosine similarity or Euclidean distance.

If similarity is above a threshold, the object is recognized.

Strengths

Scalable: New objects can be added without retraining.

Better for recognition tasks: Works well for face recognition, product matching, anomaly detection.

Works without predefined classes (zero-shot learning).

Weaknesses

Requires a reference database of embeddings.

Not good for real-time object detection (doesn’t predict bounding boxes directly).

Can struggle with hard negatives (objects that look similar but are different).
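To ground the YOLO side of the comparison, here is a small dependency-free sketch of two pieces described above: the output tensor shape [S, S, B*(5+C)] and greedy Non-Maximum Suppression. It is a simplified illustration, not the code of any particular YOLO release. Boxes are assumed to be `(x1, y1, x2, y2)` corner tuples for a single class, and the 0.5 IoU threshold and sample detections are illustrative choices.

```python
def yolo_output_shape(grid_size, num_anchors, num_classes):
    """Shape [S, S, B*(5+C)]: 5 = x, y, w, h, confidence per anchor box."""
    return (grid_size, grid_size, num_anchors * (5 + num_classes))


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections, iou_threshold=0.5):
    """Greedy NMS over (box, score) pairs belonging to one class."""
    remaining = sorted(detections, key=lambda d: d[1], reverse=True)
    kept = []
    while remaining:
        best = remaining.pop(0)  # highest-scoring box still in play
        kept.append(best)
        # Drop every box that overlaps the kept one too heavily.
        remaining = [d for d in remaining if iou(best[0], d[0]) < iou_threshold]
    return kept


shape = yolo_output_shape(grid_size=13, num_anchors=3, num_classes=80)

detections = [
    ((10, 10, 110, 110), 0.9),
    ((12, 12, 112, 112), 0.8),    # near-duplicate of the first box
    ((200, 200, 260, 260), 0.7),  # a separate object
]
kept = non_max_suppression(detections)
```

With 3 anchors and 80 classes, each grid cell carries 3 × 85 = 255 values. In the NMS example the second box overlaps the first with an IoU of about 0.92, so it is suppressed and two detections remain.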

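The embeddings workflow can be sketched just as compactly: compare a query vector against a reference database with cosine similarity, accept the best match only above a threshold, and score training triplets with a triplet loss. The three-dimensional vectors, the labels, the 0.8 threshold, and the 0.2 margin below are all illustrative assumptions; real embeddings typically have hundreds of dimensions and come from a trained network.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def match(query, reference_db, threshold=0.8):
    """Return (label, score) of the best match, or (None, score) if too weak."""
    best_label, best_score = None, -1.0
    for label, embedding in reference_db.items():
        score = cosine_similarity(query, embedding)
        if score > best_score:
            best_label, best_score = label, score
    if best_score >= threshold:
        return best_label, best_score
    return None, best_score


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge on squared distances: pull positives in, push negatives out."""
    def sq_dist(u, v):
        return sum((a - b) ** 2 for a, b in zip(u, v))
    return max(0.0, sq_dist(anchor, positive) - sq_dist(anchor, negative) + margin)


# Hypothetical reference database of stored embeddings.
reference_db = {"mug": [0.9, 0.1, 0.0], "lamp": [0.0, 0.2, 0.95]}

known, _ = match([0.8, 0.2, 0.05], reference_db)
unknown, _ = match([0.5, 0.5, 0.5], reference_db)

# Adding a new category is a dictionary insert, with no retraining.
reference_db["chair"] = [0.5, 0.5, 0.5]
added, _ = match([0.5, 0.5, 0.5], reference_db)

easy = triplet_loss([0.0, 0.0], [0.1, 0.0], [1.0, 0.0])
hard = triplet_loss([0.0, 0.0], [0.1, 0.0], [0.2, 0.0])
```

The second query falls below the threshold for every stored entry and is rejected until a matching embedding is added, which is the "scalable without retraining" property in miniature. The `hard` triplet, whose negative sits almost as close as the positive, yields a non-zero loss, illustrating why hard negatives are the difficult case.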


Video Annotation Services: Enhancing AI with Superior Training Data

Introduction:

Artificial intelligence (AI) and machine learning (ML) depend on extensive high-quality datasets to boost their precision and effectiveness. A vital aspect of this endeavor is Video Annotation Services, a method employed to label and categorize various objects, actions, and events within video content. By supplying AI models with meticulously annotated video data, organizations can refine their AI solutions, rendering them more intelligent and dependable.

What is Video Annotation?

Video annotation refers to the process of appending metadata to video frames to facilitate the training of AI and ML algorithms. This process includes tagging objects, monitoring movements, and supplying contextual information that aids AI systems in comprehending and interpreting visual data. It is crucial for various applications, including autonomous driving, medical imaging, security surveillance, and beyond.

The Importance of High-Quality Training Data

The performance of AI models is significantly influenced by the quality of the data utilized for training. Inaccurately labeled or subpar data can result in erroneous predictions and unreliable AI outcomes. High-quality video annotation guarantees that AI models can:

Precisely identify and categorize objects.

Monitor movements and interactions within a scene.

Enhance real-time decision-making abilities.

Minimize errors and reduce false positives.

Essential Video Annotation Techniques

Bounding Boxes – These are utilized to outline objects within a video frame using rectangular shapes.

Semantic Segmentation – This technique involves labeling each pixel in a frame to achieve precise object identification.

Polygon Annotation – This method creates accurate boundaries around objects with irregular shapes.

Keypoint and Landmark Annotation – This identifies specific points on objects, facilitating facial recognition and pose estimation.

3D Cuboid Annotation – This technique incorporates depth information for artificial intelligence models applied in robotics and augmented/virtual reality environments.

The Role of Video Annotation Services in Advancing AI Applications

Autonomous Vehicles

Video annotation plays a vital role in training autonomous vehicles to identify pedestrians, other vehicles, traffic signals, and road signs.

Healthcare and Medical Imaging

AI-driven diagnostic tools depend on video annotation to identify irregularities in medical scans and to observe patient movements.

Security and Surveillance

AI-enhanced surveillance systems utilize annotated videos to recognize suspicious behavior, monitor individuals, and improve facial recognition capabilities.

Retail and Customer Analytics

Retailers employ video annotation to study customer behavior, monitor foot traffic, and enhance store layouts.

Reasons to Choose Professional Video Annotation Services

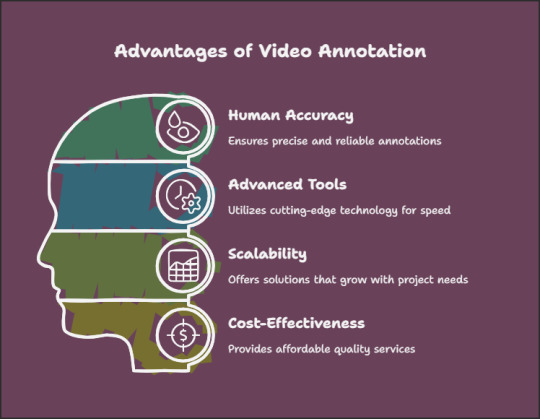

Engaging expert video annotation services offers several advantages:

Enhanced Accuracy – Skilled annotators deliver meticulous data labeling, minimizing errors during AI training.

Scalability – Professional services are equipped to manage extensive datasets with ease.

Cost Efficiency – Outsourcing annotation tasks conserves time and resources by negating the necessity for internal annotation teams.

Tailored Solutions – Customized annotation methods designed for specific sectors and AI applications.

Your Reliable Partner for Video Annotation

We offer premier video annotation services aimed at equipping AI with high-quality training data. Our expert team guarantees precise and scalable annotations across diverse industries, assisting businesses in developing more intelligent AI models.

Why Select Our Video Annotation Services

Proficient human annotators ensuring accuracy.

State-of-the-art annotation tools for expedited processing.

Scalable solutions customized to meet your project requirements.

Affordable pricing without sacrificing quality.

In Summary

Video annotation services are fundamental to AI training, ensuring that models are trained on high-quality, accurately labeled data. Whether your focus is on autonomous systems, healthcare AI, or security applications, investing in professional video annotation services like those offered by Globose Technology Solutions will significantly improve the accuracy and effectiveness of your AI solutions.


Powering the AI Revolution Starts with Wisepl Where Intelligence Meets Precision

Wisepl specializes in high-quality data labeling services that serve as the backbone of every successful AI model. From autonomous vehicles to agriculture, healthcare to NLP - we annotate with accuracy, speed, and integrity.

🔹 Manual & Semi-Automated Labeling 🔹 Bounding Boxes | Polygons | Keypoints | Segmentation 🔹 Image, Video, Text, and Audio Annotation 🔹 Multilingual & Domain-Specific Expertise 🔹 Industry-Specific Use Cases: Medical, Legal, Automotive, Drones, Retail

India-based. Globally Trusted. AI-Focused.

Let your AI see the world clearly - through Wisepl’s eyes.

Ready to scale your AI training? Contact us now at www.wisepl.com or [email protected].

Because every smart machine needs smart data.

#Wisepl#DataAnnotation#AITrainingData#MachineLearning#ArtificialIntelligence#DataLabeling#ComputerVision#NLP#DeepLearning#IndianAI#TechKerala#StartupIndia#AIDevelopment#VisionAI#SmartDataSmartAI#PrecisionLabeling#WiseplAI#AIProjects#GlobalAnnotationPartner


Why Image Data Annotation Matters in Machine Learning

Introduction

In the age of artificial intelligence (AI) and machine learning (ML), the capability of machines to perceive and comprehend visual data has revolutionized various sectors, including healthcare, autonomous driving, retail, and agriculture. For machines to accurately recognize and interpret images, they must be trained on meticulously labeled datasets. This necessity highlights the significance of image data annotation.

Image Data Annotation involves the process of assigning labels to visual data, such as images and videos, by incorporating relevant metadata to facilitate the training of machine learning models. By tagging objects, delineating boundaries, and categorizing elements within an image, annotators lay the foundation for computer vision systems to identify and classify objects, recognize patterns, and make informed decisions based on visual information.

This blog will delve into the intricacies of image data annotation, examining its significance, methodologies, tools, challenges, and applications. By the conclusion, you will possess a thorough understanding of how image annotation underpins contemporary AI and computer vision models.

What is Image Data Annotation?

Image data annotation is the practice of labeling and tagging images with pertinent information, enabling machine learning models to learn effectively. The annotated data acts as the ground truth, assisting models in recognizing patterns and enhancing their accuracy over time.

For example, an autonomous vehicle depends on annotated images to differentiate between pedestrians, vehicles, traffic signs, and other objects encountered on the road. Likewise, medical AI models utilize annotated MRI scans to detect tumors or other irregularities.

Key Components of Image Annotation:

Object Identification – Recognizing and marking objects within an image.

Classification – Assigning labels to objects (e.g., "cat," "dog," "car").

Localization – Indicating the position of objects using bounding boxes or other markers.

Segmentation – Defining the boundaries of objects at the pixel level.
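To make these four components concrete, here is a minimal sketch of how one annotated image might be stored. The class names `ObjectAnnotation` and `ImageAnnotation`, the field names, and the sample file name are illustrative assumptions, not a standard schema; real projects usually follow an established format such as COCO or Pascal VOC.

```python
from dataclasses import dataclass, field


@dataclass
class ObjectAnnotation:
    """One identified object in an image (hypothetical schema)."""
    label: str                                # classification: the class name
    bbox: tuple[float, float, float, float]   # localization: (x, y, width, height) in pixels
    polygon: list[tuple[float, float]] = field(default_factory=list)  # segmentation outline, if any


@dataclass
class ImageAnnotation:
    """All annotations that belong to a single image."""
    file_name: str
    width: int
    height: int
    objects: list[ObjectAnnotation] = field(default_factory=list)

    def labels(self) -> list[str]:
        # Sorted list of the distinct class names present in the image.
        return sorted({obj.label for obj in self.objects})


example = ImageAnnotation(
    file_name="street_001.jpg",
    width=640,
    height=480,
    objects=[
        ObjectAnnotation("car", (100, 200, 150, 80)),
        ObjectAnnotation(
            "pedestrian",
            (300, 180, 40, 110),
            polygon=[(300, 180), (340, 180), (340, 290), (300, 290)],
        ),
    ],
)

print(example.labels())
```

Keeping the label, box, and outline together per object makes it straightforward to export the same record to whichever format a training framework expects.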

Significance of Image Data Annotation

Precise image annotation is vital for the development of high-performing AI models. Machine learning algorithms depend on extensive amounts of high-quality annotated data to discern patterns and generate accurate predictions.

Reasons for the Critical Nature of Image Data Annotation:

Model Precision: The accuracy of image annotations directly influences the model's capability to identify objects and make predictions effectively.

Supervised Learning: The majority of computer vision models operate on supervised learning principles, necessitating labeled datasets.

Bias Mitigation: Proper annotation contributes to the creation of balanced and unbiased datasets, thereby avoiding distorted outcomes.

Real-World Performance: High-quality annotations enhance a model’s functionality in intricate environments, such as those with poor lighting or crowded settings.

Improved Automation: Accurate data enables AI systems to perform automated tasks, including medical diagnostics, facial recognition, and traffic surveillance.

Methods of Image Data Annotation

Various techniques exist for annotating image data, each tailored to specific tasks and model needs.

A. Classification

In this approach, an entire image is assigned a single label.

Example: Designating an image as "cat" or "dog."

B. Object Detection

This technique focuses on identifying and locating objects within an image through the use of bounding boxes.

Example: Enclosing a car within a box in a street scene.
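Bounding boxes are written down in several conventions, and mixing them up is a common source of labeling bugs. The sketch below converts between three widely used layouts: top-left plus size (as in COCO), corner coordinates (as in Pascal VOC), and normalized center plus size (as in YOLO label files). The function names and the sample box are assumptions chosen for illustration.

```python
def xywh_to_xyxy(box):
    """(x, y, width, height) -> (x_min, y_min, x_max, y_max)."""
    x, y, w, h = box
    return (x, y, x + w, y + h)


def xyxy_to_yolo(box, img_w, img_h):
    """Corner box in pixels -> (center_x, center_y, width, height), each divided by image size."""
    x_min, y_min, x_max, y_max = box
    center_x = (x_min + x_max) / 2 / img_w
    center_y = (y_min + y_max) / 2 / img_h
    return (center_x, center_y, (x_max - x_min) / img_w, (y_max - y_min) / img_h)


# A car enclosed in a box within a 640x480 street scene.
car_xywh = (100, 200, 150, 80)
car_xyxy = xywh_to_xyxy(car_xywh)
car_yolo = xyxy_to_yolo(car_xyxy, 640, 480)

print(car_xyxy)
print(car_yolo)
```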

C. Semantic Segmentation

This method entails labeling each pixel in an image according to its class.

Example: Distinguishing between the road, sidewalk, and vehicles in a traffic scene.

D. Instance Segmentation

Like semantic segmentation, this method labels every pixel, but it additionally differentiates between individual objects of the same class.

Example: Identifying separate cars in a traffic image.
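The difference between the two segmentation styles is easiest to see on a tiny mask. In this sketch the grids and the class IDs are made up for illustration: the semantic mask gives both cars the same value, while the instance mask gives each car its own ID.

```python
# Semantic mask: 0 = road/background, 1 = car (every car pixel shares one class ID).
semantic_mask = [
    [0, 1, 1, 0, 1, 1],
    [0, 1, 1, 0, 1, 1],
    [0, 0, 0, 0, 0, 0],
]

# Instance mask: 0 = background, 1 = first car, 2 = second car.
instance_mask = [
    [0, 1, 1, 0, 2, 2],
    [0, 1, 1, 0, 2, 2],
    [0, 0, 0, 0, 0, 0],
]


def pixel_counts(mask):
    """Count how many pixels carry each ID in a 2D mask."""
    counts = {}
    for row in mask:
        for value in row:
            counts[value] = counts.get(value, 0) + 1
    return counts


semantic_counts = pixel_counts(semantic_mask)
instance_counts = pixel_counts(instance_mask)

print(semantic_counts)
print(instance_counts)
```

The semantic mask can report how much of the scene is "car", but only the instance mask can report how many cars there are.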

E. Landmark Annotation

This involves marking specific points of interest within an image.

Example: Annotating key facial points for facial recognition purposes.

F. Polygonal Annotation

This technique outlines complex shapes using polygons for enhanced accuracy.

Example: Annotating irregularly shaped objects such as trees or human figures.
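A polygon annotation is just an ordered list of vertices, so useful quantities can be derived from it directly. The sketch below computes the enclosed area with the shoelace formula and the tightest axis-aligned box around the shape. The five-point "tree" outline is an invented example.

```python
def polygon_area(points):
    """Area of a simple polygon via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


def polygon_to_bbox(points):
    """Tightest axis-aligned box around the polygon: (x_min, y_min, x_max, y_max)."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (min(xs), min(ys), max(xs), max(ys))


tree_outline = [(2, 0), (4, 3), (3, 6), (1, 6), (0, 3)]
tree_area = polygon_area(tree_outline)
tree_bbox = polygon_to_bbox(tree_outline)
bbox_area = (tree_bbox[2] - tree_bbox[0]) * (tree_bbox[3] - tree_bbox[1])

print(tree_area, tree_bbox, bbox_area)
```

Here the polygon covers 15 square units while its bounding box covers 24, which illustrates how much background a box can include for an irregular shape.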

Tools for Image Data Annotation

A variety of tools have been created to facilitate the image annotation process. These tools incorporate features such as automation, collaboration, and AI-enhanced annotation to improve both accuracy and efficiency.

Notable Image Annotation Tools:

LabelImg – An open-source application designed for creating bounding boxes.

CVAT (Computer Vision Annotation Tool) – A robust tool suitable for annotating both video and image data.

VGG Image Annotator (VIA) – A lightweight and user-friendly annotation tool.

Labelbox – A cloud-based solution that supports scalable annotation efforts.

SuperAnnotate – An AI-enhanced annotation platform equipped with sophisticated automation capabilities.

Amazon SageMaker Ground Truth – A managed annotation service that combines automation with human oversight.

Roboflow – A comprehensive platform for organizing and annotating datasets.

Challenges in Image Data Annotation

In spite of the progress made in annotation tools and methodologies, several challenges remain:

A. Scale and Volume

Extensive datasets necessitate considerable time and resources for annotation.

Crowdsourcing or AI-assisted methods are frequently employed to handle large-scale projects.

B. Quality and Consistency

Maintaining consistency across annotations is essential for optimal model performance.

Human errors and ambiguities can adversely affect quality.
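One common way to quantify consistency between two annotators who boxed the same object is intersection over union (IoU). The following is a minimal sketch; the function name, the corner-coordinate box layout, and the sample boxes are assumptions for illustration, and any acceptance threshold would be a project-specific choice.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Overlap width and height are clamped at zero when the boxes do not intersect.
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


annotator_a = (0, 0, 10, 10)
annotator_b = (5, 0, 15, 10)
agreement = iou(annotator_a, annotator_b)

print(round(agreement, 3))
```

A value of 1.0 means the two boxes coincide exactly and 0.0 means they do not overlap at all, so low scores can be used to flag objects for a second review.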

C. Class Imbalance

Disproportionate representation of various classes can result in biased models.

Meticulous dataset curation and balancing are imperative.
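A quick check for imbalance is to count labels and derive per-class weights that are inversely proportional to frequency. This sketch uses the common "balanced" heuristic, total / (number of classes × class count); the function name and the sample labels are assumptions, and re-weighting is only one of several possible remedies alongside collecting more data for rare classes.

```python
from collections import Counter


def class_weights(labels):
    """Inverse-frequency weights: rarer classes receive larger weights."""
    counts = Counter(labels)
    total = len(labels)
    n_classes = len(counts)
    return {cls: total / (n_classes * n) for cls, n in counts.items()}


# A skewed set of labels: 8 cars for every 2 pedestrians.
labels = ["car"] * 8 + ["pedestrian"] * 2
weights = class_weights(labels)

print(Counter(labels))
print(weights)
```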

D. Edge Cases and Complexity

Annotating complex objects, overlapping items, and occlusions presents significant challenges.

Advanced methods such as polygonal annotation and instance segmentation are employed to tackle these issues.

E. Cost and Time

Manual annotation is both costly and time-intensive.

While automation and AI-assisted annotation can lower expenses, they may also risk compromising accuracy.
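One way to balance that trade-off is to accept machine-generated labels only when the model is confident and send the rest to human annotators. The sketch below assumes each pre-label is a dictionary with a `confidence` score between 0 and 1; the field names, the 0.9 threshold, and the sample records are illustrative assumptions rather than values from any particular tool.

```python
def route_prelabels(prelabels, threshold=0.9):
    """Split model-generated labels into auto-accepted and human-review queues."""
    auto_accepted, needs_review = [], []
    for item in prelabels:
        if item["confidence"] >= threshold:
            auto_accepted.append(item)
        else:
            needs_review.append(item)
    return auto_accepted, needs_review


prelabels = [
    {"image": "img_001.jpg", "label": "car", "confidence": 0.97},
    {"image": "img_002.jpg", "label": "pedestrian", "confidence": 0.62},
    {"image": "img_003.jpg", "label": "traffic sign", "confidence": 0.91},
]
auto_accepted, needs_review = route_prelabels(prelabels)

print([item["image"] for item in auto_accepted])
print([item["image"] for item in needs_review])
```

Raising the threshold sends more items to people, which costs more but lowers the chance that a wrong machine label slips into the training set.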

Applications of Image Data Annotation

Image data annotation has significantly impacted various sectors, facilitating innovative AI applications:

A. Autonomous Vehicles

Utilization of object detection and segmentation for identifying traffic elements, pedestrians, and road signs.

B. Healthcare

Analysis of medical images to detect diseases and abnormalities.

C. Retail

Implementation of product recognition and inventory management through computer vision technologies.

D. Agriculture

Monitoring of crops and detection of diseases via drone imagery.

E. Security and Surveillance

Application of facial recognition and anomaly detection within security systems.

Future of Image Data Annotation

The trajectory of image annotation will be influenced by:

AI-Driven Automation – Advanced AI models will take on more intricate annotation tasks.

Crowdsourcing and Gamification – Involving contributors through engaging, gamified platforms.

Synthetic Data Growth – An increased dependence on computer-generated datasets.

Edge Computing – Facilitating real-time annotation at the edge for expedited processing.

Conclusion

Image data annotation serves as the foundation for contemporary computer vision and artificial intelligence applications at Globose Technology Solutions. The availability of high-quality annotated data empowers machines to identify objects, comprehend environments, and make informed decisions. Despite ongoing challenges related to scalability and consistency, progress in AI-assisted annotation and the generation of synthetic data is reshaping the field. By adhering to best practices and utilizing automation, organizations can develop strong computer vision models that foster innovation and enhance efficiency across various sectors.


How Data Annotation Companies Improve AI Model Accuracy

Introduction:

In the rapidly advancing domain of artificial intelligence (AI), the accuracy of models is a pivotal element that influences the effectiveness of applications across various sectors, including autonomous driving, medical imaging, security surveillance, and e-commerce. A significant contributor to achieving high AI accuracy is the presence of well-annotated data. Data annotation companies are essential in this process, as they ensure that machine learning (ML) models are trained on precise and high-quality datasets.

The Significance of Data Annotation for AI

AI models, particularly those utilizing computer vision and natural language processing (NLP), necessitate extensive amounts of labeled data to operate efficiently. In the absence of accurately annotated images and videos, AI models face challenges in distinguishing objects, recognizing patterns, or making informed decisions. Data annotation companies create structured datasets by labeling images, videos, text, and audio, thereby enabling AI to learn from concrete examples.

How Data Annotation Companies Enhance AI Accuracy

Delivering High-Quality Annotations

The precision of an AI model is closely linked to the quality of annotations within the training dataset. Reputable data annotation companies utilize sophisticated annotation methods such as bounding boxes, semantic segmentation, key point annotation, and 3D cuboids to ensure accurate labeling. By minimizing errors and inconsistencies in the annotations, these companies facilitate the attainment of superior accuracy in AI models.

Utilizing Human Expertise and AI-Assisted Annotation

Numerous annotation firms integrate human intelligence with AI-assisted tools to boost both efficiency and accuracy. Human annotators are tasked with complex labeling assignments, such as differentiating between similar objects or grasping contextual nuances, while AI-driven tools expedite repetitive tasks, thereby enhancing overall productivity.

Managing Extensive and Varied Data

AI models require a wide range of datasets to effectively generalize across various contexts. Data annotation firms assemble datasets from multiple sources, ensuring a blend of ethnic backgrounds, lighting conditions, object differences, and scenarios. This variety is essential for mitigating AI biases and enhancing the robustness of models.

Maintaining Consistency in Annotations

Inconsistent labeling can adversely affect the performance of an AI model. Annotation companies employ rigorous quality control measures, inter-annotator agreements, and review processes to ensure consistent labeling throughout datasets. This consistency is crucial to prevent AI models from being misled by conflicting labels during the training phase.
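Inter-annotator agreement on class labels is often summarized with Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The following is a minimal sketch for two annotators; the function name and the sample labels are assumptions, and the source does not say which agreement statistic any given company uses.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set of items.")
    n = len(labels_a)
    # Observed agreement: fraction of items where both chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's label frequencies, summed over classes.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


annotator_a = ["cat", "cat", "dog", "dog"]
annotator_b = ["cat", "dog", "dog", "dog"]
kappa = cohens_kappa(annotator_a, annotator_b)

print(kappa)
```

In this example the annotators agree on 3 of 4 items (0.75), chance agreement is 0.5, and kappa comes out at 0.5.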

Scalability and Accelerated Turnaround

AI initiatives frequently necessitate large datasets that require prompt annotation. Data annotation firms utilize workforce management systems and automated tools to efficiently manage extensive projects, enabling AI developers to train models more rapidly without sacrificing quality.

Adherence to Data Security Regulations

In sectors such as healthcare, finance, and autonomous driving, data security is paramount. Reputable annotation companies adhere to GDPR, HIPAA, and other security regulations to safeguard sensitive information, ensuring that AI models are trained with ethically sourced, high-quality datasets.

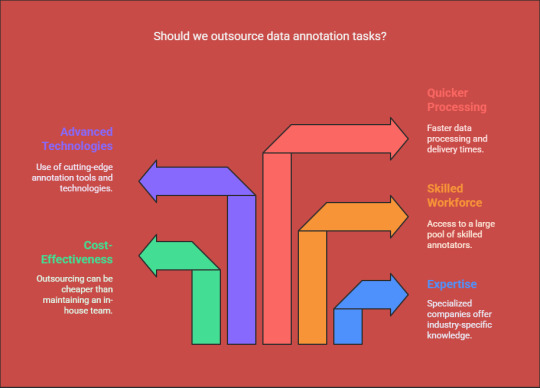

Reasons to opt for a Professional Data Annotation Company

Outsourcing annotation tasks to a specialized company offers several benefits over relying on in-house teams:

Expertise in specific industry annotations

Cost-effective alternatives to establishing an internal annotation team

Access to a skilled workforce and advanced annotation technologies

Quicker data processing and delivery

Conclusion

Data annotation companies such as Globose Technology Solutions are essential for enhancing the accuracy of AI models through the provision of high-quality, meticulously labeled training datasets. Their proficiency in managing extensive, varied, and intricate annotations greatly boosts model performance, thereby increasing the reliability and effectiveness of AI applications. Collaborating with a professional annotation service provider enables businesses to expedite their AI development while maintaining optimal precision and efficiency.


Data Collection Through Images: Techniques and Tools

Introduction

In the contemporary landscape driven by data, image-based data collection has emerged as a fundamental element across numerous sectors, including artificial intelligence (AI), healthcare, retail, and security. The evolution of computer vision and deep learning technologies has made the gathering and processing of image data increasingly essential. This article delves into the primary methodologies and tools employed in image data collection, as well as their significance in current applications.

The Importance of Image Data Collection

Image data is crucial for:

Training AI Models: Applications in computer vision, such as facial recognition, object detection, and medical imaging, are heavily reliant on extensive datasets.

Automation & Robotics: Technologies like self-driving vehicles, drones, and industrial automation systems require high-quality image datasets to inform their decision-making processes.

Retail & Marketing: Analyzing customer behavior and enabling visual product recognition utilize image data for enhanced personalization and analytics.

Healthcare & Biometric Security: Image-based datasets are essential for accurate medical diagnoses and identity verification.

Methods for Image Data Collection

1. Web Scraping & APIs

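Web scraping is a prevalent technique for collecting image data, involving the use of scripts to extract images from various websites. Additionally, photo-hosting services such as Flickr expose APIs that give programmatic access to large image collections. Google Cloud Vision and OpenCV, by contrast, analyze and process images rather than supply them, so they are better suited to filtering and preparing images after collection.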
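The core of an image scraper is pulling image links out of a page. The sketch below does this with Python's standard library only and parses a hard-coded HTML string instead of making a network request; the class name `ImageLinkParser`, the sample markup, and the example.com URLs are all assumptions for illustration. A real collector would also need to download the files and respect each site's terms of use and robots.txt.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImageLinkParser(HTMLParser):
    """Collect absolute URLs from the src attribute of <img> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.image_urls = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            src = dict(attrs).get("src")
            if src:
                # Resolve relative paths against the URL of the page.
                self.image_urls.append(urljoin(self.base_url, src))


page = (
    '<html><body>'
    '<img src="/cats/1.jpg">'
    '<img src="https://cdn.example.com/dog.png">'
    '<p>no image here</p>'
    '</body></html>'
)
parser = ImageLinkParser("https://example.com/gallery/")
parser.feed(page)

print(parser.image_urls)
```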

2. Manual Image Annotation

The process of manually annotating images is a critical method for training machine learning models. This includes techniques such as bounding boxes, segmentation, and keypoint annotations.

3. Crowdsourcing

Services such as Amazon Mechanical Turk and Figure Eight facilitate the gathering and annotation of extensive image datasets through human input.

4. Synthetic Data Generation

In situations where real-world data is limited, images generated by artificial intelligence can be utilized to produce synthetic datasets for training models.
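A useful property of synthetic data is that the label comes for free, because the generator knows where it placed each object. The toy sketch below draws one bright rectangle on a blank grayscale grid and returns the matching bounding box. It uses simple procedural drawing rather than an AI image generator, and the function name, image size, and pixel values are assumptions for illustration.

```python
import random


def make_synthetic_sample(width, height, rng):
    """Return a grayscale image (list of rows) with one rectangle and its (x, y, w, h) box."""
    box_w = rng.randint(2, width // 2)
    box_h = rng.randint(2, height // 2)
    x = rng.randint(0, width - box_w)
    y = rng.randint(0, height - box_h)
    image = [[0] * width for _ in range(height)]
    for row in range(y, y + box_h):
        for col in range(x, x + box_w):
            image[row][col] = 255  # paint the object pixels
    return image, (x, y, box_w, box_h)


rng = random.Random(0)  # fixed seed so the sample is reproducible
image, bbox = make_synthetic_sample(16, 12, rng)
object_pixels = sum(value == 255 for row in image for value in row)

print(bbox, object_pixels)
```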

5. Sensor & Camera-Based Collection

Sectors such as autonomous driving and surveillance utilize high-resolution cameras and LiDAR sensors to gather image data in real-time.

Tools for Image Data Collection

1. LabelImg

A widely utilized open-source tool for bounding box annotation, particularly effective for object detection models.

2. Roboflow

A robust platform designed for the management, annotation, and preprocessing of image datasets.

3. OpenCV

A well-known computer vision library that facilitates the processing and collection of image data in real-time applications.

4. SuperAnnotate

A collaborative annotation platform tailored for AI teams engaged in image and video dataset projects.

5. ImageNet & COCO Dataset

Established large-scale datasets that offer a variety of image collections for training artificial intelligence models.

Where to Obtain High-Quality Image Datasets

For those in search of a dataset for face detection, consider utilizing the Face Detection Dataset. This resource is specifically crafted to improve AI models focused on facial recognition and object detection.

How GTS.ai Facilitates Image Data Collection for Your Initiative

GTS.ai offers premium datasets and tools that streamline the process of image data collection, enabling businesses and researchers to train AI models with accuracy. The following are ways in which it can enhance your initiative:

Pre-Annotated Datasets – Gain access to a variety of pre-labeled image datasets, including the Face Detection Dataset for facial recognition purposes.

Tailored Data Collection – Gather images customized for particular AI applications, such as those in healthcare, security, and retail sectors.

Automated Annotation – Employ AI-driven tools to efficiently and accurately label and categorize images.

Data Quality Assurance – Maintain high levels of accuracy through integrated validation processes and human oversight.

Scalability and Integration – Effortlessly incorporate datasets into machine learning workflows via APIs and cloud-based solutions.

By utilizing GTS.ai, your initiative can expedite AI training, enhance model precision, and optimize image data collection methods.

Conclusion

The collection of image-based data is an essential aspect of advancements in AI and computer vision at Globose Technology Solutions. By employing appropriate techniques and tools, businesses and researchers can develop robust models for a wide range of applications. As technology progresses, the future of image data collection is poised to become increasingly automated, ethical, and efficient.
