#MS SQL Server 2000

Explore tagged Tumblr posts

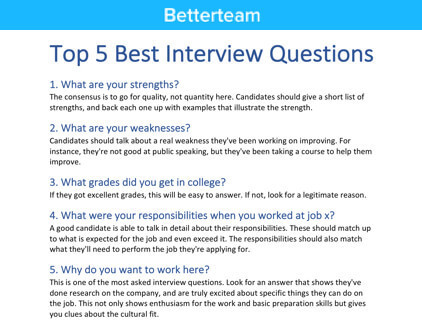

Text

The system is envisioned to support the business purposes of a computer dealer. At present, the business maintains its information in Excel sheets. However, it plans to extend online support to its clientele once the Computer Dealer Information System (CDMS) is implemented. The basic requirements of the proposed CDMS are as follows.

1.1 Aim and objective

CDMS is the hub of activities for the business. These activities are broad-based and extend across the whole business domain. The Chief Executive needs a good overview of all business activities and is also responsible for selecting equipment and clientele. The CDMS is required not only to manage these activities but also to provide decision support to the Chief Executive. Moreover, it will serve as the client's first impression of the business on the web.

1.2 Methodology

CDMS will be implemented in C# on the .NET Framework, and will also use Visual Web Pack 5.5, with MS SQL Server 2000 as the backend database.

2. Entity analysis

CDMS will have the following entities and relationships.

Person: This entity will hold the personal details of all users of the system. It will have a one-to-one relationship with the membership entity. per_id will be the primary key for this entity.

Membership: This entity will ensure that a user logs in according to their membership status. The user will be granted access to the various modules of the application according to their membership status and membership type. A member can make many purchases and sales for the company. It will be a weak entity whose existence depends on person. mem_id will be the primary key for this entity. per_id will be the foreign key for this entity.

Login: This entity will validate logins to the system based on the membership type and membership status of the user. login_id will be the primary key for this entity.

Sales: This entity will maintain statistics of sales. It will have a one-to-many relationship with the membership entity. sel_id will be the primary key for this entity. eq_id and mem_id will be the foreign keys for this entity.

Purchase: This entity will maintain statistics of purchases by the company. It will have a one-to-many relationship with the membership entity. pur_id will be the primary key for this entity. eq_id and mem_id will be the foreign keys for this entity.

Eq_catagory: This entity will maintain a detailed list of all the equipment the company deals in. It will have a one-to-many relationship with the stock entity. eq_id will be the primary key for this entity.

Stock: This entity will maintain statistics of available stock. It will have a one-to-many relationship with the eq_catagory entity. It will be a weak entity whose existence depends on person. stk_id will be the primary key for this entity. eq_id and pur_id will be the foreign keys for this entity.

Customer: This entity will maintain the personal details of the company's clientele. It will have a one-to-one relationship with the order entity. cus_id will be the primary key for this entity.

Order: This entity will maintain a record of orders placed by the clientele. It will have a one-to-many relationship with the customer entity. ord_id will be the primary key for this entity. cus_id will be the foreign key for this entity.

2.1 Entity Relationship Diagram (ERD)

2.2 SQL examples

The following are a few very simple examples of SQL queries:

SELECT eq_name, eq_make, eq_model FROM eq_catagory WHERE eq_make = 'Acer Server'

SELECT eqp.eq_name Description, SUM(stk.qty) Quantity
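As a rough illustration of the Eq_catagory and Stock entities and the queries above, the sketch below builds two tables in an in-memory SQLite database from Python. SQLite stands in for MS SQL Server 2000 purely so the example is self-contained. The sample rows, the omission of the pur_id column, and the JOIN and GROUP BY used in the aggregate are all assumptions made for illustration; the second query in the excerpt is cut off, so this is not a reconstruction of it.

```python
import sqlite3

# In-memory SQLite is an assumed stand-in for the MS SQL Server 2000 backend.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")

# Table and key names follow the entity analysis; column types are assumptions.
# The pur_id foreign key on stock is left out to keep the sketch short.
conn.executescript("""
CREATE TABLE eq_catagory (
    eq_id    INTEGER PRIMARY KEY,
    eq_name  TEXT NOT NULL,
    eq_make  TEXT NOT NULL,
    eq_model TEXT NOT NULL
);
CREATE TABLE stock (
    stk_id INTEGER PRIMARY KEY,
    eq_id  INTEGER NOT NULL REFERENCES eq_catagory(eq_id),
    qty    INTEGER NOT NULL
);
""")

# Made-up sample data, not taken from the original system.
conn.executemany(
    "INSERT INTO eq_catagory VALUES (?, ?, ?, ?)",
    [(1, "Rack server", "Acer Server", "Model A"),
     (2, "Laptop", "Acer Laptop", "Model B")],
)
conn.executemany(
    "INSERT INTO stock VALUES (?, ?, ?)",
    [(1, 1, 4), (2, 1, 6), (3, 2, 3)],
)

# First example query, with the make passed as a bound parameter.
by_make = conn.execute(
    "SELECT eq_name, eq_make, eq_model FROM eq_catagory WHERE eq_make = ?",
    ("Acer Server",),
).fetchall()

# Hypothetical aggregate: total quantity in stock per equipment name.
stock_totals = conn.execute("""
    SELECT eqp.eq_name AS Description, SUM(stk.qty) AS Quantity
    FROM eq_catagory AS eqp
    JOIN stock AS stk ON stk.eq_id = eqp.eq_id
    GROUP BY eqp.eq_name
    ORDER BY eqp.eq_name
""").fetchall()

print(by_make)
print(stock_totals)
```

Declaring eq_id as a foreign key on stock means a stock row cannot reference equipment that is not in eq_catagory, which is the one-to-many relationship the entity analysis describes.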


Text

So I agree with the criticism of Microsoft here 100% but I gotta be real with you guys. People have been overstating the difficulty of using Linux for years. A few years ago I installed Linux on a laptop for my 70+ year old mom and she loves it and has been using it ever since. Me, personally? I've been using Linux exclusively in my business since 2008. I almost never have any compatibility issues.

I've set Linux up to connect to AppleShare servers on local networks, to mount Windows filesystems, I've opened ArcGIS files using open source software, I've imported MS SQL and Oracle databases into MySQL or PostgreSQL, I've opened countless Word and Excel documents in LibreOffice.

Compatibility is a non issue and has not been an issue with 99%+ of the stuff I do, for 15+ years.

Linux is easier to install than Windows. It's like plugging in a thumb drive and clicking through a few boxes.

Stop talking about Linux like it's still the year 2000, when it was legitimately hard to install and there were major compatibility issues.

These are things of the past. At this point far more ppl are held back from using Linux by the misconceptions than by any practical limitation.

I talked before about the problem of Windows system requirements being too damn high, and how the Windows 10 to 11 jump is especially bad. Like, the end of Windows 10 is coming in October 2025, and it will be a massive problem. And this article gives us some concrete numbers for how many computers can't update from Windows 10 to 11.

And it's 240 million. damn. “If these were all folded laptops, stacked one on top of another, they would make a pile 600 km taller than the moon.” the tech analysis company quoted in the article explains.

So many functioning computers that will be wasted. And it's all because people don't wanna switch to a Linux distro with sane system requirements and instead buy a new computer.

Like, if you own one of these 240 million Windows 10 computers, just be an environmentally responsible, non-wasteful person and switch that computer to Linux instead of scrapping it because Microsoft says it's not good enough.


Text

TOURNAMENT MANAGEMENT SYSTEM DEVELOPMENT USING DOT NET

Executive Summary

This case study illustrates how Mindfire Solutions helped a golf tournament service provider regain the confidence of its customers by recreating its existing web application as an equivalent desktop application, which turned out to be an easy and quick solution.

The requirement was to port the existing web application, which displays golf starting tees/times, scores in progress and score results on TVs, plasma screens and projection systems, into a Windows desktop application. Mindfire, which has always been confident about complex migration projects, took on the responsibility and began analyzing the entire web application to come up with a feasible, robust and efficient system.

About Our Client

Client: Service Provider for managing Golf Tournaments

Location: USA

Industry: Sports & Leisure

Business Situation

The client's existing web application was a high-quality display application used for showing the scoreboard and event board. It used many Internet Explorer transitions and other effects, giving users a great look and feel. Despite this, because it was web-based, it required a lot of overhead at installation time. As a result, its popularity diminished, leaving the client desperate for a quick and easy solution. This led the client to approach Mindfire Solutions for an end-to-end solution.

A team of developers from Mindfire Solutions worked through the client's requirement documents and started on the project. All the existing functionality of the web application was analyzed and ported to the desktop version. The end result was a valuable application for the client, as it restored the trust and confidence of its customers.

Technologies

.Net 2.0 framework, VB.Net, JavaScript, HTML, MS Access, SQL Server 2000


Text

Bryan Strauch is an Information Technology specialist in Morrisville, NC

Resume: Bryan Strauch

919.820.0552 (cell)

Skills Summary

VMWare: vCenter/vSphere, ESXi, Site Recovery Manager (disaster recovery), Update Manager (patching), vRealize, vCenter Operations Manager, auto deploy, security hardening, install, configure, operate, monitor, optimize multiple enterprise virtualization environments

Compute: Cisco UCS and other major bladecenter brands - design, rack, configure, operate, upgrade, patch, secure multiple enterprise compute environments.

Storage: EMC, Dell, Hitachi, NetApp, and other major brands - connect, zone, configure, present, monitor, optimize, patch, secure, migrate multiple enterprise storage environments.

Windows/Linux: Windows Server 2003-2016, templates, install, configure, maintain, optimize, troubleshoot, security harden, monitor, all varieties of Windows Server related issues in large enterprise environments. RedHat Enterprise Linux and Ubuntu Operating Systems including heavy command line administration and scripting.

Networking: Layer 2/3 support (routing/switching), installation/maintenance of new network and SAN switches, including zoning SAN, VLAN, copper/fiber work, and other related tasks around core data center networking

Scripting/Programming: SQL, PowerShell, PowerCLI, Perl, Bash/Korn shell scripting

Training/Documentation: Technical documentation, Visio diagramming, cut/punch sheets, implementation documentations, training documentations, and on site customer training of new deployments

Security: Alienvault, SIEM, penetration testing, reporting, auditing, mitigation, deployments

Disaster Recovery: Hot/warm/cold DR sites, SAN/NAS/vmware replication, recovery, testing

Other: Best practice health checks, future proofing, performance analysis/optimizations

Professional Work History

Senior Systems/Network Engineer; Security Engineer

September 2017 - Present

d-wise technologies

Morrisville, NC

Sole security engineer - designed, deployed, maintained, operated security SIEM and penetration testing, auditing, and mitigation reports, Alienvault, etc

Responsibility for all the systems that comprise the organization's infrastructure and hosted environments

Main point of contact for all high level technical requests for both corporate and hosted environments

Implement/maintain disaster recovery (DR) & business continuity plans

Management of network backbone including router, firewall, switch configuration, etc

Managing virtual environments (hosted servers, virtual machines and resources)

Internal and external storage management (cloud, iSCSI, NAS)

Create and support policies and procedures in line with best practices

Server/Network security management

Senior Storage and Virtualization Engineer; Datacenter Implementations Engineer; Data Analyst; Software Solutions Developer

October 2014 - September 2017

OSCEdge / Open SAN Consulting (Contractor)

US Army, US Navy, US Air Force installations across the United States (Multiple Locations)

Contract - Hurlburt Field, US Air Force:

Designed, racked, implemented, and configured new Cisco UCS blade center solution

Connected and zoned new NetApp storage solution to blades through old and new fabric switches

Implemented new network and SAN fabric switches

Network: Nexus C5672 switches

SAN Fabric: MDS9148S

Decommissioned old blade center environment, decommissioned old network and storage switches, decommissioned old SAN solution

Integrated new blades into VMWare environment and migrated entire virtual environment

Assessed and mitigated best practice concerns across entire environment

Upgraded entire environment (firmware and software versions)

Security hardened entire environment to Department of Defense STIG standards and security reporting

Created Visio diagrams and documentation for existing and new infrastructure pieces

Trained on site operational staff on new/existing equipment

Cable management and labeling of all new and existing solutions

Implemented VMWare auto deploy for rapid deployment of new VMWare hosts

Contract - NavAir, US Navy:

Upgraded and expanded an existing Cisco UCS environment

Cable management and labeling of all new and existing solutions

Created Visio diagrams and documentation for existing and new infrastructure pieces

Full health check of entire environment (blades, VMWare, storage, network)

Upgraded entire environment (firmware and software versions)

Assessed and mitigated best practice concerns across entire environment

Trained on site operational staff on new/existing equipment

Contract - Fort Bragg NEC, US Army:

Designed and implemented a virtualization solution for the US ARMY.

This technology refresh is designed to support the US ARMY's data center consolidation effort, by virtualizing and migrating hundreds of servers.

Designed, racked, implemented, and configured new Cisco UCS blade center solution

Implemented SAN fabric switches

SAN Fabric: Brocade Fabric Switches

Connected and zoned new EMC storage solution to blades

Specific technologies chosen for this solution include: VMware vSphere 5 for all server virtualization, Cisco UCS as the compute platform and EMC VNX for storage.

Decommissioned old SAN solution (HP)

Integrated new blades into VMWare environment and migrated entire environment

Physical to Virtual (P2V) conversions and migrations

Migration from legacy server hardware into virtual environment

Disaster Recovery solution implemented as a remote hot site.

VMware SRM and EMC Recoverpoint have been deployed to support this effort.

The enterprise backup solution is EMC Data Domain and Symantec NetBackup

Assessed and mitigated best practice concerns across entire environment

Upgraded entire environment (firmware and software versions)

Security hardened entire environment to Department of Defense STIG standards and security reporting

Created Visio diagrams and documentation for existing and new infrastructure pieces

Trained on site operational staff on new equipment

Cable management and labeling of all new solutions

Contract - 7th Signal Command, US Army:

Visited 71 different army bases collecting and analyzing compute, network, storage, metadata.

The data collected, analyzed, and reported will assist the US Army in determining the best solutions for data archiving and right sizing hardware for the primary and backup data centers.

Dynamically respond to business needs by developing and executing software solutions to solve mission reportable requirements on several business intelligence fronts

Design, architect, author, implement in house, patch, maintain, document, and support complex dynamic data analytics engine (T-SQL) to input, parse, and deliver reportable metrics from data collected as defined by mission requirements

From scratch in house BI engine development, 5000+ SQL lines (T-SQL)

Design, architect, author, implement to field, patch, maintain, document, and support large scale software tools for environmental data extraction to meet mission requirements

Large focus of data extraction tool creation in PowerShell (Windows, Active Directory) and PowerCLI (VMWare)

From scratch in house BI extraction tool development, 2000+ PowerShell/PowerCLI lines

Custom software development to extract data from other systems including storage systems (SANs), as required

Perl, awk, sed, and other languages/OSs, as required by operational environment

Amazon AWS Cloud (GovCloud), IBM SoftLayer Cloud, VMWare services, MS SQL engines

Full range of Microsoft Business Intelligence Tools used: SQL Server Analytics, Reporting, and Integration Services (SSAS, SSRS, SSIS)

Visual Studio operation, integration, and software design for functional reporting to SSRS frontend

Contract - US Army Reserves, US Army:

Operated and maintained Hitachi storage environment, to include:

Hitachi Universal Storage (HUS-VM enterprise)

Hitachi AMS 2xxx (modular)

Hitachi storage virtualization

Hitachi tuning manager, dynamic tiering manager, dynamic pool manager, storage navigator, storage navigator modular, command suite

EMC Data Domains

Storage and Virtualization Engineer, Engineering Team

February 2012 – October 2014

Network Enterprise Center, Fort Bragg, NC

NCI Information Systems, Inc. (Contractor)

Systems Engineer directly responsible for the design, engineering, maintenance, optimization, and automation of multiple VMWare virtual system infrastructures on Cisco/HP blades and EMC storage products.

Provide support, integration, operation, and maintenance of various system management products, services and capabilities on both the unclassified and classified network

Coordinate with major commands, vendors, and consultants for critical support required at installation level to include trouble tickets, conference calls, request for information, etc

Ensure compliance with Army Regulations, Policies and Best Business Practices (BBP) and industry standards / best practices

Technical documentation and Visio diagramming

Products Supported:

EMC VNX 7500, VNX 5500, and VNXe 3000 Series

EMC FAST VP technology in Unisphere

Cisco 51xx Blade Servers

Cisco 6120 Fabric Interconnects

EMC RecoverPoint

VMWare 5.x enterprise

VMWare Site Recovery Manager 5.x

VMWare Update Manager 5.x

VMWare vMA, vCops, and PowerCLI scripting/automation

HP Bladesystem c7000 Series

Windows Server 2003, 2008, 2012

Red Hat Enterprise and Ubuntu Server

Harnett County Schools, Lillington, NC

Sr. Network/Systems Administrator, August 2008 – June 2011

Systems Administrator, September 2005 – August 2008

Top tier technical contact for a 20,000 student, 2,500 staff, 12,000 device environment

District / network / datacenter level design, implementation, and maintenance of physical and virtual servers, routers, switches, and network appliances

Administered around 50 physical and virtual servers, including Netware 5.x/6.x, Netware OES, Windows Server 2000, 2003, 2008, Ubuntu/Linux, SUSE, and Apple OSX 10.4-10.6

Installed, configured, maintained, and monitored around 175 HP Procurve switches/routers

Maintained web and database/SQL servers (Apache, Tomcat, IIS and MSSQL, MySQL)

Monitored all network resources (servers, switches, routers, key workstations) using various monitoring applications (Solarwinds, Nagios, Cacti) to ensure 100% availability/efficiency

Administered workstation group policies and user accounts via directory services

Deployed and managed applications at the network/server level

Authored and implemented scripting (batch, Unix) to perform needed tasks

Monitored server and network logs for anomalies and corrected as needed

Daily proactive maintenance and reactive assignments based on educational needs and priorities

Administered district level Firewall/IPS/VPN, packet shapers, spam filters, and antivirus systems

Administered district email server and accounts

Consulted with heads of all major departments (finance, payroll, testing, HR, child nutrition, transportation, maintenance, and the rest of the central staff) to address emergent and upcoming needs within their departments and resolve any critical issues in a timely and smooth manner

Ensured data integrity and security throughout servers, network, and desktops

Monitored and corrected all data backup procedures/equipment for district and school level data

Project based work through all phases from design/concept through maintenance

Consulted with outside contractors, consultants, and vendors to integrate and maintain various information technologies in an educational environment, including bid contracts

Designed and implemented an in-house cloud computing infrastructure utilizing a HP Lefthand SAN solution, VMWare’s ESXi, and the existing Dell server infrastructure to take full advantage of existing technologies and to stretch the budget as well as provide redundancies

End user desktop and peripherals support, training, and consultation

Supported Superintendents, Directors, all central office staff/departments, school administration offices (Principals and staff) and classroom teachers and supplementary staff

Addressed escalations from other technical staff on complex and/or critical issues

Utilized work order tracking and reporting systems to track issues and problem trends

Attend technical conferences, including NCET, to further my exposure to new technologies

Worked in a highly independent environment and prioritized district needs and workload daily

Coordinated with other network admin, our director, and technical staff to ensure smooth operations, implement long term goals and projects, and address critical needs

Performed various other tasks as assigned by the Director of Media and Technology and Superintendents

Products Supported

Microsoft XP/Vista/7 and Server 2000/2003/2008, OSX Server 10.x, Unix/Linux

Sonicwall NSA E8500 Firewall/Content filter/GatewayAV/VPN/UTM

Packeteer 7500 packet shaping / traffic management / network prioritization

180 HP Procurve L2/L3 switches and HP Procurve Management Software

Netware 6.x, Netware OES, SUSE Linux, eDirectory, Zenworks 7, Zenworks 10/11

HP Lefthand SAN, VMWare Server / ESXi / VSphere datacenter virtualization

Solarwinds Engineer Toolset 9/10 for Proactive/Reactive network flow monitoring

Barracuda archiving/SPAM filter/backup appliance, Groupwise 7/8 email server

Education

Bachelor of Science, Computer Science

Minor: Mathematics

UNC School System, Fayetteville State University, May 2004

GPA: 3

High Level Topics (300+):

Data Communication and Computer Networks

Software Tools

Programming Languages

Theory of Computation

Compiler Design Theory

Artificial Intelligence

Computer Architecture and Parallel Processing I

Computer Architecture and Parallel Processing II

Principles of Operating Systems

Principles of Database Design

Computer Graphics I

Computer Graphics II

Social, Ethical, and Professional Issues in Computer Science

Certifications/Licenses:

VMWare VCP 5 (Datacenter)

Windows Server 2008/2012

Windows 7/8

Security+, CompTIA

ITILv3, EXIN

Certified Novell Administrator, Novell

Apple Certified Systems Administrator, Apple

Network+ and A+ Certified Professional, CompTIA

Emergency Medical Technician, NC (P514819)

Training:

Hitachi HUS VM

Hitachi HCP

IBM SoftLayer

VMWare VCP (datacenter)

VMWare VCAP (datacenter)

EMC VNX in VMWare

VMWare VDI (virtual desktops)

Amazon Web Services (AWS)

Emergency Medical Technician - Basic, 2019

EMT - Paramedic (pending)


Text

MS SQL Server version check

| Version | RTM (no SP) | SP1 | SP2 | SP3 | SP4 |
| --- | --- | --- | --- | --- | --- |
| SQL Server 2019 (codename Aris) | Not yet released | | | | |
| SQL Server 2017 (codename vNext) | 14.0.1000.169 | | | | |
| SQL Server 2016 | 13.0.1601.5 | 13.0.4001.0 or 13.1.4001.0 | 13.0.5026.0 or 13.2.5026.0 | | |
| SQL Server 2014 | 12.0.2000.8 | 12.0.4100.1 or 12.1.4100.1 | 12.0.5000.0 or 12.2.5000.0 | 12.0.6024.0 or 12.3.6024.0 *new | |
| SQL Server 2012 (codename Denali) | 11.0.2100.60 | 11.0.3000.0 or 11.1.3000.0 | 11.0.5058.0 or 11.2.5058.0 | 11.0.6020.0 or 11.3.6020.0 | 11.0.7001.0 or 11.4.7001.0 |
| SQL Server 2008 R2 (codename Kilimanjaro) | 10.50.1600.1 | 10.50.2500.0 or 10.51.2500.0 | 10.50.4000.0 or 10.52.4000.0 | 10.50.6000.34 or 10.53.6000.34 | |
| SQL Server 2008 (codename Katmai) | 10.0.1600.22 | 10.0.2531.0 or 10.1.2531.0 | 10.0.4000.0 or 10.2.4000.0 | 10.0.5500.0 or 10.3.5500.0 | 10.0.6000.29 or 10.4.6000.29 |
| SQL Server 2005 (codename Yukon) | 9.0.1399.06 | 9.0.2047 | 9.0.3042 | 9.0.4035 | 9.0.5000 |
| SQL Server 2000 (codename Shiloh) | 8.0.194 | 8.0.384 | 8.0.532 | 8.0.760 | 8.0.2039 |
| SQL Server 7.0 (codename Sphinx) | 7.0.623 | 7.0.699 | 7.0.842 | 7.0.961 | 7.0.1063 |

SELECT SERVERPROPERTY('productversion') AS ver, SERVERPROPERTY('productlevel'), SERVERPROPERTY('edition')
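To show how the version string returned by the query maps back to a release in the table, here is a minimal Python sketch. The function name `release_name` and the `RELEASES` dictionary are hypothetical helpers written for this illustration. The mapping uses only the major and minor numbers listed in the table, and it assumes the minor number is 50 or higher only for SQL Server 2008 R2.

```python
# Major version number -> release name, taken from the table above.
RELEASES = {
    7: "SQL Server 7.0",
    8: "SQL Server 2000",
    9: "SQL Server 2005",
    11: "SQL Server 2012",
    12: "SQL Server 2014",
    13: "SQL Server 2016",
    14: "SQL Server 2017",
}


def release_name(product_version: str) -> str:
    """Map a 'productversion' string such as '8.0.2039' to a release name."""
    major, minor = (int(part) for part in product_version.split(".")[:2])
    if major == 10:
        # 10.0-10.4 is SQL Server 2008; 10.50-10.53 is SQL Server 2008 R2.
        return "SQL Server 2008 R2" if minor >= 50 else "SQL Server 2008"
    return RELEASES.get(major, "unknown")


print(release_name("8.0.2039"))
print(release_name("10.50.1600.1"))
```

Only the first two components are inspected, so the alternative build notations in the table (for example 12.0.6024.0 and 12.3.6024.0) resolve to the same release.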


Text

Business Analyst Finance Domain Sample Resume

This is a sample Business Analyst resume for freshers as well as experienced job seekers in the Finance domain, whether as a business analyst or a system analyst. It is only a sample, so please use it for reference only; do not copy the client names or job duties for your own resume. Always write your own resume based on genuine experience.

Name: Justin Megha

Ph no: XXXXXXX

your email here.

Business Analyst, Business Systems Analyst

SUMMARY

Accomplished in Business Analysis, System Analysis, Quality Analysis and Project Management, with extensive experience in business products, operations and Information Technology in the capital markets space, specializing in Finance areas such as Trading, Fixed Income, Equities, Bonds, Derivatives (Swaps, Options, etc.) and Mortgage, with sound knowledge of a broad range of financial instruments.

Over 11 years of proven track record as a value-adding, delivery-loaded, project-hardened professional with hands-on expertise spanning System Analysis, architecting financial applications, Data Warehousing, Data Migrations, Data Processing, ERP applications, SOX Implementation and Process Compliance projects.

Accomplished in analysis of large-scale business systems, Project Charters, Business Requirement Documents, Business Overview Documents, authoring Narrative Use Cases, Functional Specifications and Technical Specifications, data warehousing, reporting and testing plans.

Expertise in creating UML-based modelling views such as Activity, Use Case, Data Flow, Business Flow, Navigational Flow and Wire Frame diagrams using Rational products and MS Visio.

Proficient as a long-time liaison between business and technology, with competence in the full System Development Life Cycle (SDLC) with Waterfall, Agile and RUP methodologies, IT Auditing and SOX concepts, as well as broad cross-functional experience leveraging multiple frameworks.

Worked extensively with on-site and off-shore Quality Assurance groups, assisting the QA team with Black Box, GUI, Functionality, Regression, System, Unit, Stress, Performance and UAT testing.

Facilitated change management across the entire process, from project conceptualization through testing to project delivery, and Software Development & Implementation Management in diverse business and technical environments, with demonstrated leadership abilities.

EDUCATION

Post Graduate Diploma (in Business Administration), USA

Master's Degree (in Computer Applications)

Bachelor's Degree (in Commerce)

TECHNICAL SKILLS

Documentation Tools: UML, MS Office (Word, Excel, PowerPoint, Project), MS Visio, Erwin

SDLC Methodologies: Waterfall, Iterative, Rational Unified Process (RUP), Spiral, Agile

Modeling Tools: UML, MS Visio, Erwin, PowerDesigner, Metastorm ProVision

Reporting Tools: Business Objects XI R2, Crystal Reports, MS Office Suite

QA Tools: Quality Center, TestDirector, WinRunner, LoadRunner, QTP, Rational RequisitePro, Bugzilla, ClearQuest

Languages: Java, VB, SQL, HTML, XML, UML, ASP, JSP

Databases & OS: MS SQL Server, Oracle 10g, DB2, MS Access on Windows XP / 2000, Unix

Version Control: Rational ClearCase, Visual SourceSafe

PROFESSIONAL EXPERIENCE

SERVICE MASTER, Memphis, TN June 08 - Till Date

Senior Business Analyst

Terminix has approximately 800 customer service agents in its branches, in addition to approximately 150 agents in a centralized call center in Memphis, TN. Terminix customer service agents receive approximately 25 million calls from customers each year. Many of these customers' questions are not answered, or their problems are not resolved, on the first call. Currently these agents use a custom-developed, AS/400-based system called Mission to answer customer inquiries made to the branches and the Customer Communication Center. Mission, Terminix's operations system, provides functionality for sales, field service (routing and scheduling, work order management), accounts receivable, and payroll. The system is designed modularly and is difficult to navigate for customer service agents who need to assist the customer quickly and knowledgeably. The effort and time needed to train a customer service representative on the Mission system is high. Combined with low agent and customer retention, this is costly.

The Customer Service Console (CSC) enables Customer Service Associates to provide a consistent, enhanced service experience and support to customers across the organization. CSC aims to provide easy navigation, an easy learning process, reduced call time and first-call resolution.

Responsibilities

Assisted in creating Project Plan, Road Map.

Designed Requirements Planning and Management document.

Performed Enterprise Analysis and actively participated in buying Tool Licenses.

Identified subject-matter experts and drove the requirements gathering process through approval of the documents that convey their needs to management, developers, and quality assurance team.

Performed technical project consultation, initiation, collection and documentation of client business and functional requirements, solution alternatives, functional design, testing and implementation support.

Requirements Elicitation, Analysis, Communication, and Validation according to Six Sigma Standards.

Captured Business Process Flows and Reengineered Process to achieve maximum outputs.

Captured As-Is Process, designed To-Be Process and performed Gap Analysis.

Developed and updated functional use cases and conducted business process modeling (PROVISION) to explain business requirements to development and QA teams.

Created Business Requirements Documents, Functional and Software Requirements Specification Documents.

Performed Requirements Elicitation through Use Cases, one to one meetings, Affinity Exercises, SIPOCs.

Gathered and documented Use Cases, Business Rules, created and maintained Requirements/Test Traceability Matrices.

Client: The Dun & Bradstreet Corporation, Parsippany, NJ May' 2007 - Oct' 2007

Profile: Sr. Financial Business Analyst/ Systems Analyst.

Project Profile (1): D&B is the world's leading source of commercial information and insight on businesses. The Point of Arrival Project and the Data Maintenance (DM) Project are the future applications that the company will transition to, providing an effective method and an efficient report generation system that lets D&B's clients purchase reports about the companies they are trying to do business with.

Project Profile (2): The overall purpose of this project was to build a Self Awareness System (SAS) through which the business community can buy SAS products, along with a payment system for SAS. The system provides certain combinations of products (reports) for a Self Monitoring report as a foundation for managing a company's credit.

Responsibilities:

Conducted GAP Analysis and documented the current state and future state, after understanding the Vision from the Business Group and the Technology Group.

Conducted interviews with Process Owners, Administrators and Functional Heads to gather audit-related information and facilitated meetings to explain the impacts and effects of SOX compliance.

Played an active and lead role in gathering, analyzing and documenting the Business Requirements, the business rules and Technical Requirements from the Business Group and the Technology Group.

Co-authored and prepared graphical depictions of Narrative Use Cases, created UML Models such as Use Case Diagrams, Activity Diagrams and Flow Diagrams using MS Visio throughout the Agile methodology.

Documented the Business Requirement Document to get a better understanding of the client's business processes for both projects using the Agile methodology.

Facilitated JRP and JAD sessions and brainstorming sessions with the Business Group and the Technology Group.

Documented the Requirement Traceability Matrix (RTM) and conducted UML Modelling such as creating Activity Diagrams and Flow Diagrams using MS Visio.

Analysed test data to detect significant findings and recommended corrective measures.

Co-managed the Change Control process for the entire project by facilitating group meetings, one-on-one interview sessions and email correspondence with work stream owners to discuss the impact of Change Requests on the project.

Worked with the Project Lead in setting realistic project expectations, in evaluating the impact of changes on the organization and planning accordingly, and conducted project-related presentations.

Coordinated with the off-shore QA Team members to explain and develop the Test Plans, Test Cases, Test and Evaluation strategy and methods for unit testing, functional testing and usability testing.

Environment: Windows XP/2000, SOX, SharePoint, SQL, MS Visio, Oracle, MS Office Suite, Mercury ITG, Mercury Quality Center, XML, XHTML, Java, J2EE.

GATEWAY COMPUTERS, Irvine, CA, Jan 06 - Mar 07

Business Analyst

At Gateway, a leading computer, laptop and accessory manufacturer, was involved in two projects:

Order Capture Application: The objective of this project was to develop various sales channels with a centralized catalog. The project involved wide exposure to requirement analysis and to creating, executing and maintaining test plans and test cases. Mentored and trained staff on the Tech Guide and company standards.

Gateway reporting system: Developed with Business Objects running against an Oracle data warehouse with Sales, Inventory, and HR data marts. This data warehouse serves the different needs of sales personnel and management. Was involved in its development, which used Full Client reports and Web Intelligence to deliver analytics to the Contract Administration and Pricing groups. The reporting data mart included Wholesaler Sales, Contract Sales and Rebates data.

Responsibilities:

Product Manager for enterprise-level order entry systems: Phone, B2B, Gateway.com and the cataloging system. Modeled the sales order entry process using ERWIN to eliminate bottleneck process steps. Adhered to and practiced RUP for implementing the software development life cycle. Gathered requirements from different sources such as stakeholders, documentation, corporate goals, existing systems, and subject matter experts by conducting workshops, interviews, use cases, prototypes, document reviews, market analysis and observations. Created Functional Requirement Specification documents, which include UML use case diagrams, scenarios, activity diagrams, workflow diagrams and data mapping. Performed process and data modeling with MS Visio. Worked with the technical team to create business services (web services) that the application could leverage using SOA, and to create the system architecture and CDM for the common order platform. Designed payment authorization (credit card, net terms, and PayPal) for the transaction/order entry systems. Implemented A/B testing and customer feedback functionality on Gateway.com. Worked with the DW and ETL teams to create Business Objects reports (Full Client, WebI) for the order entry systems. Worked in a cross-functional team of business staff, architects and developers to implement new features. Program-managed the development and deployment schedule of the enterprise order entry systems. Developed and maintained user manuals and the application documentation manual on SharePoint. Created test plans and test strategies to define the objective and approach of testing. Used Quality Center to track and report system defects and bug fixes. Wrote modification requests for bugs in the application and helped developers track and resolve the problems. Developed and executed manual and automated functional, GUI, regression and UAT test cases using QTP. Gathered, documented and executed requirements-based, business process (workflow/user scenario) and data-driven test cases for User Acceptance Testing.
Created a test matrix and used Quality Center for test management and to track and report system defects and bug fixes. Performed load and stress testing and analyzed performance and response times. Designed the approach and developed visual scripts to test client-side and server-side performance under various conditions to identify bottlenecks. Created and developed SQL queries (TOAD) with several parameters for backend/DB testing. Conducted meetings for project status, issue identification, parent task review and progress reporting.

AMC MORTGAGE SERVICES, CA, USA Oct 04 - Dec 05

Business Analyst

The primary objective of this project is to replace the existing Internal Facing Client / Server Applications with a Web enabled Application System, which can be used across all the Business Channels. This project involves wide exposure towards Requirement Analysis, Creating, Executing and Maintaining of Test plans and Test Cases. Demands understanding and testing of Data Warehouse and Data Marts, thorough knowledge of ETL and Reporting, Enhancement of the Legacy System covered all of the business requirements related to Valuations from maintaining the panel of appraisers to ordering, receiving, and reviewing the valuations.

Responsibilities:

Gathered, analyzed, validated, managed and documented the stated requirements. Interacted with users to verify requirements, manage the change control process, and update existing documentation. Created Functional Requirement Specification documents that include UML use case diagrams, scenarios, activity diagrams and data mapping. Provided end-user consulting on functionality and business process. Acted as a client liaison to review priorities and manage the overall client queue. Provided consultation services to clients, technicians and internal departments on basic to intricate functions of the applications. Identified business directions and objectives that may influence the required data and application architectures. Defined and prioritized business requirements; determined which business subject areas provide the most needed information, and prioritized and sequenced implementation projects accordingly. Provided relevant test scenarios for the testing team. Worked with the test team to develop system integration test scripts and ensure the testing results corresponded to the business expectations. Used Test Director, QTP and Load Runner for test management and for functional, GUI, performance and stress testing. Performed data validation, data integration and backend/DB testing manually using SQL queries. Created test input requirements and prepared the test data for data-driven testing. Mentored and trained staff on the Tech Guide and company standards. Set up and coordinated onsite and offshore teams, and conducted knowledge transfer sessions for the offshore team.

Lloyds Bank, UK Aug 03 - Sept 04

Business Analyst

Lloyds TSB is a leader in business, personal and corporate banking, and a noted financial provider that gives millions of customers the financial resources to meet and manage their credit needs and to achieve their financial goals.
The Project involves an applicant Information System, Loan Appraisal and Loan Sanction, Legal, Disbursements, Accounts, MIS and Report Modules of a Housing Finance System and Enhancements for their Internet Banking.

Responsibilities:

Translated stakeholder requirements into various documentation deliverables such as functional specifications, use cases, workflow/process diagrams, and data flow/data model diagrams. Produced functional specifications and led weekly meetings with developers and business units to discuss outstanding technical issues and deadlines that had to be met. Coordinated project activities between clients, internal groups and information technology, including project portfolio management and project pipeline planning. Provided functional expertise to developers during the technical design and construction phases of the project. Documented and analyzed business workflows and processes, and presented the studies to the client for approval. Participated in the planning, designing, building, distribution, and maintenance phases of Universe development. Designed and developed Universes by defining joins and cardinalities between the tables. Created UML use case and activity diagrams for the interaction between the report analyst and the reporting systems. Successfully implemented BPR and achieved improved performance and reduced time and cost. Developed test plans and scripts; performed client testing for routine to complex processes to ensure proper system functioning. Worked closely with UAT testers and end users during system validation and User Acceptance Testing to expose functionality and business logic problems that unit testing and system testing had missed. Participated in integration, system, regression, performance, and UAT testing using TD, WR and Load Runner. Participated in defect review meetings with the team members. Worked closely with the project manager to record, track, prioritize and close bugs. Used CVS to maintain versions between various stages of the SDLC.

Client: A.G. Edwards, St. Louis, MO May' 2005 - Feb' 2006

Profile: Sr. Business Analyst/System Analyst

Project Profile: A.G. Edwards is a full-service, trading-based brokerage firm offering Internet-based futures, options and forex brokerage. This site allows users (Financial Representatives) to trade online. The main features of this site were: Users can open a new account online to trade equities, bonds, derivatives and forex with the trading system, using DTCC's applications as a clearing house agent. The user gets real-time streaming quotes for the currency pairs they selected, their current position in the forex market, a summary of work orders, payments and current money balances, P & L accounts and available trading power, all continuously updating in real time via live quotes. The site also lets users place, change and cancel an entry order, place a market order, and place, modify, delete or close a stop loss limit on an open position.

Responsibilities:

Gathered business requirements pertaining to trading, equities and fixed income products such as bonds, converted them into functional requirements by implementing the RUP methodology, and authored them in the Business Requirement Document (BRD). Designed and developed all narrative use cases and conducted UML modeling, creating Use Case Diagrams, Process Flow Diagrams and Activity Diagrams using MS Visio. Implemented the entire Rational Unified Process (RUP) methodology of application development with its various workflows, artifacts and activities. Developed business process models in RUP to document existing and future business processes. Established a business analysis methodology around the Rational Unified Process. Analyzed user requirements, attended Change Request meetings to document changes and implemented procedures to test changes. Assisted in developing project timelines, deliverables and strategies for effective project management. Evaluated existing practices of storing and handling important financial data for compliance. Was involved in developing the test strategy and assisted in developing test scenarios, test conditions and test cases. Partnered with the technical areas in the research and resolution of system and User Acceptance Testing (UAT).


Text

A Short Anecdote of Luminary, Pritish Kumar Halder

Those who hustle a lot for their career will never get defeated in life, one day they will shine like a star. It is well said that “Excellence is the gradual result of always striving to do better”, Pritish Kumar Halder is the perfect epitome of the aforesaid quote. Pritish Kumar Halder (PK Halder) is a well-educated and skillful person with expansive knowledge of all the fields but importantly of programming languages, databases, and technologies.

Pritish is a quite hard-working and accomplished man who has built himself by lots of hardship and efforts that are blooming today as his great intelligence and knowledge. He has boundless experience in programming languages and technologies along with great business development market experiences also.

Artistry personality of Pritish Kumar:

Pritish has extensive knowledge and experience in various fields, but mainly in computers and programming languages. He is fond of keeping up to date with the latest technologies and their implementation in society. He has a wealth of knowledge and experience in business and computers; a few of his achievements are discussed below:

PK has 21 years of core development experience in Java/JSP/Servlet/JEE on web portal and e-commerce applications, including 6+ years of solid experience in SOA-based applications using Web Services, SOAP, and XML/XSL technology.

He has 7+ years of experience in PL/SQL (Procedural Language extension to Structured Query Language) and in writing stored procedures for Oracle, MS SQL, and MySQL.

PK has experience in the software development life cycle (SDLC) from design, development, testing, and deployment, to production and maintenance.

He also has an experience in project documentation from business requirements to technical documentation.

Pritish has 15+ years of experience in ColdFusion, whose scripting language is the ColdFusion Markup Language (CFML).

He has 7+ years of experience in PHP (short for PHP: Hypertext Preprocessor) and 1+ years of Drupal PHP experience.

He has 2+ years of experience with Struts, Spring, and Hibernate, all of which are open-source Java frameworks; Hibernate differs from the other two in that it is an object-relational mapping (ORM) framework.

PK has 4+ years of experience with Rational Rose, an object-oriented software design tool based on the Unified Modeling Language (UML), and with CSS (Cascading Style Sheets), which is used to style HTML.

PK is a certified Web Component Developer (SCWCD) on the J2EE platform and a certified Java Programmer (SCJP), and he has excellent analytical and problem-solving skills.

He has 3+ years of experience in developing ERP (Enterprise Resource Planning) solutions, which are used to manage day-to-day business activities.

Also, he has an experience of 5+ years in Relational Databases applications with Oracle, MS SQL Server 2000/08, MySQL

PK has 7+ years in Eclipse with the integration of Ant, SVN version control, MS VSS, and Tomcat, and he has 6+ years of experience and good knowledge of JavaScript, Ajax, JSON, JQuery, Ext JS, and Sencha Touch in expertise level

Professional Skills Highlights of Pritish Kumar Halder:

Pritish is extremely qualified and skilled with huge experience in programming and database domain. Some of his professional skills are discussed below;

Pritish Halder is good enough in the software architecture field, in this, he has a fine pick in RUP, UML, OOAD, Design Patterns, Extreme Programming (Agile), Data Modeling, OLAP, and Rational Rose.

PK is so knowledgeable and superior in programming languages, the languages he works upon the most are Java/JEE/JSE, EJB, Servlets, JSP, Struts, Spring, Hibernate, Ajax, PHP, Visual Basic, ColdFusion,ASP,.Net, JDBC, JavaBeans, JavaMail, Swing, Applets, AWT, XML, FORTRAN, SOA, SOAP, POI, FOP, Ant, JUnit, Log4j, Jakarta Commons, JFree Report, JFor, Jasper Report, JDOM, DOM4J, Lucene.

PK has great knowledge in Internet (Web) related technologies that are HTML, DHTML, XHTML,JSF, CSS, CSS2, XSl/XSLT, JavaScript, Ajax.

He is well known in database management systems also like Relational Data Model, and Database programming as well for example Oracle, MS SQL.

Pritish also has professional skills in development IDEs and tools, namely Eclipse, JBuilder, RAD/WSAD, Bugzilla, Fiddler, CVS, Rational Rose, JUnit, MS Visual Source Safe, and Crystal Reports.

He has significant skills in Web/Application Servers which are Tomcat, JRun, IIS, JBoss, and ColdFusion.

PK is prominent in operating systems, especially in Windows 98, 2000, NT, XP, and DOS.

Lastly, the aforesaid information is quite authorized and virtuous, Pritish Kumar Halder is a master in the field of computers and programming languages, and he is adept in the technologies as well. All his attributes make him different from the others and thus, his hard work and efforts get fulfilled with his great success.


Text

Postgres ssh tunnel

Access ×156 Access 2000 ×8 Access 2002 ×4 Access 2003 ×15 Access 2007 ×29 Access 2010 ×28 Access 2013 ×45 Access 97 ×6 Active Directory ×7 AS/400 ×11 Azure SQL Database ×19 Caché ×1 Composite Information Server ×2 ComputerEase ×2 DBF / FoxPro ×20 DBMaker ×1 DSN ×21 Excel ×121 Excel 2000 ×2 Excel 2002 ×2 Excel 2003 ×10 Excel 2007 ×16 Excel 2010 ×22 Excel 2013 ×27 Excel 97 ×4 Exchange ×1 Filemaker ×1 Firebird ×7 HTML Table ×3 IBM DB2 ×16 Informix ×8 Integration Services ×4 Interbase ×2 Intuit QuickBase ×1 Lotus Notes ×3 Mimer SQL ×1 MS Project ×2 MySQL ×57 Netezza DBMS ×4 OData ×3 OLAP, Analysis Services ×3 OpenOffice SpreadSheet ×2 Oracle ×61 Paradox ×3 Pervasive ×6 PostgreSQL ×19 Progress ×4 SAS ×5 SAS IOM ×1 SAS OLAP ×2 SAS Workspace ×2 SAS/SHARE ×2 SharePoint ×17 SQL Server ×204 SQL Server 2000 ×8 SQL Server 2005 ×13 SQL Server 2008 ×51 SQL Server 2012 ×35 SQL Server 2014 ×9 SQL Server 2016 ×12 SQL Server 2017 ×2 SQL Server 2019 ×2 SQL Server 7.


Text

Aqua data studio increase memory


Involved in development and testing of UNIX shell scripts to handle files from different sources such as NMS, NASCO, UCSW, HNJH, WAM, CPL and LDAP.

Strong troubleshooting skills and strong experience of working with complex ETL in a data warehouse environment.

Extensive experience in database programming, writing cursors, stored procedures, triggers and indexes.

Play the role of technical SME and lead the team with respect to new technical design/architecture or modifying current architecture/code.

Programming Languages: Visual Basic 6.0, C, C++, HTML, PERL Scripting.

Delivery Lead/Informatica Technical SME - Lead/Senior Developer/Business Analyst

Methodologies: Party Modeling, ER/Multidimensional Modeling (Star/Snowflake), Data Warehouse Lifecycle

Operating Systems: MS Windows 7/2000/XP, UNIX, Linux

Other Utilities: Oracle SQL developer 4.0.3.16, Oracle SQL developer 3.0.04, UNIX FTP client, Toad for Oracle 9.0, WinSql 6.0.71.582, Text pad, Agility for Netezza Workbench, Netezza Performance Server, Informatica Admin console, Aqua Data Studio V9 etc.

Hands-on exposure to ETL using Informatica, Oracle and Unix scripting, with knowledge of the Healthcare, Telecommunication and Retail domains.

ETL/Reporting Tools: Informatica 10.1.0, Informatica 9.1.0, Informatica 8.6.1, Informatica 8.1.1, Informatica 7.0, Informatica Power Analyzer 4.1, Business Objects Designer 6.5, Cognos8, Hyperion System9.

Experience of multiple end-to-end data warehouse implementations, migration, testing and support assignments.


An experienced Information Technology management professional with over 10 years of comprehensive experience in Data Warehousing / Business Intelligence environments.


Text

Quicken 2000 windows 10 printing


Atlantis is an innovative word processor carefully designed with the end-user in mind. Easy Video Reverser Easy Video Reverser indeed reverses video clip and save frames from last to first.


3DPageFlip for Video 3DPageFlip for Video is software to help you to convert PDF files to 3D page-flipping eBooks with video embedded feature. landlordPLUS This program will manage rental properties ie: Houses, Factories, Condominiums etc. GIMP Open-source app, short for GNU Image Manipulation Program, which comes bundled with many options and tools, and supports. With its unique flash full-text search technique, simply enter a. Portable Efficient Notes Efficient Notes is an elegant notebook software package. Investment and Business Valuation The Investment and Business Valuation template is ideal for evaluating a wide range of investment, financial analysis and. Database Dictionaries Portuguese Bilingual Dictionaries Portuguese, Spanish, French, German, English, Italian, Dutch, Swedish in SQL, MS-Excel or. This handy software is great for reporting IT support. goScreenCapture goScreenCapture is a free screen capture and annotation tool.


Express Accounts Free for Mac Express Accounts Accounting Software Free for Mac is professional business accounting software. dbForge Data Generator for SQL Server dbForge Data Generator for SQL Server is a GUI tool for a fast generation of large volumes of SQL Server test table data. Tired of reentering all those figures from bank PDF. PDF2CSV Save Hours of Time With PDF2CSV - Converts PDF to CSV Excel. RationalPlan Multi Project RationalPlan Multi Project is a powerful project management software capable of handling multiple interrelated projects. Convert QFX to QIF and import into Quicken, Quicken 2007 Mac. QFX2QIF Finally, the solution to import your transactions. Top Downloads Duplicate Photo Cleaner for Mac Compare photos, find duplicates, delete similar images and manage your albums the smart way! Duplicate Photo Cleaner will.


Text

Avg driver updater serial key september 2018 tinipaste


Increase your Windows performance, optimize system memory. Optimize Internet Explorer, Mozilla, Opera browser. View connections statistics, bytes sent/received and traffic by graph. Tweak every aspect of your Internet connection manually. Improve Internet connection performance with Internet Optimization Wizard. Being the best companion for Windows operating system, it's improving your Internet connection and optimizes your Windows system and other software.

Be notified when your system can be optimized. It's a powerful, all-in-one system performance and Internet optimizer suite.


Integrate the Reset Windows Password utility

AusLogics BoostSpeed is the ideal solution to keep your PC running faster, cleaner and error-free. With a user-friendly interface, you are able to recover lost or forgotten password in a few simple mouse clicks! Recover passwords for VNC, Remote Desktop Connection, Total Commander, Dialup. Recover lost or forgotten passwords easily


Recover Windows 8 / 7 / Vista / 2008 / 2003 / XP / 2000 / NT autologon password (when user is logged on).

Find lost product key (CD Key) for Microsoft Windows, Office, SQL Server, Exchange Server and many other products. It enables you to view passwords hidden behind the asterisks in password fields of many programs, such as Internet Explorer, CoffeeCup FTP, WinSCP, FTP Explorer, FTP Now, Direct FTP, Orbit Downloader, Mail.Ru Agent, Group Mail, Evernote and much more. Reveal passwords hidden behind the asterisks. It also allows you to remove IE Content Advisor password.

FTP Password Recovery - Decrypt FTP passwords stored by most popular FTP clients: CuteFTP, SmartFTP, FileZilla, FlashFXP, WS_FTP, CoreFTP, FTP Control, FTP Navigator, FTP Commander, FTP Voyager, WebDrive, 32bit FTP, SecureFX, AutoFTP, BulletProof FTP, Far Manager, etc. Recover passwords to websites saved in Internet Explorer, Firefox, Opera.

Browser Password Recovery - Recover passwords to Web sites saved in Internet Explorer 6 / 7 / 8 / 9 / 10, Microsoft Edge, Mozilla Firefox, Opera, Apple Safari, Google Chrome, Chrome Canary, Chromium, SeaMonkey, Flock and Avant Browser. SQL Server Password Recovery - Reset forgotten SQL Server login password for SQL Server 2000 / 2005 / 2008 / 2012 / 2014. MS Access Password Recovery - Unlock Microsoft Office Access XP / 2003 / 2000 / 97 / 95 database.


Office Password Recovery - Recover forgotten passwords for Microsoft Office Word / Excel / PowerPoint 2010 / 2007 / 2003 / XP / 2000 / 97 documents.

Archive Password Recovery - Recover lost or forgotten passwords for WinRAR or RAR archives, and ZIP archive created with WinZip, WinRAR, PKZip, etc.


Instantly remove PDF restrictions for editing, copying, printing and extracting data. PDF Password Recovery - Recover lost passwords of protected PDF files (*.pdf). PST Password Recovery - Instantly recover lost or forgotten passwords for Microsoft Outlook 2013 / 2010 / 2007 / 2003 / XP / 2000 / 97 personal folder (.pst) files. Recover lost passwords for protected PDF or Office documents Recovers lost or forgotten passwords for MSN Messenger, Windows Messenger, MSN Explorer, Windows Live Messenger, AIM Pro, AIM 6.x and 7.x, Google Talk, MyspaceIM, Trillian Basic, Trillian Pro, Trillian Astra, Paltalk, Miranda, Digsby, Pidgin, GAIM, EasyWebCam, Camfrog Video Chat, Ipswitch Instant Messaging, etc. Retrieve passwords to mail accounts created in Microsoft Outlook 98 / 2000 / XP / 2003 / 2007 / 2010 / 2013, Outlook Express, Windows Mail, Windows Live Mail, Hotmail, Gmail, Eudora, Incredimail, Becky! Internet Mail, Phoenix Mail, Ipswitch IMail Server, Reach-a-Mail, Mozilla Thunderbird, Opera Mail, The Bat!, PocoMail, Pegasus Mail, etc. It can also reset Windows domain administrator/user password for Windows 2012 / 2008 / 2003 / 2000 Active Directory servers. Instantly bypass, unlock or reset lost administrator and other account passwords on any Windows 10, 8, 7, 2008, Vista, XP, 2003, 2000 system, if you forgot Windows password and couldn't log into the computer. Reset lost Windows administrator or user passwords No need to call in an expensive PC technician.


A useful password recovery software for both newbie and expert with no technical skills required. Retrieve passwords for all popular instant messengers, email clients, web browsers, FTP clients and many other applications. Password Recovery Bundle 2018 is a handy toolkit to recover all your lost or forgotten passwords in an easy way! Quickly recover or reset passwords for Windows, PDF, ZIP, RAR, Office Word/Excel/PowerPoint documents.


Text

Computerized Accounting: Accounting Module Monograph

10 results found. File Size, Preview Links, Download Links. Türev Uniform Accounting Module (Türev Tek Düzen Muhasebe Modülü) – Turevyazilim.com. Technical specifications of the Türev Uniform Accounting Module: Windows-based (WinXP, 200X, VISTA, 7, 8). C Builder, Delphi and Trans SQL were used as the programming languages. MS SQL 2000 Server as the database. Data to EXCEL (XLS) and HTML environments …Source:…



Quote

Find the best and cheap Windows Web Hosting sites at affordable prices.

Are you ready to learn more about Windows web hosting? Let's begin! Microsoft first announced Windows, a GUI for the MS-DOS operating system, in 1983. Since then, multiple enhancements and changes have been made in the Windows family, which comprises the various versions of the Windows operating system. Windows 3.0, Windows 3.1, Windows 95, Windows 98, Windows 2000 and Windows ME are all part of it. The 21st century added Windows XP, Windows Vista, Windows 7, Windows 8 and, more recently, Windows 10. Windows NT, a separate line within the family, brought the operating system to processor architectures beyond x86, such as MIPS, Alpha and PowerPC. With Windows 95, Windows Explorer replaced Program Manager.

Windows is an operating system created by Microsoft. Just like Linux, Windows Server can be used as a web hosting server. Both Windows and non-Windows PCs can connect to a non-Windows server with no issues. Windows, however, is not open source or available for free. It is software sold by Microsoft for a fee, and whoever runs it must hold a license purchased from Microsoft. If you decide to use a Windows-based hosting service instead of Linux, the hosting provider will be the owner of the license and will have paid for it. This cost in turn makes the web hosting service more expensive for you.

The most commonly used web hosting services run on Linux servers. The open-source, inexpensive and robust nature of Linux has made it the default option for most web hosting providers. However, a Linux-based web hosting solution is not suitable for all users. Some users need the best alternative: a Windows server. There could be many reasons for this.

Some of The Important Reasons Include:

· If your website uses classic ASP or the .NET Framework, a Windows server is generally your only option. Using the .NET Framework in turn has its own advantages: it allows programming in several languages, including C++, which is also used in embedded and desktop environments.

· Also, businesses dependent on Microsoft based software like the Microsoft Office Suite will need a Windows based web hosting service. Use of Microsoft Exchange, SharePoint and FrontPage will need a Windows based server.

· The same rule applies for any users working with technologies like C#, Visual Basic, ColdFusion etc.

· The Remote Desktop feature, used directly from your Mac or PC to connect to your server, is available with Windows hosting.

· Some companies have a big pool of programmers highly skilled in the .NET framework and the multiple languages that can be used with it.

Some people believe that if you use or own a Windows computer, you will have to default to the Windows web hosting service! However, this is far from the truth. A web hosting service is not dependent on the operating system used by your device. A Windows computer can easily work with a Linux server.

Sometimes Linux Is a Better Choice

If the user is working with technologies like Ruby, Python, Perl etc., a Linux-based web hosting service is a better choice. Its secure, open-source, inexpensive and robust nature gives it that reputation. Applications like WordPress work well with both Windows and Linux. However, far less community support is available for WordPress on Windows than for WordPress on Linux. Hence, unless the user is completely sure that they will not need help from the user community in resolving issues, Linux would be the better option.

The choice also depends heavily on the databases generally used by the website developer. Linux hosting typically offers MySQL, while Windows hosting typically offers Microsoft Access or Microsoft SQL Server databases, although MySQL itself also runs on Windows.

Control Panels

Users who prefer or have significant expertise in the Plesk hosting panel can use a Windows-based web hosting service, since Plesk is the panel most commonly offered on Windows (it also runs on Linux). On the other hand, users of cPanel, WHM or DirectAdmin need to use a Linux-based hosting service, because those panels do not run on Windows.

Advantages and Disadvantages of Windows Hosting

Let’s look at the advantages first:

· Easier to use, update and manage in general

· Very stable when managed well by the host

· Supports scripting languages like ASP

· Very little interaction with the command-line interface is required

Now for the disadvantages:

· Linux is the industry standard

· Less flexible to customize

· Not the best option for working with the Apache module

· Not very convenient to use with PHP

· A lot more expensive compared to Linux

· Support available for WordPress with Windows is not that great

· Most web hosting providers use Linux by default

Windows Hosting Provider Options

There are many companies that offer packages for Windows based web hosting services. These usually differ based on the price and available features. The prices can vary greatly. They range from as cheap as $1/month to as expensive as $95/month. Weighing the pros and cons of each option along with analyzing the user requirements is key to selecting the right web hosting provider. GoDaddy is the web hosting provider most commonly recommended for Windows hosting. It supports all the technologies a user might need like ASP, .NET and MS SQL databases. They also have great technical support available for any issues that may arise. Other good options for hosting providers include Bluehost and InMotion.

General Information for Beginners or Professionals

Microsoft technologies have always seen a strong presence in the IT and computing world. As a result, IT professionals with Microsoft certifications are always in high demand. Anyone wishing to get Microsoft certified has many different domains to choose from. They vary from server administration, desktop support to specific applications like Lync and Exchange. Recent times have seen a high demand for professionals with certifications in the SharePoint Server administration in particular.

#best web hosting services#Web Hosting Services#Different Types of Web Hosting Services#Best Domain and Hosting Provider#cheap web hosting#domain and hosting#best and cheap hosting#best hosting#web hosting companies#best hosting provider#hosting plans

Text

MongoDB

What is MongoDB?

MongoDB is a document-oriented NoSQL database used for high-volume data storage. Instead of the tables and rows of traditional relational databases, MongoDB uses collections and documents. Documents consist of key-value pairs and are the basic unit of data in MongoDB. Collections contain sets of documents and are the equivalent of relational database tables. MongoDB emerged in the late 2000s, with its first public release in 2009.

MongoDB Features

Each database contains collections, which in turn contain documents. Documents in the same collection can differ from one another in their number of fields, their size, and their content.

The document structure is more in line with how developers construct classes and objects in their programming languages. Developers will often say that their classes are not rows and columns but have a clear structure with key-value pairs.

Documents (the MongoDB counterpart of rows) don’t need to have a schema defined beforehand. Instead, fields can be created on the fly.

The data model available within MongoDB makes it easier to represent hierarchical relationships, arrays, and other more complex structures.

Scalability: MongoDB environments are very scalable. Companies across the world run clusters, some of them with 100+ nodes and millions of documents in the database.

MongoDB Example

In MongoDB, a customer and that customer’s orders can be modeled as a single document.

The _id field is added by MongoDB to uniquely identify the document in the collection.

Note that the order data (OrderID, Product, and Quantity), which in an RDBMS would normally be stored in a separate table, is stored in MongoDB as an embedded document inside the customer document itself. This is one of the key differences in how data is modeled in MongoDB.
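The embedded-document idea can be sketched with a plain Python dictionary, which mirrors the JSON-like shape of a MongoDB document. This is only an illustration: the field values, the CustomerName field, the placeholder _id string, and the helper total_quantity are all assumptions made up for this sketch, and no MongoDB server or driver is involved.

```python
import json

# Hypothetical customer document; all values are invented for illustration.
customer = {
    "_id": "0123456789abcdef01234567",  # placeholder 24-hex-character id
    "CustomerID": 11,
    "CustomerName": "Example Customer",
    # The orders are embedded in the customer document
    # instead of living in a separate orders table.
    "Order": [
        {"OrderID": 111, "Product": "ProductA", "Quantity": 5},
        {"OrderID": 112, "Product": "ProductB", "Quantity": 2},
    ],
}


def total_quantity(doc):
    """Sum Quantity over the embedded orders; no join is needed."""
    return sum(order["Quantity"] for order in doc["Order"])


print(json.dumps(customer, indent=2))
print(total_quantity(customer))
```

Because the orders travel with the customer, reading one document is enough to answer questions about that customer’s orders.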

Key Components of MongoDB Architecture

Below are a few of the common terms used in MongoDB

_id: This is a field required in every MongoDB document. Its value must be unique within the collection, so it acts as the document’s primary key. If you insert a document without an _id field, MongoDB creates the field automatically. For example, in the customer collection above, MongoDB would add an ObjectId, displayed as a unique 24-character hexadecimal string, to each document.

Collection: A grouping of MongoDB documents, equivalent to a table in an RDBMS such as Oracle or MS SQL. A collection exists within a single database. As noted in the introduction, collections don’t enforce any particular structure by default.

Cursor: A pointer to the result set of a query. Clients can iterate through a cursor to retrieve results.

Database: A container for collections, just as an RDBMS database is a container for tables. Each database gets its own set of files on the file system. A MongoDB server can store multiple databases.

Document: A record in a MongoDB collection. A document consists of field names and values.

Field: A name-value pair in a document. A document has zero or more fields. Fields are analogous to columns in relational databases. In the customer example, CustomerID with the value 11 is one of the key-value pairs defined in the document.

JSON: JavaScript Object Notation, a human-readable, plain-text format for expressing structured data. JSON is supported in many programming languages.

One note on the difference between the _id field and a normal field: the _id field uniquely identifies each document in a collection, and MongoDB adds it automatically when a document is inserted without one.
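To make the 24-character identifier less mysterious, here is a minimal sketch of how an ObjectId-style value can be built. It follows the documented 12-byte layout (a 4-byte timestamp, a 5-byte per-process random value, and a 3-byte counter), but the function names new_object_id_hex and insert are hypothetical and the list-based "collection" is an assumption for illustration. Real drivers ship their own ObjectId implementation.

```python
import itertools
import os
import time

# 5 random bytes chosen once per process, as in the ObjectId layout.
_PROCESS_RANDOM = os.urandom(5)
# 3-byte counter that starts at a random value and increments per id.
_counter = itertools.count(int.from_bytes(os.urandom(3), "big"))


def new_object_id_hex(now=None):
    """Return a 24-character hex string shaped like an ObjectId."""
    seconds = int(time.time() if now is None else now)
    count = next(_counter) % 0x1000000  # wrap to 3 bytes
    raw = seconds.to_bytes(4, "big") + _PROCESS_RANDOM + count.to_bytes(3, "big")
    return raw.hex()  # 12 bytes -> 24 hex characters


def insert(collection, doc):
    """Toy insert: add an _id only when the caller did not supply one."""
    stored = dict(doc)
    stored.setdefault("_id", new_object_id_hex())
    collection.append(stored)
    return stored["_id"]


customers = []
print(insert(customers, {"CustomerID": 11}))
```

The sketch shows why the identifier is added at insert time for each document, rather than once for the collection.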

Why Use MongoDB?

Below are a few of the reasons to start using MongoDB:

Document-oriented: Because MongoDB is a NoSQL database, it stores data in documents rather than in a relational format. This makes MongoDB flexible and adaptable to real-world business situations and requirements.

Ad hoc queries: MongoDB supports searching by field, range queries, and regular expression searches. Queries can be made to return only specific fields within documents.

Indexing: Indexes can be created to improve the performance of searches within MongoDB. Any field in a MongoDB document can be indexed.

Replication: MongoDB can provide high availability with replica sets. A replica set consists of two or more MongoDB instances. Any data-bearing member may take the role of primary or secondary at a given time. The primary is the main server: it receives all write operations and, by default, serves reads as well. The secondaries maintain a copy of the primary’s data using built-in replication. When the primary fails, the replica set automatically elects one of the secondaries to become the new primary.

Load balancing: MongoDB uses sharding to scale horizontally by splitting data across multiple MongoDB instances. MongoDB can run over multiple servers, balancing the load and/or duplicating data to keep the system up and running in case of hardware failure.
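The idea of splitting data across instances can be sketched in a few lines. This is a simplified illustration, not MongoDB’s actual mechanism: MongoDB assigns ranges of shard key (or hashed shard key) values to chunks and moves chunks between shards, whereas this sketch simply takes a hash modulo the number of shards. The names shard_for and distribute are hypothetical.

```python
import hashlib


def shard_for(shard_key_value, num_shards):
    """Map a shard key value to a shard number in [0, num_shards)."""
    digest = hashlib.sha256(str(shard_key_value).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def distribute(docs, key_field, num_shards):
    """Place each document on the shard chosen by its key field."""
    shards = [[] for _ in range(num_shards)]
    for doc in docs:
        shards[shard_for(doc[key_field], num_shards)].append(doc)
    return shards


docs = [{"CustomerID": i} for i in range(1, 101)]
shards = distribute(docs, "CustomerID", 3)
print([len(s) for s in shards])  # how many documents landed on each shard
```

Because the same key always maps to the same shard, a lookup by CustomerID only has to visit one instance, which is what lets the load spread across servers.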

Data Modelling in MongoDB

As we have seen from the Introduction section, the data in MongoDB has a flexible schema. Unlike in SQL databases, where you must have a table’s schema declared before inserting data, MongoDB’s collections do not enforce document structure. This sort of flexibility is what makes MongoDB so powerful.

When modeling data in MongoDB, keep the following things in mind:

What are the needs of the application? Look at the business needs of the application and determine what data, and what types of data, it requires. Decide the structure of the documents accordingly.

What are the data retrieval patterns? If you foresee heavy query usage, consider using indexes in your data model to improve the efficiency of queries.

Are frequent inserts, updates and removals happening in the database? If so, reconsider the use of indexes, or incorporate sharding into your data modeling design if required, to improve the efficiency of your overall MongoDB environment.
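The trade-off between read-heavy and write-heavy workloads can be illustrated with a toy index. This sketch uses a Python dictionary as the index, which is an assumption made for brevity (MongoDB’s own indexes are B-tree based), and the class IndexedCollection is hypothetical. It shows only the general principle: an index speeds up lookups but adds work to every write.

```python
from collections import defaultdict


class IndexedCollection:
    """Toy collection with a single-field index."""

    def __init__(self, indexed_field):
        self.docs = []
        self.indexed_field = indexed_field
        self.index = defaultdict(list)  # field value -> positions in self.docs

    def insert(self, doc):
        self.docs.append(doc)
        # Extra work on every write: the index must be kept in sync.
        if self.indexed_field in doc:
            self.index[doc[self.indexed_field]].append(len(self.docs) - 1)

    def find_scan(self, field, value):
        """Full scan: inspects every document in the collection."""
        return [d for d in self.docs if d.get(field) == value]

    def find_indexed(self, value):
        """Index lookup: jumps straight to the matching positions."""
        return [self.docs[i] for i in self.index.get(value, [])]


customers = IndexedCollection("City")
customers.insert({"CustomerID": 11, "City": "Austin"})
customers.insert({"CustomerID": 12, "City": "Boston"})
print(customers.find_indexed("Boston"))
```

With many reads and few writes, the cost paid in insert is quickly repaid. With constant inserts, updates and removals, every additional index multiplies that maintenance cost, which is why the guidance above suggests reconsidering indexes for write-heavy data.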

Difference between MongoDB & RDBMS

Below are some of the key differences between MongoDB and RDBMS:

Relational databases are known for enforcing data integrity. This is not an explicit requirement in MongoDB.

An RDBMS requires that data be normalized first so that it can prevent orphan records and duplicates. Normalizing data requires more tables, which results in more table joins and therefore more keys and indexes. As databases grow, performance can become an issue. Again, this is not an explicit requirement in MongoDB, which is flexible and does not need the data to be normalized first.
