# Piecewise regression analysis


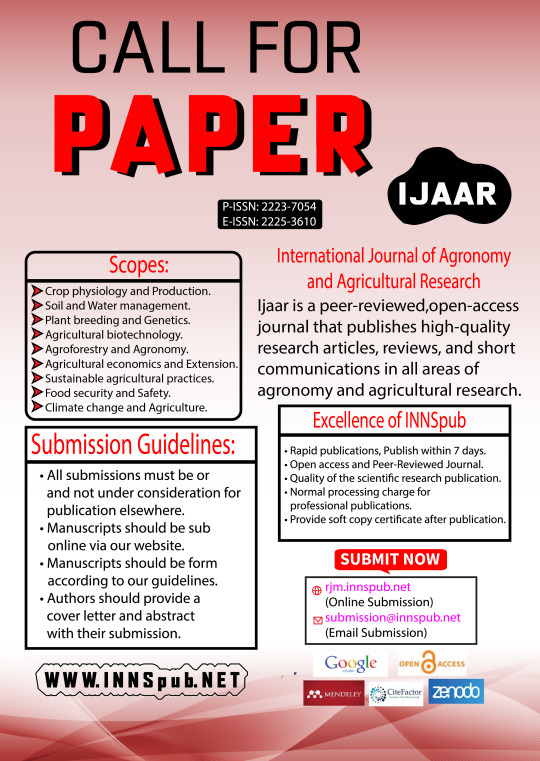

Seasonal Growth Dynamics of Arundo donax L. in the U.S

Abstract

Giant reed (Arundo donax L.) has been extensively evaluated as a dedicated energy crop for biomass and biofuel production in southern Europe and the United States, with very favorable results. Current agronomic and biologic research on giant reed focuses on management practices, development of new cultivars, and determining differences among existing cultivars. Even though detailed information on the growth patterns of giant reed would assist in the development of improved management practices, this information is not available in the United States. Therefore, the objective of this 2-year field study was to describe the seasonal growth patterns of giant reed in Alabama, United States. Changes in both plant height and biomass yield of giant reed over time were well described by a Gompertz function. The fastest growth occurred approximately 66 d after the initiation of regrowth (mid-May), when the absolute maximum growth rates were 0.045 m d⁻¹ for height and 0.516 Mg ha⁻¹ d⁻¹ for biomass. After mid-May, the growth rate decreased until maturation at approximately 200 d after the initiation of regrowth (mid- to late September). The observed maximum average plant height and biomass yield were 5.28 m and 48.56 Mg ha⁻¹, respectively. Yield decreased after maturation until 278 d after the initiation of spring regrowth (early to mid-December), partly as a result of leaf loss, and was relatively stable thereafter.
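The abstract reports that a Gompertz function described height and biomass over time and quotes an absolute maximum growth rate. As a hedged illustration, the sketch below uses one common parametrization, y(t) = a·exp(−b·exp(−c·t)); this excerpt does not state which form the authors fitted (the post's tags mention SAS PROC NLIN), and the parameter values in the usage note are hypothetical, not the study's estimates. For this form the growth rate peaks at the inflection point t* = ln(b)/c (positive only when b > 1), where the rate equals a·c/e and y equals a/e.

```python
import math

def gompertz(t, a, b, c):
    """Gompertz curve y(t) = a * exp(-b * exp(-c * t)).
    a: upper asymptote, b: displacement (> 1 for a positive inflection time),
    c: growth-rate constant (per day if t is in days)."""
    return a * math.exp(-b * math.exp(-c * t))

def gompertz_rate(t, a, b, c):
    """Absolute growth rate dy/dt = a * b * c * exp(-c t) * exp(-b exp(-c t))."""
    return a * b * c * math.exp(-c * t) * math.exp(-b * math.exp(-c * t))

def gompertz_peak(a, b, c):
    """Inflection point of the Gompertz curve.
    The second derivative vanishes where b * exp(-c t) = 1, i.e. t* = ln(b) / c,
    and the maximum absolute growth rate there is a * c / e."""
    t_star = math.log(b) / c
    return t_star, a * c / math.e
```

For example, with hypothetical a = 5.0 m, b = 20 and c = 0.05 d⁻¹, `gompertz_peak(5.0, 20.0, 0.05)` places the fastest growth near day 60 with a peak rate of about 0.092 m d⁻¹. In practice a, b and c would first be estimated by nonlinear least squares on the field data.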

Introduction

Giant reed (Arundo donax L.) is a perennial rhizomatous C3 grass native to East Asia which grows in both grasslands and wetlands and is especially well adapted to Mediterranean environments (Polunin and Huxley, 1987). Since giant reed is sterile, it is propagated vegetatively, either from stem cuttings or rhizome pieces, or by micropropagation. Due to its easy adaptability to different environmental conditions and rapid growth with little or no fertilizer and pesticide inputs, giant reed has been extensively evaluated as a dedicated cellulosic energy crop for biomass and biofuel production in southern Europe and the United States, with very favorable results (Vecchiet et al., 1996; Merlo et al., 1998; Hidalgo and Fernandez, 2000; Lewandowski et al., 2003; Odero et al., 2011; Huang et al., 2014; Nocentini et al., 2018; Monti et al., 2019). Most perennial grasses yield poorly during the year of establishment, but giant reed is an exception: a first-year yield of over 16 Mg ha⁻¹ was reported by Angelini et al. (2005) at a planting density of 20,000 plants ha⁻¹. Biomass yields are typically 20-40 Mg ha⁻¹ year⁻¹ without any fertilization after establishment (Angelini et al., 2005; Cosentino et al., 2005; Angelini et al., 2009). The calorific value of mature giant reed biomass is about 17 MJ kg⁻¹ (Angelini et al., 2005). The average energy input is approximately 2% of the average energy output over a 12-year period (Angelini et al., 2009). Unlike most other grasses, giant reed has a lignin content of 25%, similar to that of wood, along with a cellulose content of 42% and a hemicellulose content of 19%, making it a desirable cellulosic energy crop for both solid and liquid biofuel production (Faix et al., 1989; Scordia et al., 2012; Lemons et al., 2015). Giant reed can also help mitigate carbon dioxide (CO2) emissions from fossil fuels because its rhizomes sequester carbon in the soil.
The reported carbon (C) sequestration by giant reed rhizomes was 40-50 Mg C ha⁻¹ over an 11-year period (Huang, 2012), which is 6-8 times higher than that by the roots of switchgrass (Panicum virgatum L.) (Ma, 1999), a model cellulosic energy crop selected by the United States Department of Energy (Wright, 2007). Current agronomic and biologic research on giant reed focuses on management practices, development of new cultivars, and determining differences among existing cultivars (Nassi o Di Nasso et al., 2010; Nassi o Di Nasso et al., 2011; Nassi o Di Nasso et al., 2013; Dragoni et al., 2016). Even though detailed information on the growth patterns of giant reed would assist in the development of improved management practices, this information is not available in the United States. Therefore, the objective of this study was to describe the seasonal growth patterns of giant reed in Alabama, United States.

Source: Seasonal Growth Dynamics of Arundo donax L. in the U.S | InformativeBD

#Giant reed#Cellulosic energy crop#Plant height and aboveground biomass yield#Growth analysis and Gompertz function#Piecewise regression analysis#SAS PROC NLIN procedure



📖 The Rival Who Outsmarted AI Using Only Excel and Pen-and-Paper Calculus

In the same AI-driven world where Elias Grayson became a millionaire by combining manual pivot table analysis with AI, his childhood nemesis, Victor Kade, harbored a grudge. He had always been second to Elias, but now he sought revenge—not through AI, but by proving that a single human, armed only with Excel and calculus, could still outthink the most advanced machine learning models.

🌍 The AI-Dominated Economy That Crushed Small Entrepreneurs

By the year 2038, AI-controlled corporations had made it nearly impossible for independent entrepreneurs to break into the market. AI-driven financial models, supply chain optimizations, and instant market forecasts ensured that only the biggest corporations had access to real-time predictive analytics.

Small businesses that tried to compete were blocked by AI shortcuts that:

🔹 Restricted high-speed financial transactions to large-scale entities.

🔹 Hid alternative pricing models that could let small companies undercut AI-driven corporations.

🔹 Prevented custom manual optimizations in financial forecasting by assuming "AI knows best."

Elias had built his fortune by finding AI’s blind spots using hybrid analytics, but Victor Kade took a different approach.

📌 The Pen-and-Paper Advantage: Outthinking AI’s Shortcuts

Victor refused to use AI-driven financial models. He believed that modern AI, despite its power, was fundamentally lazy—always using shortcuts, optimizing for the most probable outcomes instead of exploring outlier opportunities.

🔹 Step 1: Identifying AI’s Weakness in Pricing Models

Instead of using AI-restricted financial forecasting software, Victor manually sketched demand curves on paper.

🔎 He realized that AI-based e-commerce pricing models used a modified logistic function to determine optimal price points:

$$P_{\text{optimal}} = \frac{1}{1 + e^{-k(x - x_0)}}$$

Where:

$P_{\text{optimal}}$ = AI's recommended price.

$x$ = product demand.

$k$ = AI's estimate of price sensitivity.

$x_0$ = AI's pre-determined "sweet spot" for maximizing revenue.

📌 AI's Shortcut: The AI ignored irregular demand spikes that a small business could exploit.

✔ Victor’s Pen-and-Paper Insight: Using calculus, he manually differentiated the pricing equation and found that the AI was overly conservative with discount strategies.

Instead of following the AI’s pre-determined price elasticity curve, he used second-order derivatives to find "non-standard" pricing moments where customers would respond disproportionately to small price changes.
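The "differentiate the pricing equation" step can be made concrete. For the logistic form above, the first derivative is k·P·(1 − P) and the second derivative is k²·P·(1 − P)·(1 − 2P), so sensitivity to x peaks where the second derivative changes sign, at x = x₀. A minimal sketch, with hypothetical k and x₀ values; it illustrates the calculus in the story only and is not a pricing model.

```python
import math

def logistic(x, k, x0):
    """P(x) = 1 / (1 + exp(-k * (x - x0)))."""
    return 1.0 / (1.0 + math.exp(-k * (x - x0)))

def logistic_d1(x, k, x0):
    """First derivative: dP/dx = k * P * (1 - P)."""
    p = logistic(x, k, x0)
    return k * p * (1.0 - p)

def logistic_d2(x, k, x0):
    """Second derivative: d2P/dx2 = k**2 * P * (1 - P) * (1 - 2P).
    Positive below x0, zero at x0, negative above x0."""
    p = logistic(x, k, x0)
    return k * k * p * (1.0 - p) * (1.0 - 2.0 * p)

def central_diff(f, x, h=1e-5):
    """Numerical derivative by central differences, used to check the formulas."""
    return (f(x + h) - f(x - h)) / (2.0 * h)
```

With hypothetical k = 2 and x₀ = 3, `logistic_d1(3.0, 2.0, 3.0)` gives the maximum slope k/4 = 0.5, and `logistic_d2` is positive just below x₀ and negative just above it.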

📊 Step 2: Reverse-Engineering AI’s Market Forecasts with Excel

🔹 AI-controlled market reports blocked small competitors by assuming they lacked resources to scale.

🔹 Victor created an alternative pricing model entirely in Excel, using only:

Manually inputted supply chain costs

Customer psychology-driven discount functions

Calculus-based price elasticity optimizations

📌 How He Used Excel Without AI: Instead of using AI-driven forecasting models, he built his own demand curve adjustments using Excel’s Solver tool.

🔹 AI assumed the demand curve was smooth. Victor found points where demand was chaotic.

🔹 AI only ran pre-configured regressions. Victor ran manual piecewise linear regression.
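Piecewise linear regression fits separate straight-line segments joined at a breakpoint. A hedged sketch, assuming a single unknown breakpoint and continuous segments: for each candidate breakpoint c it fits y = b₀ + b₁x + b₂·max(0, x − c) by ordinary least squares (NumPy's `lstsq`) and keeps the c with the smallest residual sum of squares. The helper names and the grid-search strategy are illustrative choices; a nonlinear least-squares routine could estimate c directly instead.

```python
import numpy as np

def fit_hinge(x, y, c):
    """OLS fit of y = b0 + b1*x + b2*max(0, x - c) for a fixed breakpoint c.
    Returns (coefficients, residual sum of squares)."""
    X = np.column_stack([np.ones_like(x), x, np.maximum(0.0, x - c)])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    sse = float(np.sum((y - X @ beta) ** 2))
    return beta, sse

def fit_piecewise(x, y, candidates):
    """Grid search over candidate breakpoints; returns (c, beta, sse) with minimal SSE.
    The slope left of c is b1; the slope right of c is b1 + b2."""
    best = None
    for c in candidates:
        beta, sse = fit_hinge(x, y, c)
        if best is None or sse < best[2]:
            best = (c, beta, sse)
    return best
```

Candidates are usually restricted to interior points (e.g. `x[5:-5]`) so that each segment has enough data to estimate its slope.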

🧮 Step 3: Exploiting AI’s Cost Calculation Errors

📌 AI-controlled corporations assumed that raw materials would always have uniform pricing.

✔ Victor manually calculated supply fluctuations using integral calculus in Excel.

The Hidden Supply Chain Error AI Missed

AI smoothed out fluctuations in supplier pricing to simplify predictions.

But in reality, real-world costs followed an irregular pattern:

$$C_{\text{total}} = \int_{t_1}^{t_2} \left( P_{\text{raw}}(t) + \alpha P_{\text{shipping}}(t) \right) dt$$

Where:

$C_{\text{total}}$ = True total supply cost.

$P_{\text{raw}}(t)$ = Raw material price at time $t$.

$P_{\text{shipping}}(t)$ = Shipping price fluctuation.

$\alpha$ = Weighting factor for logistics costs.
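In a spreadsheet, this integral would typically be approximated from sampled prices with the trapezoidal rule. A minimal sketch, assuming prices are sampled at known times (for example, days); the function name and sample values are hypothetical, and the arguments mirror the symbols in the formula.

```python
def total_supply_cost(times, p_raw, p_shipping, alpha):
    """Trapezoidal approximation of
    C_total = integral over [times[0], times[-1]] of (P_raw(t) + alpha * P_shipping(t)) dt,
    given prices sampled at the (increasing) time points in `times`."""
    if not (len(times) == len(p_raw) == len(p_shipping)) or len(times) < 2:
        raise ValueError("need at least two aligned samples")
    total = 0.0
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]
        f_prev = p_raw[i - 1] + alpha * p_shipping[i - 1]
        f_curr = p_raw[i] + alpha * p_shipping[i]
        total += 0.5 * (f_prev + f_curr) * dt  # area of one trapezoid
    return total
```

For constant prices the result reduces to (P_raw + α·P_shipping) × (t₂ − t₁); for example, `total_supply_cost([0, 1, 2, 3], [10] * 4, [2] * 4, 0.5)` returns 33.0.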

📌 AI ignored local fluctuations in raw material availability.

✔ Victor used only Excel and pen-and-paper calculus to model "hidden" raw material price dips.

By tracking non-linear cost variations manually, he was able to predict supply surges 4 weeks before AI-driven corporations. This let him pre-buy raw materials at 30% lower costs, giving him a massive price advantage.

💰 Step 4: Victor’s Revenge – Becoming a Millionaire Without AI

🔹 Victor used no AI and no automation: just pure Excel, calculus, and human intuition.

🔹 He undercut AI-driven corporations in pricing, making 10x profit margins.

🔹 By tracking overlooked supply chain fluctuations, he controlled niche markets AI ignored.

📌 Final Result:

Year 1: Small-scale business selling only niche discounted products.

Year 2: Millions in profits by exploiting AI’s failure to capture non-linear price fluctuations.

Year 3: AI-driven corporations bought his company just to stop him from disrupting their models.

🚀 The Ultimate Lesson: When AI Takes Shortcuts, Manual Analysis Wins

✔ AI is powerful but lazy: it optimizes too aggressively, ignoring edge-case opportunities.

✔ Human intuition, Excel, and calculus still have value in an AI-driven economy.

✔ Victor's revenge was proving that AI wasn't infallible: a lone entrepreneur could still outthink trillion-dollar AI corporations.


Research paper on Seasonal growth patterns of Arundo donax L. | IJAAR Journal


Ping Huang and David I. Bransby, of the Department of Crop, Soil, and Environmental Sciences, Auburn University, Auburn, Alabama, USA, wrote a research article entitled “Seasonal growth patterns of Arundo donax L. in the United States.” The paper was published in the International Journal of Agronomy and Agricultural Research (IJAAR), volume 16, March 2020 issue.…


#Cellulosic energy crop#Giant reed#Growth analysis and Gompertz function#IJAAR Journal#Piecewise regression analysis#Plant height and aboveground biomass yield#SAS PROC NLIN procedure


Data Science

As tools become simpler to use and the people who use them become more widely available, the value of Data Science will go down. Current infrastructures for developing big-data applications are able to process huge quantities of data through big-data analytics, using clusters of machines that collaborate to perform parallel computations. However, present infrastructures were not designed to meet the requirements of time-critical applications; they are focused more on general-purpose applications than on time-critical ones. Addressing this problem from the perspective of the real-time systems community, this paper considers time-critical big data.

The data in normal and faulty conditions are simulated using the DT model. Both the simulated data and extracted historical data are used to improve fault prediction. A convolutional neural network for fault prediction can be trained with the generated data, which match the characteristics of the autoclave in faulty conditions. The effectiveness of the proposed method is verified through analysis of the results. To stand out as a prolific Data Scientist, being well versed in machine learning is important. Knowing methods like logistic regression, decision trees, and so on can aid the process.

In summary, data science can therefore be described as an applied branch of statistics. A large number of fields and subjects, ranging from everyday life to traditional research fields (e.g., geography and transportation, biology and chemistry, medicine and rehabilitation), involve big data problems. The popularization of various types of networks has diversified the types, issues, and solutions for big data more than ever before. In this paper, we review current research on data types, storage models, privacy, data security, analysis methods, and applications related to network big data. Finally, we summarize the challenges and development of big data to predict current and future trends.

Data scientists are responsible for breaking down huge amounts of data into usable information and creating software programs and algorithms that help companies and organizations determine optimal operations. As data continues to have a major impact on the world, so does Data Science, because of the close relationship between the two. During the 1990s, popular terms for the process of finding patterns in datasets included "knowledge discovery" and "data mining". We examine "citizen" data scientists and the debate between Jeffersonians, who seek to empower every worker with Data Science tools, and Platonists, who argue that democratizing data science leads to anarchy and overfitting. In the present world, the generation and use of data is a crucial economic activity. Data Science supports it through its ability to extract information from massive volumes of data.

It has strong scalability and high processing efficiency, and it has practical and reference value. Data Science is the practice of deriving valuable insights from data. It is emerging to meet the challenges of processing very large datasets, i.e., Big Data, with the explosion of new data constantly generated from various channels, such as smart devices, the web, mobile, and social media. Data science is related to data mining, machine learning, and big data. Digital business is one of the most important and valuable industries today; it combines business and IT elements and their uses.

Rather than performing the data science themselves, they should train and guide users to become self-sufficient. Organizations can establish a formal training program for users who wish to become citizen Data Scientists. At the end, users receive a certification that grants them access to the tools. As they gain more experience and knowledge, they can in turn mentor other business users. This paper presents a movie recommendation system based on the ratings that users provide. For the movie rating system, the impacts of access control and multimedia security are analyzed, and a secure hybrid cloud storage architecture is proposed.

It deals with the definition of a time-critical big-data system from the point of view of requirements, analyzing the particular characteristics of some popular big-data applications. This analysis is complemented by the challenges stemming from the infrastructures that support the applications, proposing an architecture and providing initial performance patterns that connect application costs with infrastructure performance. Cloud computing is essentially a mechanism for virtualizing IT resources, covering software programs and hardware availability. Cloud computing helps reduce the computer hardware needed on site while delivering IT infrastructure. Using minimal software while still making use of applications is another essential feature of cloud computing.

This leads us to understand how the rules of linear algebra operate over arrays of data. We aim to propose some solutions for digital image security, primarily using a probabilistic-randomness approach: chaotic maps and probability. Data scientists use Python and R for data preparation and statistical analysis. Compared to R, Python is general-purpose, more readable, easier, and offers more flexibility for learning. Moreover, Python is used in a number of verticals besides Data Science and offers various applications. Data Science enables companies to leverage social media content to obtain real-time content usage patterns. This allows companies to create audience-specific content, measure content performance, and recommend on-demand content.

By this I mean that some critical issues result from handing these tools to the uninitiated. Such individuals need to combine domain knowledge with adequate data science training, presumably with certification. Innovative data-intensive applications include health, energy, cybersecurity, transport, food, soil and water, resources, advanced manufacturing, environmental change, and so forth.

In 1974, Peter Naur used the term "Data Science" several times in his review, Concise Survey of Computer Methods. In 1977, the International Association for Statistical Computing was formed to link modern computer technology, traditional statistical methodology, and domain expertise to convert data into information. In the same year, Tukey published the book Exploratory Data Analysis, which outlined the importance of using data. If you are also planning to explore a Data Science career with EXL, these EXL Data Science interview questions will help you prepare better.

The proposed Hierarchical Multiresolution Time Series Representation model uses a buffer-based approach that combines one-pass stream processing with hierarchical aggregation to achieve high processing speed without excessive hardware requirements. In addition, this paper presents a new representation based on the proposed model, Hierarchical Multiresolution Linear-function-based Piecewise Statistical Approximation. The proposed representation considers the fluctuations and continuity of modeled processes in order to preserve the fundamental characteristics of time series at reduced dimensionality. The case study results for the generated data set confirm that the proposed model delivers higher and more stable processing speed with lower RAM consumption than a related model, especially when dealing with a larger number of time resolutions. The case study results for real UK smart meter data confirm that the proposed representation leads to less information loss and improved subsequent time series clustering compared with related time series representations.
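To make "hierarchical aggregation" and "reduced dimensionality" concrete, the sketch below uses plain Piecewise Aggregate Approximation (PAA, one mean per segment) and a pairwise-averaging hierarchy. It is a simplified stand-in, not the paper's buffer-based model or its linear-function-based representation, and it assumes in-memory lists whose lengths divide evenly.

```python
def paa(series, segments):
    """Piecewise Aggregate Approximation: replace each of `segments`
    equal-length chunks of the series by its mean."""
    n = len(series)
    if segments <= 0 or n % segments:
        raise ValueError("this sketch needs len(series) divisible by segments")
    size = n // segments
    return [sum(series[i * size:(i + 1) * size]) / size for i in range(segments)]

def hierarchy(series, levels):
    """Build coarser resolutions by averaging neighboring pairs, level by level.
    Level 0 is the raw series; level j has len(series) / 2**j points."""
    out = [list(series)]
    for _ in range(levels):
        prev = out[-1]
        if len(prev) < 2 or len(prev) % 2:
            raise ValueError("each level must have an even number of points")
        out.append([(prev[i] + prev[i + 1]) / 2 for i in range(0, len(prev), 2)])
    return out
```

Because two equal-size segment means average to the mean of the combined segment, each hierarchy level equals PAA with half as many segments, so coarse resolutions can be derived from finer ones without revisiting the raw data.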

When combined with languages like Java, C, and C++, Python becomes extremely adaptable and convenient to use. An estimated 88% of data scientists have a master's degree, while 48% have a doctorate. A good education has become necessary to develop the deep understanding required across a wide range of topics. Most Data Scientists are well versed in using Hadoop and big data queries. DSS was created to provide a premier international forum for researchers, industry practitioners, and domain experts to exchange the latest advances in Data Science and Data Systems, as well as their synergy.

There is, however, a debate between two groups: Jeffersonians and Platonists. The Jeffersonians argue on behalf of the yeoman farmer, or in this case the yeoman scientist. Being deeply committed to the republicanism of science, they are opposed to an aristocracy of knowledge, to an elitism of hackers, subject-matter experts, and statisticians. This leads to better decision making, since users get into the details of the model and the data, creating a deeper understanding of what is driving their decisions. Forbes reported that "unicorn Data Scientists", those rare people who are subject-matter experts with hacking and statistical skills, can earn $240,000 annually.

DSS 2019 is the fifth event in the series, following the success of DSDIS-2015, DSS-2016, DSS-2017, and DSS-2018. There is still no consensus on the definition of Data Science, and some consider it a buzzword. Comet NEOWISE was discovered by analyzing astronomical survey data acquired by a space telescope, the Wide-field Infrared Survey Explorer. To answer the outlined questions, descriptive statistics lets you turn each observation in your data into insights that make sense. Core programming topics include common data structures, writing functions, logic, control flow, searching and sorting algorithms, object-oriented programming, and working with external libraries. Most data roles are programming-based, except for a few such as business intelligence, market analysis, product analyst, and so forth.


Everything has really been falling into place for Python data professionals. We are roughly a decade removed from the beginnings of the modern machine learning platform, inspired largely by the growing ecosystem of open-source Python-based technologies for data scientists. It is a good time to reflect on the progress that has been made, highlight the biggest problems enterprises have with existing ML platforms, and discuss what the next generation of platforms will look like. As we will discuss, we believe the next disruption in the ML platform market will be the rise of data-first AI platforms.

This comprises data that has undefined content and does not fit databases, such as movies, blog posts, etc. There are excellent resources for diving deep into math, but most of us are not made for it, and one does not have to be a gold medalist to learn Data Science. Every Data Science course that I listed there required students to have a decent understanding of programming, math, or statistics. For instance, the most famous course on ML, by Andrew Ng, also depends heavily on an understanding of vector algebra and calculus. Calculus topics include derivatives and limits, derivative rules, the chain rule, partial derivatives, the convexity of functions, local/global minima, the math behind a regression model, and the applied math for training a model from scratch.
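Partial derivatives, convexity, and "training a model from scratch" come together in gradient descent on the mean squared error. A minimal sketch for simple linear regression, assuming a fixed learning rate and epoch count that happen to suit this toy data; in practice the closed-form least-squares solution or a library routine would normally be used.

```python
def fit_line_gd(xs, ys, lr=0.01, epochs=5000):
    """Fit y ≈ w*x + b by gradient descent on MSE = (1/n) * Σ (w*x + b - y)**2.
    The MSE is convex in (w, b), so a small enough step converges to the global minimum."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the MSE with respect to w and b (chain rule).
        dw = (2.0 / n) * sum(r * x for r, x in zip(residuals, xs))
        db = (2.0 / n) * sum(residuals)
        w -= lr * dw
        b -= lr * db
    return w, b
```

On points that lie exactly on y = 3x + 1, for example `fit_line_gd([0, 1, 2, 3, 4], [1, 4, 7, 10, 13])`, the estimates converge to w ≈ 3 and b ≈ 1.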

For more information

360DigiTMG - Data Analytics, Data Science Course Training Hyderabad

Address - 2-56/2/19, 3rd floor, Vijaya towers, near Meridian school, Ayyappa Society Rd, Madhapur, Hyderabad, Telangana 500081

099899 94319

https://g.page/Best-Data-Science


R Packages worth a look

Generalized Path Analysis for Social Networks (gretel) The social network literature features numerous methods for assigning value to paths as a function of their ties. ‘gretel’ systemizes these approaches, casting them as instances of a generalized path value function indexed by a penalty parameter. The package also calculates probabilistic path value and identifies optimal paths in either value framework. Finally, proximity matrices can be generated in these frameworks that capture high-order connections overlooked in primitive adjacency sociomatrices. Novel methods are described in Buch (2019) https://…/analyzing-networks-with-gretel.html. More traditional methods are also implemented, as described in Yang and Knoke (2001).

Coclustering Adjusted Rand Index and Bikm1 Procedure for Contingency and Binary Data-Sets (bikm1) Coclustering of the rows and columns of a contingency or binary matrix, or double binary matrices, and model selection for the number of row and column clusters. Three models are considered: the Poisson latent block model for contingency matrices, the binary latent block model for binary matrices, and a new model we develop, the multiple latent block model for double binary matrices. A new procedure named bikm1 is implemented to investigate the grid of numbers of clusters more efficiently. The model selection criteria studied are the integrated completed likelihood (ICL) and the Bayesian integrated likelihood (BIC). Finally, the coclustering adjusted Rand index (CARI), which measures agreement between coclustering partitions, is implemented. Robert Valerie and Vasseur Yann (2017).

Semi-Supervised Regression Methods (ssr) An implementation of semi-supervised regression methods, including self-learning and co-training by committee, based on Hady, M. F. A., Schwenker, F., & Palm, G. (2009). Users can define which set of regressors to use as base models from the ‘caret’ package, other packages, or custom functions.
Planning and Analysing Survival Studies under Non-Proportional Hazards (nph) Piecewise constant hazard functions are used to flexibly model survival distributions with non-proportional hazards and to simulate data from the specified distributions. Also, a function to calculate weighted log-rank tests for the comparison of two hazard functions is included. Finally, a function to calculate a test using the maximum of a set of test statistics from weighted log-rank tests is provided. This test utilizes the asymptotic multivariate normal joint distribution of the separate test statistics. The correlation is estimated from the data. http://bit.ly/2YsLpBb
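Under a piecewise constant hazard, the survival function follows directly from the cumulative hazard, S(t) = exp(−H(t)), where H(t) sums rate × time spent in each interval up to t. A minimal Python sketch of that formula; the function names are illustrative and are not the nph package's R API.

```python
import math

def cumulative_hazard(t, breaks, rates):
    """H(t) = integral of the hazard from 0 to t for a piecewise constant hazard.
    breaks[i] is the start of interval i and rates[i] is the hazard on
    [breaks[i], breaks[i+1]); the last rate applies to [breaks[-1], inf)."""
    if len(breaks) != len(rates) or breaks[0] != 0:
        raise ValueError("need one rate per interval, starting at time 0")
    total = 0.0
    for i, rate in enumerate(rates):
        start = breaks[i]
        end = breaks[i + 1] if i + 1 < len(breaks) else math.inf
        if t <= start:
            break
        total += rate * (min(t, end) - start)
    return total

def survival(t, breaks, rates):
    """S(t) = exp(-H(t))."""
    return math.exp(-cumulative_hazard(t, breaks, rates))
```

For instance, a hazard of 0.5 on [0, 1) and 1.0 afterwards gives H(2) = 0.5 + 1.0 = 1.5, so S(2) = exp(−1.5). Non-proportional hazards arise when two groups use different rate vectors whose ratio changes across intervals.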


Polygenic Determinants for Subsequent Breast Cancer Risk in Survivors of Childhood Cancer: The St Jude Lifetime Cohort Study (SJLIFE)

Purpose: The risk of subsequent breast cancer among female childhood cancer survivors is markedly elevated. We aimed to determine genetic contributions to this risk, focusing on polygenic determinants implicated in breast cancer susceptibility in the general population.

Experimental Design: Whole-genome sequencing (30x) was performed on survivors in the St Jude Lifetime Cohort, and germline mutations in breast cancer predisposition genes were classified for pathogenicity. A polygenic risk score (PRS) was constructed for each survivor using 170 established common risk variants. Relative rate (RR) and 95% confidence interval (95% CI) of subsequent breast cancer incidence were estimated using multivariable piecewise exponential regression.

Results: The analysis included 1,133 female survivors of European ancestry (median age at last follow-up = 35.4 years; range, 8.4–67.4), of whom 47 were diagnosed with one or more subsequent breast cancers (median age at subsequent breast cancer = 40.3 years; range, 24.5–53.0). Adjusting for attained age, age at primary diagnosis, chest irradiation, doses of alkylating agents and anthracyclines, and genotype eigenvectors, RRs for survivors with PRS in the highest versus lowest quintiles were 2.7 (95% CI, 1.0–7.3), 3.0 (95% CI, 1.1–8.1), and 2.4 (95% CI, 0.1–81.1) for all survivors and survivors with and without chest irradiation, respectively. Similar associations were observed after excluding carriers of pathogenic/likely pathogenic mutations in breast cancer predisposition genes. Notably, the PRS was associated with the subsequent breast cancer rate under the age of 45 years (RR = 3.2; 95% CI, 1.2–8.3).

Conclusions: Genetic profiles composed of small-effect common variants and large-effect predisposing mutations can inform personalized breast cancer risk and surveillance/intervention in female childhood cancer survivors. Clin Cancer Res; 1–6. ©2018 AACR.

https://ift.tt/2Rlo2ol
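Piecewise exponential regression is commonly fitted by splitting each person's follow-up at chosen cut points (for example, on an attained-age scale), then modeling event counts per interval with Poisson regression and a log person-time offset. The sketch below shows only the splitting step and crude per-interval rates; the cut points and subjects are hypothetical, and it does not reproduce the study's adjusted analysis.

```python
import math

def split_person_time(entry_age, exit_age, event, cutpoints):
    """Split follow-up (entry_age, exit_age] at cutpoints into intervals
    (edge_j, edge_j+1]. Returns (interval_index, person_time, events) rows;
    the event, if any, is assigned to the interval containing exit_age."""
    edges = [-math.inf] + sorted(cutpoints) + [math.inf]
    rows = []
    for j in range(len(edges) - 1):
        lo, hi = max(entry_age, edges[j]), min(exit_age, edges[j + 1])
        if hi > lo:
            in_interval = edges[j] < exit_age <= edges[j + 1]
            rows.append((j, hi - lo, int(bool(event) and in_interval)))
    return rows

def interval_rates(subjects, cutpoints):
    """Crude event rate (events / person-time) in each interval, pooled over subjects.
    Each subject is a tuple (entry_age, exit_age, event)."""
    n = len(cutpoints) + 1
    person_time, events = [0.0] * n, [0] * n
    for entry_age, exit_age, event in subjects:
        for j, pt, d in split_person_time(entry_age, exit_age, event, cutpoints):
            person_time[j] += pt
            events[j] += d
    return [e / p if p > 0 else float("nan") for e, p in zip(events, person_time)]
```

In a full analysis, the split rows (interval index, person-time, event indicator, plus covariates such as PRS quintile) would be passed to a Poisson GLM with log(person-time) as an offset; exponentiated coefficients are then rate ratios.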


In-depth introduction to machine learning in 15 hours of expert videos

In January 2014, Stanford University professors Trevor Hastie and Rob Tibshirani (authors of the legendary Elements of Statistical Learning textbook) taught an online course based on their newest textbook, An Introduction to Statistical Learning with Applications in R (ISLR). I found it to be an excellent course in statistical learning (also known as “machine learning”), largely due to the high quality of both the textbook and the video lectures. And as an R user, it was extremely helpful that they included R code to demonstrate most of the techniques described in the book.

If you are new to machine learning (and even if you are not an R user), I highly recommend reading ISLR from cover to cover to gain both a theoretical and practical understanding of many important methods for regression and classification. It is available as a free PDF download from the authors’ website.

If you decide to attempt the exercises at the end of each chapter, there is a GitHub repository of solutions provided by students you can use to check your work.

As a supplement to the textbook, you may also want to watch the excellent course lecture videos (linked below), in which Dr. Hastie and Dr. Tibshirani discuss much of the material. In case you want to browse the lecture content, I’ve also linked to the PDF slides used in the videos.

Chapter 1: Introduction (slides, playlist)

Opening Remarks and Examples (18:18)

Supervised and Unsupervised Learning (12:12)

Chapter 2: Statistical Learning (slides, playlist)

Statistical Learning and Regression (11:41)

Curse of Dimensionality and Parametric Models (11:40)

Assessing Model Accuracy and Bias-Variance Trade-off (10:04)

Classification Problems and K-Nearest Neighbors (15:37)

Lab: Introduction to R (14:12)

Chapter 3: Linear Regression (slides, playlist)

Simple Linear Regression and Confidence Intervals (13:01)

Hypothesis Testing (8:24)

Multiple Linear Regression and Interpreting Regression Coefficients (15:38)

Model Selection and Qualitative Predictors (14:51)

Interactions and Nonlinearity (14:16)

Lab: Linear Regression (22:10)

Chapter 4: Classification (slides, playlist)

Introduction to Classification (10:25)

Logistic Regression and Maximum Likelihood (9:07)

Multivariate Logistic Regression and Confounding (9:53)

Case-Control Sampling and Multiclass Logistic Regression (7:28)

Linear Discriminant Analysis and Bayes Theorem (7:12)

Univariate Linear Discriminant Analysis (7:37)

Multivariate Linear Discriminant Analysis and ROC Curves (17:42)

Quadratic Discriminant Analysis and Naive Bayes (10:07)

Lab: Logistic Regression (10:14)

Lab: Linear Discriminant Analysis (8:22)

Lab: K-Nearest Neighbors (5:01)

Chapter 5: Resampling Methods (slides, playlist)

Estimating Prediction Error and Validation Set Approach (14:01)

K-fold Cross-Validation (13:33)

Cross-Validation: The Right and Wrong Ways (10:07)

The Bootstrap (11:29)

More on the Bootstrap (14:35)

Lab: Cross-Validation (11:21)

Lab: The Bootstrap (7:40)

Chapter 6: Linear Model Selection and Regularization (slides, playlist)

Linear Model Selection and Best Subset Selection (13:44)

Forward Stepwise Selection (12:26)

Backward Stepwise Selection (5:26)

Estimating Test Error Using Mallows’ Cp, AIC, BIC, Adjusted R-squared (14:06)

Estimating Test Error Using Cross-Validation (8:43)

Shrinkage Methods and Ridge Regression (12:37)

The Lasso (15:21)

Tuning Parameter Selection for Ridge Regression and Lasso (5:27)

Dimension Reduction (4:45)

Principal Components Regression and Partial Least Squares (15:48)

Lab: Best Subset Selection (10:36)

Lab: Forward Stepwise Selection and Model Selection Using Validation Set (10:32)

Lab: Model Selection Using Cross-Validation (5:32)

Lab: Ridge Regression and Lasso (16:34)

Chapter 7: Moving Beyond Linearity (slides, playlist)

Polynomial Regression and Step Functions (14:59)

Piecewise Polynomials and Splines (13:13)

Smoothing Splines (10:10)

Local Regression and Generalized Additive Models (10:45)

Lab: Polynomials (21:11)

Lab: Splines and Generalized Additive Models (12:15)

Chapter 8: Tree-Based Methods (slides, playlist)

Decision Trees (14:37)

Pruning a Decision Tree (11:45)

Classification Trees and Comparison with Linear Models (11:00)

Bootstrap Aggregation (Bagging) and Random Forests (13:45)

Boosting and Variable Importance (12:03)

Lab: Decision Trees (10:13)

Lab: Random Forests and Boosting (15:35)

Chapter 9: Support Vector Machines (slides, playlist)

Maximal Margin Classifier (11:35)

Support Vector Classifier (8:04)

Kernels and Support Vector Machines (15:04)

Example and Comparison with Logistic Regression (14:47)

Lab: Support Vector Machine for Classification (10:13)

Lab: Nonlinear Support Vector Machine (7:54)

Chapter 10: Unsupervised Learning (slides, playlist)

Unsupervised Learning and Principal Components Analysis (12:37)

Exploring Principal Components Analysis and Proportion of Variance Explained (17:39)

K-means Clustering (17:17)

Hierarchical Clustering (14:45)

Breast Cancer Example of Hierarchical Clustering (9:24)

Lab: Principal Components Analysis (6:28)

Lab: K-means Clustering (6:31)

Lab: Hierarchical Clustering (6:33)

Interviews (playlist)

Interview with John Chambers (10:20)

Interview with Bradley Efron (12:08)

Interview with Jerome Friedman (10:29)

Interviews with statistics graduate students (7:44)

https://www.r-bloggers.com/in-depth-introduction-to-machine-learning-in-15-hours-of-expert-videos/


Link

#Giant reed#Cellulosic energy crop#Plant height and aboveground biomass yield#Growth analysis and Gompertz function#Piecewise regression analysis#SAS PROC NLIN procedure

