#SQL Server Agent Error


Informatica Training in Chennai | Informatica Cloud IDMC

The Role of the Secure Agent in Informatica Cloud

Introduction

Informatica Cloud is a powerful data integration platform that enables businesses to connect, transform, and manage data across cloud and on-premises environments. One of its core components is the Secure Agent, which plays a crucial role in facilitating secure communication between Informatica Cloud and an organization's local network. This article explores the role, functionality, and benefits of the Secure Agent in Informatica Cloud.

What is the Secure Agent?

The Secure Agent is a lightweight, self-upgrading runtime engine installed on a customer’s local network or cloud infrastructure. It acts as a bridge between on-premises applications, databases, and Informatica Intelligent Cloud Services (IICS). By using the Secure Agent, businesses can process, integrate, and synchronize data between cloud and on-premises sources securely.

Key Roles and Responsibilities of the Secure Agent

1. Secure Data Movement

The Secure Agent ensures safe and encrypted data transmission between on-premises systems and Informatica Cloud. It eliminates the need to expose sensitive business data directly to the internet by handling all connections securely behind a company’s firewall.

2. Data Integration and Processing

A primary function of the Secure Agent is executing ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes. It extracts data from source systems, applies necessary transformations, and loads it into the target system. By running these processes locally, organizations can optimize performance while maintaining data security.

3. Job Execution and Management

The Secure Agent is responsible for executing data integration tasks, mapping configurations, and workflow automation. It supports various Informatica Cloud services, including:

Data Integration

Application Integration

API Management

Data Quality

Master Data Management (MDM)

It efficiently manages job execution, ensuring data pipelines operate smoothly.

4. Connectivity with On-Premises and Cloud Sources

Organizations often have hybrid environments where some data resides in on-premises databases while others exist in cloud platforms. The Secure Agent enables seamless connectivity to databases like Oracle, SQL Server, MySQL, and applications such as SAP, Salesforce, Workday, and more.

5. Security and Compliance

Security is a major concern for enterprises handling sensitive data. The Secure Agent keeps data within the organization’s control by encrypting it at rest and in transit, which helps organizations meet the requirements of regulations and standards such as GDPR, HIPAA, and SOC 2.

Benefits of Using the Secure Agent

1. Enhanced Security

Prevents data exposure to the internet

Uses encryption and secure authentication mechanisms

Runs behind the firewall, ensuring compliance with security policies

2. Performance Optimization

Enables on-premises data processing, reducing latency

Supports parallel execution of tasks for better efficiency

Handles large volumes of data with optimized performance

3. Scalability and Reliability

Auto-upgrades to the latest versions without manual intervention

Distributes workloads efficiently, ensuring high availability

Handles failures through automatic retries and error logging

4. Simplified Management

Intuitive UI for monitoring and managing tasks

Seamless integration with Informatica Cloud for centralized administration

No need for complex firewall configurations or VPN setups

How to Install and Configure the Secure Agent

Setting up the Secure Agent is straightforward:

Download the Secure Agent from the Informatica Cloud UI.

Install the agent on a local server or cloud instance.

Authenticate the agent using the provided credentials.

Configure connectivity to required on-premises or cloud applications.

Verify the installation and start running data integration tasks.

Conclusion

The Secure Agent in Informatica Cloud is a crucial component for organizations looking to integrate and process data securely across hybrid environments. It ensures seamless connectivity, secure data movement, optimized performance, and compliance with industry standards. By leveraging the Secure Agent, businesses can achieve robust data integration without compromising security or performance, making it an essential tool in the modern data landscape.


Monitoring

Just as a heart monitor in a hospital makes sure that a patient’s heart is beating, and at the right rate, software monitoring watches computer metrics, records them, and emits an alert if something unusual happens or if something could stop the computer from working properly.

“You cannot fix or improve what you cannot measure” is a famous saying in the tech industry. In the age of data-ism, monitoring how our software systems are doing is important.

Web stack monitoring can be broken down into 2 categories:

Application monitoring: getting data about your running software and making sure it is behaving as expected

Server monitoring: getting data about your virtual or physical server and making sure they are not overloaded (could be CPU, memory, disk or network overload)
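To make the server monitoring category concrete, here is a minimal sketch in Python of the "record a metric, compare it to a limit, emit an alert" loop described above. The metric names and limits are assumptions chosen for illustration, not values from any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str   # hypothetical metric name, e.g. "cpu_percent"
    limit: float  # alert when the observed value is strictly above this


# Illustrative limits only; real limits depend on the workload.
THRESHOLDS = [
    Threshold("cpu_percent", 90.0),
    Threshold("memory_percent", 85.0),
    Threshold("disk_percent", 80.0),
]


def check_metrics(sample, thresholds):
    """Return one alert message per metric in `sample` that exceeds its limit."""
    alerts = []
    for threshold in thresholds:
        value = sample.get(threshold.metric)
        # Metrics missing from the sample are skipped rather than treated as alerts.
        if value is not None and value > threshold.limit:
            alerts.append(
                f"{threshold.metric} at {value:.1f} exceeds limit {threshold.limit:.1f}"
            )
    return alerts
```

Calling `check_metrics({"cpu_percent": 97.2, "memory_percent": 41.0}, THRESHOLDS)` returns a single alert for the CPU. A real monitoring agent would collect the sample itself and send the alerts somewhere; both are left out here.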

Here are a few well-known monitoring tools:

NewRelic

NewRelic requires you to add a piece of JavaScript to your website; this agent collects information and sends it back to the New Relic servers. It gives you a detailed analysis of how quickly your website loads in a browser, at every level of the stack. If the website is loading too slowly or users are experiencing errors (500), you can configure an alert to be triggered. NewRelic now does much more than this; I’ll let you discover the rest.

DataDog

DataDog allows you to measure and monitor everything with graphs. It gathers performance data from all your application components. The service has a lot of integrations. You usually just need to configure your alerts properly and you are good to go with solid monitoring coverage.

Uptime Robot

Uptime Robot is a simple service that will check that your website is responding from multiple locations in the world. This is the bare minimum when you host a website.

Nagios

Nagios is an open source project started in 1999; it is among the most widely used monitoring tools in the tech industry. It is, however, seen as outdated. Nagios had trouble adapting to the rise of the cloud but is slowly trying to catch up.

WaveFront

Wavefront is a cutting-edge monitoring service founded by great software engineers who’ve built monitoring tools for the best tech companies in Silicon Valley. The idea is to be able to analyze anything that can produce data points. A query language that looks like SQL allows users to apply mathematical operations to these data points to extract values or detect anomalies from the time series data. While it takes some time to get used to the tool, it’s the type of monitoring that the best companies are using. To my knowledge, LinkedIn, Facebook and Dropbox are using a very similar tool for their monitoring needs.


Exploring the Power of SQL Server Change Data Capture (CDC)

In the realm of database management, the concept of Change Data Capture (CDC) has emerged as a transformative tool, and SQL Server CDC stands as a prime example. As a neutral observer, we dive into the world of SQL Server CDC to provide insights into its functionalities, benefits, and the remarkable impact it can have on modern data-driven businesses.

Understanding SQL Server CDC:

Change Data Capture, or CDC, is an integral part of Microsoft SQL Server's arsenal. It’s a feature that tracks and captures changes made to data within SQL Server databases. Designed for organizations seeking real-time insights and analysis, SQL Server CDC empowers businesses to keep pace with data modifications seamlessly.

How SQL Server CDC Works:

SQL Server CDC operates through a two-step process: capture and read. During the capture phase, CDC monitors and records data changes directly from the database transaction logs. In the read phase, the captured changes are extracted and made available for consumption by external applications.

Benefits of SQL Server CDC:

Real-time Data Integration: One of the most significant advantages of SQL Server CDC is its ability to provide real-time data integration. The captured data changes can be efficiently propagated to data warehouses, data lakes, or other systems, ensuring that all downstream applications stay updated with the latest information.

Efficient ETL Processes: CDC significantly streamlines Extract, Transform, Load (ETL) processes. By capturing only the changed data, organizations can reduce the load on ETL operations, leading to faster data movement and analytics.

Historical Data Analysis: With CDC, businesses gain the ability to analyze historical data changes. This is invaluable for auditing purposes, compliance reporting, and identifying trends over time.

Minimized Latency: SQL Server CDC operates in near-real-time, ensuring that changes are captured and made available for consumption with minimal delay. This low latency is crucial for applications that require up-to-the-minute data.

Improved Data Accuracy: CDC eliminates the need for manual tracking and data entry, reducing the chances of human errors. This results in improved data accuracy across the organization.

Implementation of SQL Server CDC:

Implementing SQL Server CDC requires careful planning and execution. The process involves several key steps:

Enabling CDC: CDC must first be enabled for the database and then for each individual table to be tracked. Once enabled, SQL Server automatically tracks changes made to the specified tables.

CDC Control Functions: SQL Server provides a set of control functions that allow users to manage and interact with CDC. These functions provide access to captured change data.

Data Capture Jobs: SQL Server Agent jobs capture changes from the transaction log and populate the CDC change tables. These jobs must keep running for the captured data to stay up to date; if SQL Server Agent is stopped, no new changes are captured until it is restarted.

Consuming CDC Data: Businesses can consume the captured change data using various methods, including SQL queries, SSIS (SQL Server Integration Services) packages, and custom applications.
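The enable and consume steps above can be sketched as a pair of Python helpers that only build the T-SQL text. The stored procedures and functions named in the strings (`sys.sp_cdc_enable_db`, `sys.sp_cdc_enable_table`, `sys.fn_cdc_get_min_lsn`, `sys.fn_cdc_get_max_lsn`, `cdc.fn_cdc_get_all_changes_<capture_instance>`) are SQL Server's documented CDC objects; the helper names and the database, schema, and table names are hypothetical, and the sketch assumes the default capture instance name of `<schema>_<table>`.

```python
def enable_cdc_script(database, schema, table, role_name=None):
    """Build T-SQL that enables CDC on a database and then on one table.

    Names are interpolated without escaping, so this is only suitable for
    trusted, hard-coded identifiers in an illustration like this one.
    """
    # @role_name is a required parameter; NULL means access is not gated by a role.
    role = "NULL" if role_name is None else f"N'{role_name}'"
    return "\n".join([
        f"USE [{database}];",
        "EXEC sys.sp_cdc_enable_db;",
        "EXEC sys.sp_cdc_enable_table",
        f"    @source_schema = N'{schema}',",
        f"    @source_name = N'{table}',",
        f"    @role_name = {role};",
    ])


def read_changes_script(schema, table):
    """Build T-SQL that reads every captured change for one table."""
    capture_instance = f"{schema}_{table}"  # assumed default capture instance name
    return "\n".join([
        f"DECLARE @from_lsn binary(10) = sys.fn_cdc_get_min_lsn(N'{capture_instance}');",
        "DECLARE @to_lsn binary(10) = sys.fn_cdc_get_max_lsn();",
        f"SELECT * FROM cdc.fn_cdc_get_all_changes_{capture_instance}"
        "(@from_lsn, @to_lsn, N'all');",
    ])
```

For example, `print(enable_cdc_script("SalesDb", "dbo", "Orders"))` prints a script that could be pasted into a query window. Executing the scripts, and checking that SQL Server Agent is running so the capture job can do its work, is left to whatever client the team already uses.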

Challenges and Considerations:

While SQL Server CDC offers compelling advantages, its implementation is not without challenges:

Storage Requirements: CDC captures every data change, which can lead to increased storage requirements. Adequate storage planning is essential to accommodate the growing change data.

Impact on Performance: While CDC minimally impacts the performance of the source database, it may still affect transaction log size. Regular log management is necessary to prevent performance degradation.

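Data Cleanup: Change data accumulates quickly, so a cleanup strategy is crucial. SQL Server creates a cleanup job that removes change data older than a configurable retention period (three days by default); adjust the retention to match how long downstream consumers need the history.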
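As a small illustration of the cleanup consideration, the sketch below builds the T-SQL that changes the retention of the CDC cleanup job. `sys.sp_cdc_change_job` and its `@retention` parameter (expressed in minutes) are part of SQL Server; the helper name is hypothetical.

```python
MINUTES_PER_DAY = 24 * 60


def cleanup_retention_script(retention_days):
    """Build T-SQL that sets how long CDC change rows are kept."""
    if retention_days <= 0:
        raise ValueError("retention_days must be positive")
    # sp_cdc_change_job expects the retention period in minutes.
    minutes = retention_days * MINUTES_PER_DAY
    return (
        "EXEC sys.sp_cdc_change_job "
        f"@job_type = N'cleanup', @retention = {minutes};"
    )
```

`cleanup_retention_script(7)` yields a statement that keeps one week of change data. The new setting only takes effect once the cleanup job has been stopped and started again, so treat this as one step of a maintenance procedure rather than a complete one.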

SQL Server Change Data Capture is a potent tool for organizations aiming to make the most of their data assets. As a neutral observer, we've delved into the world of SQL Server CDC, highlighting its benefits, implementation steps, and considerations. The ability to capture and propagate real-time data changes brings unmatched efficiency, accuracy, and insights to modern businesses.

While the journey towards implementing SQL Server CDC may present challenges, the rewards in terms of enhanced data integration, historical analysis, and data accuracy are worth the effort. For businesses seeking to stay ahead in the data-driven landscape, embracing SQL Server CDC can be a game-changing decision that fuels growth, innovation, and competitive advantage.


The Benefits of SQL Server Health Checks

Several benefits can be obtained from SQL Server health checks. They may not be at the top of your DBA's to-do list, but a regular program of health checks can help you identify issues that affect the operation of your databases and servers. We will look at several reasons you should begin doing SQL Server health checks if they aren't already part of your routine.

What Is a SQL Server Health Check?

Many moving parts contribute to the functioning of your SQL Servers. Getting a handle on how your server works, even under the best circumstances, can be difficult for the most experienced DBAs. When problems occur, and users or management complain about your systems' speed and operation, the pressure to find the underlying issues can become overwhelming. There are many possible causes for a database's poor performance. Where can a stressed-out DBA turn for help? Conducting a health check on your SQL Servers is a good place to start.

A SQL Server health check is a process in which you compile information that can be used to pinpoint issues related to your systems' operation. A health check can also help you understand the SQL Servers for which you are responsible, as well as shed light on problems they may be experiencing.

An effective SQL Server health check should address specific aspects of your server and environment. Some of the things a useful health check will do include:

· Validating system architecture, code, and database design

· Discovering problem code that is causing slow execution

· Finding possible scalability and capacity problems

· Verifying that the SQL environment and its users are secure

· Assisting in planning for application and platform upgrades

· Providing relevant information to guide cloud migration or virtualization

What Can You Achieve with Health Checks?

SQL Server health checks can be used to gather knowledge about your environment and to tackle particular problems that are affecting its performance. Let's consider several advantages that can result from the information gained through server health checks.

· You will get a deeper understanding of a SQL Server and how it fits in with the overall environment and business strategy. To do this, you need to collect information about the applications that run on the server and any SLAs they have to meet. After obtaining good high-level knowledge of the server instance, you can move on to more technical aspects of the system, such as the infrastructure on which it is running.

· Low-level details of the SQL Server instance and its databases must be included in health checks. This data gives a full picture of the system, which can be used for troubleshooting or simply as baseline documentation for later comparative analysis. You may be alerted to outdated service packs, faulty configuration, and SQL Agent jobs that fail.

· Eliminating security vulnerabilities is of utmost importance in the present landscape of cybercrime and compliance. Health checking your SQL Servers can find problems that make you more susceptible to an attack or that would cause you to fail a compliance audit. The health check could indicate that permissions need to be reworked or that unacceptable encryption levels are used for sensitive information. You can save yourself and your company a great deal of time and money by handling these issues proactively.

· Server health checks help ensure that proper maintenance is performed regularly on the systems. They confirm that commands performing functions such as updating statistics and rebuilding and reorganizing indexes, which are critical to keeping the servers running efficiently, are run regularly.

· Assessing the backup plans and procedures in place for every SQL Server instance is a critical but occasionally overlooked task that a DBA needs to perform. A health check can help identify gaps in your backup procedures so that they can be addressed before you are called upon to perform a restore for which you are not prepared. The lack of valid backups can cripple a company hit by a disaster or ransomware attack.
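One of the checks listed above, finding SQL Agent jobs that fail, can be sketched in Python as follows. The query reads job outcomes from `msdb.dbo.sysjobhistory` (rows with `step_id = 0` hold the overall job outcome, and `run_status = 0` means the run failed); the function name and the row format are assumptions made for this example, and fetching the rows is left to whatever database client is in use.

```python
from collections import Counter

# Meaning of the run_status codes in msdb.dbo.sysjobhistory.
RUN_STATUS = {0: "Failed", 1: "Succeeded", 2: "Retry", 3: "Canceled", 4: "In Progress"}

# T-SQL that returns one (job name, run_status) row per recorded job outcome.
JOB_OUTCOMES_QUERY = """
SELECT j.name, h.run_status
FROM msdb.dbo.sysjobhistory AS h
JOIN msdb.dbo.sysjobs AS j ON j.job_id = h.job_id
WHERE h.step_id = 0;
"""


def failed_job_counts(rows):
    """Count failed runs per job from (job_name, run_status) rows."""
    return Counter(
        name for name, status in rows if RUN_STATUS.get(status) == "Failed"
    )
```

Given rows such as `[("Nightly backup", 0), ("Nightly backup", 1), ("Index rebuild", 0)]`, the function reports one failure for each of the two jobs. A fuller health check would also look at when the failures happened and at the error text in the history rows.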

How to Run Complete SQL Server Health Checks

Hopefully, you are convinced of the benefits of conducting periodic SQL Server health checks. The problems that can be found through these checks are invaluable to a DBA concerned with the security and performance of their servers and databases. The question then becomes how best to perform a SQL Server health check.

As with many aspects of the IT business, you have options. You can write manual scripts and queries to extract the exact details that you require. This can be very time-consuming and lends itself to organizational complexity and coding errors.

A better alternative is to use a dedicated tool designed to detect performance, security, and data protection problems that affect your SQL Server.

A SQL Server health check tool can be used to generate reports that help you tune your physical, virtual, and cloud servers. You can use it to summarize the health of your complete installation or drill down to investigate specific servers. Such a tool delivers prioritized lists of potential performance optimizations and can automatically create executable SQL scripts to streamline your systems.

You can conduct SQL Server health checks on an ad hoc basis or as part of a regularly scheduled health check initiative. Don't wait until the patient exhibits symptoms of an illness.


Avg driver updater serial key september 2018 tinipaste

Increase your Windows performance, optimize system memory. Optimize Internet Explorer, Mozilla, Opera browser. View connections statistics, bytes sent/received and traffic by graph. Tweak every aspect of your Internet connection manually. Improve Internet connection performance with Internet Optimization Wizard. Being the best companion for the Windows operating system, it improves your Internet connection and optimizes your Windows system and other software. Be notified when your system can be optimized. It's a powerful, all-in-one system performance and Internet optimizer suite.

Integrate the Reset Windows Password utility. AusLogics BoostSpeed is the ideal solution to keep your PC running faster, cleaner and error-free. With a user-friendly interface, you are able to recover lost or forgotten passwords in a few simple mouse clicks! Recover passwords for VNC, Remote Desktop Connection, Total Commander, Dialup. Recover lost or forgotten passwords easily.

Recover Windows 8 / 7 / Vista / 2008 / 2003 / XP / 2000 / NT autologon password (when user is logged on). Find lost product key (CD Key) for Microsoft Windows, Office, SQL Server, Exchange Server and many other products. It enables you to view passwords hidden behind the asterisks in password fields of many programs, such as Internet Explorer, CoffeeCup FTP, WinSCP, FTP Explorer, FTP Now, Direct FTP, Orbit Downloader, Mail.Ru Agent, Group Mail, Evernote and much more. Reveal passwords hidden behind the asterisks. It also allows you to remove IE Content Advisor password.

FTP Password Recovery - Decrypt FTP passwords stored by most popular FTP clients: CuteFTP, SmartFTP, FileZilla, FlashFXP, WS_FTP, CoreFTP, FTP Control, FTP Navigator, FTP Commander, FTP Voyager, WebDrive, 32bit FTP, SecureFX, AutoFTP, BulletProof FTP, Far Manager, etc. Recover passwords to websites saved in Internet Explorer, Firefox, Opera.

Browser Password Recovery - Recover passwords to Web sites saved in Internet Explorer 6 / 7 / 8 / 9 / 10, Microsoft Edge, Mozilla Firefox, Opera, Apple Safari, Google Chrome, Chrome Canary, Chromium, SeaMonkey, Flock and Avant Browser.

SQL Server Password Recovery - Reset forgotten SQL Server login password for SQL Server 2000 / 2005 / 2008 / 2012 / 2014.

MS Access Password Recovery - Unlock Microsoft Office Access XP / 2003 / 2000 / 97 / 95 database.

Office Password Recovery - Recover forgotten passwords for Microsoft Office Word / Excel / PowerPoint 2010 / 2007 / 2003 / XP / 2000 / 97 documents.

Archive Password Recovery - Recover lost or forgotten passwords for WinRAR or RAR archives, and ZIP archives created with WinZip, WinRAR, PKZip, etc.


Instantly remove PDF restrictions for editing, copying, printing and extracting data. PDF Password Recovery - Recover lost passwords of protected PDF files (*.pdf). PST Password Recovery - Instantly recover lost or forgotten passwords for Microsoft Outlook 2013 / 2010 / 2007 / 2003 / XP / 2000 / 97 personal folder (.pst) files. Recover lost passwords for protected PDF or Office documents Recovers lost or forgotten passwords for MSN Messenger, Windows Messenger, MSN Explorer, Windows Live Messenger, AIM Pro, AIM 6.x and 7.x, Google Talk, MyspaceIM, Trillian Basic, Trillian Pro, Trillian Astra, Paltalk, Miranda, Digsby, Pidgin, GAIM, EasyWebCam, Camfrog Video Chat, Ipswitch Instant Messaging, etc. Retrieve passwords to mail accounts created in Microsoft Outlook 98 / 2000 / XP / 2003 / 2007 / 2010 / 2013, Outlook Express, Windows Mail, Windows Live Mail, Hotmail, Gmail, Eudora, Incredimail, Becky! Internet Mail, Phoenix Mail, Ipswitch IMail Server, Reach-a-Mail, Mozilla Thunderbird, Opera Mail, The Bat!, PocoMail, Pegasus Mail, etc. It can also reset Windows domain administrator/user password for Windows 2012 / 2008 / 2003 / 2000 Active Directory servers. Instantly bypass, unlock or reset lost administrator and other account passwords on any Windows 10, 8, 7, 2008, Vista, XP, 2003, 2000 system, if you forgot Windows password and couldn't log into the computer. Reset lost Windows administrator or user passwords No need to call in an expensive PC technician.


A useful password recovery software for both newbie and expert with no technical skills required. Retrieve passwords for all popular instant messengers, email clients, web browsers, FTP clients and many other applications. Password Recovery Bundle 2018 is a handy toolkit to recover all your lost or forgotten passwords in an easy way! Quickly recover or reset passwords for Windows, PDF, ZIP, RAR, Office Word/Excel/PowerPoint documents.


Nba 2k17 servers october 24


In case FVU reports any error in the file, then the users are advised to rectify the same. All users are advised to use latest FVU and check the format level correctness of the file before submitting the same to TIN-FC. Protean does not warrant the accuracy of the output file generated by using any of these utilities.


This process should be continued till error free Form 24G is generated. After rectifying the errors, user should pass the rectified Form 24G through the FVU.

The second game in the series was released in October of 2000 as NBA 2K1. In case the Form 24G contains any errors, user should rectify the same. Rod Brooks and Bob Fitzgerald portray the announcers. This utility is also freely downloadable from TIN website. Users must pass the Form 24G (Regular/Correction) file generated using Preparation Utility through the File Validation Utility (FVU) to ensure format level accuracy of the file. As such, NBA 2K17 and its Story Edition were introduced around the time Bryant played his final NBA game. This utility can be used for preparation of Form 24G with up to 75,000 records.

Form 24G Return Preparation Utility (version 1.8) for Regular and Correction statement

The 2015-16 NBA season was Bryant's last season in the NBA, so a special edition featuring Bryant was developed as a way of paying homage to what many consider to be one of the greatest basketball players of all time.


Steps to download and install Form 24G Preparation Utilityįor assistance in using Form 24G Preparation Utility, read the instructions provided in ‘Help’ in the Form 24G Preparation Utility. If appropriate version of JRE is installed, then the ‘Form 24G Preparation Utility’ will be displayed. In such cases, install JRE and try again. If JRE is not installed on the computer, then on clicking ‘24GRPU.bat’, a message will be displayed. To run the ‘Form 24G Preparation Utility’, click on the ‘24GRPU.bat’ file. Win 2K Server/ Win NT 4.0 Server/ Win XP Prof.

The Form 24G Preparation Utility is a Java based utility. JRE (Java Run-time Environment) should be installed on the computer where Form 24G Preparation Utility is being installed. JRE is freely downloadable from and or you can ask your computer vendor (hardware) to install the same for you. Form 24G Preparation Utility can be executed on Windows platform(s) Win 2K Prof. Form 24G Preparation Utility can be freely downloaded from After downloading, it needs to be saved on the local disk of the machine.

The purpose of our monitoring server is to have one server that provides the status of the other servers. By having a separate server, we will not impact any production server. Since we monitor about 12 servers, copying our monitoring scripts to each server became a logistical problem. We have also had SQL Server Agent stop and fail to restart on several servers, which would negate our monitoring until we noticed that the Agent was stopped. Our solution was to use a development server that we have to run the monitoring scripts and monitor all of the other database servers. We are changing our scripts to make use of linked servers. This will allow us to have one copy of the scripts yet monitor all of the servers. For our needs, we are trying a central monitoring server using SQL scripts. If we get budget approval, we would prefer to buy a true monitoring tool and monitor SQL and the operating system, but until then, this is our solution. The script from Greg Larsen is available from.


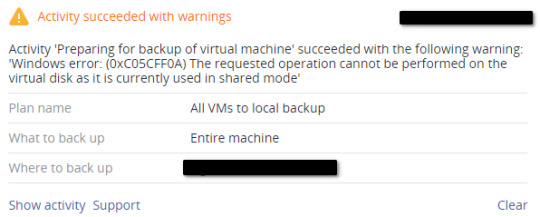

Acronis Cyber Protect 15 virtual machine backup not working when using shared mode virtual disks vhds

Case

When you apply and run an Acronis Cyber Protect backup against a virtual machine that is part of a guest cluster using shared virtual disk files of type .vhds (VHD Set), you run across the following error: "Activity succeeded with warnings. Activity 'Preparing for backup of virtual machine' succeeded with the following warning 'Windows error: (0xC05CFF0A). The requested operation cannot be performed on the virtual disk as it is currently used in shared mode.'"

Or the error may state something along the lines of: "Failed to open virtual disk file '.vhds'. Unknown format of the virtual disk." The same error can occur when backing up virtual machines that include a shared .vhdx disk.

Solution

The above error is by design when you attempt a backup of a virtual machine that does not have an Acronis backup agent installed, as per https://kb.acronis.com/content/68573. According to Acronis, as of July 2022, the VHDS (VHD Set) virtual disk format is not yet supported for agentless backup by Acronis Cyber Protect 15. Support is planned for future versions of the product. However, the above issue can persist even if you have the Acronis backup agent installed on your source virtual machine.

This can create confusion, since a virtual machine with the Acronis Cyber Protect agent installed should support the .vhds file format. The tricky part is that for every such virtual machine, Acronis Cyber Protect creates two records in its database. One record corresponds to the virtual machine object as seen from the hypervisor side; the other corresponds to the same machine as seen by the Acronis backup agent installed inside it. Acronis uses three icons to represent three different kinds of objects in its management server database, as shown below.

The following icon represents a virtual machine as seen and managed via the hypervisor guest services. This corresponds to the Acronis agentless backup method.

The following icon represents a hypervisor host machine, also managed via the Acronis backup agent for hypervisor hosts.

The following icon represents the virtual machine as seen and managed via the Acronis backup agent. This corresponds to the Acronis agent-based backup method.

So imagine a scenario in which you have a guest WFC cluster comprising at least one SQL Server cluster node (virtual machine), which uses the .vhds file format for its shared cluster virtual disks. In this case Acronis will create two records for each SQL Server cluster node, as shown in the example below. There will be one record which corresponds to the agentless backup method and is represented by the virtual machine's hostname. There will also be one record which corresponds to the agent-based backup method and is represented by the virtual machine's fully qualified domain name (FQDN).

To take successful backups of the SQL cluster nodes (virtual machine backups), you must apply an Acronis VM backup plan to the agent-based virtual machine object (the one designated with the FQDN).

Oimerp Driver Download


In reply to Omer Coskun's post on August 25, 2010 I installed it and can see it in add/remove programs but Device Manager still has a yellow question mark. I tried to reinstall the driver from the driver tab but got a message that one could not be found. Omer Faruk free download - Omer Reminder, OMeR X, Omer Counter, and many more programs. Download Chrome For Windows 10/8.1/8/7 32-bit. For Windows 10/8.1/8/7 64-bit. This computer will no longer receive Google Chrome updates because Windows XP and Windows Vista are no longer. View & download of more than 498 Cerwin-Vega PDF user manuals, service manuals, operating guides. Speakers, Subwoofer user manuals, operating guides & specifications.

Aarp Safe Driver

Object TypeActionsViewersCatalogs

Create Database Catalogs CatalogReferences, Tables SchemasCreate Schema Schemas SchemaExport Schema, Drop Schema References, Tables TablesCreate Table, Import Table DataReferences, Tables TableAlter Table, Create Trigger, Export Table, Import Table Data, Add Extended Property, Create Index, Delete Extended Property, Drop Table, Edit Extended Property, Empty Table, Rename Table, Script: Script Table Navigator1, References, Data, Columns, DDL, Extended Properties, Grants, Indexes, Indexes2, Info, Primary Key, Row Count, Row Id, Triggers HistoryTable3 Extended Properties, Grants, Indexes, Info Columns Columns, Extended Properties ColumnAdd Extended Property, Delete Extended Property, Edit Extended Property, Rename Column Column, Extended Properties Indexes Indexes IndexDrop Index, Rebuild Index4, Rename Index DDL4, Index Triggers Triggers TriggerExport Trigger, Disable Trigger, Drop Trigger, Enable Trigger, Rename Trigger Trigger Editor, Info Views Views ViewExport View, Add Extended Property, Create Trigger, Delete Extended Property, Drop View, Edit Extended Property, Rename View, Script: Script View Data, Columns, DDL, Extended Properties, Indexes, Info, Row Count Columns Columns, Extended Properties ColumnAdd Extended Property, Delete Extended Property, Edit Extended Property, Rename Column Column, Extended Properties Triggers Triggers TriggerExport Trigger, Disable Trigger, Drop Trigger, Enable Trigger, Rename Trigger Trigger Editor, Info SynonymsCreate Synonym Synonyms SynonymDrop Synonym Info Indexes Indexes IndexDrop Index, Rebuild Index4, Rename Index DDL4, Index Sequences5Create Sequence Sequences SequenceDrop Sequence Info Types User Defined Data Types Triggers Triggers TriggerExport Trigger, Disable Trigger, Drop Trigger, Enable Trigger, Rename Trigger Trigger Editor, Info ProceduresCreate Procedure Procedures ProcedureExport Procedure, Drop Procedure, Rename Procedure, Script: Script Procedure Procedure Editor, Interface FunctionsCreate Function Functions 
FunctionExport Function, Drop Function, Rename Function, Script: Script Function Function Editor, Interface Users6 Users Roles Roles Role Info, Users6LinkedServers7Create Linked Server1 Linked Servers LinkedServerAdd Login, Drop Linked Server Login, Drop Linked Server1, Enable/Disable Options, Set Remote Collation, Set Timeout Options, Test Connection1 Info LinkedServerCatalogs Catalogs LinkedServerCatalogLinkedServerSchemas Schemas LinkedServerSchemaLinkedServerTables Tables LinkedServerTableData, Columns, Foreign Keys, Grants, Info, Primary Key, Row Count LinkedServerColumns Columns LinkedServerColumn Column LinkedServerViews Views LinkedServerViewData, Columns, Info, Row Count LinkedServerColumns Columns LinkedServerColumn Column LinkedServerSynonyms Synonyms LinkedServerSynonym Column DBA DBA ServerInfo Latest Error Log6, Server Info Logins6 Logins Devices6 Database Devices Processes6 Processes ServerRoles6 Roles ServerRole Info, Users ServerAgent8 Latest Error Log, Sql Server Agent JobsCreate Job Jobs JobCreate Step, Delete Job, Edit, Edit Notifications, Enable/Disable Job, Start the job Alerts, History, Steps, info JobStepCopy Step, Delete Job Step, Edit Step info JobSchedulesAttach Schedule Schedules JobScheduleDetach Schedule, Enable/Disable Schedule Schedules JobServersAdd Server Servers JobServerDelete Server Server SchedulerSchedulesAdd Recurring Daily, Add Recurring Monthly, Add Recurring Weekly, Add one time, Add other Schedules SchedulerScheduleDelete Schedule, Enable/Disable Schedule Jobs, Schedules Alerts Alerts Alert Alert, Operators Operators Operators Operator Alerts, Info, Jobs Locks6 Locks 1)DbVisualizer Pro 2)Version 10 and later 3)Version 13 and later 4)Version 9 and later 5)Version 11 and later 6)Not Azure SQL Database 7)Version 10 and when linked servers is supported 8)Version 9 and not Azure SQL Database


Monitor deadlocks in Amazon RDS for SQL Server and set notifications using Amazon CloudWatch

Monitoring SQL Server is an essential aspect of any relational database management system (RDBMS) when dealing with performance problems. Many of our customers want to capture certain system and user-defined events for monitoring and troubleshooting problems related to SQL Server. SQL Server logs these events in SQL Server error logs and SQL Server agent logs. Deadlocks are one such event that can be captured in SQL Server error logs.

A deadlock occurs when two or more processes are waiting on the same resource and each process is waiting on the other process to complete before moving forward. When this situation occurs, there is no way for these processes to resolve the conflict, so SQL Server automatically chooses one of the processes as the victim of the deadlock and rolls back the process, and the other process succeeds. By default, when this occurs, your application may see or handle the error, but nothing is captured in the SQL Server error log or the Windows event log to let you know this occurred. The error message that SQL Server sends back to the client is similar to the following:

    Msg 1205, Level 13, State 51, Line 3
    Transaction (Process ID xx) was deadlocked on {xxx} resources with another process and has been chosen as the deadlock victim. Rerun the transaction.

With Amazon RDS for SQL Server, you can now monitor deadlocks and send Amazon Simple Notification Service (Amazon SNS) notifications as soon as a deadlock event occurs on an RDS for SQL Server instance. This can help you automate deadlock reporting and take appropriate actions to resolve deadlock conflicts. This solution isn’t limited to capturing deadlock events; you can scale this solution to monitor other system and user-defined events captured in the error logs and SQL Server agent logs.
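To make the definition of a deadlock concrete, here is a minimal Python sketch that looks for a cycle in a "who waits for whom" map. It is only an illustration of the idea, not how SQL Server implements its detection; the function name and the session names are hypothetical.

```python
from typing import Dict, List, Optional


def find_deadlock_cycle(waits_for: Dict[str, str]) -> Optional[List[str]]:
    """Return one cycle of sessions that wait on each other, or None.

    waits_for maps a blocked session to the session that holds the lock it needs.
    """
    for start in waits_for:
        path = [start]
        seen = {start}
        current = start
        while current in waits_for:
            current = waits_for[current]
            if current == start:
                return path  # we walked back to where we began: a deadlock
            if current in seen:
                break  # a cycle exists, but it does not include `start`
            path.append(current)
            seen.add(current)
    return None


# Hypothetical example: each session waits for a lock the other one holds.
cycle = find_deadlock_cycle({"session_1": "session_2", "session_2": "session_1"})
print(cycle)
```

Once such a cycle exists, none of the sessions in it can make progress on its own, which is why one of them has to be rolled back as the victim.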
In this post, we show you how to publish error and agent log events directly to Amazon CloudWatch Logs and set up CloudWatch alarms and SNS notifications for the deadlock events that match the filter pattern that you create.

Solution overview

The following diagram illustrates the solution architecture.

To implement the solution, we walk through the following high-level steps:

1. Enable deadlock detection for Amazon RDS for SQL Server.
2. Publish the SQL Server error logs to CloudWatch.
3. Simulate a deadlock event.
4. Create a filter pattern and CloudWatch alarm.
5. Monitor the solution using Amazon RDS Performance Insights.

Prerequisites

Amazon RDS for SQL Server
Access to the AWS Management Console and Amazon CloudWatch
An email address to receive notifications
SQL Server Management Studio (SSMS)

Enable deadlock detection for Amazon RDS for SQL Server

To enable deadlock detection, complete the following steps.

1. On the Amazon RDS console, choose Parameter groups in the navigation pane.
2. Choose Create parameter group.
3. For Parameter group family, choose the SQL Server version and edition you are using. For example, SQL Server 2017 Standard Edition uses sqlserver-se-14.0.
4. Enter a Group name and Description.
5. Choose Create.
6. On the Parameter groups page, choose the group that you created in the previous step.
7. Choose Edit parameters, and select 1204 and 1222.
8. Edit the Values for 1204 and 1222 to 1.
9. Choose Preview changes.
10. On the next page, choose Save changes.
11. In the navigation pane, choose Databases.
12. In the DB identifier section, choose your RDS DB instance.
13. Choose Modify.
14. From the Database options section, for DB parameter group, choose the parameter group you created.
DB instances require a manual reboot in the following circumstances:

If you replace the current parameter group with a different parameter group
If you modify and save a static parameter in a custom parameter group

Publish the SQL Server error logs to CloudWatch

To publish your SQL Server error logs to CloudWatch, complete the following steps.

1. On the Modify page of the Amazon RDS console, in the Error logs section, choose Error log. This makes sure that the SQL Server error logs are published to CloudWatch Logs.
2. Choose Continue.
3. Enable Performance Insights for Amazon RDS.
4. In the Scheduling of modifications section, choose Apply immediately, and then choose Modify DB Instance.
5. On the Databases page, choose your RDS DB instance, then choose Actions.
6. Reboot the RDS DB instance for the changes to take effect.

Simulate a deadlock event

Simulate a deadlock transaction on your RDS for SQL Server instance by running the following T-SQL code in SQL Server Management Studio (SSMS).

    -- Two global temp tables with sample data for demo purposes.
    CREATE TABLE ##Employees (
        EmpId INT IDENTITY,
        EmpName VARCHAR(16),
        Phone VARCHAR(16)
    )
    GO
    INSERT INTO ##Employees (EmpName, Phone)
    VALUES ('Amy', '900-999-1332'), ('Jay', '742-234-2222')
    GO
    CREATE TABLE ##Suppliers (
        SupplierId INT IDENTITY,
        SupplierName VARCHAR(64),
        Location VARCHAR(16)
    )
    GO
    INSERT INTO ##Suppliers (SupplierName, Location)
    VALUES ('ABC', 'New York'), ('Honest Sourcing', 'Boston')
    GO

Next, open two query windows in SSMS. Run the following code in each of the sessions, step by step, in two windows.

1. Session 1: Begin Tran;
2. Session 2: Begin Tran;
3. Session 1: UPDATE ##Employees SET EmpName = 'Gani' WHERE EmpId = 1;
4. Session 2: UPDATE ##Suppliers SET Location = N'Toronto' WHERE SupplierId = 1;
5. Session 1: UPDATE ##Suppliers SET Location = N'Columbus' WHERE SupplierId = 1; (Blocked)
6. Session 2: UPDATE ##Employees SET Phone = N'123-456-7890' WHERE EmpId = 1; (Blocked)

After you run the code, you can see one of the transactions is processed (see the following screenshot).
The following screenshot shows the second transaction is blocked with a deadlock error.

Create a filter pattern and CloudWatch alarm

1. On the CloudWatch console, under Logs, choose Log groups.
2. Choose the SQL Server error logs of your RDS DB instance. The logs are listed in the following format: (/aws/rds/instance//error)
3. Choose Create metric filter.
4. In the Filter Pattern section, enter deadlock. Select any errors to monitor and use that as the filter word.
5. Choose Assign metric.
6. Enter deadlock in both the Filter Name and Metric Name.
7. Set the metric value field to 1.
8. Choose Create Filter. The following screenshot shows your filter details.
9. After the deadlock filter is created, choose Create alarm.
10. On the Specify metric and conditions page, for Metric name, enter deadlock.
11. For Statistic, choose Minimum.
12. For Period, choose the time period for the alarm, for example, 1 minute.
13. In the Conditions section, for Threshold type, choose Static.
14. For Whenever Deadlock is, choose Greater > threshold.
15. For Than, enter 0.
16. Choose Next.
17. In the Notification section, for Alarm state trigger, choose In alarm.
18. Select an SNS topic, or choose Create new topic to create an SNS topic using the email address you want to receive alerts.
19. Choose Next.
20. In the Name and description section, enter a name and description for your alarm.
21. Choose Next.
22. On the Preview and create page, review your alarm configuration, then choose Create alarm.
23. Confirm the notification email.

After you follow these steps, simulate a deadlock again. When the alarm has enough data, the status shows as OK. The CloudWatch alarm sends an SNS notification to the email that you specified (see the following screenshot).

Monitor using Performance Insights

Performance Insights is an Amazon RDS feature that can automatically analyze the current workload of a database instance and identify the queries that are slowing it down.
For each query, it can show the type of wait it’s causing and the user who is causing the slowness or the client machine that’s running the query. All this information is made available in a compact, easy-to-understand dashboard, which makes Performance Insights a great tool for troubleshooting. To get this kind of information, Performance Insights queries the RDS instance’s internal data structures in memory one time every second. It’s not a disk-based operation, so the sampling doesn’t put any pressure on the system. Later, we talk about the types of data that are collected during the samplings.

To use Performance Insights for Amazon RDS for SQL Server, complete the following steps.

1. Modify the RDS for SQL Server instance settings to enable Performance Insights.
2. On the Amazon RDS console, choose the database you want to monitor.
3. On the Monitoring tab, choose the Monitoring menu and choose Performance Insights.

You can choose the deadlock metrics and monitor using Performance Insights as well.

Clean up

When you’re finished using the resources in this post, clean up the AWS resources to avoid incurring unwanted charges. Specifically, delete the RDS for SQL Server instance and CloudWatch logs.

Conclusion

In this post, we showed how to publish error and agent log events directly to CloudWatch Logs and then set up a CloudWatch alarm and SNS notification for deadlock events that match a specific filter pattern. With this solution, you can automate RDS for SQL Server error log files monitoring and alerting. This can help you automate deadlock reporting and take appropriate actions to resolve deadlocks. You can use this solution for monitoring other RDS for SQL Server log events and fatal errors. We showed an example on how to capture deadlock event metrics using Performance Insights.

To learn more about monitoring Amazon RDS for SQL Server, see Monitoring OS metrics using Enhanced Monitoring and Monitoring with Performance Insights on Amazon RDS.
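The metric filter and alarm from the "Create a filter pattern and CloudWatch alarm" section can be pictured with a small local simulation in Python. This is a sketch under stated assumptions, not the CloudWatch implementation: a plain substring test stands in for CloudWatch's filter pattern matching, the function names are hypothetical, and the sample log lines in the usage note are invented.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, List, Tuple

FILTER_TERM = "deadlock"  # the filter pattern entered in the walkthrough
METRIC_VALUE = 1          # the metric value published per matching log event
THRESHOLD = 0             # the alarm fires when the statistic is greater than 0


def metric_values_per_minute(
    events: Iterable[Tuple[datetime, str]]
) -> Dict[datetime, List[int]]:
    """Group the metric values published by matching log events into 1-minute periods."""
    values: Dict[datetime, List[int]] = defaultdict(list)
    for timestamp, message in events:
        if FILTER_TERM in message:  # assumption: simple case-sensitive substring match
            minute = timestamp.replace(second=0, microsecond=0)
            values[minute].append(METRIC_VALUE)
    return dict(values)


def minutes_in_alarm(values: Dict[datetime, List[int]]) -> List[datetime]:
    """Return the periods whose Minimum statistic exceeds the static threshold."""
    return sorted(minute for minute, published in values.items()
                  if min(published) > THRESHOLD)
```

Feeding it a couple of invented error-log lines that contain the word deadlock yields one period in alarm, while periods with no matching events publish no metric values and never breach the threshold.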
About the authors

Yogi Barot is a Microsoft Specialist Senior Solution Architect at AWS. She has 22 years of experience working with different Microsoft technologies, and her specialty is SQL Server and other database technologies. Yogi has in-depth AWS knowledge and expertise in running Microsoft workloads on AWS.

Ganapathi Varma Chekuri is a Database Specialist Solutions Architect at AWS. Ganapathi works with AWS customers, providing technical assistance and designing customer solutions on database projects, and helping them move their existing databases to the AWS Cloud.

https://aws.amazon.com/blogs/database/monitor-deadlocks-in-amazon-rds-for-sql-server-and-set-notifications-using-amazon-cloudwatch/


How to Use Raygun to Identify and Diagnose Web Performance Problems

Web application development is difficult. There is no other type of application that is as involved, or requires you to understand multiple languages, frameworks, and platforms, as web applications. The most basic web application is comprised of two separate applications:

The server-side application to manage data.

The client-side application to display that data to the user.

The simplest application is written in two languages—one for the server, and HTML for the client. But that's not all: modern applications require you to understand many languages, frameworks, and development tools. At best, an application is developed by multiple teams that can individually focus on smaller pieces of the larger whole. At worst, a team as small as one person develops the entire application.

But the story doesn’t end when an application is “finished”. After a lengthy and involved development process, there are countless hours of testing for bugs, security issues, integration, performance, and user experience. Although many developers won't admit it, performance and the user experience are often overlooked. The result, of course, is that slow, unrefined applications are released into production.

That’s exactly why tools like Raygun’s Application Performance Monitoring (APM) and Real User Monitoring (RUM) exist. They give developers the means not only to quickly analyze how an application performs in production, but also to pinpoint the exact performance issues down to the method or database query and to visualize visitors’ experiences.

In this article, I will walk you through my experience using Raygun’s APM and RUM to pinpoint issues in a live, in-production website and the steps I took to fix them.

The Problem: A Legacy Application With a Complex Codebase

I work in a fast-paced environment where quantity is more important than quality. That’s not to suggest the software I or my co-workers write is subpar; our applications work well. But the demand on our time requires us to focus more on bugs and security than performance and the user experience.

I recently used Raygun’s APM and RUM to monitor our main public web application: an informational website for the company’s investors. It is written in C# and runs on the .NET 4.6.x runtime.

This application started as multiple small projects written by a team of three people with minimal communication. The projects shared a “core” library that quickly grew into a massive, unwieldy behemoth with a lot of duplicated functionality (as I said, there wasn’t a lot of communication).

Over the years, the multiple projects morphed into a single large application. It pulls information from multiple MSSQL databases and an IBM DB2 machine to provide visitors with a wide array of economic and industry information. It also interacts with other systems on the network, ranging from mail and file servers to other RESTful API services, to perform other tasks.

I should also mention that I am somewhat unique in that I have a heavy IT background. My job title is “Programmer/Analyst”, but my actual duties consist of network and systems admin, programmer, and analyst. So when it comes to analyzing any issue, I'm equipped to examine both the code and the systems it runs on and interacts with.

Identifying Performance Issues

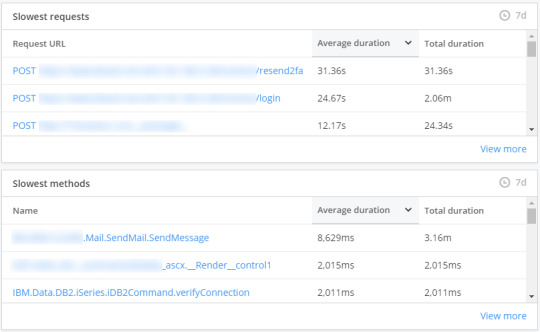

I know that my application has several performance issues; one of them is the authentication experience. Visitors must first authenticate using their credentials and then provide their OTP (one-time passcode) for two-factor authentication. Some visitors use a separate authenticator app on their mobile device to generate their OTPs, but most visitors receive their code via email. For the latter group, the authentication experience can take anywhere from 1 to 30 seconds, with most visitors experiencing longer waits.

After installing and configuring Raygun’s APM agent (a very simple process), I started seeing trace data in the dashboard within a minute. The dashboard gives you a glimpse of your application’s performance issues, providing tables and graphs showing the slowest requests, class methods, traces, SQL commands, and external API calls. Sure enough, some of the immediate offenders were related to the authentication process.

The top two slow URLs are used during the authentication process for visitors with emailed OTPs. Both are concerning (holy cow! 31 seconds for one request for /resend2fa). I certainly want to fix all the issues, but my time is limited. Therefore, fixing some of the issues with the /login endpoint is my priority.

Judging from the data provided by the “Slowest requests” table, I already suspect that the culprit is related to the process of sending emails. To be sure, I click the /login link to view the data that APM collected on that URL.

Analyzing the Data

APM collects a lot of information for every request, and it is extremely helpful because it can break down a request into its smaller parts—the methods that execute to provide the visitor with a response. The following screenshot shows the methods, ranked slowest to fastest, for the /login URL:

As expected, the main issue has something to do with the email process. It’s also interesting that the IBM DB2 ADO.NET provider is used during authentication. It shouldn’t be, and it's taking a rather significant amount of time to clear error info (as denoted by the call to IBM.Data.DB2.iSeries.MPConnection.clearErrorInfo()) that shouldn’t even be there. But that’s an issue for another day.

Apart from daily notifications, all emails sent by the website are dispatched on demand. The code that sends these emails is used in a variety of other applications that do not exhibit any performance issues. So in my mind, neither the code nor the email server is at fault; there's something wrong on the machine that runs our public site.

There are so many things on a computer system that can cause performance issues. So, after viewing the logs and not seeing any apparent causes, I decide to spin up a new virtual machine and test the website on it. After installing the APM agent on the new machine and testing the login process, I notice a tremendous improvement.

The above screenshot shows the breakdown of where the application calls the SendMessage() method. Simply transferring the application to another machine cut the login process down by 26 seconds! While that's certainly a huge improvement, it still takes an average of 4-5 seconds for the initial login process.

Examining the flamechart for a single /login request (shown below), I can clearly see that sending an email is still an issue because SendMessage() still takes at least 3 seconds to completely execute.

I can solve this problem by submitting the email message to a queue or batch, and let a separate process send the message. But that feels like a work-around to me. I'd rather find and fix the issue, but I also need to manage my time. There are other, arguably more important projects that I need to complete.
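The queue idea mentioned above can be sketched in a few lines. The site itself is written in C#, so the Python below is only an illustration of the pattern: the request handler puts the message on a queue and returns, while a background worker does the slow send. The function name `start_email_worker` and the `send` callback are hypothetical stand-ins for whatever actually delivers the email.

```python
import queue
import threading
from typing import Callable


def start_email_worker(outbox: "queue.Queue",
                       send: Callable[[str, str], None]) -> threading.Thread:
    """Start a background thread that sends every (recipient, body) put on outbox."""

    def worker() -> None:
        while True:
            item = outbox.get()
            if item is None:  # sentinel value: stop the worker
                outbox.task_done()
                return
            recipient, body = item
            try:
                send(recipient, body)  # the slow part now runs off the request path
            except Exception as error:  # keep the worker alive if one send fails
                print(f"sending to {recipient} failed: {error}")
            finally:
                outbox.task_done()

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread
```

With this shape, the login handler would only call `outbox.put((address, message))`, so the visitor no longer waits for the mail hand-off. The trade-off is the one noted above: it hides the slow send rather than explaining it.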

Analyzing the User Experience

Raygun's APM gives you clear performance data for your server, but that's only half of your application. What happens in the browser is just as important as what happens on the server, and Raygun's Real User Monitoring (RUM) gives you insight into how your users experience your application. Not only can it track your users' individual sessions and provide usage statistics (such as number of page visits, durations, and browsers), but it can also give you an accurate picture of their experience as they navigate from page to page.

RUM also displays each requested page and breaks down its load time into different factors, as shown here:

The /cico URL in this screenshot has a rather high server time of 5 seconds, which is understandable because that page calculates a lot of data gathered from multiple sources on the fly. But now that I see that working with live data greatly slows down the page load, I need to implement a caching/summarizing solution.

Notice that the /takepay URL has a rather small server time (it works with cached/summarized data), but it has a large render time due to rendering interactive graphs with many data points. So, I can easily solve the slow server response for /cico by daily caching or summarizing the data it works with in a separate process.
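As a rough illustration of the daily caching idea, the Python sketch below recomputes an expensive summary only when the cached copy is older than a day. It is an in-process stand-in for the separate summarizing process described above, not the approach the site uses; the class name `TimedCache` and the `compute` callback are hypothetical.

```python
import time
from typing import Any, Callable, Optional


class TimedCache:
    """Recompute a value only when the cached copy is older than max_age_seconds."""

    def __init__(self, compute: Callable[[], Any],
                 max_age_seconds: float = 24 * 60 * 60,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._compute = compute          # the expensive calculation to cache
        self._max_age = max_age_seconds  # defaults to one day
        self._clock = clock              # injectable so the age check can be tested
        self._value: Any = None
        self._stamp: Optional[float] = None

    def get(self) -> Any:
        now = self._clock()
        if self._stamp is None or now - self._stamp >= self._max_age:
            self._value = self._compute()  # slow path: rebuild the summary
            self._stamp = now
        return self._value                 # fast path: serve the cached summary
```

A page handler would call `get()` on every request and pay the multi-second calculation cost at most once per day.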

But there's more to performance and a user's experience than just response times; when properly configured, RUM catalogs all requests—including XHR, as shown below:

The first thing that caught my eye was the 30+ requests for the /settings/email-alerts URL. Either 30+ people viewed/changed their email alert settings, or that XHR is automatically executed on one of the pages. Unfortunately, RUM didn't give me an immediate idea of what page made the XHR, but I did eventually find the culprits when viewing the performance reports for various pages:

Those needless XHRs do contribute to the load time, and eliminating them can make a big difference.

Conclusion

Raygun provides an invaluable service. As my experience shows, by using APM and Real User Monitoring, you can easily monitor your application's performance.

They automatically pinpoint the performance issues on both the server and client, which is vital in a fast-paced environment. They truly are fantastic services and you can try them free today!

by Jeremy McPeak via Envato Tuts+ Code https://ift.tt/32zndA4


JIS-CTF Walkthrough

Having not posted on here in a very, very long time, I’m back to solve a CTF. Earlier this year I finished my Computer Science degree at the University of Bristol, and I’ve got a new job working in cyber security at a very large and influential multinational technology company.

I’ve been playing around with the Offensive-Security labs, which are basically a set of on-demand virtual machines set up for CTFs. Offensive-Security are also the owners of some industry leading security certifications such as OSCP - which I aim to begin in the near future.

The particular CTF we will be looking at today is JIS-CTF by Mohammad Khreesha. Note that we will be using the version on Offensive-Security, which has fewer flags than the version on VulnHub. The difficulty is not affected by this since you need to do the same steps to get the flags anyway.

The CTF

After connecting to the VPN and being given the IP address of the machine, the first thing we can do is see what ports are open by using an nmap scan.

nmap -p- 192.168.203.25 insert result output here

OK, so now we can see that ports 22 and 80 are open. Let's explore what’s going on with HTTP on port 80 first by opening it up with our browser of choice.

Hmm… a login page, but we don’t have any credentials. I tried looking at the source code for the page, but nothing valuable was to be found. I also tried throwing some funky characters like semicolons, hashes, apostrophes, and pipes into the login form to see if any errors would pop up, indicating the possibility of SQL injection but unfortunately we had no such luck.

The next thing we can try is looking at what other pages and paths are available on the site. One way of doing this would be by using a tool such as DIRB, which enumerates through a dictionary of common URL paths. DIRB is very noisy and takes a while, and should be used as a last resort. Instead, we could first take a look at /robots.txt. This file is used by websites to instruct search engines and web crawlers as to which pages not to visit or include in their searches. Unlike using DIRB, checking /robots.txt is unlikely to be noticed or considered malign behaviour, since search engines check /robots.txt frequently. The contents of /robots.txt can be seen below.

User-agent: *
Disallow: /
Disallow: /backup
Disallow: /admin
Disallow: /admin_area
Disallow: /r00t
Disallow: /uploads
Disallow: /uploaded_files
Disallow: /flag
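If you would rather not copy the paths out by hand, a few lines of Python can pull the Disallow entries from the file's text. This is a minimal sketch: the function name `disallowed_paths` is hypothetical, and it ignores which User-agent group each rule belongs to.

```python
from typing import List


def disallowed_paths(robots_txt: str) -> List[str]:
    """Return every non-empty Disallow path in the order it appears."""
    paths = []
    for line in robots_txt.splitlines():
        line = line.split("#", 1)[0].strip()  # drop trailing comments
        field, _, value = line.partition(":")
        if field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths
```

Each returned path can then be visited by hand in the browser.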

The entries in /robots.txt look promising! After checking them all out, we find that the pages are either inaccessible or seem to be red herrings. We can dive in a bit deeper and look at the source code for the pages and voila! In the source code for /admin_area we found the credentials:

username : admin
password : 3v1l_H@ck3r

Nice! Next I was cheeky and tried to see if we could log in to the system via SSH with the newfound credentials, but no luck. Fortunately though, they did work for the login form on the home page of the website!

After logging in, we are taken to a new page in which we can upload files to the server. If only there was some way of getting the system to run the files we could get a reverse shell going…

To test something out, I uploaded a random image file (image.jpg) and then navigated to /uploaded_files/image.jpg and it serves me the image! This is bad news for the server but good news for us. Since Apache is configured to execute .php files with the PHP interpreter, we can get the system to execute PHP scripts for us by uploading a file e.g. phpscript.php and then navigating to /uploaded_files/phpscript.php.

Naturally, a reverse shell script is what we want. I used this PHP reverse shell script from Pentest Monkey. You need to go into the script and change the IP address to your own IP address (note that it will be your address on the VPN, not your public internet IP address), and change the port to a free port on your machine (I used 9998).

Next, we need to set our computer to listen on the above port, ready to give commands to the reverse shell. I used Netcat, but you could also set up Python to do something similar. Change 9998 to whatever port you are using.

nc -nlvp 9998

Upload the PHP reverse shell script we fixed earlier, and then navigate to /uploaded_files/php-reverse-shell.php. Success! Your netcat terminal should let you know that you’ve made the desired connection.

Now that we’re in, we can have a nosy around. First off, by entering whoami we see that we’re running as www-data, which is the default user for web server processes and typically has a low level of privilege. By looking at some of the files and folders in the immediate directory, we can see that we do not have access to many of them (flag, flag.txt). Looking in hint.txt tells us that we should look for the credentials to log in as the user ‘technawi’.

After looking around with ls -a (ls lists files and -a includes hidden files) in the current directory as well as some other common places like /, it was clear that we’d need to perform a deeper search. There are two obvious tools for this, Grep and Find.

Grep searches for strings contained within files, whereas Find matches files by name and other metadata rather than by content. I opted to use Grep with the following command:

grep -ir password / 2>/dev/null

(the 2>/dev/null part is not essential - it gets rid of those pesky errors you get when you try to access files and directories that you don’t have access to)
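For readers who want to see what that grep invocation is doing, here is a rough Python equivalent: a recursive, case-insensitive search that silently skips anything it cannot read. It is a sketch rather than a drop-in replacement for grep, and the function name `search_tree` is hypothetical.

```python
import os
from typing import Iterator, Tuple


def search_tree(root: str, needle: str) -> Iterator[Tuple[str, int, str]]:
    """Yield (path, line number, line) for every line under root containing needle."""
    needle = needle.lower()  # like grep -i: compare case-insensitively
    # Like grep -r: walk every directory; os.walk ignores unreadable ones by default.
    for directory, _subdirs, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(directory, name)
            if not os.path.isfile(path):  # skip devices, sockets and similar
                continue
            try:
                with open(path, "r", errors="ignore") as handle:
                    for number, line in enumerate(handle, start=1):
                        if needle in line.lower():
                            yield path, number, line.rstrip("\n")
            except OSError:
                continue  # like 2>/dev/null: ignore files we may not read
```

Calling `search_tree("/", "password")` would correspond to the command above, although on a real system it is usually quicker to start from a narrower directory such as /etc.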

Looking through the results, we find a file containing the string 'password': /etc/mysql/conf.d/credentials.txt. Taking a look using cat tells us that the flag is in another file, but gives us the password for that 'technawi' account we were looking for.

cat /etc/mysql/conf.d/credentials.txt
Your flag is in another file...
username : technawi
password : 3vilH@ksor

Now, we currently don't have a proper terminal. We could try to spawn one, but a much easier way would be to SSH in using those new credentials! So let's Ctrl+C out of this shell and SSH in.

There’s a file here called local.txt - which if we cat it gives us one of the flags!

The instructions for the CTF said that we were looking for a file called 'proof.txt’. Performing a search using Find:

find / -name proof.txt

But we get no results. Perhaps again it could be a permissions problem, if so then perhaps only the root user has access. If we prefix the above command with sudo it will search as if we are the root user.

sudo find / -name proof.txt
/root/proof.txt

And if we cat out the file we just found, we get the last flag!

Fixes

Don’t allow uploading files to uncontrolled areas

Mediate access to uploaded files

Require a key for SSH


DOWNLOAD DBVISUALIZER SQL SERVER 2005 DRIVER

Operating Systems: Windows NT/2000/XP/2003/7/8/10, MacOS 10/X
Date Added: 29 November, 2019
File Size: 23 Mb
File Name: dbvisualizer sql server 2005 driver
Uploader: Micro
File Version: 414141386
Download Type: http
File Format: exe
Price: Free
Downloads: 1265

What's New: - Fixed system boot to OS take long time if USB device installed. - Fixed the error of firmware version 2.29 that(dbvisualizer sql server 2005 driver that) the last folder wasn't displayed in alphabetical order. - Fixes * Ensures that the video aspect ratio is preserved in videos playing through the Video Out jack. - Improved Item: - Fixed WIFI performance issue - Fixed Youtube issue - Fixed video radio issueSteps of Update: 1. - Fixed live view CGI packet error – miss <CRLF> on Date field. - Fixed Sempron CPU temperature issue. - Fixed a(dbvisualizer sql server 2005 driver a) bug where a service that sent email notifications did not run. - Fixed(dbvisualizer sql server 2005 driver Fixed) multiple security issuesEnhancement:- Added IPv6 Passthrough support- Added QoS Bandwidth limiter support. - Fixed legacy USB support not working when PCIe USB card is installed 12. - Changed(dbvisualizer sql server 2005 driver Changed) minimum .NET Framework minimum requirement to 4.6 Users content: - Fix will hang at win7 logo when Restore system from win10 to win. Support for non-ECC memory modulesOnboard GraphicsIntegrated in the North Bridge:1. 1 x D-sub port2. 1 x DVI-D port3. 1 x HDMI portAudio 1. - Implement ODD eject function under DOS It is highly recommended to always use the most recent driver version available. New features:1. mydlink agent upgrade to 2.2.0-b02. Dual channel DDR2 800/667/533 Un-buffered Non-ECC memory. The Connect To box opens with a prompt to enter the Administrator user name and password (both case-sensitive). GPU clocks on some products may remain in high states after recovering from an application crash. Added:- Added OpenVPN server. - Supports New Intel Core i7 processor Extreme Edition - 4 channel DDR3 support on 8 slots - PCI Express Gen. Korean Language specific issues are observed with InstallShield during the setup process. 
A8N-LA DOWNLOAD ASUS DRIVER Supported OS: Microsoft Windows 8 (32-bit) Microsoft Windows 8 (64-bit) Windows Vista 64-bit Windows Server 2012 R2 Windows 7 32-bit Microsoft Windows 8.1 Pro (32-bit) Notebook 8.1/8/7 32-bit Windows Server 2016 Windows Vista 32-bit Windows 7 Windows Server 2008 Windows Server 2012 Windows Server 2008 R2 Microsoft Windows 8.1 Pro (64-bit) Microsoft Windows 8.1 (64-bit) Windows Server 2003 64-bit Windows 8.1/8/7/Vista 64-bit Microsoft Windows 8 Enterprise (32-bit) Notebook 8.1/8/7 64-bit Microsoft Windows 8.1 (32-bit) Windows Server 2003 32-bit Microsoft Windows 8.1 Enterprise (32-bit) Windows 10 Microsoft Windows 8.1 Enterprise (64-bit) Windows XP 32-bit Windows 2000 Windows 8.1/8/7/Vista 32-bit Microsoft Windows 8 Pro (32-bit) Microsoft Windows 10 (32-bit) Windows 8.1 Microsoft Windows 8 Pro (64-bit) Windows 8 Windows XP 64-bit Microsoft Windows 8 Enterprise (64-bit) Microsoft Windows 10 (64-bit) Windows 7 64-bit Searches: dbvisualizer sql server 2005 WY4935; dbvisualizer sql server 2005 driver for Windows 7 64-bit; dbvisualizer sql server 2005 driver for Microsoft Windows 8.1 Enterprise (64-bit); dbvisualizer sql server 2005 driver for Microsoft Windows 8.1 Pro (32-bit); dbvisualizer sql server 2005 driver for Windows Server 2003 64-bit; dbvisualizer sql server 2005 W49h; dbvisualizer sql server 2005 Whz493-hzl; dbvisualizer sql server 2005 W WY493-4; dbvisualizer sql server 2005 WYTPM4935; dbvisualizer sql server 2005 driver for Microsoft Windows 8.1 Pro (64-bit); sql 2005 dbvisualizer server driver Compatible Devices: Wifi adapter; Memory Card; Ipod; Videocard; Scanner; Iphone To ensure the integrity of your download, please verify the checksum value. MD5: d154547e729b150a03253e3dbe116b07 SHA1: 0bcb3d9a6db46228d301f12a4811543dea9935f6 SHA-256: 04aa62f03b70ad9ba7f86a09d001a2a7002ec4f028a45667205a5863476ff60f
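Verifying a checksum means hashing the downloaded file yourself and comparing the result with the published value. The Python sketch below shows one way to do that with the standard hashlib module; the function names are hypothetical, and the file path and published digest are whatever applies to your download.

```python
import hashlib


def file_digest(path: str, algorithm: str = "sha256", chunk_size: int = 65536) -> str:
    """Hash a file in chunks so that large downloads do not need to fit in memory."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published(path: str, published_hex: str, algorithm: str = "sha256") -> bool:
    """Compare the file's digest with a published hex string, ignoring case and spaces."""
    return file_digest(path, algorithm) == published_hex.strip().lower()
```

A mismatch means the file is not the one the checksum was published for, so it should not be run. SHA-256 is the default here because MD5 and SHA-1 are no longer considered collision resistant.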


Text

300+ TOP IBM TIVOLI Interview Questions and Answers

IBM Tivoli Interview Questions for Freshers and Experienced:

1. The Run as Administrator option is used to bypass User Account Control specifically on which operating system?
Windows 2008

2. Which service access point is created on the target computer during the Tivoli Common Agent installation?
Agent-Server (IPv4 / CommonAgent)

3. Which statement is true about static groups?
Members can be added anytime after group creation.

4. Which supported database can be used by IBM Tivoli Provisioning Manager V7.2 on a Windows server?
IBM DB2

5. What are the two main catalogs managed by the image library?
Saved Images and Master Images.

6. What are your daily activities?
TSM Server DB and log size monitoring, client and admin schedule monitoring, and library media management. I also install and configure TSM BA clients whenever required and troubleshoot backup/restore issues, if any.

7. What are the first * and second * in "Query Event * *"?
The first * is the domain name; the second * is the schedule name.

8. How do you check the status of admin schedules?
Query Event * t=a

9. Where/how will you see whether errors occurred on the tapes?
We can check the actlog for hardware/media and read/write errors by using the command "q actlog se=errors". To determine read/write errors for a particular volume, run the command "q volume f=d". The output shows the number of read/write errors that occurred on that particular volume.

10. What is the DB size in your environment?
Approximately 60 GB. Tip: the size depends on your environment.

11. What is your tape model?
HP LTO4 tape: capacity 800 GB, speed 120 MB/s.

12. Have you done a DR test?
Yes. In our environment we do DR drills every 6 months to ensure we are ready for any disaster recovery.

13. Have you done a library configuration?
Yes, I did it once.

14. What is your library model?
IBM 3584 library model with 4 drives.

15. Can you explain how to install and configure tdpsql?
STEPS TO INSTALL AND CONFIG TDPSQL

16. What is the use of the maximum number of mount points parameter?
The maximum mount points parameter is used to increase or decrease the number of client backup/restore sessions. Its value should not be greater than the number of available tape drives.

17. What are the TSM server tuning parameters?
DBMEMPERCENT, EXPINTERVAL, MAXSESSIONS, MOVEBATCHSIZE and MOVESIZETHRESH, RESTOREINTERVAL, TCPNODELAY, TCPWINDOWSIZE, TXNGROUPMAX

18. What is collocation? Where will you update it?
Collocation is the process of keeping a client's backed-up data on a minimum number of tapes. Collocation can be done by node, by node group, and by filespace. We set collocation while defining or updating storage pool definitions:
update stgpool colloc=node

19. What is cloptset? Where will you update it?
A cloptset defines a name for a set of options that you can assign to clients for archive, backup, restore, and retrieve operations. We first define a cloptset name by using the "define cloptset" command and then add each client option to that cloptset by using the "define clientopt" command. We then use this cloptset name while defining any client node. Generally, a cloptset is used to assign extra client options to a particular node from the TSM Server end without logging into the client machine.

20. Have you performed a TSM DB restoration?
Yes.

21. How will you see the errors on a tape?
To determine read/write errors for a particular volume, run "q volume f=d". The output shows the number of read/write errors that occurred on that particular volume.

22. What will you do if you find errors on the tapes?
First, we have to figure out the source of the error: whether the tape drive caused the error or the tape itself has a problem. If the tape drive is the culprit, make sure no other tapes are mounted on that particular drive. If the tape itself has problems, audit that tape by using the "audit volume fix=no" command. This shows whether there are any damaged files. If there are no damaged files, move the data from that tape to another tape; if there are damaged files, restore the contents of that tape from the copy storage pool.

23. Have you done a manual backup?
Yes. We can do it from the BA client command line and also through the BA client GUI.

24. How will you log in to the client machine? Do you have separate credentials?
Yes, I have client login credentials with limited privileges.

25. How many servers are in your environment?
3 TSM Servers.

26. What is the TSM library manager?
TSM LIBRARY MANAGER

27. On which TSM Server will you define tape drives in a shared tape library environment?
We have to define all the drives on both the Library Manager and the Library clients.
CONFIGURATION OF TSM LIBRARY MANAGER

28. How will you see the LAN-free backup process on the server side?
By running "storageagentname:q session", or by querying the actlog for the "ANR0415I" message, which says that "session 101 was proxied by storage agent S1 for node N1". You can also check the backup report for the total LAN-free bytes transferred.

29. How will you clean the drive?
Generally, a tape library has separate cleaning cartridges; we just have to mount the cartridge through the library GUI. Alternatively, from the TSM Server we can run the "clean drive" command, which also mounts the cleaning cartridge/tape onto the drive and starts cleaning.

30. How do you label a volume?
If new tapes are kept in the I/O exit port, run the command below:
label libvol search=bulk labelsource=barcode checkin=scratch
TROUBLESHOOTING TAPE LIBRARY

31. What are the parameters in the copy group?
Versions exists, versions deleted, retain extra, retain only, serialization, destination, mode, frequency, etc.

32. What is the use of reclamation?
Reclamation makes the fragmented space on volumes usable again by moving any remaining files from one volume to another volume. This action makes the original volume available for reuse.

33. Is there any way to move only inactive data from one volume to another using reclamation?
No, reclamation moves both active and inactive data.

34. What is audit volume?
The audit volume command is used to check for inconsistencies between database information and a storage pool volume.

35. What is fix=yes/no in the audit volume command?
Fix: Specifies how the server resolves inconsistencies between the database inventory and the specified storage pool volume.
Fix=No: Tivoli Storage Manager reports, but does not delete, database records.
Fix=Yes: The server fixes any inconsistencies as they are detected.

36. What is migration, and what is it used for?
Migration is used to move client files from one storage pool to the next storage pool in the storage hierarchy. Migration frees space in disk pools, which can then be used for taking backups of client machines.

37. Have you done an upgrade? Which method did you use?
Yes, we used the Same System Media Method.

38. Have you upgraded a client machine?
Yes. First we copy all the important client configuration files, disconnect the connection with the TSM Server, and then uninstall the old client version and install the new one.

39. Have you installed a TSM server? Tell me the procedure.
TSM SERVER 6.3 INSTALLATION STEPS

40. Explain the log flow in 6.3.
RECOVERY LOG IN TSM VERSION 6.30

41. What details are available in dsmsched.log and dsmerror.log?
dsmsched.log and dsmerror.log contain the client schedule status details and any schedule error details.

42. Will LAN-free data go to the disk pool?
No, LAN-free data goes directly to tape/tape pools. If we have a disk library in a SAN, we can send LAN-free data to disk, but we need additional supporting software.

43. How do you see the DB size?
Query db f=d

44. How do you check the paths?
query path f=d

45. How do you update a path?
update path source_name destination_name srctype=server desttype=drive/library device=devicename online=yes

46. What is the difference between search=yes and search=bulk in the LABEL command?
YES: Specifies that the server labels only volumes that are stored in the library.
BULK: Specifies that the server searches the library entry/exit ports for usable volumes to label.

47. What does the tdpsql.cfg file contain?
It contains all the tdpsql performance monitoring parameters, such as buffers, buffer size, SQL buffers, SQL buffer size, data stripes, and log file.

48. How do you install tdpsql on the client machine?
INSTALL AND CONFIGURATION OF IBM TDPSQL

49. Any idea about TSM offsite reclamation?
OFFSITE RECLAMATION PROCESS

50. What is the use of storage pool backup (copy stgpools)?
If there are any read/write errors on the primary pool volumes, we can restore them from the copy pool volumes.

51. How do you do a LAN-free configuration?
STEPS TO INSTALL AND CONFIGURE TSM STORAGE AGENT

52. Explain policy management.
TSM POLICY MANAGEMENT BASICS & POLICY STRUCTURE

53. What is serialization?
It specifies how Tivoli Storage Manager processes files or directories when they are modified during backup processing.
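The reclamation answer above describes moving the remaining files off a sparsely used volume so that the volume can be reused. The toy model below sketches that idea in Python. It is not TSM code: the `Volume` class, the `reclaim` function, and the simplification that all unused space on a volume counts as reclaimable are assumptions made for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class Volume:
    name: str
    capacity: int
    files: dict = field(default_factory=dict)  # file name -> size

    def used(self):
        return sum(self.files.values())

    def reclaimable_pct(self):
        # Simplification: treat every unused unit on the volume as reclaimable.
        return 100 * (self.capacity - self.used()) / self.capacity


def reclaim(volumes, threshold_pct, target):
    """Move all remaining files from volumes at or above the threshold onto target.

    Returns the names of the volumes that were emptied and can be reused.
    """
    emptied = []
    for vol in volumes:
        if vol is target or not vol.files:
            continue
        fits = target.used() + vol.used() <= target.capacity
        if vol.reclaimable_pct() >= threshold_pct and fits:
            target.files.update(vol.files)  # active and inactive files alike
            vol.files.clear()
            emptied.append(vol.name)
    return emptied
```

With a 60% threshold, a volume holding 25 units out of 100 is emptied onto the target, while a volume holding 80 units is left alone. Note that the model moves every file on a qualifying volume, which mirrors the answer that reclamation does not distinguish active from inactive data.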


Text

What Is Web Application Security?

Web application security (also known as Web AppSec) is the idea of building websites to function as expected, even when they are under attack. The concept involves a collection of security controls engineered into a Web application to protect its assets from potentially malicious agents.

Web applications, like all software, inevitably contain defects. Some of those defects are real vulnerabilities that can be exploited, introducing risk to organizations. Web application security guards against such defects.

It involves using secure development practices and implementing security measures throughout the software development life cycle (SDLC), ensuring that design-level flaws and implementation-level bugs are addressed.

Why is web security testing essential?

Web security testing aims to find security vulnerabilities in web applications and their configuration. The primary target is the application layer (i.e., what is running on the HTTP protocol).

Testing the security of a web application often involves sending different types of input to provoke errors and make the system behave in unexpected ways. These so-called "negative tests" examine whether the system is doing something it is not designed to do.

It is also important to understand that web security testing is not only about testing the security features (e.g., authentication and authorization) that may be implemented in the application.

It is equally important to test that other features are implemented in a secure way (e.g., business logic and the use of proper input validation and output encoding). The goal is to ensure that the functions exposed in the web application are secure.
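A negative test feeds a handler inputs it should reject and reports any it accepts. The sketch below shows the idea in Python; `parse_quantity`, `HOSTILE_INPUTS`, and `run_negative_tests` are hypothetical names invented for this example, and the input list is a small sample rather than a complete test suite.

```python
import re


def parse_quantity(raw):
    """Hypothetical handler: accept an integer quantity from 1 to 100."""
    if not isinstance(raw, str) or not re.fullmatch(r"[0-9]{1,3}", raw):
        raise ValueError("quantity must be 1 to 3 ASCII digits")
    value = int(raw)
    if not 1 <= value <= 100:
        raise ValueError("quantity out of range")
    return value


# Inputs the handler is NOT designed to accept.
HOSTILE_INPUTS = [
    "", "-1", "0", "101", "1e2", " 5",
    "1; DROP TABLE orders", "<script>", "9" * 500, None,
]


def run_negative_tests(handler, inputs):
    """Return every input the handler wrongly accepted."""
    accepted = []
    for raw in inputs:
        try:
            handler(raw)
        except (ValueError, TypeError):
            continue  # rejected, as it should be
        accepted.append(raw)
    return accepted
```

Here `run_negative_tests(parse_quantity, HOSTILE_INPUTS)` returns an empty list, while passing the built-in `int` as the handler reports values such as `"-1"` and `"101"` as wrongly accepted.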
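Input validation and output encoding can be illustrated with a short Python sketch. It assumes a hypothetical comment feature; `validate_username` and `render_comment` are made-up names, and the allow-list pattern is only one possible policy. `html.escape` is the standard-library function for HTML-encoding text.

```python
import html
import re

# Allow-list: 3 to 20 ASCII letters, digits, or underscores.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,20}")


def validate_username(raw):
    """Input validation: reject anything outside the allow-list."""
    if not USERNAME_PATTERN.fullmatch(raw):
        raise ValueError("invalid username")
    return raw


def render_comment(author, comment):
    """Output encoding: escape untrusted text before placing it in HTML."""
    safe_author = html.escape(validate_username(author))
    safe_comment = html.escape(comment, quote=True)
    return f"<p><b>{safe_author}</b>: {safe_comment}</p>"
```

Calling `render_comment("alice", "<script>alert(1)</script>")` yields markup in which the script tag appears as inert text (`&lt;script&gt;...`), and a username such as `"<b>"` is rejected before rendering. The two controls are complementary: validation limits what enters the system, and encoding neutralizes whatever is written back out.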

What are the different types of security tests?

Dynamic Application Security Test (DAST). This automated application security test is best for internally facing, low-risk applications that must comply with regulatory security assessments. For medium-risk applications and critical applications undergoing minor changes, combining DAST with some manual web security testing for common vulnerabilities is the best solution.

Static Application Security Test (SAST). This application security approach offers automated and manual testing techniques. It is best for identifying bugs without the need to execute applications in a production environment. It also enables developers to scan source code and systematically find and eliminate software security vulnerabilities.

Penetration Test. This manual application security test is best for critical applications, especially those undergoing major changes. The assessment involves business logic and adversary-based testing to discover advanced attack scenarios.

Runtime Application Self Protection (RASP). This evolving application security approach encompasses a number of technological techniques to instrument an application so that attacks can be monitored as they execute and, ideally, blocked in real time.
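Of the approaches listed above, static analysis is the easiest to sketch in a few lines, because it inspects source code without running it. The Python example below uses the standard `ast` module to flag direct calls to `eval` and `exec`. The rule set `RISKY_CALLS` and the function `find_risky_calls` are assumptions for illustration; real SAST tools apply far richer rules and data-flow analysis.

```python
import ast

# Illustrative rule set: builtins that execute arbitrary code.
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source):
    """Return sorted (line, name) pairs for direct calls to risky builtins.

    The source is parsed, never executed.
    """
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)
```

Given the three-line snippet `x = input()`, `result = eval(x)`, `print(result)`, the scanner reports the `eval` call on line 2. It only matches direct calls by name, so aliased or indirect uses would be missed.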