#Text Annotation Services for ML


How Data Annotation Tools Are Paving the Way for Advanced AI and Autonomous Systems

The global data annotation tools market size was estimated at USD 1.02 billion in 2023 and is anticipated to grow at a CAGR of 26.3% from 2024 to 2030. The growth is driven mainly by the increasing adoption of image data annotation tools in the automotive, retail, and healthcare sectors. Data annotation tools enable users to enhance the value of data by labeling it or adding attribute tags to it. The key benefit of using annotation tools is that the combination of data attributes enables users to manage the data definition at a single location and eliminates the need to rewrite similar rules in multiple places.
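To make the growth figures concrete, here is a minimal sketch of the compound-growth arithmetic behind a CAGR. It assumes growth compounds once per year from the 2023 base through 2030 (seven periods); the function name is illustrative, and the result is derived arithmetic, not a figure quoted from the report.

```python
def project_market_size(base_value: float, cagr: float, years: int) -> float:
    """Compound base_value forward by `years` annual periods at rate `cagr`."""
    return base_value * (1.0 + cagr) ** years


# Inputs from the paragraph above: USD 1.02 billion (2023) and a 26.3% CAGR.
# Assumption: seven compounding periods, 2024 through 2030.
projected_2030 = project_market_size(1.02, 0.263, years=7)
print(f"Implied 2030 market size: USD {projected_2030:.2f} billion")
```

Under these assumptions the stated rate implies a market of roughly USD 5.2 billion by 2030.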

The rise of big data and a surge in the number of large datasets are likely to necessitate the use of artificial intelligence technologies in the field of data annotation. The data annotation industry is also expected to benefit from the rising demand for improvements in machine learning and from rising investment in advanced autonomous driving technology.

Technologies such as the Internet of Things (IoT), Machine Learning (ML), robotics, advanced predictive analytics, and Artificial Intelligence (AI) generate massive data. With changing technologies, data efficiency proves to be essential for creating new business innovations, infrastructure, and new economics. These factors have significantly contributed to the growth of the industry. Owing to the rising potential of growth in data annotation, companies developing AI-enabled healthcare applications are collaborating with data annotation companies to provide the required data sets that can assist them in enhancing their machine learning and deep learning capabilities.

For instance, in November 2022, Medcase, a developer of healthcare AI solutions, and NTT DATA formalized a legally binding agreement. Under this partnership, the two companies will offer data discovery and enrichment solutions for medical imaging. Customers of Medcase will gain access to NTT DATA's Advocate AI services, which enables innovators to obtain patient studies, including medical imaging, for their projects.

However, the inaccuracy of data annotation tools acts as a restraint on the growth of the market. For instance, a given image may have low resolution and include multiple objects, making it difficult to label. The primary challenge faced by the market is the quality of the labeled data. In some cases, manually labeled data may contain erroneous labels, and the time needed to detect such errors varies, which further adds to the cost of the entire annotation process. With the development of sophisticated algorithms, however, the accuracy of automated data annotation tools is improving, reducing both the dependency on manual annotation and the cost of the tools.

Global Data Annotation Tools Market Report Segmentation

Grand View Research has segmented the global data annotation tools market report based on type, annotation type, vertical, and region:

Type Outlook (Revenue, USD Million, 2017 - 2030)

Text

Image/Video

Audio

Annotation Type Outlook (Revenue, USD Million, 2017 - 2030)

Manual

Semi-supervised

Automatic

Vertical Outlook (Revenue, USD Million, 2017 - 2030)

IT

Automotive

Government

Healthcare

Financial Services

Retail

Others

Regional Outlook (Revenue, USD Million, 2017 - 2030)

North America

US

Canada

Mexico

Europe

Germany

UK

France

Asia Pacific

China

Japan

India

South America

Brazil

Middle East and Africa (MEA)

Key Data Annotation Tools Companies:

The following are the leading companies in the data annotation tools market. These companies collectively hold the largest market share and dictate industry trends.

Annotate.com

Appen Limited

CloudApp

Cogito Tech LLC

Deep Systems

Labelbox, Inc

LightTag

Lotus Quality Assurance

Playment Inc

Tagtog Sp. z o.o

CloudFactory Limited

ClickWorker GmbH

Alegion

Figure Eight Inc.

Amazon Mechanical Turk, Inc

Explosion AI GmbH

Mighty AI, Inc.

Trilldata Technologies Pvt Ltd

Scale AI, Inc.

Google LLC

Lionbridge Technologies, Inc

SuperAnnotate LLC

Recent Developments

In November 2023, Appen Limited, a high-quality data provider for the AI lifecycle, chose Amazon Web Services (AWS) as its primary cloud for AI solutions and innovation. As Appen adopts additional enterprise solutions for AI data sourcing, annotation, and model validation, the firms are expanding their collaboration with a multi-year deal. Appen is strengthening its AI data platform, which serves as the bridge between people and AI, by integrating cutting-edge AWS services.

In September 2023, Labelbox launched a Large Language Model (LLM) solution to help organizations innovate with generative AI, deepening its partnership with Google Cloud. With the introduction of LLMs, enterprises now have many opportunities to generate new competitive advantages and commercial value. LLM systems can transform a wide range of intelligent applications; nevertheless, in many cases, organizations will need to adjust or fine-tune LLMs to align them with human preferences. Labelbox, as part of an expanded cooperation, is leveraging Google Cloud's generative AI capabilities to assist organizations in developing LLM solutions with Vertex AI. Labelbox's AI platform will be integrated with Google Cloud's leading AI and Data Cloud tools, including Vertex AI and Google Cloud's Model Garden repository, allowing ML teams to access cutting-edge machine learning (ML) models for vision and natural language processing (NLP) and automate key workflows.

In March 2023, Enlitic released the most recent version of Enlitic Curie, a platform aimed at improving radiology department workflow. This platform includes Curie|ENDEX, which uses natural language processing and computer vision to analyze and process medical images, and Curie|ENCOG, which uses artificial intelligence to detect and protect health information in medical images.

In November 2022, Appen Limited, a global leader in data for the AI Lifecycle, announced its partnership with CLEAR Global, a nonprofit organization dedicated to ensuring access to essential information and amplifying voices across languages. This collaboration aims to develop a speech-based healthcare FAQ bot tailored for Sheng, a Nairobi slang language.

Order a free sample PDF of the Market Intelligence Study, published by Grand View Research.


The Data Collection And Labeling Market was valued at USD 3.0 Billion in 2023 and is expected to reach USD 29.2 Billion by 2032, growing at a CAGR of 28.54% from 2024-2032.
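As a quick sanity check on figures like these, the growth rate implied by two endpoints can be backed out directly. The sketch below assumes nine annual compounding periods (2024 through 2032) from the 2023 base; the function name is illustrative.

```python
def implied_cagr(start_value: float, end_value: float, periods: int) -> float:
    """Return the constant annual growth rate linking start_value to end_value."""
    return (end_value / start_value) ** (1.0 / periods) - 1.0


# Endpoints from the sentence above: USD 3.0 billion (2023) to USD 29.2 billion (2032).
# Assumption: nine compounding periods, 2024 through 2032.
rate = implied_cagr(3.0, 29.2, periods=9)
print(f"Implied CAGR: {rate:.2%}")
```

The result lands close to, but not exactly on, the quoted 28.54%; the small gap is presumably down to rounding in the published endpoint values.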

The data collection and labeling market is witnessing transformative growth as artificial intelligence (AI), machine learning (ML), and deep learning applications continue to expand across industries. As organizations strive to unlock the value of big data, the demand for accurately labeled datasets has surged, making data annotation a critical component in developing intelligent systems. Companies in sectors such as healthcare, automotive, retail, and finance are investing heavily in curated data pipelines that drive smarter algorithms, more efficient automation, and personalized customer experiences.

Fueled by innovation and technological advancement, the data collection and labeling market is evolving to meet the growing complexities of AI models. Enterprises increasingly seek comprehensive data solutions, ranging from image, text, audio, and video annotation to real-time sensor and geospatial data labeling, to power mission-critical applications. Human-in-the-loop systems, crowdsourcing platforms, and AI-assisted labeling tools are at the forefront of this evolution, ensuring the creation of high-quality training datasets that minimize bias and improve predictive performance.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/5925

Key Market Players:

Scale AI – Scale Data Engine

Appen – Appen Data Annotation Platform

Labelbox – Labelbox AI Annotation Platform

Amazon Web Services (AWS) – Amazon SageMaker Ground Truth

Google – Google Cloud AutoML Data Labeling Service

IBM – IBM Watson Data Annotation

Microsoft – Azure Machine Learning Data Labeling

Playment (by TELUS International AI) – Playment Annotation Platform

Hive AI – Hive Data Labeling Platform

Samasource – Sama AI Data Annotation

CloudFactory – CloudFactory Data Labeling Services

SuperAnnotate – SuperAnnotate AI Annotation Tool

iMerit – iMerit Data Enrichment Services

Figure Eight (by Appen) – Figure Eight Data Labeling

Cogito Tech – Cogito Data Annotation Services

Market Analysis

The market's growth is driven by the convergence of AI deployment and the increasing demand for labeled data to support supervised learning models. Startups and tech giants alike are intensifying their focus on data preparation workflows. Strategic partnerships and outsourcing to data labeling service providers have become common approaches to manage scalability and reduce costs. The competitive landscape features a mix of established players and emerging platforms offering specialized labeling services and tools, creating a highly dynamic ecosystem.

Market Trends

Increasing adoption of AI and ML across diverse sectors

Rising preference for cloud-based data annotation tools

Surge in demand for multilingual and cross-domain data labeling

Expansion of video and 3D image annotation for autonomous systems

Growing emphasis on ethical AI and reduction of labeling bias

Integration of AI-assisted labeling to accelerate workflows

Outsourcing of labeling processes to specialized firms for scalability

Enhanced use of synthetic data for model training and validation

Market Scope

The data collection and labeling market serves as the foundation for AI applications across verticals. From autonomous vehicles requiring high-accuracy image labeling to chatbots trained on annotated customer interactions, the scope encompasses every industry where intelligent automation is pursued. As AI maturity increases, the need for diverse, structured, and domain-specific datasets will further elevate the relevance of comprehensive labeling solutions.

Market Forecast

The market is expected to maintain strong momentum, driven by increasing digital transformation initiatives and investment in smart technologies. Continuous innovation in labeling techniques, enhanced platform capabilities, and regulatory compliance for data privacy are expected to shape the future landscape. Organizations will prioritize scalable, accurate, and cost-efficient data annotation solutions to stay competitive in an AI-driven economy. The role of data labeling is poised to shift from a support function to a strategic imperative.

Access Complete Report: https://www.snsinsider.com/reports/data-collection-and-labeling-market-5925

Conclusion

The data collection and labeling market is not just a stepping stone in the AI journey; it is becoming a strategic cornerstone that determines the success of intelligent systems. As enterprises aim to harness the full potential of AI, the quality, variety, and scalability of labeled data will define the competitive edge. Those who invest early in refined data pipelines and ethical labeling practices will lead in innovation, relevance, and customer trust in the evolving digital world.

About Us:

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)


Boost AI/ML Accuracy and Efficiency: Utilize Data Labeling Solutions by EnFuse

Improve AI/ML outcomes with EnFuse Solutions’ comprehensive data labeling services. From images and text to audio and video, they deliver accurate, consistent annotations for reliable model training. EnFuse’s scalable services streamline your workflow and empower faster, smarter decisions powered by clean, structured data.

Visit here to see how EnFuse Solutions empowers AI and ML models with expert data labeling services: https://www.enfuse-solutions.com/services/ai-ml-enablement/labeling-curation/


Top Video Data Collection Services for AI and Machine Learning

Introduction

In the contemporary landscape dominated by artificial intelligence, video data is essential for the training and enhancement of machine learning models, particularly in fields such as computer vision, autonomous systems, surveillance, and retail analytics. However, obtaining high-quality video data is not a spontaneous occurrence; it necessitates meticulous planning, collection, and annotation. This is where specialized Video Data Collection Services become crucial. In this article, we will examine the characteristics that define an effective video data collection service and showcase how companies like GTS.AI are establishing new benchmarks in this industry.

Why Video Data Is Crucial for AI Models

Video data provides comprehensive and dynamic insights that surpass the capabilities of static images or text. It aids machine learning models in recognizing movement and patterns in real time, understanding object behavior and interactions, and enhancing temporal decision-making in complex environments. Video datasets are essential for various real-world AI applications, including the training of self-driving vehicles, the advancement of smart surveillance systems, and the improvement of gesture recognition in augmented and virtual reality.

What to Look for in a Video Data Collection Service

When assessing a service provider for the collection of video datasets, it is essential to take into account the following critical factors:

1. Varied Environmental Capture

Your models must be able to generalize across different lighting conditions, geographical locations, weather variations, and more. The most reputable providers offer global crowd-sourced collection or customized video capture designed for specific environments.

2. High-Quality, Real-Time Capture

Quality is paramount. Seek services that provide 4K or HD capture, high frame rates, and various camera angles to replicate real-world situations.

3. Privacy Compliance

In light of the growing number of regulations such as GDPR and HIPAA, it is imperative to implement measures for face and license plate blurring, consent tracking, and secure data management.

4. Annotation and Metadata

Raw footage alone is insufficient. The most reputable providers offer annotated datasets that include bounding boxes, object tracking, activity tagging, and additional features necessary for training supervised learning models.

5. Scalability

Regardless of whether your requirement is for 100 or 100,000 videos, the provider must possess the capability to scale efficiently without sacrificing quality.
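To picture what the bounding boxes and object tracking mentioned under Annotation and Metadata look like as data, here is a minimal sketch. The `BoxAnnotation` record and its field names are hypothetical, not any provider's schema; the `iou` function is the standard intersection-over-union measure, often used to compare a box with its neighbor in the next frame or with a reviewer's box.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoxAnnotation:
    """One labeled object in one video frame (hypothetical schema)."""
    frame_index: int
    track_id: int   # the same id across frames marks the same physical object
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def iou(a: BoxAnnotation, b: BoxAnnotation) -> float:
    """Intersection-over-union of two axis-aligned boxes, in [0, 1]."""
    inter_w = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    inter_h = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    inter = inter_w * inter_h
    area_a = (a.x_max - a.x_min) * (a.y_max - a.y_min)
    area_b = (b.x_max - b.x_min) * (b.y_max - b.y_min)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The same tracked car in two consecutive frames, shifted 10 pixels to the right.
car_f0 = BoxAnnotation(0, 7, "car", 10, 10, 50, 50)
car_f1 = BoxAnnotation(1, 7, "car", 20, 10, 60, 50)
overlap = iou(car_f0, car_f1)
print(f"IoU between frames: {overlap:.2f}")
```

A sudden drop in IoU between consecutive frames of one track is a cheap signal that a box may have been mislabeled or that the track id jumped to a different object.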

GTS.AI: A Leader in Video Data Collection Services

At GTS.AI, we focus on delivering tailored, scalable, and premium video dataset solutions for AI and ML teams across various sectors.

What Sets GTS.AI Apart?

Our unique advantages include global reach through a crowdsourced network in over 100 countries, enabling diverse data collection.

We offer flexible video types, accommodating indoor retail and outdoor traffic scenarios with scripted, semi-scripted, and natural video capture tailored to client needs.

Our compliance-first approach ensures data privacy through anonymization techniques and adherence to regulations.

Additionally, we provide an end-to-end workflow that includes comprehensive video annotation services such as frame-by-frame labeling, object tracking, and scene segmentation.

For those requiring quick access to data, our systems are designed for rapid deployment and delivery while maintaining high quality.

Use Cases We Support

Autonomous Driving and Advanced Driver Assistance Systems

Smart Surveillance and Security Analytics

Retail Behavior Analysis

Healthcare Monitoring such as Patient Movement Tracking

Robotics and Human Interaction

Gesture and Action Recognition

Ready to Power Your AI Model with High-Quality Video Data?

Whether you are developing next-generation autonomous systems or creating advanced security solutions, the video data collection services from Globose Technology Solutions (GTS.AI) can provide precisely what you require, efficiently, rapidly, and accurately.


Top AI Trends in Medical Record Review for 2025 and Beyond

When every minute counts and the volume of documentation keeps growing, professionals handling medical records often face a familiar bottleneck: navigating through massive, redundant files to pinpoint crucial medical data. Whether for independent medical exams (IMEs), peer reviews, or litigation support, delays and inaccuracies in reviewing records can disrupt decision-making, increase overhead, and pose compliance risks.

That's where AI is stepping in—not as a future solution but as a game changer.

From Data Overload to Data Precision

Manual review processes often fall short when records include thousands of pages with duplicated reports, handwritten notes, and scattered information. AI-powered medical records review services now bring precision, speed, and structure to this chaos.

An AI/ML model scans entire sets of medical documents, learns from structured and unstructured data, and identifies critical data points such as physician notes, prescriptions, lab values, diagnosis codes, imaging results, and provider details. The system then indexes and sorts records according to the date of injury and treatment visits, ensuring clear chronological visibility.

Once organized, the engine produces concise summaries tailored for quick decision-making—ideal for deposition summaries, peer reviews, and IME/QME reports.
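The indexing-and-sorting step described above can be sketched in a few lines, assuming the key fields have already been extracted from each page. The `RecordPage` structure, its field names, and the sample data are hypothetical; a real pipeline would first need OCR and date extraction, which are not shown.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class RecordPage:
    """Metadata assumed to be already extracted from one record page."""
    doc_type: str
    service_date: date
    provider: str


def build_chronology(pages, injury_date):
    """Sort pages by service date and tag each with days since the injury."""
    ordered = sorted(pages, key=lambda page: page.service_date)
    return [((page.service_date - injury_date).days, page) for page in ordered]


pages = [
    RecordPage("lab report", date(2024, 3, 10), "Dr. A"),
    RecordPage("ER note", date(2024, 3, 1), "Dr. B"),
    RecordPage("physical therapy", date(2024, 4, 2), "Dr. C"),
]
chronology = build_chronology(pages, injury_date=date(2024, 3, 1))
for day_offset, page in chronology:
    print(f"day {day_offset:>3}: {page.doc_type} ({page.provider})")
```

Records dated before the injury get a negative offset rather than being dropped, since prior history can matter to a reviewer.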

Key AI Trends Reshaping 2025 and Beyond

1. Contextual AI Summarization

Summaries are no longer just text extractions. AI models are becoming context-aware, producing focused narratives that eliminate repetition and highlight medically significant events—exactly what reviewers need when building a case or evaluating medical necessity.

2. Intelligent Indexing & Chronology Sorting

Chronological sorting is moving beyond simple date alignment. AI models now associate events with the treatment cycle, grouping diagnostics, prescriptions, and physician notes by the injury timeline—offering a cleaner, more logical flow of information.

3. Deduplication & Version Control

Duplicate documents create confusion and waste time. Advanced AI can now detect and remove near-identical reports, earlier versions, and misfiled documents while preserving audit trails. This alone reduces review fatigue and administrative overhead.

4. Custom Output Formats

Different reviewers prefer different formats. AI-driven platforms offer customizable outputs—hyperlinked reports, annotated PDFs, or clean deposition summaries—ready for court proceedings or clinical assessments.
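The deduplication trend above can be illustrated with a small sketch. It uses plain string similarity from Python's standard `difflib` module, which is a stand-in for illustration only; production systems likely use more scalable techniques, and the 0.9 threshold and sample texts are assumptions.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def deduplicate(documents, threshold=0.9):
    """Keep the first copy of each near-identical document.

    documents: iterable of (doc_id, text) pairs.
    Returns (kept_ids, audit_log); audit_log rows are
    (removed_id, kept_id, similarity) so every removal stays traceable.
    """
    kept = []       # (doc_id, normalized_text) of the documents retained so far
    audit_log = []
    for doc_id, text in documents:
        norm = normalize(text)
        duplicate_of = None
        for kept_id, kept_text in kept:
            similarity = SequenceMatcher(None, norm, kept_text).ratio()
            if similarity >= threshold:
                duplicate_of = (kept_id, similarity)
                break
        if duplicate_of is None:
            kept.append((doc_id, norm))
        else:
            audit_log.append((doc_id, duplicate_of[0], round(duplicate_of[1], 3)))
    return [doc_id for doc_id, _ in kept], audit_log


docs = [
    ("a", "MRI of lumbar spine shows mild disc bulge at L4-L5."),
    ("b", "MRI of  lumbar spine shows mild disc bulge at L4-L5"),
    ("c", "Patient prescribed ibuprofen 400 mg twice daily."),
]
kept_ids, audit_log = deduplicate(docs)
print(kept_ids)
print(audit_log)
```

The pairwise comparison is quadratic in the number of documents, so this shape suits a single case file rather than a large archive.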

Why This Matters Now

The pressure to process records faster, more accurately, and at scale is growing. Workers' comp cases and utilization reviews depend on fast and clear insights. AI-powered medical records review service providers bring the tools to meet that demand—not just for efficiency but also for risk mitigation and quality outcomes.

Why Partner with an AI-Driven Medical Records Review Provider?

A reliable partner can bring scalable infrastructure, domain-trained AI models, and compliance-ready outputs. That's not just an operational upgrade—it's a strategic advantage. As the demand for faster, more intelligent medical records review services grows, those who invest in AI-driven solutions will lead the next phase of review excellence.


The Best Labelbox Alternatives for Data Labeling in 2025

Whether you're training machine learning models, building AI applications, or working on computer vision projects, effective data labeling is critical for success. Labelbox has been a go-to platform for enterprises and teams looking to manage their data labeling workflows efficiently. However, it may not suit everyone’s needs due to high pricing, lack of certain features, or compatibility issues with specific use cases.

If you're exploring alternatives to Labelbox, you're in the right place. This blog dives into the top Labelbox alternatives, highlights the key features to consider when choosing a data labeling platform, and provides insights into which option might work best for your unique requirements.

What Makes a Good Data Labeling Platform?

Before we explore alternatives, let's break down the features that define a reliable data labeling solution. The right platform should help optimize your labeling workflow, save time, and ensure precision in annotations. Here are a few key features you should keep in mind:

Scalability: Can the platform handle the size and complexity of your dataset, whether you're labeling a few hundred samples or millions of images?

Collaboration Tools: Does it offer features that improve collaboration among team members, such as user roles, permissions, or integration options?

Annotation Capabilities: Look for robust annotation tools that support bounding boxes, polygons, keypoints, and semantic segmentation for different data types.

AI-Assisted Labeling: Platforms with auto-labeling capabilities powered by AI can significantly speed up the labeling process while maintaining accuracy.

Integration Flexibility: Can the platform seamlessly integrate with your existing workflows, such as TensorFlow, PyTorch, or custom ML pipelines?

Affordability: Pricing should align with your budget while delivering a strong return on investment.
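One common way to picture the AI-assisted labeling feature listed above is confidence-based routing: model pre-labels above a threshold are accepted, and the rest go to human annotators. The sketch below is a generic pattern, not the API of Labelbox or any other platform; the function name, threshold, and sample predictions are all hypothetical.

```python
def route_prelabels(predictions, auto_accept_threshold=0.95):
    """Split model pre-labels into auto-accepted and human-review queues.

    predictions: iterable of (item_id, label, confidence) triples.
    """
    accepted, needs_review = [], []
    for item_id, label, confidence in predictions:
        queue = accepted if confidence >= auto_accept_threshold else needs_review
        queue.append((item_id, label))
    return accepted, needs_review


predictions = [
    ("img-001", "car", 0.99),
    ("img-002", "truck", 0.62),
    ("img-003", "car", 0.95),
]
accepted, needs_review = route_prelabels(predictions)
print("auto-accepted:", accepted)
print("sent to annotators:", needs_review)
```

The threshold is a trade-off: raising it sends more items to people and costs more, while lowering it lets more model mistakes into the training set.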

With these considerations in mind, let's explore the best alternatives to Labelbox, including their strengths and weaknesses.

Top Labelbox Alternatives

1. Macgence

Strengths:

Offers a highly customizable end-to-end solution that caters to specific workflows for data scientists and machine learning engineers.

AI-powered auto-labeling to accelerate labeling tasks.

Proven expertise in handling diverse data types, including images, text, and video annotations.

Seamless integration with popular machine learning frameworks like TensorFlow and PyTorch.

Known for its attention to data security and adherence to compliance standards.

Weaknesses:

May require time for onboarding due to its vast range of features.

Limited online community documentation compared to Labelbox.

Ideal for:

Organizations that value flexibility in their workflows and need an AI-driven platform to handle large-scale, complex datasets efficiently.

2. Supervisely

Strengths:

Strong collaboration tools, making it easy to assign tasks and monitor progress across teams.

Extensive support for complex computer vision projects, including 3D annotation.

A free plan that’s feature-rich enough for small-scale projects.

Intuitive user interface with drag-and-drop functionality for ease of use.

Weaknesses:

Limited scalability for larger datasets unless opting for the higher-tier plans.

Auto-labeling tools are slightly less advanced compared to other platforms.

Ideal for:

Startups and research teams looking for a low-cost option with modern annotation tools and collaboration features.

3. Amazon SageMaker Ground Truth

Strengths:

Fully managed service by AWS, allowing seamless integration with Amazon's cloud ecosystem.

Uses machine learning to create accurate annotations with less manual effort.

Pay-as-you-go pricing, making it cost-effective for teams already on AWS.

Access to a large workforce for outsourcing labeling tasks.

Weaknesses:

Requires expertise in AWS to set up and configure workflows.

Limited to AWS ecosystem, which might pose constraints for non-AWS users.

Ideal for:

Teams deeply embedded in the AWS ecosystem that want an AI-powered labeling workflow with access to a scalable workforce.

4. Appen

Strengths:

Combines advanced annotation tools with a global workforce for large-scale projects.

Offers unmatched accuracy and quality assurance with human-in-the-loop workflows.

Highly customizable solutions tailored to specific enterprise needs.

Weaknesses:

Can be expensive, particularly for smaller organizations or individual users.

Requires external support for integration into custom workflows.

Ideal for:

Enterprises with complex projects that require high accuracy and precision in data labeling.

Use Case Scenarios: Which Platform Fits Best?

For startups with smaller budgets and less complex projects, Supervisely offers an affordable and intuitive entry point.

For enterprises requiring precise accuracy on large-scale datasets, Appen delivers unmatched quality at a premium.

If you're heavily integrated with AWS, SageMaker Ground Truth is a practical, cost-effective choice for your labeling needs.

For tailored workflows and cutting-edge AI-powered tools, Macgence stands out as the most flexible platform for diverse projects.

Finding the Best Labelbox Alternative for Your Needs

Choosing the right data labeling platform depends on your project size, budget, and technical requirements. Start by evaluating your specific use cases—whether you prioritize cost efficiency, advanced AI tools, or integration capabilities.

For those who require a customizable and AI-driven data labeling solution, Macgence emerges as a strong contender to Labelbox, delivering robust capabilities with high scalability. No matter which platform you choose, investing in the right tools will empower your team and set the foundation for successful machine learning outcomes.

Source: https://technologyzon.com/blogs/436/The-Best-Labelbox-Alternatives-for-Data-Labeling-in-2025

The Ultimate Guide to Data Annotation: How to Scale Your AI Projects Efficiently

In the fast-paced world of artificial intelligence (AI) and machine learning (ML), data is the foundation upon which successful models are built. However, raw data alone is not enough. To train AI models effectively, this data must be accurately labeled—a process known as data annotation. In this guide, we'll explore the essentials of data annotation, its challenges, and how to streamline your data annotation process to boost your AI projects. Plus, we’ll introduce you to a valuable resource: a Free Data Annotation Guide that can help you scale with ease.

What is Data Annotation?

Data annotation is the process of labeling data—such as images, videos, text, or audio—to make it recognizable to AI models. This labeled data acts as a training set, enabling machine learning algorithms to learn patterns and make predictions. Whether it’s identifying objects in an image, transcribing audio, or categorizing text, data annotation is crucial for teaching AI models how to interpret and respond to data accurately.

Why is Data Annotation Important for AI Success?

Improves Model Accuracy: Labeled data ensures that AI models learn correctly, reducing errors in predictions.

Speeds Up Development: High-quality annotations reduce the need for repetitive training cycles.

Enhances Data Quality: Accurate labeling minimizes biases and improves the reliability of AI outputs.

Supports Diverse Use Cases: From computer vision to natural language processing (NLP), data annotation is vital across all AI domains.

Challenges in Data Annotation

While data annotation is critical, it is not without challenges:

Time-Consuming: Manual labeling can be labor-intensive, especially with large datasets.

Costly: High-quality annotations often require skilled annotators or advanced tools.

Scalability Issues: As projects grow, managing data annotation efficiently can become difficult.

Maintaining Consistency: Ensuring all data is labeled uniformly is crucial for model performance.

To overcome these challenges, many AI teams turn to automated data annotation tools and platforms. Our Free Data Annotation Guide provides insights into choosing the right tools and techniques to streamline your process.

Types of Data Annotation

Image Annotation: Used in computer vision applications, such as object detection and image segmentation.

Text Annotation: Essential for NLP tasks like sentiment analysis and entity recognition.

Audio Annotation: Needed for voice recognition and transcription services.

Video Annotation: Useful for motion tracking, autonomous vehicles, and video analysis.
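For text annotation in particular, entity recognition labels are often collected as character spans and then converted to per-token tags for training. Here is a minimal sketch of that conversion using the widely used BIO scheme (B = begins an entity, I = inside one, O = outside). It assumes simple whitespace tokenization, and the sentence and helper names are illustrative.

```python
import re


def spans_to_bio(text, spans):
    """Convert (start, end, label) character spans to token-level BIO tags.

    Assumes whitespace tokenization; `end` is exclusive.
    """
    tokens = [(m.group(), m.start(), m.end()) for m in re.finditer(r"\S+", text)]
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        inside = False
        for i, (_, tok_start, tok_end) in enumerate(tokens):
            if tok_start >= start and tok_end <= end:
                tags[i] = ("I-" if inside else "B-") + label
                inside = True
    return [(token, tag) for (token, _, _), tag in zip(tokens, tags)]


text = "Appen partnered with CLEAR Global in Nairobi"


def span_of(phrase, label):
    """Locate a phrase in the text and return its labeled character span."""
    start = text.index(phrase)
    return (start, start + len(phrase), label)


spans = [span_of("Appen", "ORG"), span_of("CLEAR Global", "ORG"), span_of("Nairobi", "LOC")]
tagged = spans_to_bio(text, spans)
for token, tag in tagged:
    print(f"{token:<10} {tag}")
```

Whitespace tokenization leaves punctuation attached to words, so a real project would pair the span format with the tokenizer its model actually uses.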

Best Practices for Effective Data Annotation

To achieve high-quality annotations, follow these best practices:

1. Define Clear Guidelines

Before starting the annotation process, create clear guidelines for annotators. These guidelines should include:

Annotation rules and requirements

Labeling instructions

Examples of correctly and incorrectly labeled data

2. Automate Where Possible

Leverage automated tools to speed up the annotation process. Tools with features like pre-labeling, AI-assisted labeling, and workflow automation can significantly reduce manual effort.

3. Regularly Review and Validate Annotations

Quality control is crucial. Regularly review annotated data to identify and correct errors. Validation techniques, such as using a secondary reviewer or implementing a consensus approach, can enhance accuracy.

4. Ensure Annotator Training

If you use a team of annotators, provide them with proper training to maintain labeling consistency. This training should cover your project’s specific needs and the annotation guidelines.

5. Use Scalable Tools and Platforms

To handle large-scale projects, use a data annotation platform that offers scalability, supports multiple data types, and integrates seamlessly with your AI development workflow.
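The review-and-validate practice above mentions a consensus approach and secondary reviewers. A minimal sketch of both ideas follows: a majority vote that escalates ties, and Cohen's kappa, a standard statistic for agreement between two annotators that corrects for chance. The sample labels are made up for illustration.

```python
from collections import Counter


def majority_label(votes):
    """Return the most common label, or None on a tie (escalate to a reviewer)."""
    if not votes:
        raise ValueError("at least one vote is required")
    (top_label, top_count), *rest = Counter(votes).most_common()
    if rest and rest[0][1] == top_count:
        return None
    return top_label


def cohen_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item list")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1.0 - expected)


annotator_a = ["pos", "pos", "neg", "neg", "pos", "neg"]
annotator_b = ["pos", "neg", "neg", "neg", "pos", "pos"]
kappa = cohen_kappa(annotator_a, annotator_b)
print(f"Cohen's kappa: {kappa:.2f}")
print(majority_label(["pos", "pos", "neg"]))
```

A low kappa usually points back to the first practice in the list: the guidelines are ambiguous, so annotators are resolving the same cases differently.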

For a more detailed look at these strategies, our Free Data Annotation Guide offers actionable insights and expert advice.

How to Scale Your Data Annotation Efforts

Scaling your data annotation process is essential as your AI projects grow. Here are some tips:

Batch Processing: Divide large datasets into manageable batches.

Outsource Annotations: When needed, collaborate with third-party annotation services to handle high volumes.

Implement Automation: Automated tools can accelerate repetitive tasks.

Monitor Performance: Use analytics and reporting to track progress and maintain quality.
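The batch-processing and monitoring tips above can be combined in a small sketch: split the dataset into fixed-size batches and report progress as each one is finished. The batch size and item names are arbitrary, and the annotation step itself is left as a placeholder comment.

```python
from itertools import islice


def batched(items, batch_size):
    """Yield successive lists of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(items)
    while batch := list(islice(iterator, batch_size)):
        yield batch


dataset = [f"item-{i}" for i in range(7)]
completed = 0
for batch_number, batch in enumerate(batched(dataset, 3), start=1):
    # Placeholder: send `batch` to annotators or a labeling service here.
    completed += len(batch)
    print(f"batch {batch_number}: {len(batch)} items, {completed / len(dataset):.0%} done")
```

Smaller batches give earlier feedback on guideline problems, at the price of more hand-offs to manage.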

Benefits of Downloading Our Free Data Annotation Guide

If you're looking to improve your data annotation process, our Free Data Annotation Guide is a must-have resource. It offers:

Proven strategies to boost data quality and annotation speed

Tips on choosing the right annotation tools

Best practices for managing annotation projects at scale

Insights into reducing costs while maintaining quality

Conclusion

Data annotation is a critical step in building effective AI models. While it can be challenging, following best practices and leveraging the right tools can help you scale efficiently. By downloading our Free Data Annotation Guide, you’ll gain access to expert insights that will help you optimize your data annotation process and accelerate your AI model development.

Start your journey toward efficient and scalable data annotation today!

#DataAnnotation#MachineLearning#AIProjects#ArtificialIntelligence#DataLabeling#AIDevelopment#ComputerVision#ScalableAI#Automation#AITools

Text

The Future of AI Depends on High-Quality Data: How GTS.AI Leads the Data Collection Revolution

Introduction

Technology today is fast-moving and dynamic, and AI is becoming the engine of innovation. From enhancing customer experiences to keeping business systems running smoothly, the potential of AI is vast. However, successful AI must be built on quality data: without accurate, diverse, and ethically sourced datasets, AI systems do not yield precise results. This is where data collection companies like GTS.AI come in.

Understanding Data Collection and Its Importance

Data collection refers to the process of collecting, sanitizing, and organizing the data that fuels AI and ML models. For AI-based applications to run smoothly and predictably, the data must account for the biases and edge cases that arise when AI solutions are deployed across different real-world applications. Data quality determines whether algorithms produce sound insights and trustworthy outputs, which is why professional data collection services play such an essential role.

GTS.AI's Role as a Data Collector

GTS.AI is among the leaders in data collection. In brief, GTS.AI concentrates on providing high-quality, scalable, and ethically sourced data for AI and ML models. The company works across many domains so that its clients acquire datasets tailored to their exact needs. Here is a summary of what sets GTS.AI apart in data collection:

Extensive and Scalable Data Solutions: GTS.AI understands that different AI applications need different kinds of data. Whether the task is speech recognition, computer vision, natural language processing (NLP), or autonomous systems, the company supports scalable solutions across industries such as healthcare, finance, automotive, and retail.

Ethical and Compliant Data Sourcing: One of the most debated issues in AI development is ethical data collection. GTS.AI abides by strict ethical standards and complies with key data privacy regulations worldwide, including the GDPR and CCPA. Consent-driven sourcing helps ensure that datasets respect users' privacy and reduces biases that could affect AI decision-making.

Complex Data Annotation and Labeling: Data is only as valuable as its annotation. Drawing on current data-labeling methods for images, videos, text, and audio, GTS.AI uses automated labeling or human-in-the-loop techniques, as appropriate, to ensure accurate tagging.

Global Data Collection Capabilities: AI models need datasets that span many languages, cultures, and demographics. GTS.AI uses its extensive network to collect localized data from around the world, so that AI applications are trained on inclusive datasets and adapt better to worldwide use.

The Influence of High-Quality Data on AI Performance

Data quality directly determines the efficiency and reliability of AI programs. High-quality data, like that provided by GTS.AI, improves performance in the following ways:

Increased Accuracy: Well-tagged, diverse datasets help AI models identify patterns more robustly, reducing misclassifications and improving decision-making.

Bias Mitigation: Ethically sourced and diverse data minimizes bias in AI systems, making them fairer and more trustworthy.

Scalability: High-quality training datasets allow AI solutions to be applied more widely, with less retraining needed to adapt to additional cases and industries.

Compliance and Security: Working with a trusted data collection company supports compliance with data protection laws and mitigates the risks of data breaches and legal liabilities.

Why an Organization Should Partner with GTS.AI

As organizations begin integrating AI into their operations, the quality of data collection must become a priority. Here is why GTS.AI can be the right partner:

Custom-Made Solutions: The company provides tailor-made data collection services that align with your business goals and AI development requirements.

Unmatched Experience: With years of experience, GTS.AI understands the fine nuances of data collection from one industry to another.

High-End Technology: GTS.AI uses state-of-the-art tools and AI-driven techniques to carry out data collection with speed and accuracy.

End-to-End Data Service: GTS.AI provides a complete suite of data services, from data acquisition to annotation and validation.

Conclusion

The success of AI-powered innovations depends on the quality of the data they run on. As a frontrunner in data collection services, GTS.AI provides businesses with quality data that is ethically sourced and scalable enough to run their AI models. With its emphasis on good practices and governance, GTS.AI helps businesses unleash the full potential of artificial intelligence. Whether you are a start-up planning to train your first AI model or a large enterprise updating your AI applications, GTS.AI offers data solutions suited to your needs. Invest in quality data today and create AI models that innovate tomorrow.

Text

Speech Recognition Dataset for Machine Learning Applications

Introduction

Speech recognition technology has evolved to let humans interact with machines in ways once thought impossible, from voice assistants to automatic transcription. High-quality datasets are at the crux of these advancements, because they enable machine learning models to understand and process human speech accurately. In this blog, we will explore why speech recognition datasets are important and look at one of the best-known examples, LibriSpeech.

Why Are Speech Recognition Datasets Crucial?

Speech recognition datasets are the backbone of automatic speech recognition (ASR) systems. They consist of labeled audio recordings, the raw material for training, validating, and testing ML models. Superior datasets ensure:

Model Accuracy: The more variation a dataset has in accents, speaking styles, and background noise, the better the model generalizes to realistic scenarios.

Language Coverage: Multilingual datasets help build speech recognition systems catering to a global audience.

Noise Robustness: Data with noisy samples makes the model more robust, so the ASR system responds more reliably even in poor conditions.

Innovation: Open-source datasets inspire new research and innovation in ASR technology.

Key Features of a Good Speech Recognition Dataset

A good speech recognition dataset should have the following characteristics:

Diversity: It should contain a wide variety of speakers, accents, and dialects.

High-Quality Audio: Clear, high-fidelity recordings with minimal distortions.

Annotations: Time-aligned transcriptions, speaker labels, and other metadata.

Noise Variations: Samples with varying levels of background noise to train noise-resilient models.

Scalability: Sufficient data volume for training complex deep learning models.

Case Study: LibriSpeech Dataset

One such well-known speech recognition dataset is LibriSpeech, an open-source corpus used extensively within the ASR community. Below is an overview of its features and impact:

Overview of LibriSpeech

LibriSpeech is a large corpus derived from public domain audiobooks. The dataset includes around 1,000 hours of audio recordings along with transcriptions, making it one of the most widely used datasets for ASR research and applications.

Key Characteristics

Diverse Speakers: Covers a large number of speakers of diverse genders, ages, and accents.

Annotated Data: Every audio sample comes with a good-quality, aligned transcript.

Clean Recordings: Many of the speech samples are clean, high-quality recordings well suited for use as training input.

Open-Source Accessibility: The corpus is freely available to researchers worldwide.

Applications of LibriSpeech

Training ASR Models: LibriSpeech has been used to train many ASR systems, including open-source projects like Mozilla DeepSpeech.

Benchmarking: LibriSpeech is a standard benchmark dataset that allows fair comparison of model performance across different algorithms.

Transfer Learning: Models pretrained on LibriSpeech generally perform well when fine-tuned for domain-specific tasks.
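Benchmark comparisons on corpora like this are usually reported as word error rate (WER). The post does not name a metric, so treat the choice of WER here as an assumption. Below is a minimal sketch that computes it with a word-level edit distance; the function name and the sample sentences are illustrative.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# One word ("the") is missing from the hypothesis: 1 error over 6 words.
wer = word_error_rate("the cat sat on the mat", "the cat sat on mat")
print(round(wer, 3))
```

Real evaluations normally also normalize punctuation and numerals before scoring; that step is left out here to keep the sketch short.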

Applications of Speech Recognition Datasets in ML

Speech recognition datasets are used to power a wide range of machine learning applications, including:

Voice Assistants: Datasets train systems like Alexa, Siri, and Google Assistant to understand and respond to user commands.

Transcription Services: High-quality datasets enable accurate conversion of speech to text for applications like Otter.ai and Rev.

Language Learning Tools: Speech recognition models enhance pronunciation feedback and language learning experiences.

Accessibility Tools: Assistive technologies such as real-time captioning and screen readers rely on strong speech models.

Customer Support Automation: ASR-based systems streamline call center operations by transcribing and analyzing customer interactions.

Conclusion

The development of effective speech recognition systems depends on the availability and quality of datasets. Datasets such as LibriSpeech have set benchmarks for the industry, allowing researchers and developers to push the boundaries of what ASR technology can achieve. As machine learning applications continue to evolve, so will the demand for diverse, high-quality speech recognition datasets.

Harnessing the power of these datasets is the key to building more inclusive, accurate, and efficient speech recognition systems that transform the way we interact with technology. GTS AI is committed to driving innovation by providing state-of-the-art solutions and insights into the world of AI-driven speech recognition.

Text

The Ultimate Guide to Audio Datasets for Machine Learning

Introduction

Machine learning (ML) has revolutionized the way we interact with technology, and Audio Datasets are at the heart of many groundbreaking applications. From voice assistants to real-time language translation, these datasets enable machines to understand and process audio data effectively. In this comprehensive guide, we'll explore the importance of audio datasets, their types, popular sources, and best practices for leveraging them in your ML projects.

What Are Audio Datasets?

Audio datasets are sets of audio files, which come with metadata including transcripts, information about the speakers, or even labels. They can be used as a training set for machine learning models, which, in turn, can learn patterns, process speech, and generate sound.

Why Are Audio Datasets Important for Machine Learning?

Training Models: For the training of accurate and reliable ML models, high-quality datasets are necessary.

Increasing Accuracy: Models are more robust across different usage scenarios with the use of diverse and well-labeled datasets.

Real-World Applications: These datasets can be used to build voice assistants, automatic transcription tools, and much more.

Advancements in Research: Datasets open to the public catalyze innovation and collaboration within the ML community.

Types of Audio Datasets

Speech Datasets:

Consist of recordings of human speech.

Applications: Speech-to-text, virtual assistants, and language modeling.

Music Datasets:

Include music tracks, genres, and annotations.

Applications: Music recommendation systems, genre classification, and audio synthesis.

Environmental Sound Datasets:

Comprise natural or urban soundscapes, for instance, rain, traffic, or birdsong.

Applications: Smart home devices, sound event detection.

Emotion Datasets:

Capture emotions expressed in speech or sound.

Applications: Sentiment analysis, customer service bots.

Custom Datasets:

Are customized for specific use cases or niche applications.

Applications: Industry-specific tools and AI models.

Best Practices for Using Audio Datasets

Understand Your Use Case: Identify the type of dataset needed based on your project goals.

Data Preprocessing: Clean and normalize audio files to ensure consistent quality.

Data Augmentation: Enhance datasets by adding noise, altering pitch, or applying time-stretching.

Label Accuracy: Ensure that annotations and labels are precise for effective training.

Ethical Considerations: Respect privacy and copyright laws when using audio data.

Diversity Matters: Use datasets with varied accents, languages, and audio conditions for robust model performance.
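The preprocessing and augmentation steps above can be sketched with the standard library alone. The example below covers only peak normalization and additive noise (pitch shifting and time-stretching need a dedicated audio library and are not shown). The function names, the 0.9 target peak, the 20 dB signal-to-noise ratio, and the synthetic tone are all assumptions for illustration.

```python
import math
import random


def peak_normalize(samples, target_peak=0.9):
    """Scale samples so the largest absolute value equals target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # silence or empty input: nothing to scale
    scale = target_peak / peak
    return [s * scale for s in samples]


def add_noise(samples, snr_db, rng):
    """Add Gaussian noise at approximately the requested SNR (in dB)."""
    if not samples:
        return []
    signal_power = sum(s * s for s in samples) / len(samples)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [s + rng.gauss(0.0, sigma) for s in samples]


# One second of a synthetic 440 Hz tone at 16 kHz, standing in for real audio.
sample_rate = 16000
tone = [0.3 * math.sin(2 * math.pi * 440 * n / sample_rate)
        for n in range(sample_rate)]

normalized = peak_normalize(tone)
noisy = add_noise(normalized, snr_db=20, rng=random.Random(0))
```

Passing in a seeded `random.Random` keeps the augmentation reproducible, which makes it easier to debug a training run later.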

How Audio Datasets Drive Speech Data Collection

Audio datasets play a significant role in speech data collection services. Most services include the following:

Crowdsourcing Speech Data: Collecting recordings from a wide range of speakers.

Annotating Audio: Adding transcripts, emotion tags, or speaker identification.

Custom Dataset Creation: Creating datasets specifically designed for a particular AI application.

Challenges in Working with Audio Datasets

Quality Control: Ensuring audio recordings are free of noise and distortion.

Scalability: Handling huge datasets during training within a reasonable amount of time.

Bias and Representation: Avoiding the over-representation of a particular accent or type of sound.

Storage Requirements: Managing massive storage requirements with high-resolution audio files.

Conclusion

Audio datasets form the core of many cutting-edge machine learning applications. Understanding their types, sources, and best practices helps you use their power to create smarter and more accurate models. Whether you are developing a voice assistant or advancing speech recognition technology, the right audio dataset is the first step to your success.

Begin your journey by exploring varied audio datasets or by using expert speech data collection services for your project's unique needs.

Text

Text-to-Speech Dataset: Fueling Conversational AI

Introduction

In the rapidly advancing world of artificial intelligence (AI) and machine learning (ML), Text-to-Speech (TTS) datasets have become essential for developing voice synthesis technologies. Building them involves gathering, annotating, and organizing audio and textual data to train AI models to convert written text into natural-sounding speech. As organizations push the boundaries of innovation, TTS datasets play a significant role in creating systems that enhance accessibility, user experience, and automation.

What is a Text-to-Speech Dataset?

A text-to-speech dataset is a collection of paired audio recordings and their corresponding textual transcriptions. These datasets are crucial for training AI models to generate lifelike speech from text, capturing nuances such as tone, pitch, and emotion. From virtual assistants to audiobook narrators, TTS datasets form the backbone of many modern applications.

Why is a Text-to-Speech Dataset Important?

Improved AI Training: High-quality TTS datasets ensure AI models achieve precision in voice synthesis tasks.

Support for Multilingual Capabilities: TTS datasets enable the development of systems that support multiple languages, accents, and dialects.

Scalable Solutions: By leveraging robust datasets, organizations can deploy TTS-based applications across industries such as entertainment, education, and accessibility.

Key Features of Text-to-Speech Dataset Services

Customizable Datasets: Tailored TTS datasets are created to meet the specific requirements of different AI applications.

Diverse Audio Sources: TTS datasets include varied content such as professional voice recordings, natural conversations, and scripted dialogues.

Accurate Annotations: Precise alignment of text and audio ensures high-quality training, enhancing the performance of TTS systems.

Compliance and Privacy: Ethical practices and privacy compliance are prioritized to ensure secure data collection.
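One rough way to spot text and audio pairs that are out of alignment is to check whether the implied speaking rate is plausible. The sketch below assumes a hypothetical manifest of (audio path, transcript, duration) records; the `TtsPair` type, the characters-per-second bounds, and the sample entries are illustrative assumptions and would need tuning per language and voice.

```python
from dataclasses import dataclass


@dataclass
class TtsPair:
    audio_path: str
    transcript: str
    duration_s: float


def find_suspect_pairs(pairs, min_cps=5.0, max_cps=25.0):
    """Return (pair, reason) tuples for entries whose text and audio
    look mismatched, judged by characters spoken per second."""
    suspects = []
    for pair in pairs:
        text = pair.transcript.strip()
        if not text:
            suspects.append((pair, "empty transcript"))
        elif pair.duration_s <= 0:
            suspects.append((pair, "non-positive duration"))
        else:
            chars_per_second = len(text) / pair.duration_s
            if not (min_cps <= chars_per_second <= max_cps):
                suspects.append(
                    (pair, f"speaking rate {chars_per_second:.1f} chars/s out of range")
                )
    return suspects


# Hypothetical manifest entries.
manifest = [
    TtsPair("a.wav", "Hello, how are you today?", 1.8),
    TtsPair("b.wav", "Yes.", 6.0),  # far too little text for six seconds
    TtsPair("c.wav", "", 2.0),      # transcript missing
]
suspect_paths = [pair.audio_path for pair, _ in find_suspect_pairs(manifest)]
print(suspect_paths)
```

A check like this only flags candidates for human review; it cannot confirm that a transcript actually matches what was spoken.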

Applications of Text-to-Speech Datasets

Virtual Assistants: Powering conversational AI in devices like smartphones, smart speakers, and chatbots.

Accessibility Tools: Enabling visually impaired users to access written content through natural-sounding speech.

E-Learning: Enhancing online education platforms with engaging audio narration.

Entertainment: Creating lifelike voiceovers for games, animations, and audiobooks.

Challenges in Text-to-Speech Dataset Collection

Collecting high-quality TTS datasets comes with challenges such as capturing diverse voice styles, ensuring data quality, and addressing linguistic complexities. Collaborating with experienced dataset providers can help overcome these hurdles by offering tailored solutions.

Conclusion

Text-to-speech datasets are a cornerstone of conversational AI, empowering organizations to develop advanced systems that generate realistic and expressive speech. By leveraging high-quality datasets, businesses can drive progress and unlock new opportunities in accessibility, automation, and user engagement. Embracing TTS datasets ensures staying ahead in the competitive landscape of AI innovation. Explore text-to-speech dataset services today to fuel the next generation of voice technologies.

Visit Here: https://gts.ai/services/speech-data-collection/

Text

Audio Annotation Companies: Pioneers in Sound Data Labeling

Introduction:

In the ever-evolving landscape of artificial intelligence (AI) and machine learning (ML), the significance of labeled data is paramount. While considerable focus has been placed on image and text data, audio data is swiftly emerging as a vital component in the creation of effective AI systems. Central to this evolution are Audio Annotation Companies, the often-overlooked trailblazers in the realm of sound data labeling.

The Emergence of Audio Data

As voice-activated devices, intelligent assistants, and automated customer service platforms become increasingly common, the prevalence of audio data has surged. From podcasts and voice messages to recordings from call centers, the volume of audio data generated on a daily basis is astonishing. For AI systems to accurately interpret and learn from this information, it must be carefully labeled, which is where audio annotation companies play a crucial role.

Understanding Audio Annotation

Audio annotation refers to the process of labeling audio data to render it comprehensible for AI models. This process may encompass:

Transcription: Transforming spoken language into written text.

Speaker Identification: Recognizing and differentiating between various speakers within an audio file.

Emotion Detection: Assessing the emotional tone conveyed by the speaker.

Sound Classification: Categorizing distinct sounds, such as background noise, music, or specific actions.

The Importance of Audio Annotation Companies

Expertise in Detail: The task of annotating audio data is intricate, necessitating an understanding of subtleties such as accents, dialects, and contextual elements. Audio annotation companies employ experts who guarantee high-quality and precise labeling.

Scalability: Organizations managing extensive volumes of audio data require scalable solutions. Audio annotation companies offer the necessary workforce and tools to efficiently process large datasets.

Quality Assurance: The accuracy of annotations is essential. These companies implement stringent quality control measures to uphold the integrity of the data, which directly influences the efficacy of AI models.

Time Efficiency: By delegating annotation tasks to external providers, organizations can concentrate on their primary functions while ensuring that their audio data is processed swiftly and effectively.

Applications of Audio Annotation

The contributions of audio annotation firms have significant implications across multiple sectors:

Healthcare: Improving patient care through the implementation of voice-activated health monitoring systems.

Customer Service: Enhancing customer engagement with more proficient virtual assistants.

Security: Strengthening surveillance systems through advanced sound recognition technologies.

Media and Entertainment: Facilitating content creation and organization via sound classification and transcription services.

Choosing the Right Audio Annotation Company

When selecting an audio annotation provider, organizations should take into account:

Experience and Expertise: Seek out companies with a solid history of managing a variety of audio datasets.

Technology and Tools: Verify that the company employs cutting-edge tools and software for effective annotation.

Customization: The capability to adapt services to meet specific project requirements is crucial.

Data Security: The protection and confidentiality of data must be a primary concern.

Conclusion

Audio annotation companies are instrumental in advancing sophisticated and nuanced AI applications by converting raw audio data into valuable, labeled datasets. As the need for audio-driven AI increases, these leaders in sound data labeling will remain essential in shaping the future of artificial intelligence.

Text

Struggling with Data Labeling? Try These Image Annotation Services

Introduction:

In the era of artificial intelligence and machine learning, data is the driving force. However, raw data alone isn’t enough; it needs to be structured and labeled to be useful. For businesses and developers working on AI models, especially those involving computer vision, accurate image annotation is crucial. But data labeling is no small task. It’s time-consuming, resource-intensive, and requires a meticulous approach.

If you’ve been struggling with data labeling, you’re not alone. The good news is that professional image annotation services can make this process seamless and efficient. Here’s a closer look at why data labeling is challenging, the importance of image annotation, and the best services to help you get it done.

The Challenges of Data Labeling

Time-Consuming Process

Labeling thousands or even millions of images can take an enormous amount of time, delaying project timelines and slowing innovation.

High Cost of In-House Teams

Building and maintaining an in-house team for data labeling can be costly, especially for small and medium-sized businesses.

Need for Precision

AI models require accurate and consistent labels. Even minor errors in annotation can significantly impact the performance of your AI systems.

Scaling Issues

As your dataset grows, so do the challenges of managing, labeling, and ensuring quality control at scale.

The Importance of Image Annotation

Image annotation involves adding metadata or labels to images, helping AI systems understand what’s in a picture. These annotations are used to train models for tasks such as:

Object detection

Image segmentation

Facial recognition

Autonomous driving systems

Medical imaging analysis

Without proper annotation, AI models cannot interpret visual data effectively, leading to inaccurate predictions and unreliable outputs.
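For object detection labels, one common way to check consistency between two annotators is intersection over union (IoU) on their bounding boxes. The sketch below is illustrative: the `Box` type, its field names, and the sample coordinates are assumptions rather than the format of any specific annotation tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self):
        width = max(0.0, self.x_max - self.x_min)
        height = max(0.0, self.y_max - self.y_min)
        return width * height


def iou(a, b):
    """Intersection over union of two axis-aligned boxes (0.0 to 1.0)."""
    overlap_w = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    overlap_h = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    intersection = overlap_w * overlap_h
    union = a.area() + b.area() - intersection
    return intersection / union if union > 0 else 0.0


# Two annotators drew slightly different boxes around the same car.
annotator_1 = Box("car", 10, 10, 110, 60)
annotator_2 = Box("car", 20, 15, 120, 65)
agreement = iou(annotator_1, annotator_2)
print(round(agreement, 3))
```

Teams often pick an IoU threshold below which a pair of boxes is sent back for review; the right value depends on the object size and the task.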

Top Image Annotation Services to Streamline Your Projects

If you’re ready to take your AI projects to the next level, here are some top-notch image annotation services to consider:

Globose Technology Solutions

Globose Technology Solutions offers a range of high-quality image and video annotation services tailored to various industries, including healthcare, retail, and automotive. With a focus on precision and scalability, they ensure your data labeling needs are met efficiently.

Key Features:

Bounding boxes, polygons, and semantic segmentation

Annotation for 2D and 3D data

Scalable solutions for large datasets

Affordable pricing plans

Scale AI

Scale AI provides a comprehensive suite of data annotation services, including image, video, and text labeling. Their platform combines human expertise with machine learning tools to deliver high-quality annotations.

Key Features:

Rapid turnaround times

Detailed quality assurance

Customizable annotation workflows

Labelbox

Labelbox is a popular platform for managing and annotating datasets. Its intuitive interface and robust toolset make it a favorite for teams working on complex computer vision projects.

Key Features:

Integration with ML pipelines

Flexible annotation tools

Collaboration-friendly platform

CloudFactory

CloudFactory specializes in combining human intelligence with automation to deliver precise image annotations. Their managed workforce is trained to handle intricate labeling tasks with accuracy.

Key Features:

Workforce scalability

Specialized training for annotators

Multilingual support

Amazon SageMaker Ground Truth

Amazon’s SageMaker Ground Truth is a powerful tool for building labeled datasets. It uses machine learning to automate annotation and reduce manual effort.

Key Features:

Active learning integration

Pay-as-you-go pricing

Automated labeling workflows

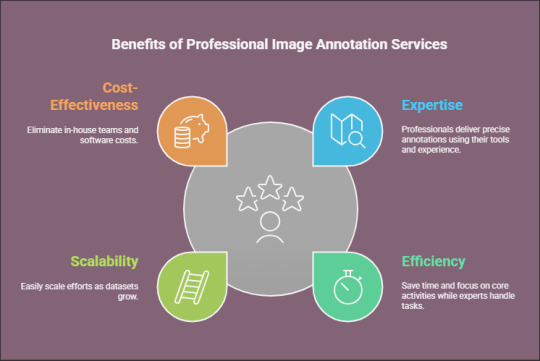

Why Choose Professional Image Annotation Services?

Outsourcing your image annotation tasks offers several benefits:

Expertise: Professionals have the tools and experience to deliver precise annotations.

Efficiency: Save time and focus on your core business activities while experts handle the data labeling.

Scalability: Easily scale your annotation efforts as your dataset grows.

Cost-Effectiveness: Eliminate the need for in-house teams and costly software investments.

Conclusion

Data labeling doesn’t have to be a bottleneck for your AI projects. By leveraging professional image annotation services like Globose Technology Solutions and others, you can ensure your models are trained on high-quality, accurately labeled datasets. This not only saves time and resources but also enhances the performance of your AI systems.

So, why struggle with data labeling when you can rely on experts to do it for you? Explore the services mentioned above and take the first step toward seamless, efficient, and accurate image annotation today.

Text

Data Annotation Companies: Which One is Right for Your Business?

Introduction

In the age of artificial intelligence (AI) and machine learning (ML), the caliber of data frequently dictates the success of a project. Data annotation is essential in ensuring that AI algorithms function optimally by supplying them with precisely labeled datasets for training purposes. Although numerous organizations recognize the significance of annotated data, selecting the appropriate data annotation company can prove to be a challenging endeavor. This guide aims to assist you in navigating the decision-making process and identifying the ideal partner to meet your business requirements.

Understanding Data Annotation Companies

Data annotation firms focus on the enhancement of datasets for artificial intelligence and machine learning initiatives by incorporating metadata, labels, and annotations into unprocessed data. Their offerings generally encompass:

Image Annotation: Identifying and labeling objects within images for tasks related to computer vision, including object detection and image recognition.

Video Annotation: Marking video frames for uses such as autonomous vehicle navigation and surveillance systems.

Text Annotation: Organizing textual information for natural language processing (NLP) by tagging entities, sentiments, and intents.

Audio Annotation: Analyzing audio recordings for applications in speech recognition and sound classification.

These organizations guarantee the accuracy, consistency, and scalability of your training data, thereby allowing your AI systems to operate with precision.

Factors to Consider When Choosing a Data Annotation Company

Choosing an appropriate data annotation partner necessitates careful assessment of several important factors:

Expertise and Specialization

It is essential to select a company that focuses on the specific type of annotation required for your project. For example, if your project involves annotated video data for training autonomous vehicles, opt for a provider with a demonstrated track record in video annotation.

Scalability

AI initiatives frequently demand extensive datasets. Verify that the company is capable of managing the scale of your project and can deliver results within your specified timelines while maintaining high quality.

Quality Assurance Processes

The accuracy of annotations is vital for the performance of AI systems. Inquire about the company’s quality control measures and the tools they employ to reduce errors.

Turnaround Time

Assess the organization's capability to adhere to deadlines. Delays in delivery can disrupt your project schedule and escalate expenses.

Security and Compliance

The protection of data is crucial, particularly when handling sensitive information. Select a company that complies with international data security standards and regulations, such as GDPR or HIPAA.

Cost Efficiency

Although cost is a significant consideration, it is essential not to sacrifice quality for lower prices. Evaluate various pricing structures and identify a provider that strikes a balance between cost-effectiveness and quality.

Technology and Tools

Utilizing advanced tools and automation technologies can improve the precision and efficiency of data annotation. Choose companies that employ the most recent annotation platforms and AI-driven tools.

Leading Data Annotation Providers

When seeking an appropriate partner, it is advisable to consider reputable firms such as GTS.AI (Globose Technology Solutions). With a significant emphasis on image and video annotation services, the company has established itself as a dependable option for organizations in need of high-quality, scalable solutions. Its proficiency spans various sectors, with customized services for a wide range of AI applications.

Evaluating Potential Partners

Request Case Studies: Examine previous project examples to evaluate their level of expertise.

Trial Projects: Initiate a small-scale trial to assess their quality, communication, and efficiency.

Client References: Contact current or former clients to determine satisfaction levels.

Customization Options: Confirm their ability to tailor services to meet your specific project needs.
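A trial project is easier to judge with numbers. One possible approach, sketched below, is to score the vendor's trial labels against a small gold set you have labeled yourself. The function name `score_trial`, the item IDs, and the sentiment labels are hypothetical.

```python
from collections import Counter


def score_trial(gold, submitted):
    """Compare vendor labels with gold labels on the items both contain."""
    shared = gold.keys() & submitted.keys()
    if not shared:
        raise ValueError("no overlapping items to score")

    confusions = Counter()
    correct = 0
    for item_id in shared:
        if submitted[item_id] == gold[item_id]:
            correct += 1
        else:
            # Track (expected, received) pairs to see systematic mistakes.
            confusions[(gold[item_id], submitted[item_id])] += 1

    return {
        "accuracy": correct / len(shared),
        "missing": sorted(gold.keys() - submitted.keys()),
        "confusions": confusions.most_common(),
    }


# Hypothetical gold labels and a vendor's trial submission.
gold_labels = {"t1": "positive", "t2": "negative", "t3": "neutral", "t4": "negative"}
vendor_labels = {"t1": "positive", "t2": "negative", "t3": "negative"}

report = score_trial(gold_labels, vendor_labels)
print(report)
```

The confusion pairs are often more useful than the headline accuracy, because they show whether errors come from unclear guidelines or from careless work.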

Conclusion

Selecting an appropriate data annotation company is an essential phase in the development of a successful AI or ML model. By assessing providers according to their expertise, scalability, quality assurance, and technological capabilities, you can ensure that your project is supported by the high-quality data it requires. Consider firms such as Globose Technology Solutions, which integrate cutting-edge tools with industry knowledge to produce outstanding outcomes.

Partnering with the right organization at this stage will conserve time and resources and mitigate potential challenges in the future, thereby maximizing the effectiveness of your AI initiatives.


Collection of Video Data for AI Training in Both Indoor and Outdoor Environments

Introduction

In the swiftly changing domain of artificial intelligence (AI) and machine learning (ML), the necessity for high-quality, varied, and precisely labeled video datasets has reached unprecedented levels. These datasets form the cornerstone for training models that drive applications from self-driving cars to sophisticated medical diagnostics. Video data collection services are essential in assembling these datasets, guaranteeing that AI systems function effectively and reliably.

The Significance of Video Data in AI and ML

Video data provides a rich source of information, capturing both temporal dynamics and spatial details that static images cannot convey. This depth allows AI models to understand complex activities, track object movements, and identify patterns over time. For example, in autonomous driving, analyzing video sequences enables vehicles to predict pedestrian actions and adapt to changing road conditions. Likewise, in healthcare, video analysis can support the observation of patient behaviors and the real-time detection of anomalies.

Challenges in Video Data Collection

The process of gathering video data for AI applications entails several challenges:

Volume and Storage: Video files are considerably larger than images or text, requiring extensive storage solutions and effective data management strategies.

Annotation Complexity: The labeling of video data is more complex due to its temporal nature, necessitating frame-by-frame annotation to accurately capture subtleties in movement and interactions.

Privacy Concerns: Recording in both public and private settings raises ethical and legal dilemmas, particularly when individuals can be identified. Adhering to privacy regulations is of utmost importance.

Diversity and Bias: It is essential to create a diverse dataset that encompasses a variety of scenarios, environments, and demographics to mitigate biases in AI models.
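
The volume and annotation challenges above can be sized with simple arithmetic before a collection project starts. The following is a rough sketch under stated assumptions: the function name, the constant-bitrate model, and the example figures (10 hours, 8 Mbps, 30 fps) are illustrative and do not come from any specific project.

```python
def estimate_video_workload(hours, bitrate_mbps, fps, label_every_n_frames=1):
    """Estimate storage and annotation volume for a video collection.

    Assumes a constant average bitrate (in megabits per second) and
    decimal units (1 GB = 1000 MB). Returns a dict with storage in GB,
    the total frame count, and the number of frames to label when only
    every n-th frame is annotated.
    """
    if label_every_n_frames < 1:
        raise ValueError("label_every_n_frames must be at least 1")

    seconds = hours * 3600
    # megabits -> megabytes (divide by 8) -> gigabytes (divide by 1000)
    storage_gb = seconds * bitrate_mbps / 8 / 1000
    total_frames = int(seconds * fps)
    # Ceiling division: labeling starts at frame 0 and skips n-1 frames.
    frames_to_label = -(-total_frames // label_every_n_frames)

    return {
        "storage_gb": storage_gb,
        "total_frames": total_frames,
        "frames_to_label": frames_to_label,
    }


if __name__ == "__main__":
    # Hypothetical project: 10 hours of footage at 8 Mbps and 30 fps,
    # with every 10th frame sent for manual annotation.
    print(estimate_video_workload(10, 8, 30, label_every_n_frames=10))
```

Labeling only every n-th frame and interpolating between keyframes is a common way to reduce annotation cost, though fast-moving scenes may need denser labeling.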

GTS: Pioneering Excellence in Video Dataset Acquisition

Globose Technology Solutions (GTS) addresses the challenges of video data collection with services designed for artificial intelligence (AI) and machine learning (ML) applications. Its methodology includes systematic recording, thorough analysis, and accurate labeling, converting raw footage into datasets that machines can interpret. With operations in over 89 countries and more than 300 completed projects, GTS aims for a high standard of quality and diversity in its data collection initiatives.

Varied Categories of Video Collection

GTS possesses a broad range of expertise across multiple video collection categories, each tailored to meet distinct industry needs:

Human & Medical Video Collections: This category involves capturing human postures, medical procedures (such as endoscopy), and interactions during meetings or virtual conferences. These datasets are crucial for the advancement of healthcare applications and models of human-computer interaction.

Construction & Industrial Safety Collections: This involves documenting safety protocols, equipment usage, and potential hazards within industrial environments. The data collected is instrumental in training AI systems for risk assessment and monitoring safety compliance.

Transportation & Public Safety Collections: This category focuses on recording traffic patterns, public transportation usage, and emergency response scenarios, thereby enhancing urban planning, traffic management, and public safety efforts.

Specialized Video Collections: These are customized datasets that concentrate on specific areas such as agricultural monitoring, environmental conservation, and wildlife tracking, facilitating AI applications in sustainability and ecological research.

Retail & Customer Behavior Collections: This involves analyzing customer movements, product interactions, and queue formations in retail settings to optimize store layouts and enhance customer experiences.

Educational & Classroom Collections: This category observes classroom interactions, teaching methodologies, and student engagement, contributing to the development of AI tools that support educational research and personalized learning.

Entertainment and Media Collections: Assembling footage from performances, sporting events, and media productions to facilitate AI training in content creation, editing, and audience analysis.

Residential and Smart Home Collections: Observing daily activities in smart homes to improve home automation systems, security features, and energy efficiency solutions.

Tourism and Travel Collections: Analyzing tourist behaviors, interactions with landmarks, and travel patterns to enhance AI-driven tourism services and destination management.

Sports and Fitness Training Collections: Capturing athletic performances, training sessions, and fitness routines to advance AI applications in coaching, performance analysis, and injury prevention.

Cultural and Social Event Collections: Recording festivals, ceremonies, and social gatherings to safeguard cultural heritage and examine social dynamics through AI.

Aeronautics and Space Research Collections: Collecting video data from aerospace experiments, simulations, and missions to bolster AI research in aviation and space exploration.

Ensuring Quality and Compliance

GTS utilizes sophisticated tools and methodologies to guarantee the highest standards in video data collection:

Video Dataset Collection Tool: A proprietary platform designed to enable accurate collection and annotation, ensuring datasets align with specific project requirements.

Privacy Measures: Enforcing protocols to anonymize individuals and adhere to global privacy regulations, addressing ethical considerations in data collection.

Diversity Strategies: Acquiring data from diverse regions and demographics to create well-rounded datasets, reducing biases in AI model training.
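
A diversity strategy is easier to enforce when coverage is measured. The sketch below assumes each clip carries a metadata dictionary with a field such as `region`; the field name, the `min_share` threshold, and the function name are hypothetical and are not a description of the GTS tool.

```python
from collections import Counter


def underrepresented(clips, key, min_share):
    """Return {value: share} for metadata values below min_share.

    clips is a list of metadata dicts, key is the field to audit
    (for example "region"), and min_share is a fraction between 0 and 1.
    """
    counts = Counter(clip[key] for clip in clips)
    total = sum(counts.values())
    return {
        value: count / total
        for value, count in counts.items()
        if count / total < min_share
    }


if __name__ == "__main__":
    # Hypothetical metadata for ten collected clips.
    clips = (
        [{"region": "EU"}] * 6
        + [{"region": "APAC"}] * 3
        + [{"region": "LATAM"}] * 1
    )
    print(underrepresented(clips, "region", min_share=0.2))
```

This check only flags values that appear at least once. Categories that are missing entirely have to be compared against a list of expected values.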

The Future of Video Data Collection

As AI continues to permeate various industries, the demand for specialized and high-quality video datasets is expected to increase. Video data collection services, such as those provided by GTS, are crucial in fulfilling this need, laying the groundwork for AI systems that are precise, ethical, and effective.

Conclusion

Video data collection services play a crucial role in the progression of artificial intelligence and machine learning applications. By tackling the fundamental challenges and guaranteeing the acquisition of varied, high-quality data, organizations such as Globose Technology Solutions are facilitating innovations that will influence the future across various sectors.
