#do I want that data fed into advertisers' algorithms so they can tell what sort of stuff I like to buy?

Text

It's a bit weird typing out a full post here on Tumblr. I used to be one of those artists who mostly posted only images; the fewer opinions/thoughts I shared, the better. Today, the art world online feels weird, not only because of AI, but also because of the algorithms on every platform and the general way our craft is getting replaced for close to 0 dollars.

This website was a huge instrument in kickstarting my career as a professional artist. It was an inspiring place where artists shared their art and where we could make friends with anyone in the world, in any industry. It was pretty much the place that paved the way as a social media website outside of Facebook, where you could search art through tags etc.

Anyhow, Tumblr still has a place in my heart even if all artists moved away from it after the infamous NSFW ban (mostly to Instagram and Twitter).

And now we're all playing a game of whack-a-mole trying to figure out if the social media platform we're using is going to sell their user content to AI / deep learning (looking at you Reddit, going into stocks). On the Tumblr side, Matt Mullenweg's interviews and thoughts on the platform show he's down to use AI, and I guess it could help create posts faster but then again, you have to click through multiple menus to protect your art (and writing) from being scraped. It's really kind of sad to have to be on the defensive with posting art/writing online. It doesn't even reflect my personal philosophy on sharing content.

I've always been a bit of a "punk" thinking if people want to bootleg my work, it's like free advertisement and a testament to people liking what I created, so I've never really watermarked anything and posted fairly high-res versions of my work. I don't even think my art is big enough to warrant the defensiveness of glazing/nightshading it, but the thought of it going through a program to be ground into a data mush, only to be excreted out as the ghost of its former self, is honestly sort of deadening.

Finally, the most defeating trend is the quantity of nonsense and low-quality content that's being fed to the internet, made a million times easier with the use of AI. I truly feel like we're living what Neil Postman saw happening over 40 years ago in "Amusing Ourselves to Death" (the brightness of this man's mind is still unrivaled in my eyes).

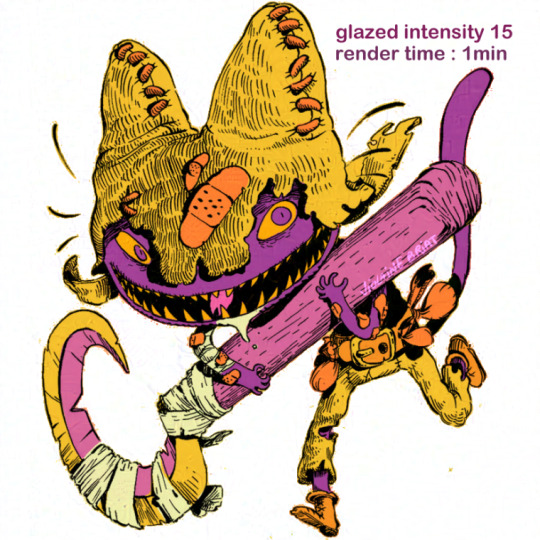

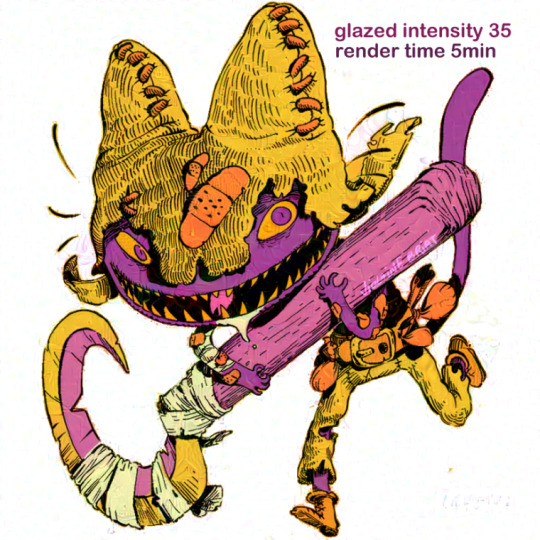

I guess this is my big rant to tell y'all now I'm gonna be posting crunchy art because Nightshade and Glaze basically make your crispy art look like a low-res JPEG, and I feel like an idiot for doing it but I'm considering it an act of low effort resistance against data scraping. If I can help "poison" data scraping by wasting 5 minutes of my life to spit out a crunchy jpeg before posting, listen, it's not such a bad price to pay.

Anyhow check out my new sticker coming to my secret shop really soon, and how he looks before and after getting glazed haha....

295 notes

Text

Genuinely don't want to get too deep into the whole AI art controversy because - as I mentioned in the tags of that post I reblogged before - I'm personally familiar with the issue in that I build AI as part of my job, but I'm not personally familiar with the issue in that I am not someone who relies on making art to survive (I do art as a hobby but obviously the artists who have more to fear from the development of AI like this are the ones who, you know, do it for a living).

I definitely see why people are concerned and in general I'd say this issue plays into the wider issue of "what happens if a robot/AI/etc takes my job" which is, I suppose, something we as a society have been facing since... the Industrial Revolution? Thereabouts? And it's not my place to speak on that since I haven't done thorough research on the matter, but I certainly understand the concern - hell, there already exist AIs that can write working code, for all I know my job could be at risk one day.

That being said, on a professional level I'm irked at the person who wrote "AI IS EVIL" in the notes of a post on the matter that I saw.

AI is a tool, my dude. Nothing more, nothing less. Whether it's used for good or evil depends wholly on the people who make it and the people who use it.

#I'm not that surprised by that outlook cause most of the AI we hear about nowadays is in fact being used for evil #like. you know. basically every aspect of most social media AI #but an anomaly detection algorithm that monitors your bank account and alerts you of an out-of-the-ordinary transaction that may be fraud? #as long as the data it gathers on my bank account isn't being used for anything else or sold to anyone I'm ok with that #like - your transaction history is fully recorded anyway #that's not gonna change and the presence or absence of AI will not prevent the bank from using that data #for whatever purposes it might have #do I want that data fed into advertisers' algorithms so they can tell what sort of stuff I like to buy? #of course not #but if that were to happen it'd be a problem with the PEOPLE who chose to use + sell my data not a problem with the existence of AI itself #a hammer is not a bad thing just because someone can use it to bash a person's head in #anyhow truth be told that's just my pet peeve and this is just a vent post #not gonna try and go after that person or anything because they have a right to be concerned + upset and I don't wanna be a jerk #just venting my professional annoyance

16 notes

Text

Week 4, Post 2

The article "Three reasons junk news spreads so quickly across social media" from the Oxford Internet Institute mentions algorithms, advertising, and exposure.

Although algorithms can be somewhat useful for helping me view things I might like, they are kind of invasive. They also bring up false information often and most people do not care to fact check.

Advertising is used to collect my data and sell me things in order for someone to profit off me. It honestly works wonders because I lack self-control and the advertisers are great at making me feel like I need something. I joke around and tell people I say certain things I need around my phone so it will find them for me. It is all fun and games, except for the fact that it is actually listening and does find an ad for what I tell it.

Exposure is something I see every single day. Especially with the last election, I feel exposure is the reason people were getting so hostile. People were being fed information that may not even be true based on what the internet thought they wanted to see. This made people feel much stronger about politics and I feel is the reason there was so much hatred going on in the last election. For example, I was reading a Facebook post of two people arguing over political views and they were both using articles from sources that were not reputable to support their claims.

Businesses can profit from being aware of all the ways they can market to people using algorithms, advertising, and exposure. Almost every social media platform provides some sort of option to utilize these tools. People such as myself can start to cut back on social media if we do not want it to know our business.

1 note

Text

13 Reasons Your Website Can Have a High Bounce Rate

New Post has been published on http://tiptopreview.com/13-reasons-your-website-can-have-a-high-bounce-rate/

It’s a question asked on Reddit and Twitter every day.

It makes the shoulders of online marketers tense up and makes analysts frown with concern.

You look at your analytics, eyes wide, and find yourself asking, “Why do I have such a high bounce rate?”

What Is a Bounce Rate?

As a refresher, Google refers to a “bounce” as “a single-page session on your site.”

Bounce rate refers to the percentage of visitors that leave your website (or “bounce” back to the search results or referring website) after viewing only one page on your site.

This can even happen when a user idles on a page for more than 30 minutes.
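To make the definition concrete, here is a minimal sketch of the calculation, assuming each session is recorded simply as the number of pages viewed. The `Session` class and the sample data are hypothetical illustrations, not how Google Analytics stores or computes the figure.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One visit to the site (hypothetical record, for illustration only)."""
    pages_viewed: int


def bounce_rate(sessions: list[Session]) -> float:
    """Return the percentage of sessions that viewed exactly one page."""
    if not sessions:
        return 0.0  # assumption: report 0% when there is no traffic at all
    bounces = sum(1 for s in sessions if s.pages_viewed == 1)
    return 100.0 * bounces / len(sessions)


# Hypothetical sample: 3 of the 5 sessions saw only one page.
sample = [Session(1), Session(3), Session(1), Session(2), Session(1)]
print(f"{bounce_rate(sample):.1f}%")  # 60.0%
```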

So what is a high bounce rate, and why is it bad?

Well, “high bounce rate” is a relative term, which depends on what your company’s goals are, and what kind of site you have.

Low bounce rates – or too low bounce rates – can be problems too.

Most websites will see bounce rates between 26% and 70%, according to a RocketFuel study.

Based on the data they gathered, they provided a bounce rate grading system of sorts:

25% or lower: Something is probably broken

26-40%: Excellent

41-55%: Average

56-70%: Higher than normal, but could make sense depending on the website

70% or higher: Bad and/or something is probably broken
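The grading bands above could be turned into a small lookup like the sketch below. The function name is hypothetical, and two details are assumptions of this sketch rather than part of the study: fractional values fall into the band whose upper limit they do not exceed, and exactly 70% is treated as "higher than normal" because the two top bands overlap at that value.

```python
def grade_bounce_rate(rate: float) -> str:
    """Map a bounce rate (in percent) to the grading bands listed above."""
    if not 0 <= rate <= 100:
        raise ValueError("bounce rate must be between 0 and 100")
    if rate <= 25:
        return "Something is probably broken"
    if rate <= 40:
        return "Excellent"
    if rate <= 55:
        return "Average"
    if rate <= 70:  # assumption: exactly 70% lands in this band
        return "Higher than normal"
    return "Bad and/or something is probably broken"


for value in (12, 33, 48, 64, 85):
    print(value, "->", grade_bounce_rate(value))
```

As the article notes, these grades only mean something in the context of the site's goals, so treat the output as a prompt to investigate, not a verdict.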

The overall bounce rate for your site will live in the Audience Overview tab of Google Analytics.

You can find your bounce rate for individual channels and pages in the behavior column of most views in Google Analytics.

What follows are 13 common reasons your website can have a high bounce rate and how to fix these issues.

1. Slow-to-Load Page

Google has a renewed focus on site speed, especially as a part of the Core Web Vitals initiative.

A slow-to-load page can be a huge problem for bounce rate.

Site speed is part of Google’s ranking algorithm.

Google wants to promote content that provides a positive experience for users, and they recognize that a slow site can provide a poor experience.

Users want the facts fast – this is part of the reason Google has put so much work into featured snippets.

If your page takes longer than a few seconds to load, your visitors may get fed up and leave.

Fixing site speed is a lifelong journey for most SEO and marketing pros.

But the upside is that with each incremental fix, you should see an incremental boost in speed.

Review your page speed (overall and for individual pages) using tools like:

Google PageSpeed Insights.

The Google Search Console PageSpeed reports.

Lighthouse reports.

Pingdom.

GTmetrix.

They’ll offer you recommendations specific to your site, such as compressing your images, reducing third-party scripts, and leveraging browser caching.

2. Self-Sufficient Content

Sometimes your content is efficient enough that people can quickly get what they need and bounce!

This can be a wonderful thing.

Perhaps you’ve achieved the content marketer’s dream and created awesome content that wholly consumed them for a handful of minutes in their lives.

Or perhaps you have a landing page that only requires the user to complete a short lead form.

To determine whether bounce rate is nothing to worry about, you’ll want to look at the Time Spent on Page and Average Session Duration metrics in Google Analytics.

You can also conduct user experience testing and A/B testing to see if the high bounce rate is a problem.

If the user is spending a couple of minutes or more on the page, that sends a positive signal to Google that they found your page highly relevant to their search query.

If you want to rank for that particular search query, that kind of user intent is gold.

If the user is spending less than a minute on the page (which may be the case of a properly optimized landing page with a quick-hit CTA form), consider enticing the reader to read some of your related blog posts after filling out the form.

3. Disproportional Contribution by a Few Pages

If we expand on the example from the previous section, you may have a few pages on your site that are contributing disproportionally to the overall bounce rate for your site.

Google is savvy at recognizing the difference between these.

If your single CTA landing pages reasonably satisfy user intent and cause them to bounce quickly after taking an action, but your longer-form content pages have a lower bounce rate, you’re probably good to go.

However, you will want to dig in and confirm that this is the case or discover if some of these pages with a higher bounce rate shouldn’t be causing users to leave en masse.

Open up Google Analytics, go to Behavior > Site Content > Landing Pages, and sort by Bounce Rate.

Consider adding an advanced filter to remove pages that might skew the results.

For example, it’s not necessarily helpful to agonize over the one Twitter share with five visits that have all your social UTM parameters tacked onto the end of the URL.

My rule of thumb is to determine a minimum threshold of volume that is significant for the page.

Choose what makes sense for your site, whether it’s 100 visits or 1,000 visits, then click on Advanced and filter for Sessions greater than that.
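If you export the landing page report and want to apply the same rule outside the Analytics interface, the filter and sort can be sketched as follows. The `LandingPage` record, the function name, and the sample rows are all hypothetical; only the logic (keep pages with sessions above a threshold, then sort by bounce rate) comes from the steps above.

```python
from typing import NamedTuple


class LandingPage(NamedTuple):
    """One row of an exported landing page report (hypothetical shape)."""
    path: str
    sessions: int
    bounce_rate: float  # percent


def worst_landing_pages(pages, min_sessions=100):
    """Drop low-traffic pages, then list the rest from highest bounce rate down."""
    significant = [p for p in pages if p.sessions > min_sessions]
    return sorted(significant, key=lambda p: p.bounce_rate, reverse=True)


# Hypothetical rows: the five-visit page is ignored despite its 100% bounce rate.
report = [
    LandingPage("/pricing", 400, 35.5),
    LandingPage("/shared-tweet", 5, 100.0),
    LandingPage("/puppy-guide", 1200, 82.0),
]
for page in worst_landing_pages(report):
    print(page.path, page.sessions, page.bounce_rate)
```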

4. Misleading Title Tag and/or Meta Description

Ask yourself: Is the content of your page accurately summarized by your title tag and meta description?

If not, visitors may enter your site thinking your content is about one thing, only to find that it isn’t, and then bounce back to whence they came.

Whether it was an innocent mistake or you were trying to game the system by optimizing for keyword clickbait (shame on you!), this is, fortunately, simple enough to fix.

Either review the content of your page and adjust the title tag and meta description accordingly or rewrite the content to address the search queries you want to attract visitors for.

You can also check what kind of meta description Google has generated for your page for common searches – Google can change your meta description, and if they make it worse, you can take steps to remedy that.
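To review what a page actually declares, you can pull the title tag and meta description out of its HTML and read them next to the content. The sketch below uses only Python's standard `html.parser` module; the class name, function name, and sample markup are hypothetical, and it reads the page's own tags, not whatever description Google may have generated.

```python
from html.parser import HTMLParser


class HeadSummary(HTMLParser):
    """Collect the <title> text and the meta description from a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def summarize_head(html: str) -> tuple[str, str]:
    """Return (title, meta description), each stripped of surrounding whitespace."""
    parser = HeadSummary()
    parser.feed(html)
    return parser.title.strip(), parser.description.strip()


# Hypothetical page markup for illustration.
page = (
    "<html><head><title>Guide to Adopting a Puppy</title>"
    '<meta name="description" content="What to know before you bring a puppy home.">'
    "</head><body><h1>Guide to Adopting a Puppy</h1></body></html>"
)
title, description = summarize_head(page)
print(title)
print(description)
```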

5. Blank Page or Technical Error

If your bounce rate is exceptionally high and you see that people are spending less than a few seconds on the page, it’s likely your page is blank, returning a 404, or otherwise not loading properly.

Take a look at the page from your audience’s most popular browser and device configurations (e.g., Safari on desktop and mobile, Chrome on mobile, etc.) to replicate their experience.

You can also check in Search Console under Coverage to discover the issue from Google’s perspective.

Correct the issue yourself or talk to someone who can – an issue like this can cause Google to drop your page from the search results in a hurry.

6. Bad Link from Another Website

You could be doing everything perfectly on your end to achieve a normal or low bounce rate from organic search results, and still have a high bounce rate from your referral traffic.

The referring site could be sending you unqualified visitors or the anchor text and context for the link could be misleading.

Sometimes this is a result of sloppy copywriting.

The writer or publisher linked to your site in the wrong part of the copy or didn’t mean to link to your site at all.

Reach out to the author of the article, then the editor or webmaster if the author can’t update the article post-publish.

Politely ask them to remove the link to your site – or update the context, whichever makes sense.

(Tip: You can easily find their contact information with this guide.)

Unfortunately, the referring website may be trying to sabotage you with some negative SEO tactics, out of spite, or just for fun.

For example, they may have linked to your Guide to Adopting a Puppy with the anchor text of FREE GET RICH QUICK SCHEME.

You should still reach out and politely ask them to remove the link, but if needed, you’ll want to update your disavow file in Search Console.

Disavowing the link won’t reduce your bounce rate, but it will tell Google not to take that site’s link into account when it comes to determining the quality and relevance of your site.

7. Affiliate Landing Page or Single-Page Site

If you’re an affiliate, the whole point of your page may be to deliberately send people away from your website to the merchant’s site.

In these instances, you’re doing the job right if the page has a higher bounce rate.

A similar scenario would be if you have a single-page website, such as a landing page for your ebook or a simple portfolio site.

It’s common for sites like these to have a very high bounce rate since there’s nowhere else to go.

Remember that Google can usually tell when a website is doing a good job satisfying user intent even if the user’s query is answered super quickly (sites like WhatIsMyScreenResolution.com come to mind).

If you’re interested, you can adjust your bounce rate so it makes more sense for the goals of your website.

For Single Page Apps, or SPAs, you can adjust your analytics settings to see different parts of a page as a different page, adjusting the Bounce Rate to better reflect user experience.

8. Low-Quality or Under-Optimized Content

Visitors may be bouncing from your website because your content is just plain bad.

Take a long, hard look at your page and have your most judgmental and honest colleague or friend review it.

(Ideally, this person either has a background in content marketing or copywriting, or they fall into your target audience).

One possibility is that your content is great, but you just haven’t optimized it for online reading – or for the audience that you’re targeting.

Are you writing in simple sentences (think high school students versus PhDs)?

Is it easily scannable with lots of header tags?

Does it cleanly answer questions?

Have you included images to break up the copy and make it easy on the eyes?

Writing for the web is different than writing for offline publications.

Brush up your online copywriting skills to increase the time people spend reading your content.

The other possibility is that your content is poorly written overall or simply isn’t something your audience cares about.

Consider hiring a freelance copywriter or content strategist who can help you revamp your ideas into powerful content that converts.

9. Bad or Obnoxious UX

Are you bombarding people with ads, pop-up surveys, and email subscribe buttons?

CTA-heavy features like these may be irresistible to the marketing and sales team, but using too many of them can make a visitor run for the hills.

Google’s Core Web Vitals are all about user experience – they’re ranking factors, and affect user happiness too.

Is your site confusing to navigate?

Perhaps your visitors are looking to explore more, but your blog is missing a search box or the menu items are difficult to click on a smartphone.

As online marketers, we know our websites in and out.

It’s easy to forget that what seems intuitive to us is anything but to our audience.

Make sure you’re avoiding these common design mistakes, and have a web or UX designer review the site and let you know if anything pops out to them as problematic.

10. The Page Isn’t Mobile-Friendly

While we know it’s important to have a mobile-friendly website, the practice isn’t always followed in the real world.

Google’s index is switching to mobile-only next year.

But even as recently as 2018, one study found that nearly a quarter of the top websites were not mobile-friendly.

Websites that haven’t been optimized for mobile don’t look good on mobile devices – and they don’t load too fast, either.

That’s a recipe for a high bounce rate.

Even if your website was implemented using responsive design principles, it’s still possible that the live page doesn’t read as mobile-friendly to the user.

Sometimes, when a page gets squeezed into a mobile format, it causes some of the key information to move below-the-fold.

Now, instead of seeing a headline that matches what they saw in search, mobile users only see your site’s navigation menu.

Assuming the page doesn’t offer what they need, they bounce back to Google.

If you see a page with a high bounce rate and no glaring issues immediately jump out to you, test it on your mobile phone.

You can identify non-mobile-friendly pages at-scale using Google’s free Test My Site tool.

You can also check for mobile issues in the Search Console and Lighthouse.

This can work in reverse too – make sure your site is easy to read and navigate in desktop, tablet, and mobile formats, and with accessibility devices.

11. Wonky Google Analytics Setup

It’s possible that you haven’t properly implemented Google Analytics and added the tracking codes to all the pages on your site.

Google explains how to fix that here.

12. Content Depth

Google can give people quick answers through featured snippets and knowledge panels; you can give people the deep, interesting, interconnected content that’s a step beyond that.

Make sure your content compels people to click on further.

Provide interesting, relevant internal links, and give them a reason to stay.

And for the crowd that wants the quick answer, give them a TL;DR summary at the top.

13. Asking for Too Much

Don’t ask someone for their credit card number, social security, grandmother’s pension, and children’s names right off the bat – your user doesn’t trust you yet.

People are ready to be suspicious considering how many scam websites are out there.

Being presented with a big pop up asking for info will cause a lot of people to leave immediately.

The job of a webmaster or content creator is to build up trust with the user – and doing so will both increase consumer satisfaction and decrease your bounce rate.

If it makes users happy, Google likes it.

Don’t Panic

A high bounce rate doesn’t mean the end of the world.

Some well-designed, beautiful webpages have high bounce rates.

Bounce rates can be a measure of how well your site is performing but if you don’t tackle them with nuance, they can become a vanity metric.

Don’t present bounce rates without context.

Sometimes you want a medium to high bounce rate.

And don’t try and “fix” bounce rates that aren’t actually a problem by slowing down users on the page, or preventing them from going back from your site.

That makes a more frustrated user, and they won’t return to your content again.

Even if the number superficially goes down, you will have earned a lot worse than a “bounce.”

5 Pro Tips for Reducing Your Bounce Rate

Regardless of the reason behind your high bounce rate, here’s a summary of best practices you can implement to bring it down.

1. Make Sure Your Content Lives Up to the Hype

Your title tag and meta description effectively act as your website’s virtual billboard in Google.

Whatever you’re advertising in the SERPs, your content needs to match.

Don’t call your page an ultimate guide if it’s a short post with three tips.

Don’t claim to be the “best” vacuum if your user reviews show a 3-star rating.

You get the idea.

Also, make your content readable:

Break up your text with lots of white space.

Add supporting images.

Use short sentences.

Spellcheck is your friend.

Use good, clean design.

Don’t bombard with too many ads.

2. Keep Critical Elements Above the Fold

Sometimes, your content matches what you advertise in your title tag and meta description; visitors just can’t tell at first glance.

When people arrive on a website, they make an immediate first impression.

You want that first impression to validate whatever they thought they were going to see when they arrived.

A prominent H1 should match the title they read on Google.

If it’s an ecommerce site, a photo should match the description.

Give a good first impression.

Make sure banners don’t push your content too far down.

Make sure what the user is searching for is on the page when it loads.

3. Speed Up Your Site

When it comes to SEO, faster is always better.

Keeping up with site speed is a task that should remain firmly stuck to the top of your SEO to-do list.

There will always be new ways to compress, optimize, and otherwise accelerate load time.

Implement AMP.

Compress all images before loading them to your site, and only use the maximum display size necessary.

Review and remove any external or load-heavy scripts, stylesheets, and plugins. If there are any you don’t need, remove them. For the ones you do need, see if there’s a faster option.

Tackle the basics: Use a CDN, minify JavaScript and CSS, and set up browser caching.

Check Lighthouse for more suggestions.

4. Minimize Non-Essential Elements

Don’t bombard your visitors with pop-up ads, in-line promotions, and other content they don’t care about.

Visual overwhelm can cause visitors to bounce.

What CTA is the most important for the page?

Compellingly highlight that.

For everything else, delegate it to your sidebar or footer.

Edit, edit, edit!

5. Help People Get Where They Want to Be Faster

Want to encourage people to browse more of your site?

Make it easy for them.

Leverage on-site search with predictive search, helpful filters, and an optimized “no results found” page.

Rework your navigation menu and A/B test how complex vs. simple drop-down menus affect your bounce rate.

Include a Table of Contents in your long-form articles with anchor links taking people straight to the section they want to read.
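As an illustration of the last tip, a table of contents with anchor links can be generated from a list of section headings. This is a rough sketch with hypothetical function names and an assumed slug format; each heading on the page would also need a matching `id` attribute (for example `id="slow-to-load-page"`) for the links to jump anywhere.

```python
import re
from html import escape


def slugify(heading: str) -> str:
    """Turn a heading into a lowercase, hyphen-separated anchor name."""
    slug = re.sub(r"[^a-z0-9]+", "-", heading.lower())
    return slug.strip("-")


def table_of_contents(headings: list[str]) -> str:
    """Build an HTML list whose links point at each heading's anchor."""
    items = [
        f'<li><a href="#{slugify(h)}">{escape(h)}</a></li>' for h in headings
    ]
    return "<ul>\n" + "\n".join(items) + "\n</ul>"


print(table_of_contents(["What Is a Bounce Rate?", "Slow-to-Load Page"]))
```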

Summary

Hopefully, this article helped you diagnose what’s causing your high bounce rate, and you have a good idea of how to fix it.

Make your site useful, user-focused, and fast – good sites attract good users.

Image Credits

All screenshots taken by author, November 2020

0 notes

Text

The week that just was, and why I finally left Facebook.

So I've been restructuring my social media presence over the past couple of weeks, and one of the side effects of this is that I've changed where I get my news from. Oh, I still go to the same websites as before, but because of changes I've made to my social media what gets spoon fed to me by the various sites out there has changed dramatically. It put me in the absolutely thrilling position of telling Newt Gingrich to go fuck himself - directly. I'm also getting a lot less conspiracy garbage thrown at me, and that allows me to look more closely at reality itself.

So while you've all been hearing from the news media and the political bloggers how a woman getting her hair done has the same weight as allowing 200,000 people to die, allow me to tell you what the government of the United States of America has been doing in just this past week, the first week of September 2020.

Department of Defense: This week the Pentagon ordered the cessation of publication both in print and online of Stars and Stripes, the independent and superb newspaper of the U.S. Military. They have been independently operated and published since 1861, and that will stop at the end of this month. Because Trump is frightened of some of the stories they run, including one that says he is critical of those who have made the choice to serve, especially if they die during that service. Update: As I was finishing writing this essay news broke that the Trump Administration had backed down from shutting down Stars & Stripes. I certainly hope so. They've lied about this sort of thing before.

Department of Education: This week the Education Department has decided to reinstate Standardized Testing, which they suspended due to the COVID-19 Pandemic. This has drawn sharp criticism from Governors of both major parties, as we're, you know, in the middle of a fucking pandemic! Oh, and testing isn't to be done remotely, but in person.

Department of the Treasury: Has been claiming all week that there will be a deal next week on a new stimulus package for dealing with some aspects of the fallout due to the pandemic. That would be a neat trick, considering that the Senate is only scheduled to be in session 2 days next week. Ever look at the track record of the current Treasury Secretary of actually doing the things he says he's doing? It's pretty abysmal. How the hell was this guy a successful movie producer?

Department of State: Has this week been quietly (and not so quietly) floating the idea of the U.S. leaving NATO. They also placed sanctions on two investigators from The International Court at The Hague, because it is their job to look into the possibility of war crimes in our never-ending conflict in Afghanistan. Not on the court, but on people who work for the court.

Department of Justice: The man who runs the Justice Department, who is a lawyer (a requirement to hold the post), did not know that it is a Felony to vote twice in an election, or to encourage others to do so. In other words, he didn't know that Trump broke the law when he told people to vote twice in North Carolina this week. And oh yes, he dismissed yet another Chief Prosecutor of a District Court, and believes that racism isn't a thing anymore.

Department of the Interior: Did you notice that this week was the big week? The first lots to become available for drilling in the Arctic Wildlife Refuge came out this week. Bidding has begun on who gets to destroy millions of acres of pristine habitat!

Department of Agriculture: A leading scientist named Lewis Ziska has resigned after the government buried a paper he had written, subsidized by government study, that shows that due to the rise in Carbon Dioxide in our atmosphere the nutritional value of rice has fallen to critical levels. Rice is the staple diet of a very large portion of the world, and our current government wants to ignore this.

Department of Commerce: Still going after TikTok. They don't seem to be doing anything else.

Department of Labor: Doled out $20 Million for relief for those struggling to recover from Hurricane Laura. The actual need in economic costs (non property and non insured losses) being about $8 Billion. So about 0.25% of the need. It would have been as much as 0.75% of the need, another $40 Million, but Trump moved that money, possibly illegally, to the Department of Homeland Security to help pay to send unmarked and unregulated troops to beat people up in Portland, Chicago, Minneapolis, Los Angeles, and other places.

Department of Health and Human Services: started telling people to stop getting tested, in the middle of a Pandemic that as of today's writing is sickening over 45,000 people per day and killing over 1,000.

Department of Housing and Urban Development: the ban on evictions for people under hardships because they might be one of the 50 Million people who have lost their jobs due to the pandemic has been lifted. Mass evictions began this week.

Department of Transportation: The department is continuing their now month-long trend of allowing airlines to stop servicing specific routes for United Airlines, Spirit Airlines, Hawaiian Airlines, and many more. Did you want to fly there? Well, that route has now been cancelled.

Department of Energy: This week sunk $29 Million into a total of 14 studies on technology that presently doesn't exist and that many physicists believe is impossible: Cold Fusion.

Department of Veteran Affairs: Dismissed a high level employee and a veteran herself from an important position because she has a podcast - about the Mueller investigation.

Department of Homeland Security: Suspended intelligence briefings to Congress and Presidential Candidate Joe Biden about Russian interference in the 2020 election, despite the fact that they are both entitled to these briefings, by law.

And these are just some of the big ticket items I found in just a few minutes of searching. There is lots more. Lots more.

How many of these stories did you know? A few, I'm sure, but all of them? I know about all of them because I dropped Facebook. That's the only thing I did, and so much disinformation and filtering disappeared from my news gathering sources. I learned some weeks back that the algorithms Facebook uses to filter your content are also used by many other media sites, including news sites, which track your history on Facebook through cookies and use that data as their own filtration source.

Facebook controls what you see on about 70% of the entire internet, in terms of how that data gets filtered. That's just unacceptable. I'm perfectly fine with Facebook controlling what you see on their platforms. It's their site, let them run it as they see fit. But a lot of Facebook's money comes from selling the same filtration data for focused advertising to other companies, and they've been soaking it up. And we've been letting it happen.

I don't want Facebook to decide what I can see. I want to determine this myself. It can't be done as long as you have an active Facebook account.

This was a difficult step for me to take. I have remained in contact with many of my friends through Facebook alone, and I will lose some of them. That isn't pleasant. I'm a cranky man and it's difficult to be my friend, and as such I place great value on those people who are my friends. I have discovered wonderful things thanks to Facebook. And even though I find Facebook to be a creative black hole, in that it makes the creative process harder because of the sheer overflow of garbage, I was never able to fully leave the site.

But this was a step too far for me. For all that I lose I honestly believe I will gain more. The ability to be better informed. That is my very lifeblood.

I am still here, still on twitter, still on tumblr, and will be reviving my Patreon page in the upcoming months. And because I love you, have some new Vulfpeck:

[YouTube video]

0 notes

Text

How Ranking Factors Research Studies Damage the SEO Industry

Recently SEMrush published a study on Google's top ranking factors. The study was not unlike many other studies published each year. It used a statistically significant data set to draw parallels between common metrics and high position (or ranking) in Google.

However, the conclusions they came to and reported to the industry were not entirely correct.

Defining Ranking Factors & Best Practices

First, let's define "ranking factors." Ranking factors are those elements which, when changed in connection with a website, will result in a change in position in a search engine (in this case, Google).

"Best practices" are different. Best practices are tactics which, when implemented, have shown a high correlation with better performance in search results.

XML sitemaps are an excellent example. Creating and uploading an XML sitemap is a best practice. The existence of the sitemap does not lead directly to better rankings. However, providing the sitemap to Google allows them to crawl and understand your website more efficiently.

When Google understands your website better, it can lead to better rankings. But an XML sitemap is not a ranking factor.

The only ranking factors that we know about for sure are the ones that Google specifically mentions. These tend to be esoteric, like "high authority" or "great content" or even "awesomeness".

Google generally does not provide specific ranking factors because whenever they do, webmasters overdo it. Remember the link wars of 2011-2014? They have learned their lesson.

Understanding Correlation vs. Causation

Here's another way to think about correlation vs. causation:

A large percentage of high-ranking sites probably have XML sitemaps. This is a correlation. The XML sitemap did not cause the website to attain a high ranking.

This would be like saying if you eat sour cream, you will get into a motorcycle accident based on the correlation shown below.

Let's take another example.

High amounts of direct traffic were shown to have a strong correlation with better ranking in the SEMrush study. This was a controversial statement, because it was presented as "direct traffic is the primary ranking factor." While the data is probably accurate, what does it really mean?

Let's start by defining Direct Traffic. This is traffic that came to a site URL without any referrer header (i.e., the visitor didn't come to the site through email, search, or links from another website). Thus, it includes any traffic for which Google Analytics (or the platform in question) cannot determine a referrer.

Direct Traffic is essentially the bucket for "we don't know where it came from." Sessions are misattributed to Direct Traffic all the time, and some studies have shown that as much as 60 percent of direct traffic may actually be organic traffic. In other words, it's not a reliable metric.

Let's assume for a moment Direct Traffic is a reliable metric. If a website has high direct traffic, it is also likely to have a strong brand, high authority, and loyal users. All these things can help SEO ranking. But the connection is indirect.

There are many other good arguments that have debunked the idea of direct traffic as a ranking factor specifically. Any good SEO should read and understand them.

Moving on from Direct Traffic, Searchmetrics falls victim to this correlation/causation issue too in their latest Travel Ranking Factors study, where they assert word count and number of images are both ranking factors for the travel industry.

Google has directly debunked the word count assertion, and the number of images claim is so silly I had to ask John Mueller about it directly for this article:

If you read between the lines, you can tell Mueller is saying that using a certain number of images as a ranking factor is silly, and it can vary widely.

It is much more likely that a fuller treatment of the keyword in question is the ranking factor rather than strictly "word count," and good quality travel websites are simply more likely to have lots of images.

If you want even more proof that word count is a silly metric for any industry, just take a look at the top result for "is it Christmas?" (h/t Casey Markee)

This website has been in the #1 spot since at least 2008, and it literally has one word on the entire website. That one word fully answers the intent of the query.

While Searchmetrics does a good job of defining ranking factors, their use of that term in relation to this chart is careless. These should be labeled "correlations" or similar, not "ranking factors."

This is the core of the matter. Studies using statistical significance, correlation, or even machine learning like the Random Forest model (what SEMrush used) can be accurate. I have no doubt that the results of all of the studies mentioned were accurate as long as the data that was fed into them was accurate. The problem came not in the data itself, but in the analysis and reporting of that data, namely when they listed these metrics as "ranking factors".

Assess the Metrics Used

This raises the need to use common sense to evaluate things that you read. A study may claim that time on site is a ranking factor.

First, you have to question where that data came from, since it's a site-specific metric that few would know or be able to guess at without website or analytics access. Most of the time, this type of data comes from third-party plugins or toolbars that record users' behavior on websites.

The problem with this is that the data set will never be as complete as site-specific analytics data.

Second, you need to consider the metric itself. Here's the problem with metrics like time on site and bounce rate. They're relative.

After all, some industries (like maps or yellow pages) thrive on a high bounce rate. It means the user got what they needed and went on their way, having had a good experience and being likely to return.

For a time on site example, let's say you want to consult a divorce lawyer. If you're smart, you use incognito mode (where most/all plugins are disabled) to do this search and the subsequent site visits. Otherwise your spouse could see your website history or get ads targeted to them.

Imagine your spouse seeing this in the Facebook news feed when he or she thinks your marriage is solid:

Facebook ad example from Easy Agent PRO

So for an industry like divorce lawyers, time on site data is likely to be either heavily skewed or not readily available.

But Google Owns an Analytics Platform!

Some of you will say that Google has access to this data through Google Analytics, and that's definitely true. But there has been no positive correlation ever shown between having an active Google Analytics account and ranking better on Google. Here's a great post on the SEM Post that goes into more detail on this.

Google Analytics is only installed on 83.3 percent of "websites we know about", according to W3Techs. That's a lot, but it isn't every website, even if we do assume this is a representative sample. Google simply could not feed something into their algorithm that is not available in almost 20 percent of cases.

Finally, some will make the argument that Chrome can collect direct traffic data. This has the same problem as Google Analytics though, because at last check, Chrome commanded an impressive 54 percent market share (according to StatCounter). That's substantial, but just a little over half of all browser traffic is not a reliable enough data source to make a ranking factor.

Doubling Down on Bad Information

Many of you have read this thinking that yes, we know all that. We're search professionals. We do this every day. We know that a chart that says direct traffic or bounce rate is a ranking factor needs to be taken with a grain of salt.

The danger is when this information gets shared outside our industry. All of us have a responsibility to use our powers for good; we need to educate the world around us about SEO, not perpetuate stereotypes and myths.

I'm going to pick on Larry Kim for a moment here, who I think is a great guy and a very smart marketer. He recently posted the SEMrush ranking factor chart on Inc.com along with a well-reasoned article about why he thinks the study has value.

I had the opportunity to catch up with Larry by phone before finishing this article, and he impressed upon me that his intention with his post was to examine the claim of direct traffic as a ranking factor. He felt that if a study showed that direct traffic had a high correlation with good search ranking, there had to be something more there.

I told him that while I don't agree with everything in his post, I understand his train of thought. What I would like to see more of from everyone in the industry is an understanding that outside our microcosm of keywords and SERP click-through rates, SEO is still a "black box" in many people's minds.

Because SEO is complicated and confusing, and there's a lot of bad information out there, we need to do everything that we can to clarify charts and studies and statements. The specific problem I have with Larry's article is that lots of people outside of SEO read Inc. This includes many high-level decision makers who don't necessarily know the finer points of SEO.

In my opinion, Larry sharing the chart as "ranking factors" and not debunking the clearly false information contained in the graph was not responsible. Any CEO looking at that chart could reasonably assume that his or her meta keywords hold some value to ranking (not a lot based on the position on the chart, but some).

However, no major search engine has used meta keywords for regular SERP rankings (Google News is different) since at least 2009. This is objectively false information.

We have a responsibility as SEO professionals to stop the spread of bad or incomplete information. SEMrush published a study that was objectively valid, but the subjective interpretation of it created problems.

Larry Kim republished the subjective interpretation without properly qualifying it.

'Always' & 'Never' Don't Exist in SEO

Last week, I met with a new client. They had been struggling to include five additional links in all of their content because at some point, an SEO told them they should ALWAYS link out to at least five sources on every post. Another client had been told they should NEVER link out from their website to anything.

Anyone who knows about SEO knows that either one of these statements is bad advice and patently incorrect information.

We as SEO professionals can help stem the tide of these mythical "revelations" by stressing to our clients, our readers, and our colleagues that ALWAYS and NEVER do not exist in SEO, because there are simply too many factors to say anything definitively is or is not a ranking factor unless a search engine has specifically stated that it is.

It Happens Every Day

Literally every day something is taken out of context, misattributed, or incorrectly treated as causation. Just recently, Google's Webmaster Trends Analyst John Mueller said this in response to a tweet from Bill Hartzer:

TTFB, for those non-SEOs reading, is "Time to First Byte". This refers to how quickly your server responds to the first request for your page.

Google has said on multiple occasions that speed is a ranking factor. What they have not said is exactly how it is measured. Mueller says TTFB is not a ranking factor. Let's assume he's telling the truth and this is fact.

This does not mean you don't have to worry about speed, or that you don't have to be concerned about how fast your server responds. He qualifies it in his tweet: it's a "good proxy" and don't "blindly focus" on it. There are myriad other ranking factors that could be negatively affected by your TTFB. Your user experience may be poor if your TTFB is slow. Your site may not earn high mobile usability scores if your TTFB is slow.

Be very careful how you interpret information. Never take it at face value.

Mueller said TTFB is not a ranking factor. Now I know that is fact and I can point to his tweet when needed. But I will not stop including TTFB in my audits; I will not stop encouraging clients to get this as low as possible. This statement changes nothing about how SEO professionals will do their jobs, and only serves to confuse the larger marketing community.

It is our responsibility to separate SEO fact from fiction; to interpret statements from Google as carefully as possible, and to generally dispel the myth that there is anything you ALWAYS or NEVER do in SEO.

Google uses over 200 ranking factors, or so they say.

Chasing these mystical metrics is hard to resist. After all, as SEOs, we are data-driven, sometimes to a fault.

When you examine ranking factor studies, use a critical eye. How was the data collected, processed, and correlated? If the third party is making a claim that something is a ranking factor, does it make sense that Google would use it?

And finally, does learning that x or y is or is not a ranking factor change anything about the recommendations you will make to your client or boss?

The answer to that last one is almost always "no." Too much depends on other factors, and knowing something is or is not a ranking factor is usually not actionable.

There's no ALWAYS or NEVER in SEO, and if we want SEO to continue to grow as a discipline, we have to get serious about explaining that. It's time to take the responsibility we have to the outside world more seriously.

Searchmetrics and SEMrush were asked for comment, but did not respond prior to press time.

This post was originally published on JLH Marketing.

Screenshots taken by author, December 2017

Source

https://www.searchenginejournal.com/ranking-factors-studies-damage/233439/

0 notes

Text

Google announces new captcha system to identify robots

New Post has been published on https://workreveal.biz/google-announces-new-captcha-system-to-identify-robots/

Political advertising online needs transparency and understanding

Political advertising online has rapidly become a sophisticated industry. The fact that most people get their information from just a few platforms, and the increasing sophistication of algorithms drawing upon rich pools of personal data, mean that political campaigns are now building individual adverts targeted directly at users. One source suggests that in the 2016 US election, as many as 50,000 variations of adverts were being served every single day on Facebook, a near-impossible situation to monitor. And there are suggestions that some political adverts, in the US and around the world, are being used in unethical ways: to point voters to fake news sites, for instance, or to keep others away from the polls. Targeted advertising allows a campaign to say completely different, possibly conflicting things to different groups. Is that democratic?

These are complex problems, and the solutions will not be simple. However, a few broad paths to progress are already clear. We must work together with web companies to strike a balance that puts a fair level of data control back in the hands of people, including the development of new technology such as personal "data pods" if needed, and exploring alternative revenue models such as subscriptions and micropayments. We must fight against government overreach in surveillance laws, including through the courts if necessary. We must push back against misinformation by encouraging gatekeepers such as Google and Facebook to continue their efforts to combat the problem, while avoiding the creation of any central bodies to decide what is "true" or not. We need more algorithmic transparency to understand how important decisions that affect our lives are being made, and perhaps a set of common principles to be followed. We urgently need to close the "internet blind spot" in the regulation of political campaigning.

Our team at the Web Foundation will be working on many of these issues as part of our new five-year strategy: researching the problems in more detail, coming up with proactive policy solutions and bringing coalitions together to drive progress towards a web that gives equal power and opportunity to all.

I may have invented the web, but all of you have helped to create what it is today. All the blogs, posts, tweets, photos, videos, applications, web pages and more represent the contributions of millions of you around the world building our online community. All kinds of people have helped, from politicians fighting to keep the web open, to standards organisations like W3C enhancing the power, accessibility and security of the technology, to people who have protested in the streets. In the past year, we have seen Nigerians stand up to a social media bill that would have hampered free expression online, popular outcry and protests at regional internet shutdowns in Cameroon, and great public support for net neutrality in both India and the European Union.

It has taken all of us to build the web we have, and now it is up to all of us to build the web we want, for everyone.

The experience of squinting at distorted text, puzzling over small images, or even just clicking on a checkbox to prove you aren't a robot could soon be over if a new Google service takes off.

The company has revealed the latest evolution of the Captcha (short, sort of, for Completely Automated Public Turing test to tell Computers and Humans Apart), which aims to do away with any interruption at all: the new "invisible reCaptcha" aims to tell whether or not a given visitor is a robot purely by analysing their browsing behaviour. Barring a short wait while the system does its job, a typical human visitor shouldn't have to do anything else to prove they're not a robot.

It's a long way from the first Captchas, introduced to stop automated programs signing up for services like email addresses and social media accounts. The idea is simple: pick a task that a human can do easily and a machine finds very hard, and require that the task be completed before the process can continue. Google's captcha basically tells if you're a robot or not.

The first captchas often relied on obfuscated text: a few letters and numbers, blurred, distorted, or otherwise rendered hard to parse with conventional character recognition software. Even then, they were still bypassed fairly often. The limited number of characters available in the Latin alphabet meant that the software could quickly improve to a passable level of accuracy, while obfuscating the letters any further could lead to real humans, particularly those with poor eyesight, being locked out.

And that was only when the system wasn't set up badly in other ways. For instance, one ticket tout in the mid-00s, faced with a Captcha at Ticketmaster, discovered that the whole system was pre-generated: the ticketing site had only loaded about 30,000 captchas into its database. The tout's team simply downloaded every Captcha image they could, then stayed up all night manually solving them. From then on, the bot could buy tickets automatically without trouble.

However, the first big breakthrough in Captchas to hit the net had nothing to do with making it harder for robots to pass them. Rather, it was an insight that all the effort humans were putting into staring at squiggly text could be far better applied.

Dubbed reCaptcha, the idea came in 2008 from Luis von Ahn, a professor at Carnegie Mellon University who has since co-founded language learning startup Duolingo. Von Ahn realised that if humans were doing something that computers found hard (analysing distorted text), they should at least be analysing text that is useful.

ReCaptcha replaced the autogenerated text in earlier Captchas with words drawn from scanned text such as newspapers, books and magazines: text that needed to be turned into a computer-readable form. It still distorted the images, to keep computers out, but the actual words typed in were fed back to the database to improve the original data.

That introduced a second problem, though: if a computer can't read the word presented, how does the system know whether the user got it right or wrong? Von Ahn's solution was to present pairs of words, one already solved and one unknown. If the answer to the first one matches that given previously, then the user is probably a human, and so the second answer also gets added to the database, and eventually presented to a new user.
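The paired-word idea can be sketched in a few lines of Python. This is a toy model under stated assumptions, not Google's or von Ahn's actual code: the class name, the image ids, and the rule that an unknown word is accepted once two trusted users give the same answer (`votes_needed`) are all hypothetical choices made for illustration.

```python
from collections import Counter, defaultdict


class PairedWordCaptcha:
    """Toy model of the two-word check; names and thresholds are assumptions."""

    def __init__(self, solved: dict[str, str], votes_needed: int = 2):
        self.solved = dict(solved)            # image id -> accepted text
        self.votes = defaultdict(Counter)     # unknown image id -> answer tallies
        self.votes_needed = votes_needed      # hypothetical agreement threshold

    def submit(self, known_id: str, known_answer: str,
               unknown_id: str, unknown_answer: str) -> bool:
        """Return True if the user passes; record their guess for the unknown word."""
        if known_answer.strip().lower() != self.solved[known_id].lower():
            return False                      # failed the control word: discard the guess
        guess = unknown_answer.strip().lower()
        self.votes[unknown_id][guess] += 1
        if self.votes[unknown_id][guess] >= self.votes_needed:
            # Enough trusted users agree, so promote the word to the solved set,
            # where it can serve as the control word for a later user.
            self.solved[unknown_id] = guess
            del self.votes[unknown_id]
        return True


captcha = PairedWordCaptcha({"img-001": "morning"})
captcha.submit("img-001", "Morning", "img-002", "harbour")   # passes, one vote recorded
captcha.submit("img-001", "mornin", "img-002", "spam")       # fails the control word
captcha.submit("img-001", "morning", "img-002", "harbour")   # passes, word promoted
```

Requiring agreement between several users is one plausible way to guard against a single wrong or malicious answer entering the database; the article itself does not say how many matching answers the real system needed.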

The idea was compelling, particularly to one web titan: in September 2009, Google bought reCaptcha. The acquisition made sense. The company not only had a huge number of account creation requests, thanks to spammers trying to create Gmail accounts en masse, it also had a vast corpus of text to digitise, the result of its controversial plan to scan in hundreds of thousands of books and newspapers. Those incentives also meant Google could make reCaptcha free for other companies to use, with the server costs being recouped by using the valuable data.

But even though reCaptcha made proving you're a human useful, it couldn't beat the progress of automated text recognition. As early as 2008, the Captcha concept was already beginning to fall behind. Not only were robots getting better at analysing even distorted text, but spammers were starting to use reCaptcha's idea against it: if humans can do the work better than robots, why not get them to do the work? By offering up something for free (this being the internet, it's usually porn), a spammer could persuade people to routinely solve another site's Captchas for them, just by copying the image over.

Captchas have evolved in response, with Google introducing increasingly subtle technological tricks to try to tell whether or not a user is human. That culminated in 2014 when it introduced the "No Captcha reCaptcha". The form looks like a simple checkbox: tick it to confirm that you aren't a robot.

Unlike text-based Captchas, the mechanisms by which Google tells whether or not it's dealing with a robot were deliberately obscured. The company said it employed "advanced risk analysis" software, which monitors things like how the user types, where they move their mouse, where they click and how long it takes them to scan a page, all with the aim of working out which behaviours are human-like and which are too robotic.

That's probably how the new Invisible reCaptcha works, although the company is even more silent on that subject. In response to a request for elaboration, Google only linked to a promotional video.

However, the No Captcha reCaptcha didn't mean the death of useful Captchas. Instead, they've evolved too, moving beyond text to help Google's other big data projects.

If Google decides you aren't human with its strange voodoo, it'll now show you a group of images and ask you to unwittingly train its machine learning systems in various ways. Some users might be shown a grid full of animal pictures and be asked to pick out every cat (useful training for Google Photos' ability to search through your pictures for keywords you provide); others might be shown a photograph taken from a Street View car and asked to type the door numbers of houses (useful for improving the accuracy of the company's maps) or to select every part of the image that contains street signs (helpful for training the company's self-driving cars). Still others might be shown an image of a military helicopter and asked to pick all the squares that contain a helicopter (useful training for … well, probably for image recognition, but maybe for Google's plan to take over the world with AI).

In the long run, though, Google's plan to remove the burden of reCaptchas altogether means that it will get less and less of this data from end users. But given the company's scale, even the people who fail the invisible reCaptcha may well provide enough extra data to give Google's AI plans yet more of a boost against the competition. Who knows, maybe the Invisible Captcha is also training an AI how to act like a human online?

0 notes