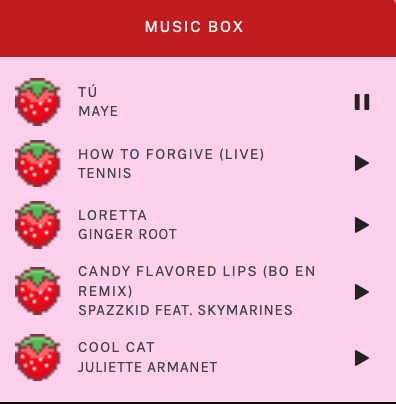

#figured out how to tag users in html and how to make the text small i'm chuffed

hi!!! i love your custom blog theme, do you have a link to the code or creator 0:?

ya!

so my theme is actually a heavily modified version of redux edit #1 by lopezhummel (current url: holyaura). i always remind users that most tumblr themes are old and that you'll need to replace all instances of "http://" in the code with "https://" so tumblr will save the theme. i had to do it with this one
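
Any text editor's find-and-replace does this job. The same idea is shown here as a small JavaScript sketch you could paste into the browser console. The sample theme snippet is made up.

```javascript
// Replace every "http://" in a theme's code with "https://" and report how many changed.
function upgradeToHttps(themeCode) {
  const insecure = /http:\/\//g; // the g flag matches every occurrence, not just the first
  const count = (themeCode.match(insecure) || []).length;
  const upgraded = themeCode.replace(insecure, "https://");
  return { upgraded, count };
}

// Hypothetical snippet standing in for a real theme's HTML.
const sample = '<link href="http://static.tumblr.com/x.css"><img src="https://example.com/a.png">';
const result = upgradeToHttps(sample);
console.log(result.count);    // 1
console.log(result.upgraded); // both URLs now start with https://
```

URLs that already start with https:// are left alone, because "https://" doesn't contain "http://". One caveat: a few very old image or script hosts don't serve anything over HTTPS. An upgraded link to one of those can still come up broken, and that resource needs to be re-hosted.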

these are the modifications i made to the theme. i edited this theme over the course of at least a year or so and don't quite recall how i did all of these things. but to the best of my ability:

i moved the "left side img" to the right side of the screen. i also made this element "responsive" so the image will never get cropped when you resize your screen. this was a bitch and a half to figure out and i truthfully do not remember how i did it

i deleted the text in the drop-down navigation so it appears as a little line that is otherwise not noticeable. this type of theme, the "redux edit," used to be very popular because having a drop-down menu let you cram a bunch of links that lead to sub-pages on your blog. i've done away with my sub-pages, but i still like the format of the "redux style" tumblr theme, for its minimal UI and for its customization options.

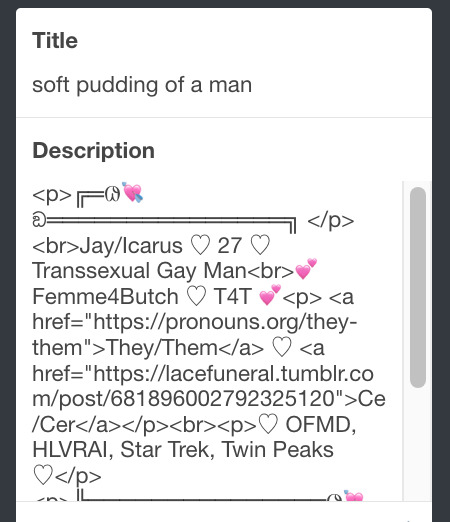

i separated my mobile description from my web description for formatting reasons. basically, most elements in tumblr themes are connected to specific text fields and toggles. i simply went to the section that was connected to my blog description and deleted it. the web description has to be manually typed inside of the CSS/HTML editor when i want to change it, whereas my mobile description is whatever i type in the "description" box of the normal tumblr theme editors.

i added code someone else made ("NoPo" by drannex42 on GitHub) which allows you to hide posts with certain tags on them. i did this to hide my pinned post, as it looks bad on desktop.
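
This is not NoPo's code. It is a minimal sketch of the same idea in plain JavaScript, the kind of thing that could go in a `<script>` tag near the end of a custom theme. It assumes two things about the markup, and both class names are hypothetical: each post is wrapped in an element with class `post`, and each tag renders as a link inside an element with class `tags`. Check your own theme with Inspect Element.

```javascript
// Tags whose posts should be hidden (hypothetical: tag your pinned post with one of these).
const HIDDEN_TAGS = ["pinned"];

// True if any of the post's tags is on the hidden list. Comparison ignores case,
// surrounding spaces, and a leading "#", since themes often print tags as "#tag".
function shouldHidePost(postTags, hiddenTags) {
  const hidden = new Set(hiddenTags.map((t) => t.toLowerCase()));
  return postTags.some((tag) => hidden.has(tag.trim().replace(/^#/, "").toLowerCase()));
}

// Walk every post on the page and hide the matching ones.
// ".post" and ".tags a" are placeholder selectors for your theme's real markup.
function hideTaggedPosts(root, hiddenTags) {
  for (const post of root.querySelectorAll(".post")) {
    const tags = Array.from(post.querySelectorAll(".tags a"), (a) => a.textContent);
    if (shouldHidePost(tags, hiddenTags)) post.style.display = "none";
  }
}

// Only touch the page when running in a browser, so the logic can be tested elsewhere.
if (typeof document !== "undefined") {
  document.addEventListener("DOMContentLoaded", () => hideTaggedPosts(document, HIDDEN_TAGS));
}
```

The matching logic is kept separate from the page-walking code. That way, if your theme's markup differs, only the selectors need to change.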

i replaced the tiny pagination arrows at the bottom with images that literally say "next" and "back" because the arrows were far too small/illegible. i know they aren't centered in the container i'm not sure how to fix that lol

i added a cursor

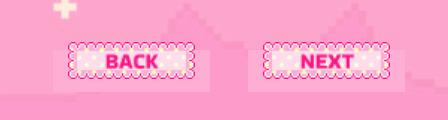

i installed a working music box ("music player #3" by glenthemes), and then added music by uploading MP3 files to discord and then using the links of those files as the audio sources. iirc i also had to make this element responsive and i aligned it so it would sit on the left side of my screen. i made the "album art" for each one the same strawberry pixel art

the moth is just a PNG i added and then moved around so it was behind my sidebar using the options that came pre-packaged with the theme

if you want something like the strawberry shortcake decoration at the top (called "banner" in the theme) your best bet is to google "pixel divider"

theme didn't support favicon so i added that in so i could have a little heart

ALSO:

this theme is. really weird about backgrounds. any background that i have ever set for it, i've had to do weird shit in photoshop. like making the background HUGE, mirroring it, etc. - because it would crop the image weird, or there would be a gap where there was no image. idk man, it's haunted. i'm sure there's a way to fix this but i am NOT tech savvy enough. anyway, patterns are probably your best friend. and if you DO want something that isn't a pattern, it's going to take a lot of trial and error. but i love this theme so i deal with it 😭

the sidebar image and the floating image do not scale. if your image is 1000 pixels, it will display at 1000 pixels. you'll either have to edit the code so that the theme scales the image for you, or resize any images before you add them

my white whale of theme editing (aside from the Weird Background thing) is that i cannot get infinite scrolling to work. i have tried every code out there. all of them break my theme. it makes me sad because like. i have music there for a reason. the idea is that people would listen to it while they scroll. unfortunately, the way it's set up now, the music will stop every time someone clicks "next" or "back" 💀

anyway sorry for rambling but i hope you enjoy the theme and customizing it in the way that you want to!

24 notes

Fandom Userscript Cookbook: Five Projects to Get Your Feet Wet

Target audience: This post is dedicated, with love, to all novice, aspiring, occasional, or thwarted coders in fandom. If you did a code bootcamp once and don’t know where to start applying your new skillz, this is for you. If you're pretty good with HTML and CSS but the W3Schools Javascript tutorials have you feeling out of your depth, this is for you. If you can do neat things in Python but don’t know a good entry point for web programming, this is for you. Seasoned programmers looking for small, fun, low-investment hobby projects with useful end results are also welcome to raid this post for ideas.

You will need:

The Tampermonkey browser extension to run and edit userscripts

A handful of example userscripts from greasyfork.org. Just pick a few that look nifty and install them. AO3 Savior is a solid starting point for fandom tinkering.

Your browser dev tools. Hit F12 or right click > Inspect Element to find the stuff on the page you want to tweak and experiment with it. Move over to the Console tab once you’ve got code to test out and debug.

Javascript references and tutorials. W3Schools has loads of both. Mozilla’s JS documentation is top-notch, and I often just keep their reference lists of built-in String and Array functions open in tabs as I code. StackOverflow is useful for questions, but don’t assume the code snippets you find there are always reliable or copypastable.

That’s it. No development environment. No installing node.js or Ruby or Java or two different versions of Python. No build tools, no dependency management, no fucking Docker containers. No command line, even. Just a browser extension, the browser’s built-in dev tools, and reference material. Let’s go.

You might also want:

jQuery and its documentation. If you’re wrestling with a mess of generic spans and divs and sparse, unhelpful use of classes, jQuery selectors are your best bet for finding the element you want before you snap and go on a murderous rampage. jQuery also happens to be the most ubiquitous JS library out there, the essential Swiss army knife for working with Javascript’s... quirks, so experience with it is useful. It gets a bad rap because trying to build a whole house with a Swiss army knife is a fool’s errand, but it’s excellent for the stuff we're about to do.

Git or other source control, if you’ve already got it set up. By all means share your work on Github. Greasy Fork can publish a userscript from a Github repo. It can also publish a userscript from an uploaded text file or some code you pasted into the upload form, so don’t stress about it if you’re using a more informal process.

A text editor. Yes, seriously, this is optional. It’s a question of whether you’d rather code everything right there in Tampermonkey’s live editor, or keep a separate copy to paste into Tampermonkey’s live editor for testing. Are you feeling lucky, punk?

Project #1: Hack on an existing userscript

Install some nifty-looking scripts for websites you visit regularly. Use them. Ponder small additions that would make them even niftier. Take a look at their code in the Tampermonkey editor. (Dashboard > click on the script name.) Try to figure out what each bit is doing.

Then change something, hit save, and refresh the page.

Break it. Make it select the wrong element on the page to modify. Make it blow up with a huge pile of console errors. Add a console.log("I’m a teapot"); in the middle of a loop so it prints fifty times. Savor your power to make the background wizardry of the internet do incredibly dumb shit.

Then try a small improvement. It will probably break again. That's why you've got the live editor and the console, baby--poke it, prod it, and make it log everything it's doing until you've made it work.

Suggested bells and whistles to make the already-excellent AO3 Savior script even fancier:

Enable wildcards on a field that currently requires an exact match. Surely there’s at least one song lyric or Richard Siken quote you never want to see in any part of a fic title ever again, right?

Add some text to the placeholder message. Give it a pretty background color. Change the amount of space it takes up on the page.

Blacklist any work with more than 10 fandoms listed. Then add a line to the AO3 Savior Config script to make the number customizable.

Add a global blacklist of terms that will get a work hidden no matter what field they're in.

Add a list of blacklisted tag combinations. Like "I'm okay with some coffee shop AUs, but the ones that are also tagged as fluff don't interest me, please hide them." Or "Character A/Character B is cute but I don't want to read PWP about them."

Anything else you think of!
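
AO3 Savior's real data structures and config look different from this, so treat it as a standalone sketch, not a patch. It checks a hypothetical `work` object (title, summary, fandoms, tags) against a hypothetical config. The config covers four of the ideas above: wildcard title matches, a fandom limit, global terms, and tag combinations.

```javascript
// Turn a wildcard pattern like "*heart is a*" into a case-insensitive RegExp.
// Regex metacharacters are escaped first so only "*" acts as a wildcard.
function wildcardToRegExp(pattern) {
  const escaped = pattern.replace(/[.+?^${}()|[\]\\]/g, "\\$&");
  return new RegExp("^" + escaped.replace(/\*/g, ".*") + "$", "i");
}

// Return a short reason string if the work should be hidden, or null to show it.
function hideReason(work, config) {
  for (const pattern of config.titleWildcards) {
    if (wildcardToRegExp(pattern).test(work.title)) return `title matches "${pattern}"`;
  }
  if (work.fandoms.length > config.maxFandoms) {
    return `more than ${config.maxFandoms} fandoms`;
  }
  // Global terms: search every field at once.
  const everything = [work.title, work.summary, ...work.fandoms, ...work.tags]
    .join("\n")
    .toLowerCase();
  for (const term of config.globalTerms) {
    if (everything.includes(term.toLowerCase())) return `contains "${term}"`;
  }
  // Tag combinations: hide only when ALL tags in a combo are present.
  const tags = new Set(work.tags.map((t) => t.toLowerCase()));
  for (const combo of config.tagCombos) {
    if (combo.every((t) => tags.has(t.toLowerCase()))) return `tagged ${combo.join(" + ")}`;
  }
  return null;
}

// Hypothetical settings; in a real script these would live in the config script.
const config = {
  titleWildcards: ["*heart is a*"],
  maxFandoms: 10,
  globalTerms: ["kidfic"],
  tagCombos: [
    ["Coffee Shop AU", "Fluff"],
    ["Character A/Character B", "Porn Without Plot"],
  ],
};

const example = {
  title: "Latte Art",
  summary: "Two baristas, one espresso machine.",
  fandoms: ["Some Fandom"],
  tags: ["Coffee Shop AU", "Fluff"],
};
console.log(hideReason(example, config)); // tagged Coffee Shop AU + Fluff
```

Returning a reason string instead of a bare true/false costs nothing. It gives you something to show in the placeholder message and to log while debugging.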

Project #2: Good Artists Borrow, Great Artists Fork (DIY blacklisting)

Looking at existing scripts as a model for the boilerplate you'll need, create a script that runs on a site you use regularly that doesn't already have a blacklisting/filtering feature. If you can't think of one, Dreamwidth comments make a good guinea pig. (There's a blacklist script for them out there, but reinventing wheels for fun is how you learn, right? ...right?) Create a simple blacklisting script of your own for that site.

Start small for the site-specific HTML wrangling. Take an array of blacklisted keywords and log any chunk of post/comment text that contains one of them.

Then try to make the post/comment it belongs to disappear.

Then add a placeholder.

Then get fancy with whitelists and matching metadata like usernames/titles/tags as well.
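
Put together, those steps might look something like this sketch. The `comment` shape (`author`, `subject`, `body`) and the rule lists are hypothetical; filling them in from a real page is the site-specific part. The whitelist is checked first, so a trusted username always wins.

```javascript
// Hypothetical rules: swap in real usernames and terms.
const RULES = {
  whitelistAuthors: ["my_friend"],  // never hidden, even if they trip a term
  blacklistAuthors: ["some_troll"], // always hidden
  blacklistTerms: ["spoilers"],     // hidden if the subject or body contains one
};

// Decide whether to hide one comment. Returns a reason string, or null to show it.
function hideDecision(comment, rules) {
  const author = comment.author.toLowerCase();
  const sameName = (name) => name.toLowerCase() === author;
  if (rules.whitelistAuthors.some(sameName)) return null; // whitelist wins
  if (rules.blacklistAuthors.some(sameName)) return `author ${comment.author}`;
  const text = `${comment.subject}\n${comment.body}`.toLowerCase();
  const term = rules.blacklistTerms.find((t) => text.includes(t.toLowerCase()));
  return term ? `contains "${term}"` : null;
}

// Hide a comment element and put a click-to-reveal placeholder in front of it.
function replaceWithPlaceholder(el, reason) {
  const placeholder = document.createElement("div");
  placeholder.textContent = `Comment hidden (${reason}). Click to show.`;
  placeholder.style.cssText = "padding: 4px; background: #eee; cursor: pointer;";
  placeholder.addEventListener("click", () => {
    el.style.display = "";
    placeholder.remove();
  });
  el.style.display = "none";
  el.before(placeholder);
}
```

In the browser, the wiring has three parts. Build a `comment` object for each comment element. While debugging, `console.log` whatever `hideDecision` returns. Pass any non-null reason to `replaceWithPlaceholder(commentElement, reason)`. Plain substring matching will also flag "art" inside "party". Once the logging looks right, a word-boundary regex is a natural upgrade.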

Crib from existing blacklist scripts like AO3 Savior as shamelessly as you feel the need to. If you publish the resulting userscript for others to install (which you should, if it fills an unmet need!), please comment up any substantial chunks of copypasted or closely-reproduced code with credit/a link to the original. If your script basically is the original with some key changes, like our extra-fancy AO3 Savior above, see if there’s a public Git repo you can fork.

Project #3: Make the dread Tumblr beast do a thing

Create a small script that runs on the Tumblr dashboard. Make it find all the posts on the page and log their IDs. Then log whether they're originals or reblogs. Then add a fancy border to the originals. Then add a different fancy border to your own posts. All of this data should be right there in the post HTML, so no need to derive it by looking for "x reblogged y" or source links or whatever--just make liberal use of Inspect Element and the post's data- attributes.
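
A sketch of the classification step. The attribute names (`data-id`, `data-root-id`, `data-tumblelog-name`) are hypothetical stand-ins, and Tumblr's dashboard markup changes often, so look up the real ones with Inspect Element. Reading them through `element.dataset` converts dashed names to camelCase (`data-root-id` becomes `dataset.rootId`).

```javascript
// Classify one post from its data- attributes (hypothetical names; see above).
function classifyPost(data, myBlogName) {
  if (data.tumblelogName === myBlogName) return "own"; // your posts win, original or not
  // Assumption: a post with no root ID, or whose root ID is its own ID, is an original.
  if (data.rootId === undefined || data.rootId === data.id) return "original";
  return "reblog";
}

const BORDERS = {
  own: "3px solid hotpink",
  original: "3px dashed gold",
  reblog: "", // leave reblogs alone
};

if (typeof document !== "undefined") {
  const MY_BLOG = "your-blog-name"; // hypothetical: your own blog's name
  for (const post of document.querySelectorAll("[data-id]")) {
    const kind = classifyPost(post.dataset, MY_BLOG);
    console.log(post.dataset.id, kind);
    post.style.border = BORDERS[kind];
  }
}
```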

Extra credit: Explore the wildly variable messes that Tumblr's API spews out, and try to recreate XKit's timestamps feature with jQuery AJAX calls. (Post timestamps are one of the few reliable API data points.) Get a zillion bright ideas about what else you could do with the API data. Go through more actual post data to catalogue all the inconsistencies you’d have to catch. Cry as Tumblr kills the dream you dreamed.
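
A starting point for the timestamps idea, with a few assumptions to flag:

- It uses the browser's built-in `fetch` instead of jQuery AJAX.
- The endpoint shape (`/v2/blog/{blog}/posts` with `id` and `api_key` query parameters) comes from Tumblr's v2 API documentation, so verify it before building on it.
- The `response.posts[0].timestamp` field also comes from those docs and needs the same check.
- You need your own API key, which you get by registering an app with Tumblr.

```javascript
// Build the lookup URL for a single post. The endpoint and parameter names are
// assumptions based on Tumblr's v2 API docs; check them before relying on this.
function postLookupUrl(blogName, postId, apiKey) {
  const params = new URLSearchParams({ id: postId, api_key: apiKey });
  return `https://api.tumblr.com/v2/blog/${blogName}.tumblr.com/posts?${params}`;
}

// The API reports Unix timestamps in seconds; JavaScript Dates use milliseconds.
function formatTimestamp(unixSeconds) {
  const iso = new Date(unixSeconds * 1000).toISOString(); // e.g. "1970-01-01T00:00:00.000Z"
  return iso.slice(0, 10) + " " + iso.slice(11, 16) + " UTC";
}

// Fetch one post and return its formatted timestamp, or null if none came back.
async function fetchTimestamp(blogName, postId, apiKey) {
  const res = await fetch(postLookupUrl(blogName, postId, apiKey));
  if (!res.ok) throw new Error(`Tumblr API returned HTTP ${res.status}`);
  const body = await res.json();
  // Be defensive: don't assume the response has the shape you expect.
  const post = body && body.response && Array.isArray(body.response.posts)
    ? body.response.posts[0]
    : undefined;
  return post && typeof post.timestamp === "number" ? formatTimestamp(post.timestamp) : null;
}
```

Usage would be something like `fetchTimestamp("staff", "123456789", "YOUR_API_KEY").then(console.log)`, with a real post ID and key. The browser may block the cross-origin request. If it does, Tampermonkey's `GM_xmlhttpRequest` (enabled with a `@grant` line in the script header) is the usual workaround.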

Project #4: Make the dread Tumblr beast FIX a thing

Create a script that runs on individual Tumblr blogs (subdomains of tumblr.com). Browse some blogs with various themes until you've found a post with the upside-down reblog-chain bug and a post with reblogs displaying normally. Note the HTML differences between them. Make the script detect and highlight upside-down stacks of blockquotes. Then see if you can make it extract the blockquotes and reassemble them in the correct order. At this point you may be mobbed by friends and acquaintances who want a fix for this fucking bug, which you can take as an opportunity to bury any lingering doubts about the usefulness of your scripting adventures.
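
The reordering step can be separated from the HTML wrangling. This sketch rests on two hypothetical inputs. First, you've already pulled each reblog entry out of its blockquote, in the order the entries appear on the page. Second, you know the original poster's blog name, for example from the post's source link. The detection rule is a simple heuristic of my own, not how Tumblr defines the bug: the original poster at the bottom of the stack instead of the top means it's upside down.

```javascript
// Each entry is { blog, html }, listed top-to-bottom as displayed on the page.
function fixChainOrder(entries, originalPoster) {
  if (entries.length < 2) return { entries, wasUpsideDown: false };
  const top = entries[0].blog;
  const bottom = entries[entries.length - 1].blog;
  // Heuristic: a correct chain starts with the original post, so if the original
  // poster is at the bottom and not the top, the stack is upside down.
  const upsideDown = top !== originalPoster && bottom === originalPoster;
  return {
    entries: upsideDown ? [...entries].reverse() : entries, // copy; never mutate the input
    wasUpsideDown: upsideDown,
  };
}

const displayed = [
  { blog: "third-reblogger", html: "<p>lmao</p>" },
  { blog: "second-reblogger", html: "<p>same</p>" },
  { blog: "op", html: "<p>original post</p>" },
];
const fixed = fixChainOrder(displayed, "op");
console.log(fixed.wasUpsideDown, fixed.entries.map((e) => e.blog).join(" > "));
// true op > second-reblogger > third-reblogger
```

One known gap: if the original poster also reblogged their own post further down, the heuristic sees them at the top and leaves the chain alone.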

(Note: Upside-down reblogs are the bug du jour as of September 2019. If you stumble upon this post later, please substitute whatever the latest Tumblr fuckery is that you'd like to fix.)

Project #5: Regular expressions are a hard limit

I mentioned up above that Dreamwidth comments are good guinea pigs for user scripting? You know what that means. Kinkmemes. Anon memes too, but kinkmemes (appropriately enough) offer so many opportunities for coding masochism. So here's a little exercise in sadism on my part, for anyone who wants to have fun (or "fun") with regular expressions:

Write a userscript that highlights all the prompts on any given page of a kinkmeme that have been filled.

Specifically, scan all the comment subject lines on the page for anything that looks like the title of a kinkmeme fill, and if you find one, highlight the prompt at the top of its thread. The nice ones will start with "FILL:" or end with "part 1/?" or "3/3 COMPLETE." The less nice ones will be more like "(former) minifill [37a / 50(?)] still haven't thought of a name for this thing" or "title that's just the subject line of the original prompt, Chapter 3." Your job is to catch as many of the weird ones as you can using regular expressions, while keeping false positives to a minimum.
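
For a sense of scale, here's a first pass that covers only the example subject lines above. Every pattern is a guess at a convention, and each one trades missed fills against false positives. The fraction pattern, for instance, will also flag a prompt that mentions "24/7".

```javascript
// Each pattern targets one family of fill-title conventions.
// None of them use the g flag: RegExp.test() with /g remembers lastIndex between
// calls, which causes confusing misses when one regex is reused across many subjects.
const FILL_PATTERNS = [
  /\b(?:mini-?)?fill\b/i,                  // "FILL:", "minifill", "mini-fill"
  /\b(?:part|pt\.?|chapter|ch\.?)\s*\d+/i, // "part 1", "Pt. 2", "Chapter 3"
  /\d+[a-z]?\s*\/\s*(?:\d+|\?)/i,          // "1/?", "3/3", "37a / 50"
  /\bcompleted?\b/i,                       // "3/3 COMPLETE"
];

function looksLikeFill(subject) {
  return FILL_PATTERNS.some((re) => re.test(subject));
}

const subjects = [
  "FILL: The Long Way Home",
  "title part 1/?",
  "3/3 COMPLETE",
  "(former) minifill [37a / 50(?)] still haven't thought of a name for this thing",
  "title that's just the subject line of the original prompt, Chapter 3",
  "A/B, hurt/comfort, first kiss", // a prompt, not a fill
];
for (const s of subjects) console.log(looksLikeFill(s) ? "FILL  " : "prompt", s);
```

The `\b` word boundaries keep "refill" from counting as a fill. Spelled-out numbers like "part one" are still missed, which is a good next pattern to add.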

Test it out on a real live kinkmeme, especially one without strict subject-line-formatting policies. I guarantee you, you will be delighted at some of the arcane shit your script manages to catch. And probably astonished at some of the arcane shit you never thought to look for because who the hell would even format a kinkmeme fill like that? Truly, freeform user input is a wonderful and terrible thing.

If that's not enough masochism for you, you could always try to make the script work on LiveJournal kinkmemes too!

64 notes

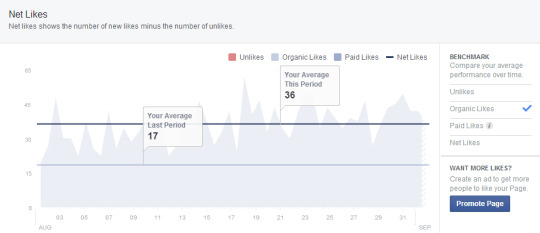

I think this is a generational thing compounded by a flakyass brain, but I chronically have trouble grasping the basic marketing concept of “make it really easy and mindless for people to find you in .09 seconds because most will never bother otherwise.”

Hear me out because this isn’t empty whining and it isn’t even necessarily the “I hate self promo” thing.

I grew up using the internet when HTML was still common, most current social media didn’t exist yet, videos were still a fairly rare novelty, and people treated search engines more as research tools than trivia/entertainment hubs. I don’t claim to be a particularly smart researcher. I still haven’t even memorized those handy search engine shorthand typing hacks beyond the “-” to exclude words. But given my tools at the time, I was just generally used to needing more time and brain cells devising keywords and so forth whenever I wanted to look for something.

Yes, the organized or clever tended to link stuff, and it was lovely when people bothered. But in general, it wasn’t the bare minimum norm. How much of an online presence someone had also varied much more - people didn’t automatically have accounts/pages everywhere to cover all their bases, because the place where you posted cat pics typically wasn’t the same place where you shared art or publicly archived fic. If you only did one of those things, there...really wasn’t a reason to maintain 6 other accounts/pages/IDs by default.

These were also the days of Geocities and the like, when people tolerated a barrage of popups for a chance at their own personal corner to revel in hobbies, journaling, text tutorials, or a small business. Sure, there were forums, and they were a good first step towards visible hubs, but people didn’t automatically plug their sites/work/contact info. In a word, things were less centralized - but a fair amount of people figured out how to deal with it anyway, because there wasn’t really another way yet.

For whatever reason, it’s been hard for me to switch gears out of that mode, even now that I’ve done it for a few years. I’ll forget the best hashtags for a post, or I’ll entirely leave out a link that I meant to include, or I don’t quite check that a user tag worked before I click post/send. I try to make templates, but since these can be wildly different depending on what I’m doing, it’s not that straightforward.

Various platforms DID exist by the time I was at uni, but I legitimately didn’t care at that stage, so I never got into the habit. None of the people I regularly talked to used it yet and some still haven’t. My profs didn’t utilize it - if you wanted to get creative and do a paper about such things, that was fine, but there was exactly zero mandatory participation within those platforms.

There was no concrete reason for me to use it, so I ignored it for as long as I could. I was more interested in making shit than sharing it, and I know I’m not the only one.

Yet all of this is almost enough of a world to merit its own college course, and not just for marketing majors or MBA hopefuls. Basically anyone trying to go into business for themselves, network in fandoms, or otherwise connect to people online eventually needs to learn this stuff, because that’s just what today’s standard is. I think it’s starting to be addressed as such these days, but it wasn't when I was in school.

Conversely, my 13-year-old niece is...let’s just say much more prone to using this stuff. She doesn’t know everything, but she knows enough that she’s baffled that I don’t know it because a) it’s always been the norm for her and b) it’s not like there’s 45 years of age difference between us, so expecting me to know what she knows isn’t the most outlandish thought someone could have.

TL;DR the concept that millennials are automatically fluent in all social media is a grievously unfair assumption and it’s not just the boomers making that assumption.

2 notes

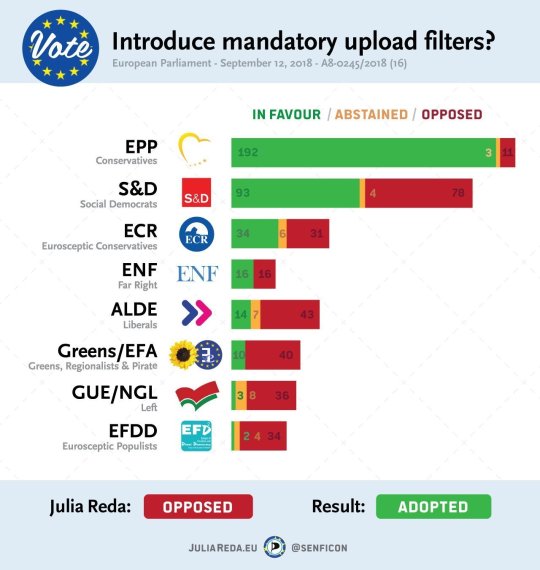

#1yrago Europe just voted to wreck the internet, spying on everything and censoring vast swathes of our communications

Lobbyists for "creators" threw their lot in with the giant entertainment companies and the newspaper proprietors and managed to pass the new EU Copyright Directive by a hair's-breadth this morning, in an act of colossal malpractice whose harm to working artists will only be exceeded by the harm to everyone who uses the internet for everything else.

Here's what the EU voted in favour of this morning:

* Upload filters: Everything you post, from short text snippets to stills, audio, video, code, etc will be surveilled by copyright bots run by the big platforms. They'll compare your posts to databases of "copyrighted works" that will be compiled by allowing anyone to claim copyright on anything, uploading thousands of works at a time. Anything that appears to match the "copyright database" is blocked on sight, and you have to beg the platform's human moderators to review your case to get your work reinstated.

* Link taxes: You can't link to a news story if your link text includes more than a single word from the article's headline. The platform you're using has to buy a license from the news site, and news sites can refuse licenses, giving them the right to choose who can criticise and debate the news.

* Sports monopolies: You can't post any photos or videos from sports events -- not a selfie, not a short snippet of a great goal. Only the "organisers" of events have that right. Upload filters will block any attempt to violate the rule.

Here's what they voted against:

* "Right of panorama": the right to post photos of public places despite the presence of copyrighted works like stock art in advertisements, public statuary, or t-shirts bearing copyrighted images. Even the facades of buildings need to be cleared with their architects (not with the owners of the buildings).

* User generated content exemption: the right to use small excerpts from works to make memes and other critical/transformative/parodical/satirical works.

Having passed the EU Parliament, this will now be revised in secret, closed-door meetings with national governments ("the trilogues") and then voted again next spring, and then go to the national governments for implementation in law before 2021. These all represent chances to revise the law, but they will be much harder than this fight was. We can also expect lawsuits in the European high courts over these rules: spying on everyone just isn't legal under European law, even if you're doing it to "defend copyright."

In the meantime, what a disaster for creators. Not only will we be liable to having our independently produced materials arbitrarily censored by overactive filters, but we won't be able to get them unstuck without the help of big entertainment companies. These companies will not be gentle in wielding their new coercive power over us (entertainment revenues are up, but the share going to creators is down: if you think this is unrelated to the fact that there are only four or five major companies in each entertainment sector, you understand nothing about economics).

But of course, only an infinitesimal fraction of the material on the platforms is entertainment related. Your birthday wishes and funeral announcements, little league pictures and political arguments, wedding videos and online educational materials are also going to be filtered by these black-box algorithms, and you're going to have to get in line with all the other suckers for attention from a human moderator at one of the platforms to plead your case.

The entertainment industry figures who said that universal surveillance and algorithmic censorship were necessary for the continuation of copyright have done more to discredit copyright than all the pirate sites on the internet combined. People like their TV, but they use their internet for so much more.

It's like the right-wing politicians who spent 40 years describing roads, firefighting, health care, education and Social Security as "socialism," and thereby created a generation of people who don't understand why they wouldn't be socialists, then. The copyright extremists have told us that internet freedom is the same thing as piracy. A generation of proud, self-identified pirates can't be far behind. When you make copyright infringement into a political act, a blow for freedom, you sign your own artistic death-warrant.

This idiocy was only possible because:

* No one involved understands the internet: they assume that because their Facebook photos auto-tag with their friends' names, someone can filter all the photos ever taken and determine which ones violate copyright;

* They tied mass surveillance to transferring a few mil from Big Tech to the newspaper shareholders, guaranteeing wall-to-wall positive coverage (I'm especially ashamed that journalists supported this lunacy -- we know you love free expression, folks, we just wish you'd share).

What comes next? Well, the best hope is probably a combination of a court challenge, along with making this an election issue for the 2019 EU elections. No MEP is going to campaign for re-election by saying "I did this amazing copyright thing!" From experience, I can tell you that no one cares what their lawmakers are doing with copyright.

On the other hand, there are tens of millions of voters who will vote against a candidate who "broke the internet." Not breaking the internet is very important to voters, and the wider populace has proven itself to be very good at absorbing abstract technical concepts when they're tied to broken internets (87% of Americans have a) heard of Net Neutrality and b) support it).

I was once involved in a big policy fight where one of the stakes was the possibility that broadcast TV watchers would have to buy a small device to continue watching TV. Politicians were terrified of this proposition: they knew that the same old people who vote like crazy also watch a lot of TV and wouldn't look favourably on anyone who messed with it.

We're approaching that point with the internet. The danger of internet regulation is that every problem involves the internet and every poorly thought-through "solution" ripples out through the internet, creating mass collateral damage; the power of internet regulation is that every day, more people are invested in not breaking the internet, for their own concrete, personal, vital reasons.

This isn't a fight we'll ever win. The internet is the nervous system of this century, tying together everything we do. It's an irresistible target for bullies, censors and well-intentioned fools. Even if the EU had voted the other way this morning, we'd still be fighting tomorrow, because there will never be a moment at which some half-bright, fully dangerous policy entrepreneur isn't proposing some absurd way of solving their parochial problem with a solution that will adversely affect billions of internet users around the world.

This is a fight we commit ourselves to. Today, we suffered a terrible, crushing blow. Our next move is to explain to the people who suffer as a result of the entertainment industry's depraved indifference to the consequences of their stupid ideas how they got into this situation, and get them into the streets, into the polling booths, and into the fight.

https://boingboing.net/2018/09/12/vichy-nerds-2.html

9 notes

Tips for Designing a Website on a Budget

Is creating an excellent website important to you? Do you feel like you need help figuring out the process to get what you want? That process is most often known as web design, and without attractive web design, your website will not draw visitors. No need to worry: this article offers some great web design tips.

When designing webpages, it is important to use the right graphics. Few people use bitmap (BMP) graphics anymore, because the files are large and slow to load. Use GIF, PNG, or JPEG files instead: GIF for images with 256 colors or fewer, PNG for text buttons and screenshots, and JPEG for photographs.

Put your website through the NoScript test. Install the NoScript extension in Firefox and check whether your site is still readable with scripts blocked. Some features (e.g. ordering products) won't work without scripts, but if your whole site shows up blank, visitors who block scripts won't see anything at all.

Make user cancellations easy. An action can involve filling out forms, signing up for email notifications or newsletters, or browsing the site for various topics or archives. If you force users to complete an action they don't want to, they will never sign up for anything on your site again, and they probably won't return at all.

Educate yourself about shortcuts, then make a habit of using them. Most web design tools have shortcuts you can use, and you could even edit the HTML by hand to make super-fast changes.

Include photographs to make your site look professional. Photos can give your site a lot of personality. When visitors see that you spent time putting your site together, they'll look forward to clicking on your next picture.

Use ALT tags for your images. These tags describe images for people with visual impairments or people who disable images. If you use images as links, ALT tags also let you describe how those links behave. Search engine bots read ALT tags too, so they can help your search engine ranking.

Don't accidentally place links on your site that lead nowhere. Make sure every link contains text, so readers know what they're clicking on; links without text can be selected without the visitor realizing it.

Before you design a full website, start small. Build a few smaller sites so you can see which areas you handle well and which need more work. Start with a few pages that contain just basic information and some simple text, then assess how it works for you.

When choosing a hosting service, make sure you understand everything the package includes. Disk space, bandwidth, and CPU usage are some of the things you need information about. Never buy a hosting package without understanding everything that's included.

Spend some time and money on books that will help you learn web design. Buy books that match your skill level. You do want to raise your skill level, but since web design skills build on each other, skipping the basics can cause problems.

Are you ready to make a great site? Are you familiar with what is involved in making a good website? Are you now more aware of what it takes to execute great web design? Can you use these tips to make a successful design? Yes, so go do it!

1 note

5 Ways to Revamp Your Google Ranking Using SEO

You may have seen it time and time again, your competitors have a stronger online presence than you. You begin to wonder what they are doing right that you’re not. It’s no secret that Google ranks websites based on their relevance to the keywords typed in by users. If your site is not up to par, then you are likely going to struggle to improve your ranking. The good news is that with a little bit of research and testing, you can revamp your SEO strategy and see your ranking rise accordingly.

Here are 5 ideas from BLeap Digital Marketing Agency to help you revamp your Google ranking using SEO:

Change Up Your Content Strategy

According to BLeap, the content of your site is the most critical aspect of SEO. If your content is not up to par, nothing else will matter. Your content should be easy to read and address issues that your target audience is facing. If your content is stuffed with keywords, Google will likely flag it as spam, and the same goes for thin, fluffy content padded out with keywords. Finding the right balance of keywords is key to improving your Google ranking. To revamp your content strategy, ask yourself these questions:

- What are my customers looking for?
- Where are they looking?
- How can I provide them with what they want?
- How can I provide them with what they need?

Make Small Layout Changes

Google looks at your site’s layout to figure out how the site is structured. Small layout changes, like adding an image to your homepage or adding more pages, can help Google understand more about your website.

- Add an image to your homepage: Visitors are more likely to stay on a website with an image. An image can help you rank for related keywords and can make it much easier for Google to understand your page’s layout.
- Add more pages: More pages give you room for more content. This will not only help you rank for more keywords but will also help you rank higher for your existing keywords.
- Revise your internal linking structure: Rearranging the links between your own pages can help Google understand more about your site.
- Add more links to your site: Adding more links to your site can help Google understand more about your links.

Update Your HTML Structure

The HTML structure of your site is what Google uses to understand your keywords. Improving your HTML structure helps Google understand your site much better, and simply adding a few new keywords can help you rank higher for a variety of keywords.

- Bold more of your keywords: Adding more keywords to your bolded text can help Google better understand your site.
- Add keywords to your H1, H2, H3, and H4 tags: Keywords in your headings can help Google better understand your page.
- Use synonyms or variants of keywords: These can help Google better understand your content.
- Use keywords in your alt text: Keywords in alt text can help Google better understand your images.
- Use keywords in your URL: Keywords in your URL can help Google better understand your page.
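
For the last point, the usual approach is a "slug": the page title lowercased, with anything other than letters and digits collapsed into hyphens. A minimal JavaScript sketch follows. Its accent-stripping step assumes Latin-script titles; letters from other scripts would be dropped entirely.

```javascript
// Turn a page title into a URL slug that keeps its keywords readable.
function slugify(title) {
  return title
    .toLowerCase()
    .normalize("NFKD")               // split accented letters into letter + accent mark
    .replace(/[\u0300-\u036f]/g, "") // drop the accent marks
    .replace(/[^a-z0-9]+/g, "-")     // collapse everything else into single hyphens
    .replace(/^-+|-+$/g, "");        // trim hyphens from both ends
}

console.log(slugify("5 Ways to Revamp Your Google Ranking Using SEO"));
// 5-ways-to-revamp-your-google-ranking-using-seo
```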

Add Quality Backlinks

Backlinks are links from other websites to yours. They help Google understand more about your site and help you rank higher for your keywords, and they can come from a wide variety of sources. Ways to build them include:

- Create more blog posts: More useful posts give other sites more reasons to link to you.
- Add more content to your website: A larger body of content attracts more links over time.
- Write guest posts: Publishing on other sites usually earns a link back to yours.
- Link to other sites: Linking out to relevant sources helps Google place your site in context.
- Earn more mentions on social media: Social shares increase your visibility and the chances of others linking to you.
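Backlinks "come from a wide variety of sources," so SEO tools often count distinct referring domains as well as raw links. This is a minimal sketch. It assumes you have a list of backlink URLs exported from some tool, and the URLs below are invented. It groups links by hostname and treats a leading `www.` as the same host, which is a simplification.

```python
from collections import Counter
from urllib.parse import urlsplit

def referring_domains(backlinks: list[str]) -> Counter:
    """Count backlinks per referring hostname, ignoring a leading 'www.'."""
    counts = Counter()
    for url in backlinks:
        host = urlsplit(url).hostname or ""  # hostname is already lowercased
        if host.startswith("www."):
            host = host[4:]
        if host:
            counts[host] += 1
    return counts

links = [
    "https://www.example-blog.com/review",
    "https://example-blog.com/another-post",
    "https://news.example.org/story",
    "http://forum.example.net/thread/42",
]
domains = referring_domains(links)
print(len(domains), domains.most_common(1))  # → 3 [('example-blog.com', 2)]
```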

Showcase Your Brand’s Expertise

Showcasing your brand’s expertise helps Google understand your site and can help you rank higher for your keywords. Ways to do this include:

- Create more content that demonstrates what you know.
- Add more images.
- Add more videos.
- Add more infographics.
- Add more graphs.

Conclusion

By implementing these 5 ways to revamp your Google ranking with SEO, you can help your website rank higher for a variety of keywords and bring more customers to your site. There is no quick fix in SEO: it takes time, patience, and lots of testing. However, by applying these five techniques, you can start working toward a better ranking right away.

This article was written by

BLeap Digital - Affordable SEO Services in Bangalore

Version 330

youtube · windows: zip, exe · os x: app, tar.gz · linux: tar.gz · source: tar.gz

I had a great week. There are some more login scripts and a bit of cleanup and speed-up.

The poll for what big thing I will work on next is up! Here are the poll + discussion thread:

https://www.poll-maker.com/poll2148452x73e94E02-60

https://8ch.net/hydrus/res/10654.html

login stuff

The new 'manage logins' dialog is easier to work with. It now shows when it thinks a login will expire, permits you to enter 'empty' credentials if you want to reset/clear a domain, and has a 'scrub invalid' button to reset a login that fails due to server error or similar.

After tweaking for the problem I discovered last week, I was able to write a login script for hentai foundry that uses username and pass. It should inherit the filter settings in your user profile, so you can now easily exclude the things you don't like! (the click-through login, which hydrus has been doing for ages, sets the filters to allow everything every time it works) Just go into manage logins, change the login script for www.hentai-foundry.com to the new login script, and put in some (throwaway) credentials, and you should be good to go.

I am also rolling out login scripts for shimmie, sankaku, and e-hentai, thanks to Cuddlebear (and possibly other users) on the github (which, reminder, is here: https://github.com/CuddleBear92/Hydrus-Presets-and-Scripts/tree/master/Download%20System ).

Pixiv seem to be changing some of their login rules, as many NSFW images now work for a logged-out hydrus client. The pixiv parser handles 'you need to be logged in' failures more gracefully, but I am not sure if that even happens any more! In any case, if you discover some class of pixiv URLs are giving you 'ignored' results because you are not logged in, please let me know the details.

Also, the Deviant Art parser can now fetch a sometimes-there larger version of images and only pulls from the download button (which is the 'true' best, when it is available) if it looks like an image. It should no longer download 140MB zips of brushes!

other stuff

Some kinds of tag searches (usually those on clients with large inboxes) should now be much faster!

Repository processing should also be faster, although I am interested in how it goes for different users. If you are on an HDD or have otherwise seen slow tag rows/s, please let me know if you notice a difference this week, for better or worse. The new system essentially opens the 'new tags m8' firehose pretty wide, but if that pressure is a problem for some people, I'll give it a more adaptable nozzle.
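The "more adaptable nozzle" idea can be sketched as adjusting the chunk size based on how long the last chunk took. This is a hypothetical illustration, not hydrus's actual code. The `next_chunk_size` and `process_rows` names, the 1-second target, and the 2x step limit are assumptions. The 1k and 50k bounds echo the old and new chunk sizes in the full list.

```python
import time

def next_chunk_size(current: int, elapsed: float, target: float = 1.0,
                    lo: int = 1_000, hi: int = 50_000) -> int:
    """Scale the chunk size so the next chunk takes roughly `target` seconds."""
    if elapsed <= 0:
        return hi
    scaled = int(current * target / elapsed)
    # Never change by more than 2x per step, and stay within [lo, hi].
    scaled = max(current // 2, min(current * 2, scaled))
    return max(lo, min(hi, scaled))

def process_rows(rows, handle_chunk, size: int = 1_000) -> None:
    """Feed `rows` to `handle_chunk` in adaptively sized chunks."""
    i = 0
    while i < len(rows):
        chunk = rows[i:i + size]
        start = time.perf_counter()
        handle_chunk(chunk)
        size = next_chunk_size(size, time.perf_counter() - start)
        i += len(chunk)
```

A fast drive pushes the chunk size up toward the ceiling, and a slow one that takes several seconds per chunk pulls it back down.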

Many of the various 'select from a list of texts' dialogs across the program will now size themselves bigger if they can. This means, for example, that the gallery selector should now show everything in one go! The manage import/export folder dialogs are also moved to the new panel system, so if you have had trouble with these and a small screen, let me know how it looks for you now.

The duplicate filter page now has a button to edit your various duplicate merge options. The small button on the viewer was too-easily missed, so this should make it a bit easier!

full list

login:

added a proper username/password login script for hentai foundry--double-check your hf filters are set how you want in your profile, and your hydrus should inherit the same rules

fixed the gelbooru login script from last week, which typoed safebooru.com instead of .org

fixed the pixiv login 'link' to correctly say nsfw rather than everything, which wasn't going through right last week

improved the pixiv file page api parser to veto on 'could not access nsfw due to not logged in' status, although in further testing, this state seems to be rarer than previously/completely gone

added login scripts from the github for shimmie, sankaku, and e-hentai--thanks to Cuddlebear and any other users who helped put these together

added safebooru.donmai.us to danbooru login

improved the deviant art file page parser to get the 'full' embedded image link at higher preference than the standard embed, and only get the 'download' button if it looks like an image (hence, deviant art should stop getting 140MB brush zips!)

the manage logins panel now says when a login is expected to expire

the manage logins dialog now has a 'scrub invalidity' button to 'try again' a login that broke due to server error or similar

entering blank/invalid credentials is now permitted in the manage logins panel, and if entered on an 'active' domain, it will additionally deactivate it automatically

the manage logins panel is better at figuring out and updating validity after changes

the 'required cookies' in login scripts and steps now use string match names! hence, dynamically named cookies can now be checked! all existing checks are updated to fixed-string string matches

improved some cookie lookup code

improved some login manager script-updating code

deleted all the old legacy login code

misc login ui cleanup and fixes
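The 'required cookies' change means a login check can match cookie names against a pattern instead of one exact string. Here is a rough Python illustration of the idea using regular expressions. hydrus's own string match objects are configured in its UI, and the `missing_required` function and cookie names here are hypothetical. A fixed-string check corresponds to a pattern built with `re.escape(name)`.

```python
import re

def missing_required(cookie_names: set[str], required: list[str]) -> list[str]:
    """Return the required patterns that no cookie name fully matches."""
    return [pattern for pattern in required
            if not any(re.fullmatch(pattern, name) for name in cookie_names)]

# A session cookie whose name changes per login, e.g. 'sess_8f3a91'.
jar = {"sess_8f3a91", "theme"}
print(missing_required(jar, [r"sess_[0-9a-f]+", re.escape("theme")]))  # → []
print(missing_required(jar, [r"auth_token"]))  # → ['auth_token']
```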

.

other:

sped up tag searches in certain situations (usually huge inbox) by using a different optimisation

increased the repository mappings processing chunk size from 1k to 50k, which greatly increases processing in certain situations. let's see how it goes for different users--I may revisit the pipeline here to make it more flexible for faster and slower hard drives

many of the 'select from a list of texts' dialogs--such as when you select a gallery to download from--are now on the new panel system. the list will grow and shrink depending on its length and available screen real estate

.

misc:

extended my new dialog panel code so it can ask a question before an OK happens

fixed an issue with scanning through videos that have non-integer frame-counts due to previous misparsing

fixed an issue where file import objects that have been removed from the list but were still lingering on the list ui were not rendering their (invalid) index correctly

when export folders fail to do their work, the error is now presented in a better way and all export folders are paused

fixed an issue where the export files dialog could not boot if the most recent export phrase was invalid

the duplicate filter page now has a button to more easily edit the default merge options

increased the sibling/parent refresh delay from 1s to 8s

hydrus repository sync fails due to network login issues or manual network user cancel will now be caught properly and a reasonable delay added

additional errors on repository sync will cause a reasonable delay on future work but still elevate the error

converted import folder management ui to the new panel system

refactored import folder ui code to ClientGUIImport.py

converted export folder management ui to the new panel system

refactored export folder ui code to the new ClientGUIExport.py

refactored manual file export ui code to ClientGUIExport.py

deleted some very old imageboard dumping management code

deleted some very old contact management code

did a little prep work for a 'show background image behind thumbs' feature, including the start of a bitmap manager. I'll give it another go later

next week

I have about eight jobs left on the login manager, which is mostly a manual 'do login now' button on manage logins and some help on how to use the system and make scripts for it. I feel good about it overall and am thankful it didn't explode completely. Beyond finishing this off, I plan to continue doing small work like ui improvement and cleanup until the 12th December, when I will take about four weeks off over the holiday to update to python 3. In the new year, I will begin work on what gets voted on in the poll.

Everything you Want to Know About Web Development

Technology plays a massive part in our daily lives, from the most basic of apps to the most groundbreaking innovations. Web developers have created every web page or website we see. But what exactly is web development, and what is a web developer's job?

This field may appear complicated, confusing, and in some ways inaccessible. To provide insight into this fascinating industry, we've put together an essential overview of web development and the steps you need to take to become an expert web developer.

This article covers the fundamentals of web development and outlines the essential skills and tools you'll need to succeed in this field. Before you commit to web development, read through it to find out whether it is the right choice for you. Once you have decided, begin learning the fundamental techniques.

What is Web Development

Web development is the work of building websites and apps for the web or for private networks. It is not primarily concerned with the appearance and layout of websites. Instead, it focuses on the programming and coding that make a website work.

From the simplest static web pages to social media platforms, and from e-commerce websites to content management systems (CMS), web developers have built all the tools we use on the internet.

Front-end Vs. Back-end development

Web development is generally divided into back-end development and front-end development. If you're considering a career in web development, you need to understand what both terms mean.

Front-end developers

Front-end developers build what users see: the user interface. The front end is built with a combination of technologies, including Hypertext Markup Language (HTML), JavaScript, and Cascading Style Sheets (CSS). Front-end developers create the components that shape the user experience on a site or application, such as menus, dropdowns, buttons, pages, and links. A full-stack developer can design and develop both the front-end and back-end components of an app.

Back-end development

Back-end developers build the infrastructure that supports the front end. The "back end" refers to the server, application, and database working behind the scenes to deliver information to the user. The back end, also known as the server side, comprises the server that provides data on request, the application that processes it, and the database that organizes the information.

Professionally, web development offers three paths. You can start out as a front-end developer or a back-end developer, or you can learn both and eventually become a full-stack developer.

Now that you understand these career paths, we can look at the specific skills you'll need to master before you start this exciting career and begin applying to web development companies.

Skills Required for Web Development

Turning a thousand lines of code into a working website is one of the most creative and complex parts of a web developer's job. If you are drawn to appealing websites and want to explore web development, you can become a web developer. You do not need an expensive software engineering education to learn the necessary skills.

HTML

HTML stands for Hypertext Markup Language, and it is one of the principal building blocks of a site. As a front-end markup language, it forms the site's foundation and describes content using tags.

CSS

CSS stands for Cascading Style Sheets. It defines the styling applied to the HTML structure and brings the look of a website to life. Without CSS, a page would look plain.

JavaScript

JavaScript lets you add interactive elements to the pages of your website. Features such as interactive maps and 2D/3D graphics are added to websites through JavaScript.

Application Programming Interface (API)

When developing websites, you will work extensively with APIs, which let your site exchange data with third-party services. APIs allow web developers to use another system's features without needing access to its code.

Authentication

You may be in charge of user authentication, which controls who can use a particular website. This could include letting users log in and out, letting them take specific actions through their accounts, or blocking certain pages for visitors who aren't registered.

The security of users' logins depends heavily on authentication, so it is essential to understand how to handle this function in your web application.
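Handling authentication safely starts with never storing plain-text passwords. This is a minimal sketch that uses Python's standard-library PBKDF2 (`hashlib.pbkdf2_hmac`) with a random salt per user. The iteration count is an assumed work factor, and the helper names are hypothetical. Real projects usually rely on their framework's auth module or a dedicated library such as bcrypt or Argon2.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # assumed work factor; tune it for your hardware

def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) to store; the password itself is never stored."""
    salt = os.urandom(16)
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, key

def verify_password(password: str, salt: bytes, key: bytes) -> bool:
    """Re-derive the key from the login attempt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return hmac.compare_digest(candidate, key)
```

At sign-up you store the salt and key. At login you call `verify_password` with what the user typed.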

Back-end and Databases

After you have mastered the front end, move on to understanding what happens in the back end. This is where the magic happens, and it is where all the data is stored.

Data is saved, altered, and retrieved from databases. Today, cloud platforms such as Azure and AWS are often used to host and manage databases. What you need to know is how to handle the data in the database well.

To do this, you'll need to know SQL (Structured Query Language) and NoSQL databases (such as MongoDB and Firebase).
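To make the SQL part concrete, here is a minimal sketch of the save, alter, and retrieve cycle using Python's built-in `sqlite3` module with an in-memory database. The `users` table is invented for illustration. Cloud databases differ in setup, but the queries follow the same idea.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL, plan TEXT)")

# Save: ? placeholders keep user input out of the SQL string itself.
conn.executemany("INSERT INTO users (name, plan) VALUES (?, ?)",
                 [("asha", "free"), ("ben", "pro"), ("chen", "free")])

# Alter: update one row.
conn.execute("UPDATE users SET plan = ? WHERE name = ?", ("pro", "chen"))
conn.commit()

# Retrieve: query the rows back.
pro_users = [row[0] for row in conn.execute(
    "SELECT name FROM users WHERE plan = 'pro' ORDER BY name")]
print(pro_users)  # → ['ben', 'chen']
conn.close()
```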

Generating Tests

Many developers view writing tests as a waste of time. If you are developing a small application, you may not need tests. However, if you're building a large application, you should write tests and test cases. They make the whole project more robust and make debugging easier.
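A test can be as small as a few assertions about one function. This is a minimal sketch using Python's built-in `unittest` module. The `apply_discount` function is a hypothetical example of application logic.

```python
import unittest

def apply_discount(price: float, percent: float) -> float:
    """Return the price after a percentage discount, rounded to cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)

class ApplyDiscountTests(unittest.TestCase):
    def test_regular_discount(self):
        self.assertEqual(apply_discount(80.0, 25), 60.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(19.99, 0), 19.99)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(10.0, 150)
```

Saved as `test_discount.py`, this runs with `python -m unittest test_discount`. Once the tests exist, rerunning them after every change catches regressions before your users do.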

At first, writing tests may feel inefficient, but you'll soon realize that it saves you time. These other abilities are also needed:

A desire to keep learning new skills and improving

Keeping up to date with the latest developments in the industry

Time management

Understanding UX

Multitasking

Web Development tools that you need to be aware of

Building a website that gets lots of attention isn't only about learning programming languages. You also need to be familiar with specific web development tools that help with full-stack website development.

We will go over some critical web development tools you should learn about as a web developer.

Git

Git is probably the most widely used version control system, and many companies rely on it. There is a good chance you will need to work with it when you begin your career as a web developer.

GitHub

GitHub is a hosting service for Git repositories, where you can publish and store your code. It is mainly used for collaboration and lets developers work together on projects.

Code Editor

When you build a website, the most basic web development tool you'll use is your code editor or IDE (Integrated Development Environment). This tool lets you write the markup and code that make up the website.

There are numerous options available, but the best-known code editor is probably VS Code. VS Code is a lightweight editor from Microsoft, separate from Visual Studio, Microsoft's full IDE. It's fast, easy to use, and you can customize it with themes and extensions.

Other code editors include Atom, Sublime Text, and Vim. If you're just starting out, I suggest trying VS Code, which you can download from its official website.

Browser DevTools

Browser DevTools let you do many things, such as editing HTML tags, editing CSS properties, and troubleshooting JavaScript issues. Every web developer should know the various tabs in DevTools so they can work more simply and efficiently.

You can use the DevTools of whichever browser you work in, such as Chrome DevTools or Firefox DevTools. Most developers use Chrome DevTools to build, test, and debug web applications, but the choice depends on the developer and the browser they use to create the site.

5 Features of a Good Website

Potential clients will use your site as an introduction to your business, so it is essential to make a good impression. There are many features to consider when building your business website. Here are five of the most important.

1. Page Speed

Page speed is the time a web page takes to load completely. A typical page loads in three to five seconds, but you should aim for close to three seconds.

Several factors affect page-loading speed, including a site's server, the page's file size, and image compression. Google treats page loading speed as a search engine optimization (SEO) ranking signal, so a faster site can rank higher in Google's search results.

Before you begin optimizing your website for speed, set a target page loading time. Google's recommended loading speed is a good benchmark to start from, since Google has the biggest database of websites. But keep in mind that Google's benchmarks often describe the performance of an ideal webpage based on user behavior, not the actual loading times of most websites.

While three seconds may be the ideal page loading speed, most websites come nowhere close. After analyzing over 900,000 mobile ad landing pages across 126 countries, Google found that 70% of the pages took nearly seven seconds to display their visual content. Google also found that mobile landing pages take an average of 22 seconds to load.

Clearly, typical page loading times are longer than what Google considers ideal. This matters because Google's research shows that users abandon 53% of visits if a mobile page takes more than three seconds to load. Given visitors' growing impatience, your site needs to hold their attention from the moment they arrive. If you make the effort to bring your speed up to an acceptable level, you will likely gain a competitive advantage in user experience.
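To see where a page stands against the three-second goal, you can start by timing how long its HTML takes to download. This is a minimal standard-library sketch. It measures only the raw document download, not full rendering with images and scripts, so browser DevTools or Google's tools will report different, usually longer, times. The `time_fetch` name is hypothetical.

```python
import time
import urllib.request

BUDGET_SECONDS = 3.0  # the target discussed above

def time_fetch(url: str, timeout: float = 10.0) -> tuple[float, int]:
    """Download `url` and return (seconds elapsed, bytes received)."""
    start = time.perf_counter()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        body = response.read()
    return time.perf_counter() - start, len(body)

def within_budget(seconds: float, budget: float = BUDGET_SECONDS) -> bool:
    """True if a measured time meets the loading-time target."""
    return seconds <= budget
```

For example, `seconds, size = time_fetch("https://example.com/")` followed by `within_budget(seconds)`. If the bare HTML alone takes most of the budget, look at the server first before compressing images.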

2. SSL Certificate

An SSL (Secure Sockets Layer) certificate verifies the identity of a site's owner and enables an encrypted connection when someone connects to that site from their own device. SSL certification is an essential security measure for any business that collects data from its site visitors.

Different SSL certificates provide different levels of security, depending on the kind of validation and protection your site and its users need. If your site accepts online payments, for example, you will need greater security. But even simple business sites can fall victim to cyberattacks that damage marketing efforts, for instance when hackers use stolen data to send spam messages.

SSL-protected sites show the "https://" prefix in the browser's address bar. This signals to visitors that your site is legitimate and trustworthy the moment they arrive.

Critically, your site's SSL certificate should come from a trusted source. Get it from a Certificate Authority (CA), an organization trusted to verify the authenticity and identity of websites.
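Certificates also expire, so it helps to know when yours runs out. This is a minimal sketch with Python's standard `ssl` module. `ssl.create_default_context()` verifies the certificate chain against trusted CAs and checks the hostname, so a certificate from an untrusted source raises an error. `ssl.cert_time_to_seconds` converts the certificate's `notAfter` field. The function names are hypothetical.

```python
import socket
import ssl
import time
from typing import Optional

def fetch_not_after(host: str, port: int = 443, timeout: float = 10.0) -> str:
    """Connect over TLS, verify the certificate, and return its notAfter field."""
    context = ssl.create_default_context()  # checks CA trust and hostname
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]

def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days from `now` (default: current time) until a date like 'Jun  1 12:00:00 2030 GMT'."""
    expires = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires - current) / 86_400
```

Calling `days_until_expiry(fetch_not_after("example.com"))` on a schedule and alerting below, say, 14 days is one simple way to avoid a lapsed certificate.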

3. Favicon

Favicons are the small, square images displayed before the URL in a browser's address bar, on the left side of browser tabs, and next to a site's name in a user's bookmark list. Web browsers use them to give a visual representation of the websites users visit.

A favicon is usually a small logo or brand image scaled down to 16 by 16 pixels, but it can feature any image that represents a particular site. These small images help users remember a site's brand and find a particular site more easily when they have many browser tabs open or a long bookmark list.
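Browsers find a favicon through a `<link rel="icon">` tag in the page's `<head>`, and many fall back to requesting `/favicon.ico` from the site root when no tag is present. This is a minimal sketch with Python's built-in `html.parser` that reports which icon URL a page declares. The class and function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

class IconFinder(HTMLParser):
    """Collect href values from <link> tags whose rel includes 'icon'."""
    def __init__(self):
        super().__init__()
        self.icons = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if "icon" in rel and attrs.get("href"):
            self.icons.append(attrs["href"])

def favicon_url(page_url: str, html: str) -> str:
    """Return the first declared icon, resolved against the page URL, else /favicon.ico."""
    finder = IconFinder()
    finder.feed(html)
    href = finder.icons[0] if finder.icons else "/favicon.ico"
    return urljoin(page_url, href)

page = '<head><link rel="shortcut icon" href="/static/logo-16.png"></head>'
print(favicon_url("https://shop.example.com/products/", page))
# → https://shop.example.com/static/logo-16.png
print(favicon_url("https://shop.example.com/products/", "<head></head>"))
# → https://shop.example.com/favicon.ico
```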

4. Responsive Design

Responsive design lets sites and pages display properly on all devices by automatically adjusting to a device's screen size, from desktops and laptops to tablets and cell phones. This approach delivers a better browsing experience, which matters more and more because people now conduct almost 60% of all online searches on a mobile phone.

If you're unsure whether your site uses responsive design, test it with a tool like Google's Mobile-Friendly Test. Another way to see how your site displays on different devices is to resize your browser window and watch how the layout adapts. This shows you how your site's elements appear on screens of different sizes.
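Responsive images follow a simple rule: shrink to fit the available width while keeping the aspect ratio, never upscale, and never crop. In CSS this is typically written as `max-width: 100%; height: auto;`. Here is the arithmetic behind that rule as a small Python sketch. The function name and the sample viewport widths are hypothetical.

```python
def fit_to_width(width: int, height: int, max_width: int) -> tuple[int, int]:
    """Scale (width, height) down to at most max_width, preserving aspect ratio."""
    if width <= max_width:
        return width, height  # never upscale, like max-width: 100%
    scale = max_width / width
    return max_width, round(height * scale)

for viewport in (1440, 768, 375):
    print(viewport, fit_to_width(1200, 800, viewport))
# 1440 (1200, 800)
# 768 (768, 512)
# 375 (375, 250)
```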

5. On-Page SEO

On-page SEO, also called on-site SEO, is the practice of optimizing pages to improve a site's search engine rankings and earn more organic traffic.

Beyond publishing excellent content on your site, on-page SEO involves optimizing your headlines, images, and HTML tags (e.g., titles, subheadings, and meta descriptions). It also requires making sure your site shows a high level of expertise, authority, and trustworthiness.

On-page SEO is important because it not only helps search engines understand your site and its content, but also helps them determine whether it matches what a user is searching for. With some effort, on-page SEO techniques can boost your site's traffic and search engine rankings. (Websites.co.in offers Automated-SEO that saves you time.)

Keep the following in mind as you approach on-page SEO for your site.

Target Keywords

When writing content, make sure your topic matches the search intent of people using your target keywords. This matters because web pages that don't satisfy user intent don't rank well.

Always keep your end user in mind so you can give them the answers and information they are looking for. If your website doesn't deliver useful content, people will leave it quickly. A high bounce rate (the percentage of people who visit your website but leave without viewing any other pages) can hurt your SEO.
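Bounce rate is simple arithmetic: single-page sessions divided by all sessions. This is a minimal sketch. It assumes you have the number of pages viewed in each session, and the figures below are invented. Analytics tools may define a "bounce" differently, so treat this as the textbook definition only.

```python
def bounce_rate(pages_per_session: list[int]) -> float:
    """Percentage of sessions that viewed exactly one page."""
    if not pages_per_session:
        return 0.0
    bounces = sum(1 for pages in pages_per_session if pages == 1)
    return 100.0 * bounces / len(pages_per_session)

sessions = [1, 3, 1, 2, 1, 5, 1, 1]
print(f"{bounce_rate(sessions):.1f}%")  # → 62.5%
```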

Before you create new content, check the Google search results for your chosen keywords and then match your content to the same user intent. Also include your target keywords in your page's URL, title, and heading, since research shows that articles that do this rank higher than those that don't.

Interesting Content

You should also make sure your website offers interesting and engaging content. Why? Because search engines like Google rely on algorithms to work out what a page is really about and then rank it accordingly.

Content that gives a better user experience will help your site rank higher in Google's search results, but remember that you are writing content for people, not for search engines.

Pictures

Interesting, eye-catching images can make your site more engaging and encourage visitors to spend more time on it. Optimizing your images will help you make the most of this important SEO asset, as long as you pair each one with a descriptive title and alt text.
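A quick way to find images that still need alt text is to scan a page's HTML. This is a minimal sketch with Python's built-in `html.parser`, and the class name is hypothetical. An empty `alt=""` is correct for purely decorative images, so review the flagged list rather than filling in every entry blindly.

```python
from html.parser import HTMLParser

class AltTextAudit(HTMLParser):
    """Record the src of every <img> whose alt attribute is missing or empty."""
    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attrs = dict(attrs)
            if not (attrs.get("alt") or "").strip():
                self.missing.append(attrs.get("src", "(no src)"))

audit = AltTextAudit()
audit.feed('<img src="hero.jpg" alt="Red running shoes on a track">'
           '<img src="logo.png"><img src="banner.jpg" alt="">')
print(audit.missing)  # → ['logo.png', 'banner.jpg']
```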

Headings

Using headings in your website's content organizes it into a clear hierarchy and lets search engines and visitors quickly see what's important.

Google favors well-organized page structures, and headings are a great way to achieve this. Use the "H1" header tag for a page's main title, then "H2," "H3," and the other header tags for subtitles. Every "H1" header tag should contain your target keyword to help search engines understand a page's content and improve its SEO ranking. As a best practice, aim to match a page's "H1" header tag with its meta title.
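You can check a page against these heading rules automatically. This is a minimal sketch with Python's built-in `html.parser`, and the names are hypothetical. It flags pages that do not have exactly one H1, an H1 that lacks the target keyword, and headings that skip a level (an H4 directly under an H2). The one-H1 and no-skipped-levels rules are common conventions assumed here, not requirements published by Google.

```python
from html.parser import HTMLParser

class HeadingOutline(HTMLParser):
    """Collect [level, text] for every h1-h6 heading on a page."""
    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = None

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self.headings.append([self._level, ""])

    def handle_endtag(self, tag):
        if self._level is not None and tag == f"h{self._level}":
            self._level = None

    def handle_data(self, data):
        if self._level is not None:  # text inside the open heading
            self.headings[-1][1] += data

def heading_problems(html: str, keyword: str) -> list[str]:
    outline = HeadingOutline()
    outline.feed(html)
    h1s = [text for level, text in outline.headings if level == 1]
    problems = []
    if len(h1s) != 1:
        problems.append(f"expected one h1, found {len(h1s)}")
    elif keyword.lower() not in h1s[0].lower():
        problems.append("h1 does not contain the target keyword")
    previous = 0
    for level, text in outline.headings:
        if level > previous + 1:
            problems.append(f"h{level} '{text.strip()}' skips a level")
        previous = level
    return problems

page = "<h1>Running Shoes Guide</h1><h2>Fit</h2><h4>Width</h4><h2>Price</h2>"
print(heading_problems(page, "running shoes"))  # → ["h4 'Width' skips a level"]
```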

Another fundamental point is creating an SEO-optimized title. The title affects several search engine ranking factors, such as user intent, click-through rate, and keyword match.

When creating an SEO-optimized title, keep the following points in mind:

Make sure it matches the intent of your target keywords.

Make it compelling to increase the click-through rate.

Keep it to a maximum of 60 characters.
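The two mechanical checks in that list, length and keyword, are easy to automate. This is a minimal sketch, and `title_issues` is a hypothetical helper. The 60-character limit is the rule of thumb given above. Search results actually truncate by display width, so a title near the limit can still be cut off.

```python
def title_issues(title: str, keyword: str, max_chars: int = 60) -> list[str]:
    """Check a page title for length and for the target keyword."""
    issues = []
    if len(title) > max_chars:
        issues.append(f"{len(title)} characters; aim for {max_chars} or fewer")
    if keyword.lower() not in title.lower():
        issues.append(f"missing target keyword '{keyword}'")
    return issues

print(title_issues("Running Shoes for Flat Feet: 7 Picks Tested on Trails", "running shoes"))
print(title_issues("Our Ultimate, Complete, and Totally Exhaustive Guide to Every Shoe Ever Made",
                   "running shoes"))
```

Whether a title is compelling enough to earn clicks still takes human judgment.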

Although the meta description is not directly tied to your website's on-page SEO, it contributes to other search engine ranking factors such as click-through rate. That means a well-written meta description can make a considerable difference, and the same best practices for writing titles also apply to meta descriptions. Building a great website also means considering its design and how it will affect your visitors.

#website#website builder#website maker#website maker app#website app#instant website#Website Creator#google sites#wix competitor#wordpress competitor#online website builder#business website

Version 475

youtube · windows: zip, exe · macOS: app · linux: tar.gz

I had a good couple of weeks. There's a long changelog of small items and some new help.

new help

A user has converted all my old handcoded help html to template markup and now the help is automatically built with MkDocs. It now looks nicer for more situations, has automatically generated tables of contents, a darkmode, and even in-built search.

It has been live for a week here:

https://hydrusnetwork.github.io/hydrus/

And with v475 it is rolled into the builds too, so you'll have it on your hard disk. Users who run from source will need to build it themselves if they want the local copy, but it is real easy, just one line you can fold into an update script:

https://hydrusnetwork.github.io/hydrus/about_docs.html

I am happy with how this turned out and am very thankful to the user who put the work in to make the migration. It all converted to this new format without any big problems.

misc highlights

I queued up some more files for metadata rescans, hopefully fixing some more apngs and figuring out some audio-only mp4s.

System:hash now supports 'is not', so if you want to paste a ton of hashes you can now say 'but not any of these specific files'.

Searches with lots of -negated tags should be a good bit faster now.
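The speed-up for negated tags, described in the full list below, comes from doing shared work once for the whole group of exclusions rather than once per tag. Here is a rough illustration of that general idea with Python sets. It is not hydrus's actual code, and the `search` function and tag-to-file mapping are invented.

```python
def search(candidates: set[int], tag_index: dict[str, set[int]], negated: list[str]) -> set[int]:
    """Return candidate file ids that have none of the negated tags."""
    # Build one combined exclusion set, then subtract it from the candidate pool once,
    # rather than filtering the (possibly huge) pool separately for every negated tag.
    excluded = set().union(*(tag_index.get(tag, set()) for tag in negated))
    return candidates - excluded

inbox = set(range(1, 11))
tags = {"character:x": {2, 3}, "character:y": {3, 7}, "character:z": {10}}
print(sorted(search(inbox, tags, ["character:x", "character:y", "character:z"])))
# → [1, 4, 5, 6, 8, 9]
```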

I fixed a bug that was stopping duplicate pages from saving changes to their search.

pycharm

I moved to a new IDE (the software that you use to program with) this week, moving from a jank old WingIDE environment to new PyCharm. It took a bit of time to get familiar with it, so the first week was mostly me doing simple code cleanup to learn the shortcuts and so on, but I am overall very happy with it. It is very powerful and customisable, and it can handle a variety of new tech better.

It might be another few weeks before I am 100% productive with it, but I am now more ready to move to python 3.9 and Qt 6 later in the year.

full list

new help docs:

the hydrus help is now built from markup using MkDocs! it now looks nicer and has search and automatically generated tables of contents and so on. please check it out. a user converted _all_ my old handwritten html to markup and figured out a migration process. thank you very much to this user.

the help has pretty much the same structure, but online it has moved up a directory from https://hydrusnetwork.github.io/hydrus/help to https://hydrusnetwork.github.io/hydrus. all the old links should redirect in any case, so it isn't a big deal, but I have updated the various places in the program and my social media that have direct links. let me know if you have any trouble

if you run from source and want a local copy of the help, you can build your own as here: https://hydrusnetwork.github.io/hydrus/about_docs.html . it is super simple, it just takes one extra step. Or just download and extract one of the archive builds

if you run from source, hit _help->open help_, and don't have help built, the client now gives you a dialog to open the online help or see the guide to build your help

the help got another round of updates in the second week, some fixed URLs and things and the start of the integration of the 'simple help' written by a user

I added a screenshot and a bit more text to the 'backing up' help to show how to set up FreeFileSync for a good simple backup

I added a list of some quick links back into the main index page of the help

I wrote an unlinked 'after_distaster' page for the help that collects my 'ok we finished recovering your broken database, now use your pain to maintain a backup in future' spiel, which I will point people to in future

.

misc:

fixed a bug where changes to the search space in a duplicate filter page were not sticking after the first time they were changed. this was related to a recent 'does page have changes?' optimisation--it was giving a false negative for this page type (issue #1079)

fixed a bug when searching for both 'media' and 'preview' view count/viewtime simultaneously (issue #1089, issue #1090)

added support for audio-only mp4 files. these would previously generally fail, sometimes be read as m4a. all m4as are scheduled for a metadata regen scan

improved some mpeg-4 container parsing to better differentiate these types

now we have great apng detection, all pngs with apparent 'bitrate' over 0.85 bits/pixel will be scheduled for an 'is this actually an apng?' scan. this 0.85 isn't a perfect number and won't find extremely well-compressed pixel apngs, but it covers a good amount without causing a metadata regen for every png we own

system:hash now supports 'is' and 'is not', if you want to, say, exclude a list of hashes from a search

fixed some 'is not' parsing in the system predicate parser

when you drag and drop a thumbnail to export it from the program, the preview media viewer now pauses that file (just as the full media viewer does) rather than clears it

when you change the page away while previewing media with duration, the client now remembers if you were paused or playing and restores that state when you return to that page

folded in a new and improved Deviant Art page parser written by a user. it should be better about getting the highest quality image in unusual situations

running a search with a large file pool and multiple negated tags, negated namespaces, and/or negated wildcards should be significantly faster. an optimisation that was previously repeated for each negated tag search is now performed for all of them as a group with a little inter-job overhead added. should make '(big) system:inbox -character x, -character y, -character z' like lightning compared to before

added an 'unless namespace is a number' setting to the 'tag presentation' options, which will show the full tag for tags like '16:9' when you have 'show namespaces' unticked

altered a path normalisation check when you add a file or thumbnail location in 'migrate database'--if it fails to normalise symlinks, it now just gives a warning and lets you continue. fingers crossed, this permits rclone mounts for file storage (issue #1084)

when a 'check for missing/invalid file' maintenance job runs, it now writes all the hashes of missing or invalid files to a nice simple newline-separated .txt list in the error directory. this is an easy-to-work-with hash record, useful for later recovery

fixed numerous instances where logs and texts I was writing could create too many newline characters on Windows. it was confusing some reader software and showing as double-spaced taglists and similar for exported sidecar files and profile logs
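The changelog doesn't say what the cause was, so here is one common way doubled newlines happen in Python on Windows, offered as an illustration rather than the actual fix: text mode already translates each '\n' into '\r\n', so writing '\r\n' (or `os.linesep`) yourself produces '\r\r\n', which many readers show as an extra blank line. The sketch passes `newline="\r\n"` to `open()` to reproduce the Windows translation on any OS; `write_lines` and `read_raw` are hypothetical helper names.

```python
import os
import tempfile


def write_lines(path, lines, line_end):
    # newline="\r\n" makes text mode turn every "\n" into "\r\n",
    # which is what text mode does by default on Windows
    with open(path, "w", encoding="utf-8", newline="\r\n") as f:
        f.write(line_end.join(lines) + line_end)


def read_raw(path):
    with open(path, "rb") as f:
        return f.read()


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "tags.txt")

    # buggy: text mode turns the "\n" of "\r\n" into "\r\n", giving "\r\r\n"
    write_lines(path, ["blue eyes", "smile"], "\r\n")
    buggy = read_raw(path)

    # fixed: write plain "\n" and let text mode produce the platform line ending
    write_lines(path, ["blue eyes", "smile"], "\n")
    fixed = read_raw(path)

print(buggy)  # b'blue eyes\r\r\nsmile\r\r\n'
print(fixed)  # b'blue eyes\r\nsmile\r\n'
```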

I think I fixed a bug where, when crawling for file paths on Windows, some network file paths were incorrectly detected as directories and caused parse errors

fixed a broken command in the release build so the windows installer executable should correctly get 'v475' as its version metadata (previously this was blank), which should help some software managers that use this info to decide to do updates (issue #1071)

.

some cleanup:

replaced the last instances of the EVT_CLOSE wx wrapper with proper Qt code

did a heap of very minor code cleanup jobs all across the program, mostly just to get into pycharm

clarified the help text in _options->external programs_ regarding the %path% variable

.

pycharm:

as a side note, I finally moved from my jank old WingIDE IDE to PyCharm in this release. I am overall happy with it--it is clearly very powerful and customisable--but adjusting after about ten or twelve years of Wing was a bit awkward. I am very much a person of habit, and it will take me a little while to get fully used to the new shortcuts and UI and so on, but PyCharm does everything that is critical for me, supports many modern coding concepts, and will work well as we move to python 3.9 and beyond

next week

The past few months have been messy in scheduling as I have dealt with some IRL things. That's thankfully mostly done now, so I am now returning to my old schedule of cleanup/small/medium/small week rotation.

Next week will be a 'medium size' job week. I'm going to lay the groundwork for 'post time' parsing in the downloader and folding that cleverly into 'modified date' for searching and sorting purposes. I am not sure I can 'finish' it, but we'll see.

Most Important HTML Tags For Search Engine Optimization

HTML tags are small snippets of code that tell search engines how to read your content. You can noticeably improve your search engine visibility by adding SEO tags to your HTML. When a search engine's crawler comes across your content, it looks at the page's HTML tags, and this information helps engines like Google determine what your content is about and how to categorize it.

Some tags also improve how your content appears to visitors in search results, and social media platforms use tags of their own to display your articles. In the end, SEO-related HTML tags affect how your website performs online; without them you are far less likely to connect with an audience.

1) Title Tags

The title tag is your main and most important anchor. The <title> element typically appears as the clickable headline in the SERPs, and it also shows up on social networks and in browser tabs. Title tags are placed in the <head> of your web page and are meant to give a clear and comprehensive idea of what the page is about. The page's title is still the first thing a searcher sees in the SERPs when deciding whether the page is likely to answer their search intent.

A well-written title may increase clicks and traffic, which have at least some impact on rankings.

Best tips

Give each page a unique title that describes the page's content accurately.

Keep titles to about 50-60 characters. Longer titles are truncated to roughly 600-700px in the SERPs.

Put important keywords first, but in a natural manner, as if you were writing the title for your visitors in the first place.

Include your brand name in the title; even if it ends up not being shown in the SERPs, it can still make a difference to the search engine.

Use your title to attract attention, including in the browser tab where it is displayed.
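The title tips can be sketched as a small Python helper. `render_title`, its `" | "` separator, and the brand-last layout are illustrative assumptions, not a standard; character counts are only a proxy, since SERPs truncate by pixel width as noted above.

```python
from html import escape

MAX_TITLE_CHARS = 60  # upper end of the 50-60 character guideline above


def render_title(page_topic, brand, sep=" | "):
    """Return (title_tag, warnings); keywords go first and the brand last."""
    text = f"{page_topic}{sep}{brand}"
    warnings = []
    if len(text) > MAX_TITLE_CHARS:
        warnings.append(f"title is {len(text)} chars and may be truncated in the SERPs")
    # escape() turns &, <, > and quotes into entities so the text stays plain text
    return f"<title>{escape(text)}</title>", warnings


tag, warnings = render_title("Handmade Oak Desks & Chairs", "Acme Furniture")
print(tag)       # <title>Handmade Oak Desks &amp; Chairs | Acme Furniture</title>
print(warnings)  # []
```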

2) Meta Description Tags

The meta description also resides in the <head> of a web page and is commonly displayed in a SERP snippet along with the title and page URL.

The description occupies the largest part of a SERP snippet and invites searchers to click on your site by promising a clear and comprehensive solution to their query. It affects the number of clicks you get, and may also improve CTR and decrease bounce rates if the page's content indeed fulfils its promises. That's why the description must be realistic. If your description contains the keywords a searcher used in their query, those words will appear in bold in the SERP. This goes a long way toward helping you stand out and telling the searcher exactly what they will find on your page.

A good way to figure out what to write in your meta description, and what works best for your particular topic right now, is to do some competitor research: look at how your competitors write their own descriptions to get ideas.

Best tips

Give each page a unique meta description that clearly reflects what value the page carries.

Google's snippets typically max out at around 150-160 characters (including spaces).

Include your most significant keywords so they can be highlighted in the actual SERP, but be careful to avoid keyword stuffing.

Use an eye-catching call to action.
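A minimal Python sketch of the length and escaping side of these tips. `render_meta_description` is a hypothetical helper, and it only warns about long text rather than truncating it, since cutting mid-sentence is usually worse than rewriting.

```python
from html import escape

MAX_DESCRIPTION_CHARS = 160  # upper end of the typical snippet length above


def render_meta_description(text):
    """Return (meta_tag, warnings) for a page description."""
    text = " ".join(text.split())  # collapse newlines and runs of spaces
    warnings = []
    if len(text) > MAX_DESCRIPTION_CHARS:
        warnings.append(f"{len(text)} chars; the snippet may be cut short")
    # escape() also encodes '"', so the text cannot break out of content="..."
    return f'<meta name="description" content="{escape(text)}">', warnings


tag, warnings = render_meta_description('Solid oak desks, "built to last".\n  Free delivery.')
print(tag)
# <meta name="description" content="Solid oak desks, &quot;built to last&quot;. Free delivery.">
```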

3) Heading Tags (H1 to H6)

Heading tags are HTML tags used to distinguish headings and subheadings within your content from other types of text (for example, paragraph text). While H2 to H6 tags are not considered as important to search engines, proper use of the H1 tag has been emphasized in many industries. Headings are crucial for text and content optimization.

Best tips

Keep your headings relevant to the text they describe.

Always have your headings reflect the sentiment of the text they are placed over.

Don't overuse the tags and the keywords. Keep it readable.
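A minimal Python sketch for auditing headings with the standard library's `html.parser`. It collects the page's heading outline so you can check that it reads sensibly, and flags pages that do not have exactly one H1; one H1 per page is a common convention assumed here, not a rule stated above.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collect (level, text) pairs for every <h1>..<h6> in a page."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self.outline = []   # list of (level, heading text)
        self._level = None  # level of the heading we are currently inside
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS:
            self._level, self._text = int(tag[1]), []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in self.HEADINGS and self._level is not None:
            self.outline.append((self._level, "".join(self._text).strip()))
            self._level = None


page = "<h1>Oak Desks</h1><p>Intro</p><h2>Sizes</h2><h2>Caring for <em>oak</em></h2>"
parser = HeadingOutline()
parser.feed(page)
parser.close()
print(parser.outline)  # [(1, 'Oak Desks'), (2, 'Sizes'), (2, 'Caring for oak')]

h1_count = sum(1 for level, _ in parser.outline if level == 1)
if h1_count != 1:
    print(f"expected one H1, found {h1_count}")
```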

4) Image ALT Attributes

The image ALT attribute is added to an image tag to describe its contents. ALT attributes are important for on-page optimization because alt text is displayed to visitors if an image cannot be loaded, and because it gives context to search engines, which can't see images the way people do. For e-commerce sites, images often have a crucial impact on how a visitor interacts with a page. Helping search engines understand what the images are about and how they relate to the rest of the content may help them serve the page for suitable search queries.

Best tips

Do your best to optimize the most prominent images (product images, infographics, or training images) that are likely to be looked up in Google Images search.

Make sure to add ALT text on pages where there is little content apart from the images.

Keep the alt text clear and sufficiently descriptive, use your keywords reasonably, and make sure they fit the page's content.
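To find images that still need ALT text, a short Python sketch using the standard `html.parser` module can list every `<img>` without an `alt` attribute. Treating `alt=""` as intentional (a decorative image) is an assumption that follows common accessibility practice.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Record the src of every <img> that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # alt="" is deliberately allowed: it is the usual marker for decorative images
        if "alt" not in attributes:
            self.missing.append(attributes.get("src", "(no src)"))


finder = MissingAltFinder()
finder.feed(
    '<img src="desk.jpg" alt="Oak desk with two drawers">'
    '<img src="chair.jpg">'
    '<img src="divider.png" alt="">'
)
finder.close()
print(finder.missing)  # ['chair.jpg']
```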

5) Nofollow Attributes

External (outbound) links are the links on your site that point to other sites. They are used to cite proven sources, point people toward other useful resources, or mention a relevant site for some other reason.

These links matter a lot for SEO. They can make your content look well-sourced, or make it look thin. Google may treat the sources you refer to as context to better understand the content on your page. By default, all hyperlinks are followed, and when you place a link on your site you basically cast a vote of confidence for the linked page.

When you add a nofollow attribute to a link, it instructs search engine bots not to follow the link. To keep your SEO neat, you must ensure a healthy balance between followed and nofollowed links on your pages.

Best tips

Nofollow links to any resources that could in any way be considered untrusted content.

Nofollow any paid or sponsored links.

Nofollow links in comments or other kinds of user-generated content, which can be spammed beyond your control.

Nofollow internal sign-in and registration links, since following them is just a waste of crawl budget.
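The nofollow tips can be expressed as a small rendering rule. In this Python sketch, `SITE_HOST`, `TRUSTED_HOSTS`, the `/login` and `/register` paths, and the `paid`/`user_content` flags are hypothetical stand-ins for however your site tracks these cases.

```python
from html import escape
from urllib.parse import urlparse

SITE_HOST = "example.com"                  # hypothetical: your own domain
TRUSTED_HOSTS = {"developer.mozilla.org"}  # hypothetical: sources you vouch for
AUTH_PATHS = {"/login", "/register"}       # hypothetical sign-in/registration pages


def render_link(href, text, paid=False, user_content=False):
    """Render an <a> tag, adding rel="nofollow" in the cases listed above."""
    parsed = urlparse(href)
    host = parsed.hostname or SITE_HOST  # relative links have no host: internal
    internal = host == SITE_HOST or host.endswith("." + SITE_HOST)
    untrusted = not internal and host not in TRUSTED_HOSTS
    auth_page = internal and parsed.path.rstrip("/") in AUTH_PATHS
    nofollow = paid or user_content or untrusted or auth_page
    rel = ' rel="nofollow"' if nofollow else ""
    return f'<a href="{escape(href)}"{rel}>{escape(text)}</a>'


print(render_link("/blog/oak-care", "Caring for oak"))
# <a href="/blog/oak-care">Caring for oak</a>
print(render_link("https://cheap-desks.example.net/deal", "a deal"))
# <a href="https://cheap-desks.example.net/deal" rel="nofollow">a deal</a>
```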

6) Robots Meta Tag

The robots meta tag is a useful element if you want to prevent certain pages from being indexed. It tells crawlers from search engines like Google not to index the content. In some cases you may want certain pages to stay out of the SERPs because they feature some kind of special deal that is supposed to be accessible by direct link only. And if you have a site-wide search option, Google recommends closing off its custom results pages, which can be crawled indefinitely and waste bots' resources on non-unique content.

Best tips

Close unnecessary or unfinished pages with thin content that have little value and are not meant to appear in the SERPs.

Close pages that unreasonably waste crawl budget.

Check carefully that you don't mistakenly restrict important pages from indexing.
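A Python sketch of choosing the robots directive per page. The `/search`, `/private-deal/` and `/drafts/` paths are hypothetical examples of the page types described above. One caveat: a crawler can only obey `noindex` on a page it is allowed to fetch, so a page blocked in robots.txt never gets to show it this tag.

```python
from urllib.parse import urlparse

# hypothetical paths for pages that should stay out of the SERPs
SEARCH_PATH = "/search"
NOINDEX_PREFIXES = ("/private-deal/", "/drafts/")


def robots_meta(index=True, follow=True):
    """Build a robots meta tag; with no tag, crawlers assume index, follow."""
    content = ", ".join(["index" if index else "noindex", "follow" if follow else "nofollow"])
    return f'<meta name="robots" content="{content}">'


def robots_meta_for(url):
    path = urlparse(url).path
    if path == SEARCH_PATH or path.startswith(NOINDEX_PREFIXES):
        # noindex keeps the page out of results; follow still lets bots use its links
        return robots_meta(index=False, follow=True)
    return robots_meta()


print(robots_meta_for("https://example.com/search?q=oak+desk"))
# <meta name="robots" content="noindex, follow">
```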

7) rel="canonical" Link Tag

The rel="canonical" link tag is a way of telling search engines which version of a page you consider the main one, the one you would like to be indexed by search engines and found by people. It's commonly used when the same page is available under multiple URLs, or when multiple pages have very similar content covering the same subject. Internal duplicate content is not treated as strictly as copied content, since there is usually no manipulative intent behind it. Another benefit is that canonicalizing a page makes it easier to track performance stats associated with the content.

Best tips

Canonicalize pages with similar content on the same subject.

Canonicalize duplicate pages available under multiple URLs.

Canonicalize versions of the same page with session IDs or other URL parameters that do not affect the content.

Use the canonical tag for near-duplicate pages carefully: if the two pages connected by a canonical tag differ too much in content, the search engine may simply disregard the tag.
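For the session-ID case, one way to derive the canonical URL is to strip query parameters that don't change the content. This Python sketch assumes a hypothetical list of such parameters (`sessionid`, `sid`, `ref`, and anything starting with `utm_`); a real site needs its own list, and parameters that do change the content (like `color` here) must be kept.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# hypothetical query parameters that never change what the page shows
IGNORED_PARAMS = {"sessionid", "sid", "ref"}
IGNORED_PREFIXES = ("utm_",)


def canonical_url(url):
    """Drop content-neutral query parameters and the #fragment, lowercase the host."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
        and not key.lower().startswith(IGNORED_PREFIXES)
    ]
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def canonical_link(url):
    # escape() turns '&' between kept parameters into '&amp;' inside the attribute
    return f'<link rel="canonical" href="{escape(canonical_url(url))}">'


print(canonical_link("https://Example.com/desks?color=oak&sessionid=abc123&utm_source=news#reviews"))
# <link rel="canonical" href="https://example.com/desks?color=oak">
```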

8) Schema Markup

Schema markup is a specific technique for organizing the data on each of your web pages in a way that search engines recognize. Structured schema markup can give your UX a real boost and carries significant SEO value. Structured data markup is exactly what helps search engines not only read the content but also understand what certain words relate to.

A rich snippet with a nice image, a 5-star rating, a specified price range, stock status, operating hours, or whatever else is useful is very likely to catch the eye and attract more clicks than a plain-text result.

Assigning schema tags to certain page elements makes your S-E-R-P snippet rich in information that is helpful and appealing for users.

Best tips

Study the available schemas on schema.org.

Create a map of your most important pages and decide on the concepts relevant to each.

Implement the markup carefully.

Thoroughly test the markup to make sure it isn't misleading or added improperly.
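Schema markup is often added as JSON-LD inside a `<script type="application/ld+json">` tag. Here is a Python sketch using the schema.org `Product`, `Offer` and `AggregateRating` types to carry the price, stock status and rating mentioned above; the product values are made up, and output like this should still go through a structured data testing tool, as the last tip says.

```python
import json


def product_jsonld(name, price, currency, rating, review_count, in_stock):
    """Wrap schema.org Product data in the <script> tag that carries JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
            "availability": "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock",
        },
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }
    # escape "<" so a value containing "</script>" cannot close the tag early
    payload = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# made-up example values
print(product_jsonld("Oak Desk", "249.00", "USD", 4.6, 87, in_stock=True))
```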

9) Social Media Meta Tags

Open Graph was originally introduced by Facebook to let you control how a page looks when shared on social media. It's now recognized by LinkedIn as well. Twitter Cards offer similar enhancements but are exclusive to Twitter. The main Open Graph tags are:

og:title: the title you want displayed when your page is linked to.

og:url: your page's URL.

og:description: your page's description. Remember that Facebook will display only about 300 characters of it.

og:image: the URL of an image you want shown when your page is linked to.

Use these social media meta tags to improve how your links look to your followers.

Best tips

Add basic, relevant metadata using the Open Graph protocol, and test the URLs to see how they will be displayed.

Set up Twitter Cards and validate them once done.
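A Python sketch of the main Open Graph tags plus a Twitter Card, built from one set of page data. Open Graph tags use the `property` attribute while Twitter Cards use `name`; the `summary_large_image` card type and the 300-character trim (taken from the Facebook note above) are choices made for this example.

```python
from html import escape


def social_meta(title, url, description, image):
    """Render the four main Open Graph tags plus a Twitter Card type."""
    if len(description) > 300:
        # the note above says Facebook shows only about 300 characters
        description = description[:297].rstrip() + "..."
    og_tags = {
        "og:title": title,
        "og:url": url,
        "og:description": description,
        "og:image": image,
    }
    # Open Graph tags use the property attribute
    lines = [f'<meta property="{key}" content="{escape(value)}">' for key, value in og_tags.items()]
    # Twitter Cards use the name attribute instead
    lines.append('<meta name="twitter:card" content="summary_large_image">')
    return "\n".join(lines)


print(social_meta(
    "Oak Desk",
    "https://example.com/desks/oak",
    "A solid oak desk with two drawers.",
    "https://example.com/img/oak-desk.jpg",
))
```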

10) Viewport Meta Tag

The viewport meta tag lets you configure how a page is scaled and displayed on any device. It has nothing to do with rankings directly, but it has a lot to do with user experience. This is especially important given the variety of devices in use nowadays and the noticeable shift to mobile browsing.
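The widely used mobile-friendly form of the tag is `<meta name="viewport" content="width=device-width, initial-scale=1">`. This Python sketch, built on the standard `html.parser` module, reports the viewport a page declares, or `None` when the tag is missing; `ViewportCheck` and `viewport_of` are hypothetical names.

```python
from html.parser import HTMLParser

RECOMMENDED_VIEWPORT = '<meta name="viewport" content="width=device-width, initial-scale=1">'


class ViewportCheck(HTMLParser):
    """Remember the content of <meta name="viewport">, if the page has one."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.content = attributes.get("content")


def viewport_of(html_text):
    checker = ViewportCheck()
    checker.feed(html_text)
    checker.close()
    return checker.content


print(viewport_of("<head>" + RECOMMENDED_VIEWPORT + "</head>"))
# width=device-width, initial-scale=1
print(viewport_of("<head><title>Oak Desks</title></head>"))  # None
```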