#how to implement circular queue using array


DSA Channel: The Ultimate Destination for Learning Data Structures and Algorithms from Basics to Advanced

Mastering data structures and algorithms (DSA) is vital for software development and competitive programming in today's fast-moving digital world. The DSA Channel is a learning destination for people at every skill level, from beginners to advanced developers.

Why is DSA Important?

Data structures and algorithms are the core of software development. Choosing the right ones makes code more efficient, improves performance, and makes complex problems tractable. A working knowledge of DSA also helps with job interviews and coding competitions, and it sharpens logical thinking. With proper guidance, the basic concepts of DSA are both rewarding and enjoyable to study.

What Makes DSA Channel Unique?

The DSA Channel exists to simplify data structures and algorithms and make them accessible to everyone. Here’s why it stands out:

Step-by-step learning: the channel begins with arrays and linked lists and progresses to dynamic programming and graph theory.

Practical coding examples: each theoretical concept is backed by code, which makes it easier to understand and apply in real-life situations.

Interview preparation: major companies like Google, Microsoft, and Amazon test DSA knowledge as part of their recruiting process. Through the DSA Channel, candidates can prepare with mock interviews and get advice on solving technical interview problems.

Regular updates: the DSA Channel keeps pace with ongoing changes in the technology industry, and its content is updated to reflect current trends in algorithms.

Key Topics Covered by the DSA Channel

The DSA Channel aims to give you a clear understanding of everything from the basics of data structures to the most sophisticated methods and use cases. Highlights:

1. Introduction to Basic Data Structures

You always need to start with the fundamentals. Some of the DSA Channel topics are:

Arrays: storing and manipulating elements in memory.

Linked Lists: singly linked lists, doubly linked lists, and circular linked lists.

Stacks and Queues: implementing these linear data structures.

Hash Tables: understanding hashing and its impact on data retrieval.

2. Advanced Data Structures

If you want to go deeper, the DSA Channel has in-depth lessons:

Graphs: types of graphs and graph traversals (BFS, DFS).

Heaps: min-heaps and max-heaps.

Tries: how to store and retrieve strings quickly.

3. Algorithms

Algorithms are the key to efficient problem-solving. The DSA Channel discusses in depth:

Searching Algorithms: binary search, linear search, etc.

Dynamic Programming: optimizing a problem by solving its subproblems.

Recursion and Backtracking: how to solve a problem by recursion.

Graph Algorithms: Dijkstra, Bellman-Ford, Floyd-Warshall, etc.

4. Applications of DSA in Real Life

One of the unique things about the DSA Channel is its focus on real-world applications.

Instead of just teaching theory, the channel gives a hands-on look at how DSA is used in real-world applications:

Database Management Systems: indexing, query optimization, and storage techniques.

Operating Systems: scheduling algorithms, memory management, and file systems.

Machine Learning and AI: how algorithms are used to train models and optimize computations.

Finance and Banking: data structures that help with risk assessment, fraud detection, transaction processing, etc.

This hands-on approach ensures that learners not only know the concepts but also know how to use them in real-life examples.

How Does Arena Fincorp Benefit from DSA?

Arena Fincorp, a leading financial services provider, understands the importance of efficiency and optimization in the fintech sector. Its financial solutions rely on the same principles of data structures and algorithms that make code efficient. Arena Fincorp uses sophisticated algorithms to support reliable financial transactions and data protection. The foundational principles of DSA enable developers to build robust fintech solutions for today's financial challenges.

How to Get Started with the DSA Channel?

New users of the DSA Channel can follow these steps to get the most out of it:

Start with the fundamentals: watch the introductory videos on arrays, linked lists, and stacks to build a basic knowledge base.

Practice regularly: DSA takes consistent exercise and time to sink in. Set aside time each day to solve problems.

Use coding platforms: LeetCode, CodeChef, and HackerRank offer a wide range of DSA problems for daily practice.

Join community discussions: help other learners by sharing solutions, and work through problems with fellow participants.

Do mock interviews: mock interviews through the DSA Channel build confidence and give you experience with real interview situations.

Learning is more effective when people study together. Through the DSA Channel, students find an active community where they can resolve doubts, collaborate on projects, and exchange insights.

Conclusion

Mastery of data structures and algorithms has become essential in the tech sector. The DSA Channel is a learning gateway that suits students, professionals, and competitive programmers alike. Through its well-organized teaching approach, practical exercises, and active learner community, the DSA Channel builds a deep understanding of DSA and effective problem-solving skills.

Data structures, optimized algorithms, and efficient coding practices help companies such as Arena Fincorp succeed in their industries. New learners can begin their journey with the DSA Channel today and build expertise in data structures and algorithms.


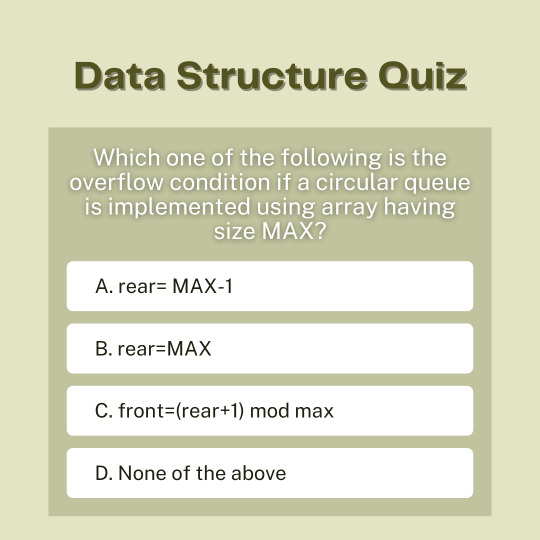

Test Your Knowledge: Quiz Challenge!!! 📝🧠

Which one of the following is the overflow condition if a circular queue is implemented using an array of size MAX?🤔

For the explanation of the right answer, you can check Q.No. 29 of the above link. 📖
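For readers who want to experiment with the question, here is a minimal sketch of an array-backed circular queue in Python. It assumes one common convention, in which `front` and `rear` both start at -1 and every slot of the array may be used; other textbooks reserve one empty slot or keep a separate element count, and the overflow test differs between those conventions. The class and method names are illustrative, not taken from the linked quiz.

```python
class CircularQueue:
    """Fixed-capacity FIFO queue stored in a list used as a ring buffer."""

    def __init__(self, max_size):
        self.max_size = max_size
        self.items = [None] * max_size
        self.front = -1  # index of the oldest element, -1 when empty
        self.rear = -1   # index of the newest element, -1 when empty

    def is_empty(self):
        return self.front == -1

    def is_full(self):
        # Overflow test under this convention: the slot after rear is front.
        return (self.rear + 1) % self.max_size == self.front

    def enqueue(self, value):
        if self.is_full():
            raise OverflowError("queue is full")
        if self.is_empty():
            self.front = 0
        # Advance rear, wrapping around to index 0 past the end of the array.
        self.rear = (self.rear + 1) % self.max_size
        self.items[self.rear] = value

    def dequeue(self):
        if self.is_empty():
            raise IndexError("queue is empty")
        value = self.items[self.front]
        if self.front == self.rear:
            # The last element was removed: reset to the empty state.
            self.front = self.rear = -1
        else:
            self.front = (self.front + 1) % self.max_size
        return value
```

The modulo step is what lets freed slots at the start of the array be reused, which a plain linear array queue cannot do.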


Essential Algorithms and Data Structures for Competitive Programming

Competitive programming is a thrilling and intellectually stimulating field that challenges participants to solve complex problems efficiently and effectively. At its core, competitive programming revolves around algorithms and data structures—tools that help you tackle problems with precision and speed. If you're preparing for a competitive programming contest or just want to enhance your problem-solving skills, understanding essential algorithms and data structures is crucial. In this blog, we’ll walk through some of the most important ones you should be familiar with.

1. Arrays and Strings

Arrays are fundamental data structures that store elements in a contiguous block of memory. They allow for efficient access to elements via indexing and are often the first data structure you encounter in competitive programming.

Operations: Basic operations include traversal, insertion, deletion, and searching. Understanding how to manipulate arrays efficiently can help solve a wide range of problems.

Strings are arrays of characters and are often used to solve problems involving text processing. Basic string operations like concatenation, substring search, and pattern matching are essential.

2. Linked Lists

A linked list is a data structure where elements (nodes) are stored in separate memory locations and linked together using pointers. There are several types of linked lists:

Singly Linked List: Each node points to the next node.

Doubly Linked List: Each node points to both the next and previous nodes.

Circular Linked List: The last node points back to the first node.

Linked lists are useful when you need to frequently insert or delete elements as they allow for efficient manipulation of the data.
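To make the pointer manipulation concrete, here is a minimal sketch of a singly linked list in Python. The names `Node`, `SinglyLinkedList`, `push_front`, `remove`, and `to_list` are chosen for illustration only.

```python
class Node:
    def __init__(self, value, next=None):
        self.value = value
        self.next = next


class SinglyLinkedList:
    def __init__(self):
        self.head = None

    def push_front(self, value):
        # O(1): the new node simply points at the old head.
        self.head = Node(value, self.head)

    def remove(self, value):
        """Unlink the first node holding value; return True if one was found."""
        prev, cur = None, self.head
        while cur is not None:
            if cur.value == value:
                if prev is None:
                    self.head = cur.next   # removing the head node
                else:
                    prev.next = cur.next   # bypass the removed node
                return True
            prev, cur = cur, cur.next
        return False

    def to_list(self):
        out = []
        cur = self.head
        while cur is not None:
            out.append(cur.value)
            cur = cur.next
        return out
```

Finding the node still takes O(n) time; it is the unlinking step itself that is O(1), because no elements need to be shifted as they would in an array.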

3. Stacks and Queues

Stacks and queues are abstract data types that operate on a last-in-first-out (LIFO) and first-in-first-out (FIFO) principle, respectively.

Stacks: Useful for problems involving backtracking or nested structures (e.g., parsing expressions).

Queues: Useful for problems involving scheduling or buffering (e.g., breadth-first search).

Both can be implemented using arrays or linked lists and are foundational for many algorithms.
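As a small illustration, the sketch below uses a Python list as a stack to check nested brackets and `collections.deque` as a queue. The function name `is_balanced` and the sample values are illustrative.

```python
from collections import deque


def is_balanced(text):
    """Return True if every bracket in text is closed in LIFO order."""
    pairs = {")": "(", "]": "[", "}": "{"}
    stack = []
    for ch in text:
        if ch in "([{":
            stack.append(ch)              # push an opening bracket
        elif ch in pairs:
            # A closing bracket must match the most recent opening one.
            if not stack or stack.pop() != pairs[ch]:
                return False
    return not stack                      # leftovers mean unclosed brackets


# FIFO behaviour: the first item appended is the first one removed.
queue = deque()
queue.append("a")
queue.append("b")
first = queue.popleft()
```

A `deque` is used for the queue because removing from the front of a plain Python list takes O(n) time, while `popleft` on a deque is O(1).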

4. Hashing

Hashing involves using a hash function to convert keys into indices in a hash table. This allows for efficient data retrieval and insertion.

Hash Tables: Hash tables provide average-case constant time complexity for search, insert, and delete operations.

Collisions: Handling collisions (when two keys hash to the same index) using techniques like chaining or open addressing is crucial for effective hashing.
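The following is a minimal sketch of collision handling by chaining, assuming a fixed number of buckets and no resizing. The class name `ChainedHashTable` and its methods are hypothetical; in practice Python's built-in `dict` already covers this use case.

```python
class ChainedHashTable:
    """Hash table where each bucket is a list of (key, value) pairs."""

    def __init__(self, num_buckets=8):
        self.buckets = [[] for _ in range(num_buckets)]

    def _bucket(self, key):
        # The hash function maps a key to a bucket index.
        return self.buckets[hash(key) % len(self.buckets)]

    def put(self, key, value):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                bucket[i] = (key, value)   # overwrite an existing key
                return
        bucket.append((key, value))        # colliding keys share the chain

    def get(self, key, default=None):
        for k, v in self._bucket(key):
            if k == key:
                return v
        return default
```

With only a few buckets, many keys land in the same chain and lookups degrade toward O(n), which is why real implementations grow the table as it fills.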

5. Trees

Trees are hierarchical data structures with a root node and child nodes. They are used to represent hierarchical relationships and are key to many algorithms.

Binary Trees: Each node has at most two children. They are used in various applications such as binary search trees (BSTs), where every key in a node's left subtree is less than the node's key and every key in its right subtree is greater.

Binary Search Trees (BSTs): Useful for dynamic sets where elements need to be ordered. Operations like insertion, deletion, and search have an average-case time complexity of O(log n).

Balanced Trees: Trees like AVL trees and Red-Black trees maintain balance to ensure O(log n) time complexity for operations.
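A minimal sketch of an unbalanced BST with insertion and search is shown below. The names `BSTNode`, `bst_insert`, and `bst_contains` are illustrative, and duplicate keys are simply ignored, which is one of several possible policies.

```python
class BSTNode:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None


def bst_insert(root, key):
    """Insert key and return the (possibly new) root of the subtree."""
    if root is None:
        return BSTNode(key)
    if key < root.key:
        root.left = bst_insert(root.left, key)
    elif key > root.key:
        root.right = bst_insert(root.right, key)
    return root  # equal keys fall through: duplicates are ignored


def bst_contains(root, key):
    """Walk down from the root, going left or right at each node."""
    while root is not None:
        if key == root.key:
            return True
        root = root.left if key < root.key else root.right
    return False
```

Because this sketch does no rebalancing, inserting keys in sorted order produces a chain and operations degrade to O(n); AVL and Red-Black trees exist to prevent that.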

6. Heaps

A heap is a specialized tree-based data structure that satisfies the heap property:

Max-Heap: The value of each node is greater than or equal to the values of its children.

Min-Heap: The value of each node is less than or equal to the values of its children.

Heaps are used in algorithms like heap sort and are also crucial for implementing priority queues.
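As a short sketch, Python's standard `heapq` module provides a min-heap on top of a list, which is enough to build a priority queue. The task names and priorities below are made up for illustration, and negating values is one common way to simulate a max-heap.

```python
import heapq

# Priority queue: the tuple with the smallest priority number comes out first.
tasks = []
heapq.heappush(tasks, (2, "write tests"))
heapq.heappush(tasks, (1, "fix bug"))
heapq.heappush(tasks, (3, "refactor"))
order = [heapq.heappop(tasks)[1] for _ in range(3)]

# Max-heap behaviour by storing negated values in the min-heap.
numbers = [5, 1, 9]
max_heap = [-n for n in numbers]
heapq.heapify(max_heap)
largest = -heapq.heappop(max_heap)
```

Both `heappush` and `heappop` run in O(log n) time, while `heapify` builds a heap from an existing list in O(n).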

7. Graphs

Graphs represent relationships between entities using nodes (vertices) and edges. They are essential for solving problems involving networks, paths, and connectivity.

Graph Traversal: Algorithms like Breadth-First Search (BFS) and Depth-First Search (DFS) are used to explore nodes and edges in graphs.

Shortest Path: Algorithms such as Dijkstra’s and Floyd-Warshall help find the shortest path between nodes.

Minimum Spanning Tree: Algorithms like Kruskal’s and Prim’s are used to find the minimum spanning tree in a graph.
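As a sketch of graph traversal, the BFS below computes the number of edges from a start node to every reachable node. It assumes the graph is given as a dictionary mapping each node to a list of neighbours; the function name `bfs_distances` and the sample graph are illustrative.

```python
from collections import deque


def bfs_distances(graph, start):
    """Return {node: number of edges from start} for all reachable nodes."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in graph.get(node, []):
            if neighbour not in dist:          # first visit is the shortest
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    return dist


graph = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}
distances = bfs_distances(graph, "A")
```

BFS visits nodes level by level, so in an unweighted graph the first time a node is reached is also along a shortest path.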

8. Dynamic Programming

Dynamic Programming (DP) is a method for solving problems by breaking them down into simpler subproblems and storing the results of these subproblems to avoid redundant computations.

Memoization: Storing results of subproblems to avoid recomputation.

Tabulation: Building a table of results iteratively, bottom-up.

DP is especially useful for optimization problems, such as finding the shortest path, longest common subsequence, or knapsack problem.
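To contrast the two styles, here is a sketch that computes the length of the longest common subsequence both ways. The function names `lcs_memo` and `lcs_table` are illustrative.

```python
from functools import lru_cache


def lcs_memo(a, b):
    """Top-down: recurse on suffixes and cache each (i, j) result."""
    @lru_cache(maxsize=None)
    def solve(i, j):
        if i == len(a) or j == len(b):
            return 0
        if a[i] == b[j]:
            return 1 + solve(i + 1, j + 1)
        return max(solve(i + 1, j), solve(i, j + 1))

    return solve(0, 0)


def lcs_table(a, b):
    """Bottom-up: dp[i][j] is the LCS length of a[:i] and b[:j]."""
    dp = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                dp[i][j] = dp[i - 1][j - 1] + 1
            else:
                dp[i][j] = max(dp[i - 1][j], dp[i][j - 1])
    return dp[len(a)][len(b)]
```

Both versions solve each of the O(len(a) × len(b)) subproblems once; the memoized version is limited by Python's recursion depth on long inputs, which the table version avoids.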

9. Greedy Algorithms

Greedy Algorithms make a series of choices, each of which looks best at the moment, with the hope that these local choices will lead to a global optimum.

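Applications: Commonly used for problems like activity selection, Huffman coding, and coin change (where the greedy choice is only guaranteed to be optimal for certain coin systems, such as standard currency denominations).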
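Activity selection is a compact example of the greedy idea: always take the activity that finishes earliest among those that do not clash with what has already been chosen. The sketch below assumes activities are `(start, end)` pairs and that one activity may begin exactly when another ends; the function name `select_activities` is illustrative.

```python
def select_activities(intervals):
    """Return a maximum-size set of non-overlapping (start, end) intervals."""
    chosen = []
    last_end = float("-inf")
    # Greedy choice: consider activities in order of finishing time.
    for start, end in sorted(intervals, key=lambda iv: iv[1]):
        if start >= last_end:
            chosen.append((start, end))
            last_end = end
    return chosen
```

Sorting dominates the running time, so the whole procedure is O(n log n).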

10. Graph Algorithms

Understanding graph algorithms is crucial for competitive programming:

Shortest Path Algorithms: Dijkstra’s Algorithm, Bellman-Ford Algorithm.

Minimum Spanning Tree Algorithms: Kruskal’s Algorithm, Prim’s Algorithm.

Network Flow Algorithms: Ford-Fulkerson Algorithm, Edmonds-Karp Algorithm.
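As one worked example from this list, here is a sketch of Dijkstra's algorithm using a binary heap. It assumes non-negative edge weights and a graph given as a dictionary mapping each node to a list of `(neighbour, weight)` pairs; the function name and the sample graph are illustrative.

```python
import heapq


def dijkstra(graph, source):
    """Return {node: shortest distance from source} for reachable nodes."""
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry: a shorter path was already found
        for neighbour, weight in graph.get(node, []):
            candidate = d + weight
            if candidate < dist.get(neighbour, float("inf")):
                dist[neighbour] = candidate
                heapq.heappush(heap, (candidate, neighbour))
    return dist


graph = {
    "A": [("B", 4), ("C", 1)],
    "B": [("D", 1)],
    "C": [("B", 2), ("D", 5)],
    "D": [],
}
shortest = dijkstra(graph, "A")
```

Instead of decreasing a key inside the heap, this version pushes a new entry and skips outdated ones when they are popped, which is a common simplification with `heapq`. For graphs with negative edge weights, Bellman-Ford is the usual alternative.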

Preparing for Competitive Programming: Summer Internship Program

If you're eager to dive deeper into these algorithms and data structures, participating in a summer internship program focused on Data Structures and Algorithms (DSA) can be incredibly beneficial. At our Summer Internship Program, we provide hands-on experience and mentorship to help you master these crucial skills. This program is designed for aspiring programmers who want to enhance their competitive programming abilities and prepare for real-world challenges.

What to Expect:

Hands-On Projects: Work on real-world problems and implement algorithms and data structures.

Mentorship: Receive guidance from experienced professionals in the field.

Workshops and Seminars: Participate in workshops that cover advanced topics and techniques.

Networking Opportunities: Connect with peers and industry experts to expand your professional network.

By participating in our DSA Internship, you’ll gain practical experience and insights that will significantly boost your competitive programming skills and prepare you for success in contests and future career opportunities.

In conclusion, mastering essential algorithms and data structures is key to excelling in competitive programming. By understanding and practicing these concepts, you can tackle complex problems with confidence and efficiency. Whether you’re just starting out or looking to sharpen your skills, focusing on these fundamentals will set you on the path to success.

Ready to take your skills to the next level? Join our Summer Internship Program and dive into the world of algorithms and data structures with expert guidance and hands-on experience. Your journey to becoming a competitive programming expert starts here!


How to Ace Your DSA Interview, Even If You're a Newbie

Are you aiming to crack DSA interviews and land your dream job as a software engineer or developer? Look no further! This comprehensive guide will provide you with all the necessary tips and insights to ace your DSA interviews. We'll explore the important DSA topics to study, share valuable preparation tips, and even introduce you to Tutort Academy DSA courses to help you get started on your journey. So let's dive in!

Why is DSA Important?

Before we delve into the specifics of DSA interviews, let's first understand why data structures and algorithms are crucial for software development. DSA plays a vital role in optimizing software components, enabling efficient data storage and processing.

From logging into your Facebook account to finding the shortest route on Google Maps, DSA is at work in various applications we use every day. Mastering DSA allows you to solve complex problems, optimize code performance, and design efficient software systems.

Important DSA Topics to Study

To excel in DSA interviews, it's essential to have a strong foundation in key topics. Here are some important DSA topics you should study:

1. Arrays and Strings

Arrays and strings are fundamental data structures in programming. Understanding array manipulation, string operations, and common algorithms like sorting and searching is crucial for solving coding problems.

2. Linked Lists

Linked lists are linear data structures that consist of nodes linked together. It's important to understand concepts like singly linked lists, doubly linked lists, and circular linked lists, as well as operations like insertion, deletion, and traversal.

3. Stacks and Queues

Stacks and queues are abstract data types that follow specific orderings. Mastering concepts like LIFO (Last In, First Out) for stacks and FIFO (First In, First Out) for queues is essential. Additionally, learn about their applications in real-life scenarios.

4. Trees and Binary Trees

Trees are hierarchical data structures with nodes connected by edges. Understanding binary trees, binary search trees, and traversal algorithms like preorder, inorder, and postorder is crucial. Additionally, explore advanced concepts like AVL trees and red-black trees.
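The three depth-first traversal orders differ only in when the current node is visited relative to its subtrees. The sketch below shows this on a three-node tree; `TreeNode` and the function names are illustrative.

```python
class TreeNode:
    def __init__(self, value, left=None, right=None):
        self.value = value
        self.left = left
        self.right = right


def preorder(node):
    # Visit the node, then the left subtree, then the right subtree.
    if node is None:
        return []
    return [node.value] + preorder(node.left) + preorder(node.right)


def inorder(node):
    # Visit the left subtree, then the node, then the right subtree.
    if node is None:
        return []
    return inorder(node.left) + [node.value] + inorder(node.right)


def postorder(node):
    # Visit both subtrees before the node itself.
    if node is None:
        return []
    return postorder(node.left) + postorder(node.right) + [node.value]


root = TreeNode(2, TreeNode(1), TreeNode(3))
```

On a binary search tree, the inorder traversal returns the keys in sorted order, which is a useful sanity check in interviews.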

5. Graphs

Graphs are non-linear data structures consisting of nodes (vertices) and edges. Familiarize yourself with graph representations, traversal algorithms like BFS (Breadth-First Search) and DFS (Depth-First Search), and graph algorithms such as Dijkstra's algorithm and Kruskal's algorithm.

6. Sorting and Searching Algorithms

Understanding various sorting algorithms like bubble sort, selection sort, insertion sort, merge sort, and quicksort is essential. Additionally, familiarize yourself with searching algorithms like linear search, binary search, and hash-based searching.
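Binary search is a frequent interview warm-up, so a minimal iterative sketch may help. It assumes the input list is already sorted in ascending order and returns -1 when the target is absent; the function name is illustrative.

```python
def binary_search(sorted_items, target):
    """Return the index of target in sorted_items, or -1 if it is absent."""
    lo, hi = 0, len(sorted_items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            lo = mid + 1      # target can only be in the right half
        else:
            hi = mid - 1      # target can only be in the left half
    return -1
```

Each iteration halves the search range, giving O(log n) comparisons versus O(n) for a linear scan.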

7. Dynamic Programming

Dynamic programming involves breaking down a complex problem into smaller overlapping subproblems and solving them individually. Mastering this technique allows you to solve optimization problems efficiently.

These are just a few of the important DSA topics to study. It's crucial to have a solid understanding of these concepts and their applications to perform well in DSA interviews.

Tips to Follow While Preparing for DSA Interviews

Preparing for DSA interviews can be challenging, but with the right approach, you can maximize your chances of success. Here are some tips to keep in mind:

1. Understand the Fundamentals

Before diving into complex algorithms, ensure you have a strong grasp of the fundamentals. Familiarize yourself with basic data structures, common algorithms, and time and space complexities.

2. Practice Regularly

Consistent practice is key to mastering DSA. Solve a wide range of coding problems, participate in coding challenges, and implement algorithms from scratch. Use online coding platforms like LeetCode and HackerRank to practice and improve your problem-solving skills.

3. Analyze and Optimize

After solving a problem, analyze your solution and look for areas of improvement. Optimize your code for better time and space complexities. This demonstrates your ability to write efficient and scalable code.

4. Collaborate and Learn from Others

Engage with the coding community, join study groups, and participate in online forums. Collaborating with others allows you to learn different approaches, gain insights, and improve your problem-solving skills.

5. Mock Interviews and Feedback

Simulate real interview scenarios by participating in mock interviews. Seek feedback from experienced professionals or mentors who can provide valuable insights into your strengths and areas for improvement.

Following these tips will help you build a solid foundation in DSA and boost your confidence for interviews.

Conclusion

Mastering DSA is crucial for acing coding interviews and securing your dream job as a software engineer or developer. By studying important DSA topics, following effective preparation tips, and leveraging Tutort Academy's DSA courses, you'll be well-equipped to tackle DSA interviews with confidence. Remember to practice regularly, seek feedback, and stay curious.

Good luck on your DSA journey!


Data Structures and Algorithms from Zero to Hero and Crack Top Companies 100+ Interview questions (Java Coding)

What you’ll learn

Java Data Structures and Algorithms Masterclass

Learn, implement, and use different Data Structures

Learn, implement and use different Algorithms

Become a better developer by mastering computer science fundamentals

Learn everything you need to ace difficult coding interviews

Cracking the Coding Interview with 100+ questions with explanations

Time and Space Complexity of Data Structures and Algorithms

Recursion

Big O

Dynamic Programming

Divide and Conquer Algorithms

Graph Algorithms

Greedy Algorithms

Requirements

Basic Java Programming skills

Description

Welcome to the Java Data Structures and Algorithms Masterclass, the most modern, and the most complete Data Structures and Algorithms in Java course on the internet.

At 44+ hours, this is the most comprehensive course online to help you ace your coding interviews and learn about Data Structures and Algorithms in Java. You will see 100+ interview questions asked at top technology companies such as Apple, Amazon, Google, and Microsoft, and learn how to face interviews, with comprehensive visual video explanations that will bring you closer to landing the tech job of your dreams!

Learning Java is one of the fastest ways to improve your career prospects, as it is one of the most in-demand tech skills! This course will help you better understand every detail of Data Structures and how algorithms are implemented in high-level programming languages.

We’ll take you step-by-step through engaging video tutorials and teach you everything you need to succeed as a professional programmer.

After finishing this course, you will be able to:

Use basic algorithmic techniques such as greedy algorithms, binary search, sorting, and dynamic programming to solve programming challenges.

Weigh the strengths and weaknesses of a variety of data structures, so you can choose the best data structure for your data and applications.

Use many of the algorithms commonly used to sort data, so your applications will perform efficiently when sorting large datasets.

Apply graph and string algorithms to solve real-world challenges: finding shortest paths on huge maps and assembling genomes from millions of pieces.

Why is this course so special and different from any other resource available online?

This course will take you from the very beginning to very complex and advanced topics in understanding Data Structures and Algorithms!

You will get video lectures explaining concepts clearly with comprehensive visual explanations throughout the course.

You will also see Interview Questions done at the top technology companies such as Apple, Amazon, Google, and Microsoft.

I cover everything you need to know about the technical interview process!

So if you are interested in learning the top programming language in the world in depth, want to learn the fundamental algorithms, data structures, and performance analysis that make up the core skillset of every accomplished programmer, designer, or software architect, and are excited to ace your next technical interview, this is the course for you!

And this is what you get by signing up today:

Lifetime access to 44+ hours of HD quality videos. No monthly subscription. Learn at your own pace, whenever you want

Friendly and fast support in the course Q&A whenever you have questions or get stuck

FULL money-back guarantee for 30 days!

This course is designed to help you to achieve your career goals. Whether you are looking to get more into Data Structures and Algorithms, increase your earning potential, or just want a job with more freedom, this is the right course for you!

The topics that are covered in this course.

Section 1 – Introduction

What are Data Structures?

What is an algorithm?

Why are Data Structures And Algorithms important?

Types of Data Structures

Types of Algorithms

Section 2 – Recursion

What is Recursion?

Why do we need recursion?

How does Recursion work?

Recursive vs Iterative Solutions

When to use/avoid Recursion?

How to write Recursion in 3 steps?

How to find Fibonacci numbers using Recursion?

Section 3 – Cracking Recursion Interview Questions

Question 1 – Sum of Digits

Question 2 – Power

Question 3 – Greatest Common Divisor

Question 4 – Decimal To Binary

Section 4 – Bonus CHALLENGING Recursion Problems (Exercises)

power

factorial

productOfArray

recursiveRange

fib

reverse

palindrome

someRecursive

flatten

capitalizeFirst

nestedEvenSum

capitalizeWords

stringifyNumbers

collectStrings

Section 5 – Big O Notation

Analogy and Time Complexity

Big O, Big Theta, and Big Omega

Time complexity examples

Space Complexity

Drop the Constants and the nondominant terms

Add vs Multiply

How to measure code using Big O?

How to find time complexity for Recursive calls?

How to measure Recursive Algorithms that make multiple calls?

Section 6 – Top 10 Big O Interview Questions (Amazon, Facebook, Apple, and Microsoft)

Product and Sum

Print Pairs

Print Unordered Pairs

Print Unordered Pairs 2 Arrays

Print Unordered Pairs 2 Arrays 100000 Units

Reverse

O(N) Equivalents

Factorial Complexity

Fibonacci Complexity

Powers of 2

Section 7 – Arrays

What is an Array?

Types of Array

Arrays in Memory

Create an Array

Insertion Operation

Traversal Operation

Accessing an element of Array

Searching for an element in Array

Deleting an element from Array

Time and Space complexity of One Dimensional Array

One Dimensional Array Practice

Create Two Dimensional Array

Insertion – Two Dimensional Array

Accessing an element of Two Dimensional Array

Traversal – Two Dimensional Array

Searching for an element in Two Dimensional Array

Deletion – Two Dimensional Array

Time and Space complexity of Two Dimensional Array

When to use/avoid array

Section 8 – Cracking Array Interview Questions (Amazon, Facebook, Apple, and Microsoft)

Question 1 – Missing Number

Question 2 – Pairs

Question 3 – Finding a number in an Array

Question 4 – Max product of two int

Question 5 – Is Unique

Question 6 – Permutation

Question 7 – Rotate Matrix

Section 9 – CHALLENGING Array Problems (Exercises)

Middle Function

2D Lists

Best Score

Missing Number

Duplicate Number

Pairs

Section 10 – Linked List

What is a Linked List?

Linked List vs Arrays

Types of Linked List

Linked List in the Memory

Creation of Singly Linked List

Insertion in Singly Linked List in Memory

Insertion in Singly Linked List Algorithm

Insertion Method in Singly Linked List

Traversal of Singly Linked List

Search for a value in Singly Linked List

Deletion of a node from Singly Linked List

Deletion Method in Singly Linked List

Deletion of entire Singly Linked List

Time and Space Complexity of Singly Linked List

Section 11 – Circular Singly Linked List

Creation of Circular Singly Linked List

Insertion in Circular Singly Linked List

Insertion Algorithm in Circular Singly Linked List

Insertion method in Circular Singly Linked List

Traversal of Circular Singly Linked List

Searching a node in Circular Singly Linked List

Deletion of a node from Circular Singly Linked List

Deletion Algorithm in Circular Singly Linked List

Deletion Method in Circular Singly Linked List

Deletion of entire Circular Singly Linked List

Time and Space Complexity of Circular Singly Linked List

Section 12 – Doubly Linked List

Creation of Doubly Linked List

Insertion in Doubly Linked List

Insertion Algorithm in Doubly Linked List

Insertion Method in Doubly Linked List

Traversal of Doubly Linked List

Reverse Traversal of Doubly Linked List

Searching for a node in Doubly Linked List

Deletion of a node in Doubly Linked List

Deletion Algorithm in Doubly Linked List

Deletion Method in Doubly Linked List

Deletion of entire Doubly Linked List

Time and Space Complexity of Doubly Linked List

Section 13 – Circular Doubly Linked List

Creation of Circular Doubly Linked List

Insertion in Circular Doubly Linked List

Insertion Algorithm in Circular Doubly Linked List

Insertion Method in Circular Doubly Linked List

Traversal of Circular Doubly Linked List

Reverse Traversal of Circular Doubly Linked List

Search for a node in Circular Doubly Linked List

Delete a node from Circular Doubly Linked List

Deletion Algorithm in Circular Doubly Linked List

Deletion Method in Circular Doubly Linked List

Deletion of entire Circular Doubly Linked List

Time and Space Complexity of Circular Doubly Linked List

Time Complexity of Linked List vs Arrays

Section 14 – Cracking Linked List Interview Questions (Amazon, Facebook, Apple, and Microsoft)

Linked List Class

Question 1 – Remove Dups

Question 2 – Return Kth to Last

Question 3 – Partition

Question 4 – Sum Linked Lists

Question 5 – Intersection

Section 15 – Stack

What is a Stack?

What and Why of Stack?

Stack Operations

Stack using Array vs Linked List

Stack Operations using Array (Create, isEmpty, isFull)

Stack Operations using Array (Push, Pop, Peek, Delete)

Time and Space Complexity of Stack using Array

Stack Operations using Linked List

Stack methods – Push, Pop, Peek, Delete, and isEmpty using Linked List

Time and Space Complexity of Stack using Linked List

When to Use/Avoid Stack

Stack Quiz

Section 16 – Queue

What is a Queue?

Linear Queue Operations using Array

Create, isFull, isEmpty, and enQueue methods using Linear Queue Array

Dequeue, Peek and Delete Methods using Linear Queue Array

Time and Space Complexity of Linear Queue using Array

Why Circular Queue?

Circular Queue Operations using Array

Create, Enqueue, isFull and isEmpty Methods in Circular Queue using Array

Dequeue, Peek and Delete Methods in Circular Queue using Array

Time and Space Complexity of Circular Queue using Array

Queue Operations using Linked List

Create, Enqueue and isEmpty Methods in Queue using Linked List

Dequeue, Peek and Delete Methods in Queue using Linked List

Time and Space Complexity of Queue using Linked List

Array vs Linked List Implementation

When to Use/Avoid Queue?

Section 17 – Cracking Stack and Queue Interview Questions (Amazon, Facebook, Apple, Microsoft)

Question 1 – Three in One

Question 2 – Stack Minimum

Question 3 – Stack of Plates

Question 4 – Queue via Stacks

Question 5 – Animal Shelter

Section 18 – Tree / Binary Tree

What is a Tree?

Why Tree?

Tree Terminology

How to create a basic tree in Java?

Binary Tree

Types of Binary Tree

Binary Tree Representation

Create Binary Tree (Linked List)

PreOrder Traversal Binary Tree (Linked List)

InOrder Traversal Binary Tree (Linked List)

PostOrder Traversal Binary Tree (Linked List)

LevelOrder Traversal Binary Tree (Linked List)

Searching for a node in Binary Tree (Linked List)

Inserting a node in Binary Tree (Linked List)

Delete a node from Binary Tree (Linked List)

Delete entire Binary Tree (Linked List)

Create Binary Tree (Array)

Insert a value Binary Tree (Array)

Search for a node in Binary Tree (Array)

PreOrder Traversal Binary Tree (Array)

InOrder Traversal Binary Tree (Array)

PostOrder Traversal Binary Tree (Array)

Level Order Traversal Binary Tree (Array)

Delete a node from Binary Tree (Array)

Delete entire Binary Tree (Array)

Linked List vs Array Binary Tree

Section 19 – Binary Search Tree

What is a Binary Search Tree? Why do we need it?

Create a Binary Search Tree

Insert a node to BST

Traverse BST

Search in BST

Delete a node from BST

Delete entire BST

Time and Space complexity of BST

Section 20 – AVL Tree

What is an AVL Tree?

Why AVL Tree?

Common Operations on AVL Trees

Insert a node in AVL (Left Left Condition)

Insert a node in AVL (Left-Right Condition)

Insert a node in AVL (Right Right Condition)

Insert a node in AVL (Right Left Condition)

Insert a node in AVL (all together)

Insert a node in AVL (method)

Delete a node from AVL (LL, LR, RR, RL)

Delete a node from AVL (all together)

Delete a node from AVL (method)

Delete entire AVL

Time and Space complexity of AVL Tree

Section 21 – Binary Heap

What is Binary Heap? Why do we need it?

Common operations (Creation, Peek, sizeofheap) on Binary Heap

Insert a node in Binary Heap

Extract a node from Binary Heap

Delete entire Binary Heap

Time and space complexity of Binary Heap

Section 22 – Trie

What is a Trie? Why do we need it?

Common Operations on Trie (Creation)

Insert a string in Trie

Search for a string in Trie

Delete a string from Trie

Practical use of Trie

Section 23 – Hashing

What is Hashing? Why do we need it?

Hashing Terminology

Hash Functions

Types of Collision Resolution Techniques

Hash Table is Full

Pros and Cons of Resolution Techniques

Practical Use of Hashing

Hashing vs Other Data structures

Section 24 – Sort Algorithms

What is Sorting?

Types of Sorting

Sorting Terminologies

Bubble Sort

Selection Sort

Insertion Sort

Bucket Sort

Merge Sort

Quick Sort

Heap Sort

Comparison of Sorting Algorithms

Section 25 – Searching Algorithms

Introduction to Searching Algorithms

Linear Search

Linear Search in Python

Binary Search

Binary Search in Python

Time Complexity of Binary Search

Section 26 – Graph Algorithms

What is a Graph? Why Graph?

Graph Terminology

Types of Graph

Graph Representation

The graph in Java using Adjacency Matrix

The graph in Java using Adjacency List

Section 27 – Graph Traversal

Breadth-First Search Algorithm (BFS)

Breadth-First Search Algorithm (BFS) in Java – Adjacency Matrix

Breadth-First Search Algorithm (BFS) in Java – Adjacency List

Time Complexity of Breadth-First Search (BFS) Algorithm

Depth First Search (DFS) Algorithm

Depth First Search (DFS) Algorithm in Java – Adjacency List

Depth First Search (DFS) Algorithm in Java – Adjacency Matrix

Time Complexity of Depth First Search (DFS) Algorithm

BFS Traversal vs DFS Traversal

Section 28 – Topological Sort

What is Topological Sort?

Topological Sort Algorithm

Topological Sort using Adjacency List

Topological Sort using Adjacency Matrix

Time and Space Complexity of Topological Sort

Section 29 – Single Source Shortest Path Problem

What is the Single Source Shortest Path Problem?

Breadth-First Search (BFS) for Single Source Shortest Path Problem (SSSPP)

BFS for SSSPP in Java using Adjacency List

BFS for SSSPP in Java using Adjacency Matrix

Time and Space Complexity of BFS for SSSPP

Why does BFS not work with Weighted Graph?

Why does DFS not work for SSSP?

Section 30 – Dijkstra’s Algorithm

Dijkstra’s Algorithm for SSSPP

Dijkstra’s Algorithm in Java – 1

Dijkstra’s Algorithm in Java – 2

Dijkstra’s Algorithm with Negative Cycle

Section 31 – Bellman-Ford Algorithm

Bellman-Ford Algorithm

Bellman-Ford Algorithm with negative cycle

Why does Bellman-Ford run V-1 times?

Bellman-Ford in Python

BFS vs Dijkstra vs Bellman Ford

Section 32 – All Pairs Shortest Path Problem

All pairs shortest path problem

Dry run for All pair shortest path

Section 33 – Floyd Warshall

Floyd Warshall Algorithm

Why Floyd Warshall?

Floyd Warshall with negative cycle

Floyd Warshall in Java

BFS vs Dijkstra vs Bellman Ford vs Floyd Warshall

Section 34 – Minimum Spanning Tree

Minimum Spanning Tree

Disjoint Set

Disjoint Set in Java

Section 35 – Kruskal’s and Prim’s Algorithms

Kruskal Algorithm

Kruskal Algorithm in Python

Prim’s Algorithm

Prim’s Algorithm in Python

Prim’s vs Kruskal

Section 36 – Cracking Graph and Tree Interview Questions (Amazon, Facebook, Apple, Microsoft)

Section 37 – Greedy Algorithms

What is a Greedy Algorithm?

Well known Greedy Algorithms

Activity Selection Problem

Activity Selection Problem in Python

Coin Change Problem

Coin Change Problem in Python

Fractional Knapsack Problem

Fractional Knapsack Problem in Python

Section 38 – Divide and Conquer Algorithms

What is a Divide and Conquer Algorithm?

Common Divide and Conquer algorithms

How to solve the Fibonacci series using the Divide and Conquer approach?

Number Factor

Number Factor in Java

House Robber

House Robber Problem in Java

Convert one string to another

Convert One String to another in Java

Zero One Knapsack problem

Zero One Knapsack problem in Java

Longest Common Subsequence Problem

Longest Common Subsequence in Java

Longest Palindromic Subsequence Problem

Longest Palindromic Subsequence in Java

Minimum cost to reach the Last cell problem

Minimum Cost to reach the Last Cell in 2D array using Java

Number of Ways to reach the Last Cell with given Cost

Number of Ways to reach the Last Cell with given Cost in Java

Section 39 – Dynamic Programming

What is Dynamic Programming? (Overlapping property)

Where does the name of DP come from?

Top-Down with Memoization

Bottom-Up with Tabulation

Top-Down vs Bottom Up

Is Merge Sort Dynamic Programming?

Number Factor Problem using Dynamic Programming

Number Factor: Top-Down and Bottom-Up

House Robber Problem using Dynamic Programming

House Robber: Top-Down and Bottom-Up

Convert one string to another using Dynamic Programming

Convert String using Bottom Up

Zero One Knapsack using Dynamic Programming

Zero One Knapsack – Top Down

Zero One Knapsack – Bottom Up

Section 40 – CHALLENGING Dynamic Programming Problems

Longest repeated Subsequence Length problem

Longest Common Subsequence Length problem

Longest Common Subsequence problem

Diff Utility

Shortest Common Subsequence problem

Length of Longest Palindromic Subsequence

Subset Sum Problem

Egg Dropping Puzzle

Maximum Length Chain of Pairs

Section 41 – A Recipe for Problem Solving

Introduction

Step 1 – Understand the problem

Step 2 – Examples

Step 3 – Break it Down

Step 4 – Solve or Simplify

Step 5 – Look Back and Refactor

Section 41 – Wild West



Video

youtube

Circular Queue - Insertion/Deletion - With Example in Hindi

This Hindi video tutorial explains insertion and deletion in a circular queue, with an example. You will learn how to write a C program that implements a circular queue using an array, and see how a circular queue can be built in C using a linked list. The video also covers the circular queue algorithm, types of queue, and the double-ended queue. You can download the program from the following link: http://bmharwani.com/circularqueuearr.c For more videos on Data Structures, visit: https://www.youtube.com/watch?v=TRXkTGu0n9g&list=PLuDr_vb2LpAxZdUV5gyea-TsEJ06k_-Aw&index=14 To get notifications for the latest videos, subscribe to my channel: https://youtube.com/c/bintuharwani To see more videos on different computer subjects, visit: http://bmharwani.com
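The linked program is in C and is not reproduced here. As a rough illustration of the array-plus-modulo idea the video is about, here is a minimal Python sketch. The class and method names (CircularQueue, enqueue, dequeue) are my own choices for illustration, not taken from the video or its program.

```python
class CircularQueue:
    """Fixed-capacity FIFO queue stored in a preallocated list."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._items = [None] * capacity
        self._front = 0  # index of the oldest element
        self._size = 0   # number of elements currently stored

    def is_empty(self):
        return self._size == 0

    def is_full(self):
        return self._size == len(self._items)

    def enqueue(self, value):
        if self.is_full():
            raise OverflowError("queue is full")
        # The modulo makes the rear index wrap back to slot 0.
        rear = (self._front + self._size) % len(self._items)
        self._items[rear] = value
        self._size += 1

    def dequeue(self):
        if self.is_empty():
            raise IndexError("queue is empty")
        value = self._items[self._front]
        self._items[self._front] = None
        # The front index wraps around in the same way.
        self._front = (self._front + 1) % len(self._items)
        self._size -= 1
        return value
```

Tracking a size counter instead of comparing front and rear indices is one common way to tell a full queue from an empty one; the C program may well use a different convention.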

#circular queue in hindi#circular queue in c#implement circular queue using array#circular queue tutorial#how to implement circular queue using array#circular queue using array with output#use of modulo operator in circular queue#circular queue using array example#circular queue algorithm#circular queue tutorialspoint#circular queue ppt#bintu harwani#b.m. harwani#circular queue using array#circular queue#c program to implement circular queue#circular queue program#types of queue


Text

Learning JavaScript Data Structures and Algorithms - Second Edition - Loiane Groner

Author: Loiane Groner Genre: Computers Price: $35.99 Publish Date: June 23, 2016 Publisher: Packt Publishing Seller: Ingram DV LLC

Hone your skills by learning classic data structures and algorithms in JavaScript

About This Book

• Understand common data structures and the associated algorithms, as well as the context in which they are used.

• Master existing JavaScript data structures such as array, set and map and learn how to implement new ones such as stacks, linked lists, trees and graphs.

• All concepts are explained in an easy way, followed by examples.

Who This Book Is For

If you are a student of Computer Science or are at the start of your technology career and want to explore JavaScript's optimum ability, this book is for you. You need a basic knowledge of JavaScript and programming logic to start having fun with algorithms.

What You Will Learn

• Declare, initialize, add, and remove items from arrays, stacks, and queues

• Get the knack of using algorithms such as DFS (Depth-First Search) and BFS (Breadth-First Search) for the most complex data structures

• Harness the power of creating linked lists, doubly linked lists, and circular linked lists

• Store unique elements with hash tables, dictionaries, and sets

• Use binary trees and binary search trees

• Sort data structures using a range of algorithms such as bubble sort, insertion sort, and quick sort

In Detail

This book begins by covering the basics of the JavaScript language and introducing ECMAScript 7, before gradually moving on to the current implementations of ECMAScript 6. You will gain an in-depth knowledge of how hash tables and set data structures function, as well as how trees and hash maps can be used to search files on a hard drive or represent a database. This book is an accessible route deeper into JavaScript.

Because graphs are among the most complex data structures you'll encounter, we'll also give you a better understanding of why and how graphs are widely used in GPS navigation systems and social networks. Toward the end of the book, you'll discover how all the theories presented by this book can be applied in real-world solutions while working on your own computer networks and Facebook searches.

Style and approach

This book gets straight to the point, providing you with examples of how a data structure or algorithm can be used and giving you real-world applications of the algorithm in JavaScript. With real-world use cases associated with each data structure, the book explains which data structure should be used to achieve the desired results in the real world. http://bit.ly/2VsJZnv


Link


Author:

Catherine Wilson

Published in: Princeton University Press Release Year: 1997 ISBN: 978-8193-24527-9 Pages: 145 Edition: 1st File Size: 17 MB File Type: pdf Language: English


Description of Data Structures and Algorithms Made Easy

Please hold on! I know many people typically do not read the Preface of a book. But I strongly recommend that you read this particular Preface. It is not the main objective of the Data Structures and Algorithms Made Easy book to present you with the theorems and proofs on data structures and algorithms. I have followed a pattern of improving the problem solutions with different complexities (for each problem, you will find multiple solutions with different, and reduced, complexities). Basically, it’s an enumeration of possible solutions. With this approach, even if you get a new question, it will show you a way to think about the possible solutions. You will find the Data Structures and Algorithms Made Easy book useful for interview preparation, competitive exams preparation, and campus interview preparations.

As a job seeker, if you read the complete book, I am sure you will be able to challenge the interviewers. If you read it as an instructor, it will help you to deliver lectures with an approach that is easy to follow, and as a result your students will appreciate the fact that they have opted for Computer Science / Information Technology as their degree. Data Structures and Algorithms Made Easy book is also useful for Engineering degree students and Masters degree students during their academic preparations. In all the chapters you will see that there is more emphasis on problems and their analysis rather than on theory. In each chapter, you will first read about the basic required theory, which is then followed by a section on problem sets.

In total, there are approximately 700 algorithmic problems, all with solutions. If you read the book as a student preparing for competitive exams for Computer Science / Information Technology, the content covers all the required topics in full detail. While writing Data Structures and Algorithms Made Easy book, my main focus was to help students who are preparing for these exams. In all the chapters you will see more emphasis on problems and analysis rather than on theory. In each chapter, you will first see the basic required theory followed by various problems. For many problems, multiple solutions are provided with different levels of complexity. We start with the brute force solution and slowly move toward the best solution possible for that problem. For each problem, we endeavor to understand how much time the algorithm takes and how much memory the algorithm uses.

Table of Contents of Data Structures and Algorithms Made Easy

1. Introduction

1.1 Variables

1.2 Data Types

1.3 Data Structures

1.4 Abstract Data Types (ADTs)

1.5 What is an Algorithm?

1.6 Why the Analysis of Algorithms?

1.7 Goal of the Analysis of Algorithms

1.8 What is Running Time Analysis?

1.9 How to Compare Algorithms

1.10 What is Rate of Growth?

1.11 Commonly Used Rates of Growth

1.12 Types of Analysis

1.13 Asymptotic Notation

1.14 Big-O Notation [Upper Bounding Function]

1.15 Omega-Ω Notation [Lower Bounding Function]

1.16 Theta-Θ Notation [Order Function]

1.17 Important Notes

1.18 Why is it called Asymptotic Analysis?

1.19 Guidelines for Asymptotic Analysis

1.20 Simplifying properties of asymptotic notations

1.21 Commonly used Logarithms and Summations

1.22 Master Theorem for Divide and Conquer Recurrences

1.23 Divide and Conquer Master Theorem: Problems & Solutions

1.24 Master Theorem for Subtract and Conquer Recurrences

1.25 Variant of Subtraction and Conquer Master Theorem

1.26 Method of Guessing and Confirming

1.27 Amortized Analysis

1.28 Algorithms Analysis: Problems & Solutions

2. Recursion and Backtracking

2.1 Introduction

2.2 What is Recursion?

2.3 Why Recursion?

2.4 Format of a Recursive Function

2.5 Recursion and Memory (Visualization)

2.6 Recursion versus Iteration

2.7 Notes on Recursion

2.8 Example Algorithms of Recursion

2.9 Recursion: Problems & Solutions

2.10 What is Backtracking?

2.11 Example Algorithms of Backtracking

2.12 Backtracking: Problems & Solutions

3. Linked Lists

3.1 What is a Linked List?

3.2 Linked Lists ADT

3.3 Why Linked Lists?

3.4 Arrays Overview

3.5 Comparison of Linked Lists with Arrays & Dynamic Arrays

3.6 Singly Linked Lists

3.7 Doubly Linked Lists

3.8 Circular Linked Lists

3.9 A Memory-efficient Doubly Linked List

3.10 Unrolled Linked Lists

3.11 Skip Lists

3.12 Linked Lists: Problems & Solutions

4. Stacks

4.1 What is a Stack?

4.2 How Stacks are used

4.3 Stack ADT

4.4 Applications

4.5 Implementation

4.6 Comparison of Implementations

4.7 Stacks: Problems & Solutions

5. Queues

5.1 What is a Queue?

5.2 How are Queues Used?

5.3 Queue ADT

5.4 Exceptions

5.5 Applications

5.6 Implementation

5.7 Queues: Problems & Solutions

6. Trees

6.1 What is a Tree?

6.2 Glossary

6.3 Binary Trees

6.4 Types of Binary Trees

6.5 Properties of Binary Trees

6.6 Binary Tree Traversals

6.7 Generic Trees (N-ary Trees)

6.8 Threaded Binary Tree Traversals (Stack or Queue-less Traversals)

6.9 Expression Trees

6.10 XOR Trees

6.11 Binary Search Trees (BSTs)

6.12 Balanced Binary Search Trees

6.13 AVL(Adelson-Velskii and Landis) Trees

6.14 Other Variations on Trees

7. Priority Queues and Heaps

7.1 What is a Priority Queue?

7.2 Priority Queue ADT

7.3 Priority Queue Applications

7.4 Priority Queue Implementations

7.5 Heaps and Binary Heaps

7.6 Binary Heaps

7.7 Heapsort

7.8 Priority Queues [Heaps]: Problems & Solutions

8. Disjoint Sets ADT

8.1 Introduction

8.2 Equivalence Relations and Equivalence Classes

8.3 Disjoint Sets ADT

8.4 Applications

8.5 Tradeoffs in Implementing Disjoint Sets ADT

8.8 Fast UNION Implementation (Slow FIND)

8.9 Fast UNION Implementations (Quick FIND)

8.10 Summary

8.11 Disjoint Sets: Problems & Solutions

9. Graph Algorithms

9.1 Introduction

9.2 Glossary

9.3 Applications of Graphs

9.4 Graph Representation

9.5 Graph Traversals

9.6 Topological Sort

9.7 Shortest Path Algorithms

9.8 Minimal Spanning Tree

9.9 Graph Algorithms: Problems & Solutions

10. Sorting

10.1 What is Sorting?

10.2 Why is Sorting Necessary?

10.3 Classification of Sorting Algorithms

10.4 Other Classifications

10.5 Bubble Sort

10.6 Selection Sort

10.7 Insertion Sort

10.8 Shell Sort

10.9 Merge Sort

10.10 Heap Sort

10.11 Quick Sort

10.12 Tree Sort

10.13 Comparison of Sorting Algorithms

10.14 Linear Sorting Algorithms

10.15 Counting Sort

10.16 Bucket Sort (or Bin Sort)

10.17 Radix Sort

10.18 Topological Sort

10.19 External Sorting

10.20 Sorting: Problems & Solutions

11. Searching

11.1 What is Searching?

11.2 Why do we need Searching?

11.3 Types of Searching

11.4 Unordered Linear Search

11.5 Sorted/Ordered Linear Search

11.6 Binary Search

11.7 Interpolation Search

11.8 Comparing Basic Searching Algorithms

11.9 Symbol Tables and Hashing

11.10 String Searching Algorithms

11.11 Searching: Problems & Solutions

12. Selection Algorithms [Medians]

12.1 What are Selection Algorithms?

12.2 Selection by Sorting

12.3 Partition-based Selection Algorithm

12.4 Linear Selection Algorithm - Median of Medians Algorithm

12.5 Finding the K Smallest Elements in Sorted Order

12.6 Selection Algorithms: Problems & Solutions

13. Symbol Tables

13.1 Introduction

13.2 What are Symbol Tables?

13.3 Symbol Table Implementations

13.4 Comparison Table of Symbols for Implementations

14. Hashing

14.1 What is Hashing?

14.2 Why Hashing?

14.3 HashTable ADT

14.4 Understanding Hashing

14.5 Components of Hashing

14.6 Hash Table

14.7 Hash Function

14.8 Load Factor

14.9 Collisions

14.10 Collision Resolution Techniques

14.11 Separate Chaining

14.12 Open Addressing

14.13 Comparison of Collision Resolution Techniques

14.14 How Hashing Gets O(1) Complexity?

14.15 Hashing Techniques

14.16 Problems for which Hash Tables are not suitable

14.17 Bloom Filters

14.18 Hashing: Problems & Solutions

15. String Algorithms

15.1 Introduction

15.2 String Matching Algorithms

15.3 Brute Force Method

15.4 Rabin-Karp String Matching Algorithm

15.5 String Matching with Finite Automata

15.6 KMP Algorithm

15.7 Boyer-Moore Algorithm

15.8 Data Structures for Storing Strings

15.9 Hash Tables for Strings

15.10 Binary Search Trees for Strings

15.11 Tries

15.12 Ternary Search Trees

15.13 Comparing BSTs, Tries and TSTs

15.14 Suffix Trees

15.15 String Algorithms: Problems & Solutions

16. Algorithms Design Techniques

16.1 Introduction

16.2 Classification

16.3 Classification by Implementation Method

16.4 Classification by Design Method

16.5 Other Classifications

17. Greedy Algorithms

17.1 Introduction

17.2 Greedy Strategy

17.3 Elements of Greedy Algorithms

17.4 Does Greedy Always Work?

17.5 Advantages and Disadvantages of Greedy Method

17.6 Greedy Applications

17.7 Understanding Greedy Technique

17.8 Greedy Algorithms: Problems & Solutions

18. Divide and Conquer Algorithms

18.1 Introduction

18.2 What is the Divide and Conquer Strategy?

18.3 Does Divide and Conquer Always Work?

18.4 Divide and Conquer Visualization

18.5 Understanding Divide and Conquer

18.6 Advantages of Divide and Conquer

18.7 Disadvantages of Divide and Conquer

18.8 Master Theorem

18.9 Divide and Conquer Applications

18.10 Divide and Conquer: Problems & Solutions

19. Dynamic Programming

19.1 Introduction

19.2 What is Dynamic Programming Strategy?

19.3 Properties of Dynamic Programming Strategy

19.4 Can Dynamic Programming Solve All Problems?

19.5 Dynamic Programming Approaches

19.6 Examples of Dynamic Programming Algorithms

19.7 Understanding Dynamic Programming

19.8 Longest Common Subsequence

19.9 Dynamic Programming: Problems & Solutions

20. Complexity Classes

20.1 Introduction

20.2 Polynomial/Exponential Time

20.3 What is a Decision Problem?

20.4 Decision Procedure

20.5 What is a Complexity Class?

20.6 Types of Complexity Classes

20.7 Reductions

20.8 Complexity Classes: Problems & Solutions

21. Miscellaneous Concepts

21.1 Introduction

21.2 Hacks on Bit-wise Programming

21.3 Other Programming Questions

References


Text

Datacenter RPCs can be general and fast

Datacenter RPCs can be general and fast Kalia et al., NSDI’19

We’ve seen a lot of exciting work exploiting combinations of RDMA, FPGAs, and programmable network switches in the quest for high performance distributed systems. I’m as guilty as anyone for getting excited about all of that. The wonderful thing about today’s paper, for which Kalia et al. won a best paper award at NSDI this year, is that it shows in many cases we don’t actually need to take on that extra complexity. Or to put it another way, it seriously raises the bar for when we should.

eRPC (efficient RPC) is a new general-purpose remote procedure call (RPC) library that offers performance comparable to specialized systems, while running on commodity CPUs in traditional datacenter networks based on either lossy Ethernet or lossless fabrics… We port a production grade implementation of Raft state machine replication to eRPC without modifying the core Raft source code. We achieve 5.5 µs of replication latency on lossy Ethernet, which is faster than or comparable to specialized replication systems that use programmable switches, FPGAs, or RDMA.

eRPC just needs good old UDP. Lossy Ethernet is just fine (no need for fancy lossless networks), and it doesn’t need Priority Flow Control (PFC). The received wisdom is that you can either have general-purpose networking that works everywhere and is non-intrusive to applications but has capped performance, or you have to drop down to low-level interfaces and do a lot of your own heavy lifting to obtain really high performance.

The goal of our work is to answer the question: can a general-purpose RPC library provide performance comparable to specialized systems?

Astonishingly, Yes.

From the evaluation using two lossy Ethernet clusters (designed to mimic the setups used in Microsoft and Facebook datacenters):

2.3µs median RPC latency

up to 10 million RPCs / second on a single core

large message transfer at up to 75Gbps on a single core

peak performance maintained even with 20,000 connections per node (2 million cluster wide)

eRPC’s median latency on CX5 is only 2.3µs, showing that latency with commodity Ethernet NICs and software networking is much lower than the widely-believed value of 10-100µs.

(CURP over eRPC in a modern datacenter would be a pretty spectacular combination!).

So the question that immediately comes to mind is how? As in, “what magic is this?”.

The secret to high-performance general-purpose RPCs

… is a carefully considered design that optimises for the common case and also avoids triggering packet loss due to switch buffer overflows for common traffic patterns.

That’s it? Yep. You won’t find any super low-level fancy new exotic algorithm here. Your periodic reminder that thoughtful design is a high leverage activity! You will of course find something pretty special in the way all the pieces come together.

So what assumptions go into the ‘common case’?

Small messages

Short duration RPC handlers

Congestion-free networks

Which is not to say that eRPC can’t handle larger messages, long-running handlers, and congested networks. It just doesn’t pay a contingency overhead price when they are absent.

Optimisations for the common case (which we’ll look at next) boost performance by up to 66% in total. On this base eRPC also enables zero-copy transmissions and a design that scales while retaining a constant NIC memory footprint.

The core model is as follows. RPCs are asynchronous and execute at most once. Servers register request handler functions with unique request types, and clients include the request types when issuing requests. Clients receive a continuation callback on RPC completion. Messages are stored in opaque DMA-capable buffers provided by eRPC, called msgbufs. Each RPC endpoint (one per end user thread) has an RX and TX queue for packet I/O, an event loop, and several sessions.
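To make that model a little more concrete, here is a toy, in-process Python sketch of the shape of the programming model: handlers registered by request type, requests tagged with a type, and a continuation invoked from an event loop. This is not eRPC's API (eRPC is a C++ library). Every name here (ToyRpcServer, ToyRpcClient, register_handler, enqueue_request, run_event_loop_once) is hypothetical, and there is no networking, session, or msgbuf management at all.

```python
from collections import deque


class ToyRpcServer:
    """Maps request types to handler functions (hypothetical stand-in)."""

    def __init__(self):
        self._handlers = {}

    def register_handler(self, req_type, handler):
        # Request types must be unique, as in the model described above.
        if req_type in self._handlers:
            raise ValueError(f"request type {req_type} already registered")
        self._handlers[req_type] = handler

    def handle(self, req_type, payload):
        return self._handlers[req_type](payload)


class ToyRpcClient:
    """Queues requests; continuations run only when the event loop runs."""

    def __init__(self, server):
        self._server = server
        self._pending = deque()

    def enqueue_request(self, req_type, payload, continuation):
        # Asynchronous: nothing is executed until the event loop is driven.
        self._pending.append((req_type, payload, continuation))

    def run_event_loop_once(self):
        while self._pending:
            req_type, payload, continuation = self._pending.popleft()
            response = self._server.handle(req_type, payload)
            continuation(response)  # completion callback
```

The point of the sketch is only the control flow: issuing a request returns immediately, and the caller learns about completion through the continuation when it drives the event loop.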

The long and short of it

When request handlers are run directly in dispatch threads you can avoid expensive inter-thread communication (adding up to 400ns of request latency). That’s fine when request handlers are short in duration, but long handlers block other dispatch handling increasing tail latency, and prevent rapid congestion feedback.

eRPC supports running handlers in dispatch threads for short duration request types (up to a few hundred nanoseconds), and worker threads for longer running requests. Which mode to use is specified when the request handler is registered. This is the only additional user input needed in eRPC.
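A rough Python sketch of that registration-time choice, assuming a hypothetical ModeAwareDispatcher with a run_in_worker flag (my naming, not eRPC's): short handlers run inline on the dispatching thread, while long ones are handed to a worker pool so they don't block dispatch.

```python
from concurrent.futures import ThreadPoolExecutor


class ModeAwareDispatcher:
    """Runs each handler inline or on a worker pool, chosen at registration."""

    def __init__(self, num_workers=2):
        self._handlers = {}
        self._pool = ThreadPoolExecutor(max_workers=num_workers)

    def register(self, req_type, handler, run_in_worker=False):
        # The mode is fixed per request type when the handler is registered.
        self._handlers[req_type] = (handler, run_in_worker)

    def dispatch(self, req_type, payload, on_done):
        handler, run_in_worker = self._handlers[req_type]
        if run_in_worker:
            # Long-running handler: pay the inter-thread hand-off cost
            # so the dispatch thread stays free for other packets.
            future = self._pool.submit(handler, payload)
            future.add_done_callback(lambda f: on_done(f.result()))
        else:
            # Short handler: run it right here, with no hand-off.
            on_done(handler(payload))

    def shutdown(self):
        self._pool.shutdown(wait=True)
```

Python threads are obviously nowhere near the nanosecond budgets discussed here; the sketch only shows where the decision is made and what it changes.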

Scalable connection state

eRPC’s choice to use packet I/O over RDMA avoids the circular buffer scalability bottleneck in RDMA (see §4.1.1). By taking advantage of multi-packet RX-queue (RQ) descriptors in modern NICs, eRPC can use constant space in the NIC instead of a footprint that grows with the number of connected sessions (see Appendix A).

Furthermore, eRPC replaces NIC-managed connection state with CPU-managed connection state.

This is an explicit design choice, based upon fundamental differences between the CPU and NIC architectures. NICs and CPUs will both cache recently used connection state. CPU cache misses are served from DRAM, whereas NIC cache misses are served from the CPU’s memory subsystem over the slow PCIe bus. The CPU’s miss penalty is therefore much lower. Second, CPUs have substantially larger caches than the ~2MB available on a modern NIC, so the cache miss frequency is also lower.

Zero-copy transmission

Zero-copy packet I/O in eRPC provides performance comparable to lower level interfaces such as RDMA and DPDK. The msgbuf layout ensures that the data region is contiguous (so that applications can use it as an opaque buffer) even when the buffer contains data for multiple packets. The first packet’s data and header are also contiguous so that the NIC can fetch small messages with one DMA read. Headers for remaining packets are at the end, to allow for the contiguous data region in the middle.
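The layout is easier to see as offsets. Below is a small Python sketch that computes where each piece would sit in such a buffer, assuming a fixed header size and a fixed maximum data size per packet. The function name and parameters are hypothetical, and real buffers would also need alignment and padding, which this ignores.

```python
def msgbuf_layout(data_size, max_data_per_pkt, hdr_size):
    """Return byte offsets for: header 0, contiguous data, trailing headers."""
    # Ceiling division; even an empty message occupies one packet.
    num_pkts = max(1, -(-data_size // max_data_per_pkt))
    data_offset = hdr_size               # data starts right after header 0
    data_end = data_offset + data_size   # the data region is one contiguous run
    # Headers for packets 1..N-1 are packed after the data region.
    header_offsets = [0] + [data_end + i * hdr_size for i in range(num_pkts - 1)]
    return {
        "num_pkts": num_pkts,
        "header_offsets": header_offsets,
        "data_offset": data_offset,
        "data_end": data_end,
        "total_size": data_end + (num_pkts - 1) * hdr_size,
    }
```

For a single-packet message the header and data are adjacent at the start of the buffer, which is what allows the one-DMA-read fast path described above.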

eRPC must ensure that it doesn’t mess with msgbufs after ownership is returned to the application, which is fundamentally addressed by making sure it retains no reference to the buffer. Retransmissions can interfere with such a scheme though. eRPC chooses to use “unsignaled” packet transmission optimising for the common case of no retransmission. The trade-off is a more expensive process when retransmission does occur:

We flush the TX DMA queue after queuing a retransmitted packet, which blocks until all queued packets are DMA-ed. This ensures the required invariant: when a response is processed, there are no references to the request in the DMA queue.

eRPC also provides zero-copy reception for workloads in the common case of single-packet requests and dispatch-mode request handlers, which boosts eRPC’s message rate by up to 16%.

Sessions and flow control

Sessions support concurrent requests (8 by default) that can complete out-of-order with respect to each other. Sessions use an array of slots to track RPC metadata for outstanding requests, and slots have an MTU-size preallocated msgbuf for use by request handlers that issue short responses. Session credits are used to implement packet-level flow control. Session credits also support end-to-end flow control to reduce switch queuing. Each session is given BDP/MTU credits, which ensures that each session can achieve line rate.
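As a worked example of the BDP/MTU rule, here is a Python sketch. The link speed, round-trip time, and MTU in the usage note are illustrative numbers I picked, not values from the paper, and rounding the credit count up is my assumption. The SessionCredits class is a hypothetical stand-in showing how a credit is spent per packet sent and returned per packet received.

```python
def credits_per_session(bandwidth_bps, rtt_ns, mtu_bytes):
    """Credits = bandwidth-delay product divided by MTU, rounded up."""
    # Integer arithmetic: bits/s * ns / (8 bits per byte * 1e9 ns per s).
    bdp_bytes = bandwidth_bps * rtt_ns // (8 * 10**9)
    return -(-bdp_bytes // mtu_bytes)  # ceiling division


class SessionCredits:
    """Packet-level flow control: one credit per packet in flight."""

    def __init__(self, credits):
        self.limit = credits
        self.available = credits

    def try_send(self):
        if self.available == 0:
            return False  # out of credits: the packet must wait
        self.available -= 1
        return True

    def on_packet_received(self):
        # Each packet from the peer returns one credit, up to the limit.
        self.available = min(self.limit, self.available + 1)
```

With an assumed 25 Gbps link, a 6 µs RTT, and a 1024-byte MTU, the BDP is 18,750 bytes, so credits_per_session(25_000_000_000, 6_000, 1024) gives 19 credits: enough packets in flight to fill the pipe, and no more.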

Client-driven wire protocol

We designed a wire protocol for eRPC that is optimized for small RPCs and accounts for per-session credit limits. For simplicity, we chose a simple client-driven protocol, meaning that each packet sent by the server is in response to a client packet.

Client-driven protocols have fewer moving parts, with only the client needing to maintain wire protocol state. Rate limiting becomes solely a client responsibility too, freeing server CPU.

Single-packet RPCs (request and response require only a single packet) use the fewest packets possible. With multi-packet responses and a client-driven protocol the server can’t immediately send response packets after the first one, so the client sends a request-for-response (RFR) packet. In practice this added latency turned out to be less than 20% for responses with four or more packets.
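Here is a small Python sketch of what "client-driven" means for packet ordering, restricted to a single-packet request with a response of N packets. It follows directly from the rule that every server packet answers a client packet. The function name and packet labels are mine, and the strictly sequential exchange is a simplification: a real client could keep several RFRs in flight, subject to its credits.

```python
def packet_trace(num_response_pkts):
    """List (sender, packet) pairs for one RPC with a single-packet request."""
    if num_response_pkts < 1:
        raise ValueError("a response has at least one packet")
    # The request itself triggers the first response packet.
    trace = [("client", "REQ"), ("server", "RESP 1")]
    # Every further response packet must be asked for explicitly.
    for i in range(2, num_response_pkts + 1):
        trace.append(("client", f"RFR {i}"))
        trace.append(("server", f"RESP {i}"))
    return trace
```

packet_trace(1) is the two-packet minimum for a single-packet RPC, while a four-packet response costs three extra client-to-server RFR packets.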

Congestion control

eRPC can use either Timely or DCQCN for congestion control. The evaluation uses Timely as the cluster hardware could not support DCQCN. Three optimisations brought the overhead of congestion control down from around 20% to 9%:

Bypassing Timely altogether if the RTT of a received packet on an uncongested session is less than a low threshold value.

Bypassing the rate limiter for uncongested sessions

Sampling timers once per RX or TX batch rather than once per packet for RTT measurement
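The first two bypasses can be sketched in a few lines of Python. This is only an illustration under assumptions: I treat a session as "uncongested" when its allowed rate equals the line rate, the Timely rate computation itself is passed in as an opaque function rather than implemented, and all names (SessionCongestionState, on_rtt_sample, must_use_rate_limiter) are hypothetical. The third optimisation, batched timestamp sampling, is not shown.

```python
class SessionCongestionState:
    """Per-session congestion state with common-case bypasses."""

    def __init__(self, line_rate_gbps, rtt_low_threshold_us):
        self.line_rate_gbps = line_rate_gbps
        self.rate_gbps = line_rate_gbps  # sessions start uncongested
        self.rtt_low_threshold_us = rtt_low_threshold_us

    def is_uncongested(self):
        # Assumption: uncongested means allowed to send at line rate.
        return self.rate_gbps >= self.line_rate_gbps

    def on_rtt_sample(self, rtt_us, compute_new_rate):
        """Return True if the (expensive) rate update actually ran."""
        if self.is_uncongested() and rtt_us < self.rtt_low_threshold_us:
            return False  # bypass 1: skip the rate computation entirely
        new_rate = compute_new_rate(self.rate_gbps, rtt_us)
        self.rate_gbps = min(self.line_rate_gbps, new_rate)
        return True

    def must_use_rate_limiter(self):
        # Bypass 2: uncongested sessions transmit directly.
        return not self.is_uncongested()
```

In the common case every RTT sample takes the early return, so the per-packet cost of congestion control is little more than a comparison.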

These optimisations work because datacenter networks are typically uncongested. E.g. at one-minute timescales 99% of all Facebook datacenter links are uncongested, and for web and cache traffic at Google, 90% of ToR switch links (the most congested) are less than 10% utilized at 25 µs timescales.

Packet loss

eRPC keeps things simple by treating re-ordered packets as losses and dropping them (as do current RDMA NICs). When a client suspects a lost packet it rolls back the request’s wire protocol state using a ‘go-back-N’ mechanism. It reclaims credits and retransmits from the rollback point.
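A minimal Python sketch of the go-back-N idea with credits, assuming explicit per-packet acknowledgements for simplicity. The class names and the explicit acks are my simplification for illustration, not a description of eRPC's wire protocol.

```python
class InOrderReceiver:
    """Accepts only the next expected packet; anything else is dropped."""

    def __init__(self):
        self.next_expected = 0

    def on_packet(self, seq):
        if seq != self.next_expected:
            return False  # re-ordered packet is treated as a loss
        self.next_expected += 1
        return True


class GoBackNSender:
    """Sends packets 0..num_pkts-1, limited by credits."""

    def __init__(self, num_pkts, credits):
        self.num_pkts = num_pkts
        self.credits = credits
        self.next_to_send = 0
        self.num_acked = 0

    def send_available(self):
        sent = []
        while self.credits > 0 and self.next_to_send < self.num_pkts:
            sent.append(self.next_to_send)
            self.next_to_send += 1
            self.credits -= 1
        return sent

    def on_ack(self, seq):
        if seq == self.num_acked:
            self.num_acked += 1
            self.credits += 1

    def on_suspected_loss(self):
        # Roll back: reclaim the credits held by in-flight packets and
        # restart transmission from the first unacknowledged packet.
        self.credits += self.next_to_send - self.num_acked
        self.next_to_send = self.num_acked
```

The rollback resends some packets that may have arrived fine, which is wasteful, but since the receiver drops everything after a gap anyway the state machine stays tiny, and losses are assumed to be rare.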

Evaluation highlights

This write-up is in danger of getting too long again, so I’ll keep this very brief. The following table shows the contribution of the various optimisations through ablation:

We conclude that optimizing for the common case is both necessary and sufficient for high-performance RPCs.

Here you can see latency with increasing threads. eRPC achieves high message rate, bandwidth, and scalability with low latency in a large cluster with lossy Ethernet.

For large RPCs, eRPC can achieve up to 75 Gbps with one core.

Section 7 discusses the integration of eRPC in an existing Raft library, and in the Masstree key-value store. From the Raft section the authors conclude: “the main takeaway is that microsecond-scale consistent replication is achievable in commodity Ethernet datacenters with a general-purpose networking library.”

eRPC’s speed comes from prioritizing common-case performance, carefully combining a wide range of old and new optimizations, and the observation that switch buffer capacity far exceeds datacenter BDP. eRPC delivers performance that was until now believed possible only with lossless RDMA fabrics or specialized network hardware. It allows unmodified applications to perform close to the hardware limits.

the morning paper published first on the morning paper


Link

(Via: Hacker News)

Allocation Efficiency in High-Performance Go Services

Memory management can be tricky, to say the least. However, after reading the literature, one might be led to believe that all the problems are solved: sophisticated automated systems that manage the lifecycle of memory allocation free us from these burdens.

However, if you’ve ever tried to tune the garbage collector of a JVM program or optimized the allocation pattern of a Go codebase, you understand that this is far from a solved problem. Automated memory management helpfully rules out a large class of errors, but that’s only half the story. The hot paths of our software must be built in a way that these systems can work efficiently.

We found inspiration to share our learnings in this area while building a high-throughput service in Go called Centrifuge, which processes hundreds of thousands of events per second. Centrifuge is a critical part of Segment’s infrastructure. Consistent, predictable behavior is a requirement. Tidy, efficient, and precise use of memory is a major part of achieving this consistency.

In this post we’ll cover common patterns that lead to inefficiency and production surprises related to memory allocation as well as practical ways of blunting or eliminating these issues. We’ll focus on the key mechanics of the allocator that provide developers a way to get a handle on their memory usage.

Our first recommendation is to avoid premature optimization. Go provides excellent profiling tools that can point directly to the allocation-heavy portions of a code base. There’s no reason to reinvent the wheel, so instead of taking readers through it here, we’ll refer to this excellent post on the official Go blog. It has a solid walkthrough of using pprof for both CPU and allocation profiling. These are the same tools that we use at Segment to find bottlenecks in our production Go code, and should be the first thing you reach for as well.
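For readers who want a starting point before following that walkthrough, here is a minimal sketch of capturing a heap profile with the standard runtime/pprof package. The helper name heapProfileBytes is an assumption made for this illustration and is not taken from the post; a real service would more likely write the profile to a file or expose it over HTTP.

```go
package main

import (
	"bytes"
	"fmt"
	"runtime"
	"runtime/pprof"
)

// heapProfileBytes is a hypothetical helper: it returns a snapshot of the
// heap profile in the format that `go tool pprof` reads.
func heapProfileBytes() ([]byte, error) {
	runtime.GC() // run a collection first so the statistics are up to date
	var buf bytes.Buffer
	if err := pprof.WriteHeapProfile(&buf); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

func main() {
	data, err := heapProfileBytes()
	if err != nil {
		fmt.Println("profile failed:", err)
		return
	}
	fmt.Printf("captured %d bytes of heap profile\n", len(data))
}
```

Writing the same bytes to a file and opening that file with go tool pprof gives the allocation views described in the Go blog post.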

Use data to drive your optimization!

Analyzing Our Escape

Go manages memory allocation automatically. This prevents a whole class of potential bugs, but it doesn’t completely free the programmer from reasoning about the mechanics of allocation. Since Go doesn’t provide a direct way to manipulate allocation, developers must understand the rules of this system so that they can use it to their advantage.

If you remember one thing from this entire post, this would be it: stack allocation is cheap and heap allocation is expensive. Now let’s dive into what that actually means.

Go allocates memory in two places: a global heap for dynamic allocations and a local stack for each goroutine. Go prefers allocation on the stack — most of the allocations within a given Go program will be on the stack. It’s cheap because it only requires two CPU instructions: one to push onto the stack for allocation, and another to release from the stack.

Unfortunately not all data can use memory allocated on the stack. Stack allocation requires that the lifetime and memory footprint of a variable can be determined at compile time. Otherwise a dynamic allocation onto the heap occurs at runtime. malloc must search for a chunk of free memory large enough to hold the new value. Later down the line, the garbage collector scans the heap for objects which are no longer referenced. It probably goes without saying that heap allocation is significantly more expensive than the two instructions used by stack allocation.

The compiler uses a technique called escape analysis to choose between these two options. The basic idea is to do the work of garbage collection at compile time. The compiler tracks the scope of variables across regions of code. It uses this data to determine which variables pass a set of checks that prove their lifetime is entirely knowable at compile time. If the variable passes these checks, the value can be allocated on the stack. If not, it is said to escape, and must be heap allocated.

The rules for escape analysis aren’t part of the Go language specification. For Go programmers, the most straightforward way to learn about these rules is experimentation. The compiler will output the results of the escape analysis by building with go build -gcflags '-m'. Let’s look at an example:

package main

import "fmt"

func main() {
	x := 42
	fmt.Println(x)
}

$ go build -gcflags '-m' ./main.go
# command-line-arguments
./main.go:7: x escapes to heap
./main.go:7: main ... argument does not escape

See here that the variable x “escapes to the heap,” which means it will be dynamically allocated on the heap at runtime. This example is a little puzzling. To human eyes, it is immediately obvious that x will not escape the main() function. The compiler output doesn’t explain why it thinks the value escapes. For more details, pass the -m option multiple times, which makes the output more verbose:

$ go build -gcflags '-m -m' ./main.go
# command-line-arguments
./main.go:5: cannot inline main: non-leaf function
./main.go:7: x escapes to heap
./main.go:7: from ... argument (arg to ...) at ./main.go:7
./main.go:7: from *(... argument) (indirection) at ./main.go:7
./main.go:7: from ... argument (passed to call[argument content escapes]) at ./main.go:7
./main.go:7: main ... argument does not escape

Ah, yes! This shows that x escapes because it is passed to a function argument which escapes itself — more on this later.
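A contrasting pair of functions can make the escape decision easier to see. The sketch below is an illustration only: the point type and both constructor names are invented for it. Building it with -gcflags '-m' should report the local in newPointPointer as moved to the heap, while the one in newPointValue stays on the stack, though the exact wording of the compiler output varies between Go versions.

```go
package main

import "fmt"

type point struct{ x, y int }

// newPointValue returns a copy of its local variable, so the local never
// outlives the call and can live on the stack.
//
//go:noinline
func newPointValue() point {
	p := point{1, 2}
	return p
}

// newPointPointer returns the address of its local variable. The value must
// outlive the function's stack frame, so it escapes to the heap.
//
//go:noinline
func newPointPointer() *point {
	p := point{1, 2}
	return &p
}

func main() {
	v := newPointValue()
	p := newPointPointer()
	fmt.Println(v.x+v.y, p.x+p.y) // prints "3 3"
}
```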

The rules may continue to seem arbitrary at first, but after some trial and error with these tools, patterns do begin to emerge. For those short on time, here’s a list of some patterns we’ve found which typically cause variables to escape to the heap:

Backing arrays of slices that get reallocated because an append would exceed their capacity. In cases where the initial size of a slice is known at compile time, it will begin its allocation on the stack. If this slice’s underlying storage must be expanded based on data only known at runtime, it will be allocated on the heap.

In our experience these four cases are the most common sources of mysterious dynamic allocation in Go programs. Fortunately there are solutions to these problems! Next we’ll go deeper into some concrete examples of how we’ve addressed memory inefficiencies in our production software.
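The slice pattern above can be observed directly by watching a slice's capacity. This is a small sketch with an invented helper, grew, which reports whether one more append would force a new backing array. It shows only the reallocation itself; whether the new array lands on the heap is still the compiler's decision.

```go
package main

import "fmt"

// grew reports whether appending v to s forces a larger backing array.
// s is passed by value, so the caller's slice header is not modified.
func grew(s []int, v int) bool {
	before := cap(s)
	s = append(s, v)
	return cap(s) != before
}

func main() {
	s := make([]int, 0, 4)
	s = append(s, 1, 2, 3)

	fmt.Println(grew(s, 4)) // false: the fourth element still fits

	s = append(s, 4)
	fmt.Println(grew(s, 5)) // true: capacity 4 is exceeded, so a new array is allocated
}
```

Sizing a slice up front with make, when the bound is known, avoids the repeated reallocation.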

Some Pointers

The rule of thumb is: pointers point to data allocated on the heap. Ergo, reducing the number of pointers in a program reduces the number of heap allocations. This is not an axiom, but we’ve found it to be the common case in real-world Go programs.

It has been our experience that developers become proficient and productive in Go without understanding the performance characteristics of values versus pointers. A common hypothesis derived from intuition goes something like this: “copying values is expensive, so instead I’ll use a pointer.” However, in many cases copying a value is much less expensive than the overhead of using a pointer. Why, you might ask?

Copying objects within a cache line is roughly equivalent to copying a single pointer. CPUs move memory between caching layers and main memory in cache lines of constant size. On x86 this is 64 bytes. Further, Go uses a technique called Duff’s device to make common memory operations like copies very efficient.

Pointers should primarily be used to reflect ownership semantics and mutability. In practice, the use of pointers to avoid copies should be infrequent. Don’t fall into the trap of premature optimization. It’s good to develop a habit of passing data by value, only falling back to passing pointers when necessary. An extra bonus is the increased safety of eliminating nil.
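One way to read this guidance is that the signature itself should tell the caller whether a value may be mutated. The sketch below uses an invented settings type and two invented functions: the by-value version works on a copy, and the pointer version signals in-place mutation.

```go
package main

import "fmt"

// settings is a small, pointer-free struct that is cheap to copy.
type settings struct {
	retries int
	timeout int // seconds
}

// withRetries receives a copy and returns a modified copy; the caller's
// value is untouched.
func withRetries(s settings, n int) settings {
	s.retries = n
	return s
}

// setRetries takes a pointer to signal that it mutates the caller's value.
func setRetries(s *settings, n int) {
	s.retries = n
}

func main() {
	base := settings{retries: 1, timeout: 30}

	tuned := withRetries(base, 5)
	fmt.Println(base.retries, tuned.retries) // prints "1 5"

	setRetries(&base, 3)
	fmt.Println(base.retries) // prints "3"
}
```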

Reducing the number of pointers in a program can yield another helpful result as the garbage collector will skip regions of memory that it can prove will contain no pointers. For example, regions of the heap which back slices of type []byte aren’t scanned at all. This also holds true for arrays of struct types that don’t contain any fields with pointer types.

Not only does reducing pointers result in less work for the garbage collector, it produces more cache-friendly code. Reading memory moves data from main memory into the CPU caches. Caches are finite, so some other piece of data must be evicted to make room. Evicted data may still be relevant to other portions of the program. The resulting cache thrashing can cause unexpected and sudden shifts in the behavior of production services.

Digging for Pointer Gold

Reducing pointer usage often means digging into the source code of the types used to construct our programs. Our service, Centrifuge, retains a queue of failed operations to retry as a circular buffer with a set of data structures that look something like this:

type retryQueue struct {
	buckets       [][]retryItem // each bucket represents a 1 second interval
	currentTime   time.Time
	currentOffset int
}

type retryItem struct {
	id   ksuid.KSUID // ID of the item to retry
	time time.Time   // exact time at which the item has to be retried
}

The size of the outer array in buckets is constant, but the number of items in the contained []retryItem slice will vary at runtime. The more retries, the larger these slices will grow.
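The post does not show how the buckets are indexed, so the following is only a guess at how a circular buffer of one-second buckets could work, not Centrifuge's implementation. The methods schedule and tick, the constructor newRetryQueue, and the [20]byte stand-in for ksuid.KSUID are all assumptions made for this sketch.

```go
package main

import "fmt"

// retryItem is simplified here; [20]byte stands in for ksuid.KSUID.
type retryItem struct {
	id [20]byte
}

type retryQueue struct {
	buckets       [][]retryItem // one bucket per 1 second interval
	currentOffset int           // index of the bucket for the current second
}

// newRetryQueue creates a queue covering a fixed window of seconds.
func newRetryQueue(seconds int) *retryQueue {
	return &retryQueue{buckets: make([][]retryItem, seconds)}
}

// schedule files item into the bucket delay seconds ahead of now.
// delay must be in the range [0, len(q.buckets)).
func (q *retryQueue) schedule(item retryItem, delay int) {
	i := (q.currentOffset + delay) % len(q.buckets)
	q.buckets[i] = append(q.buckets[i], item)
}

// tick returns the items due in the current bucket, empties it, and then
// advances the offset by one second, wrapping around at the end.
func (q *retryQueue) tick() []retryItem {
	due := q.buckets[q.currentOffset]
	q.buckets[q.currentOffset] = nil
	q.currentOffset = (q.currentOffset + 1) % len(q.buckets)
	return due
}

func main() {
	q := newRetryQueue(4)
	q.schedule(retryItem{id: [20]byte{1}}, 2)
	for s := 0; s < 3; s++ {
		fmt.Println(s, len(q.tick())) // prints "0 0", "1 0", "2 1"
	}
}
```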

Digging into the implementation details of each field of a retryItem, we learn that KSUID is a type alias for [20]byte, which has no pointers, and therefore can be ruled out. currentOffset is an int, which is a fixed-size primitive, and can also be ruled out. Next, looking at the implementation of the time.Time type[1]:

type Time struct {
	sec  int64
	nsec int32
	loc  *Location // pointer to the time zone structure
}

The time.Time struct contains an internal pointer for the loc field. Using it within the retryItem type causes the GC to chase the pointers on these structs each time it passes through this area of the heap.

We’ve found that this is a typical case of cascading effects under unexpected circumstances. During normal operation failures are uncommon. Only a small amount of memory is used to store retries. When failures suddenly spike, the number of items in the retry queue can increase by thousands per second, bringing with it a significantly increased workload for the garbage collector.

For this particular use case, the timezone information in time.Time isn’t necessary. These timestamps are kept in memory and are never serialized. Therefore these data structures can be refactored to avoid this type entirely:

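type retryItem struct {
	id   ksuid.KSUID
	nsec uint32 // nanosecond part of the retry time
	sec  int64  // retry time in seconds since the Unix epoch
}

// time rebuilds a time.Time from the stored seconds and nanoseconds.
func (item *retryItem) time() time.Time {
	return time.Unix(item.sec, int64(item.nsec))
}

// makeRetryItem stores only the instant of t; the time zone is dropped.
func makeRetryItem(id ksuid.KSUID, t time.Time) retryItem {
	return retryItem{
		id:   id,
		nsec: uint32(t.Nanosecond()),
		sec:  t.Unix(),
	}
}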

Now the retryItem doesn’t contain any pointers. This dramatically reduces the load on the garbage collector as the entire footprint of retryItem is knowable at compile time[2].

Pass Me a Slice

Slices are fertile ground for inefficient allocation behavior in hot code paths. Unless the compiler knows the size of the slice at compile time, the backing arrays for slices (and maps!) are allocated on the heap. Let’s explore some ways to keep slices on the stack and avoid heap allocation.
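One common shape for this, sketched here with an invented formatID helper, is to slice a fixed-size array and hand it to one of the standard library's Append-style functions. Whether the array really stays on the stack still depends on escape analysis at the call site; passing the result on to fmt, as this demo does for printing, may itself cause an allocation.

```go
package main

import (
	"fmt"
	"strconv"
)

// formatID appends the decimal form of id to dst and returns the extended
// slice, so the caller decides where the bytes live.
func formatID(dst []byte, id int64) []byte {
	return strconv.AppendInt(dst, id, 10)
}

func main() {
	// 20 bytes is enough for any int64 in base 10, including the sign.
	var scratch [20]byte

	out := formatID(scratch[:0], 12345)
	fmt.Println(string(out), len(out), cap(out)) // prints "12345 5 20"
}
```

Because the result fits in the array's capacity, append never has to allocate a larger backing array.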

Centrifuge uses MySQL intensively. Overall program efficiency depends heavily on the efficiency of the MySQL driver. After using pprof to analyze allocator behavior, we found that the code which serializes time.Time values in Go’s MySQL driver was particularly expensive.

The profiler showed a large percentage of the heap allocations were in code that serializes a time.Time value so that it can be sent over the wire to the MySQL server.

This particular code was calling the Format() method on time.Time, which returns a string. Wait, aren’t we talking about slices? Well, according to the official Go blog, strings are just “read-only slices of bytes with a bit of extra syntactic support from the language.” Most of the same rules around allocation apply!

The profile tells us that a massive 12.38% of the allocations were occurring when running this Format method. What does Format do?