#i'm a linux (fedora) user btw

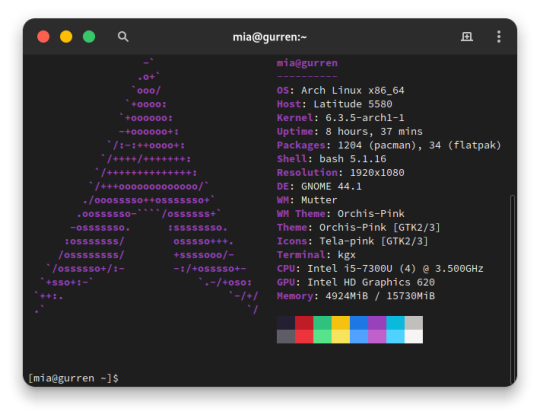

I use Arch, BTW

I made the switch from Ubuntu 23.04 to Arch Linux. I embraced the meme. More than a decade after my last failed attempt at daily driving Arch, I'm gonna put this as bluntly as I possibly can:

Arch is a solid Linux distribution, but some assembly is required.

But why?

Hear me out here, Debian and Fedora family enjoyers. The Debian family has long been my go-to, and on another machine I also swallowed the RHEL pill and switched my server from Ubuntu LTS to Rocky Linux. More on that in a later post once I'm more acclimated to it. But for my primary personal laptop, a Dell Latitude 5580, what ultimately made me switch was continued frustration with Canonical's decision to move commonly used applications, particularly the web browsers, exclusively to Snap packages, plus the additional overhead and just plain weird issues that came with those apps being containerized instead of running directly on the bare metal. Now, I understand the reasoning behind moving from deb repos to Snap, but the way Snap implements these kinds of things just leaves a sour taste in my mouth, especially compared to its alternative from the Fedora family, Flatpak. So for what I needed and wanted, something up to date, with good support and documentation, and free of any one particular vendor's bullshit, I really only had two options: Arch and Gentoo (Fedora is currently dealing with some H.264 licensing issues, and quite honestly I didn't want to bother with that for two machines).

Arch and Gentoo are very much the same, but different. And ultimately Arch won out over the 4chan /g/ shitpost that Gentoo Linux has become. So why Arch? Quite honestly, time. Arch has massive repositories of both Arch-team-maintained and community software, with the majority of what I need already packaged in binary form. Gentoo is much the same, minus the precompiled binaries: its Portage package manager downloads source code and compiles things on the fly, specifically for your hardware. While, yes, this can make things perform better than precompiled binaries, in reality the difference is negligible at best and placebo at worst, depending on your compiler settings. I can take a weekend to install everything and do the fine-tuning, but if half or more of that time is spent just waiting for packages to compile, no thanks. Add the massive resource that is the Arch User Repository (AUR), and Arch was a no-brainer, with vanilla Arch probably the best way to go. Metaphorically speaking, it's a Lego set versus 3D printer files plus a list of hardware to order from McMaster-Carr to screw it all together.

So what's the Arch experience like then?

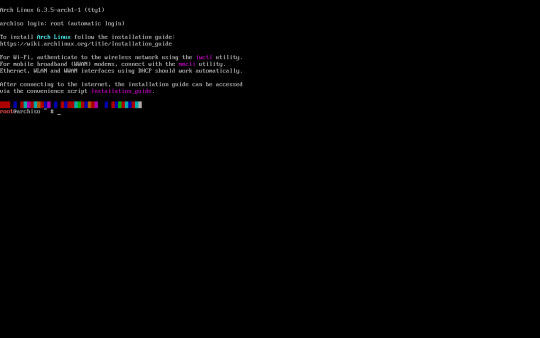

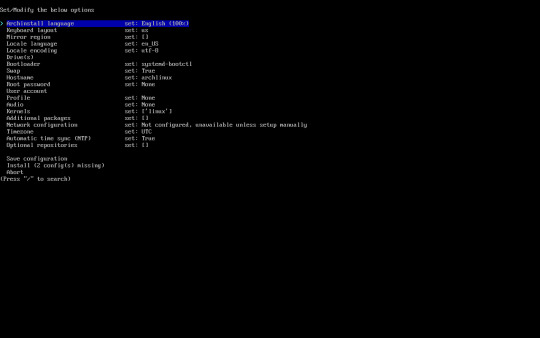

As I said in the intro, some assembly is required. To start, the installer image you typically download is incredibly barebones: all you get is a root shell prompt in the live USB/CD environment. From there you need to do two things: 1) get the thing online (the nmcli command came in handy here, since this is a laptop and I primarily use it wirelessly), and 2) run the archinstall script. At the time I downloaded my Arch installer, archinstall was broken on the base image, but you can update it with a quick pacman -Sy archinstall once you're online. archinstall does pretty much all the heavy lifting for you and covers all the primary options: desktop environment/window manager, boot loader, audio system, language options, the whole works. I chose GNOME, the GRUB bootloader, the PipeWire audio system, and en-US for just about everything. Even then, what you end up with is a minimal installation.

The post-install experience is straightforward, albeit repetitive. Straight out of the archinstall script, what you get is relatively barebones, a lot more so than I was used to with Ubuntu and Debian. I constantly seemed to be missing one thing or another: checking the wiki, checking the AUR, asking friends who have been using Arch far longer than I have how to address dumb issues. Going back to the Lego set analogy, archinstall is just the first bag of a larger set. It's the foundation you build on to make the system your own. Everything after that is the second and later parts bags: the additional media codecs, supporting applications, visual tweaks like a boot animation instead of verbose text-mode boot, and all the other things that distributions such as Ubuntu or Fedora ship off the rip but that you have to add yourself. This isn't entirely a bad thing, though, since at the end you're left with what you need and very little of what you don't. Keep going through the motions, one application at a time, pulling from the standard pacman repos, the AUR, and Flatpak, and eventually you'll have a full-fledged desktop with all your usual odds and ends.

And at the end of all of that, what you're left with is just like any other Linux distro. I admit I previously wrote Arch off as super unstable and only for diehard masochists after my last attempt at running it as a teenager went sideways, but daily driving it on my personal Dell Latitude for the last few months has legitimately been far better than any of my recent experiences with Ubuntu. I get it. I get why people use this, why people daily drive it on their work or gaming machines, why people swear off other distros in favor of Arch as their go-to Linux distribution. It is only what you want it to be. That said, I won't be switching to Arch any time soon on mission-critical systems or long-running devices with very specific purposes; things like servers and my Raspberry Pis will still get some flavor of RHEL or Debian stable. And since Arch is one of the most bleeding-edge distros, I know my chance of breakage is nonzero. But so far it's been smooth sailing, and I hope to daily drive it for many more months to come.

Moving part #3: web server

I decided to create an online video game. I haven't picked a game engine yet, but I have a good idea of how the client side will work (Bootstrap + React).

The client-side stuff runs in the browser, of course, but it doesn't get there magically. The static assets (CSS, JavaScript, images, etc.) have to be hosted on a web server somewhere. And to make the user experience as good as possible, that web server should probably sit behind a Content Delivery Network (CDN), although that's not mandatory for the time being.

My video game will likely be a single-page web application, which means that the content of the page will be generated dynamically in the browser via JavaScript (like Gmail or YouTube) rather than being mostly generated on a server somewhere (like IMDb or Amazon.com).

This means that I can safely postpone decisions regarding the API (the interaction between the web page and the backend, like a central database or something similar); all I need to decide at this point is where to host the static assets, which doesn't shackle me to any given provider for the API part.

Choosing a domain name

Having a cool domain name is always great, but it's not as important as it used to be. A lot of people nowadays go straight to a search engine rather than typing a domain name the first time; after that, the URL is in the browser history and possibly bookmarked, so it matters even less.

That doesn't mean the domain name is unimportant. For instance, I can never remember the domain name for the webcomic Cyanide & Happiness, so I have to do a web search every time instead of just typing the address in the address bar; a small annoyance, of course, but an annoyance nonetheless, and for no apparent reason.

For my video game, I already picked a name: the dollar puppet (for reasons that will become clearer later). Registering a domain name is easy and there are many providers, but this is one area where I always pick AWS. Prices are low, privacy is included, there are a lot of TLDs available, and I can choose either to host the DNS records on AWS Route 53 or to point the DNS somewhere else.

Since I don't know yet whether I'll use AWS a lot for this video game, I'll keep the hosted zone that Route 53 creates by default when you register a domain name. I can delete it later; in the meantime it will cost me $0.50 / month, and while I find that expensive for what I get out of it, I can live with it.

Why do I find $0.50 / month expensive? Because I have, at the moment, about 45 registered domains (for no good reason); that's about $500 in domain registration fees per year (unavoidable), and hosting all of those zones on Route 53 would cost me roughly another $175 / year (Route 53 charges $0.50 / month for each of the first 25 hosted zones and $0.10 / month for each one after that), while I can get that hosting for free with my $2/month Zoho email subscription.

(BTW - I love Zoho for email, it's a breeze to get a really really good setup for multiple domains)

As a Linode customer I can also get free DNS hosting there and the UI is really easy to use.
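The hosted-zone arithmetic for 45 domains can be checked with a few lines of Python. This is a sketch under an assumption: it uses Route 53's published hosted-zone tiering at the time of writing ($0.50 per zone per month for the first 25 zones, $0.10 per zone per month beyond that), which may change, and the function names are hypothetical. For comparison it also prints what a flat $0.50 per zone would come to. Amounts are kept in integer cents to avoid floating-point rounding.

```python
# Hypothetical helper for estimating Route 53 hosted-zone costs.
# ASSUMPTION: tiered pricing of $0.50/zone/month for the first 25 zones and
# $0.10/zone/month after that; check AWS's current pricing page before relying on it.
FIRST_TIER_ZONES = 25
FIRST_TIER_CENTS = 50   # per zone per month
EXTRA_ZONE_CENTS = 10   # per zone per month, beyond the first tier


def monthly_cost_cents(zones: int) -> int:
    """Monthly hosted-zone charge, in cents, for `zones` hosted zones."""
    first = min(zones, FIRST_TIER_ZONES)
    extra = max(zones - FIRST_TIER_ZONES, 0)
    return first * FIRST_TIER_CENTS + extra * EXTRA_ZONE_CENTS


def yearly_cost_dollars(zones: int) -> float:
    """Twelve months of hosted-zone charges, in dollars."""
    return monthly_cost_cents(zones) * 12 / 100


if __name__ == "__main__":
    zones = 45
    flat = zones * FIRST_TIER_CENTS * 12 / 100  # every zone billed at $0.50
    print(f"flat $0.50/zone: ${flat:.2f}/year")                        # $270.00/year
    print(f"tiered pricing:  ${yearly_cost_dollars(zones):.2f}/year")  # $174.00/year
```

Either figure is a recurring cost for something Zoho or Linode will host at no extra charge.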

Back to the fundamental question

To cloud or not to cloud? There's no really bad decision possible here, because even if I pick a terrible provider for the web server, the assets will be cached on a CDN, so it won't impact end users that much.

The scenarios that make sense:

run nginx on a Linode VM, and use Cloudflare if I want a CDN

store the assets in AWS S3 (which can be configured to run as a web server) and use AWS CloudFront for the CDN

use Linode object storage (similar to S3) and again use Cloudflare for the CDN

Instead of AWS I could use Azure (they're as reliable and secure as AWS), and instead of Linode I could use DigitalOcean, but I'm used to AWS and Linode and I don't care enough to consider other providers at the moment.

The plot thickens: SSL certificates

In this day and age it makes no sense to use plain HTTP (or plain WebSocket, for that matter) so it's clear I'll have to deal with SSL certificates (more accurately: TLS certificates, but who cares).

There are two easy ways to get SSL certificates for free: Let's Encrypt and AWS Certificate Manager (ACM). On AWS, the certificates are only available for specific services (e.g. CloudFront); for VMs, they can't be attached to a single instance, only to a load balancer (which can't be turned off to save money).

Pricing

Whether I'm using AWS or Linode, I'm looking at a $5/month price tag at most for this part, so it doesn't matter much to me.

Deployment on Linode

Provisioning a web server on Linode is not a lot of work:

Provision a VM

Add my SSH keys

Configure the firewall

Install nginx

Install certbot (to allocate and renew SSL certificates)

Upload my code

In terms of Linux distro, I'm a huge fan of Fedora on the desktop, but for a server it's not ideal, given that the release schedule is fast-paced and I don't have time to deal with upgrades. If I were to do this right, I would probably pick Arch Linux, since it's a rolling release and the easiest distro for server hardening, but that's too much work, so this time I'd probably go with CentOS 8, which has the added benefit of working smoothly with podman for rootless containers.

Ubuntu would work fine too, but if I'm going to expose a server to the evil people of the interwebs, I don't see SELinux as optional, and on Ubuntu it's an extra step; I also don't see why I have to manually enable firewalld, or why I have to suffer through the traumatic experience of using nano when running visudo, or why I have to use adduser because the default options for useradd suck, so this time I'll pass on Ubuntu.
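The "Install nginx" and "Install certbot" steps end with a server block along these lines. This is a minimal sketch, not a hardened config. It assumes certbot stored the certificate in its default location (/etc/letsencrypt/live/<domain>/), and the domain and web root in the example are placeholders. The `try_files` fallback to index.html is what a single-page app usually needs, so that deep links to client-side routes don't return 404s. The hypothetical `render_nginx_site` helper only produces the text.

```python
# Hypothetical helper: render an nginx config for a static single-page app over HTTPS.
# ASSUMPTION: certbot put the certificate in its default directory,
# /etc/letsencrypt/live/<domain>/ (fullchain.pem + privkey.pem).
from textwrap import dedent


def render_nginx_site(domain: str, web_root: str) -> str:
    cert_dir = f"/etc/letsencrypt/live/{domain}"
    # Doubled braces are literal { } in the f-string; $host, $uri etc. are nginx variables.
    return dedent(f"""\
        # Redirect all plain-HTTP requests to HTTPS
        server {{
            listen 80;
            server_name {domain};
            return 301 https://$host$request_uri;
        }}

        server {{
            listen 443 ssl;
            server_name {domain};

            ssl_certificate     {cert_dir}/fullchain.pem;
            ssl_certificate_key {cert_dir}/privkey.pem;

            root {web_root};
            index index.html;

            # Single-page app: paths that aren't real files fall back to index.html
            location / {{
                try_files $uri $uri/ /index.html;
            }}
        }}
        """)


if __name__ == "__main__":
    print(render_nginx_site("example.com", "/srv/www/example.com"))
```

On CentOS/RHEL the default nginx.conf includes /etc/nginx/conf.d/*.conf, so the output could be saved there. The certificate has to exist before nginx will load this file. With SELinux enforcing, a custom web root such as /srv/www also needs the httpd_sys_content_t label. certbot's nginx plugin can add the TLS lines for you instead.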

Deployment on AWS

Running a static website on AWS is very easy:

Create S3 buckets in 2 or 3 regions (the names don't really matter) and configure them to allow static website hosting (it's just a checkbox and a policy on the bucket). In theory it works with a single region, but I might as well get the belt-and-suspenders setup since the cost is more or less the same; the multi-region setup also allows for cool A/B testing and other fun deployment scenarios later.

Provision an SSL certificate matching the domain name (for use with CloudFront, it has to be requested in ACM's us-east-1 region)

Create a CloudFront distribution and configure it to use the S3 buckets as origin servers

That's it. High availability and all that, in just a few clicks, although for some reason it does take a while for the CloudFront distribution to be online (sometimes 30 minutes).
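The "policy on the bucket" for static website hosting is typically a public-read statement like the one below. This is a minimal sketch: the bucket names are hypothetical placeholders, and the helper only builds the JSON rather than calling AWS. S3's Block Public Access settings have to be relaxed on the bucket before a public policy like this is accepted. If the buckets are only ever read through CloudFront, an origin access control lets them stay private instead (that uses the S3 REST endpoint rather than the website endpoint).

```python
# Hypothetical helper: build the public-read bucket policy used for S3 static website hosting.
import json


def public_read_policy(bucket: str) -> str:
    """Return a bucket policy (as JSON text) that lets anyone GET objects in `bucket`."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                # Applies to every object in the bucket, not the bucket itself
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    # Placeholder names for the 2-3 regional buckets
    for name in ["example-site-us-east-1", "example-site-eu-west-1"]:
        print(public_read_policy(name))
```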

Another cool thing with this setup is that I can put my static assets in CodeCommit (the dirt cheap AWS git service) and use CodeBuild to update the S3 buckets whenever the code changes. There are some shenanigans involved because of the multi-region setup but nothing difficult.

Some people prefer GitHub to CodeCommit because of its additional features, and that can work too, but I'm not a git maniac and I don't want to deal with OAuth to connect GitHub to AWS, so I'll pass on GitHub. And to be honest, if I were unable to use CodeCommit for some reason, I'd probably deploy a Gitea server somewhere rather than use GitHub, which I find too opinionated.
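Most of the multi-region work in the CodeBuild step comes down to copying the same files into every bucket with the right Content-Type. Here is a minimal sketch of that planning step. The bucket names are placeholders, and the actual upload is deliberately left out so the sketch runs without AWS credentials. In a real build, each planned tuple would become one upload, for example boto3's `upload_file` with `ExtraArgs={"ContentType": ...}`, or the whole thing could be replaced by one `aws s3 sync` per bucket.

```python
# Hypothetical deploy-planning helper for a multi-bucket static site.
# ASSUMPTION: bucket names are placeholders; no AWS calls are made here.
import mimetypes
from pathlib import Path

# A fresh MimeTypes() instance starts from Python's built-in type table, so results
# don't depend on whatever /etc/mime.types contains on the build host.
_MIME = mimetypes.MimeTypes()


def plan_uploads(build_dir: str, buckets: list[str]) -> list[tuple[str, str, str]]:
    """List (bucket, key, content_type) for every file under build_dir, for every bucket."""
    root = Path(build_dir)
    plan = []
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        key = path.relative_to(root).as_posix()  # S3 keys use forward slashes
        content_type, _encoding = _MIME.guess_type(path.name)
        for bucket in buckets:
            plan.append((bucket, key, content_type or "application/octet-stream"))
    return plan


if __name__ == "__main__":
    buckets = ["example-site-us-east-1", "example-site-eu-west-1"]
    for bucket, key, ctype in plan_uploads("dist", buckets):
        print(f"s3://{bucket}/{key}  ({ctype})")
```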

Operations on Linode

Running my own web server is not a lot of work. Once nginx is configured, the only things I would have to do are a bit of monitoring and dealing with the occasional reboot when the Linode engineers have to update the hypervisor (they send notifications ahead of time and again once it's done). As long as I configure nginx (or the podman container) to start automatically, I don't have to do anything other than make sure it's still working after the reboot.

If I go with the object storage solution, it's even easier since there's no VM to deal with.
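Making sure the site still works after a reboot can be as simple as an HTTP probe run from cron or a systemd timer on another machine. Here is a minimal sketch using only the standard library. The URL in the example is a placeholder, and a real setup would alert on failure rather than just print. (Autostart itself is usually just `systemctl enable nginx`.)

```python
# Minimal HTTP health check (a sketch, not a monitoring system).
import http.client
import urllib.request


def is_up(url: str, timeout: float = 5.0) -> bool:
    """True if `url` returns a 2xx response within `timeout` seconds.

    urlopen follows redirects and raises HTTPError (an OSError subclass) for 4xx/5xx;
    connection failures and timeouts are OSErrors as well.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (OSError, http.client.HTTPException):
        return False


if __name__ == "__main__":
    url = "https://example.com/"  # placeholder for the game's domain
    print(f"{url} is {'up' if is_up(url) else 'DOWN'}")
```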

Operations on AWS

When using S3 and CloudFront, there's nothing else to do on AWS except keep an eye on certificate renewals and the occasional change in how the platform works (which doesn't happen a lot and comes with a heads-up long before it happens).
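You can also automate the eye on certificate renewals by checking the expiry date of the certificate a server actually presents. This works the same for an ACM certificate on CloudFront and a Let's Encrypt one on nginx. Here is a minimal sketch with Python's `ssl` module. The hostname is a placeholder and the 14-day warning threshold is an arbitrary choice.

```python
# Sketch: days remaining on the TLS certificate a server presents.
import socket
import ssl
import time
from typing import Optional


def days_left(not_after: str, now: Optional[float] = None) -> float:
    """Days from `now` (epoch seconds, default: current time) until a cert's notAfter."""
    expires = ssl.cert_time_to_seconds(not_after)  # e.g. "Jan 15 12:00:00 2025 GMT"
    if now is None:
        now = time.time()
    return (expires - now) / 86400


def fetch_not_after(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Open a verified TLS connection and return the peer certificate's notAfter field."""
    ctx = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


if __name__ == "__main__":
    host = "example.com"  # placeholder
    remaining = days_left(fetch_not_after(host))
    if remaining < 14:  # arbitrary alert threshold
        print(f"WARNING: {host} certificate expires in {remaining:.0f} days")
    else:
        print(f"{host}: {remaining:.0f} days left")
```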

And the winner is...

All things considered, for the website hosting I'm going to use AWS S3 and CloudFront. If at some point Linode offers a CDN service I will probably revisit this, but for now I don't want to deal with origin servers hosted somewhere and the CDN hosted somewhere else.
