
Text

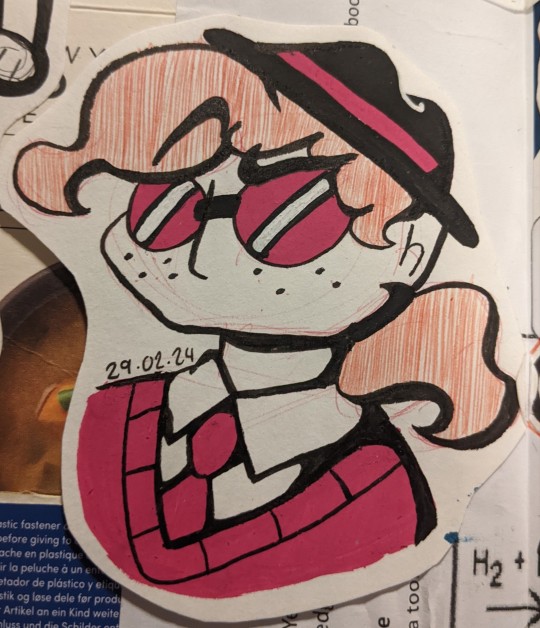

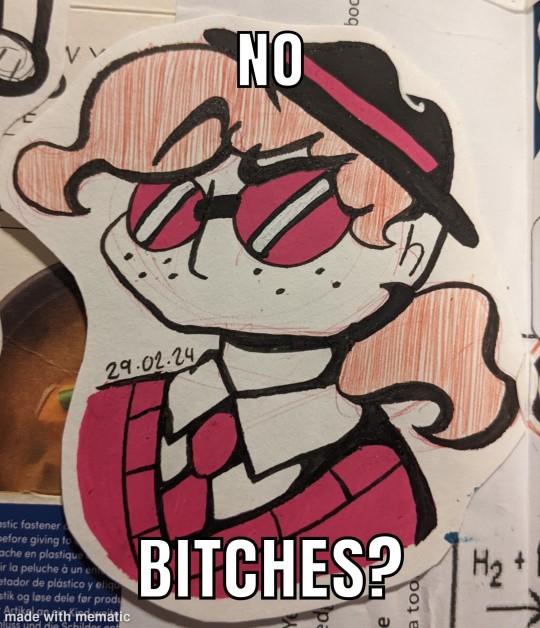

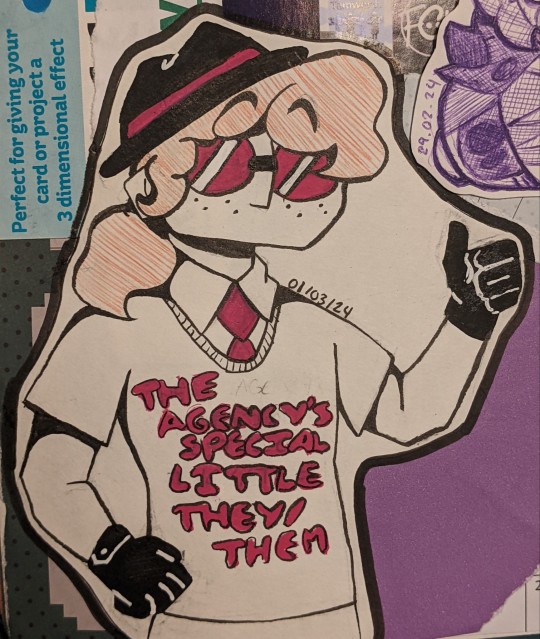

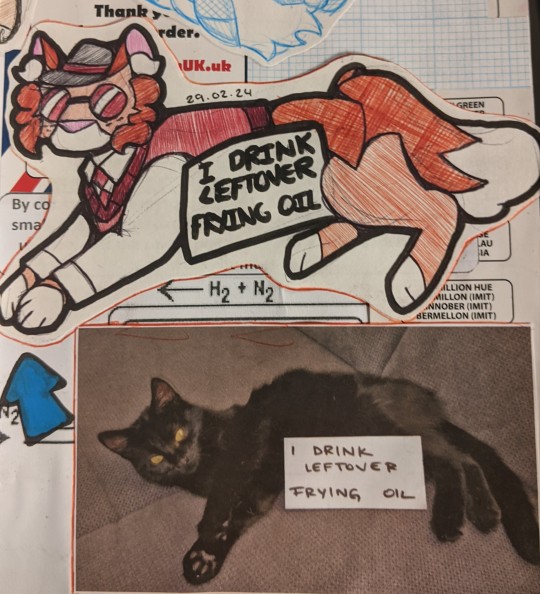

Phoenixposting..... they're shaped

#they're the only character i feel comfortable drawing....people r so scary to me because i'm a warrior cats fan in rehab#as you can probably tell by my kitty phoenix..#AUGH drawing people is so scary and UNCOMFORTABLE#it doesn't help that I can't use myself as a reliable model for some things because im hypermobile and my body does odd things#the thing is i know LOTS but I have very little confidence in my own ability because it's NEW and SCARY#I've tried so many times and always defaulted back to cats and i know i can only get better by keeping at it but the horrors persist.#i expect you to die#ieytd#[agent moose's art]#agent phoenix#ughshshdh I'll get there eventually i just get so anxious#posting helps a bit because this fandom...so kind...so gentle ....#+ i look up to a lot of artists here including my two friends


Text

gpt-4 prediction: it won't be very useful

Word on the street says that OpenAI will be releasing "GPT-4" sometime in early 2023.

There's a lot of hype about it already, though we know very little about it for certain.

----------

People who like to discuss large language models tend to be futurist/forecaster types, and everyone is casting their bets now about what GPT-4 will be like. See e.g. here.

It would accord me higher status in this crowd if I were to make a bunch of highly specific, numerical predictions about GPT-4's capabilities.

I'm not going to do that, because I don't think anyone (including me) really can do this in a way that's more than trivially informed. At best I consider this activity a form of gambling, and at worst it will actively mislead people once the truth is known, blessing the people who "guessed lucky" with an undue aura of deep insight. (And if enough people guess, someone will "guess lucky.")

Why?

There has been a lot of research since GPT-3 on the emergence of capabilities with scale in LLMs, most notably BIG-Bench.

Besides the trends that were already obvious with GPT-3 -- on any given task, increased scale is usually helpful and almost never harmful (cf. the Inverse Scaling Prize and my Section 5 here) -- there are not many reliable trends that one could leverage for forecasting.

Within the bounds of "scale almost never hurts," anything goes:

- Some tasks improve smoothly, some are flatlined at zero then "turn on" discontinuously, some are flatlined at some nonzero performance level across all tested scales, etc. (BIG-Bench Fig. 7)
- Whether a model "has" or "doesn't have" a capability is very sensitive to which specific task we use to probe that capability. (BIG-Bench Sections 3.4.3, 3.4.4)
- Whether a model "can" or "can't do" a single well-defined task is highly sensitive to irrelevant details of phrasing, even for large models. (BIG-Bench Section 3.5)

It gets worse.

Most of the research on GPT capabilities (including BIG-Bench) uses the zero/one/few-shot classification paradigm, which is a very narrow lens that arguably misses the real potential of LLMs.

And, even if you fix some operational definition of whether a GPT "has" a given capability, the order in which the capabilities emerge is unpredictable, with little apparent relation to the subjective difficulty of the task. It took more scale for GPT-3 to learn relatively simple arithmetic than it did for it to become a highly skilled translator across numerous language pairs!

GPT-3 can do numerous impressive things already . . . but it can't understand Morse Code. The linked post was written before the release of text-davinci-003 or ChatGPT, but neither of those can do Morse Code either -- I checked.

On that LessWrong post asking "What's the Least Impressive Thing GPT-4 Won't be Able to Do?", I was initially tempted to answer "Morse Code." This seemed like as safe a guess as any, since no previous GPT was able to do it, and it's certainly very unimpressive.

But then I stopped myself. What reason do I actually have to register this so-called prediction, and what is at stake in it, anyway?

I expect Morse Code to be cracked by GPTs at some scale. What basis do I have to expect this scale is greater than GPT-4's scale (whatever that is)? Like everything, it'll happen when it happens.

If I register this Morse Code prediction, and it turns out I am right, what does that imply about me, or about GPT-4? (Nothing.) If I register the prediction, and it turns out I am wrong, what does this imply . . . (Nothing.)

The whole exercise is frivolous, at best.

----------

So, here is my real GPT-4 prediction: it won't be very useful, and won't see much practical use.

Specifically, the volume and nature of its use will be similar to what we see with existing OpenAI products. There are companies using GPT-3 right now, but there aren't that many of them, and they mostly seem to be:

- Established companies applying GPT in narrow, "non-revolutionary" use cases that play to its strengths, like automating certain SEO/copywriting tasks or synthetic training data generation for smaller text classifiers
- Startups with GPT-3-centric products, also mostly "non-revolutionary" in nature, mostly in SEO/copywriting, with some in coding assistance

GPT-4 will get used to do serious work, just like GPT-3. But I am predicting that it will be used for serious work of roughly the same kind, in roughly the same amounts.

I don't want to operationalize this idea too much, and I'm fine if there's no fully unambiguous way to decide after the fact whether I was right or not. You know basically what I mean (I hope), and it should be easy to tell whether we are basically in a world where

- Businesses are purchasing the GPT-4 enterprise product and getting fundamentally new things in exchange, like "the API writes good, publishable novels," or "the API performs all the tasks we expect of a typical junior SDE" (I am sure you can invent additional examples of this kind), and multiple industries are being transformed as a result
- Businesses are purchasing the GPT-4 enterprise product to do the same kinds of things they are doing today with existing OpenAI enterprise products

However, I'll add a few terms that seem necessary for the prediction to be non-vacuous:

- I expect this to be true for at least 1 year after the release of the commercial product. (I have no particular attachment to this timeframe, I just need a timeframe.)
- My prediction will be false in spirit if the only limit on transformative applications of GPT-4 is monetary cost. GPT-3 is very pricey now, and that's a big limiting factor on its use. But even if its cost were far, far less, there would be other limiting factors -- primarily, that no one really knows how to apply its capabilities in the real world. (See below.)

(The monetary cost thing is why I can't operationalize this beyond "you know what I mean." It involves not just what actually happens, but what would presumably happen at a lower price point. I expect the latter to be a topic of dispute in itself.)

----------

Why do I think this?

First: while OpenAI is awe-inspiring as a pure research lab, they're much less skilled at applied research and product design. (I don't think this is controversial?)

When OpenAI releases a product, it is usually just one of their research artifacts with an API slapped on top of it.

Their papers and blog posts brim with a scientist's post-discovery enthusiasm -- the (understandable) sense that their new thing is so wonderfully amazing, so deeply veined with untapped potential, indeed so temptingly close to "human-level" in so many ways, that -- well -- it surely has to be useful for something! For numerous things!

For what, exactly? And how do I use it? That's your job to figure out, as the user.

But OpenAI's research artifacts are not easy to use. And they're not only hard for novices.

This is the second reason -- intertwined with the first, but more fundamental.

No one knows how to use the things OpenAI is making. They are new kinds of machines, and people are still making basic philosophical category mistakes about them, years after they first appeared. It has taken the mainstream research community multiple years to acquire the most basic intuitions about skilled LLM operation (e.g. "chain of thought") which were already known, long before, to the brilliant internet eccentrics who are GPT's most serious-minded user base.

Even if these things have immense economic potential, we don't know how to exploit it yet. It will take hard work to get there, and you can't expect used car companies and SEO SaaS purveyors to do that hard work themselves, just to figure out how to use your product. If they can't use it, they won't buy it.

It is as though OpenAI had discovered nuclear fission, and then went to sell it as a product, as follows: there is an API. The API has thousands of mysterious knobs (analogous to the opacity and complexity of prompt programming etc). Any given setting of the knobs specifies a complete design for a fission reactor. When you press a button, OpenAI constructs the specified reactor for you (at great expense, billed to you), and turns it on (you incur the operating expenses). You may, at your own risk, connect the reactor to anything else you own, in any manner of your choosing.

(The reactors come with built-in safety measures, but they're imperfect and one-size-fits-all and opaque. Sometimes your experimentation starts to get promising, and then a little pop-up appears saying "Whoops! Looks like your reactor has entered an unsafe state!", at which point it immediately shuts off.)

It is possible, of course, to reap immense economic value from nuclear fission. But if nuclear fission were "released" in this way, how would anyone ever figure out how to capitalize on it?

We, as a society, don't know how to use large language models. We don't know what they're good for. We have lots of (mostly inadequate) ways of "measuring" their "capabilities," and we have lots of (poorly understood, unreliable) ways of getting them to do things. But we don't know where they fit into things.

Are they for writing text? For conversation? For doing classification (in the ML sense)? And if we want one of these behaviors, how do we communicate that to the LLM? What do we do with the output? Do they work well in conjunction with some other kind of system? Which kind, and to what end?

In answer to these questions, we have numerous mutually exclusive ideas, which all come with deep implementation challenges.

To anyone who's taken a good look at LLMs, they seem "obviously" good for something, indeed good for numerous things. But they are provably, reliably, repeatably good for very few things -- not so much (or not only) because of their limitations, but because we don't know how to use them yet.

This, not scale, is the current limiting factor on putting LLMs to use. If we understood how to leverage GPT-3 optimally, it would be more useful (right now) than GPT-4 will be (in reality, next year).

----------

Finally, the current trend in LLM techniques is not very promising.

Everyone -- at least, OpenAI and Google -- is investing in RLHF. The latest GPTs, including ChatGPT, are (roughly) the last iteration of GPT with some RLHF on top. And whatever RLHF might be good for, it is not a solution for our fundamental ignorance of how to use LLMs.

Earlier, I said that OpenAI was punting the problem of "figure out how to use this thing" to the users. RLHF effectively punts it, instead, to the language model itself. (Sort of.)

RLHF, in its currently popular form, looks like:

1. Some humans vaguely imagine (but do not precisely nail down the parameters of) a hypothetical GPT-based application, a kind of super-intelligent Siri.
2. The humans take numerous outputs from GPT, and grade them on how much they feel like what would happen in the "super-intelligent Siri" fantasy app.
3. The GPT model is updated to make the outputs with high scores more likely, and the ones with low scores less likely.

The result is a GPT model which often talks a lot like the hypothetical super-intelligent Siri.
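To make the "high scores more likely, low scores less likely" step concrete, here is a deliberately tiny toy sketch. It is not how OpenAI (or anyone) actually trains these models: the "model" is just a softmax over three canned replies, the scores are made-up stand-ins for human grades, and the update is a plain REINFORCE-style gradient step on expected score. All names (`softmax`, `update`, `outputs`, `scores`) are hypothetical and chosen for illustration only.

```python
import math

def softmax(logits):
    """Turn raw preferences (logits) into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def update(logits, scores, lr=1.0):
    """One gradient-ascent step on the expected score.

    For a softmax policy, d(expected score)/d(logit_i) = p_i * (s_i - baseline),
    where baseline is the current expected score. Outputs graded above the
    baseline get pushed up, outputs graded below it get pushed down.
    """
    probs = softmax(logits)
    baseline = sum(p * s for p, s in zip(probs, scores))
    return [l + lr * p * (s - baseline) for l, p, s in zip(logits, probs, scores)]

# Assumed toy data: three canned outputs and invented human grades for them.
outputs = ["helpful-sounding reply", "rambling reply", "off-topic reply"]
scores = [1.0, 0.2, 0.0]

logits = [0.0, 0.0, 0.0]  # the toy model starts with no preference
for _ in range(50):
    logits = update(logits, scores)

probs = softmax(logits)
for text, p in zip(outputs, probs):
    print(f"{text}: {p:.3f}")
```

Note what the sketch does and does not contain: a set of grades and a rule for shifting probability toward well-graded outputs. Nothing in it specifies what the imagined application actually is.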

This looks like an easier-to-use UI on top of GPT, but it isn't. There is still no well-defined user interface.

Or rather, the nature of the user interface is being continually invented by the language model, anew in every interaction, as it asks itself "how would (the vaguely imagined) super-intelligent Siri respond in this case?"

If a user wonders "what kinds of things is it not allowed to do?", there is no fixed answer. All there is is the LM, asking itself anew in each interaction what the restrictions on a hypothetical fantasy character might be.

It is role-playing a world where the user's question has an answer. But in the real world, the user's question does not have an answer.

If you ask ChatGPT how to use it, it will roleplay a character called "Assistant" from a counterfactual world where "how do I use Assistant?" has a single, well-defined answer. Because it is role-playing -- improvising -- it will not always give you the same answer. And none of the answers are true, about the real world. They're about the fantasy world, where the fantasy app called "Assistant" really exists.

This facade does make GPT's capabilities more accessible, at first blush, for novice users. It's great as a driver of adoption, if that's what you want.

But if Joe from Midsized Normal Mundane Corporation wants to use GPT for some Normal Mundane purpose, and can't on his first try, this role-play trickery only further confuses the issue.

At least in the "design your own fission reactor" interface, it was clear how formidable the challenge was! RLHF does not remove the challenge. It only obscures it, makes it initially invisible, makes it (even) harder to reason about.

And this, judging from ChatGPT (and Sparrow), is apparently what the makers of LLMs think LLM user interfaces should look like. This is probably what GPT-4's interface will be.

And Joe from Midsized Normal Mundane Corporation is going to try it, and realize it "doesn't work" in any familiar sense of the phrase, and -- like a reasonable Midsized Normal Mundane Corporation employee -- use something else instead.

ETA: I forgot to note that OpenAI expects dramatic revenue growth in 2023 and especially in 2024. Ignoring a few edge case possibilities, either their revenue projection will come true or the prediction in this post will, but not both. We'll find out!


Text

3:53 PM 10/16/2022

I think I may switch up my Inktober drawings. I was running out of ideas, but now that dimiclaudeblaigan tag-commented about how much they love Catmitri and wondering what schemes Claude is going to do with the potions in his witch cauldron, I've suddenly got all these scenario ideas. What type of spell IS Claude casting?

Maybe Catmitri was originally a cat, Claude-witch's familiar? Then he changes to human form and doesn't understand why he can't just snuggle up with Claude anymore, like he did when in his cat form. lol

Or maybe Catmitri is witch-Dimitri, stuck in cat form, and Claude is helping him change out of it with a more manual spell? Maybe it works for a bit, but then the transformation spell doesn't hold, and he changes back into a cat. lol

So I should do a "one panel per day" comic. Each day of Inktober, for the rest of the month, will be sequential, telling a story.

But that also got me thinking about how my daydreams are all in scale, not chibi, but I can't draw in-scale, realistic humans…unless I'm just copying a model or reference photo in front of me. It reminded me how stunted I feel when I draw. Out of all the forms of creative expression I have, drawing/illustration is the one where I feel the weight of my restraints the most. It is full of obstacles to my expression. I know what Flow feels like. I feel it when I write, and I felt it when I used to play fighting videogames daily. Hell, I've felt more Flow carving printmaking blocks or sculpting clay than when I draw. Drawing is just this clunky thing where I feel I can't fully express myself. I don't have the skills in my toolbox to express the images in my mind. And it's so frustrating, when I know from videogames and writing what seamless expression of what is in my mind feels like. Hell, I've felt more Flow in quilling and papercrafts. But illustration…? Eep. But then again, maybe being so aware of this skill/expression gap, to the point where it's painful and frustrating, is a good sign. It could mean I'm on the cusp of breaking through this barrier. Awareness is the first step in improvement, after all.

So I find myself suddenly and unusually motivated to practice some figure studies, so I can better express the daydreamed images (of DMCL, this Catmitri-human story) in my mind. I normally hate looking at humans---photorealistic humans. I couldn't even get myself to study human faces more, back in 2018, when I also knew I had to study realistic human faces, so I chose to draw portraits of tokusatsu actors that I like. I thought that drawing characters that I love would make it bearable. I did ONE sketch of Mahiro Takasugi as Micchy Kureshima---a character I love!---and I just couldn't do anymore. Then again, I do get discouraged whenever an illustration I've spent time on turns out displeasing to look at. Maybe I would have had more luck with charcoal (or pastels), since I have more experience drawing realistic humans with that medium.

At this point, I have to sneak little bits of encouragement into every drawing I do, before I catastrophize into losing all hope of improving my illustration skills altogether. Like hiding medicine in a cat's food. lol Or alternating realistic (human) studies with fanart with reliably good results. The problem with that is trying to do 2 drawings in one day, every day. I usually run out of time/energy by the time I get the fanart done for revitalizing my spirit and motivation. Then I end up with no time/energy left for the studies I need and really should do.

I'll figure something out. If I can simplify my drawings, then I can work faster. Maybe I'll try pencil/graphite (and watercolors) instead of charcoal. Will that go faster? I just have to make my Inktober drawings more simplistic. And I know that drawing Claude and Catmitri is faster than drawing 2 human chibi, so my idea to make the rest of Inktober a sequential comic about Claude-witch and Catmitri will already save some time. Or they can both be cats and that'll save me even more time. =^.^=

So the problem is that I'm already doing Inktober. Can I fit in 2 drawings per day? I'm a VERY slow worker, in EVERYTHING that I do. Is the rest of October going to be all-nighters? I've finally started making some progress in fixing my reversed sleep patterns. I don't want to purposefully wreck it.

#october2022 plans#drawing practice#inktober2022#daydreams#headcanons#KhalidMitya#flow#drawing#progression#processing thoughts#please ignore my idiocy#project ideas
