#nand vs nor flash

https://www.futureelectronics.com/p/semiconductors--memory--flash--norflash--serial/s25fl256sagmfi003-infineon-3057273

Micron NOR flash, SPI NOR flash, memory card, CompactFlash memory

S25FL256S Series 256 Mb (32M x 8) 3.6 V SMT SPI Flash Memory - SOIC-16

#Flash Memory#Serial NOR Flash (SPI) Memory#S25FL256SAGMFI003#Infineon#micron nor flash#spi nor flash#memory card#Compact#nand vs nor flash#Memory ICs#USB flash memory storage#Winbond SPI Flash#flash memory card#memory chip
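
The "256 Mb (32M x 8)" in the product line means 2^28 bits organized as 2^25 addressable bytes. One practical consequence: a classic 3-byte (24-bit) SPI address only reaches 16 MiB, so a part this size needs either 4-byte address commands or some form of bank switching to reach its upper half. The helpers below are a minimal sketch of that arithmetic; the function names are illustrative, not from any vendor library.

```python
import math

def capacity_bytes(megabits: int) -> int:
    """Convert a density in Mb (2**20 bits) to bytes."""
    return megabits * 2**20 // 8

def address_bits(num_bytes: int) -> int:
    """Minimum number of address bits needed to select every byte."""
    return math.ceil(math.log2(num_bytes))

size = capacity_bytes(256)          # 256 Mb part organized as x8
bits = address_bits(size)
print(size, size // 2**20, bits)    # 33554432 32 25
print("3-byte addressing reaches", 2**24 // 2**20, "MiB")  # 16 MiB
print("needs 4-byte addressing:", bits > 24)               # True
```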

NOR Flash Memory, Flash memory card, USB flash, computer flash, USB flash memory

FL-S Series 256 Mb (32M x 8) 3.6 V 133 MHz Non-Volatile SPI Flash Memory - WSON-8

#Flash Memory#Serial NOR Flash (SPI) Memory#S25FL256SAGNFI001#Infineon#NOR Flash Memory#Flash memory card#USB flash#computer flash#Flash memory storage#SPI Flash Memory#What is nor flash memory#nor flash memory chip#nand vs nor flash
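
The 133 MHz figure is a maximum SPI clock. As a rough, best-case estimate that ignores command, address, and dummy-cycle overhead, the time to stream the whole 256 Mb array is the bit count divided by the clock rate times the number of data lines. The sketch below assumes one continuous read at the full 133 MHz; whether a particular single, dual, or quad read command actually runs at that clock depends on the datasheet, so treat the bus widths as assumptions.

```python
def full_read_seconds(density_bits: int, clock_hz: float, data_lines: int) -> float:
    """Best-case time to read the whole array in one continuous read.

    Assumes one bit per data line per clock and ignores the command,
    address, and dummy cycles at the start of the transfer.
    """
    return density_bits / (clock_hz * data_lines)

DENSITY_BITS = 256 * 2**20   # 256 Mb
CLOCK_HZ = 133e6             # 133 MHz, from the product title

for lines, name in [(1, "single"), (2, "dual"), (4, "quad")]:
    t = full_read_seconds(DENSITY_BITS, CLOCK_HZ, lines)
    print(f"{name:>6} I/O: {t:.2f} s")
```

With these assumptions the floor is roughly 2.0 s, 1.0 s, and 0.5 s respectively; real transfers take longer once command overhead and host-side latency are included.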

Choose between NAND and NOR flash memory

http://i.securitythinkingcap.com/SmKfdv

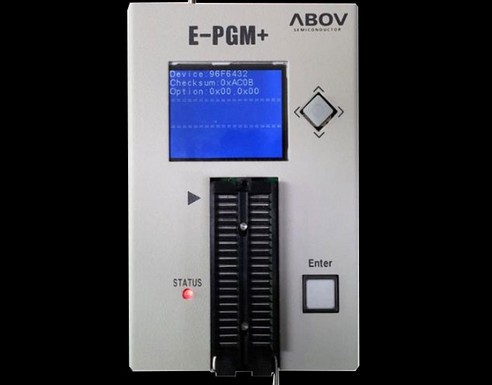

The Common Man’s Guide to Car Programmers – OROD Shenzhen

In recent years, the number of car programmer users has grown tremendously. Car programming is an advanced application of semiconductor technology, and with so many options on the market, it is becoming harder to choose the best universal car programmer.

In this article, we will look at some factors you should consider before buying a programmer.

1. Programming speed: In this era of 9th-generation Core processors, speed matters most, whether you use the programmer for IoT projects or anything else. Choose a programmer fast enough to program and verify a 64 MB NOR flash or a 1 GB NAND flash quickly; ideally, it should program at the theoretical limit of the IC.

2. Mode of operation: Operations such as programming or verification should start as soon as the chip is inserted in the socket. This reduces operation time and lessens errors.

3. Pin detection and insertion check: The programmer should detect a chip inserted backward, and it must detect poor contact between the socket pins and the IC pins before programming begins. This is an essential feature of modern production programmers, as it reduces errors caused by careless operation.

4. Universal vs. device-specific socket adapters: Make sure you understand the difference between universal socket adapters and device-specific adapters. A universal adapter can be used with different ICs that share the same package type, whereas non-universal adapters are dedicated to one device, so you may end up buying several of them. Choose wisely to save money!
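
The "theoretical limit of the IC" in item 1 is set by the chip itself: NOR flash is programmed one page at a time, and each page program takes a fixed internal time, so no programmer can beat pages × page-program time plus the erase time. The sketch below estimates that floor for the 64 MB NOR example. The 256-byte page size is common for serial NOR, but the page-program and erase times are placeholder assumptions, not values from any datasheet; substitute the figures for your part.

```python
def min_program_seconds(capacity_bytes: int, page_bytes: int,
                        t_page_s: float, erase_s: float = 0.0) -> float:
    """Lower bound on programming time set by the chip, not the programmer.

    Every page must be programmed, and each page program blocks for
    t_page_s; erase_s is a one-off chip erase done beforehand.
    """
    pages = -(-capacity_bytes // page_bytes)   # ceiling division
    return erase_s + pages * t_page_s

NOR_CAPACITY = 64 * 2**20   # the 64 MB NOR example from item 1
PAGE = 256                  # typical serial NOR page size (assumed)
T_PAGE = 0.25e-3            # assumed page-program time: 0.25 ms (placeholder)
T_ERASE = 60.0              # assumed chip-erase time: 60 s (placeholder)

t = min_program_seconds(NOR_CAPACITY, PAGE, T_PAGE, T_ERASE)
print(f"{NOR_CAPACITY // PAGE} pages, at least {t:.1f} s")
```

With these placeholder numbers the floor is about 125.5 s for 262,144 pages; anything a programmer spends beyond that (plus the time to read back for verification) is its own overhead.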

Everything discussed in this article applies to chip programmers, car programmers, and universal programmers from all manufacturers.

We advise you to do your own research before buying a programmer.

If you want more information, visit our official website: https://www.chip-programmer.com/.

How is Android different from Linux?

Linux runs on a wide range of systems and is developed largely by a community. It uses a monolithic kernel, in which the core operating system services run entirely in kernel space. Android is an open-source OS built mainly for tablets and phones. Android plays a crucial role on mobile devices, yet it is essentially a software stack that runs on top of the Linux kernel.

Linux

Linux is a Unix-like operating system, inspired by Unix though not derived from its code.

It is often compared with commercial UNIX systems and is generally considered more reliable than desktop-oriented systems; it is particularly well suited to power users and programmers. Properties of Linux systems include:

Open source and easy to download

Developed by a community of developers over the internet

Easy to install

A quite stable OS

Linux also powers business services and database systems for organizations such as Amazon, the German army, and the US Post Office. Internet service providers in particular have grown fond of Linux as a firewall, proxy, and web server, and you’ll find a Linux box within reach of every UNIX system administrator who appreciates a comfortable management station.

Android

Android was initially designed for low-powered devices and uses Java, executed on a virtual machine. The platform was originally developed by Android Inc., which Google later acquired.

Android is updated frequently, and each major release has historically been given a new name. Because releases come so often, much of the published information about the system quickly becomes obsolete. Common features of Android OS include:

An open platform for mobile development

A hardware reference design suited primarily to mobile devices

A Linux kernel (version 2.6 in early releases) powering the system

An application and UI framework

If you want to get Android Training in Jaipur, join Techno Globe Institute.

Key differences between Linux and Android

Let’s discuss some of the major differences between Linux and Android:

Linux is developed mainly for personal and office computer users, while Android is built specifically for tablets and other mobile devices.

Android has a larger footprint than Linux.

Linux supports many processor architectures, whereas Android supports mainly two: ARM and x86. ARM dominates on mobile phones, while Android-x86 mostly targets Mobile Internet Devices. This difference in architecture support is one of the key differences between the two operating systems.

Linux traditionally handles power management through APM (Advanced Power Management), while Android relies mainly on its own power management module, which is closely related to the Linux power management extensions.

Linux is a well-known, complete OS, whereas Android is a software stack running on top of the Linux kernel.

Standard Linux systems typically use magnetic drives formatted with the “EXT” family of journaling file systems. To provide a robust file system, embedded systems instead use solid-state memory devices: NOR flash for code execution and NAND flash for storage. Android systems likewise rely on flash memory for their storage needs.

Standard Linux distributions use the GNU C library (glibc), whereas Android uses its own C library, Bionic. When an Android device boots, the kernel loads just as it would on a Linux distribution, but most of the software above it is different; in particular, Android does not include the GNU C library used on standard Linux distributions.

Android uses the Dalvik virtual machine (replaced by the ART runtime from Android 5.0 onward) to run its applications, whereas many other Java-based mobile platforms use a standard JVM. Linux itself, on the other hand, does not need a VM to run programs.
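
The NOR/NAND split mentioned above rests on how flash is written, unlike a magnetic drive: programming can only change bits from 1 to 0, and returning bits to 1 requires erasing a whole block at once. The toy model below illustrates that erase-before-write rule, which NOR and NAND share. The class name and block size are illustrative assumptions, and real devices add page granularity, wear, and error correction on top; the model also rejects an illegal write outright to make the rule visible, whereas a real chip would simply leave those bits at 0.

```python
ERASED = 0xFF  # erased flash cells read back as all ones

class ToyFlash:
    """Toy model of flash write rules (not a real device driver)."""

    def __init__(self, num_blocks: int, block_size: int):
        self.block_size = block_size
        self.data = bytearray([ERASED] * (num_blocks * block_size))

    def program(self, addr: int, value: int) -> None:
        """Programming can only clear bits (1 -> 0), like a bitwise AND."""
        current = self.data[addr]
        if value & ~current & 0xFF:
            raise ValueError(f"byte {addr}: cannot set 0 bits back to 1 without erase")
        self.data[addr] = current & value

    def erase_block(self, block: int) -> None:
        """Erase resets an entire block to 0xFF; it cannot target one byte."""
        start = block * self.block_size
        self.data[start:start + self.block_size] = bytes([ERASED] * self.block_size)

flash = ToyFlash(num_blocks=2, block_size=4)
flash.program(0, 0x0F)          # OK: only clears bits
try:
    flash.program(0, 0xF0)      # needs bits 7..4 back to 1, so it is rejected
except ValueError as err:
    print("rejected:", err)
flash.erase_block(0)            # wipes bytes 0..3 back to 0xFF
flash.program(0, 0xF0)          # OK after erase
print(hex(flash.data[0]))       # 0xf0
```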

Optane 101: Memory or storage? Yes.

By now, you’ve seen the word “Optane” bandied about on VentureBeat (such as here and here) and probably countless other places — and for good reason. Intel, the maker of all Optane products, is heavily promoting the results of its decade-long R&D investment in this new memory/storage hybrid. But what exactly is Optane, and what is it good for? (Hint: Massively memory-hungry applications like analytics and AI). If you’re not feeling up to speed, don’t worry. We’ll have you covered on all the basics in the next few minutes.

The bottom line

Optane is a new Intel technology that blurs the traditional lines between DRAM memory and NAND flash storage.

Optane DC solid state drives provide super-fast data caching and agile system expansion.

Capacity up to 512GB per persistent memory module; configurable for persistent or volatile operation; ideal for applications that emphasize high capacity and low latency over raw throughput

Strong contender for data centers; future for clients. Costs and advantages are case-specific, impacted by DRAM prices. Early user experience still emerging.

Now, let’s dive into some more detail.

Media vs. memory vs. storage

First, understand that Intel Optane is neither DRAM nor NAND flash memory. It’s a new set of technologies based on what Intel calls 3D XPoint media, which was co-developed with Micron. (We’re going to stumble around here with words like media, memory, and storage, but will prefer “media.”) 3D XPoint works like NAND in that it’s non-volatile, meaning data doesn’t disappear if the system or components lose power.

However, 3D XPoint has significantly lower latency than NAND. That lets it perform much more like DRAM in some situations, especially with high volumes of small files, such as online transaction processing (OLTP). In addition, 3D XPoint features orders-of-magnitude higher endurance than NAND, which makes it very attractive in data center applications involving massive amounts of data writing.

When combined with Intel firmware and drivers, 3D XPoint gets branded as simply “Optane.”

So, is Optane memory or storage? The answer depends on where you put it in a system and how it gets configured.

Optane memory

Consider Intel Optane Memory, the first product delivered to market with 3D XPoint media. Available in 16GB or 32GB models, Optane memory products are essentially tiny PCIe NVMe SSDs built on the M.2 form factor. They serve as a fast cache for storage. Frequently loaded files get stashed on Optane memory, so the system doesn't have to fetch them from NAND SSDs or hard drives, which entail much higher latency. Optane memory is targeted at PCs, but therein lies the rub. Most PCs don’t pull that much file traffic from storage and don’t need that sort of caching performance. And because, unlike NAND, 3D XPoint doesn’t require an erase cycle when writing to media, Optane is strong on write performance. Still, most client applications don’t have that much high-volume, small-size writing to do.
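
The caching role described above can be illustrated with a generic least-recently-used (LRU) cache sitting in front of a slow device. This is only a sketch of the general idea, not a description of Intel's actual caching algorithm, and the latency figures are hypothetical placeholders chosen to show the effect, not measurements.

```python
from collections import OrderedDict

class FastTierCache:
    """Generic LRU cache in front of slower storage (illustrative only)."""

    def __init__(self, capacity: int, fast_us: float, slow_us: float):
        self.capacity = capacity          # number of files the fast tier holds
        self.fast_us, self.slow_us = fast_us, slow_us
        self.entries = OrderedDict()
        self.hits = self.misses = 0
        self.total_us = 0.0

    def read(self, name: str) -> None:
        if name in self.entries:
            self.entries.move_to_end(name)          # mark as most recently used
            self.hits += 1
            self.total_us += self.fast_us
        else:
            self.misses += 1
            self.total_us += self.slow_us           # fetch from the slow device
            self.entries[name] = True
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)    # evict least recently used

# Hypothetical latencies: 10 us for the fast tier, 5000 us for a hard drive.
cache = FastTierCache(capacity=2, fast_us=10.0, slow_us=5000.0)
for f in ["boot.dll", "app.exe", "boot.dll", "app.exe", "log.txt", "boot.dll"]:
    cache.read(f)
print(cache.hits, cache.misses, cache.total_us)    # 2 4 20020.0
```

The trace also shows the limitation the article points to: once the working set outgrows the small fast tier (here, three files competing for two slots), hits drop and the slow device dominates the total.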

Optane SSDs: Client and data center

Next came Intel Optane SSDs and Data Center (DC) SSDs. Today, the Intel Optane SSD 8 Series ships in 58GB to 118GB capacities, also using the M.2 form factor. The 9 Series reaches from 480GB to 1.5TB but employs the M.2, U.2, and Add In Card (AIC) form factors. Again, Intel bills these as client SSDs, and they certainly have good roles to play under certain conditions. But NAND SSDs remain the go-to for clients across most desktop-class applications, especially when price and throughput performance (as opposed to latency) are being balanced.

Things change once we step into the data center. The SKUs don’t look that different from their client counterparts — capacities from 100GB to 1.5TB across U.2, M.2, and half-height, half-length (HHHL) AIC form factors — except in two regards: price and endurance. Yes, the Intel Optane SSD DC P4800X (750GB) costs roughly double the Intel Optane SSD 905P (960GB). But look at its endurance advantage: 41 petabytes written (PBW) versus 17.52 PBW. In other words, on average, you can exhaust more than two consumer Optane storage drives — and pay for IT to replace them — in the time it takes to wear out one DC Optane drive.
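
Endurance ratings are easier to compare when converted to drive writes per day (DWPD): total data written divided by the drive's capacity and by the number of days in the rating period. The sketch below applies that formula to the two drives above, assuming a five-year rating period for both; check each drive's warranty terms before relying on the result.

```python
def dwpd(pbw: float, capacity_gb: float, years: float = 5.0) -> float:
    """Drive writes per day = total data written / (capacity * days)."""
    total_gb = pbw * 1_000_000          # 1 PB = 1,000,000 GB (decimal units)
    return total_gb / (capacity_gb * years * 365)

dc = dwpd(41.0, 750)       # Optane SSD DC P4800X, 750GB
client = dwpd(17.52, 960)  # Optane SSD 905P, 960GB
print(f"P4800X: {dc:.1f} DWPD, 905P: {client:.1f} DWPD")
print(f"PBW ratio: {41.0 / 17.52:.2f}")
```

Under the five-year assumption this works out to roughly 30 DWPD versus 10 DWPD, and the PBW ratio of about 2.34 is where the "more than two consumer drives" comparison comes from.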

Optane DC Persistent Memory

Lastly, Intel Optane DC Persistent Memory modules (DCPMM) place 3D XPoint media on DDR4 form factor memory sticks. (Note: There’s no DDR4 media on the module, but DCPMMs do insert into the DDR4 DIMM sockets on compatible server motherboards.) Again, Optane media is slower than most DDR4, but not much slower in many cases. Why use it, then? Because Optane DCPMMs come in capacities up to 512GB – much higher than DDR4 modules, which top out at 64GB each. Thus, if you have applications and workloads that prioritize capacity over speed, a common situation for in-memory databases and servers with high virtual machine density, Optane DCPMMs may be a strong fit.

The value proposition for DCPMM was stronger in early 2018 and early 2019, when DRAM prices were higher. This allowed DCPMMs to win resoundingly on capacity and per-gigabyte price. As DRAM prices have plummeted, though, the two are at near-parity, which is why you now hear Intel talking more about the capacity benefits in application-specific settings. As Optane gradually proves itself in enterprises, expect to see Intel lower DCPMM prices to push the technology into the mainstream.

As for total performance, DCPMM use case stories and trials are just emerging from the earliest enterprise adopters. Intel has yet to publish clear data showing “Optane DCPMMs show X% performance advantage over DRAM-only configurations in Y and Z environments.” This is partially because server configurations, which often employ virtualization and cross-system load sharing, can be very tricky to typify. But it’s also because the technology is so new that it hasn’t been widely tested. For now, the theory is that large DCPMM pools, while slower than DRAM-only pools, will reduce the need for disk I/O swaps, and that the time saved on disk I/O will more than offset the cost of adopting a somewhat slower medium.
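
The reasoning in the last paragraph is an average-latency trade-off: a larger but slower memory pool raises the cost of each memory access but lowers how often the system must fall back to disk. A simple expected-latency model, average = h × t_mem + (1 − h) × t_disk, makes that concrete. All hit rates and latencies below are hypothetical, chosen for illustration, not Intel figures.

```python
def avg_access_ns(hit_rate: float, mem_ns: float, disk_ns: float) -> float:
    """Expected latency per access: memory on a hit, disk on a miss."""
    return hit_rate * mem_ns + (1.0 - hit_rate) * disk_ns

DISK_NS = 100_000.0   # hypothetical ~100 us storage access

# Hypothetical scenario: the DRAM-only pool is faster per access but smaller,
# so the working set fits less often than in the larger DCPMM-backed pool.
dram_only = avg_access_ns(hit_rate=0.990, mem_ns=100.0, disk_ns=DISK_NS)
with_dcpmm = avg_access_ns(hit_rate=0.999, mem_ns=300.0, disk_ns=DISK_NS)

print(f"DRAM-only:  {dram_only:.1f} ns")
print(f"with DCPMM: {with_dcpmm:.1f} ns")
```

Under these made-up numbers the slower but larger pool wins (about 400 ns versus 1,100 ns on average); with a smaller hit-rate gain, the DRAM-only configuration would win instead, which is why real results are workload-specific.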