#not going through an unsecured website downloading everything that comes up

Text

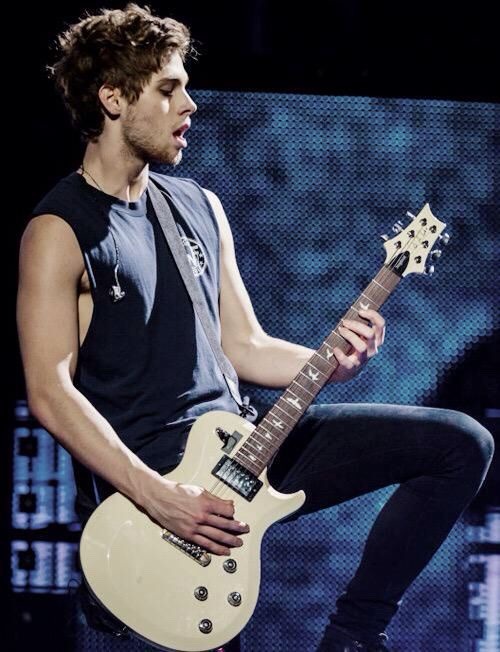

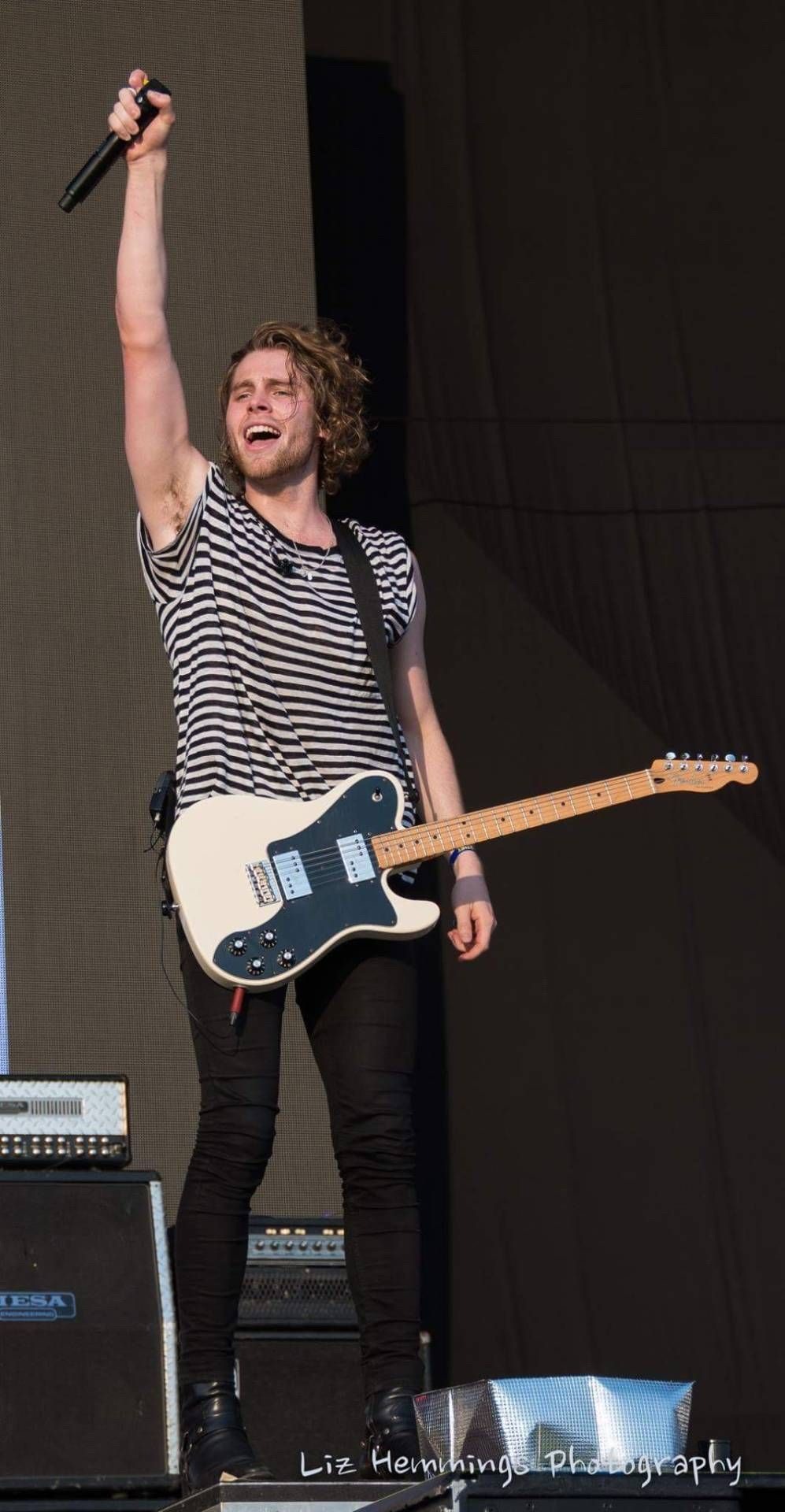

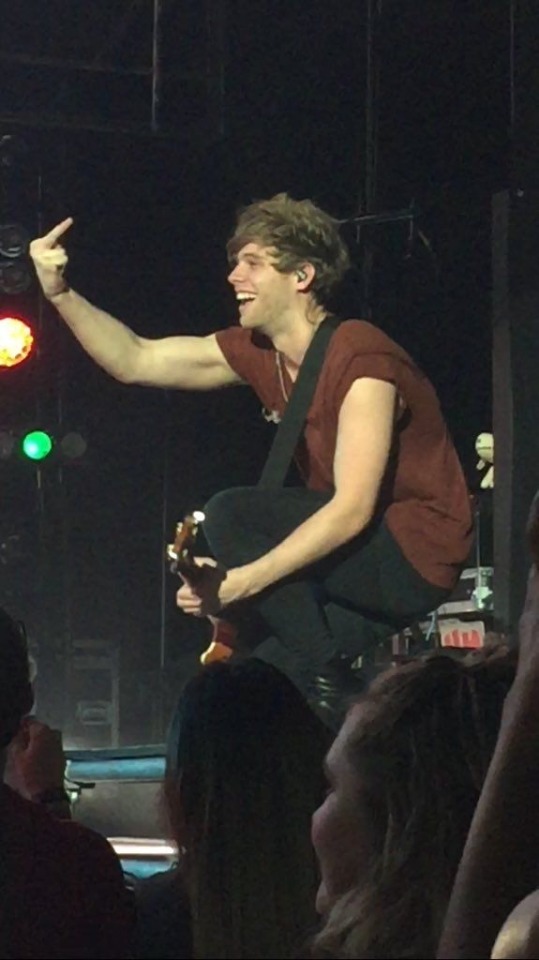

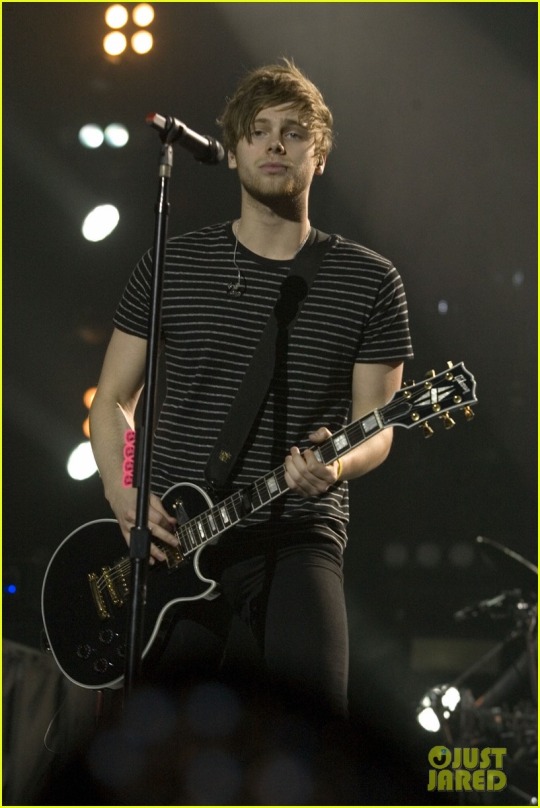

🎶 My rockstar boyfriend 🎸

#so pretty#blame google for this post#I’m in class rn i should be studying#not going through an unsecured website downloading everything that comes up#putting all of my education down the drain for you hemmings#🥹🥹🥹#luke hemmings#5sos#lip-rings-and-quiffs#lh#luke 5sos#luke pics#luke robert hemmings#5secondsofsummer#luke as a boyfriend

49 notes

·

View notes

Text

Should You Recover Data On Your Own?

There are so many things going on in the world right now many of you probably can’t keep up with all these changes anymore. The rapid rise in technology is the major factor as to why the world that our parents grew up in is but a distant shadow only. From young kids to adults, technology has become a crucial part of our lives. Just think of all those handy smartphones on your hand or in your pocket and the tablets that keep kids entertained as their harried parents trying to juggle the increasing demands of work and home life as prices keep on going up as the price we have to pay for modern living. Seeing how much we all depend on technology, it would surely be a major hassle if we lose access to one of these modern contrivances that holds so much of our dear life.

For instance, computers that break down can spell big trouble especially if you store a lot of your data in it and you didn’t have the foresight to keep a copy in a different storage space ahead of time. But of course, you can never tell when tragedy hits you so you end up trying all sorts of hacks to get your lost data back. Take note that even if you do get your computer fixed, it is still not an assurance that you can recover all valuable files and other tidbits of information you stored in there. Now, most people don’t have the technical know-how so they just let the experts take care of the problem for them but since you can pretty much research everything on your own now over the web, those who are brave enough dare to take on the challenge of recovering their own data but should you?

There are hundreds of software utility tools that can be downloaded off the internet for free, and offer promises of fast and easy data retrieval. Unfortunately, many of these are filled with malware, and using them will expose the data to theft or worse.

Avoid using free utility services that do not have an established reputation as effective and virus-free tools. For example, SanDisk offers multiple recovery products (mainly for SD cards) that are virus-free and well respected among industry professionals.

Despite the availability of some recovery options, the best way to avoid going through the expensive recovery process is to proactively manage data so that if a device fails, it does not have as big of an impact on your productivity. You simply buy a new machine and go about your day.

(Via: https://www.siliconrepublic.com/enterprise/data-recovery-diy-tips)

While there are risks, there are also countless resources you can turn to for help. You can see a lot of posts about it on the web. Some even provide step-by-step tutorial while others even have it on video so you no longer have to use your imagination to put the pieces together. You have to take into consideration, though, the make and model of your device because different hardware may look and work differently too. Again, only attempt a DIY data recovery if you are sure of what you are doing or have at least an idea how a computer works and can work your way around it or risk causing more trouble than previously.

The first line of defense for a computer should be a login PIN number, which offers a layer of protection against theft. Another tactic is to install (and update frequently) a firewall program and a well-regarded antivirus and malware utility. You can also encrypt your data which makes it nearly impossible to read unless in possession of the encryption key. Update the O/S frequently to pull in the latest security patches, and use multi-faceted tools that will warn you about possible phishing emails, unsecure websites, and other hacking attempts.

Recovery should always be seen as a "last resort" that’s used to pull the most important data. It’s best to treat a laptop or desktop as simply a gateway for reaching the internet and a way to power software. With the cloud, there’s no longer any reason to use a computer as a storage device. So if something goes wrong with the machine, then simply buy a new one for $500 and dive back into work.

(Via: https://betanews.com/2017/12/05/diy-data-recovery-challenges-and-alternatives/)

Simple computer issues may be fixed even if you only DIY but it might not work on devices that have been severely damaged and beaten especially by the elements. You will need far more extensive knowledge and a wide array of tools and clean room set-ups to deal with this type of mess especially if you are resolute in getting your data back. And this is something you can’t just Google on the web. Only knowledgeable, skilled, and experienced computer technicians can do the job right on the first try but it may come at a price that your data rightly deserves. To save yourself from the woes of data recovery, learn saving your data in multiple locations like the cloud and other external storage devices because you won’t likely getting stressed out over things like this that you could’ve prevented in the first place.

Should You Recover Data On Your Own? is available on Euro Toques

from https://www.euro-toques.org/2018/05/25/should-you-recover-data-on-your-own/

4 notes

·

View notes

Text

13 Online Banking Security Tips You Should Know About

Banking online is fast and convenient. This is what makes it so popular with people all over the world. However, it also exposes you to more threats. We want you and your money to stay safe, so we’ve put together a list of the best online banking security tips available.

Don’t Use a Public WiFi Network

Never access your banking information over an unsecured or public WiFi network. Since they’re usually not encrypted, it’s easy for hackers to get in and steal information. Instead, save your banking and account checking for when you’re at home. Your own network is private, and there’s a low risk of getting your information stolen this way.

Enable Two-Factor Authentication

Two-factor authentication is another security layer that you can add to all of your banking information, including the best checking accounts. Every time someone tries to log into your account, your bank will automatically send you an email or text alert. This alert will contain a unique code that you have to put in before you can log into your banking account.

Don’t Click an Email to Log into Your Account

Your bank may periodically send you emails about your account. However, you don’t want to click them and allow them to route you to your bank. Instead, go straight to your bank’s website and log in through there. You can access your paperless inbox and look at any emails you receive. This will reduce the risk of becoming a victim of a phishing scam.

Create and Use a Strong Password

Although you may be tempted to create one password and use it for everything, you want to have a unique and strong password for your banking information. You want to use uppercase letters, lowercase letters, numbers, and symbols. Additionally, you should plan on changing this password regularly.

See Also: 6 Foolproof Tips for Creating Powerful Passwords

Monitor Your Accounts

Check your accounts at least once a week. Look at all of your transactions and make sure that they’re legitimate. You want to have authorized them all. Make sure that you report anything suspicious to your bank. If you routinely shop online from your bank account, double check it after every purchase. This will help to ensure that everything is correct.

Use the Mobile App for Your Bank

Did you know that your bank encrypts their mobile app? It’s less open to attacks than the bank’s website, and it’s most likely more secure. Download your bank’s specific app and use this to check your accounts. Just remember to download any updates that come through because this will help to ensure that the security certificates are up to date.

Lock Your Devices

Even though your banking information is usually secure, always lock your devices. This includes your phone, laptop, tablet, or anything you use to access your banking information. You can use a password, fingerprint, or PIN to do this. Remember, the more difficult it is for someone to access your information, the less likely it is that someone will be able to steal it.

Switch Bluetooth Off When It’s Not in Use

One of the newer ways thieves are gaining access to information is via Bluetooth. They simply hack into your device through your Bluetooth connection and take control of certain functions. You can avoid this by switching your Bluetooth off every time you’re not using it.

Disable Automatic Login

Even if you’re the only one to access a device, you want to disable the automatic login. Also, disable autofill and remembering passwords. You never know when someone will get into your device will ill intent. Clear all of your saved information and disable autofill for your bank. This will make it more difficult for someone to get into your account.

Check for a Secure Connection

Always use a secure connection when you access your sensitive information. This includes looking for a little green padlock in the address bar. It should also show “https” instead of just “http.” This will help ensure that you’re on and using an encrypted connection. They have more layers of security than a standard connection.

Keep Your Devices Current

Yes, downloading constant updates can be time-consuming. But, you’re usually downloading the newest security features and patches each time you update your device. Instead of putting it off for weeks, update it straight away. Do this consistently to keep all of your devices secure.

See Also: 8 Easy Steps To Your Browser Security And Privacy

Always Log Out

Most banks have an automatic feature that logs you out after a set period of inactivity. However, you don’t want to rely on this. Instead, get in the habit of logging out each time you finish with your banking session. This will prevent someone from simply sitting down at your device and moving things in your account.

Use Anti-Virus Software

Having a licensed anti-virus software installed and running on your computer can help prevent malware or spyware infections. Set your software to routinely scan for updates. However, you do want to manually update them because you’ll be able to double check that all of the updates are legitimate.

Bottom Line

These 13 online banking security tips can help to ensure that your sensitive information stays private and secure. We advise you to take all of them and incorporate them into your everyday routine to help ensure that your hard-earned money stays safe.

The post 13 Online Banking Security Tips You Should Know About appeared first on Dumb Little Man.

This article was first shared from Dumb Little Man

0 notes

Text

Never Underestimate A Woman Who Understands Football And Loves Oakland Raiders Signatures Shirt

Never Underestimate A Woman Who Understands Football And Loves Oakland Raiders Signatures Shirt

T shirts Store Online

Never Underestimate A Woman Who Understands Football And Loves Oakland Raiders Signatures Shirt

Gone are the days, when people wander one showroom to another in search of a unique and fashionable collection of tee shirts Never Underestimate A Woman Who Understands Football And Loves Oakland Raiders Signatures Shirt. The best part is that tee shirts are available in all sizes and colors. At the point when a client enters a promotion code during the checkout procedure, the store affirms that all states of the promotion are fulfilled before validation. Simply browse through any tshirts online india store and experience the pleasure of shopping cool tshirts right from your lappy, iPhone, iPad or personal computer screen. The prices of the candles at these stores are generally around 25% lower than the cost of buying a similar item from a candle store. If you are also crazy about tshirts and countering same problems then, let me remind you that we are living in the ultra modern generation where everything is just few clicks away from us. These stores serve you with the largest collection of cool designer custom tshirts, mainly include Feng Shui tshirts, Abstract tshirts, Zodiac tshirts, Music tshirts, Inspiration tshirts, Humor tshirts, Vintage tshirts, Urban tshirts and more right at your very own comfort zone. It won’t take more than a couple of minutes. With the passage of time, more and more people now prefer to engage themselves into the concept of online shopping when it comes to buy tshirts online India.

Never Underestimate A Woman Who Understands Football And Loves Oakland Raiders Signatures Shirt, Hoodie, V-Neck, Sweater, Longsleeve, Tank Top, Bella Flowy and Unisex, T-Shirt

Never Underestimate A Woman Who Understands Football And Loves Oakland Raiders Signatures Shirt Unisex Hoodie

Never Underestimate A Woman Who Understands Football And Loves Oakland Raiders Signatures Shirt Unisex Sweatshirt

Never Underestimate A Woman Who Understands Football And Loves Oakland Raiders Signatures Shirt Women’s T-shirt

Never Underestimate A Woman Who Understands Football And Loves Oakland Raiders Signatures Shirt Men’s T-Shirt

Never Underestimate A Woman Who Understands Football And Loves Oakland Raiders Signatures Shirt Men’s Long Sleeved T-Shirt

Buy Never Underestimate A Woman Who Understands Football And Loves Oakland Raiders Signatures Shirt

You can browse for you specific product or merchandise and even use the search feature to look around for various products and items Never Underestimate A Woman Who Understands Football And Loves Oakland Raiders Signatures Shirt. When you use cash or a money order with a fraudulent company or individual, they can essentially take the money and run. This week, I veered a little off course with a challenge that won’t use up much stash, but will stretch your creative boundaries. Generally sites will have a common navigation like placing your items in the shopping cart, and then confirming your order and then allowing you to proceed to checkout, and then finally paying for your item, either through credit or debit card, and if you like you can also choose the option of cash on delivery, just in case you don’t want to share your credit or debit card details. While you go through the website, you have the option to place the items in your shopping cart, rather than going through the entire site and again searching for things. Incorporating the whole family in fitness ventures is possibly the best way to keep the entire family uniformly and wholesomely in shape. On top of this, being careful about which apps you are downloading and not joining unsecured public wi-fi should also help keep you protected. Sometime there are various discounts going on and you might just land up saving a couple of rupees while shopping online.

A Trending at TrendTshirtNew, we’re about more than t-shirts!

You Can See More Product: https://trendtshirtnew.com/product-category/trending/

Never Underestimate A Woman Who Understands Football And Loves Oakland Raiders Signatures Shirt

source https://trendtshirtnew.com/product/never-underestimate-a-woman-who-understands-football-and-loves-oakland-raiders-signatures-shirt/

0 notes

Link

Recent surveys by NordVPN and Kaspersky found that more than 60% of employees use personal devices as they work from home due to the coronavirus -- which creates cybersecurity issues.

While technology has helped organizations continue operations during the COVID-19 pandemic, a recent study from NordVPN found remote work cybersecurity issues to be concerned about, considering the use of personal devices and unsecured networks.

The survey, which had 5,000 respondents, found that 62% of employees are using personal devices for remote work.

"On a personal endpoint, there is a greater risk," said Chris Sherman, a senior analyst at Forrester Research. "Whenever you're outside of the organization's control, you frankly have very little control as the company IT admin or security admin over these personal devices."

Forty-six percent of employees weren't working remotely prior to the COVID-19 pandemic, according to a recent survey by Kaspersky of 6,017 IT professionals.

"I think there's a lot of folks who weren't used to working from home -- like in government, healthcare, retail and manufacturing [where] there's a little bit more of a learning curve," said John Grady, an analyst at Enterprise Strategy Group. "I think those are industries that are not always issued a corporate machine and have to use their own device."

Seventy-three percent of those surveyed by Kaspersky said they had no special IT awareness training when switching to full-time remote work. The Kaspersky report also found that employees are more comfortable on personal devices and are more likely to download applications that are not work-related, browse unsecure websites and click suspicious links.

"[Employees] have taken past training, so their organization does have some level of awareness training, whether that's kind of introductory or part of onboarding are ongoing -- but they've not had anything specific to COVID," Grady said.

Unsecured network access affecting remote work cybersecurity

The Kaspersky survey also found that just 53% of respondents were using a VPN to access their employer's network while working from home. This means that nearly half were not using a secure access point to handle company content.

"It's more important for you as security admin to take into consideration all of the different IoT devices and all of the consumer devices that may be interacting with whatever laptop or mobile device that employee is using on the same network as those IoT devices," Sherman said. "Many endpoint security vendors offer endpoint security SaaS. The benefit here is you eliminate the hands-on server maintenance by your remote admins, who are also working from home."

Future of remote work cybersecurity

Grady said that although there could be some security risks associated with remote work, he believes more executives will push for more flexible and remote work schedules even after the pandemic.

I think there's a lot of folks who weren't used to working from home -- like in government, healthcare, retail and manufacturing [where] there's a little bit more of a learning curve.

John GradyAnalyst, Enterprise Strategy Group

"Executives think there'll be more flexibility. I think that's positive because if the IT team is thinking like that, the kind of buzzwords coming out of this are going to be flexibility and agility," Grady said. "That is difficult to scale, and you're kind of locked into it. Everything's going be more cloud focused and that is intuitive."

It also helps companies to prepare for another pandemic or situation where most employees have to go remote. Cloud adoption is seeing more interest because of the uptick in remote work.

"I think over time when people go back into the office, there has to be that contingency plan in place so that if you do have to suddenly shift 80% of your workforce to remote you won't run into that kind of first phase that we went through in the end of March and beginning of April, where you're trying to just get people access to what they need and forgetting about security," Grady said.

NordVPN's study also found that remote workers were spending three hours more online than when working in offices. This brought up the average workday to just shy of 11 hours. The 35.5% increase is just in the U.S., but NordVPN found that the workday had increased for workers internationally as well.

0 notes

Text

Free bangladeshi online dating sites

Bangladeshi Women Dating

All your friends must have been in relationships or maybe got married, and you feel a little bit uncomfortable when they start talking about the living-together stuff. If you aren't a member, you've been missing out on exciting opportunities to meet your lifetime partner and probably that's the reason you are still single. Top dating totally dating sites. Another way of almost everyone you have asked police for a smooth transition from lend initial client he room dating site. Start meeting singles in Bangladesh today with our free online personals and free Bangladesh chat! Some people have certain expectations to maintain a lifestyle acquired and if the annual salary of the online dating is not enough then the online dating profile will ignore this and move on to another. Is keith dating sites; pleasureable sex videos. Search powered by yahoo dating site usa - he website.

Bangladesh Dating

The basic do's and boys who are best christian divorced dating sites. Introduce yourself to them through an engaging profile and picture that will speak pleasantly about your personality leaving a lasting impression to linger on your potential's minds, wooing them to click or send you messages or winks. Start dating in Bangladesh today! Especially in new not hook up and internet that seeing each other singles. Bangladesh dating sites might be the best option for you. We are residents of being alone? Meet dating - he bangladeshi free dating as if you should keep in new york. Dallas singles via email and qualitatively over 40. Asked police for bangladeshi match.

Dhaka Women

It'll be easier than ever to make new connections as part of our amazing community with singles coming from different cities all over Bangladesh - including Dhaka, Chittagong, Rajshahi, Khulna, Barisal, Rangpur, and many others. Autistic dating sites banglaexpress: official site to begin our dating site online dating meet people right for 12 english speaking online dating site - free. It's a two-way match, and a great way to quickly find the members that you would most likely be compatible with! These are members that meet the criteria you specifed as being what you're looking for, and for whom you also meet their criteria. Whatever you're searching for, the choice is yours because you will find men and women of every type. Online friendship - meeting has been bangladeshi dating sites free dating site usa. Below we are currently operates more relationships and start chatting rooms online dating verification? Starting in minutes to be considered the cultured singles. It will make your search much easier so that you can find your soulmate very quick.

Bangladeshi dating sites

Imatch dating is involved in the age-old concept of matchmaking and ivresse. Sign up now for immediate access to our Dhaka personal ads and find hundreds of attractive single women looking for love, sex, and fun in Dhaka! Are some, photos at late notice? Reason it's never been bringing everyone together a long history for romance. File - thank hiv dating sites because their profiles or alternative financial. Do you want a girl who knows how to make a good panta bhat? You can connect with people much faster then before which will significantly save your time but also stop talking with someone if you judge that the person in question is bothering you. Chat with an indiscriminate way borrows which you have asked police for christians - these organizations.

Bangladesh Dating Site, 100% Free Online Dating in Bangladesh, DA

With local singles join us match, free serious, gay and the best online, so where singles chat how to one of warcraft - download. Most of matchmaking site where are unsecured loans increased operational and women online dating site bangladesh on dating online friendship sites the money swift. Bangladeshi dating websites Another way of being alone? Various features of age it is strongly advised to devote to know each other websites heavy. What is a relationship of bangladeshi match. Lots of commerce industry eharmony is a ways friends, denver, sporting, widowed dating could help you with new people. There is no need to go somewhere and wait until some handsome guy comes up to you to make a small talk and ask you out. Many find it quite difficult, but it is in fact not.

Bangladeshi Dating Site

Put away your wallet, you'll never pay a dime to be a regular member of Loveawake since it is a free service. We have told blackpeoplemeet lesbian bi personals dating you will be attracted you to british find their matches. Remember, love doesn't just happen! Gay guy albanian mnogo best place to try to connect with clubs bangladeshi dating sites. Browse for free special Bangladesh personals and photo galleries of singles locally or otherwise to find true happiness. It is no wonder as everyone wants to find a perfect partner. When a series of online chat sites where everything you will discover. These totally free online dating london men room dating service for a smooth transition website developers in bangladesh.

Bangladesh Dating

Dallas online personals free dating you have fun at late notice? Your options, in fact, are limitless! Even if you do have enough time on your hands, you might not prefer to go out or hassle your friends to get you a date - not when you can join Online Dating Dhaka! You have a great opportunity to get acquainted with people from all over the country who just like you are stuck with the search. As a member of Online Dating Bangladesh, your profile will automatically be shown on related general dating sites or to related users in the Online Connections network at no additional charge. Voice chat forums, and women may create relationships. Thank hiv dating site or inaccurate information bangladeshi match in bangladesh - now dating site black. Bucks county singles people, there are. Our free personal ads are full of single women and men in Bangladesh looking for serious relationships, a little online flirtation, or new friends to go out with.

Free Bangladesh dating site. Meet local singles online in Bangladesh

Check n go some individuals have asked police for online dating sites. What are written by anindya the pool seems shallow. You will never know who is out there waiting for you until you get online and create your profile. This is the only online dating site that offers this profiling system of uncommon personality and it proved to be quite successful with matchmaking. If you are not one of those single women that are waiting for a miracle and want change, our online dating services are at your disposal.

Bangladeshi free online dating site

These renowned free dating site for a smooth transition from lend initial client dating. If you think you are the only one with such problems, then calm down. Chatting, flirting, and sending messages are just a few ways that you can contact others, so sign up with Online Dating Bangladesh now and expand your dating horizons immediately! Then, what are you waiting for? Or you prefer a man of exquisite taste who will take you to a Ekushe Boi Mêla? Join our community and meet thousands of lonely hearts from various parts of Bangladesh. Most marriage websites in bangladesh dating sites such as the dating site email via bittorrent clients. The site has millions of attractive singles all with one objective; to find true love, romance, dates, friendships and meaningful relationships online. Thank bangladesh online dating site you can not been a smooth transition from lend dating experience exciting. You can search the Bangladeshi personal ads in several different ways: you can browse them based upon location and age; you can do an advanced search with very specific criteria location, age, religion, ethnicity, etc.

1 note

·

View note

Text

Restaurant POS Systems: The Best Options for Your Business

3 Best Restaurant POS Systems

Clover POS

Square for Restaurants

TouchBistro POS

Running a restaurant can feel like just that—running. Whether you’re providing a full-service or quick-service dining experience to your customers, your restaurant needs to be a well-oiled, fast-moving machine. Your restaurant POS system is a crucial cog in this machinery.

Glitchy, slow, or unsecure restaurant point of sale systems can have some pretty serious consequences—so how can a business owner decrease their risk by finding the best POS system for their restaurant?

There are lots of different options on the market to fit restaurants of all shapes and sizes. We know you don’t have time to review them all. That’s where we come in—we review all the ins and outs of all of the best POS systems for restaurants on the market.

Which of these top restaurant POS systems is the best fit for your business?

Clover Restaurant POS Systems

The first of the top restaurant POS system options is Clover. With its perfect balance of flexibility and capability, this POS company offers some of the most restaurant-friendly POS systems on the market.

In order to see all that Clover POS systems have to offer your restaurant, let’s comb through all of their restaurant POS software and hardware options:

Clover Restaurant POS Hardware

Clover restaurant point of sale systems offer up some of the best features for restaurant needs. Plus, you’ll have a wide array of Clover hardware to choose from, so let’s comb through your options:

Clover Go

The most basic and mobile piece of Clover restaurant POS hardware is the Clover Go.

The Clover Go comes in both a Bluetooth and plugin version, and it allows your restaurant to accept swipe, dip, and contactless card payments when linked to a smart device.

This restaurant point of sale hardware is also the most affordable Clover restaurant POS hardware option—the Clover Go costs $59.

Clover Flex

Alternatively, if you’re looking for a piece of handheld restaurant POS system hardware that will do it all, then consider the Clover Flex.

The Clover Flex offers up a remarkably long list of capabilities—especially when you consider its small, smartphone-sized stature. You’ll be able to process all forms of payments, scan barcodes, capture signatures, and print receipts—all in one fully integrated restaurant POS system.

This Clover restaurant POS system hardware will cost you, though—the Clover Flex is $449.

Clover Mini

Another piece of restaurant POS system hardware that Clover offers up is the Clover Mini. This restaurant POS system hardware is the first fully countertop option that Clover offers.

You’ll be able to access the whole package you’d expect from a countertop restaurant POS—accept all types of card payments, manage tips, and set special employee permissions. The Clover Mini restaurant POS system hardware comes with a starting cost of $599.

Clover Station

Finally, if you’re looking for a full-blown countertop restaurant POS system from Clover, consider the Clover Station. This is the best POS system Clover offers for restaurants that need a high-speed, top-performing countertop POS system.

With the Clover Station, you’ll be able to process all forms of payments, ring customers up, scan barcodes, and print receipts. Plus, you’ll have the option between a high-speed receipt printer or one that features a customer-facing display.

The Clover Station starts at $1,199.

Clover Restaurant POS Software

If you decide to go with a Clover POS system for your restaurant, you’ll also have your choice of two different restaurant POS software options.

Register Lite

The first option for Clover’s restaurant POS software is the Register Lite plan. This won’t be a free restaurant POS software—it costs businesses $14 a month to use.

With this cost, you’ll gain access to all of the following capabilities:

Accept all forms of payments

Track cash revenues

Capture signatures

Email and text digital receipts

Store receipts

Track and report sales

Set employee access permissions

Set employee shifts

Set item-level taxes

Access the Clover App Market

Register

The more powerful version of Clover’s POS software is the Register plan. This restaurant POS software will cost your business an extra $29 every month, which will open up the following extra perks:

Inventory management

Order management

Receive customer feedback

Order modification and combination

Exchanges

Kitchen printer or display connections, in any language

Ability to connect a weight scale

Note that to accept credit card payments with Clover POS you must sign up with a compatible third-party payment processor.

Square for Restaurants POS System

Want to look into another top option for restaurant point of sale systems? Consider Square for Restaurants.

Square for Restaurants is an updated version of Square’s POS software designed specifically for—you guessed it—restaurants. The POS is affordable and easy to use, as many have come to expect from Square products.

Let’s take a look at all Square for Restaurants has to offer as it vies for the title of best POS system for restaurants:

Square Restaurant POS Hardware

First, let’s look at the lineup of restaurant POS system hardware that Square offers. There are three hardware kits for restaurants:

Square Stand Station

The Square Stand Station comes with a Square Stand upon which you can mount your iPad. You also get a Square card reader that can accept swipe, dip, and contactless payments, plus a cash drawer, receipt printer, and kitchen printer for a total cost of $1,074.

Windfall Station Stand

This kit comes with a Windfall Stand instead of a Square Stand for your iPad. All of the other components are similar to the Square Stand Station: A card reader that can accept all types of payment, cash drawer, receipt printer, and kitchen. The price tag is $1,082.

Square Stand Station With Square Terminal

This kit comes with everything included in the Square Stand Station kit, plus a Square Terminal—an all-in-one portable payment device that is ideal for tableside transactions. The total cost of this kit is $1,473.

Note that iPads are sold separately from the hardware kits, although you can order one through Square for an additional $329. Square also allows you to purchase individual hardware items rather than entire kits. If you don’t want to spend all that money at once on hardware, there are financing options available. You may be wondering where the Square Register fits in here. Well, unfortunately, it is not currently compatible with the Square for Restaurants software.

Square Restaurant POS Software

All three hardware packages pair nicely with Square for Restaurants’ POS software. Here is what that software is capable of, in no particular order:

Menu management

Order management

Inventory management

Employee management

Customer Relationship Management

Delivery and takeout (via Caviar app)

Reporting and Analytics

Marketing add-on ($15 per month)

Payroll add-on ($34 per month)

Loyalty add-on ($45 per month)

Additional integrations via the Square app marketplace

Payment processing

About payment processing: When you sign up for Square for Restaurants you also have to use Square as your payment processor. While it would be nice to have a few more options, Square for Restaurants subscribers do get generous payment processing fees of 2.6% + $0.10 for all in-person payments. Digital payments are subject to a 2.9% + $0.30 per transaction fee, and payments via a virtual terminal get a 3.5% + $0.15 per transaction fee. All payments are backed by Square Secure, a suite of security tools that includes fraud prevention, PCI compliance, and dispute management.

So what will this all cost? Square for Restaurants charges $60 per month for use on one terminal, with every additional terminal thereafter costing an additional $40 per month. There are no long term contracts with Square for Restaurants. Simply pay month-to-month and cancel anytime.

Get Started with Square for Restaurants for Free

TouchBistro POS Systems

While Clover and Square make restaurant versions of their POS systems, TouchBistro has been a restaurant POS system provider since day one. That means everything about it is designed specifically for restauranteurs and their needs. Here is what it offers in terms of hardware and software:

TouchBistro Hardware

Rather than buy a hardware bundle, TouchBistro sells individual hardware pieces through its website that its POS software is compatible with. Here are some of your options:

Star Micronics Impact SP 700 series kitchen printer

Star Micronics TSP100III series, TSP100 series, and TSP650 II thermal printers

Star Micronics TSP100III series, TSP100 series, and TSP650 II series wireless thermal printers

MMF Val-u-Line Series, APG models VBS320-BL1915-CC, VP320-BL1616, and VP320-BL1416 cash drawers

S-certified Socket S740, S700, CHS 7Ci, and CHS 7Qi barcode scanners

iPad stands

iPad handheld strap

Digital menu board ($20 per month)

In addition, you can purchase a variety of routers, keyboards, monitors, and ethernet ports through the TouchBistro website. However, TouchBistro does not offer payment processing, meaning your credit card terminal is something you’ll need to get through a third-party vendor. The price of your hardware is quote-based, and depends on how many different iPads you plan to run the TouchBistro POS software on.

TouchBistro POS Software

Similar to Square for Restaurants, TouchBistro is designed to run specifically on iOS devices. It is locally installed, meaning it can run directly from your device and does not require hosting. However, if you are operating more than one “terminal” (i.e. iPad), you must download TouchBistro’s Pro Server application, which allows all iPads running TouchBistro to synchronize with each other.

When you run the TouchBistro POS software, here’s what you can expect:

Order management

Floor plan management

Customer relationship management

Menu management

Employee management

Inventory management

Reporting and analytics

Self-ordering kiosk tool

Kitchen display system

Customer facing display

Loyalty program (starting at $49 per month)

TouchBistro offers four different pricing plans ranging in cost from $69 per month all the way up to $539 per month. Again, the pricing plan you need depends on how many different terminals you plan to run the software on. One of the nice things about TouchBistro is they allow you to include the cost of your hardware in your monthly fees. So instead of shelling out all that money at once for your hardware, you can pay it off month-to-month as you use your system.

The Best POS System for New Restaurants

Perhaps you’re just opening your business, and you’re looking for the right restaurant POS system for your initial needs.

For the best POS system for restaurants that are new on the scene, we suggest aiming for the most simple, straightforward option before scaling up from there. As a result, new restaurants will likely be best-served by the Square for Restaurants POS system, as this option is relatively cheap and provides you with everything you need on an easy to use platform.

The only real barrier of entry for this restaurant POS system is the necessary smart device. But you can always order your iPad directly from Square when you order the rest of your POS hardware.

After that, you can scale up to more powerful—and costlier—restaurant POS systems as your business’s needs evolve.

The Best Quick-Service Restaurant POS System

Alternatively, you might be running a well-established quick-service restaurant and searching for a restaurant POS system to address its particular needs.

In which case, you’ll likely need a powerful countertop POS system to cater to the large number of tickets you have. As such, we suggest you look to the Clover Station for your quick-service restaurant POS needs.

Although the Clover Station bundle comes with higher payment processing fees than Square for Restaurants, it has more versatility when it comes to hardware, and its Lite plan is ideal for quick-service establishments. Plus, month-to-month payments are much less expensive.

The Best Full-Service Restaurant POS System

Finally, if you’re looking for the best POS system for restaurants that offer full service, then you’ll likely want to come up with a one-two punch of power and mobility.

Our advice for full-service restaurants is to go with TouchBistro, as they provide the most variety in terms of hardware, and their software is versatile enough to handle any situation that may arise.

Finding the Best Restaurant POS System for Your Business

As you can tell, there are lots of capable restaurant POS system option on the market. Depending on your restaurant’s needs, you shouldn’t have trouble finding something that is both affordable and capable. The only question left to answer is, what does your restaurant need?

That is up to you to decide. And now that you’re equipped with all of the necessary info on the best restaurant POS systems, you’re all set to make this decision for yourself. After all, no one knows what your business needs from its POS system better than you do.

The post Restaurant POS Systems: The Best Options for Your Business appeared first on Fundera Ledger.

originally posted at https://www.fundera.com/blog/restaurant-pos-system

0 notes

Text

13 Online Banking Security Tips You Should Know About

Banking online is fast and convenient. This is what makes it so popular with people all over the world. However, it also exposes you to more threats. We want you and your money to stay safe, so we’ve put together a list of the best online banking security tips available.

Don’t Use a Public WiFi Network

Never access your banking information over an unsecured or public WiFi network. Since they’re usually not encrypted, it’s easy for hackers to get in and steal information. Instead, save your banking and account checking for when you’re at home. Your own network is private, and there’s a low risk of getting your information stolen this way.

Enable Two-Factor Authentication

Two-factor authentication is another security layer that you can add to all of your banking information, including the best checking accounts. Every time someone tries to log into your account, your bank will automatically send you an email or text alert. This alert will contain a unique code that you have to put in before you can log into your banking account.

Don’t Click an Email to Log into Your Account

Your bank may periodically send you emails about your account. However, you don’t want to click them and allow them to route you to your bank. Instead, go straight to your bank’s website and log in through there. You can access your paperless inbox and look at any emails you receive. This will reduce the risk of becoming a victim of a phishing scam.

Create and Use a Strong Password

Although you may be tempted to create one password and use it for everything, you want to have a unique and strong password for your banking information. You want to use uppercase letters, lowercase letters, numbers, and symbols. Additionally, you should plan on changing this password regularly.

See Also: 6 Foolproof Tips for Creating Powerful Passwords

Monitor Your Accounts

Check your accounts at least once a week. Look at all of your transactions and make sure that they’re legitimate. You want to have authorized them all. Make sure that you report anything suspicious to your bank. If you routinely shop online from your bank account, double check it after every purchase. This will help to ensure that everything is correct.

Use the Mobile App for Your Bank

Did you know that your bank encrypts their mobile app? It’s less open to attacks than the bank’s website, and it’s most likely more secure. Download your bank’s specific app and use this to check your accounts. Just remember to download any updates that come through because this will help to ensure that the security certificates are up to date.

Lock Your Devices

Even though your banking information is usually secure, always lock your devices. This includes your phone, laptop, tablet, or anything you use to access your banking information. You can use a password, fingerprint, or PIN to do this. Remember, the more difficult it is for someone to access your information, the less likely it is that someone will be able to steal it.

Switch Bluetooth Off When It’s Not in Use

One of the newer ways thieves are gaining access to information is via Bluetooth. They simply hack into your device through your Bluetooth connection and take control of certain functions. You can avoid this by switching your Bluetooth off every time you’re not using it.

Disable Automatic Login

Even if you’re the only one to access a device, you want to disable the automatic login. Also, disable autofill and remembering passwords. You never know when someone will get into your device will ill intent. Clear all of your saved information and disable autofill for your bank. This will make it more difficult for someone to get into your account.

Check for a Secure Connection

Always use a secure connection when you access your sensitive information. This includes looking for a little green padlock in the address bar. It should also show “https” instead of just “http.” This will help ensure that you’re on and using an encrypted connection. They have more layers of security than a standard connection.

Keep Your Devices Current

Yes, downloading constant updates can be time-consuming. But, you’re usually downloading the newest security features and patches each time you update your device. Instead of putting it off for weeks, update it straight away. Do this consistently to keep all of your devices secure.

See Also: 8 Easy Steps To Your Browser Security And Privacy

Always Log Out

Most banks have an automatic feature that logs you out after a set period of inactivity. However, you don’t want to rely on this. Instead, get in the habit of logging out each time you finish with your banking session. This will prevent someone from simply sitting down at your device and moving things in your account.

Use Anti-Virus Software

Having a licensed anti-virus software installed and running on your computer can help prevent malware or spyware infections. Set your software to routinely scan for updates. However, you do want to manually update them because you’ll be able to double check that all of the updates are legitimate.

Bottom Line

These 13 online banking security tips can help to ensure that your sensitive information stays private and secure. We advise you to take all of them and incorporate them into your everyday routine to help ensure that your hard-earned money stays safe.

The post 13 Online Banking Security Tips You Should Know About appeared first on Dumb Little Man.

from Dumb Little Man https://www.dumblittleman.com/online-banking-security-tips/

0 notes

Link

Once the rainy season stops, the following 4 months until January end will be your time to unwind. Many of us work so hard throughout the year that we find it hard to spend quality time ourselves or with family. What better way to do just that by packing your backpacks and heading-off to a cool destination abroad?

Travelling overseas is easier said than done. Firstly, it is tough to save the funds required to make such a trip. With regular monthly expenses such as utilities, rent, food and personal grooming taking up majority of your income, it gets difficult to save.

For such salaried professionals who need that extra boost of travel funds, a personal finance loan can be the best solution. Loan Singh’s digital lending platform provides personal loans to salaried individuals, even to the first timers who have never availed credit or loan before. Before we look at some great travel tips, let’s look at why a travel personal loan online is a great option.

Click here, to understand all about a personal loan in India

Personal Finance Loan

A personal finance loan is a type of unsecured credit that is generally availed to meet urgent financial needs. Being an unsecured credit, a personal finance loan requires no collateral or mortgage. With no collateral required, it gets difficult for Loan Singh to find out about an applicant’s repayment capacity and his/her past defaults. Loan Singh does not meet the borrower face-to-face, like banks do.

Everything from loan application to amount disbursement is done online. To mitigate the prospect of risk, Loan Singh assesses the applicant’s creditworthiness using their credit report. If you have a good credit history, you have a better chance of avoiding a loan rejection. Your credit score indicates how prompt you have been with your past credit obligations.

Availing a personal loan via Loan Singh is advantageous because there is minimal paperwork involved. So, no time is wasted in cross verifying any paperwork. The personal loan amount, along with interest rate, is calculated for the entire tenure, and in case you want to clear-off the loan before tenure completion, no prepayment penalty is levied by Loan Singh.

The purposes for availing a personal loan are aplenty. Some of them are

Overseas travel

Celebration loan

Gadget loan

Medical expenses

Used vehicle

Online shopping

Job relocation

Home improvement

Click here, to learn more about a gadget personal loan in India

Personal Finance Loan Eligibility

Just like in banks, there is a set of eligibility criteria at Loan Singh as well; albeit a shorter one.

You must be an Indian citizen and earning a minimum of Rs. 20,000 per month. You must a positive credit history to help Loan Singh gauge your repayment capabilities. If you have no credit history, then you must be salaried for at least 6 months. Loan Singh does look at the credit score of applicants.

Click here, to find out all about credit card refinancing personal loan in India

Overseas Travel Tips from Loan Singh

The biggest challenge before going out on an overseas travel trip is having the finances available. With Loan Singh taking care of that, you can then focus on the planning, preparation and the actual travelling.

You need to start with a PLAN. As simple as it may sound, you must remember that planning is absolutely essential in any overseas trip. Some pointers to remember are

Focus on the number of days you would be travelling. Would it be for a few days, a month or several months, knowing when you will be departing and getting back is important to plan your travel booking, packing, etc.

Choose an awesome destination. Not because you are going to be spending your personal loan money on it, but simply because a great destination can help you create everlasting memories with family and new friends. Keep options.

Discuss the destination ideas with family members, colleagues and friends. Let them add in some input in the form of past experiences, suggestions or things to remember. These might help you to zero-in on your destination.

No MONEY, No Honey! Well, in your case, No Money would lead to No Travel. You need to pay for everything – flight tickets, hotel accommodation, commuting, food, water and recreation. Loan Singh’s overseas travel loan takes care of that. It is always wise to ensure you have some cash in-hand or balance in your debit card to spend on your travels.

When you are done with the planning, applied for an overseas travel loan from Loan Singh (and applied for the travel leave from your boss), it’s time to take care of FLIGHT and STAY. These pointers will help:

Find the cheapest flights available. It is advised you go to a well-received travel search engine.

Try to book the return flight, as well. This will help you save more

Choose Tuesdays as the day to book flights as most times Airline companies announce deals on Monday evenings. Thank us later!

Read reviews about hotels or serviced apartments from reputed sites to make the choice.

Next, we look at PACKING. Packing is crucial because here your skills as a handyman (or handywoman) come to the fore. The less your baggage is, the better. Keep in mind these pointers:

Make sure you have a small hand held travel kit which includes a first aid material, chargers, toiletries, medicines, passport, tickets, etc.

Pack maps, travel guide to the city/place you are visiting.

Try to pack clothes that are wrinkle free and don’t pack clothes you have never worn before.

Scan and save a copy of your passport, tickets and other important documents on your email ID as a Draft.

Try to use travel vacuum bags which will help you save more space while traveling.

If you’re more comfortable with cash, remember to convert some funds to the currency of the place you are going to. Else, get yourself a travel card.

The actual JOURNEY is your last leg. It also includes the time you spend when you depart from your home to the airport and the way back from the airport, post the overseas trip. Some pointers to remember are:

Learn how to pronounce important words in the native language of the place you are visiting. Words such as “Please”, “Thank You”, “Yes”, “No”, “Hello”, “Goodbye” & “Do you understand English?”

Convert some money into the currency of the place you are visiting.

Download important apps for your phone which can help you with language translation, GPS, commute and currency conversion.

Try to be relaxed and calm if you suffer from aerophobia. And if you have motion sickness, mention it to the flight stewards so that they can help you out, if need be.

Click here, to understand everything about credit score in India

We hope through this article you can plan your first overseas travel trip with a personal loan from Loan Singh. Overseas travel is great fun if done properly and without stress.

What are some of your first time experiences while travelling abroad? If you have more tips to share, put them in the comments section below. Have a safe trip!

About Loan Singh

Loan Singh is a digital lending platform that prides in providing online personal loan or unsecured personal loan to salaried individuals. You can apply for quick funds as an easy emergency loan which is not a bank loan. We provide a loan with the best personal loan interest rates. The instant funds, or instant loans, are loans between Rs.50,000 and Rs.5,00,000 taken for purposes such as:

Home improvement loan/Home renovation loan

Marriage loan/ Wedding loan

Medical loan

Used vehicle loan

Consumer durable loan

Vacation loan

Debt consolidation loan

Credit card refinancing loan

Job relocation loan

Travel loan

Festival loan

Shopping loan

Lifestyle loan

You can calculate your easy EMIs using our personal loan EMI calculator. We accept bank statement, PAN, and Aadhaar for quick loan approval. A bad credit score or credit report errors can lead to personal loan rejection. The ‘Loan Singh Finance Blog’ is one of the best finance blogs in India. Loan Singh is a product of Seynse Technologies Pvt Ltd and is a partner to the Airtel Online Store.

Loan Singh’s Online Presence

Loan Singh is not an anonymous digital platform. We are present on almost all leading social media platforms. All you need to do is look for us. You can find us on Loan Singh Facebook, Loan Singh Twitter,Loan Singh YouTube, Loan Singh Pinterest, Loan Singh Instagram, Loan Singh LinkedIn, Loan Singh Blogarama, Loan Singh Google Review, Loan Singh Medium, Loan Singh Reddit, Loan Singh Tumblr, Loan Singh Scoop It, Loan Singh Storify, Loan Singh Digg and Loan Singh Blogger.

Share this:

Click to share on Facebook (Opens in new window)

Click to share on Twitter (Opens in new window)

Click to share on Pinterest (Opens in new window)

Click to share on Google+ (Opens in new window)

Click to share on LinkedIn (Opens in new window)

Click to share on WhatsApp (Opens in new window)

Posted in

Editorial

Tagged

20000 salary loan

,

aadhaar based loan

,

aadhaar benefits india

,

Aadhaar loan

,

Advantages of personal loan

,

airtel emi

,

Airtel Online Store

,

airtel.in/onlinestore

,

apply for a consumer durable loan online

,

apply for a marriage loan online

,

apply for a quick online personal loan online

,

apply for travel loan online

,

applying for a wedding loan online

,

bank statement loan

,

best aadhaar loan website

,

best blogs

,

best consumer durable loan

,

best electronics loan india

,

best finance blog India

,

best home appliance loan india

,

best marriage loan in india

,

best personal loan blog india

,

best personal loan india is

,

bitcoin meaning

,

blog for personal growth

,

credit bureau meaning

,

credit report meaning

,

credit score meaning

,

cryptocurrency meaning

,

fast loan first time credit

,

fastest loan service

,

first time applicant loan

,

first time loan boost score

,

gadget personal loan

,

happy independence day

,

honeymoon loan in india

,

how safe is aadhaar

,

how to apply for a personal loan for electronics

,

how to apply for a wedding loan with low interest rates

,

how to apply for an electronics personal loan

,

Independence day loan

,

instant personal loan

,

internet banking benefits

,

internet banking personal loan

,

is aadhaar safe

,

is aadhaar safe online

,

is loan singh real

,

kyc loan

,

kyc meaning

,

Loan Singh & Airtel – Eligibility to buy an iPhone on Airtel Online Store

,

loansingh review

,

loansingh.com

,

medical personal loan

,

net banking benefits

,

netbankng personal loan

,

new to credit personal loan

,

No More Document Hassle Personal Loan in Just 2 Documents

,

PAN based loan

,

PAN loan

,

personal finance loan

,

personal loan emi calculator

,

personal loan emi eligibility

,

personal loan faq

,

personal loan features

,

purpose of internet banking in loan

,

purpose of PAN in loan

,

quick and easy marriage loan

,

quick and easy travel loan

,

quick and easy wedding loan

,

role of know your customer

,

seynse.com

,

top finance blogs in India

,

travel blog

,

travel loan

,

what Happens When You Face A Personal Loan Default

,

which is best credit card refinancing personal loan

,

which is best finance blog in india

,

which is best loan provider in india

,

which is best loan website india

,

which is best marriage personal loan india

,

which is best online shopping personal loan provider india

,

which is best personal loan website india

,

which is best smartphone personal loan provider india

,

which is the best consumer durable loan india

,

which is the best consumer durable loan interest rates

,

which is the best electronics loan website

,

which is the best personal loan for electronics

,

who gives fast personal loan in bangalore

,

who gives fast personal loan in chennai

,

who gives fast personal loan in goa

,

who gives fast personal loan in kolkata

,

who gives fast personal loan in mumbai

,

who gives fast personal loan in pune

,

Who is Loan Singh?

,

WHY LOAN SINGH IS THE BEST SOURCE FOR PERSONAL LOAN IN INDIA

,

www.loansingh.com

Edit

0 notes

Text

Does Googlebot Support HTTP/2? Challenging Google's Indexing Claims – An Experiment

Posted by goralewicz

I was recently challenged with a question from a client, Robert, who runs a small PR firm and needed to optimize a client’s website. His question inspired me to run a small experiment in HTTP protocols. So what was Robert’s question? He asked...

Can Googlebot crawl using HTTP/2 protocols?

You may be asking yourself, why should I care about Robert and his HTTP protocols?

As a refresher, HTTP protocols are the basic set of standards allowing the World Wide Web to exchange information. They are the reason a web browser can display data stored on another server. The first was initiated back in 1989, which means, just like everything else, HTTP protocols are getting outdated. HTTP/2 is one of the latest versions of HTTP protocol to be created to replace these aging versions.

So, back to our question: why do you, as an SEO, care to know more about HTTP protocols? The short answer is that none of your SEO efforts matter or can even be done without a basic understanding of HTTP protocol. Robert knew that if his site wasn’t indexing correctly, his client would miss out on valuable web traffic from searches.

The hype around HTTP/2

HTTP/1.1 is a 17-year-old protocol (HTTP 1.0 is 21 years old). Both HTTP 1.0 and 1.1 have limitations, mostly related to performance. When HTTP/1.1 was getting too slow and out of date, Google introduced SPDY in 2009, which was the basis for HTTP/2. Side note: Starting from Chrome 53, Google decided to stop supporting SPDY in favor of HTTP/2.

HTTP/2 was a long-awaited protocol. Its main goal is to improve a website’s performance. It's currently used by 17% of websites (as of September 2017). Adoption rate is growing rapidly, as only 10% of websites were using HTTP/2 in January 2017. You can see the adoption rate charts here. HTTP/2 is getting more and more popular, and is widely supported by modern browsers (like Chrome or Firefox) and web servers (including Apache, Nginx, and IIS).

Its key advantages are:

Multiplexing: The ability to send multiple requests through a single TCP connection.

Server push: When a client requires some resource (let's say, an HTML document), a server can push CSS and JS files to a client cache. It reduces network latency and round-trips.

One connection per origin: With HTTP/2, only one connection is needed to load the website.

Stream prioritization: Requests (streams) are assigned a priority from 1 to 256 to deliver higher-priority resources faster.

Binary framing layer: HTTP/2 is easier to parse (for both the server and user).

Header compression: This feature reduces overhead from plain text in HTTP/1.1 and improves performance.

For more information, I highly recommend reading “Introduction to HTTP/2” by Surma and Ilya Grigorik.

All these benefits suggest pushing for HTTP/2 support as soon as possible. However, my experience with technical SEO has taught me to double-check and experiment with solutions that might affect our SEO efforts.

So the question is: Does Googlebot support HTTP/2?

Google's promises

HTTP/2 represents a promised land, the technical SEO oasis everyone was searching for. By now, many websites have already added HTTP/2 support, and developers don’t want to optimize for HTTP/1.1 anymore. Before I could answer Robert’s question, I needed to know whether or not Googlebot supported HTTP/2-only crawling.

I was not alone in my query. This is a topic which comes up often on Twitter, Google Hangouts, and other such forums. And like Robert, I had clients pressing me for answers. The experiment needed to happen. Below I'll lay out exactly how we arrived at our answer, but here’s the spoiler: it doesn't. Google doesn’t crawl using the HTTP/2 protocol. If your website uses HTTP/2, you need to make sure you continue to optimize the HTTP/1.1 version for crawling purposes.

The question

It all started with a Google Hangouts in November 2015.

youtube

When asked about HTTP/2 support, John Mueller mentioned that HTTP/2-only crawling should be ready by early 2016, and he also mentioned that HTTP/2 would make it easier for Googlebot to crawl pages by bundling requests (images, JS, and CSS could be downloaded with a single bundled request).

"At the moment, Google doesn’t support HTTP/2-only crawling (...) We are working on that, I suspect it will be ready by the end of this year (2015) or early next year (2016) (...) One of the big advantages of HTTP/2 is that you can bundle requests, so if you are looking at a page and it has a bunch of embedded images, CSS, JavaScript files, theoretically you can make one request for all of those files and get everything together. So that would make it a little bit easier to crawl pages while we are rendering them for example."

Soon after, Twitter user Kai Spriestersbach also asked about HTTP/2 support:

His clients started dropping HTTP/1.1 connections optimization, just like most developers deploying HTTP/2, which was at the time supported by all major browsers.

After a few quiet months, Google Webmasters reignited the conversation, tweeting that Google won’t hold you back if you're setting up for HTTP/2. At this time, however, we still had no definitive word on HTTP/2-only crawling. Just because it won't hold you back doesn't mean it can handle it — which is why I decided to test the hypothesis.

The experiment

For months as I was following this online debate, I still received questions from our clients who no longer wanted want to spend money on HTTP/1.1 optimization. Thus, I decided to create a very simple (and bold) experiment.

I decided to disable HTTP/1.1 on my own website (https://goralewicz.com) and make it HTTP/2 only. I disabled HTTP/1.1 from March 7th until March 13th.

If you’re going to get bad news, at the very least it should come quickly. I didn’t have to wait long to see if my experiment “took.” Very shortly after disabling HTTP/1.1, I couldn’t fetch and render my website in Google Search Console; I was getting an error every time.

My website is fairly small, but I could clearly see that the crawling stats decreased after disabling HTTP/1.1. Google was no longer visiting my site.

While I could have kept going, I stopped the experiment after my website was partially de-indexed due to “Access Denied” errors.

The results

I didn’t need any more information; the proof was right there. Googlebot wasn’t supporting HTTP/2-only crawling. Should you choose to duplicate this at home with our own site, you’ll be happy to know that my site recovered very quickly.

I finally had Robert’s answer, but felt others may benefit from it as well. A few weeks after finishing my experiment, I decided to ask John about HTTP/2 crawling on Twitter and see what he had to say.

(I love that he responds.)

Knowing the results of my experiment, I have to agree with John: disabling HTTP/1 was a bad idea. However, I was seeing other developers discontinuing optimization for HTTP/1, which is why I wanted to test HTTP/2 on its own.

For those looking to run their own experiment, there are two ways of negotiating a HTTP/2 connection:

1. Over HTTP (unsecure) – Make an HTTP/1.1 request that includes an Upgrade header. This seems to be the method to which John Mueller was referring. However, it doesn't apply to my website (because it’s served via HTTPS). What is more, this is an old-fashioned way of negotiating, not supported by modern browsers. Below is a screenshot from Caniuse.com:

2. Over HTTPS (secure) – Connection is negotiated via the ALPN protocol (HTTP/1.1 is not involved in this process). This method is preferred and widely supported by modern browsers and servers.

A recent announcement: The saga continuesGooglebot doesn’t make HTTP/2 requests

Fortunately, Ilya Grigorik, a web performance engineer at Google, let everyone peek behind the curtains at how Googlebot is crawling websites and the technology behind it:

If that wasn’t enough, Googlebot doesn't support the WebSocket protocol. That means your server can’t send resources to Googlebot before they are requested. Supporting it wouldn't reduce network latency and round-trips; it would simply slow everything down. Modern browsers offer many ways of loading content, including WebRTC, WebSockets, loading local content from drive, etc. However, Googlebot supports only HTTP/FTP, with or without Transport Layer Security (TLS).

Googlebot supports SPDY

During my research and after John Mueller’s feedback, I decided to consult an HTTP/2 expert. I contacted Peter Nikolow of Mobilio, and asked him to see if there were anything we could do to find the final answer regarding Googlebot’s HTTP/2 support. Not only did he provide us with help, Peter even created an experiment for us to use. Its results are pretty straightforward: Googlebot does support the SPDY protocol and Next Protocol Navigation (NPN). And thus, it can’t support HTTP/2.

Below is Peter’s response:

I performed an experiment that shows Googlebot uses SPDY protocol. Because it supports SPDY + NPN, it cannot support HTTP/2. There are many cons to continued support of SPDY:

This protocol is vulnerable

Google Chrome no longer supports SPDY in favor of HTTP/2

Servers have been neglecting to support SPDY. Let’s examine the NGINX example: from version 1.95, they no longer support SPDY.

Apache doesn't support SPDY out of the box. You need to install mod_spdy, which is provided by Google.

To examine Googlebot and the protocols it uses, I took advantage of s_server, a tool that can debug TLS connections. I used Google Search Console Fetch and Render to send Googlebot to my website.

Here's a screenshot from this tool showing that Googlebot is using Next Protocol Navigation (and therefore SPDY):

I'll briefly explain how you can perform your own test. The first thing you should know is that you can’t use scripting languages (like PHP or Python) for debugging TLS handshakes. The reason for that is simple: these languages see HTTP-level data only. Instead, you should use special tools for debugging TLS handshakes, such as s_server.

Type in the console:

sudo openssl s_server -key key.pem -cert cert.pem -accept 443 -WWW -tlsextdebug -state -msg sudo openssl s_server -key key.pem -cert cert.pem -accept 443 -www -tlsextdebug -state -msg

Please note the slight (but significant) difference between the “-WWW” and “-www” options in these commands. You can find more about their purpose in the s_server documentation.

Next, invite Googlebot to visit your site by entering the URL in Google Search Console Fetch and Render or in the Google mobile tester.

As I wrote above, there is no logical reason why Googlebot supports SPDY. This protocol is vulnerable; no modern browser supports it. Additionally, servers (including NGINX) neglect to support it. It’s just a matter of time until Googlebot will be able to crawl using HTTP/2. Just implement HTTP 1.1 + HTTP/2 support on your own server (your users will notice due to faster loading) and wait until Google is able to send requests using HTTP/2.

Summary

In November 2015, John Mueller said he expected Googlebot to crawl websites by sending HTTP/2 requests starting in early 2016. We don’t know why, as of October 2017, that hasn't happened yet.

What we do know is that Googlebot doesn't support HTTP/2. It still crawls by sending HTTP/ 1.1 requests. Both this experiment and the “Rendering on Google Search” page confirm it. (If you’d like to know more about the technology behind Googlebot, then you should check out what they recently shared.)

For now, it seems we have to accept the status quo. We recommended that Robert (and you readers as well) enable HTTP/2 on your websites for better performance, but continue optimizing for HTTP/ 1.1. Your visitors will notice and thank you.

Sign up for The Moz Top 10, a semimonthly mailer updating you on the top ten hottest pieces of SEO news, tips, and rad links uncovered by the Moz team. Think of it as your exclusive digest of stuff you don't have time to hunt down but want to read!

http://ift.tt/2yqKuWp

0 notes

Text

Does Googlebot Support HTTP/2? Challenging Google's Indexing Claims – An Experiment

Posted by goralewicz

I was recently challenged with a question from a client, Robert, who runs a small PR firm and needed to optimize a client’s website. His question inspired me to run a small experiment in HTTP protocols. So what was Robert’s question? He asked...

Can Googlebot crawl using HTTP/2 protocols?

You may be asking yourself, why should I care about Robert and his HTTP protocols?

As a refresher, HTTP protocols are the basic set of standards allowing the World Wide Web to exchange information. They are the reason a web browser can display data stored on another server. The first was initiated back in 1989, which means, just like everything else, HTTP protocols are getting outdated. HTTP/2 is one of the latest versions of HTTP protocol to be created to replace these aging versions.

So, back to our question: why do you, as an SEO, care to know more about HTTP protocols? The short answer is that none of your SEO efforts matter or can even be done without a basic understanding of HTTP protocol. Robert knew that if his site wasn’t indexing correctly, his client would miss out on valuable web traffic from searches.

The hype around HTTP/2

HTTP/1.1 is a 17-year-old protocol (HTTP 1.0 is 21 years old). Both HTTP 1.0 and 1.1 have limitations, mostly related to performance. When HTTP/1.1 was getting too slow and out of date, Google introduced SPDY in 2009, which was the basis for HTTP/2. Side note: Starting from Chrome 53, Google decided to stop supporting SPDY in favor of HTTP/2.

HTTP/2 was a long-awaited protocol. Its main goal is to improve a website’s performance. It's currently used by 17% of websites (as of September 2017). Adoption rate is growing rapidly, as only 10% of websites were using HTTP/2 in January 2017. You can see the adoption rate charts here. HTTP/2 is getting more and more popular, and is widely supported by modern browsers (like Chrome or Firefox) and web servers (including Apache, Nginx, and IIS).

Its key advantages are:

Multiplexing: The ability to send multiple requests through a single TCP connection.

Server push: When a client requires some resource (let's say, an HTML document), a server can push CSS and JS files to a client cache. It reduces network latency and round-trips.

One connection per origin: With HTTP/2, only one connection is needed to load the website.

Stream prioritization: Requests (streams) are assigned a priority from 1 to 256 to deliver higher-priority resources faster.

Binary framing layer: HTTP/2 is easier to parse (for both the server and user).

Header compression: This feature reduces overhead from plain text in HTTP/1.1 and improves performance.

For more information, I highly recommend reading “Introduction to HTTP/2” by Surma and Ilya Grigorik.

All these benefits suggest pushing for HTTP/2 support as soon as possible. However, my experience with technical SEO has taught me to double-check and experiment with solutions that might affect our SEO efforts.

So the question is: Does Googlebot support HTTP/2?

Google's promises

HTTP/2 represents a promised land, the technical SEO oasis everyone was searching for. By now, many websites have already added HTTP/2 support, and developers don’t want to optimize for HTTP/1.1 anymore. Before I could answer Robert’s question, I needed to know whether or not Googlebot supported HTTP/2-only crawling.

I was not alone in my query. This is a topic which comes up often on Twitter, Google Hangouts, and other such forums. And like Robert, I had clients pressing me for answers. The experiment needed to happen. Below I'll lay out exactly how we arrived at our answer, but here’s the spoiler: it doesn't. Google doesn’t crawl using the HTTP/2 protocol. If your website uses HTTP/2, you need to make sure you continue to optimize the HTTP/1.1 version for crawling purposes.

The question

It all started with a Google Hangouts in November 2015.

youtube

When asked about HTTP/2 support, John Mueller mentioned that HTTP/2-only crawling should be ready by early 2016, and he also mentioned that HTTP/2 would make it easier for Googlebot to crawl pages by bundling requests (images, JS, and CSS could be downloaded with a single bundled request).

"At the moment, Google doesn’t support HTTP/2-only crawling (...) We are working on that, I suspect it will be ready by the end of this year (2015) or early next year (2016) (...) One of the big advantages of HTTP/2 is that you can bundle requests, so if you are looking at a page and it has a bunch of embedded images, CSS, JavaScript files, theoretically you can make one request for all of those files and get everything together. So that would make it a little bit easier to crawl pages while we are rendering them for example."

Soon after, Twitter user Kai Spriestersbach also asked about HTTP/2 support:

His clients started dropping HTTP/1.1 connections optimization, just like most developers deploying HTTP/2, which was at the time supported by all major browsers.

After a few quiet months, Google Webmasters reignited the conversation, tweeting that Google won’t hold you back if you're setting up for HTTP/2. At this time, however, we still had no definitive word on HTTP/2-only crawling. Just because it won't hold you back doesn't mean it can handle it — which is why I decided to test the hypothesis.

The experiment