#pascal's wager triangle

Text

In contrast to Pascal's Wager Triangle, Pascal's Triangle Wager argues that maybe God wants you to draw a triangle of numbers where each one is the sum of the two numbers above it, so you probably should, just in case.

Pascal's Wager Triangle [Explained]

Transcript

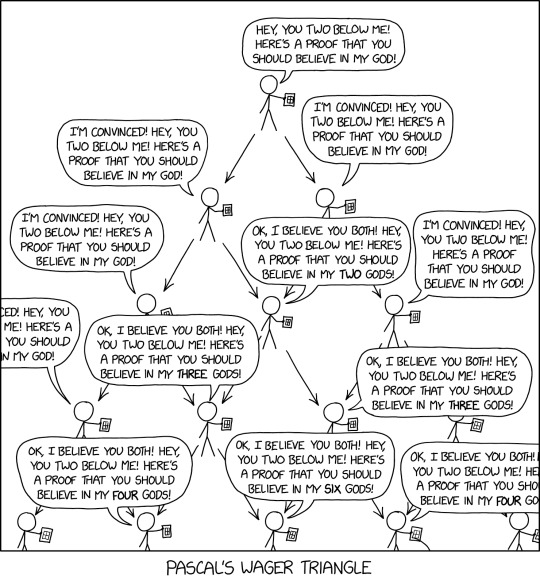

[Cueballs, each holding some document, are shown in a triangular arrangement, with arrows pointing from upper to lower Cueballs:]
C1
C2 C3
C4 C5 C6
C7 C8 C9 C10
C11 C12 C13 C14 C15

[The pattern may continue downwards, off-comic, as the lowest row is only half in frame. Some of the following speech bubbles near the edges are not entirely in view, as they extend sideways off-panel, but they are given here in full.]
C1: Hey, you two below me! Here's a proof that you should believe in my god!
C2 & C3: I'm convinced! Hey, you two below me! Here's a proof that you should believe in my god!
C4 & C6: I'm convinced! Hey, you two below me! Here's a proof that you should believe in my god!
C5: Ok, I believe you both! Hey, you two below me! Here's a proof that you should believe in my two gods!
C7: I'm convinced! Hey, you two below me! Here's a proof that you should believe in my god!
C8 & C9: Ok, I believe you both! Hey, you two below me! Here's a proof that you should believe in my three gods!
C12 & C14: Ok, I believe you both! Hey, you two below me! Here's a proof that you should believe in my four gods!
C13: Ok, I believe you both! Hey, you two below me! Here's a proof that you should believe in my six gods!
[The speech bubbles of C10, C11 and C15 are not seen at all, but would all be a "my (singular) god" quote.]

[Caption below the panel:] Pascal's Wager Triangle
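The god counts in the transcript follow the rule from the title text: each Cueball believes in as many gods as the two figures above them combined (the comic treats every upstream god as a distinct one). A minimal Python sketch, assuming the C1 to C15 numbering runs row by row as laid out above, rebuilds those counts from Pascal's triangle; `pascal_rows` and the `gods` dictionary are names made up for this illustration.

```python
def pascal_rows(n: int) -> list[list[int]]:
    """Return the first n rows of Pascal's triangle."""
    rows = [[1]]
    for _ in range(n - 1):
        prev = rows[-1]
        # Each interior entry is the sum of the two entries above it;
        # the edges stay 1 because an edge figure has only one figure above it.
        rows.append([1] + [a + b for a, b in zip(prev, prev[1:])] + [1])
    return rows[:n]


# Hypothetical labeling: number the figures C1..C15 row by row.
gods = {}
label = 1
for row in pascal_rows(5):
    for count in row:
        gods[f"C{label}"] = count
        label += 1

for name in ("C5", "C8", "C9", "C12", "C13", "C14"):
    print(name, gods[name])
```

Edge figures (C2, C3, C4, C6, C7, C10, C11, C15) each hear from only one figure above them, which is why they stay at a single god.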

378 notes


Text

08.21.24

a triangle that isn’t actually a triangle; a butterfly knot; real need that coalesces into contrived want; contriving that need to be in ways that keep breathing; an offer to decompartmentalize;

a refusal to share what i can’t accept of myself, which keeps me from finding acceptance outside of myself, which might help me accept what i can’t accept of myself; is this like pascal’s wager?

that you ask me, which shows me that i can ask too; and shows me i don’t ask because i’m scared, still; that what therapists do how communities ought to be able to hold, if there were more to bear the weight; to be a medium not the arbitrator

0 notes

Text

today’s confession is that when I first started hearing about Pedro Pascal I assumed he was like. the guy who invented Pascal’s Triangle and Pascal’s Wager. and it was only a few months ago that I learned he is in fact an actor and the nephew of former Chilean president and CIA victim Salvador Allende, and not a 17th century French mathematician and philosopher.

1 note


Text

Uncertainty Wednesday: Pascal’s Wager and Impeachment

As part of Uncertainty Wednesday, I have been enjoying writing about current events such as the Notre Dame fire and the Boeing crashes. Today’s edition is about impeachment following the Mueller report. One of the great early thinkers about uncertainty was Blaise Pascal, who made many important contributions to mathematics (among them Pascal’s triangle, which is a staple of learning math).

In a posthumously published work, Pascal sets out a simple argument for believing in God that has become known as Pascal’s wager: believing in God imposes a finite cost during one’s lifetime but, with some small probability, offers the infinite benefit of an eternal heavenly afterlife (he was thinking of a Christian God, but the argument applies to many other religions with a similar concept). Conversely, if you do not believe in God, you have a finite gain versus a small probability of an infinite loss from an eternity in hell. With this setup, the rational choice is to believe in God.

Pascal’s wager is an important contribution to thinking about living under uncertainty because it separates out the probability of states of the world (God exists, God does not exist) from the payoffs of different decisions when those states are realized. In doing so it makes clear that when payoffs can be very large in either direction (here infinite gain or infinite loss), then even small probabilities have a huge impact on decisions. Importantly no amount of prior research or forecasting can meaningfully resolve this analysis. Pascal’s wager can thus be seen as an early (maybe the earliest?) contribution to a line of thinking about the importance of extreme payoffs that today is being carried on prominently by Nassim Taleb.
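The wager’s structure, with probabilities kept separate from payoffs, fits in a few lines of expected-value arithmetic. This is a minimal sketch, not Pascal’s own formulation: the finite stake of 10 is a made-up number, and the infinite afterlife payoffs are modeled with IEEE floating-point infinity (`math.inf`).

```python
import math


def expected_value(p_god: float, payoff_if_god: float, payoff_if_not: float) -> float:
    """Expected payoff of a choice, given P(God exists) = p_god."""
    return p_god * payoff_if_god + (1 - p_god) * payoff_if_not


# Hypothetical finite lifetime stake; the afterlife stakes are infinite.
FINITE_STAKE = 10.0

for p in (0.5, 0.01, 1e-9):
    believe = expected_value(p, math.inf, -FINITE_STAKE)      # heaven vs. a finite cost
    disbelieve = expected_value(p, -math.inf, FINITE_STAKE)   # hell vs. a finite gain
    print(p, believe, disbelieve)
```

Shrinking the probability from 0.5 to one in a billion leaves the comparison unchanged, which is the point about small probabilities and extreme payoffs. The one case that breaks is a probability of exactly zero: `0.0 * math.inf` is `nan`, so the wager only works if God’s existence is given some nonzero probability.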

What does any of this have to do with the question of impeachment? Well, one analysis of whether the Democrats should move to impeach Trump in the wake of the Mueller report has centered on the impact on the 2020 presidential election. Some argue that impeaching now reduces the Democrats’ chances of winning in 2020. But consider Pascal’s wager: say you find some evidence that gives you a small reduction in the probability that God exists. That still does not change the decision, because the payoff is so extreme.

I believe a similar logic applies here if you consider the payoffs to be the long-term consequences for limits on the behavior of all future presidents. You can either impeach or not, and then the president either wins re-election or does not. If you impeach, there is a small cost if Trump is reelected but a massive gain if he is not. Conversely, if you do not impeach, there is a massive cost if Trump is reelected and a small gain if he is not. Why? Because impeachment is about congressional oversight of the behavior of a president. It is a crucial part of the checks and balances of the system of government in the United States.
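The four cases in the previous paragraph form a 2x2 payoff table. The post only says "small" and "massive", so the values 1 and 100 below are hypothetical; any choice where massive exceeds small gives the same result. With those payoffs, impeaching beats not impeaching in both outcomes (it dominates), so the expected-value comparison comes out the same whatever probability you assign to re-election.

```python
# Hypothetical magnitudes: the post only says "small" and "massive".
SMALL, MASSIVE = 1.0, 100.0

# payoffs[action][outcome], following the four cases described in the text.
payoffs = {
    "impeach": {"reelected": -SMALL, "not_reelected": MASSIVE},
    "do_not_impeach": {"reelected": -MASSIVE, "not_reelected": SMALL},
}


def expected(action: str, p_reelected: float) -> float:
    """Expected payoff of an action for a given probability of re-election."""
    row = payoffs[action]
    return p_reelected * row["reelected"] + (1 - p_reelected) * row["not_reelected"]


# Impeaching is better in each outcome, so it wins for every probability.
dominates = all(
    payoffs["impeach"][o] > payoffs["do_not_impeach"][o]
    for o in ("reelected", "not_reelected")
)
print("impeach dominates:", dominates)
for p in (0.2, 0.5, 0.8):
    print(p, expected("impeach", p), expected("do_not_impeach", p))
```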

The crucial part here is that this logic is independent of whether you think impeachment will succeed in removing the president. In fact, I am assuming that it will not succeed and that Trump will be able to run in 2020 in any case. Another way of phrasing the analysis is as follows: do not give up on a fundamental principle for tactical gain (here the fundamental principle being congressional oversight). The fundamental principle has huge (infinite) upside/downside, whereas tactical gains are always small by comparison.

1 note


Text

Why You Can’t Future-Proof Your Gaming PC

UE4 Elemental demo, powered by DX12

Talk to anyone about building a new PC, and the question of longevity is going to pop up sooner rather than later. Any time someone is dropping serious cash on a hardware upgrade, they’re going to have questions about how long it will last them, especially if they’ve been burned before. But how much additional value is it actually possible to squeeze out of the market by trying to future-proof a build, and does it actually benefit the end user?

Before I dive in on this, let me establish a few ground rules. I’m drawing a line between buying a little more hardware than you need today because you know you’ll have a use for it in the future and attempting to choose components for specific capabilities that you hope will become useful in the future. Let me give an example:

If you buy a GPU suitable for 4K gaming because you intend to upgrade your 1080p monitor to 4K within the next three months, that’s not future-proofing. If you bought a Pascal GPU over a Maxwell card in 2016 (or an AMD card over an NV GPU) specifically because you expected DirectX 12 to be the Next Big Thing and were attempting to position yourself as ideally as possible, that’s future-proofing. In the first case, you made a decision based on the already-known performance of the GPU at various resolutions and your own self-determined buying plans. In the second, you bet that an API with largely unknown performance characteristics would deliver a decisive advantage without having much evidence as to whether or not this would be true.

Note: While this article makes frequent reference to Nvidia GPUs, this is not to imply Nvidia is responsible for the failure of future-proofing as a strategy. GPUs have advanced more rapidly than CPUs over the past decade, with a much higher number of introduced features for improving graphics fidelity or game performance. Nvidia has been responsible for more of these introductions, in absolute terms, than AMD has.

Let’s whack some sacred cows:

DirectX 12

In the beginning, there were hopes that Maxwell would eventually perform well with DX12, or that Pascal would prove to use it effectively, or that games would adopt it overwhelmingly and quickly. None of these has come to pass. Pascal runs fine with DX12, but gains in the API are few and far between. AMD still sometimes picks up more than NV does, but DX12 hasn’t won wide enough adoption to change the overall landscape. If you bought into AMD hardware in 2013 because you thought the one-two punch of Mantle and console wins were going to open up an unbeatable Team Red advantage (and this line of argument was commonly expressed), it didn’t happen. If you bought Pascal because you thought it would be the architecture to show off DX12 (as opposed to Maxwell), that didn’t happen either.

Now, to be fair, Nvidia’s marketing didn’t push DX12 as a reason to buy the card. In fact, Nvidia ignored inquiries about its support for async compute to the maximum extent allowable by law. But DX12’s lackluster adoption to date and its limited performance-uplift scenarios (in many cases, low-latency APIs improve performance on weak CPUs more than they improve GPU performance) meant it was never a great reason to upgrade back in 2016.

DirectX 11

Remember when tessellation was the Next Big Thing that would transform gaming? Instead, it alternated between having a subtle impact on game visuals (with a mild performance hit) and serving as a way to make AMD GPUs look really bad by stuffing unnecessary tessellated detail into flat surfaces. If you bought an Nvidia GPU because you thought its enormous synthetic tessellation performance would yield real performance improvements in shipping titles that hadn’t been skewed by insane triangle counts, you didn’t get what you paid for.

DirectX 10

Everybody remember how awesome DX10 performance was? Anybody remember how awesome DX10 performance was?

Anybody?

If you snapped up a GTX 8xxx GPU because you thought it was going to deliver great DX10 performance, you ended up disappointed. The only reason we can’t say the same of AMD is because everyone who bought an HD 2000 series GPU ended up disappointed. When the first generation of DX10-capable GPUs often proved incapable of using the API in practice, consumers who’d tried to future-proof by buying into a generation of very fast DX9 cards that promised future compatibility instead found themselves with hardware that would never deliver acceptable frame rates in what had been a headline feature.

This is where the meme “Can it play Crysis?” came from. Image by CrysisWiki.

This list doesn’t just apply to APIs, though APIs are an easy example. If you bought into first-generation VR because you expected your hardware would carry you into a new era of amazing gaming, well, that hasn’t happened yet. By the time it does, if it does, you’ll have upgraded your VR headsets and the graphics cards that power them at least once. If you grabbed a new Nvidia GPU because you thought PhysX was going to be the wave of the future for gaming experiences, sure, you got some use out of the feature, just not nearly the experience the hype train promised way back when. I liked PhysX (still do), but it wound up being a mild improvement, not a major must-have.

This issue is not confined to GPUs. If you purchased an AMD APU because you thought HSA (Heterogeneous System Architecture) would introduce a new paradigm of combined CPU-GPU problem solving and processing, you’re still waiting five years later. Capabilities like Intel’s TSX (Transactional Synchronization Extensions) were billed as eventually offering performance improvements in commercial software, though this was expected to take time to evolve. Five years later, however, it’s as if the feature vanished into thin air. I can find just one recent mention of TSX being used in a consumer product: it turns out TSX is incredibly useful for boosting the performance of the PS3 emulator RPCS3. Great! But that’s not a reason for most people to buy a TSX-capable CPU. Intel also added support for rasterizer ordered views years ago, but if a game ever took advantage of them, I’m not aware of it (game optimizations for Intel GPUs aren’t exactly a huge topic of discussion, generally speaking).

You might think this is an artifact of the general slowdown in new architectural improvements, but if anything, the opposite is true. Back when Nvidia was launching a new GPU architecture every 12 months, the chances of squeezing support for a just-demonstrated feature into a brand-new GPU were even worse. GPU performance often nearly doubled every year, which made buying a GPU in 2003 for a game that wouldn’t ship until 2004 a really stupid move. In fact, Nvidia ran into exactly this problem with Half-Life 2. When Gabe Newell stood on stage and demonstrated HL2 back in 2003, the GeForce FX crumpled like a beer can.

I’d wager this graph sold more ATI GPUs than most ad campaigns. The FX 5900 Ultra was NV’s top GPU. The Radeon 9600 was a midrange card.

Newell lied, told everyone the game would ship in the next few months, and people rushed out to buy ATI cards. Turns out the game didn’t actually ship for a year and by the time it did, Nvidia’s GeForce 6xxx family offered far more competitive performance. An entire new generation of ATI cards had also shipped, with support for PCI Express. In this case, everyone who tried to future-proof got screwed.

There’s one arguable exception to this trend that I’ll address directly: DirectX 12 and asynchronous compute. If you bought an AMD Hawaii GPU in 2012 – 2013, the advent of async compute and DX12 did deliver some performance uplift to these solutions. In this case, you could argue that the relative value of the older GPUs increased as a result.

But as refutations go, this is a weak one. First, the gains were limited to titles that implemented both DX12 and async compute. Second, they weren’t uniformly distributed across AMD’s entire GPU stack, and higher-end cards tended to pick up more performance than lower-end models. Third, part of the reason this happened is that AMD’s DX11 driver wasn’t multi-threaded. And fourth, the modest uptick in performance that some 28nm AMD GPUs enjoyed was neither enough to move the needle on those GPUs’ collective performance across the game industry nor sufficient to argue for their continued deployment overall relative to newer cards built on 14/16nm. (The question of how quickly a component ages relative to the market is related to, but distinct from, whether you can future-proof a system in general.)

Now, is it a great thing that AMD’s 28nm GPU customers got some love from DirectX 12 and Vulkan? Absolutely. But we can acknowledge some welcome improvements in specific titles while simultaneously recognizing the fact that only a relative handful of games have shipped with DirectX 12 or Vulkan support in the past three years. These APIs could still become the dominant method of playing games, but it won’t happen within the high-end lifespan of a 2016 GPU.

Optimizing Purchases

If you want to maximize your extracted value per dollar, don’t focus on trying to predict how performance will evolve over the next 24-48 months. Instead, focus on the performance available today, in shipping software. When it comes to features and capabilities, prioritize what you’re using today over what you hope to use tomorrow. Software roadmaps get delayed. Features are pushed out. Because we never know how much impact a feature will have or how much it’ll actually improve performance, base your buying decision solely on what you can test and evaluate at the moment. If you aren’t happy with the amount of performance an upgrade gives you today, don’t buy the product until you are.

Second, understand how companies price and which features are the expensive ones. This obviously varies from company to company and market to market, but there’s no substitute for it. In the low-end and midrange GPU space, both AMD and Nvidia tend to increase pricing linearly alongside performance. A GPU that offers 10 percent more performance is typically 10 percent more expensive. At the high end, this changes, and a 10 percent performance improvement might cost 20 percent more money. As new generations appear and the next generation’s premium performance becomes the current generation’s midrange, the cost of that performance drops. The GTX 1060 and GTX 980 are an excellent example of how a midrange GPU can hit the performance target of the previous high-end card for significantly less money less than two years later.
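The pricing rule of thumb above can be expressed as performance per dollar. In this sketch, the baseline card (100 performance units for $250) is hypothetical; only the ratios (+10 percent performance for +10 or +20 percent price) come from the text.

```python
def perf_per_dollar(perf: float, price: float) -> float:
    """Performance units delivered per dollar spent."""
    return perf / price


# Hypothetical baseline card: 100 performance units for $250.
BASE_PERF, BASE_PRICE = 100.0, 250.0

base = perf_per_dollar(BASE_PERF, BASE_PRICE)
# Midrange step from the text: +10% performance for +10% price.
mid = perf_per_dollar(BASE_PERF * 1.10, BASE_PRICE * 1.10)
# High-end step from the text: +10% performance for +20% price.
high = perf_per_dollar(BASE_PERF * 1.10, BASE_PRICE * 1.20)

for label, value in (("baseline", base), ("midrange", mid), ("high end", high)):
    print(f"{label:9} {value:.3f} perf/$ ({value / base:.0%} of baseline)")
```

The midrange step keeps performance per dollar flat, while the high-end step drops it to 1.1 / 1.2, roughly 92 percent of the baseline. That premium is what disappears once the same performance moves down into the next generation’s midrange.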

Third, watch product cycles and time your purchasing accordingly. Sometimes, the newly inexpensive last generation product is the best deal in town. Sometimes, it’s worth stepping up to the newer hardware at the same or slightly higher price. Even the two-step upgrade process I explicitly declared wasn’t future-proofing can run into trouble if you don’t pay close attention to market trends. Anybody who paid $1,700 for a Core i7-6950X in February 2017 probably wasn’t thrilled when the Core i9-7900X dropped with higher performance and the same 10 cores a few months later for just $999, to say nothing of the hole Threadripper blew in Intel’s HEDT product family by offering 16 cores instead of 10 at the same price.
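Dividing the prices in that paragraph by core count shows how quickly the HEDT market moved. The Threadripper price is taken from "at the same price" (the $999 of the i9-7900X), and cost per core ignores the per-core performance differences the paragraph also mentions, so treat this as a rough illustration only.

```python
# Prices and core counts from the paragraph above; the Threadripper entry
# uses the same $999 price the text describes.
cpus = {
    "Core i7-6950X": (1700, 10),
    "Core i9-7900X": (999, 10),
    "Threadripper (16 cores)": (999, 16),
}

# Price per core: a crude metric that ignores per-core speed.
per_core = {name: price / cores for name, (price, cores) in cpus.items()}

for name, dollars in per_core.items():
    print(f"{name}: ${dollars:.2f} per core")
```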

Finally, remember this fact: It is the literal job of a company’s marketing department to convince you that new features are both overwhelmingly awesome and incredibly important for you to own right now. In real life, these things are messier and they tend to take longer. Given the relatively slow pace of hardware replacement these days, it’s not unusual for it to take 3-5 years before new capabilities are widespread enough for developers to treat them as the default option. You can avoid that disappointment by buying the performance and features you need and can get today, not what you want and hope for tomorrow.

Update (6/4/2020): The best reason to revisit a piece like this is to check it for accuracy. After all, “future-proofing” is supposed to be a method of saving money. Nearly two years later, how does this piece hold up compared to the introductions we’ve seen since?

On the CPU front, all of the good gaming CPUs of 2018 are still solid options in 2020. This is more-or-less as expected, since CPUs age more slowly than GPUs these days. An AMD platform from 2018 has better upgrade options than its Intel equivalent, but both solutions are excellent today.

At the same time, however, chipset compatibility doesn’t extend infinitely far into the future. AMD is going to make Zen 3 CPUs available to a few X470 and B450 motherboards, but it’s an unusual state of affairs. You can plug a modern GPU into a ten-year-old motherboard, but CPU / chipset compatibility rarely aligns for more than a few years, even where AMD is concerned.

On the GPU front, Turing is a few months from its second birthday, and all of our predictions about how long it would take ray tracing to break into mainstream gaming have proven true. There’s a solid handful of RTX games on the market that show off the technology reasonably well, but Ampere-derived GPUs will be in-market before ray tracing establishes itself as a must-have feature.

This is only a problem if you buy your GPUs based on what you expect them to do in the future. If you bought an RTX 2080 or 2080 Ti because you wanted that level of performance in conventional games and viewed ray tracing as a nice addition, then you’ll have been satisfied with the card by the time you replace Turing with something else. If you bought Turing believing it would take ray tracing mainstream, you’ll probably be disappointed.


from ExtremeTech https://www.extremetech.com/gaming/275890-why-you-cant-future-proof-your-gaming-pc from Blogger http://componentplanet.blogspot.com/2020/06/why-you-cant-future-proof-your-gaming-pc.html

0 notes