#small-target-cnn-architectures

Explore tagged Tumblr posts

Text

HyperTransformer: G Additional Tables and Figures


#conventional-machine-learning#convolutional-neural-network#few-shot-learning#hypertransformer#parametric-model#small-target-cnn-architectures#supervised-model-generation#task-independent-embedding

0 notes

Text

Application of Hybrid CTC/2D-Attention end-to-end Model in Speech Recognition During the COVID-19 Pandemic

Application of Hybrid CTC/2D-Attention end-to-end Model in Speech Recognition During the COVID-19 Pandemic in Biomedical Journal of Scientific & Technical Research

Speech recognition technology is one of the important research directions in artificial intelligence and other emerging technologies. Its main function is to convert a speech signal directly into the corresponding text. Yu Dong, et al. [1] proposed combining a deep neural network with a hidden Markov model, which achieved better recognition results than the GMM-HMM system in continuous speech recognition tasks [1]. Then, building on Recurrent Neural Networks (RNN) [2,3] and Convolutional Neural Networks (CNN) [4-9], deep learning algorithms gradually entered the mainstream of speech recognition and achieved very good results in practical tasks. Recent studies have shown that end-to-end speech recognition frameworks have greater potential than traditional frameworks. The first is Connectionist Temporal Classification (CTC) [10], which lets the end-to-end model learn each sequence directly. It is unnecessary to label the mapping between the input sequence and the output sequence in the training data in advance, so the end-to-end model can achieve better results in sequence learning tasks such as speech recognition. The second is the encoder-decoder model based on the attention mechanism. Transformer [11] is a common model based on the attention mechanism, and many researchers are currently trying to apply it to the ASR field. Linhao Dong, et al. [12] introduced the attention mechanism in both the time domain and the frequency domain by applying 2D-attention, which converged at a small training cost and achieved good results.

Abdelrahman Mohamed [13] likewise used the representation extracted by a convolutional network to replace the earlier absolute positional encoding, bringing the feature length as close as possible to the target output length. This saves computation and alleviates the mismatch between the length of the feature sequence and that of the target sequence. Although the result is not as good as the RNN model [14], its word error rate is the lowest among methods without a language model. Shigeki Karita, et al. [15] made a complete comparison between RNN and Transformer in multiple languages, and Transformer showed certain advantages in every task. Yang Wei, et al. [16] proposed that the hybrid CTC+attention architecture brings certain improvements in the task of recognizing accented Mandarin. In this paper, a hybrid end-to-end architecture combining the Transformer model and CTC is proposed. By adopting joint training and joint decoding, the 2D-attention mechanism is introduced from the perspectives of the time domain and the frequency domain, and the training process on the Aishell dataset is studied in a shallow encoder-decoder network.
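The excerpt does not give the formulas behind "joint training and joint decoding". A common way to write a hybrid CTC/attention objective is as a weighted sum of the two losses, with the same weighting reused when scoring hypotheses at decoding time. The weight \lambda and the symbol names below are assumptions for illustration, not values taken from the paper.

```latex
% Joint training: interpolate the CTC loss and the attention (decoder) loss.
\mathcal{L}_{\text{joint}} = \lambda\,\mathcal{L}_{\text{CTC}} + (1-\lambda)\,\mathcal{L}_{\text{att}},
\qquad 0 \le \lambda \le 1

% Joint decoding: pick the hypothesis y that maximizes the combined log-probability
% given the input features x.
\hat{y} = \arg\max_{y}\;\Bigl\{ \lambda \log p_{\text{CTC}}(y \mid x) + (1-\lambda) \log p_{\text{att}}(y \mid x) \Bigr\}
```

With \lambda = 0 the model reduces to a pure attention encoder-decoder, and with \lambda = 1 to a pure CTC model.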


#Journals on Infectious Diseases Addiction Science and clinical pathology#Open Access Clinical and Medical Journal#Journals on Medical Drug and Therapeutics#journal of biomedical research and reviews impact factor#Journals on Biomedical Engineering

0 notes

Text

Sensors, Vol. 23, Pages 2219: An Optimized Ensemble Deep Learning Model for Predicting Plant miRNA–lncRNA Based on Artificial Gorilla Troops Algorithm

MicroRNAs (miRNA) are small, non-coding regulatory molecules whose effective alteration might result in abnormal gene manifestation in the downstream pathway of their target. miRNA gene variants can impact miRNA transcription, maturation, or target selectivity, impairing their usefulness in plant growth and stress responses. Simple Sequence Repeat (SSR) based on miRNA is a newly introduced functional marker that has recently been used in plant breeding. microRNA and long non-coding RNA (lncRNA) are two examples of non-coding RNA (ncRNA) that play a vital role in controlling the biological processes of animals and plants. According to recent studies, the major objective for decoding their functional activities is predicting the relationship between lncRNA and miRNA. Traditional feature-based classification systems’ prediction accuracy and reliability are frequently harmed because of the small data size, human factors’ limits, and huge quantity of noise. This paper proposes an optimized deep learning model built with Independently Recurrent Neural Networks (IndRNNs) and Convolutional Neural Networks (CNNs) to predict the interaction in plants between lncRNA and miRNA. The deep learning ensemble model automatically investigates the function characteristics of genetic sequences. The proposed model’s main advantage is the enhanced accuracy in plant miRNA–lncRNA prediction due to optimal hyperparameter tuning, which is performed by the artificial Gorilla Troops Algorithm and the proposed intelligent preying algorithm. IndRNN is adapted to derive the representation of learned sequence dependencies and sequence features by overcoming the inaccuracies of natural factors in traditional feature architecture. Working with large-scale data, the suggested model outperforms the current deep learning model and shallow machine learning, notably for extended sequences, according to the findings of the experiments, where we obtained an accuracy of 97.7% in the proposed method.
https://www.mdpi.com/1424-8220/23/4/2219?utm_source=dlvr.it&utm_medium=tumblr

0 notes

Text

WordPress Help, Support: Can Need Support, Help of WordPress Enterprise?

WordPress powers 30% of all websites. It's an incredible figure, but it's sometimes overlooked by larger corporations, and with good reason: it's common knowledge that the figure is inflated by teeny-tiny blogs and websites produced by, well, just about anyone. But here's the thing: while the figure may be easily ignored by businesses looking for a CMS, WordPress remains a key player in the world's top echelon of websites. WordPress powers roughly 26% of the top 10,000 websites on the internet, according to BuiltWith, and 14 of the top 100 websites in the world are powered by WordPress.

Big-name companies like CNN, Forbes, and UPS use WordPress as the foundation of their online presence. Is that confidence, however, misplaced? Is WordPress capable of supporting some of the industry's biggest digital players?

The Case for WordPress as a Business-Grade CMS

First and foremost, consider why such well-known brands have chosen WordPress as their web content management system.

1. Add-ons

There are over 50,000 plugins in the WordPress Plugin Directory. These plugins will help businesses add a variety of features and functionality to their WordPress site. “Plugins help you ensure good SEO, protection, social media sharing options, and so much more,” said Andrew Stanten, president of Emmaus, PA-based Altitude Marketing. When compared to other enterprise CMS solutions, he said the broad range of available WordPress plugins allows businesses to customize and extend the functionality of their website with relative ease.

2. Capabilities for integration

WordPress, like a more conventional enterprise-grade CMS, can integrate with “several business-critical platforms,” such as CRM systems and marketing automation platforms, according to Stanten. “By streamlining the website and other business-operational resources, the company and its sales [teams] are able to get information into the sales cycle faster and more efficiently than they could with disparate systems,” he said.

3. Headless capabilities

Though WordPress does not ship as a headless CMS, the advent of the WordPress REST API means it can be used as one. Enterprises that want to stay relevant in the IoT era need headless content management capabilities as the number of platforms grows with the advent of smart voice assistants, smart wearables, and more. Matt Brooks, CEO of SEOteric in Watkinsville, GA, discusses how WordPress can be used as a coupled or headless solution. “The WordPress REST API can be used to populate content on almost any platform that supports APIs. WordPress may be used as a headed CMS solution with custom views for various content types, depending on the application. It can also be used as a headless CMS, with data being funneled to other applications via API.” Nevena Tomovic, business development manager at Human Made in Derbyshire, England, described how the company uses WordPress' headless capabilities to create scalable, enterprise sites. “We use open source technology to build out digital products that scale because the core of what we do is focused on WordPress. Basically, we create custom workflows for major media companies like TechCrunch so they can publish and manage content in real time,” she explained. Human Made assisted TechCrunch in adopting the WordPress REST API to decentralize its publishing experience. As a result, TechCrunch was able to keep the WordPress backend's simplicity while still using the REST API to build a user-friendly frontend on any platform or touchpoint.
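To make the headless idea concrete, here is a minimal sketch of a client reading posts from the core REST API collection at `/wp-json/wp/v2/posts`. The site address `https://example.com` and the helper names are placeholders chosen for illustration, and the sample payload is hand-written to mimic the shape of a response rather than captured from a real site.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def posts_url(site, per_page=5):
    """Build the URL of the WordPress REST API posts collection for a site."""
    # per_page limits the result count; _fields trims each post to the named keys.
    query = urlencode({"per_page": per_page, "_fields": "id,link,title"})
    return f"{site.rstrip('/')}/wp-json/wp/v2/posts?{query}"


def summarize_posts(payload):
    """Reduce the JSON array returned by the endpoint to (id, title, link) tuples."""
    return [(p["id"], p["title"]["rendered"], p["link"]) for p in json.loads(payload)]


def fetch_posts(site, per_page=5):
    """Fetch and summarize recent posts. Needs network access to a WordPress site."""
    with urlopen(posts_url(site, per_page), timeout=10) as response:
        return summarize_posts(response.read().decode("utf-8"))


# Offline demonstration: a hand-written payload shaped like an API response.
sample = '[{"id": 7, "link": "https://example.com/hello/", "title": {"rendered": "Hello"}}]'
print(posts_url("https://example.com/"))
print(summarize_posts(sample))
```

Any frontend that can issue an HTTP request and parse JSON (a mobile app, a voice assistant skill, a static site generator) could consume the same endpoint, which is what lets the WordPress backend stay unchanged while the presentation layer varies.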

4. Usability

The ease of use of WordPress in almost all aspects of the backend interface is a major reason for its success. Sure, things can get complicated, but as Stanten points out, WordPress appeals to advertisers and non-technical users because they can quickly "update the website after its launch."

The Case Against WordPress as a Business-Grade Content Management System

WordPress isn't just sunshine and rainbows in the business world. Indeed, WordPress's obvious limitations and challenges keep it from being a truly ideal fit for enterprise businesses, especially those outside of the publishing industry.

1. The ability to scale

Despite headless capabilities that help businesses scale their content delivery platforms, Matthew Baier, COO of Contentstack in San Francisco, sees WordPress' scalability problems as a disadvantage. “The issue arises as the website begins to scale, which occurs when you add more content [and] resources to the equation. When you start relying on a website for a critical part of your business, your needs for a CMS will change drastically, and what started out as a simple and inexpensive way to develop your site can easily spiral into a highly complicated and costly environment to manage down the road,” Baier explained. Baier went on to say that even with a simple WordPress website with only a few plugins, you'll quickly notice that “something goes bump in the night. Almost every single night.” In other words, you wake up every morning with a new collection of problems in your CMS that need to be addressed. Third-party tools and open source are great for innovation, but troubleshooting such a specialized software stack, let alone getting enterprise-grade support, can be difficult, according to Baier.

The plugin-based architecture of WordPress, according to Christian Gainsbrugh, founder of Seattle-based LearningCart, can quickly become a disadvantage for enterprise-scale sites and applications. “Because WordPress is capable of so many things, the temptation is to use it for everything. This works well on a smaller scale, but the data structure that makes WordPress so flexible becomes horribly inefficient to query at the enterprise level,” he said. “When you try to use WordPress for things like powering an ecommerce store or using plugins to capture data like complex feedback forms, this becomes an issue.” In those cases, you'll have to rely heavily on metadata tables to track custom fields, which means that looking up a single record could take up to 50 queries, according to Gainsbrugh.
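The query-count problem Gainsbrugh describes comes from storing each custom field as its own key/value row. The sketch below reproduces that pattern in an in-memory SQLite database. The two tables are a simplified stand-in loosely modeled on a posts table plus a metadata table, not WordPress's real schema or code, and the product data is invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT);
    CREATE TABLE postmeta (
        meta_id INTEGER PRIMARY KEY,
        post_id INTEGER REFERENCES posts(id),
        meta_key TEXT,
        meta_value TEXT
    );
    INSERT INTO posts VALUES (1, 'Blue mug');
    INSERT INTO postmeta (post_id, meta_key, meta_value) VALUES
        (1, 'price', '12.50'),
        (1, 'sku', 'MUG-001'),
        (1, 'stock', '40');
""")


def load_record_naive(conn, post_id, keys):
    """Field-by-field lookup: one query for the post plus one per custom field."""
    queries = 1
    title = conn.execute("SELECT title FROM posts WHERE id = ?", (post_id,)).fetchone()[0]
    record = {"title": title}
    for key in keys:
        row = conn.execute(
            "SELECT meta_value FROM postmeta WHERE post_id = ? AND meta_key = ?",
            (post_id, key),
        ).fetchone()
        queries += 1
        record[key] = row[0] if row else None
    return record, queries


def load_record_batched(conn, post_id):
    """Batched lookup: all custom fields for the post in a single metadata query."""
    title = conn.execute("SELECT title FROM posts WHERE id = ?", (post_id,)).fetchone()[0]
    rows = conn.execute(
        "SELECT meta_key, meta_value FROM postmeta WHERE post_id = ?", (post_id,)
    ).fetchall()
    return {"title": title, **dict(rows)}, 2


naive_record, naive_queries = load_record_naive(conn, 1, ["price", "sku", "stock"])
batched_record, batched_queries = load_record_batched(conn, 1)
print(naive_queries, batched_queries)
```

In the naive path the query count grows with the number of custom fields, so a record with dozens of fields approaches the figure quoted above. Batching helps, but every value still comes back as untyped text that the application has to reassemble into a record.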

2. Safety and security

The open-source nature of themes, widgets, and plugins, according to Shawn Moore, Global CTO at Orlando, Fla.-based Solodev, can make WordPress extremely vulnerable to cyber attacks. “Many of these plugins aren't well-supported by their developers (and, in many cases, come from untrustworthy sources), and may contain exploitable code or 'backdoors' that make them vulnerable to malicious code, malware injections, spyware, and other threats.” “Security is another area where WordPress falls short for enterprises,” Baier added on this front. “WordPress-powered websites have an automatic target on their back due to the CMS's widespread use. Yes, you can secure a WordPress environment, but it takes diligence and vigilance, and even the tiniest lapse in updating can have immediate and disastrous consequences.”

3. Issue with the Americans with Disabilities Act (ADA)

Moore continued his case against WordPress by claiming that certain WordPress plugins and widgets put businesses at risk of ADA non-compliance. “Large corporations such as Target, Five Guys Burgers and Fries, Charles Schwab, and Safeway have all spent millions of dollars settling litigation after their websites were found to be in violation of the Web Content Accessibility Guidelines 2.0. WordPress [plugins and widgets] aren't held to ADA guidelines, but the company website that uses the widget or plugin bears the responsibility, not the developers,” Moore explained.

4. A lack of business support

To ensure that their digital presence is live, stable, and performing well, businesses need round-the-clock support. “Despite having a large group of development experts, there is no transparency or guarantee for its results, and no way to reach a dedicated support individual during a crisis,” Moore said of WordPress's lack of enterprise-level support. “Enterprise companies are forced to troubleshoot on their own or find an expensive third-party option because of inconsistent documentation,” he said.

Is WordPress Appropriate for Enterprise Use?

Is WordPress capable of meeting the digital needs of a large corporation? Yes, to put it simply. It can easily integrate with third-party tools, is simple to use, and can even manage content headlessly. But, more importantly, should you use WordPress to power your large-scale web presence? That is still up in the air. One thing is certain: as a WordPress site grows in size, it becomes a pain to manage. Even small website owners can testify to this fact.

Updates fail, plugins break, developers abandon themes, hackers attack your site, and you can quickly end up with a patchwork digital presence that resembles a house of cards rather than an enterprise-grade CMS. For businesses that rely on WordPress, the solution seems to be working with a WordPress-centric organization that can keep a close eye on their WordPress-powered environment; anything less will likely result in the house of cards collapsing.

We're your own personal WordPress helpdesk, support.

WordPress Support Services Available On-Demand No matter who designed it or where it's hosted, you'll get comprehensive support for your WordPress website.

The Best WordPress Support Service in the World

Our clients adore us!


Exactly what we do

WPHelp Center relieves you of the responsibility of managing your WordPress website, from friendly user support to dependable website maintenance and custom creation.

An expert in Help and troubleshooting for WordPress

Edits to the site and bug fixes Customized development Control of a website PageSpeed & Mobile-Friendly Design SEO for eCommerce WordPress's safety

We've got you covered.

Anything you'll need to keep your website in good shape. So you can focus on growing your company, we keep your website safe, healthy, and up-to-date.

Support for your website and a one-time fix

Do you need assistance with your WordPress website? We will assist you with fixing a broken site, making page edits, or creating new pages or features.

Page Speed Improvements

Are you frustrated by your low PageSpeed score? We will assist you in achieving top speed scores and lightning-fast loading times for your WordPress pages.

Optimization for mobile devices

Mobile now accounts for more than half of all internet traffic. We'll make sure that your WordPress site is mobile-friendly.

WordPress Safety and Security

Is your WordPress site protected from hackers? Enjoy the peace of mind that comes with knowing that your website is constantly guarded against attacks.

Website Administration

Comprehensive WordPress website support, regardless of where it's hosted or who created it. We'll take care of the technology and growth. Allow our professionals to back up, upgrade, and track the security of your website while you concentrate on running your company. WPHelp.Center makes it simple to get started with WordPress support.


WordPress Custom Development

WPHelp Center will take care of any custom development request, from minor tweaks to large-scale enterprise projects.

Platforms, features, and systems that are new

We specialize in developing and implementing WordPress-based websites, online utilities, and dedicated systems.

Developers who have been hand-picked

Your creation work will be completed by hand-selected, world-class WordPress experts who have gone through a rigorous submission and screening process.

You can trust our services.

Developers who are professionals

You'll work with top WordPress developers who can handle any project you throw at them.

a short processing period

We work on your requests on a regular basis, with most tasks taking 24-48 hours to complete.

Calls on Complementary Strategies

For your website, advice on SEO, technology choices, and digital strategy is available.

365 days a year, 24 hours a day

We're always on call, watching over your site and ready to assist.

Help from a Human Being

You'll be assigned a customer success manager in the United States that you can contact directly.

100% Customer Satisfaction

You'll enjoy making a website once more with WPHelp Center.

Details to Know Follow: https://wptangerine.com/wordpress-help/

Additional Resources: https://wordpress.com/learn/ https://wordpress.org/support/article/new-to-wordpress-where-to-start/ https://en.wikipedia.org/wiki/WordPress

0 notes

Link

Analysis: Volkswagen could soon steal Tesla's crown

After years as the undisputed king of the electric car, Tesla (TSLA) could be matched sale for sale by Volkswagen (VLKAF) as early as 2022, according to analysts at UBS, who predict that Europe’s biggest carmaker will go on to sell 300,000 more battery electric vehicles than Tesla in 2025.

Ending Tesla’s reign would be a huge milestone in Volkswagen’s transformation into an electric vehicle powerhouse. Badly burned by its diesel emissions scandal in 2015, Europe’s largest carmaker is investing €35 billion ($42 billion) in electric vehicles, staking its future on new technology and a dramatic shift away from fossil fuels.

“Tesla is not only about electric vehicles. Tesla is also very strong in software. They really run the car as a device. They are making good progress on the autonomous thing. But yes … we are going to challenge Tesla,” Volkswagen CEO Herbert Diess told CNN’s Julia Chatterley on Tuesday.

Volkswagen this week underscored the scale of that ambition. It said it would sell more than 2 million electric vehicles by 2025, build its own network of vast battery factories, hire 6,500 IT experts over the next five years, launch its own operating system, and become Europe’s second biggest software company behind SAP (SAP).

UBS analysts told reporters last week that investors have failed to appreciate the speed at which Volkswagen is gaining ground on Tesla, and how much money the German company stands to make by going “all in” on electric cars before other established carmakers including Toyota (TM) and General Motors (GM). UBS has hiked its target price for Volkswagen shares by 50% to €300 ($358).

“We have more confidence than ever that Volkswagen will deliver the unique combination of volume growth, making them the world’s largest [electric] carmaker, together with Tesla, as soon as next year, while their margins will be stable or even grow from here. That is something that is totally unappreciated,” said UBS analyst Patrick Hummel.

Volkswagen, which owns Porsche, Audi, Skoda and SEAT, sold 231,600 battery electric vehicles in 2020. That’s less than half the number of sales Tesla made, but it represents an increase of 214% on the previous year. Rapid growth is expected to continue as Volkswagen launches 70 electric vehicles before the end of the decade. It will operate eight electric vehicle plants by 2022, producing models in nearly every segment, from small cars to SUVs and luxury sedans.

The global race to electric car market domination will come down to the Californian upstart and the German industrial giant, according to UBS. It predicts that Volkswagen will exceed its own goal by producing 2.6 million electric vehicles in 2025, followed by Tesla with 2.3 million. Toyota, which sold more cars than anyone else last year, ranks a distant third with 1.5 million electric sales (excluding hybrids). Hyundai Motor Group (HYMTF) and Nissan (NSANF) will churn out roughly 1 million vehicles, followed by General Motors with 800,000.

Shifting into overdrive

Volkswagen is in a better position than its rivals because of its modular production platform, or MEB. The platform, which was used to produce the ID.3, an electric compact hatchback, will allow the carmaker to quickly produce a huge number of vehicles while slashing costs. UBS estimates that manufacturing an ID.3 currently costs Volkswagen €4,000 ($4,770) more than producing an equivalent Golf powered by gasoline or diesel. But a sharp decline in the cost of battery packs, the single most expensive part of an electric vehicle, means the difference in production costs will be eliminated by 2025, according to UBS.

Volkswagen on Monday unveiled plans to open six battery-making “gigafactories” in Europe by 2030, with the aim of slashing the cost of its battery cells by as much as 50%. “Lower prices for batteries means more affordable cars, which makes electric vehicles more attractive for customers,” said Diess.

The huge scale of production at Volkswagen, which sold 9.3 million cars last year, will also help reduce costs. In addition to the MEB, the group is developing a separate platform for premium brands Audi and Porsche, allowing it to launch electric vehicles across its entire product range. Investors are starting to reward the company. Shares in Volkswagen jumped 6.5% to €207 ($247) on Tuesday, bringing gains so far this year to 35%.

Where Tesla leads

Despite the recent rise in its share price, Volkswagen is worth significantly less than Tesla. The market value of the challenger led by Elon Musk first overtook that of Volkswagen in January 2020, and the gap has increased dramatically since then. Volkswagen has a market capitalization of €111 billion ($133 billion), compared to $680 billion for Tesla.

Part of the difference can be explained by Tesla’s continued superiority in battery costs, software and the profitability of its electric cars. According to UBS, Tesla has “a more sophisticated IT hardware architecture,” and its “software organization is on a different level.” Volkswagen lags Tesla in autonomous driving technology by several years.

Some investors believe that Tesla will be able to capitalize on its software advantage in a big way. In addition to delivering wireless updates to its cars (a concept the company pioneered), Tesla may soon be able to do things like charge owners a subscription fee to use its autonomous driving software. It’s much closer to changing the nature of car ownership than established carmakers such as Volkswagen.

UBS reckons that the earnings potential from software accounts for roughly two thirds, or $400 billion, of Tesla’s market value. “We think the lion’s share of this [$400 billion in] value can be generated by software, mainly autonomous driving. With that, Tesla has the potential to become one of the most valuable software companies,” its analysts wrote.

There could be setbacks along the way, however. Tesla recently expanded its “full self-driving” software to roughly 2,000 owners, but some drivers had their access revoked for not paying close enough attention to the road.

Dan Ives, an analyst at Wedbush Securities, said earlier this year that the electric vehicle market is “Tesla’s world and everyone else is paying rent.” But with 150 carmakers all pursuing the same goal, Tesla will need to execute on its strategy, he added. “While growth will be key, its profitability profile will be under the microscope from investors going forward to better discern how quickly Tesla can ramp its margin structure, especially with higher margin sales coming out of China over the next few years,” said Ives.

For both Volkswagen and Tesla, their ambition to dominate the electric car market depends on their ability to become more like the other. Volkswagen needs to quickly upgrade its software capabilities, while Tesla would benefit from the German company’s ability to churn out millions upon millions of high-quality vehicles each year. Volkswagen announced earlier this month that the first wireless software updates would be delivered to the ID.3 this summer. Ives predicts that Tesla will be making 1 million cars a year in 2022, and could be approaching 5 million annually by the end of the decade.

With consumers now searching out electric cars, especially in Europe, the race between Volkswagen and Tesla will accelerate quickly. According to UBS, electric cars will make up 20% of global new vehicle sales in 2025 and 50% in 2030.

“We find ourselves in a new playing field, up against companies that are entering the mobility market from the world of technology,” Diess said on Tuesday. “Stock market players still regard the Volkswagen Group as part of the ‘old auto’ world. By focusing consistently on software and efficiency, we are working to change this view.”

0 notes

Text

A Convolutional Neural Network with K Nearest Neighbor for Image Classification

Image classification forms the basis for computer vision which is a trending sub-field in Machine Learning. The Convolutional Neural Network (ConvNet) has recently achieved great success in many computer vision tasks. The common architecture of ConvNets contains many layers to recurrently extract suitable image features and feed them to the softmax function for classification which often displays low prediction performance. In this paper, we propose the use of K-Nearest Neighbor as classifier for the ConvNets and also introduce the use of Principal Component Analysis (PCA) for dimensionality reduction. When successfully implemented, the proposed system should be able to accurately classify images.

The Convolutional Neural Network (ConvNet) has recently achieved great success in many computer vision tasks. ConvNet was partially inspired by neuroscience and thus shares many of the brain’s properties. Training a ConvNet can be achieved by back-propagating the classification error, which requires a reasonable amount of training data based on the size of the network.

Image classification, which can be defined as the task of categorizing images into one of several predefined classes, is a fundamental problem in computer vision. It forms the basis for other computer vision tasks such as localization, detection and segmentation. It is also an important task in machine learning and image processing, with applications such as network image retrieval and automatic military target identification.

ConvNets, as standard feature extractors, have been continuously used to improve the accuracy of computer vision systems. This implies abandoning the traditional hand-crafted feature extraction techniques in computer vision problems. The features learned by ConvNets are generated using a general-purpose learning procedure. Combining hand-crafted features with machine-learned features is increasingly becoming an active research topic.

The common architecture of ConvNets contains many layers that successively extract suitable image features and feed them to the softmax function (also known as multinomial logistic regression) for classification. To overcome the limitation of the softmax classifier, which often displays low prediction performance, earlier studies replaced softmax with Biometric Pattern Recognition (BPR) and Support Vector Machine (SVM), respectively.

At every stage of unsupervised learning, K Nearest Neighbor (KNN) can perform better than the SVM. Principal Component Analysis (PCA) can also be used to reduce the dimension of the convolved image. This work aims to propose an improved Convolutional Neural Network (ConvNet) with reduced complexity and improved precision that can be easily trained and can adapt to different data and tasks for image classification.

To achieve this, KNN is going to be adopted as the classifier while PCA for image dimension reduction will is adopted at the fully connected layer before input into the KNN for classification as this is to reduce the complexity.

Principal Component Analysis (PCA) is a statistical technique frequently used in signal and image processing for data compression, dimension reduction and decorrelation, as it offers a powerful means for data analysis and pattern recognition. PCA represents data in a form that increases the mutual independence of influential components by means of eigen-analysis.
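The eigen-analysis step can be illustrated on a toy case. The sketch below is only an assumption-laden illustration, not the method of the work described here: it handles 2D points only, uses the closed-form eigen-decomposition of a symmetric 2x2 covariance matrix, and the names `pca_2d` and `project` are hypothetical.

```python
import math

def pca_2d(points):
    """Eigen-analysis of the 2x2 sample covariance matrix of 2D points.

    Returns ((lam1, lam2), (v1, v2), centered) with lam1 >= lam2.
    """
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]

    # Entries of the symmetric covariance matrix [[sxx, sxy], [sxy, syy]].
    sxx = sum(x * x for x, _ in centered) / (n - 1)
    syy = sum(y * y for _, y in centered) / (n - 1)
    sxy = sum(x * y for x, y in centered) / (n - 1)

    # Closed-form eigenvalues of a symmetric 2x2 matrix.
    half_trace = (sxx + syy) / 2
    radius = math.hypot((sxx - syy) / 2, sxy)
    lam1, lam2 = half_trace + radius, half_trace - radius

    # Angle of the principal axis (eigenvector of the larger eigenvalue).
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    v1 = (math.cos(theta), math.sin(theta))
    v2 = (-math.sin(theta), math.cos(theta))
    return (lam1, lam2), (v1, v2), centered

def project(centered, axis):
    """Reduce 2D centered points to 1D coordinates along one axis."""
    return [x * axis[0] + y * axis[1] for x, y in centered]

# Points lying on the line y = x: all variance sits on one component.
(lam1, lam2), (v1, v2), centered = pca_2d([(1, 1), (2, 2), (3, 3), (4, 4)])
reduced = project(centered, v1)
```

Keeping only the projection onto `v1` discards the component with (near) zero variance, which is the dimension-reduction idea in miniature.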

KNN is a simple algorithm that stores all available cases and classifies new cases based on a similarity measure. It is one of the distinctive methods used in image classification. Nearest-neighbor classification of objects is based on their similarity to the training set and the statistics defined. KNN’s basic idea is that if the majority of the k nearest samples of an image in the feature space belong to a certain category, the image also belongs to this category. KNN consists of two main procedures: similarity computation and k-nearest-sample search. Since KNN requires no learning or training phase and avoids overfitting of parameters, it achieves good accuracy on classification tasks with more samples and fewer classes. The KNN classifier is based on the Euclidean distance between a test sample and the specified training samples.
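The two procedures named above, similarity computation and nearest-sample search followed by a majority vote, can be sketched in a few lines. This is a minimal illustration under assumed names (`knn_predict`, the toy `train` data); a real image pipeline would operate on feature vectors rather than 2D points.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training samples.

    `train` is a list of (feature_vector, label) pairs; distance is Euclidean.
    """
    # Similarity computation: sort training samples by distance to the query.
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    # k-nearest search plus majority vote over their labels.
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

train = [
    ((0.0, 0.0), "a"), ((0.0, 1.0), "a"), ((1.0, 0.0), "a"),
    ((5.0, 5.0), "b"), ((5.0, 6.0), "b"), ((6.0, 5.0), "b"),
]
near_a = knn_predict(train, (0.5, 0.5))
near_b = knn_predict(train, (5.5, 5.5))
```

Note that there is no training step: all the work happens at query time, which matches the description above.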

One study compared the performance of unsupervised feature learning and transfer learning against simple patch initialization and random weight initialization within the same setup. The authors showed that pre-training helps to train CNNs from few samples and that the correct choice of initialization scheme can push the network’s performance by up to 41% compared to random initialization. Their results show that pre-training systematically improves generalization capabilities when handling datasets with few samples. They concluded that the choice of a pre-training method depends highly on the dataset used. They used traditional filter selection and softmax as their classifier.

Another study evaluated and compared the performance of support vector machine (SVM) and KNN classifiers using the confusion matrix technique. It found that the KNN classifier was better than the SVM classifier for the discrimination of pulmonary acoustic signals.

A new class of fast algorithms for convolutional neural networks was derived using Winograd's minimal filtering algorithms. The algorithms were derived for network layers with 3x3 filters for image recognition tasks. They reduced arithmetic complexity by up to 4 times compared with direct convolution, while using small block sizes with limited transform overhead and high computational intensity. The work concentrated on the convolution layer and did not consider other layers of the ConvNet.
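To give a feel for minimal filtering, here is a sketch of the smallest 1D case, usually written F(2,3): two outputs of a 3-tap filter computed with 4 multiplications instead of the 6 used by direct convolution. This is an illustration only. The function names are hypothetical, and the up-to-4x figure quoted above concerns the 2D 3x3 algorithms, not this 1D case.

```python
def direct_conv_2_outputs(d, g):
    """Two outputs of a 3-tap filter g over 4 inputs d: 6 multiplications."""
    return [
        d[0] * g[0] + d[1] * g[1] + d[2] * g[2],
        d[1] * g[0] + d[2] * g[1] + d[3] * g[2],
    ]

def winograd_f23(d, g):
    """Same two outputs using 4 data-dependent multiplications."""
    # Filter transform: depends only on g, so it can be precomputed once.
    u0 = g[0]
    u1 = (g[0] + g[1] + g[2]) / 2
    u2 = (g[0] - g[1] + g[2]) / 2
    u3 = g[2]
    # The four multiplications.
    m1 = (d[0] - d[2]) * u0
    m2 = (d[1] + d[2]) * u1
    m3 = (d[2] - d[1]) * u2
    m4 = (d[1] - d[3]) * u3
    # Output transform: additions and subtractions only.
    return [m1 + m2 + m3, m2 - m3 - m4]

d = [1.0, 2.0, 3.0, 4.0]
g = [0.5, 1.0, 0.25]
direct = direct_conv_2_outputs(d, g)
fast = winograd_f23(d, g)
```

The saving comes from trading multiplications for cheaper additions, which is why the transform overhead mentioned above matters.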

The performance of different classifiers on the CIFAR-10 dataset was studied, and an ensemble of classifiers was built to reach better performance. It was shown that, on CIFAR-10, KNN and Convolutional Neural Network (CNN) are mutually exclusive on some classes and therefore produce higher accuracy when combined. Principal Component Analysis (PCA) was applied to reduce overfitting in KNN, which was then combined with a CNN to increase its accuracy. That work combined the KNN with a CNN for feature extraction, which is a different approach.

A series of data augmentation techniques was investigated to progressively improve prediction invariance to image scaling and rotation. An SVM classifier was used as an alternative to softmax to enhance generalization ability. The recognition rate was up to 92.74% on the patch level with data augmentation and classifier boosting. The results showed that the combined CNN-SVM model beats models of traditional features with SVM as well as the original CNN with softmax.

To deal with the problem associated with the softmax classifier, a new technique was proposed that combines Biometric Pattern Recognition (BPR) with ConvNets for image classification. BPR performs class recognition by a union of geometrical cover sets in a high-dimensional feature space and therefore can overcome some disadvantages of traditional pattern recognition. The method was evaluated using three image datasets: MNIST, AR, and CIFAR10. We apply the same concept but use KNN instead of BPR.

An architecture was presented that combines a Convolutional Neural Network (CNN) and a linear SVM for image classification. It used a simple network of two convolutional layers with max-pooling. The architecture was tested on the Fashion-MNIST dataset, and CNN-softmax was found to outperform CNN-SVM. The authors acknowledged that the data was not sufficiently preprocessed in the study and that the model needs improvement to achieve more accurate results.

A Presentation Attack Detection (PAD) method, called spoof detection, was proposed for near-infrared (NIR) camera-based finger-vein recognition systems, using a Convolutional Neural Network (CNN) to enhance the detection ability of previous handcrafted methods. A suitable feature extractor for PAD was derived using a ConvNet. The extracted image features were then processed using Principal Component Analysis (PCA) for dimensionality reduction of the feature space and a Support Vector Machine (SVM) for classification, in order to enhance the ability of the CNN method to detect presentation attack finger-vein images. Extensive experimental results confirmed that the proposed method is suitable for presentation attack finger-vein image detection and can deliver superior detection results compared with other ConvNet methods.

The use of fully convolutional architectures to replace the fully connected layer of ConvNets in notable object detection systems such as Fast/Faster RCNN was presented. A general formula was derived to accurately design the input size of the various fully convolutional networks in which the convolutional and pooling layers are concatenated with their strides, and an efficient skip-connection architecture was proposed to accelerate the training process. The model was compared with Fast RCNN, and accuracy increased by about 2%. The method was tested using a very small data set. Moreover, using a CNN as a classifier might not be feasible, because the fully connected layer is what generalizes the extracted features into the output space, and the output needs to be a scalar.

A k-Tree method was proposed to learn different optimal k values for different test or new samples by involving a training stage in kNN classification. In the training stage, the k-Tree method first learns optimal k values for all training samples using a new sparse reconstruction model, and then constructs a decision tree using the training samples and the learned optimal k values. In the test stage, the k-Tree outputs the optimal k value for each test sample, and kNN classification is then carried out using the learned optimal k value and all training samples. The model had similar running cost but higher classification accuracy compared with traditional kNN methods, and lower running cost but similar classification accuracy compared with newer kNN methods that assign different k values to different test samples.

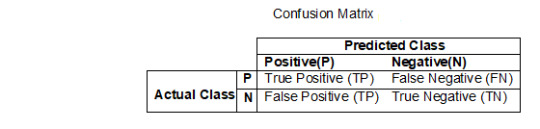

Confusion Matrix (explained below)

Positive (P): Observation is positive

Negative (N): Observation is not positive

True Positive (TP): Observation is positive and is predicted positive

True Negative (TN): Observation is negative and is predicted negative

False Positive (FP): Observation is negative but predicted positive

False Negative (FN): Observation is positive but predicted negative
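The four counts defined above can be tallied directly from paired labels, and accuracy and precision follow from them. The snippet is a minimal sketch; the function names and the toy label lists are assumptions made for illustration.

```python
def confusion_counts(actual, predicted):
    """Return (TP, TN, FP, FN) for binary labels (truthy = positive)."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a and p)
    tn = sum(1 for a, p in pairs if not a and not p)
    fp = sum(1 for a, p in pairs if not a and p)
    fn = sum(1 for a, p in pairs if a and not p)
    return tp, tn, fp, fn

def accuracy(tp, tn, fp, fn):
    """Fraction of all observations that were predicted correctly."""
    return (tp + tn) / (tp + tn + fp + fn)

def precision(tp, fp):
    """Fraction of positive predictions that were actually positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0

actual = [1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 1, 1, 0]
tp, tn, fp, fn = confusion_counts(actual, predicted)
acc = accuracy(tp, tn, fp, fn)
prec = precision(tp, fp)
```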

We intend to design an improved Convolutional Neural Network (ConvNet) with improved precision and accuracy. We use Principal Component Analysis (PCA) to reduce the dimension of the image and K Nearest Neighbor (KNN) for classification. When successfully implemented, the proposed system should be able to accurately classify images based on a given training set and test set. It will be evaluated in terms of accuracy.

#Image classification Convolutional Neural Networks Principal Component Analysis K-Nearest Neighbor.

0 notes

Photo

Visit us: http://www.connectinfosoft.com/contact_us.php

Our Services: http://www.connectinfosoft.com/service.php

Connect Infosoft Technologies is one of the best Machine Learning development companies. Machine learning is an application of artificial intelligence (AI) that enables a machine to learn from data rather than through explicit programming.

Machine learning mainly focuses on the development of computer programs that can observe or access data and use it to learn for themselves. Its primary aim is to allow the machine to learn automatically, without human intervention or assistance, and adjust its actions accordingly. Machine learning looks for patterns in data and makes better decisions in the future based on them. It learns from experience. However, machine learning is not a simple process.

SOME MACHINE LEARNING METHODS:

Supervised machine learning, as the name indicates, involves the presence of a supervisor. It is used in cases where the target set is known. The machine is trained on labeled input data and produces a predicted outcome, which we can later verify by cross-validating the predicted outcome against the target set to check the model’s accuracy.

Supervised learning models include the following algorithms:

Regression

o Linear

o Logistic

Classification.

Unsupervised machine learning algorithms are used to train a machine when the target set is unknown or not labeled, which leaves the algorithm to act on that information without guidance. The task of the machine is to group unsorted information according to similarities, patterns and differences without any prior training on the data. The machine is thus left to find the hidden structure in unlabeled data on its own. Unsupervised learning models include the following algorithms:

Clustering

Association.

Semi-supervised machine learning algorithms fall between supervised and unsupervised learning, since they use a combination of both labeled and unlabeled data for training. Typically, a small amount of labeled data and a large amount of unlabeled data are available. This method is used in systems where the aim is to improve learning accuracy.

Reinforcement machine learning is a part of machine learning. It is about making a model learn by taking suitable actions that bring it maximum reward in a particular situation. It is used by various software and machines to find the best possible behavior or path to take in a specific situation. Combining machine learning with cognitive technologies and AI makes it even more effective in processing large amounts of information. Reinforcement learning algorithms include Q-Learning, DRQN and DDPG, and they are often combined with neural networks such as CNN (Convolutional Neural Network), ANN (Artificial Neural Network) and RNN (Recurrent Neural Network).

ADVANTAGES OF MACHINE LEARNING:

Vastly Used In Variety Of Applications Like Banking & Financial Sector, Healthcare, Retail, Publishing & Social Media, Robot Locomotion Etc.

Google And Facebook Use It To Push Relevant Advertisements Based On Users’ Past Search Behavior.

Capable Of Handling Multi-Dimensional And Multi-Variety Data In Dynamic Or Uncertain Environments.

Requires Less Time And Efficient Utilization Of Resources.

Tools In Machine Learning Provide Continuous Quality Improvements In Large And Complex Process Environments.

Source Programs Such As Rapidminer Help To Increase Usability Of Algorithms For Various Applications.

SOME MACHINE LEARNING TOOLS:

R: R is an open-source programming language with a large community; it is mainly used for statistical analysis and analytical work. R has a number of tools to communicate results and is one of the right tools for data science because of its powerful communication libraries. R has been used extensively in data science and machine learning for a very long time.

Python: Python is an object-oriented, high-level programming language for web and app development and complex applications. It offers dynamic typing and dynamic binding and also supports modules and packages. Python is widely used in Artificial Intelligence (AI), Machine Learning (ML), Natural Language Generation, Neural Networks and other advanced fields. Python has a deep focus on code readability.

SAS: R and SAS are another great combination of programming languages. SAS is an integrated software suite for advanced analytics, used for statistical analysis, business intelligence, data management and predictive analytics. SAS software can be used through both a graphical interface and a programming language. It can read data from common spreadsheets and databases and output the results of statistical analyses as tables and graphs and as RTF, HTML and PDF documents. SAS runs under compilers that can be used on Microsoft Windows, Linux and mainframe computers. The SAS language has two compilers: SAS System and World Programming System (WPS).

GPU Architecture: GPU computing is the use of a GPU (graphics processing unit) as a co-processor to accelerate CPUs for general-purpose, scientific and engineering computing. The GPU accelerates applications running on the CPU by offloading some of the compute-intensive and time-consuming portions of the code. The rest of the application still runs on the CPU. The application runs faster because it uses the massively parallel processing power of the GPU to boost performance. This is known as heterogeneous or hybrid computing architecture.

Check out more of our services.

Want to learn what we can do for you?

Let's talk

Contact us:

Visit us: http://www.connectinfosoft.com/contact_us.php

Our Services: http://www.connectinfosoft.com/service.php

Email: [email protected]

Phone: (225) 361-2741

Connect InfoSoft Technologies Pvt.Ltd

0 notes

Text

HyperTransformer: A Example of a Self-Attention Mechanism For Supervised Learning


View On WordPress

#conventional-machine-learning#convolutional-neural-network#few-shot-learning#hypertransformer#parametric-model#small-target-cnn-architectures#supervised-model-generation#task-independent-embedding

0 notes

Photo

"[D] Teacher-Student training situation with CNN-FC"- Detail: I've been asked to convert a fully-trained CNN to a simple FC network with fixed architecture (it'll be used on a small chip if I remember correctly). They understand the classification performance will drop but it needs to be done anyway. I've set up the student network such that it just takes the flattened image as the input but I'm unsure what my targets are. I have the data the teacher network was trained on so I guess I can train the student using those inputs with the correspoding teacher output (rather than one-hot targets in the dataset). But my real question is can I just generate random input images and use whatever the teacher outputs as a target for the student to train on? Is that what is usually done to generate a lot of training data for the student network?. Caption by Lewba. Posted By: www.eurekaking.com

0 notes

Text

Winner Spotlight: “Highway Gallery” by Louvre Abu Dhabi & TBWA\RAAD

May 16, 2019

2018 MENA Effie Awards

GOLD – Media Innovation – Existing Channel SILVER – Travel, Tourism, and Transportation

Louvre Abu Dhabi opened in 2017 as the first universal museum in the Middle East, with a world-class collection of archaeological treasures and fine art spanning thousands of years. At launch, the museum welcomed crowds to a series of sold-out events - but just a couple of months post-celebration, visitor volume stalled.

Together, Louvre Abu Dhabi and agency partner TBWA\RAAD needed to attract locals to the museum – and the solution would need to counteract the UAE’s lagging enthusiasm for museums in general, and lack of awareness about the Louvre Abu Dhabi in particular.

Enter “Highway Gallery,” a series of masterpieces from Louvre Abu Dhabi displayed along the most highly-trafficked road in the UAE. The project integrated OOH and radio, with interpretations of each piece broadcast through the speakers of each passing car.

After successfully changing attitudes and attracting visitors, “Highway Gallery” took home a Gold and Silver Effie in the 2018 MENA Effie Awards competition.

Below, Remie Abdo, Head of Planning at TBWA\RAAD, shares insight into how she and her team got people sampling the museum and excited about the Louvre Abu Dhabi. Read on to hear how the team challenged the definition of innovation and found inspiration from unlikely sources.

What were your objectives for “Highway Gallery”?

RA: Louvre Abu Dhabi opened its doors in November 2017. As the first universal museum in the region, and with unprecedented architecture and innovative exhibitions, it ticks the ‘first’ and ‘ests’ checklist of the country’s superlatives. Add to that a string of opening events: a 360 campaign, concerts and performances, global and regional celebrity visitors, a 3D laser mapping light show, and several ribbon-cutting events… and you won’t be surprised to know that opening-month tickets sold out.

However, the reality wasn’t that sweet.

Two months down the line, once the opening hype faded, UAE residents were not that interested in visiting anymore. Fear of the ‘Eiffel Tower Syndrome’ (becoming a touristic landmark that locals don’t visit) became the new worrying reality.

The objective was as simple, and complex, as getting UAE residents to the doors of the museum.

What was the strategic insight that drove the campaign?

RA: To solve the problem at hand, we dug for the problem behind the problem. We asked, why weren’t UAE residents interested in Louvre Abu Dhabi beyond the opening ceremonies? One would have thought they’d be excited to have the Louvre in their capital city.

The UAE population consists of two major groups, the Emiratis (15% of the population) and the expats (85%). We investigated each separately.

We discovered that Emiratis believed museums ‘are not for them.’ They found museums boring and archaic, and they are more into other forms of entertainment. Their interest in Louvre Abu Dhabi was limited to their interest of having the ‘Louvre’ in their country – another prestigious milestone.

Expats were skeptical, likely to agree with the sentiments: ‘a Louvre without the Mona Lisa is not the Louvre’, ‘this will be a replica of Louvre Paris’, ‘this won’t be like the Louvre’. They were quick to compare Louvre Abu Dhabi to the Louvre in Paris, and were not interested in a doppelgänger.

Their pre-judgement wasn’t founded. Emiratis didn't know what museums were exactly, as they had never had any locally – and when they traveled, museums weren’t on their bucket lists. And expats didn’t know what Louvre Abu Dhabi could offer - and how could they love something they didn’t know?

The insight was clear: UAE residents were not into ‘Louvre Abu Dhabi’ museum, not because they didn’t love it, but because they didn’t know it.

What was your big idea? How did you bring the idea to life?

RA: Alex Lickerman, author of The Undefeated Mind, said ‘Trying something new opens up the possibility for you to enjoy something new. Entire careers, entire life paths, are carved out by people dipping their baby toes into small ponds and suddenly discovering a love for something they had no idea would capture their imaginations.’

Aligned with this thinking and our insight, Louvre Abu Dhabi needed to give residents a taste of the museum in order to capture their minds and drive them to visit. In the FMCG world, the solution would have been a no-brainer: distribute free samples of the product. Borrowing from retail best practices, the strategy boiled down to one question: How do we give a sample of the museum?

We introduced The Highway Gallery: A first-ever roadside exhibition featuring 10 of Louvre Abu Dhabi’s most magnificent masterpieces on giant, can’t-miss, 9x6 meter (approx. 30x20 foot) vertical frames. Among the works featured were Leonardo da Vinci’s La Belle Ferronnière (1490), Vincent van Gogh’s Self Portrait (1887), and Gilbert Stuart’s Portrait of George Washington (1822). The frames were placed as billboards along over 100km (approx. 62 miles) of the E11 Sheikh Zayed Road, the busiest highway in the UAE with an average of 12,000 cars commuting daily and the road that leads to Louvre Abu Dhabi.

But neither the size of the exhibition nor the choice of the artworks was a rich enough sample of the museum. Louvre Abu Dhabi needed to give a sneak peek into the artworks with their corresponding stories, beyond the aesthetics. Without context, the artworks lose their value.

Hence we used old ‘FM transmitter’ technology to hijack the frequencies of the most-listened-to radio stations on the highway. The FM devices synchronized and instantaneously broadcasted the story behind each art piece through the radios of cars passing by the frames. This was the world’s first audio-visual experience of this kind.

Example: When a car passed by the frame featuring Vincent Van Gogh’s Self Portrait (photo above), the passengers could hear on their radio speakers: “Say hello to Vincent Van Gogh, one of the greatest artists of the 19th century and the grandfather of modern art. He painted this Self Portrait in 1887, just three years before his death at 37. The impassioned brushstrokes reflect more than his artistic style, they reveal Vincent at his happiest and most-inspired. See them up close in our museum gallery Questioning A Modern World”.

What was the greatest challenge you faced when creating this campaign, and how did you approach that challenge?

RA: There were many challenges, but two notable ones.

The first, and easier to tackle, challenge was technical. We were innovating with an old medium, and when you’re the first to try something, it often doesn’t work quite right the first time. Until the very first day of the exhibition, we were still fixing bugs here and there. In such situations, disappointment settles in at some point, and you feel judged -- especially by those who told you ‘you can’t do it’… but the key in such situations is to use this frustration as a motive.

The second challenge was a bit bigger than us. Museums, in general, don’t appreciate creating replicas of their artworks, and definitely not using these replicas as giant OOH media. We had to do a lot of selling to the client and go through multiple layers of approvals that got progressively more difficult.

How did you measure the effectiveness of the effort?

RA: The objective was to get UAE residents to the door of Louvre Abu Dhabi in the absence of all opening ceremonies. And we did just that. By the end of the Highway Gallery Exhibition, the decline in visitor numbers was a thing of the past, as the museum exceeded its monthly target by a factor of 1.6. This time people were going to appreciate the artworks, ultimately achieving the museum’s main objective of footfall for the art.

Of course, we got some freebies along the way: Louvre Abu Dhabi followers on social media grew 4.2%; the online negative sentiment around the museum was reduced to only 1% and the positive sentiment grew 9%; Louvre Abu Dhabi brand recall registered a 14% uplift (regional average = 7%).

The Highway Gallery also got free local, regional and global coverage with CNN calling the gallery the “first of its kind in the world,” Lonely Planet stating that “Abu Dhabi became a lot more interesting,” The National regarding it as a “Highway to Heaven,” etc.

The museum became part of the conversations about Abu Dhabi through the press, but even more so through the people themselves. After stagnant Louvre Abu Dhabi online mentions during the previous months, the Highway Gallery garnered a 1,180% increase in mentions.

What are the most important learnings about marketing effectiveness that readers should take away from this case?

RA: Shifting perspective, as a means for innovation

‘Traditional media’ is a shunned expression in today’s world. Say “billboard” or “radio” twice and you will be labelled as the ‘traditional’ ‘non-digital’ ad person stuck in the old ways of doing things. With innovation, Louvre Abu Dhabi gave two traditional media a much-needed resuscitation, turning them into the most innovative and modern media combination of today.

The advertising industry witnesses changes by the minute – media channels deemed obsolete, processes reckoned too old. We naturally tend to discard the old and jump on the new to be perceived as innovative. However, this case proves that a new perspective on the old can create even more innovative solutions.

Good artists copy, great artists steal

It is unthought-of for a refined art industry to plagiarize from a mass FMCG practice. Drawing the parallel between an experience-based industry and a commodity-driven industry allowed the museum to find an unprecedented solution to its problem. Who said we can’t sample a museum?

In advertising, looking into adjacent industries is considered common practice. To create truly disruptive solutions however, looking into far-fetched industries to extract best practices can broaden our thinking, and ultimately make all the difference for the industry we are in.

Were there any unexpected long-term effects of this campaign?

RA: Last month, we launched the Tolerance Gallery, a sort of “Highway Gallery version 2” in support of the UAE’s ‘Year of Tolerance 2019.’ We placed sacred artworks representing different religions, from the Louvre Abu Dhabi’s collection, along the same Highway. This innovation is also set to be adopted soon by the Abu Dhabi government to alert drivers in cases of extreme fog to avoid road accidents. Several additional usages are being considered by different industries.

Remie Abdo is Head of Strategic Planning at TBWA\RAAD.

Remie would like to live in a world where purpose is our bread and butter, insights are our currency, storytelling is our language, common sense is more common, and free time is free.

An advocate of purpose, she tries to add sense to everything she does. On a personal level, she tailors her own clothes; grows her own vegetables and fruits, swaps consumerism with cultural-consumerism; obsesses about problem solving; and enjoys sharing ideas.

The same applies to her career. She is a firm believer that advertising is not an industry but a means to a higher end: that of finding true solutions to real problems, influencing mindsets and shaping cultures for the better.

Her ethos: “If I am leaving my kid behind to work extra hours, I’d rather make it worthwhile”, continues to bear fruit in the shape of Cannes Lions, WARC, Effies, Dubai Lynx, Loeries, London International Awards, as well as judging global awards shows.

Remie started her career at Orange Telecom, BNP Paribas and the French Football Federation in Paris. After her Parisian adventure, she entered the agency world in Dubai making her way up from junior planner to Head of Planning at TBWA\RAAD Dubai today.

Read more Winner Spotlight interviews >

#Winner Spotlight#MENA Effies#MENA Effie Awards#TBWA#Louvre Abu Dhabi#TBWARAAD#Marketing Effectiveness Playbook

0 notes

Text

New deep learning model brings image segmentation to edge devices

A new neural network architecture designed by artificial intelligence researchers at DarwinAI and the University of Waterloo will make it possible to perform image segmentation on computing devices with low-power and -compute capacity.

Segmentation is the process of determining the boundaries and areas of objects in images. We humans perform segmentation without conscious effort, but it remains a key challenge for machine learning systems. It is vital to the functionality of mobile robots, self-driving cars, and other artificial intelligence systems that must interact and navigate the real world.

Until recently, segmentation required large, compute-intensive neural networks. This made it difficult to run these deep learning models without a connection to cloud servers.

In their latest work, the scientists at DarwinAI and the University of Waterloo have managed to create a neural network that provides near-optimal segmentation and is small enough to fit on resource-constrained devices. Called AttendSeg, the neural network is detailed in a paper that has been accepted at this year’s Conference on Computer Vision and Pattern Recognition (CVPR).

Object classification, detection, and segmentation

One of the key reasons for the growing interest in machine learning systems is the problems they can solve in computer vision. Some of the most common applications of machine learning in computer vision include image classification, object detection, and segmentation.

Image classification determines whether a certain type of object is present in an image or not. Object detection takes image classification one step further and provides the bounding box where detected objects are located.

Segmentation comes in two flavors: semantic segmentation and instance segmentation. Semantic segmentation specifies the object class of each pixel in an input image. Instance segmentation separates individual instances of each type of object. For practical purposes, the output of segmentation networks is usually presented by coloring pixels. Segmentation is by far the most complicated type of classification task.

The complexity of convolutional neural networks (CNN), the deep learning architecture commonly used in computer vision tasks, is usually measured in the number of parameters they have. The more parameters a neural network has, the more memory and computational power it will require.

RefineNet, a popular semantic segmentation neural network, contains more than 85 million parameters. At 4 bytes per parameter, it means that an application using RefineNet requires at least 340 megabytes of memory just to run the neural network. And given that the performance of neural networks is largely dependent on hardware that can perform fast matrix multiplications, it means that the model must be loaded on the graphics card or some other parallel computing unit, where memory is more scarce than the computer’s RAM.
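The memory estimate above is simple arithmetic, sketched here for clarity. The helper name `model_memory_megabytes` is hypothetical, megabytes are taken as 10^6 bytes, and the figure covers parameter storage only (activations and framework overhead are ignored).

```python
def model_memory_megabytes(num_parameters, bytes_per_parameter):
    """Memory needed just to hold the parameters, in megabytes (10**6 bytes)."""
    return num_parameters * bytes_per_parameter / 1_000_000

# 85 million parameters stored as 32-bit (4-byte) values.
refinenet_mb = model_memory_megabytes(85_000_000, 4)
```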

Machine learning for edge devices

Due to their hardware requirements, most applications of image segmentation need an internet connection to send images to a cloud server that can run large deep learning models. The cloud connection can pose additional limits to where image segmentation can be used. For instance, if a drone or robot will be operating in environments where there’s no internet connection, then performing image segmentation will become a challenging task. In other domains, AI agents will be working in sensitive environments and sending images to the cloud will be subject to privacy and security constraints. The lag caused by the roundtrip to the cloud can be prohibitive in applications that require real-time response from the machine learning models. And it is worth noting that network hardware itself consumes a lot of power, and sending a constant stream of images to the cloud can be taxing for battery-powered devices.

For all these reasons (and a few more), edge AI and tiny machine learning (TinyML) have become hot areas of interest and research both in academia and in the applied AI sector. The goal of TinyML is to create machine learning models that can run on memory- and power-constrained devices without the need for a connection to the cloud.

With AttendSeg, the researchers at DarwinAI and the University of Waterloo tried to address the challenges of on-device semantic segmentation.

“The idea for AttendSeg was driven by both our desire to advance the field of TinyML and market needs that we have seen as DarwinAI,” Alexander Wong, co-founder at DarwinAI and Associate Professor at the University of Waterloo, told TechTalks. “There are numerous industrial applications for highly efficient edge-ready segmentation approaches, and that’s the kind of feedback along with market needs that I see that drives such research.”

The paper describes AttendSeg as “a low-precision, highly compact deep semantic segmentation network tailored for TinyML applications.”

The AttendSeg deep learning model performs semantic segmentation at an accuracy that is almost on par with RefineNet while cutting the number of parameters down to 1.19 million. Interestingly, the researchers also found that lowering the precision of the parameters from 32 bits (4 bytes) to 8 bits (1 byte) did not result in a significant performance penalty, while enabling them to shrink the memory footprint of AttendSeg by a factor of four. The model requires a little over one megabyte of memory, which is small enough to fit on most edge devices.

“[8-bit parameters] do not pose a limit in terms of generalizability of the network based on our experiments, and illustrate that low precision representation can be quite beneficial in such cases (you only have to use as much precision as needed),” Wong said.
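To make the move from 32-bit to 8-bit parameters concrete, here is a minimal Python sketch of generic affine (min/max) quantization. It is not the scheme used for AttendSeg, which the article does not detail; the function names `quantize_uint8` and `dequantize` and the sample weights are illustrative assumptions.

```python
import struct


def quantize_uint8(weights):
    """Map floats to integers in [0, 255] using a per-tensor scale and offset."""
    lo, hi = min(weights), max(weights)
    # Fall back to a scale of 1.0 when all weights are equal (hi == lo).
    scale = (hi - lo) / 255 or 1.0
    q = [round((w - lo) / scale) for w in weights]
    return q, scale, lo


def dequantize(q, scale, lo):
    """Recover approximate float values from the 8-bit codes."""
    return [lo + scale * v for v in q]


weights = [-0.5, -0.1, 0.0, 0.25, 0.5]  # made-up example values
q, scale, lo = quantize_uint8(weights)
restored = dequantize(q, scale, lo)

# Storage comparison: 4 bytes per float32 weight versus 1 byte per code.
float32_bytes = len(struct.pack(f"{len(weights)}f", *weights))
uint8_bytes = len(bytes(q))
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(float32_bytes, uint8_bytes, max_error)
```

The rounding error per weight is bounded by half the scale, which is why a network whose weights span a narrow range can often tolerate 8-bit storage.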

Attention condensers for computer vision

AttendSeg leverages “attention condensers” to reduce model size without compromising performance. Self-attention mechanisms are a family of techniques that improve the efficiency of neural networks by focusing on the information that matters. Self-attention techniques have been a boon to the field of natural language processing. They have been a defining factor in the success of deep learning architectures such as Transformers. While previous architectures such as recurrent neural networks had a limited capacity on long sequences of data, Transformers used self-attention mechanisms to expand their range. Deep learning models such as GPT-3 leverage Transformers and self-attention to churn out long strings of text that (at least superficially) maintain coherence over long spans.
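As a rough illustration of what “focusing on the information that matters” means, the following Python sketch implements plain scaled dot-product self-attention in the Transformer style. It is a simplification under stated assumptions: the query, key, and value projections are the identity (a real model learns them), the function names are illustrative, and it does not represent attention condensers, whose internals the article does not describe.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections.

    Each output vector is a weighted average of all input vectors, where the
    weights reflect how similar each input is to the current one.
    """
    d = len(tokens[0])
    outputs = []
    for query in tokens:
        scores = [
            sum(a * b for a, b in zip(query, key)) / math.sqrt(d)
            for key in tokens
        ]
        weights = softmax(scores)
        outputs.append(
            [sum(w * value[i] for w, value in zip(weights, tokens)) for i in range(d)]
        )
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three made-up 2-D embeddings
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```

Because every position attends to every other position directly, the range of the mechanism does not shrink with distance in the sequence.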

AI researchers have also leveraged attention mechanisms to improve the performance of convolutional neural networks. Last year, Wong and his colleagues introduced attention condensers as a very resource-efficient attention mechanism and applied them to image classifier machine learning models.

“[Attention condensers] allow for very compact deep neural network architectures that can still achieve high performance, making them very well suited for edge/TinyML applications,” Wong said.

Machine-driven design of neural networks

One of the key challenges of designing TinyML neural networks is finding the best performing architecture while also adhering to the computational budget of the target device.

To address this challenge, the researchers used “generative synthesis,” a machine learning technique that creates neural network architectures based on specified goals and constraints. Basically, instead of manually fiddling with all kinds of configurations and architectures, the researchers provide a problem space to the machine learning model and let it discover the best combination.

“The machine-driven design process leveraged here (Generative Synthesis) requires the human to provide an initial design prototype and human-specified desired operational requirements (e.g., size, accuracy, etc.) and the MD design process takes over in learning from it and generating the optimal architecture design tailored around the operational requirements and task and data at hand,” Wong said.
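The general idea of handing goals and constraints to a search procedure can be illustrated with a toy Python example. This is not Generative Synthesis, whose workings are not described here. It is a brute-force search over channel widths for a small stack of 3x3 convolutions, and it uses the parameter count itself as a crude stand-in for model quality. All function names, the candidate widths, and the budget are hypothetical.

```python
from itertools import product


def conv_params(c_in, c_out, kernel=3):
    """Parameters in one conv layer: weights plus one bias per output channel."""
    return c_in * c_out * kernel * kernel + c_out


def count_params(widths, in_channels=3):
    """Total parameters for a plain stack of conv layers with the given widths."""
    total, c_in = 0, in_channels
    for c_out in widths:
        total += conv_params(c_in, c_out)
        c_in = c_out
    return total


def search(budget, choices=(8, 16, 32, 64), depth=3):
    """Return the largest configuration (by parameter count) within the budget.

    Parameter count is only a placeholder objective; a real system would
    evaluate accuracy on the task.
    """
    best = None
    for widths in product(choices, repeat=depth):
        n = count_params(widths)
        if n <= budget and (best is None or n > best[1]):
            best = (widths, n)
    return best


print(search(budget=20_000))
```

A practical design process would replace the placeholder objective with measured accuracy and add constraints such as latency on the target hardware.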

For their experiments, the researchers used machine-driven design to tune AttendSeg for Nvidia Jetson, hardware kits for robotics and edge AI applications. But AttendSeg is not limited to Jetson.

“Essentially, the AttendSeg neural network will run fast on most edge hardware compared to previously proposed networks in literature,” Wong said. “However, if you want to generate an AttendSeg that is even more tailored for a particular piece of hardware, the machine-driven design exploration approach can be used to create a new highly customized network for it.”

AttendSeg has obvious applications for autonomous drones, robots, and vehicles, where semantic segmentation is a key requirement for navigation. But on-device segmentation can have many more applications.

“This type of highly compact, highly efficient segmentation neural network can be used for a wide variety of things, ranging from manufacturing applications (e.g., parts inspection / quality assessment, robotic control) medical applications (e.g., cell analysis, tumor segmentation), satellite remote sensing applications (e.g., land cover segmentation), and mobile application (e.g., human segmentation for augmented reality),” Wong said.


If you did not already know

Expectation-Biasing: State-of-the-art forecasting methods using Recurrent Neural Networks (RNN) based on Long Short-Term Memory (LSTM) cells have shown exceptional performance targeting short-horizon forecasts, e.g., given a set of predictor features, forecast a target value for the next few time steps in the future. However, in many applications, the performance of these methods decays as the forecasting horizon extends beyond these few time steps. This paper aims to explore the challenges of long-horizon forecasting using LSTM networks. Here, we illustrate the long-horizon forecasting problem in datasets from neuroscience and energy supply management. We then propose expectation-biasing, an approach motivated by the literature of Dynamic Belief Networks, as a solution to improve long-horizon forecasting using LSTMs. We propose two LSTM architectures along with two methods for expectation biasing that significantly outperform standard practice. …

Binary Weight and Hadamard-transformed Image Network (BWHIN): Deep learning has made significant improvements in many image processing tasks in recent years, such as image classification, object recognition and object detection. Convolutional neural networks (CNNs), a popular deep learning architecture designed to process data in the form of multiple arrays, have shown great success on almost all detection and recognition problems and computer vision tasks. However, the number of parameters in a CNN is so high that computers require more energy and more memory. To solve this problem, we propose a novel energy-efficient model, Binary Weight and Hadamard-transformed Image Network (BWHIN), which is a combination of Binary Weight Network (BWN) and Hadamard-transformed Image Network (HIN). It is observed that energy efficiency is achieved with a slight sacrifice in classification accuracy. Among energy-efficient networks, our novel ensemble model outperforms the other models. …

Apache Pulsar: Pulsar is a distributed pub-sub messaging platform with a very flexible messaging model and an intuitive client API. …

TuckER: Knowledge graphs are structured representations of real-world facts. However, they typically contain only a small subset of all possible facts. Link prediction is the task of inferring missing facts based on existing ones. We propose TuckER, a relatively simple but powerful linear model based on Tucker decomposition of the binary tensor representation of knowledge graph triples. TuckER outperforms all previous state-of-the-art models across standard link prediction datasets. We prove that TuckER is a fully expressive model, deriving the bound on its entity and relation embedding dimensionality for full expressiveness, which is several orders of magnitude smaller than the bound of previous state-of-the-art models ComplEx and SimplE. We further show that several previously introduced linear models can be viewed as special cases of TuckER. …

https://bit.ly/3c1HP7R


Trump voter in immigration dilemma: We lied to our son – CNN Video