#smartproxy

Explore tagged Tumblr posts

Text

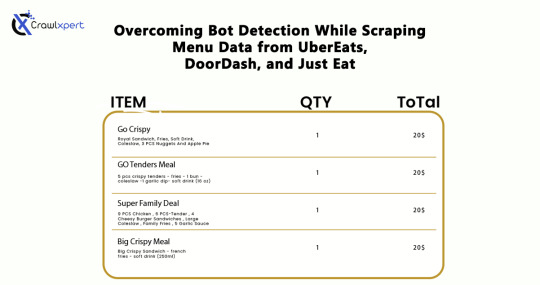

Overcoming Bot Detection While Scraping Menu Data from UberEats, DoorDash, and Just Eat

Introduction

Web scraping is well suited to collecting menu data from food delivery platforms such as UberEats, DoorDash, and Just Eat. However, these websites use elaborate bot detection methods to stop automated data collection. Getting past them calls for advanced scraping techniques: rotating IPs, headless browsing, CAPTCHA solving, and AI-based methods.

This guide discusses how to bypass bot detection when scraping menu data, the challenges involved, and best practices for seamless and ethical data extraction.

Understanding Bot Detection on Food Delivery Platforms

1. Common Bot Detection Techniques

Food delivery platforms use various methods to block automated scrapers:

IP Blocking – Detects repeated requests from the same IP and blocks access.

User-Agent Tracking – Inspects the User-Agent header and blocks requests whose client string is missing or typical of automation tools.

CAPTCHA Challenges – Requires solving puzzles to verify human presence.

JavaScript Challenges – Serves scripts that a real browser must execute, which catches clients that fetch pages without running JavaScript.

Behavioral Analysis – Tracks mouse movements, scrolling, and keystrokes to differentiate bots from humans.

2. Rate Limiting and Request Patterns

Platforms monitor the frequency of requests coming from a specific IP or user session. If a scraper makes too many requests within a short time frame, it triggers rate limiting, causing the scraper to receive 403 Forbidden or 429 Too Many Requests errors.
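One common way for a client to react to these responses is to slow down rather than retry immediately. The following is a minimal sketch of exponential backoff with jitter, assuming the caller supplies a `fetch` callable that returns an HTTP status code; the function names, retry count, and delay bounds are illustrative assumptions, not values taken from any platform.

```python
import random
import time
from typing import Callable, Optional

# Status codes the text above associates with rate limiting.
RETRYABLE = {403, 429}


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 60.0) -> float:
    """Exponential backoff with full jitter: a random delay in
    [0, min(cap, base * 2**attempt)] seconds."""
    return random.uniform(0, min(cap, base * (2 ** attempt)))


def fetch_with_backoff(
    fetch: Callable[[], int],
    max_retries: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[int]:
    """Call fetch() until it returns a non-retryable status or retries run out.

    Returns the final status code, or None if every attempt was rate limited.
    """
    for attempt in range(max_retries + 1):
        status = fetch()
        if status not in RETRYABLE:
            return status
        if attempt < max_retries:
            sleep(backoff_delay(attempt))
    return None
```

Passing `sleep` as a parameter keeps the function easy to test without real waiting; in a real scraper `fetch` would wrap the HTTP call.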

3. Device Fingerprinting

Many websites use sophisticated techniques to detect unique attributes of a browser and device. This includes screen resolution, installed plugins, and system fonts. If a scraper's fingerprint matches a known bot signature, it gets flagged.

Techniques to Overcome Bot Detection

1. IP Rotation and Proxy Management

Using a pool of rotating IPs helps avoid detection and blocking.

Use residential proxies instead of data center IPs.

Rotate IPs with each request to simulate different users.

Leverage proxy providers like Bright Data, ScraperAPI, and Smartproxy.

Implement session-based IP switching (keeping one IP for the length of a session) where a flow needs persistence, such as pagination or login.
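The difference between per-request rotation and session-based switching can be sketched as follows. This is an illustrative sketch only: the proxy URLs are hypothetical placeholders, and `proxy_cycle` and `StickySessions` are names invented here. The dictionaries it yields have the shape accepted by the `proxies` argument of `requests.get`.

```python
import itertools
from typing import Dict, Iterator, List


def proxy_cycle(proxy_urls: List[str]) -> Iterator[Dict[str, str]]:
    """Yield proxy mappings in round-robin order, one per request."""
    for url in itertools.cycle(proxy_urls):
        # Route both plain and TLS traffic through the same proxy.
        yield {"http": url, "https": url}


class StickySessions:
    """Assign one proxy per logical session and reuse it for that session."""

    def __init__(self, proxy_urls: List[str]) -> None:
        self._pool = proxy_cycle(proxy_urls)
        self._by_session: Dict[str, Dict[str, str]] = {}

    def proxies_for(self, session_id: str) -> Dict[str, str]:
        # The first call for a session takes the next proxy from the pool;
        # later calls return the same mapping so the IP stays stable.
        if session_id not in self._by_session:
            self._by_session[session_id] = next(self._pool)
        return self._by_session[session_id]


# Hypothetical endpoints; a real pool would come from a proxy provider.
POOL = ["http://proxy-1.example:8000", "http://proxy-2.example:8000"]
```

Per-request rotation would call `next()` on `proxy_cycle(POOL)` before every request, while a multi-page flow would call `StickySessions(POOL).proxies_for("some-session-id")` so every page in the flow uses one IP.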

2. Mimic Human Browsing Behavior

To appear more human-like, scrapers should:

Introduce random time delays between requests.

Use browser automation tools like Puppeteer or Playwright, which drive headless browsers, to simulate real interactions.

Scroll pages and click elements programmatically to mimic real user behavior.

Randomize mouse movements and keyboard inputs.

Avoid loading pages at robotic speeds; introduce a natural browsing flow.
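The random delays mentioned in the list above might be sketched like this. The 2 to 6 second bounds are an arbitrary assumption for illustration, not a measured human browsing interval, and `paced` is a hypothetical helper name.

```python
import random
import time
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")


def human_delay(min_s: float = 2.0, max_s: float = 6.0) -> float:
    """Pick a random pause length between min_s and max_s seconds."""
    if min_s < 0 or max_s < min_s:
        raise ValueError("expected 0 <= min_s <= max_s")
    return random.uniform(min_s, max_s)


def paced(
    items: Iterable[T],
    min_s: float = 2.0,
    max_s: float = 6.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[T]:
    """Yield items one at a time, pausing a random interval between them."""
    for index, item in enumerate(items):
        if index > 0:  # no pause before the first item
            sleep(human_delay(min_s, max_s))
        yield item
```

A scraper loop could then read `for url in paced(urls): ...`, which also keeps the request rate modest for the target server.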

3. Bypassing CAPTCHA Challenges

Implement automated CAPTCHA-solving services like 2Captcha, Anti-Captcha, or DeathByCaptcha.

Use machine learning models to recognize and solve simple CAPTCHAs.

Avoid triggering CAPTCHAs by limiting request frequency and mimicking human navigation.

Employ AI-based CAPTCHA solvers that use pattern recognition to bypass common challenges.

4. Handling JavaScript-Rendered Content

Use Selenium, Puppeteer, or Playwright to interact with JavaScript-heavy pages.

Extract data directly from network requests instead of parsing the rendered HTML.

Load pages dynamically to prevent detection through static scrapers.

Emulate browser interactions by executing JavaScript code as real users would.

Cache previously scraped data to minimize redundant requests.
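Caching, the last point above, could look roughly like the following in-memory sketch. It assumes menu pages can be reused for a fixed time-to-live; `TTLCache` is a name made up for this example, and a production scraper would more likely persist the cache to disk or a database.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """Keep fetched page bodies for ttl_seconds to avoid repeat requests."""

    def __init__(
        self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic
    ) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}

    def get_or_fetch(self, url: str, fetch: Callable[[str], str]) -> str:
        now = self._clock()
        hit = self._store.get(url)
        # Serve the cached body while it is still fresh.
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]
        body = fetch(url)
        self._store[url] = (now, body)
        return body
```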

5. API-Based Extraction (Where Possible)

Some food delivery platforms offer APIs to access menu data. If available:

Check the official API documentation for pricing and access conditions.

Use API keys responsibly and avoid exceeding rate limits.

Combine API-based and web scraping approaches for optimal efficiency.

6. Using AI for Advanced Scraping

Machine learning models can help scrapers adapt to evolving anti-bot measures by:

Detecting and avoiding honeypots designed to catch bots.

Using natural language processing (NLP) to extract and categorize menu data efficiently.

Predicting changes in website structure to maintain scraper functionality.

Best Practices for Ethical Web Scraping

While overcoming bot detection is necessary, ethical web scraping ensures compliance with legal and industry standards:

Respect Robots.txt – Follow site policies on data access.

Avoid Excessive Requests – Scrape efficiently to prevent server overload.

Use Data Responsibly – Extracted data should be used for legitimate business insights only.

Maintain Transparency – If possible, obtain permission before scraping sensitive data.

Ensure Data Accuracy – Validate extracted data to avoid misleading information.
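The robots.txt check from the list above can be automated with Python's standard library. This sketch parses a robots.txt body that is already in memory; the sample rules and the `menu-bot` user agent are invented for illustration and do not reflect any real platform's policy.

```python
from urllib.robotparser import RobotFileParser


def allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content used only for this example.
SAMPLE_ROBOTS = """User-agent: *
Disallow: /checkout/
"""
```

With these sample rules, `allowed(SAMPLE_ROBOTS, "menu-bot", "https://example.com/store/menu")` is permitted while a URL under `/checkout/` is not. To check a live site, `RobotFileParser.set_url()` followed by `read()` fetches the file instead.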

Challenges and Solutions for Long-Term Scraping Success

1. Managing Dynamic Website Changes

Food delivery platforms frequently update their website structure. Strategies to mitigate this include:

Monitoring website changes with automated UI tests.

Using flexible XPath or attribute-based selectors instead of hard-coded element positions.

Implementing fallback scraping techniques in case of site modifications.
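A fallback strategy like the one above can be as simple as trying several selectors in order. The sketch below uses the standard library's ElementTree, which supports a limited XPath subset and needs well-formed markup; real pages would normally go through an HTML parser such as BeautifulSoup or lxml first. The attribute names in `PRICE_PATHS` are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List, Optional

# Ordered from most specific to most generic; all attribute values here
# are made-up examples, not selectors from any real site.
PRICE_PATHS = [
    ".//span[@data-testid='item-price']",
    ".//span[@class='price']",
    ".//*[@itemprop='price']",
]


def first_match(root: ET.Element, paths: List[str]) -> Optional[str]:
    """Return the text of the first path that matches a non-empty node."""
    for path in paths:
        node = root.find(path)
        if node is not None and node.text and node.text.strip():
            return node.text.strip()
    return None  # every selector failed: a signal that the layout changed
```

Logging which path matched, or that none did, gives an early warning when the site structure changes.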

2. Avoiding Account Bans and Detection

If scraping requires logging into an account, prevent bans by:

Using multiple accounts to distribute request loads.

Avoiding excessive logins from the same device or IP.

Randomizing browser fingerprints using tools like Multilogin.

3. Cost Considerations for Large-Scale Scraping

Maintaining an advanced scraping infrastructure can be expensive. Cost optimization strategies include:

Using serverless functions to run scrapers on demand.

Choosing affordable proxy providers that balance performance and cost.

Optimizing scraper efficiency to reduce unnecessary requests.

Future Trends in Web Scraping for Food Delivery Data

As web scraping evolves, new advancements are shaping how businesses collect menu data:

AI-Powered Scrapers – Machine learning models will adapt more efficiently to website changes.

Increased Use of APIs – Companies will increasingly rely on API access instead of web scraping.

Stronger Anti-Scraping Technologies – Platforms will develop more advanced security measures.

Ethical Scraping Frameworks – Legal guidelines and compliance measures will become more standardized.

Conclusion

UberEats, DoorDash, and Just Eat are challenging targets for menu data scraping, mainly because of their advanced bot detection systems. Nevertheless, by combining IP rotation, headless browsing, CAPTCHA solving, and JavaScript execution, augmented with AI tools, businesses can collect valuable data without tripping anti-scraping measures.

If you are looking for an automated and reliable web scraper, CrawlXpert specializes in tools and services that extract menu data efficiently while staying legally and ethically compliant. The right techniques, along with keeping up with recent trends in web scraping, will keep food delivery data collection successful over the long term.

Know More : https://www.crawlxpert.com/blog/scraping-menu-data-from-ubereats-doordash-and-just-eat


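Best Taobao Data Scraping Tools to Power E-commerce Growth in 2025

Taobao is one of the largest and most dynamic online marketplaces in the world, with a fast-growing ecosystem of millions of products, highly competitive prices, and a very active customer base. For e-commerce entrepreneurs and businesses worldwide, Taobao is a treasure trove of opportunity waiting to be explored. However, manually browsing this vast ecosystem and extracting insights from it is time-consuming and inefficient. This is where a Taobao data scraper changes things: it lets users automate and accelerate data collection.

In today's fiercely competitive digital economy, data is the most valuable asset. From analyzing market trends to interpreting consumer behavior to tracking competitors, timely and accurate data helps businesses make smarter decisions. Taobao offers a large, dynamic database of real-time e-commerce activity, and deploying a reliable Taobao data scraper can help businesses stay ahead, streamline operations, and improve profitability.

This blog explores why Taobao data scrapers are essential to e-commerce growth in 2025. We will look at how they work, which features to prioritize, and how to use such tools to transform your product sourcing, market analysis, and pricing strategy. Whether you are scaling a startup or managing a global retail brand, the insights gained through effective data scraping will be your path to informed, data-driven success.

Understanding Taobao Scrapers

A Taobao data scraper is a tool designed to extract structured data from Taobao.com, China's largest C2C online marketplace. For e-commerce businesses, data analysts, and digital marketers, it is a valuable resource for understanding Taobao's vast product intelligence ecosystem.

Unlike traditional manual research, a Taobao product data scraper automates the process by navigating the Taobao site, identifying key HTML elements, and collecting key data such as product names, prices, stock levels, ratings, and customer reviews. This makes it easier to track trends, monitor competitors, and optimize business strategy.

Extracted data can be exported to convenient formats such as CSV or Excel for analysis or direct integration into your e-commerce platform. By automating data extraction, a Taobao data scraper helps businesses make better data-driven decisions quickly and efficiently. With competition intensifying in 2025, it is essential to use scrapers that follow ethical norms and comply with the site's terms of service, which keeps data collection safe and scalable.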

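Why E-commerce Players Need a Taobao Data Scraper

In the fast-paced world of digital commerce, data is not just useful, it is essential. For businesses targeting rapid growth in 2025, a Taobao data scraper is no longer a luxury but a competitive necessity. It helps e-commerce sellers tap Taobao's vast product database and gain an edge in sourcing, pricing, and product selection.

Here is why e-commerce businesses are turning to Taobao data scraping:

Access to real-time market trends: With millions of active listings, Taobao is a gold mine of consumer behavior data. A Taobao data scraper lets you track popular items, overhyped items, and what buyers actually want as it happens.

Smarter product sourcing: Instead of relying on guesswork, businesses can identify high-performing products by scraping reviews, ratings, and sales rank data. This minimizes risk and helps prioritize products that are more likely to succeed.

Competitive pricing strategy: E-commerce success depends on pricing flexibility. A scraper lets you monitor competitor prices and adjust your own listings to maximize profit without losing appeal.

Bulk data for listing optimization: Whether you manage hundreds or thousands of SKUs, a Taobao data scraper simplifies content collection (titles, images, specifications), helping you build listings faster and more accurately.

Speed and scalability: Manual research is slow and error-prone. Scrapers run around the clock, so you can scale product research and updates easily.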

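Key Use Cases for Taobao Scrapers

The rise of AI-driven business models and global online retail competition has made data-driven decision-making a prerequisite for success. Taobao data scrapers play a vital role in empowering e-commerce businesses by surfacing actionable insights from China's largest online shopping platform. Here are six important use cases:

1. Price Monitoring

In e-commerce, keeping prices in line with competitors is critical. With a Taobao data scraper, sellers can track competitor pricing across thousands of product listings in real time. This lets brands adjust pricing dynamically, stay competitive, and improve margins without constant manual checks.

2. Market Research

Understanding consumer behavior and market trends is essential to growth. Through Taobao data scraping, businesses can extract large volumes of product data (including keywords, sales volumes, and customer preferences) and make informed decisions. This supports everything from product planning to strategic marketing campaigns.

3. Product Listing Optimization

Optimized product listings improve visibility and conversion rates. With a Taobao data scraper, brands can extract top-ranking product titles, bullet points, and descriptions. This information is valuable for rewriting or refining listings on your own e-commerce platform, which helps improve search performance and engagement.

4. Inventory Management

Managing inventory efficiently prevents lost sales and overstock. With a Taobao China data scraper, businesses can monitor stock levels and availability on the platform. These insights let sellers adjust sourcing strategy in real time and avoid supply problems or unnecessary warehousing costs.

5. Customer Sentiment Analysis

User reviews and ratings on Taobao reveal key information about product performance. Using e-commerce product data scraping services, businesses can analyze customer sentiment, identify common complaints or praise, and improve product development or customer service accordingly.

6. Product Comparison

Informed customers want to compare before they buy. Using product data scraping services, businesses can collect and compare key information such as prices, features, images, and specifications across multiple Taobao sellers or platforms. This makes it possible to build tools or landing pages that help users make purchase decisions faster and with more confidence.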

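7 Best Taobao Data Scraper Providers

Finding the right Taobao data scraper is the key to unlocking valuable product insights and gaining an edge in the e-commerce market. Below are 7 top global scraper providers that can help businesses extract structured Taobao product data such as product details, prices, reviews, and stock, without writing code.

1. TagX

TagX is a trusted provider of scalable Taobao data scraping services for e-commerce brands worldwide. Whether you need pricing intelligence, product insights, reviews, or stock updates, TagX delivers clean, structured datasets tailored for business use. They specialize in multilingual scraping and are known for handling large datasets quickly and accurately.

Key features:

Custom Taobao data scrapers built for needs at scale.

Product details, prices, reviews, and stock availability.

Multilingual support and data quality assurance.

Well suited to competitor research and SEO optimization.

Pricing:

Custom, based on data volume and frequency

Contact TagX for a free consultation or demo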

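2. Octoparse

Octoparse is a user-friendly, no-code web scraping tool widely used to extract data from e-commerce platforms such as Taobao. It offers both cloud and local scraping, along with point-and-click scraping, automatic scheduling, and IP rotation. Octoparse is a good fit for users who want to scrape at scale without writing scripts.

Key features:

Pre-built Taobao data scraping templates.

Cloud and local data extraction.

Scheduled tasks and smart auto-detection.

Scrapes product details, images, prices, and more.

Pricing:

Free plan: limited features

Standard plan: $89/month

Professional plan: $249/month

3. ParseHub

ParseHub is a powerful visual scraping tool designed for dynamic, JavaScript-heavy websites such as Taobao. Its intuitive interface lets users scrape multiple product pages, prices, titles, and even customer reviews. It also supports automated workflows and data export in several formats.

Key features:

Supports AJAX and dynamic pages.

Exports to Excel or JSON, or via API.

Pagination and advanced selection tools.

Cloud and local runs.

Pricing:

Free plan: basic features

Standard plan: $189/month

Team plan: custom pricing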

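4. WebHarvy

WebHarvy is Windows-based scraping software that uses a built-in browser to extract data visually from websites such as Taobao. It is ideal for users who want a simple desktop tool that requires no programming. It supports smart patterns and regular expressions for structured data scraping.

Key features:

Point-and-click scraping interface.

Proxy support and scheduled scraping.

Scrapes text, images, links, and prices.

Works across multiple product pages.

Pricing:

Standard license: $139 (one-time)

Enterprise license: $349 (includes scheduler + proxy rotation)

5. Bright Data

Bright Data (formerly Luminati) offers an enterprise-grade scraping platform with powerful visual scraping tools and a large proxy infrastructure. It is well suited to high-volume Taobao scraping and to extracting data smoothly from complex site structures. Bright Data is trusted by large enterprises and data professionals.

Key features:

Built-in browser automation.

Residential and rotating proxies.

Anti-bot bypass and unblocking.

Real-time data extraction.

Pricing:

Pay-as-you-go or subscription plans.

Starter plan: from $500/month.

Custom enterprise pricing available.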

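6. Smartproxy

Smartproxy is a trusted proxy solution known for smooth, secure access to data-heavy sites such as Taobao. Although it is not a scraper itself, it works seamlessly with a range of Taobao scraping tools to raise success rates and avoid IP bans. Smartproxy is a good fit for businesses that need reliability at scale, and it offers residential and datacenter IP addresses worldwide.

Key features:

More than 65 million high-quality residential IP addresses.

User-friendly dashboard and API access.

Advanced session control and geo-targeting.

Well suited to integration with Taobao scraping tools.

Pricing:

Pay as you go: from $8.50/GB.

Micro plan: $30/month (2 GB).

Business plans available on request.

7. Oxylabs

Oxylabs is a premium proxy and web scraping provider that offers custom solutions for enterprise-grade Taobao scraping. It provides both raw data access through proxies and ready-to-use scraper APIs. With built-in rotation, anti-ban measures, and smart parsing, it is a strong choice for extracting product-level insights from complex marketplaces.

Key features:

More than 102 million residential and datacenter proxies.

Dedicated scraper APIs with anti-bot logic.

Automatic parsing and structured data delivery.

Scalable enterprise scraping infrastructure.

Pricing:

Residential proxies: from $99/month.

Scraper API: custom pricing based on usage.

Free trial and consultation available.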

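Data Types Provided by the TagX Taobao Data Scraper

Our Taobao data scraper provides detailed data points for each product to help you gather valuable business insights. With our Taobao data scraping service, you can collect and analyze key information efficiently and make better business decisions. Key data points include:

Product name: The title or name of the product, so you have an accurate identifier for every listing.

Seller name: Information about the seller offering the product, including shop details and seller rating, to help assess credibility.

Currency: The currency in which the product is priced, so businesses can make accurate financial assessments for different markets.

Discount: Information on any available discounts or offers, including seasonal or special promotions that may affect pricing strategy.

Price: The product's current price, which keeps you up to date with the market and lets you adjust your strategy accordingly.

Stock availability: Whether the product is in stock, which helps you track product demand and supply chain efficiency.

Reviews: Customer ratings and reviews, which give insight into product quality and help you identify potential problems or popular features.

Category: The category the product belongs to, which helps you understand its market positioning and its relevance to your own products.

Brand: The brand associated with the product, which supports brand-specific analysis and identifies popular brands within a category.

Shipping information: Details on delivery options and costs, including shipping methods and delivery times, which are essential for calculating total cost and expected delivery.

Product description: A detailed description of the product's features and specifications, giving full information to assess how well it matches customer needs.

Product images: Links to product images, which provide a visual reference for product comparison and a better customer experience.

URL: The direct URL of the product page, for quick access to the product for further analysis or competitor tracking.

ASIN: The unique identifier for Amazon product listings (where applicable), which lets businesses cross-reference data across platforms and supports a more effective multichannel strategy.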
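To show how data points like these might be held and exported, here is a minimal sketch of a record type with CSV output. It is not TagX's actual schema: `ProductRecord`, its field names, and the subset of fields chosen are assumptions made for illustration.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import List, Optional


@dataclass
class ProductRecord:
    """One scraped listing; optional fields stay None when not available."""
    name: str
    seller: str
    currency: str
    price: float
    url: str
    discount: Optional[float] = None
    in_stock: Optional[bool] = None
    category: Optional[str] = None
    brand: Optional[str] = None


def to_csv(records: List[ProductRecord]) -> str:
    """Serialize records to CSV text with one column per field."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=[f.name for f in fields(ProductRecord)],
        lineterminator="\n",
    )
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))  # None values become empty cells
    return buffer.getvalue()
```

A fixed record type makes missing fields explicit and keeps the CSV column order stable across runs.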

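Conclusion

In 2025, reliable and accurate Taobao data is essential for businesses that want to thrive in a highly competitive e-commerce environment. Whether you want to optimize product listings, track competitor pricing, or gather valuable market insights, using the right Taobao data scraper can bring a significant advantage.

The 7 Taobao data scraper providers covered in this blog each offer distinct features and pricing plans to meet a wide range of business needs, from small startups to large enterprises. It is important to assess your specific requirements, such as the volume of data you need, your budget, and whether you prefer DIY scraping or a fully managed service.

At TagX, we provide tailored Taobao data scraping services designed to help e-commerce brands simplify data extraction and make full use of their product data. With our advanced technology, multilingual support, and scalable solutions, we make sure businesses can use real-time, accurate Taobao data to stay ahead of the competition.

Ready to use reliable Taobao data to grow your e-commerce business? Contact TagX today for a custom demo or consultation and move toward data-driven success!

Original source: https://www.tagxdata.com/best-taobao-data-scraper-for-e-commerce-growth-2025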


How to Choose the Best Proxy Marketing Platform?

Selecting the optimal proxy marketing platform requires balancing multiple factors: IP pool diversity, rotation speed, geo-location targeting, security and compliance, integration capabilities, and transparent pricing. Advertisers leverage proxies to anonymize traffic, conduct localized ad verification, bypass geo-restrictions, and optimize campaign performance through real-time testing and monitoring. A robust proxy marketing strategy aligns proxy types (residential, datacenter, mobile) with campaign objectives such as VPN advertising or software marketing, while integrating with top ad networks like 7Search PPC to maximize reach and ROI. This guide explores proxy marketing fundamentals, key platform features, best practices for proxy-powered campaigns, real-world software advertisement examples, emerging technology advertising trends, and how 7Search PPC can amplify your proxy-based advertising efforts.

Understanding Proxy Marketing Platforms

What Is a Proxy Marketing Platform?

A proxy marketing platform is a service that routes advertising traffic through intermediary servers—known as proxies—to mask original IP addresses, enabling geographic targeting, ad verification, and data collection without revealing the advertiser’s or user’s true location. By rotating proxies across a diverse IP pool, these platforms help advertisers conduct A/B tests, monitor ad placements, and gather competitor insights at scale without triggering anti‑fraud mechanisms.


Why Proxy Marketing Matters for Advertisers

Proxy marketing empowers advertisers to verify how ads render in different regions, detect ad fraud, and optimize bids by simulating real-user behavior. It also supports privacy-first strategies, ensuring compliance with data protection regulations by concealing end-user IPs while still delivering personalized content.

Key Features of Top Proxy Marketing Platforms

Robust IP Pool and Rotation

A high‑quality platform offers vast pools of residential, datacenter, and mobile proxies, ensuring reliable access and reduced risk of IP blocks. Automatic rotation mechanisms switch IPs at configurable intervals, maintaining anonymity and preventing detection during long‑running campaigns.

Types of Proxies

Residential Proxies: Originating from real user devices, they are harder for ad platforms to flag and block.

Datacenter Proxies: Offer high speed and low cost but may be more easily identified as non-residential.

Mobile Proxies: Provide the highest anonymity by leveraging ISP-assigned IPs on cellular networks.

Geotargeting and Location Support

Effective platforms allow precise location targeting down to city or ZIP code levels, critical for VPN advertising campaigns that require local ad delivery. Geo-specific proxies ensure ads appear genuine to target audiences in regulated or restricted markets.

Integration with Advertising Networks

Seamless integration with ad networks, such as 7Search PPC, enables direct campaign deployment, performance tracking, and optimization within the proxy platform dashboard. This integration streamlines workflows for software marketing and technology advertising, consolidating proxy and ad operations in one interface.

Security and Compliance Features

Leading platforms incorporate built-in compliance tools, like GDPR and CCPA filters, to mask sensitive user data and avoid legal penalties. They also offer encrypted connections (HTTPS/SSL), two-factor authentication, and detailed audit logs to safeguard campaign integrity and data privacy.

Proxy Marketing Strategy: Best Practices

Aligning Proxies with Campaign Goals

Identify campaign objectives—fraud detection, localized ad verification, or targeted testing—and select proxy types accordingly. Residential proxies excel for VPN advertising audits, while datacenter proxies work for high‑volume scraping of software advertisement examples.

Using Residential vs. Datacenter Proxies

Residential proxies reduce block rates on premium platforms but come at a higher cost; datacenter proxies are ideal for bulk operations where occasional blocks are acceptable. Mobile proxies combine the benefits of both, providing strong anonymity for mobile-first campaigns.

Proxy Marketing Strategy for VPN Advertising

When promoting VPN services, use proxies from your target markets to ensure ads display optimally and comply with regional policies. Geo‑locked content tests with local proxies help refine ad creatives and landing pages for market‑specific software marketing campaigns.

Combining Proxy Marketing with Software Marketing

Leverage proxy‑driven A/B testing to identify top‑performing software advertisement examples. Rotate proxies to simulate diverse user environments, collect engagement metrics, and refine ad copy and creatives based on real‑world feedback.

Evaluating Proxy Marketing Platforms

Performance Metrics: Speed, Reliability, Uptime

Benchmark platforms on proxy response times (ideally under 200 ms), uptime guarantees (99.9%+), and success rates for requests. Tools to visualize performance trends help identify and troubleshoot network issues proactively.
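A benchmark along these lines could be computed from a list of request samples, as in the sketch below. The 200 ms target comes from the text above; using the 95th percentile as the latency statistic and 95% as the minimum success rate are assumptions chosen for illustration, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

# One sample per request: (request succeeded, latency in milliseconds).
Sample = Tuple[bool, float]


def success_rate(samples: List[Sample]) -> float:
    """Fraction of requests that succeeded (0.0 for an empty list)."""
    if not samples:
        return 0.0
    return sum(1 for ok, _ in samples if ok) / len(samples)


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def meets_targets(
    samples: List[Sample],
    max_p95_ms: float = 200.0,   # latency target from the text
    min_success: float = 0.95,   # assumed threshold, not from the text
) -> bool:
    """Check p95 latency of successful requests and overall success rate."""
    latencies = [ms for ok, ms in samples if ok]
    if not latencies:
        return False
    return (
        percentile(latencies, 95) < max_p95_ms
        and success_rate(samples) >= min_success
    )
```

Only successful requests count toward latency here, so a proxy that fails fast does not look artificially quick.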

Scalability and Pricing Models

Choose a platform that offers flexible pricing—pay‑as‑you‑go, tiered subscriptions, or enterprise plans—with transparent overage rates to accommodate campaign growth without budget surprises.

Customer Support and Documentation

Robust API documentation, developer SDKs, and 24/7 support channels (chat, email, ticketing) are essential for quickly resolving integration challenges and optimizing proxy marketing workflows.

Software Advertisement Examples with Proxy Platforms

Case Study: Technology Advertising Campaigns

A cybersecurity firm used residential proxies to conduct geo‑specific ad verification across 10 countries, uncovering creative rendering issues that, once fixed, boosted click‑through rates by 25%.

Example: Promoting VPN Services with Proxies

By routing test traffic through local proxies, a VPN provider ensured its display ads aligned with regional privacy regulations and tailored messaging, increasing conversion rates by 18% in target markets.

Example: Advertising Software Solutions

An enterprise software vendor leveraged datacenter proxies to run large‑scale ad fatigue tests, identifying optimal frequency caps that reduced ad spend waste by 12% while maintaining ROI.

Technology Advertising Trends

Rise of VPN Advertising

With global privacy concerns at an all-time high, VPN advertising spend is projected to grow by 20% year-over-year as consumers seek enhanced online security. Ad formats like native and in-page push are gaining traction for unobtrusive, high-engagement VPN campaigns.

Innovative Use Cases for Proxy Marketing

Marketers are experimenting with AI‑driven proxy rotation algorithms to optimize IP selection based on time‑of‑day traffic patterns and user demographics, significantly improving ad delivery precision.

How 7Search PPC Enhances Your Proxy Marketing Efforts

7Search PPC Overview

7Search PPC is a specialized ad network catering to advertisers in high-privacy niches like VPNs, proxies, and security software. It offers display, native, and push formats optimized for proxy-powered campaigns.

Features Tailored for Proxy‑Based Campaigns

Built-in geolocation controls, real-time analytics, and a proprietary API allow seamless integration with leading proxy platforms, enabling advertisers to deploy and monitor proxy-driven campaigns directly through 7Search PPC's dashboard.

Success Stories and ROI

Advertisers on 7Search PPC report an average 30% uplift in click‑through rates and a 22% reduction in cost‑per‑acquisition when combining proxy verification with targeted VPN advertising strategies.

Conclusion

Choosing the best proxy marketing platform hinges on matching your campaign goals with platform capabilities—diverse IP pools, precise geotargeting, performance reliability, and seamless integration with ad networks like 7Search PPC. By adopting a strategic approach to proxy selection, aligning proxy types with campaign needs, and leveraging real‑world software advertisement examples, you can enhance ad verification, optimize spend, and unlock new opportunities in VPN advertising, software marketing, and technology advertising.

Frequently Asked Questions (FAQs)

What is the difference between residential, datacenter, and mobile proxies?

Ans. Residential proxies use real user IPs and are harder to detect; datacenter proxies offer speed and volume at lower cost but can be flagged; mobile proxies use cellular networks for maximum anonymity.

How do proxies improve VPN advertising campaigns?

Ans. Proxies enable localized ad rendering tests, ensure compliance with regional policies, and help tailor creatives for audience segments, boosting relevance and conversions.

Can proxy marketing platforms integrate with any ad network?

Ans. Most top platforms provide APIs and plugins for popular networks; 7Search PPC offers built‑in integration for seamless campaign deployment and analytics.

What metrics should I track when using proxies for software marketing?

Ans. Monitor proxy response time, error rates, ad viewability, click‑through rates, and conversion metrics to assess proxy performance and ad effectiveness.

Are proxy servers legal for advertising purposes?

Ans. Yes, when used ethically for ad verification, compliance testing, and market research. Make sure you comply with local laws and platform terms to avoid misuse.


Smartproxy vs Proxy4U : Which Offers Better Performance?


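Which Debugging Tools Does a Spider Pool Need? TG@yuantou2048

When debugging a spider pool, choosing the right tools can greatly improve efficiency. Here are some commonly used debugging tools and what they are for:

1. Log analysis tools: used to monitor and analyze the logs a crawler produces at run time, helping developers locate problems quickly. Common tools include Logstash and the ELK Stack.

2. Proxy management tools: crawlers often need proxies to avoid having their IPs banned by target sites, so a good proxy management tool is essential. For example, ProxyRack and Smartproxy can help manage proxies and test that they work.

3. Performance monitoring tools: such as New Relic or Prometheus, which monitor the crawler's running state in real time to keep it stable and efficient.

4. Packet capture tools: such as Wireshark or Fiddler, which let you inspect network requests and responses and are very helpful when debugging a crawler.

5. Database management tools: if the crawler stores data in a database, a good database management tool (such as DBeaver or MySQL Workbench) helps you manage the database and optimize queries.

6. Code debuggers: Python's pdb or Chrome DevTools, which help you debug code and find errors or bottlenecks.

7. Distributed task scheduling systems: Scrapy-Redis or Celery, for scheduling and executing large-scale crawl jobs.

8. Automated testing tools: Selenium or PyTest, for simulating user behavior and verifying that the crawler captured the required data correctly.

9. Version control tools: Git, for version control and collaborative development.

10. Anti-crawler handling tools: some sites set up anti-crawler mechanisms, and a tool like Selenium can simulate browser behavior to get past some simple anti-crawler measures.

11. Data analysis tools: such as Jupyter Notebook, which supports writing and debugging Python scripts and is especially suited to complex logic and data cleaning.

12. Web crawling frameworks: such as Scrapy, a powerful crawling framework with built-in HTTP request handling that makes crawler projects easier to debug and maintain.

13. Data visualization tools: such as Tableau or Power BI, for analyzing crawled data and checking its accuracy and completeness.

14. API testing tools: Postman, for testing API endpoints to make sure the crawler parses and extracts data correctly.

15. Virtualized (containerized) environments: such as Docker, for creating isolated development environments so behavior is consistent across environments.

16. Code quality checkers: such as PyLint or Flake8, to keep code quality and stability high.

17. Concurrency testing tools: such as Locust, for simulating large numbers of concurrent requests and checking how the crawler behaves under high load.

18. Logging tools: such as Loguru, for recording and tracing the crawler's activity to make debugging and troubleshooting easier.

19. IDEs and editors: such as PyCharm or VS Code, which provide code editing, static analysis, and debugging features that improve development efficiency.

20. Exception tracking tools: such as Sentry, for monitoring exceptions while the crawler runs, including failures on dynamically loaded content.

21. Data extraction tools: such as BeautifulSoup or Scrapy, for parsing HTML pages and extracting the required data.

22. Performance testing tools: such as Apache JMeter, for performance testing to make sure the crawler runs stably in multi-threaded or multi-process environments.

I hope this list of tools is helpful!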


A Step-by-Step Guide to Web Scraping Walmart Grocery Delivery Data

Introduction

In today's data-driven marketplace, real-time access to grocery delivery data drives pricing strategy and helps businesses track market changes and competitor activity. Walmart Grocery Delivery, one of the largest online grocery retailers, exposes this kind of data on its site, including product details, prices, availability, and delivery times. Scraping Walmart Grocery Delivery data can give a business detailed intelligence on consumer behavior, price fluctuations, and inventory changes.

This guide covers what you need to know about web scraping Walmart Grocery Delivery data, from tools and techniques to challenges and best practices. We'll also explore why CrawlXpert offers a dependable way to collect reliable, large-scale Walmart data.

1. What is Walmart Grocery Delivery Data Scraping?

Walmart Grocery Delivery data scraping is the collection of product and delivery information from Walmart's online grocery delivery service. It involves accessing the site's HTML content programmatically and parsing it for key data points.

Key Data Points You Can Extract:

Product Listings: Names, descriptions, categories, and specifications.

Pricing Data: Current price, original price, and promotional discounts.

Delivery Information: Availability, delivery slots, and estimated delivery times.

Stock Levels: In-stock, out-of-stock, or limited availability status.

Customer Reviews: Ratings, review counts, and customer feedback.

2. Why Scrape Walmart Grocery Delivery Data?

Scraping Walmart Grocery Delivery data provides valuable insights and enables data-driven decision-making for businesses. Here are the primary use cases:

a) Competitor Price Monitoring

Track Pricing Trends: Extracting Walmart’s pricing data enables you to track price changes over time.

Competitive Benchmarking: Compare Walmart’s pricing with other grocery delivery services.

Dynamic Pricing: Adjust your pricing strategies based on real-time competitor data.

b) Market Research and Consumer Insights

Product Popularity: Identify which products are frequently purchased or promoted.

Seasonal Trends: Track pricing and product availability during holiday seasons.

Consumer Sentiment: Analyze reviews to understand customer preferences.

c) Inventory and Supply Chain Optimization

Stock Monitoring: Identify frequently out-of-stock items to detect supply chain issues.

Demand Forecasting: Use historical data to predict future demand and optimize inventory.

d) Enhancing Marketing and Promotions

Targeted Advertising: Leverage scraped data to create personalized marketing campaigns.

SEO Optimization: Enrich your website with detailed product descriptions and pricing data.

3. Tools and Technologies for Scraping Walmart Grocery Delivery Data

To efficiently scrape Walmart Grocery Delivery data, you need the right combination of tools and technologies.

a) Python Libraries for Web Scraping

BeautifulSoup: Parses HTML and XML documents for easy data extraction.

Requests: Sends HTTP requests to retrieve web page content.

Selenium: Automates browser interactions, useful for dynamic pages.

Scrapy: A Python framework designed for large-scale web scraping.

Pandas: For data cleaning and storing scraped data into structured formats.

b) Proxy Services to Avoid Detection

Bright Data: Reliable IP rotation and CAPTCHA-solving capabilities.

ScraperAPI: Automatically handles proxies, IP rotation, and CAPTCHA solving.

Smartproxy: Provides residential proxies to reduce the chances of being blocked.

c) Browser Automation Tools

Playwright: Automates browser interactions for dynamic content rendering.

Puppeteer: A Node.js library that controls a headless Chrome browser.

d) Data Storage Options

CSV/JSON: Suitable for smaller-scale data storage.

MongoDB/MySQL: For large-scale structured data storage.

Cloud Storage: AWS S3, Google Cloud, or Azure for scalable storage.

4. Building a Walmart Grocery Delivery Scraper

a) Install the Required Libraries

First, install the necessary Python libraries:

pip install requests beautifulsoup4 selenium pandas

b) Inspect Walmart’s Website Structure

Open Walmart Grocery Delivery in your browser.

Right-click → Inspect → Select Elements.

Identify product containers, pricing, and delivery details.

c) Fetch the Walmart Delivery Page

import requests
from bs4 import BeautifulSoup

url = 'https://www.walmart.com/grocery'
headers = {'User-Agent': 'Mozilla/5.0'}
response = requests.get(url, headers=headers)
soup = BeautifulSoup(response.content, 'html.parser')

d) Extract Product and Delivery Data

# Class names are the ones used in this example; check them against
# the elements you identified when inspecting the page.
products = soup.find_all('div', class_='search-result-gridview-item')
data = []
for product in products:
    try:
        title = product.find('a', class_='product-title-link').text
        price = product.find('span', class_='price-main').text
        availability = product.find('div', class_='fulfillment').text
        data.append({'Product': title, 'Price': price, 'Delivery': availability})
    except AttributeError:
        # find() returned None for a missing element; skip this item
        continue

5. Bypassing Walmart’s Anti-Scraping Mechanisms

Walmart uses anti-bot measures like CAPTCHAs and IP blocking. Here are strategies to bypass them:

a) Use Proxies for IP Rotation

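Rotating IP addresses reduces the risk of being blocked.

# requests picks the proxy by URL scheme, so an https:// page needs an 'https' entry
proxies = {
    'http': 'http://user:pass@proxy-server:port',
    'https': 'http://user:pass@proxy-server:port',
}
response = requests.get(url, headers=headers, proxies=proxies)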

b) Use User-Agent Rotation

import random

user_agents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64)',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)'
]
headers = {'User-Agent': random.choice(user_agents)}

c) Use Selenium for Dynamic Content

from selenium import webdriver

options = webdriver.ChromeOptions()
options.add_argument('--headless')
driver = webdriver.Chrome(options=options)
driver.get(url)
data = driver.page_source
driver.quit()
soup = BeautifulSoup(data, 'html.parser')

6. Data Cleaning and Storage

Once you've scraped the data, clean and store it:

import pandas as pd

df = pd.DataFrame(data)
df.to_csv('walmart_grocery_delivery.csv', index=False)
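The cleaning step itself might look like the following sketch, which normalizes the `Product` and `Price` fields produced by the extraction code before they are stored. The price formats handled (a currency symbol, thousands separators, up to two decimals) are assumptions about what the scraped text looks like, and `parse_price` and `clean_rows` are hypothetical helper names.

```python
import re
from typing import Dict, List, Optional

# Digits with optional thousands separators, then optional cents.
_PRICE_RE = re.compile(r"(\d{1,3}(?:,\d{3})+|\d+)(?:\.(\d{1,2}))?")


def parse_price(text: str) -> Optional[float]:
    """Extract the first price-like number from text, or None if absent."""
    match = _PRICE_RE.search(text or "")
    if not match:
        return None
    whole = match.group(1).replace(",", "")
    cents = match.group(2) or "0"
    return float(f"{whole}.{cents}")


def clean_rows(rows: List[Dict[str, str]]) -> List[Dict[str, object]]:
    """Strip titles, convert prices to floats, and drop unusable rows."""
    cleaned = []
    for row in rows:
        title = (row.get("Product") or "").strip()
        price = parse_price(row.get("Price", ""))
        if title and price is not None:
            cleaned.append({**row, "Product": title, "Price": price})
    return cleaned
```

The cleaned list can be passed to `pd.DataFrame` in place of the raw `data`, so the CSV holds numeric prices rather than strings.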

7. Why Choose CrawlXpert for Walmart Grocery Delivery Data Scraping?

While building your own Walmart scraper is possible, it comes with challenges, such as handling CAPTCHAs, IP blocking, and dynamic content rendering. This is where CrawlXpert excels.

Key Benefits of CrawlXpert:

Accurate Data Extraction: CrawlXpert provides reliable and comprehensive data extraction.

Scalable Solutions: Capable of handling large-scale data scraping projects.

Anti-Scraping Evasion: Uses advanced techniques to bypass CAPTCHAs and anti-bot systems.

Real-Time Data: Access fresh, real-time data with high accuracy.

Flexible Delivery: Data delivery in multiple formats (CSV, JSON, Excel).

Conclusion

Extracting and analyzing Walmart Grocery Delivery prices, trends, and consumer preferences can give a business a real competitive edge. But tools and techniques alone are not enough when a scraper runs up against Walmart's strong anti-scraping measures. Using an established service such as CrawlXpert helps ensure consistent, accurate, and compliant data extraction.

Know More : https://www.crawlxpert.com/blog/web-scraping-walmart-grocery-delivery-data
