#sqoop import example

Explore tagged Tumblr posts

Text

Big Data and Data Engineering

Big Data and Data Engineering are essential concepts in modern data science, analytics, and machine learning.

They focus on the processes and technologies used to manage and process large volumes of data.

Here’s an overview:

What is Big Data? Big Data refers to extremely large datasets that cannot be processed or analyzed using traditional data processing tools or methods.

It typically has the following characteristics:

Volume:

Huge amounts of data (petabytes or more).

Variety:

Data comes in different formats (structured, semi-structured, unstructured). Velocity: The speed at which data is generated and processed.

Veracity: The quality and accuracy of data.

Value: Extracting meaningful insights from data.

Big Data is often associated with technologies and tools that allow organizations to store, process, and analyze data at scale.

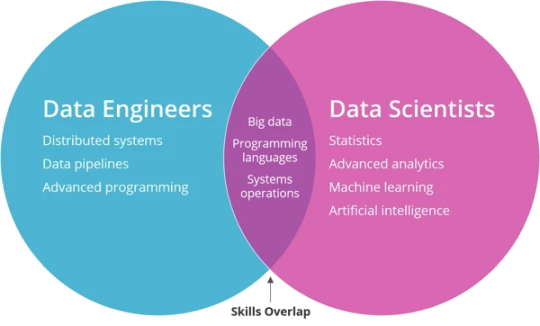

2. Data Engineering:

Overview Data Engineering is the process of designing, building, and managing the systems and infrastructure required to collect, store, process, and analyze data.

The goal is to make data easily accessible for analytics and decision-making.

Key areas of Data Engineering:

Data Collection:

��Gathering data from various sources (e.g., IoT devices, logs, APIs). Data Storage: Storing data in data lakes, databases, or distributed storage systems. Data Processing: Cleaning, transforming, and aggregating raw data into usable formats.

Data Integration:

Combining data from multiple sources to create a unified dataset for analysis.

3. Big Data Technologies and Tools

The following tools and technologies are commonly used in Big Data and Data Engineering to manage and process large datasets:

Data Storage:

Data Lakes: Large storage systems that can handle structured, semi-structured, and unstructured data. Examples include Amazon S3, Azure Data Lake, and Google Cloud Storage.

Distributed File Systems:

Systems that allow data to be stored across multiple machines. Examples include Hadoop HDFS and Apache Cassandra.

Databases:

Relational databases (e.g., MySQL, PostgreSQL) and NoSQL databases (e.g., MongoDB, Cassandra, HBase).

Data Processing:

Batch Processing: Handling large volumes of data in scheduled, discrete chunks.

Common tools:

Apache Hadoop (MapReduce framework). Apache Spark (offers both batch and stream processing).

Stream Processing:

Handling real-time data flows. Common tools: Apache Kafka (message broker). Apache Flink (streaming data processing). Apache Storm (real-time computation).

ETL (Extract, Transform, Load):

Tools like Apache Nifi, Airflow, and AWS Glue are used to automate data extraction, transformation, and loading processes.

Data Orchestration & Workflow Management:

Apache Airflow is a platform for programmatically authoring, scheduling, and monitoring workflows. Kubernetes and Docker are used to deploy and scale applications in data pipelines.

Data Warehousing & Analytics:

Amazon Redshift, Google BigQuery, Snowflake, and Azure Synapse Analytics are popular cloud data warehouses for large-scale data analytics.

Apache Hive is a data warehouse built on top of Hadoop to provide SQL-like querying capabilities.

Data Quality and Governance:

Tools like Great Expectations, Deequ, and AWS Glue DataBrew help ensure data quality by validating, cleaning, and transforming data before it’s analyzed.

4. Data Engineering Lifecycle

The typical lifecycle in Data Engineering involves the following stages: Data Ingestion: Collecting and importing data from various sources into a central storage system.

This could include real-time ingestion using tools like Apache Kafka or batch-based ingestion using Apache Sqoop.

Data Transformation (ETL/ELT): After ingestion, raw data is cleaned and transformed.

This may include:

Data normalization and standardization. Removing duplicates and handling missing data.

Aggregating or merging datasets. Using tools like Apache Spark, AWS Glue, and Talend.

Data Storage:

After transformation, the data is stored in a format that can be easily queried.

This could be in a data warehouse (e.g., Snowflake, Google BigQuery) or a data lake (e.g., Amazon S3).

Data Analytics & Visualization:

After the data is stored, it is ready for analysis. Data scientists and analysts use tools like SQL, Jupyter Notebooks, Tableau, and Power BI to create insights and visualize the data.

Data Deployment & Serving:

In some use cases, data is deployed to serve real-time queries using tools like Apache Druid or Elasticsearch.

5. Challenges in Big Data and Data Engineering

Data Security & Privacy:

Ensuring that data is secure, encrypted, and complies with privacy regulations (e.g., GDPR, CCPA).

Scalability:

As data grows, the infrastructure needs to scale to handle it efficiently.

Data Quality:

Ensuring that the data collected is accurate, complete, and relevant. Data

Integration:

Combining data from multiple systems with differing formats and structures can be complex.

Real-Time Processing:

Managing data that flows continuously and needs to be processed in real-time.

6. Best Practices in Data Engineering Modular Pipelines:

Design data pipelines as modular components that can be reused and updated independently.

Data Versioning: Keep track of versions of datasets and data models to maintain consistency.

Data Lineage: Track how data moves and is transformed across systems.

Automation: Automate repetitive tasks like data collection, transformation, and processing using tools like Apache Airflow or Luigi.

Monitoring: Set up monitoring and alerting to track the health of data pipelines and ensure data accuracy and timeliness.

7. Cloud and Managed Services for Big Data

Many companies are now leveraging cloud-based services to handle Big Data:

AWS:

Offers tools like AWS Glue (ETL), Redshift (data warehousing), S3 (storage), and Kinesis (real-time streaming).

Azure:

Provides Azure Data Lake, Azure Synapse Analytics, and Azure Databricks for Big Data processing.

Google Cloud:

Offers BigQuery, Cloud Storage, and Dataflow for Big Data workloads.

Data Engineering plays a critical role in enabling efficient data processing, analysis, and decision-making in a data-driven world.

0 notes

Text

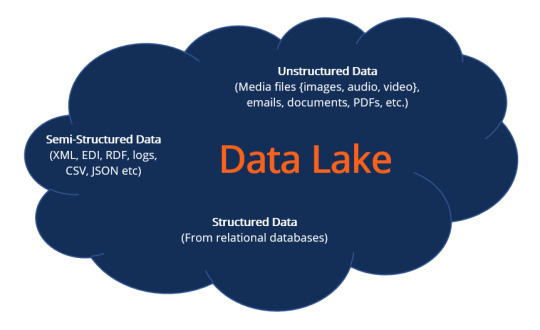

What is a Data Lake?

A data lake refers to a central storage repository used to store a vast amount of raw, granular data in its native format. It is a single store repository containing structured data, semi-structured data, and unstructured data.

A data lake is used where there is no fixed storage, no file type limitations, and emphasis is on flexible format storage for future use. Data lake architecture is flat and uses metadata tags and identifiers for quicker data retrieval in a data lake.

The term “data lake” was coined by the Chief Technology Officer of Pentaho, James Dixon, to contrast it with the more refined and processed data warehouse repository. The popularity of data lakes continues to grow, especially in organizations that prefer large, holistic data storage.

Data in a data lake is not filtered before storage, and accessing the data for analysis is ad hoc and varied. The data is not transformed until it is needed for analysis. However, data lakes need regular maintenance and some form of governance to ensure data usability and accessibility. If data lakes are not maintained well and become inaccessible, they are referred to as “data swamps.”

Data Lakes vs. Data Warehouse

Data lakes are often confused with data warehouses; hence, to understand data lakes, it is crucial to acknowledge the fundamental distinctions between the two data repositories.

As indicated, both are data repositories that serve the same universal purpose and objective of storing organizational data to support decision-making. Data lakes and data warehouses are alternatives and mainly differ in their architecture, which can be concisely broken down into the following points.

Structure

The schema for a data lake is not predetermined before data is applied to it, which means data is stored in its native format containing structured and unstructured data. Data is processed when it is being used. However, a data warehouse schema is predefined and predetermined before the application of data, a state known as schema on write. Data lakes are termed schema on read.

Flexibility

Data lakes are flexible and adaptable to changes in use and circumstances, while data warehouses take considerable time defining their schema, which cannot be modified hastily to changing requirements. Data lakes storage is easily expanded through the scaling of its servers.

User Interface

Accessibility of data in a data lake requires some skill to understand its data relationships due to its undefined schema. In comparison, data in a data warehouse is easily accessible due to its structured, defined schema. Many users can easily access warehouse data, while not all users in an organization can comprehend data lake accessibility.

Why Create a Data Lake?

Storing data in a data lake for later processing when the need arises is cost-effective and offers an unrefined view to data analysts. The other reasons for creating a data lake are as follows:

The diverse structure of data in a data lake means it offers a robust and richer quality of analysis for data analysts.

There is no requirement to model data into an enterprise-wide schema with a data lake.

Data lakes offer flexibility in data analysis with the ability to modify structured to unstructured data, which cannot be found in data warehouses.

Artificial intelligence and machine learning can be employed to make profitable forecasts.

Using data lakes can give an organization a competitive advantage.

Data Lake Architecture

A data lake architecture can accommodate unstructured data and different data structures from multiple sources across the organization. All data lakes have two components, storage and compute, and they can both be located on-premises or based in the cloud. The data lake architecture can use a combination of cloud and on-premises locations.

It is difficult to measure the volume of data that will need to be accommodated by a data lake. For this reason, data lake architecture provides expanded scalability, as high as an exabyte, a feat a conventional storage system is not capable of. Data should be tagged with metadata during its application into the data lake to ensure future accessibility.

Below is a concept diagram for a data lake structure:

Data lakes software such as Hadoop and Amazon Simple Storage Service (Amazon S3) vary in terms of structure and strategy. Data lake architecture software organizes data in a data lake and makes it easier to access and use. The following features should be incorporated in a data lake architecture to prevent the development of a data swamp and ensure data lake functionality.

Utilization of data profiling tools proffers insights into the classification of data objects and implementing data quality control

Taxonomy of data classification includes user scenarios and possible user groups, content, and data type

File hierarchy with naming conventions

Tracking mechanism on data lake user access together with a generated alert signal at the point and time of access

Data catalog search functionality

Data security that encompasses data encryption, access control, authentication, and other data security tools to prevent unauthorized access

Data lake usage training and awareness

Hadoop Data Lakes Architecture

We have singled out illustrating Hadoop data lake infrastructure as an example. Some data lake architecture providers use a Hadoop-based data management platform consisting of one or more Hadoop clusters. Hadoop uses a cluster of distributed servers for data storage. The Hadoop ecosystem comprises three main core elements:

Hadoop Distributed File System (HDFS) – The storage layer whose function is storing and replicating data across multiple servers.

Yet Another Resource Negotiator (YARN) – Resource management tool

MapReduce – The programming model for splitting data into smaller subsections before processing in servers

Hadoop supplementary tools include Pig, Hive, Sqoop, and Kafka. The tools assist in the processes of ingestion, preparation, and extraction. Hadoop can be combined with cloud enterprise platforms to offer a cloud-based data lake infrastructure.

Hadoop is an open-source technology that makes it less expensive to use. Several ETL tools are available for integration with Hadoop. It is easy to scale and provides faster computation due to its data locality, which has increased its popularity and familiarity among most technology users.

Data Lake Key Concepts

Below are some key data lake concepts to broaden and deepen the understanding of data lakes architecture.

Data ingestion – The process where data is gathered from multiple data sources and loaded into the data lake. The process supports all data structures, including unstructured data. It also supports batch and one-time ingestion.

Security – Implementing security protocols for the data lake is an important aspect. It means managing data security and the data lake flow from loading, search, storage, and accessibility. Other facets of data security such as data protection, authentication, accounting, and access control to prevent unauthorized access are also paramount to data lakes.

Data quality – Information in a data lake is used for decision making, which makes it important for the data to be of high quality. Poor quality data can lead to bad decisions, which can be catastrophic to the organization.

Data governance – Administering and managing data integrity, availability, usability, and security within an organization.

Data discovery – Discovering data is important before data preparation and analysis. It is the process of collecting data from multiple sources and consolidating it in the lake, making use of tagging techniques to detect patterns enabling better data understandability.

Data exploration – Data exploration starts just before the data analytics stage. It assists in identifying the right dataset for the analysis.

Data storage – Data storage should support multiple data formats, be scalable, accessible easily and swiftly, and be cost-effective.

Data auditing – Facilitates evaluation of risk and compliance and tracks any changes made to crucial data elements, including identifying who made the changes, how data was changed, and when the changes took place.

Data lineage – Concerned with the data flow from its source or origin and its path as it is moved within the data lake. Data lineage smoothens error corrections in a data analytics process from its source to its destination.

Benefits of a Data Lake

A data lake is an agile storage platform that can be easily configured for any given data model, structure, application, or query. Data lake agility enables multiple and advanced analytical methods to interpret the data.

Being a schema on read makes a data lake scalable and flexible.

Data lakes support queries that require a deep analysis by exploring information down to its source to queries that require a simple report with summary data. All user types are catered for.

Most data lakes software applications are open source and can be installed using low-cost hardware.

Schema development is deferred until an organization finds a business case for the data. Hence, no time and costs are wasted on schema development.

Data lakes offer centralization of different data sources.

They provide value for all data types as well as the long-term cost of ownership.

Cloud-based data lakes are easier and faster to implement, cost-effective with a pay-as-you-use model, and are easier to scale up as the need arises. It also saves on space and real estate costs.

Challenges and Criticism of Data Lakes

Data lakes are at risk of losing relevance and becoming data swamps over time if they are not properly governed.

It is difficult to ensure data security and access control as some data is dumped in the lake without proper oversight.

There is no trail of previous analytics on the data to assist new users.

Storage and processing costs may increase as more data is added to the lake.

On-premises data lakes face challenges such as space constraints, hardware and data center setup, storage scalability, cost, and resource budgeting.

Popular Data Lake Technology Vendors

Popular data lake technology providers include the following:

Amazon S3 – Offers unlimited scalability

Apache – Uses Hadoop open-source ecosystem

Google Cloud Platform (GCP) – Google cloud storage

Oracle Big Data Cloud

Microsoft Azure Data Lake and Azure Data Analytics

Snowflake – Processes structured and semi-structured datasets, notably JSON, XML, and Parquet

More Resources

To keep learning and developing your knowledge base, please explore the additional relevant resources below:

Business Intelligence

Data Mart

Scalability

Data Protection

1 note

·

View note

Text

7 Main Malaysian Universities Where You'll Be Able To Study Data Science

Data science is a vast subject and people cannot achieve experience in it inside six months or a year. Learning information science requires specialized technical skills together with information of programming basics and analytics tools to get started. However, this Data Science course explains all the relevant concepts from scratch, so you can see it easy to place your new abilities to make use of. This Data Science certification coaching will familiarize you with programming languages like Python, R, and Scala, as well as knowledge science instruments like Apache Spark, HBase, Sqoop, Hadoop, and Flume.

You’ll probably find that this profession path can generally be stuffed with frustration, so a hearty dose of stubbornness is an efficient thing. When things get powerful and it looks as if there couldn’t presumably be a solution to the problem, a great knowledge scientist will hold reorganizing, reanalyzing, and dealing the information in the hopes that a brand new perspective will lead to a “Eureka! They often create extremely superior algorithms which are used to determine patterns and take the information from a jumble of numbers and stats to something that may be helpful for a enterprise or organization. At its core, knowledge science is the follow of looking for which means in mass quantities of information.

360digitmghas top-of-the-line applications available online to earn real-world expertise that are in demand worldwide. I simply completed the Machine Learning Advanced Certification course, and the LMS was excellent. Upskilling with 360digitmgwas an excellent expertise that additionally resulted in a brand new job opportunity for me with an excellent salary hike.

R programming is most popular as a result of it's widely used for solving statistical applications. Even though it has a steep studying curve, 43% of data scientists use R for data analysis. When the quantity of knowledge is far more than the out there memory, a framework like Hadoop and Spark is used. Apart from the data of framework and programming language, having an understanding of databases is required as properly.

KnowldgeHut's training session included everything that had been promised. The trainer was very knowledgeable and the practical sessions covered each subject. My trainer was very educated and I appreciated his sensible means of instructing. The course which I took from 360digitmgwas very useful and helped me to realize my objective. The course was designed with advanced concepts and the tasks in the course of the course given by the trainer helped me to step up in my career. Virtualenv can also be used for creation of isolated python environments and python dependency supervisor referred to as pipeny.

Understand various forecasting parts similar to Level, Trend, Seasonality & Noise. Also, learn about various error capabilities and which one is the most effective given a business state of affairs. Finally, construct various forecasting models ranging from linear to exponential to additive seasonality to multiplicative seasonality. Black field machine learning algorithms are extraordinarily important in the field of machine learning.

Problem solving just isn't a task, however an intellectually-stimulating journey to a solution. Data scientists are passionate about what they do, and reap nice satisfaction in taking up challenge. For example, if a Hypermarket or Supermarket could use the data collected every day from their clients such as past shopping for history, once they shop, what they like to buy, how much they spend, age, earnings, and so forth. Then, the Hypermarket can analyse the information to make methods on which products to inventory more, the way to worth the products, when to have gross sales to extend the client walk in, and so on. Students after SPM or O-Levelsmay go for theFoundation in Computing at Asia Pacific Universityfor 1 year earlier than persevering with on to the three-12 months Bachelor of Science in Computer Science with specialism in Data Analytics diploma. To enter the Bsc Statistical Data Science degree at Heriot-Watt University Malaysia after SPM or O-Levels, students will enter theFoundation in Businessfirst.

For these specialized methods, expert Data Scientists are employed to concentrate on a selected piece of data or data which is useful. While we see advancing technologies like Artificial Intelligence and Machine Learning, this has additionally elevated the demand for expert professionals. The corporations hiring a group of individuals with some mounted set of expertise which are wanted at present will not be needed 5 to 10 years from now. This era wants a new set of abilities in a very practiced and exact way.

Learn about the implementation of a Regression methodology based mostly on the business issues to be solved. Understand about Linear Regression as well as Logistic Regression methods used to handle steady as well as discrete output prediction. Evaluation techniques by understanding the measure of Error , issues whereas constructing a Regression Model like Collinearity, Heteroscedasticity, overfitting, and Underfitting are explained in detail. They should be proficient in tools corresponding to Python, R, R Studio, Hadoop, MapReduce, Apache Spark, Apache Pig, Java, NoSQL database, Cloud Computing, Tableau, and SAS. A Data Scientist should be a person who loves taking part in with numbers and figures.

Xavier Phang, Software Engineering Graduate from Asia Pacific University Some non-public universities in Malaysia offer knowledge science levels, which is an obvious alternative. This degree will give you the mandatory skills to process and analyze a posh set of data, and will contain plenty of technical information related to statistics, computer systems, evaluation strategies, and more. Most information science applications will also have a creative and analytical element, allowing you to make judgment choices primarily based on your findings.

Explore more on -Data Science Training in Malaysia

INNODATATICS SDN BHD (1265527-M)

360DigiTMG - Data Science, IR 4.0, AI, Machine Learning Training in Malaysia

Level 16, 1 Sentral, Jalan Stesen Sentral 5, KL Sentral, 50740, Kuala Lumpur, Malaysia.

+ 601 9383 1378 / + 603 2092 9488

Hours: Sunday - Saturday 7 AM - 11 PM

#data scientist course#data science training in malaysia#data scientist certification malaysia#data science courses in malaysia

0 notes

Text

What Expertise Do You Should Turn Into A Data Scientist?

It is amongst the most important skills for Data Scientists to have hands-on expertise. Data integration is vital for organizations because it allows them to research information for business intelligence. Thus, being equipped with Data Integration will permit you to land a Data Science job in a reputed group. Data Ingestion is the process of importing, transferring, loading, and processing knowledge for later use or storage in a database. Being in a position to carry out Data Ingestion is likely certainly one of the most important Data Scientist ability sets you have to turn out to be a Data Scientist. Apache Flume and Apache Sqoop are two most popular knowledge ingestion instruments you would want to master.

visit to know more about : data science training in hyderabad To start with you must be conversant in plots like Histogram, Bar charts, pie charts, after which transfer on to advanced charts like waterfall charts, thermometer charts, and so on. These plots are available very useful during the stage of exploratory knowledge analysis. The univariate and bivariate analyses become much simpler to grasp utilizing colourful charts. So, on this article, I am mentioning 14 abilities you'll require to turn into a profitable information scientist and a few Data Science Training Online to perform them. The world machine studying market is predicted to succeed in $20.83 Billion by the 12 months 2024. For example, if a knowledge scientist is engaged on a project to assist the advertising group present insightful research, the skilled must be nicely adept at handling social media as well. Moving ahead, allow us to discuss what are the technical skills required for a data scientist function. Nowadays, every organization is deploying Deep Learning fashions as it possesses the flexibility to solve limitations of traditional Machine Learning approaches. One of the important abilities for Data Scientist is Data Manipulation. It includes the method of fixing and organizing knowledge to make it simpler to read. To start, you must be snug with basic plots similar to histograms, bar charts, and pie charts, before moving on to extra superior charts such as waterfall charts, thermometer charts, and so forth. During the exploratory data analysis stage, these graphs are extraordinarily helpful. Colorful graphics make univariate and bivariate research much simpler to comprehend. R is an integrated suite of software facilities for information manipulation, calculation, and graphical display. Since data scientists are knee-deep in techniques designed to analyze and course of knowledge, they have to additionally understand the systems’ internal workings. Learn and apply the languages which might be most relevant to your position, industry, and business challenges. Data science is a continuously evolving subject, and it is rather important to keep updating your knowledge science expertise to turn out to be an professional in the domain. Now that you are aware of the talents required to turn into a knowledge scientist, under is tips on how to make a profession in knowledge science. Companies are sitting over a mine of knowledge, for which they want people with data science skills. Here are some in style information science jobs in demand in the present business milieu. To be an information scientist you’ll want a stable understanding of the industry you’re working in, and know what business issues your company is trying to unravel. Data Wrangling is the process of cleaning and unifying messy and sophisticated knowledge collections for straightforward access and evaluation. You’ll save a few minutes, but it’s not probably the most environment friendly method, and your clothes shall be ruined as well. Instead, spend a couple of minutes ironing and stacking your garments. It might be significantly more environment friendly, and your clothing will last more. S disseminating to your group what steps you wish to observe to get from A to B with the data, or giving a presentation to business leadership, communication could make all the distinction in the outcome of a project. Like most careers, the extra advanced your position, the higher suite of abilities you’ll must be profitable. You grasp the tool in the future and it will get run over by a sophisticated device the following day. The selections that they take at the moment are solely dependent on the proposed data and they’re serving to them to take useful choices. This has triggered the massive leap of such professionals over the past few years and continues to be dominating the business. Due to this, the pay scale is fairly respectable for data scientists and that’s one of the main the cause why people are paving their method towards this area. Having a deep understanding of machine studying and artificial intelligence is a should to need to implement tools and techniques in numerous logic, decision timber, etc. Having these skill units will enable any knowledge scientist to work and clear up complex issues particularly which might be designed for predictions or for deciding future objectives. Those who possess these abilities will surely stand out as proficient professionals. But for being proficient would require having a particular aligned course for knowledge science similar to Data Science – Live Course that is well tailor-made to prepare any individual right from scratch. The major motive for deep studying being profitable with NLP is its accuracy in supply. One should perceive that deep learning is an artwork that requires a set of particular tools to show its caliber. Companies mostly use data science to improve their business and decision-making capabilities. Data science can also be used to get correct insights into totally different processes and capabilities of a business, spot problems, make predictions, and suggest ways to improve. Essentially, you will be collaborating together with your team members to develop use cases in order to know the business objectives and information that might be required to resolve issues. You will need to know the right approach to deal with the use circumstances, the info that is wanted to unravel the problem and the method to translate and present the result into what can easily be understood by everybody involved. You will literally have to work with everyone in the group, together with your customers. This knowledge needs to be translated right into a format that will be straightforward to comprehend.

Statistics is defined as the study of the collection, evaluation, interpretation, presentation, and organizing of knowledge, according to Wikipedia. As a end result, it ought to come as no shock that data scientists require statistical knowledge in their profession. It is important to know the ideas of descriptive statistics similar to imply, median, mode, variance, and normal deviation. Then there are likelihood distributions, pattern and inhabitants, CLT, skewness and kurtosis, and inferential statistics, such as speculation testing and confidence intervals. Therefore, information scientists want enterprise acumen to make a difference in the company. Having business acumen will help them acquire a greater understanding in regards to the enterprise and make higher knowledge and predictive fashions to extend efficiency. Data scientists convert giant knowledge sets into easy-to-understand info that can be utilized to make important enterprise choices. Therefore, data scientists should know the method to simplify complex ideas and information findings and convey the same to different departments. Data scientists want a strong basis in mathematics and statistics. The most common fields of study in information science are mathematics, statistics, pc science, and engineering. In this article, we discussed the 14 most necessary expertise needed to become a profitable knowledge scientist. Data Science remains to be evolving and it let me inform you crucial thing – Learning by no means stops on this area.

For more information: best institute for data science in hyderabad 360DigiTMG - Data Analytics, Data Science Course Training Hyderabad Address - 2-56/2/19, 3rd floor,, Vijaya towers, near Meridian school,, Ayyappa Society Rd, Madhapur,, Hyderabad, Telangana 500081 099899 94319

Visit on map : https://g.page/Best-Data-Science

0 notes

Text

Benefits of Taking Up a Data Science Course

Data science is the process of cleansing, aggregating, and altering data in order to do advanced data analysis. The results can then be reviewed by analytic apps and data scientists to uncover patterns and allow company leaders to make informed decisions.

Companies have access to a wealth of information. Data volumes have expanded as contemporary technology has facilitated the creation and storage of ever-increasing amounts of data. 90% of the world's data was created in the last two years, according to estimates. Every hour, for example, Facebook users post 10 million images.

Data Science Certification equips you with 'in-demand' Big Data technologies

Data Science Training prepares students for the growing demand for Big Data skills and technologies. It provides experts with data management tools such as Hadoop, R, Flume, Sqoop, Machine Learning, Mahout, and others. Knowledge and experience in the abilities are an extra benefit for a more successful and competitive career.

Data Scientists: Job Titles

Database Administrators

They are those in charge of databases. Managing the obtained data is a crucial role for making decisions in businesses. They store and arrange data using a variety of software tools in preparation for subsequent investigation.

Data Architects

Data Architects must have a foundation in traditional programming and Business Intelligence, as well as the ability to cope with data ambiguity. They are frequently exposed to unstructured and unclear data and statistics. Data Architects are also capable of using data in novel ways in order to gain fresh insights.

Data Visualizers

Data Visualizers are technicians who convert data analytics into useful knowledge for businesses. They are able to communicate the results of data analytics in layman's terms to all parts of the firm.

Data Engineers

The heart and soul of 'Data Science' are data engineers.. They are in charge of planning, constructing, and maintaining the Big Data infrastructure. They play an important role in building the architecture for analysing and processing data based on business requirements.

Data Ecologists

They come in handy when you're having trouble finding a specific file on your overburdened system! Data ecologists create and manage data on both public and private clouds, ensuring that it is easily available.

Data Scientist Salary in India

An entry-level Data Scientist with less than one year of experience may expect to make a total remuneration of $529,677 (including tips, bonuses, and overtime pay). The average total compensation for a Data Scientist with 1-4 years of experience is $787,149. The average total income for a mid-career Data Scientist with 5-9 years of experience is $1,384,025. A Data Scientist with 10 to 19 years of experience makes an average of $1,759,961 in total compensation. Employees with a late career (20 years or more) earn an average total remuneration of $1,100,000.

Benefits of taking a Data science course

1. High demand

2. Abundant number of positions.

3. A high paying profession.

4. Data science can be applied to a wide range of situations.

5. Data Science Improves the quality of information.

6. Data Scientists have a high salary.

7. You won't have to deal with any tedious tasks any longer.

8. Data Science enables smarter products.

9. Data science can help people live longer.

10. Data Science can improve your personality

11. Career growth.

12. Flexibility, freedom and options.

13. Keeps you updated on the latest industry trends.

14. Easily showcase your expertise.

However, most of this data is languishing untouched in databases and data lakes. The vast amounts of data collected and saved by these technologies have the potential to revolutionise businesses and communities all around the world but only if we can understand it.

0 notes

Text

Data Scientist course

Data Science Course In city

As a Business System Engineer, I’m chargeable for coming up with, developing and maintaining applications software system and integrations for internal solutions. we tend to ought to realize this job chance through prof Hindu deity Narvekar (Assistant prof – information Science, SP Jain). The interview was rigorous and targeted on reasoning, industrial information and technical information.

FrequenciesIn this lesson, you'll currently learn to calculate the frequency of information and analyze the info once dynamic it from frequency to density. ImagesIn this lesson, you'll learn to gift the info within the image format. so as to try to to that, you'll 1st import the info within the image type then gift the info through the image.

As a school with WILP, he teaches courses like Introduction to applied mathematics ways, Advanced applied mathematics Techniques for Analytics, data processing and Machine Learning for varied programmes. Dr Y V K is AN prof with the reckon Science and knowledge Systems cluster of labor Integrated Learning Programmes Division, BITS - Pilani. He did his M.Sc from Sri Venkateswara University, A.P and PhD from Osmania University, Hyderabad. He has printed regarding fifty analysis papers in varied national and international journals.

Data Scientist course

Enrolling yourself for the most effective information Science program offered by a trustworthy institute would be the most effective thanks to build your dream come back true. Constant learning and follow would get you the work of your dreams. Also, students following or holding management degrees like BBA or Master in Business Administration will apply for information science and analytics courses. Our teaching assistants square measure a zealous team of material specialists to assist you get certified in information Science on your 1st try. They interact students proactively to confirm the course path is being followed and assist you enrich your learning expertise, from category onboarding to project mentoring and job help. This information Science certification coaching can familiarise you with programming languages like Python, R, and Scala, additionally as information science tools like Apache Spark, HBase, Sqoop, Hadoop, and Flume.

The content of this Datacamp machine learning track looks very comprehensive and covers loads of ground that isn’t sometimes educated in alternative courses. As an information scientist/analyst, filtering information supported shopper necessities are some things I do on a usual, therefore the content of this course is de facto necessary to know. the most important face of this course is that it teaches you loads of information assortment and storage techniques that square measure essential for an information soul to understand. Next, you'll learn to access and manipulate databases with Python. you'll learn to figure with SQL databases with a Python library known as SQLite3. No previous SQL or info expertise is needed to require this course.

He worked on a range of model validation comes to spot key risks for money models utilized in USAA, as well as P&C models, operational risk models, investment models, market risk models and member demographic models. Learn what being a Lambda faculty student is de facto like from the those who grasp our information science courses the most effective – our alumni. the type of content it provides extremely helps in building your logic and the way to approach a drag in world too. Ankush sir has done a beautiful job in explaining the core thought of exhausting topics. You study ideas and ways primarily through keynote lectures and tutorials victimization case studies and examples. important reflection is vital to eminent drawback determination and essential to the inventive method.

Once you graduate, you belong to a worldwide school community and have access to our on-line platform to stay learning and growing. At the top of the bootcamp, you're welcome to affix our Career Week. on provides you the tools you wish to require ensuing steps in your career, whether or not it's finding your 1st job in school, building a contract career, or launching a start-up. Unveil the magic behind Deep Learning by understanding the design of neural networks and their parameters . Become autonomous to make your own networks, particularly to figure with pictures, times and text, whereas learning the techniques and tricks that build Deep Learning work. find out how to formulate a decent question and the way to answer it by building the correct SQL question.

This was done by making a Machine Learning Model victimization varied supervised, unsupervised and Reinforcement Learning techniques like Neural Network, Random Forest, call Tree, KNN etc. Master analytical tools and gain experience in one amongst the most effective skills of today’s market. thorough program designed to change the candidates with information and experience. select the program that matches your specifications with regards to the course length, timings, and more.

Excelr on-line course contains all the topics that square measure needed and vital to find out so you'll master this technology. This information Science course contains each basic and advanced-level ideas concerned during this technology so you'll learn them and master the talents to pursue a career during this domain. Moreover, the trainers of this course square measure specialists within the domain UN agency pay time and energy to show you all the ideas very well. Some folks believe that it's potential to become an information soul while not knowing a way to code, however others disagree. you may argue that it's a lot of necessary to know a way to use the algorithms than a way to code them yourself.

For More details visit us:

Name: ExcelR Solutions

Address: Cyber Towers, PHASE-2, 5th Floor, Quadrant-2, HITEC City, Hyderabad, Telangana 500081

Phone: 09632156744

direction : Data Scientist course

0 notes

Text

How to use data analytics to improve your business operations

There is a hovering need for professionals with Analytics capacities that can find, examine, and also interpret Big Data. The need for logical experts in Hyderabad is on a higher swing. Presently, there is a large shortage within the variety of experienced Big Data experts. We at 360DigiTMG layout the educational program around trending topics for data analytics course in hyderabad so that it'll be very easy for professionals to obtain a work. The Qualified Data Analytics program in Hyderabad from 360DigiTMG used in-depth understanding about Analytics by palms-on experience like study as well as campaigns from diverse sectors.

We do give Fast-Track Big Information Analytics Training in Hyderabad and One-to-One data analytics courses in hyderabad. 360DigiTMG presents added programs in Tableau and also Service Analytics with R to enhance your research as well as obtain you on the career path to becoming an Information Expert. This training program provides you step-by-step details to master all the subjects that a Data Expert needs to understand.

360DigiTMG Course in Information Analytics

This Tableau certification training course assists you understand Tableau Desktop 10, a global utilized understanding visualization, reporting, and also organization knowledge tool. Advance your occupation in analytics by discovering Tableau and just how to ideal use this coaching in your job.

In addition to the academic data, you'll deal with tasks which can make you business-prepared. data analytics course hyderabad is specifically curated to convey these expertise that employers actually consider beneath data expert credentials.

We got to discover every module of Digital marketing together with live examples right below. 360DigiTMG is a remarkable location where you can be instructed to complete & every concept of digital advertising and marketing. A committed online survey was developed and the link was sent to above 30 knowledge scientific research colleges, of which 21 responded throughout the stated time.

360DigiTMG data analytics training

Throughout the initial couple of weeks, you'll study the important suggestions of Big Data, and also you they'll carry on to learning about different Big Information engineering systems, Big Information handling as well as Big Data Analytics. You'll work with Big Information tools, including Hadoop, Hive, Hbase, Flicker, Sqoop, Scala, Tornado, as well as.

The need for Service analytics is huge in both home and also global job markets. According to Newscom, India's analytics market would certainly boost two circumstances to INR Crores by the end of 2019.

The EMCDSA accreditation demonstrates a person's capacity to participate and add as an information scientific research staff member on huge understanding tasks. Python is coming to be significantly popular due to a lot of reasons. It is also thought-about that it is mandatory to understand the Python phrase structure earlier than doing something interesting like info scientific research. Though, there are a lot of causes to find out about Python, nevertheless one of the vital reasons is that it's the greatest language to comprehend if you want to examine the data or enter the sphere of understanding assessment and scientific research. In order to start your detailed science journey, you will need to initially learn the naked minimum phrase structure.

Machine learning algorithms are used to develop anticipating styles utilizing Regression Evaluation and a Data Researcher has to develop experience in Neural Networks and Feature Design. 360DigiTMG provides an excellent Accreditation Program on Life Sciences and Healthcare Analytics meant for medical professionals. Medical professionals will certainly find out to analyze Electronic Health and wellness Record (EHR) information types and also buildings and also apply anticipating modelling on the same. Along with this, they'll be educated to make use of artificial intelligence methods to healthcare understanding.

But, discovering Python in order to use it for information sciences would possibly take a while, although, it totally rates it. Most entry level details experts work a minimum of a bachelor's diploma. Nonetheless, having a master's level in understanding analytics is helpful. Many folks from technological histories start at entry-level settings similar to an analytical aide, venture help analyst, procedures expert, or others, which supply them invaluable on-the-job training as well as experience.

For more information

360DigiTMG - Data Analytics, Data Science Course Training Hyderabad

Address - 2-56/2/19, 3rd floor,, Vijaya towers, near Meridian school,, Ayyappa Society Rd, Madhapur,, Hyderabad, Telangana 500081

Call us@+91 99899 94319

youtube

0 notes

Photo

Hadoop Big data Training at RM Infotech Laxmi Nagar Delhi NCR

History:

In 2006, the hadoop project was founded by Doug Cutting, It is an open source implementations of internal systems which is now being used to manage and process massive data(s) volumes. In short,

With Hadoop , a large amount of data of all varieties is continually stored and added multiple processing and analytics frameworks rather than moving the data(s) because moving data is typical and very expensive.

What are the career prospects in Hadoop?

Do you know that in next 3 years more than half of the data in this world will move to Hadoop? No wonder McKinsey Global Institute estimates shortage of 1.7 million Big Data professionals over next 3 years.

Hadoop Market is expected to reach $99.31B by 2022 at a CAGR of 42.1% -Forbes

Average Salary of Big Data Hadoop Developers is $135k (Indeed.com salary data)

According to experts – India alone will face a shortage of close to 2 Lac Data Scientists. Experts predict, a significant gap in job openings and professionals with expertise in big data skills. Thus, this is the right time for IT professionals to make the most of this opportunity by sharpening their big data skill set.

Who should take this course?

This course is designed for anyone who:-

wants to get into a career in Big Data

wants to analyse large amounts of unstructured data

wants to architect a big data project using Hadoop and its eco system components

Why from RM Infotech

100% Practical and Job Oriented

Experienced Training having 8+ yrs of Industry Expertise in Big Data.

Learn how to analyze large amounts of data to bring out insights

Relevant examples and cases make the learning more effective and easier

Gain hands-on knowledge through the problem solving based approach of the course along with working on a project at the end of the course

Placement Assistance

Training Certificate

Course Contents

Lectures 30 X 2 hrs. (60 hrs) Weekends. Video 13 Hours Skill level all level Languages English Includes Lifetime access Money back guarantee! Certificate of Completion * Hadoop Distributed File System * Hadoop Architecture * MapReduce & HDFS * Hadoop Eco Systems * Introduction to Pig * Introduction to Hive * Introduction to HBase * Other eco system Map * Hadoop Developer * Moving the Data into Hadoop * Moving The Data out from Hadoop * Reading and Writing the files in HDFS using java * The Hadoop Java API for MapReduce o Mapper Class o Reducer Class o Driver Class * Writing Basic MapReduce Program In java * Understanding the MapReduce Internal Components * Hbase MapReduce Program * Hive Overview * Working with Hive * Pig Overview * Working with Pig * Sqoop Overview * Moving the Data from RDBMS to Hadoop * Moving the Data from RDBMS to Hbase * Moving the Data from RDBMS to Hive * Market Basket Algorithms * Big Data Overview * Flume Overview * Moving The Data from Web server Into Hadoop * Real Time Example in Hadoop * Apache Log viewer Analysis * Introduction In Hadoop and Hadoop Related Eco System. * Choosing Hardware For Hadoop Cluster nodes * Apache Hadoop Installation o Standalone Mode o Pseudo Distributed Mode o Fully Distributed Mode * Installing Hadoop Eco System and Integrate With Hadoop o Zookeeper Installation o Hbase Installation o Hive Installation o Pig Installation o Sqoop Installation o Installing Mahout * Horton Works Installation * Cloudera Installation * Hadoop Commands usage * Import the data in HDFS * Sample Hadoop Examples (Word count program and Population problem) * Monitoring The Hadoop Cluster o Monitoring Hadoop Cluster with Ganglia o Monitoring Hadoop Cluster with Nagios o Monitoring Hadoop Cluster with JMX * Hadoop Configuration management Tool * Hadoop Benchmarking 1. PDF Files + Hadoop e Books 2. Life time access to videos tutorials 3. Sample Resumes 4. Interview Questions 5. Complete Module & Frameworks Code

Hadoop Training Syllabus

Other materials provided along with the training

* 13 YEARS OF INDUSTRY EXPERIENCE * 9 YEARS OF EXPERIENCE IN ONLINE AND CLASSROOM TRAINING

ABOUT THE TRAINER

Duration of Training

Duration of Training will be 12 Weeks (Weekends) Saturday and Sunday 3 hrs.

Course Fee

Course Fee in 15,000/- (7,500/- X 2 installments) 2 Classes are Free as Demo. 100% Money back Guarantee if not satisfied with Training. Course Fee includes Study Materials, Videos, Software support, Lab, Tution Fee.

Batch Size

Maximum 5 candidates in a single batch.

Contact Us

To schedule Free Demo Kindly Contact :-

Parag Saxena.

RM Infotech Pvt Ltd,

332 A, Gali no - 6, West Guru Angad Nagar,

Laxmi Nagar, Delhi - 110092.

Mobile : 9810926239.

website : http://www.rminfotechsolutions.com/javamain/hadoop.html

#hadoop big data training#Hadoop Big data Training in Delhi#Hadoop Big data Training in Laxmi Nagar#Hadoop Course Content#Hadoop Course Fees in Delhi#Hadoop Jobs in Delhi#Hadoop Projects in Delhi

3 notes

·

View notes

Text

300+ TOP BIG DATA Interview Questions and Answers

BIG Data Interview Questions for freshers experienced :-

1. What is Big Data? Big Data is relative term. When Data can’t be handle using conventional systems like RDBMS because Data is generating with very high speed, it is known as Big Data. 2. Why Big Data? Since Data is growing rapidly and RDBMS can’t control it, Big Data technologies came into picture. 3. What are 3 core dimension of Big Data. Big Data have 3 core dimensions: Volume Variety Velocity 4. Role of Volume in Big Data Volume: Volume is nothing but amount of data. As Data is growing with high speed, a huge volume of data is getting generated every second. 5. Role of variety in Big Data Variety: So many applications are running nowadays like mobile, mobile sensors etc. Each application is generating data in different variety. 6. Role of Velocity in Big Data Velocity: This is speed of data getting generated. for example: Every minute, Instagram receives 46,740 new photos. So day by day speed of data generation is getting higher. 7. Remaining 2 less known dimension of Big Data There are two more V’s of Big Data. Below are less known V’s: Veracity Value 8. Role of Veracity in Big Data Veracity: Veracity is nothing but the accuracy of data. Big Data should have some accurate data in order to process it. 9. Role of Value in Big Data Value: Big Data should contain some value to us. Junk Values/Data is not considered as real Big Data. 10. What is Hadoop? Hadoop: Hadoop is a project of Apache. This is a framework which is open Source. Hadoop is use for storing Big data and then processing it.