#subproofs

Explore tagged Tumblr posts

Text

finished my homework with 19 minutes to spare

remind me to stop fucking . procrastinating my homework . for the love of God

#speculation nation#it took me almost 9 hours again. this homework was Entirely proofs.#i learned. i fucking learned. but at what cost.#subproofs and nested subproofs my beloathed. every time i think it's bad enough it gets worse#need to. detox. desperately. god help me.

5 notes

·

View notes

Text

A fresh start

"Making files is easy under the UNIX operating system. Therefore, users tend to create numerous files using large amounts of file space. It has been said that the only standard thing about all UNIX systems is the MOTD telling users to clean up their files."—SVR2 admin's guide (1984)

There's something very appealing about starting with a new blank page. Computer software often provide the facility of starting a new document at any moment, typically with a particular set of properties acquired from a template. A word processor, spreadsheet or image editor offers this. So does a web browser, in the form of new browser windows or new tabs. A private browsing tab represents a completely fresh context that will disappear when the user is finished with it. Such fresh contexts have many uses, beyond privacy, because their properties are simple and reproducible.

On the infrastructural side, cloud computing depends on virtualization-based technologies such as containerization. In order to use cloud processing power, one spins up a new virtual machine or container. The difference is a question of how much of the underlying machine is virtualised—the hardware in a hypervisor-based VM, and just the Application Binary Interface (ABI) in a container system.

Instead of virtualizing the underlying hardware, containers virtualize the operating system (typically Linux or Windows) so each individual container contains only the application and its libraries and dependencies. Containers are small, fast, and portable because, unlike a virtual machine, containers do not need to include a guest OS in every instance and can, instead, simply leverage the features and resources of the host OS.

By providing the ability to easily create and destroy virtual servers, such systems make it possible for a server application's access to computational resources to expand and contract elastically.

The implicit zeroth level of virtualization is real physical hardware. The maintenance of an operating system on a particular physical computer (whether it's a laptop or a server in a data centre) is a task that can get complex, particularly when there are multiple computers with configurations that naturally diverge incrementally over time. It's far preferable to work with a freshly installed operating system (in the case of hypervisors) or to create a pristine container from a stored image.

The need for certainty and reproducibility has roots going back long before computing. The German mathematician Landau reportedly "could not do mathematics, or anything, unless his desk was completely clear, with a notepad and a pencil and a ruler set precisely. Only when that had been fulfilled could the mathematics begin."

This knolling of the mathematian's physical workspace could be likened to the statement of assumptions in a logical proof (the ground rules for what follows). Needless to say, in proofs, it's always possible to start a new subproof with additional assumptions, which only hang around until they are discharged at the end of the subproof.

The artificial and temporary nature of the workspace makes it a bit like a magic circle or suspension of disbelief in which (possibly unlikely, but logically consistent) assumptions can be entertained and their implications examined. This is presumably the (as yet unfulfilled) attraction of virtual reality.

0 notes

Text

Universal Introduction: Key To Understanding Quantifier Rules | PhilosophyStudent.org #shorts

Universal Introduction in symbolic logic, a fundamental rule in quantifier logic. Please Visit our Website to get more information: https://ift.tt/B69Qxn0 In symbolic logic, a valid inference is in a system of quantifier rules. A universal claim is derived from the assumption of a hypothetical or arbitrarily chosen name (object) at the outset of a subproof. An instance is derived from this…

View On WordPress

0 notes

Text

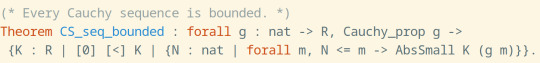

Every Cauchy Sequence is Bounded, a Coq Proof

AKA "wow, who'd've thought that if a sequence gets arbitrarily close together that it couldn't blow up". The proof is here. I rewrote it with comments and bullet points and some slight changes of notation.

Any surprises in the notation?

We have a weird notation for existence. We're saying "Exists K in R, K positive, and for this K there exists an N such that past that N, |g_m| <= K." (this is what AbsSmall means)

Why brackets around [0] and [<]? This is mostly to signify that these are the elements and relations from our ordered field R.

Basically we're going to use the Cauchy criterion to find that N past which all the terms of the sequence are within 1 of each other. Then we're going to show that all the terms past that point are bounded in magnitude by g_N^2 - g_N + 2.

In order for this to be an acceptable K, we need to show:

it's positive.

it's actually a bound for all g_m with m >= N.

The K-is-positive proof:

Fairly simple. 0 < 7/4, and also 7/4 <= g_N^2 - g_N + 2, because we can rewrite this inequality as 0 <= (g_N-1/2)^2.

The K-is-a-bound proof is still fairly long. We start by:

declaring that the bound is effective past N,

introducing the m in question and the fact that N<=m,

and destructing the fact that |g_m - g_N | <= 1 into two inequalities that can be used more effectively, -1 <= g_m - g_N and 1 <= g_m - g_N.

Now we split the inequality to be shown, |g_m| <= (g_N)^2 - g_N + 2, into the negative and positive components.

Here's the negative subproof, -((g_N)^2 - g_N +2) <= g_m:

To prove, this, the high-level steps were to convert this into the inequality -(g_N)^2 + 2 <= g_m - g_N, and then proving the two subinequalities -(g_N)^2 + 2 <= -1 and -1 <= g_m - g_N. The second of these is from the Cauchy criterion and the first ends up coming from rearranging to prove 0 <= (g_N)^2 + 1 based on the nonnegativity of squares and the fact that 1 is positive.

The positive subproof, g_m <= (g_N)^2 - g_N + 2, comes from:

Basically,

rearrange into g_m - g_N <= g_N * g_N + 1 - 2*g_N + 1,

and then prove that g_m - g_N <= 1 and 1 <= g_N*g_N + 1 - 2*g_N + 1.

The first of these two is just from the Cauchy criterion and the second comes from manipulating to the point where we are only showing 0 <= (g_N-1)^2, which comes from the fact that squares are nonnegative.

There are some troubling things about this proof. First, the positive and negative branches have exactly the same flavor, but the proof steps aren't "mirror images" of each other. It'd be nice if we could facilitate code reuse. If we used semicolon tactic chaining this would probably look (a) much smaller and (b) clear-as-mud, but perhaps there's a helping lemma that could be used to massage this into the appropriate format?

5 notes

·

View notes

Text

RT @ThaIlluminator: what happens in subproofs, stays in subproofs

what happens in subproofs, stays in subproofs

— The Illumin∀tor (@ThaIlluminator) March 4, 2019

from Twitter https://twitter.com/Metalogical

0 notes

Photo

subproofs hieroglyphy by Jared Haer Tempests Unresistedness Study #me #Python #art #design #digitalart #creativecode #GIMP

0 notes

Text

Referential Transparency and Mathematical Proofs

There’s a lot of excitement, in parts of the mathematics world, about formalizing mathematical proofs using proof assistants. In particular, the mathematicians Terence Tao and (independently) Kevin Buzzard are keen and vocal supporters of the Lean programming language/proof assistant. Part of the excitement concerns the tangible process of specifying and then proving propositions which serve as lemmas/subproofs in the development towards an ambitious goal (say, a formal proof of Fermat’s Last Theorem, in the case of Buzzard’s current research project).

It’s significant that these high-profile mathematicians are users, not inventors, of the Lean system. Buzzard describes himself as “just a consumer”. A tool, called Blueprint, has been created by Patrick Massot for converting programs in Lean into standard human-readable mathematical form, typeset via TeX. Blueprint also generates dependency graphs, which display progress on the proof via colour coding. Each proposition to be proved appears as a blue bubble, which gains a green border when it has been formalized, and turns green overall when the proposition has been proved. Terence Tao has written a blog post describing the process.

Tao has a tendency to romanticise mathematical research. Here’s his narration of the progress of a major mathematical research project, which makes it sound like the plot of a science fiction novel:

Actual solutions to a major problem tend to be arrived at by a process more like the following (often involving several mathematicians over a period of years or decades, with many of the intermediate steps described here being significant publishable papers in their own right):

Isolate a toy model case x of major problem X.

Solve model case x using method A.

Try using method A to solve the full problem X.

This does not succeed, but method A can be extended to handle a few more model cases of X, such as x’ and x”.

Eventually, it is realised that method A relies crucially on a property P being true; this property is known for x, x’, and x”, thus explaining the current progress so far.

Conjecture that P is true for all instances of problem X.

Discover a family of counterexamples y, y’, y”, … to this conjecture. This shows that either method A has to be adapted to avoid reliance on P, or that a new method is needed.

Take the simplest counterexample y in this family, and try to prove X for this special case. Meanwhile, try to see whether method A can work in the absence of P.

Discover several counterexamples in which method A fails, in which the cause of failure can be definitively traced back to P. Abandon efforts to modify method A.

Realise that special case y is related to (or at least analogous to) a problem z in another field of mathematics. Look up the literature on z, and ask experts in that field for the latest perspectives on that problem.

Learn that z has been successfully attacked in that field by use of method B. Attempt to adapt method B to solve y.

After much effort, an adapted method B’ is developed to solve y.

Repeat the above steps 1-12 with A replaced by B’ (the outcome will of course probably be a little different from the sample storyline presented above). Continue doing this for a few years, until all model special cases can be solved by one method or another.

Eventually, one possesses an array of methods that can give partial results on X, each of having their strengths and weaknesses. Considerable intuition is gained as to the circumstances in which a given method is likely to yield something non-trivial or not.

Begin combining the methods together, simplifying the execution of these methods, locating new model problems, and/or finding a unified and clarifying framework in which many previous methods, insights, results, etc. become special cases.

Eventually, one realises that there is a family of methods A^* (of which A was the first to be discovered) which, roughly speaking, can handle all cases in which property P^* (a modern generalisation of property P) occurs. There is also a rather different family of methods B^* which can handle all cases in which Q^* occurs.

From all the prior work on this problem, all known model examples are known to obey either P^* or Q^*. Formulate Conjecture C: all cases of problem X obey either P^* or Q^*.

Verify that Conjecture C in fact implies the problem. This is a major reduction!

Repeat steps 1-18, but with problem X replaced by Conjecture C. (Again, the storyline may be different from that presented above.) This procedure itself may iterate a few times.

Finally, the problem has been boiled down to its most purified essence: a key conjecture K which (morally, at least) provides the decisive input into the known methods A^*, B^*, etc. which will settle conjecture C and hence problem X.

A breakthrough: a new method Z is introduced to solve an important special case of K.

The endgame: method Z is rapidly developed and extended, using the full power of all the intuition, experience, and past results, to fully settle K, then C, and then at last X.

The technology developed to solve major problem X is adapted to solve other related problems in the field. But now a natural successor question X’ to X arises, which lies just outside of the reach of the newly developed tools… and we go back to Step 1.

(From Terence Tao's blog.)

The dependency graph produced by Blueprint is really only capable of telling a very simplified version of a small part of a research process—an essentially linear, cumulative progression toward a formalized proof. Yet it seems to have enthralled Tao, Buzzard and others. One thing that is clearly going on here is that the distinction between formalizing the statement of a proposition, and formalizing its proof, is powerful. As Kevin Buzzard wrote on Twitter:

“The very nature of writing mathematics in Lean is that you can leave very precisely-stated results (which might be mathematically "standard") unproved and then someone else (perhaps who has no interest or understanding of the full FLT proof) can pick them off later.”

This is not an abstruse mathematical property of the proofs: it’s more like an attribute of Lean’s design. One way of describing it is to say that proofs are modular. Once a proposition has been formalized, the mathematician has a place to stand and can use the proposition (as an assumption) in a new part of the proof. Conversely, a mathematician or group of mathematicians can abandon a project, but still have established a clear set of results.

The design aspect of Lean’s modularity is reminiscent of Herbert Simon’s parable of the watchmakers:

There once were two watchmakers, named Hora and Tempus, who made very fine watches. The phones in their workshops rang frequently and new customers were constantly calling them. However, Hora prospered while Tempus became poorer and poorer. In the end, Tempus lost his shop. What was the reason behind this? The watches consisted of about 1000 parts each. The watches that Tempus made were designed such that, when he had to put down a partly assembled watch, it immediately fell into pieces and had to be reassembled from the basic elements. Hora had designed his watches so that he could put together sub-assemblies of about ten components each, and each sub-assembly could be put down without falling apart. Ten of these sub-assemblies could be put together to make a larger sub-assembly, and ten of the larger sub-assemblies constituted the whole watch.

Proving a proposition is like completing one of the sub-assemblies. It is a tangible goal or checkpoint, a place where it's possible to save your progress, so the project isn’t at risk of dissolving back into confusion. It’s conceivable, in the terms of the parable, that some components (sub-assemblies) would be put together in advance, or bought off the shelf, or even that a watch might wait for some time in an incomplete state, for a particular sub-assembly to become available. It’s clear that this system makes it much easier to stop work, and then later restart work, on a project.

To mix metaphors, the ability to formalize propositions creates lasting “footholds” for the mathematician, much as fixed aids in mountain climbing consolidate a route that has been soloed. But not only can subsequent mathematicians take advantage of results that have already been established, they can also, as Buzzard comments, postulate yet-to-be-proven results, and build on those.

From a computer science perspective, it is tempting to recognize this design feature as an example of referential transparency. Proofs and propositions are well known to be referentially transparent, thanks to the very limited logical syntax in which they are expressed. Referential transparency is not as simple as it is sometimes made out to be, but it essentially means, in this case, that proofs are “transparent” windows on propositions. We see straight through the proof to the proposition it establishes, and any proof will do: they're all interchangeable.

By the Curry-Howard isomorphism, we can extend the discussion to the example of types and values. A type abstracts over some set of values. In terms of referential transparency, any value in a program is, in one sense, a kind of pointer to a type. The type signature of a function makes no distinction between different values. Usually, a type system will have the principal type property, which guarantees that every term has just one unambiguous type (https://en.wikipedia.org/wiki/Principal_type) and which makes this all work.

In natural language, the question of referential transparency is complicated (see https://iep.utm.edu/referential-opacity/). This is due to various things such as the distinction between using and mentioning a name, aliasing, the intricacies of propositional attitudes and the fact that names can be ill-defined (take the example of “The King” in the phrase “The King is dead, long live the King!”.

Formal languages typically avoid all of this complexity, although the precise conditions necessary for referential transparency are subtle. The Wikipedia page provides a helpful link to a 1990 paper by Harald Søndergaard and Peter Sestoft which tries to clarify the situation: http://www.itu.dk/people/sestoft/papers/SondergaardSestoft1990.pdf

The convenient property of proofs and propositions which gives these large mathematical projects their modular structure (and which Lean and other proof assistants take advantage of) is apparently a new discovery for mathematicians. But, in the 19th century, Hegel seems to have sensed it. In the preface to the Phenomenology of Mind, he wrote:

All the same, while proof is essential in the case of mathematical knowledge, it still does not have the significance and nature of being a moment in the result itself; the proof is over when we get the result, and has disappeared. Qua result the theorem is, no doubt, one that is seen to be true. But this eventuality has nothing to do with its content, but only with its relation to the knowing subject. The process of mathematical proof does not belong to the object; it is a function that takes place outside the matter in hand.

(From the Phenomenology of Mind.)

If I understand this correctly, Hegel’s observation that the proof “disappears”, leaving only the result, is an early example of something like referential transparency, and also of a clear formal distinction between results and their proofs.

A proof is a kind of path leading to a proposition, a paper trail. This is a more general phenomenon than “reference”. Fir example, a set of architect’s drawings and models may lead up to the construction of a building, but they don’t refer to it. So, while referential transparency may apply to proofs and propositions, it’s a bit of a misnomer.

In the construction of a proof, mathematicians are involved with matters of design, organization, patterning and planning. They are not just consumers of the proof assistant software: they are engaged in an enterprise of their own. Perhaps a proof could be likened to a plinth which supports and displays a particular result. It is a parergon, extraneous, “outside the matter in hand” (the truth of the proposition), even a kind of redundant appendage, but a necessary part of the mathematical activity.

0 notes

Text

What Is A Sub Proof? Inside The World Of Complex Logic | PhilosophyStudent.org #shorts

Explore the intricate world of sub-proofs – the nested proofs within larger logical sequences. Please Visit our Website to get more information: https://ift.tt/kpMnYOw #subproof #logic #nestedproof #philosophy #reasoning #shorts from Philosophy Student https://www.youtube.com/watch?v=GztQjmSjBQM

View On WordPress

0 notes