Photo

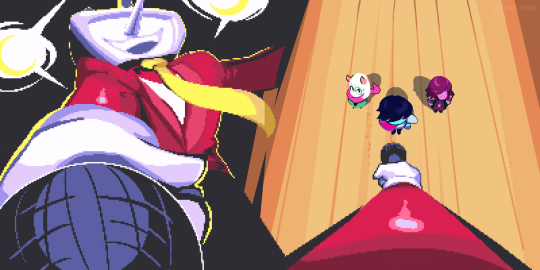

The entire “Noelle hyped for Xmas” saga is complete~ Glad you enjoyed, and Happy Holidays!!

37K notes


Text

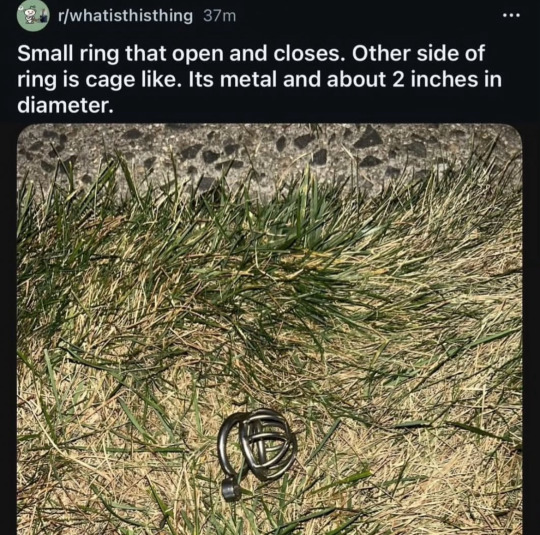

when things are already pretty horsey but then the situation

4K notes


Text

This ^

What does not, will never, and CAN NOT help sex workers is pushing them to the margins of society, stigmatized and unsupported, with the police breathing down their necks and at CONSTANT risk of losing their housing, income and social safety nets.

Universal basic income, healthcare, housing, education, labor rights and legal protection: these are the kinds of things which will help sex workers gain autonomy from the industry they are exploited within, same as literally any other worker.

Personally I think sex work is fine, actually, and people should be allowed to do it if they want to, and trying to "eliminate" it is a stupid idea that has consistently failed for all of recorded history, but if you want to reduce the number of people doing sex work, then your political project MUST BE to make sex workers safer, healthier, happier, and more free as sex workers. Only full legal protections and recognition of their status as workers will make it possible to address the structural issues of the industry they work in.

Let me reiterate though: your project must be to make sex workers safer and more free - not the imaginary civilian that you have in your head, the purified person which you are certain the sex worker will definitely become once you have rescued them from their degradation. You have to care for sex workers as human beings AS THEY ARE, HERE AND NOW.

You cannot help sex workers if your only goal is to make them stop existing. If you cannot extend your care to sex workers, but only to the imaginary people you think they ought to be, then you are fundamentally incapable of doing anything to help solve the problems of the sex work industry.

You don't have to like sex work, you don't have to encourage it or admire it, but if you want to do a damn thing to help the human beings who do sex work, you have to stop seeing them as a problem to be eliminated and start seeing them as equals who deserve your solidarity.

someone got really mad at me for being pro-pornography so i'd like to be annoying for a little longer:

-there are enormous problems with exploitation in the porn industry that harm and endanger the people within it, and that harm is carried out mostly against women and minorities

-this is Bad

-however the last century of passing laws against pornography hasn't actually helped any of those problems, and what sex workers tend to advocate for is the legitimization of their labor, so that they can then access the same protections and regulations that people in other industries can access

-for instance football players, miners, roofers, and warehouse workers are also exploited and endangered by their professions, have to work long hours, and can end up traumatized and disabled by unregulated and unsafe working conditions. these people are used up and thrown away by powerful bosses they can't individually challenge.

-however because these industries are not de facto illegal to participate in, when these people form unions and demand better working conditions, they can at least fight for their rights.

-sex workers, who engage in heavily stigmatized work that's also often illegal, have little recourse to demand better treatment.

-even if you don't like porn, and especially if you don't like porn, if you care about the women who are exploited in pornography, you need to advocate for the legality of pornography.

-the more illegal the porn industry is, the less safe and fair it is, and people will still be working in it, no matter how illegal it is.

-again: the porn industry should be regulated like any other industry and subject to laws guaranteeing fair compensation for labor, safe working conditions, and legal resources for workers suffering exploitation and abuse.

-once it is legal to do sex work, then women can bring charges against the men who have broken their contracts and abused them.

-and that is why i push back against posts saying that pornography is evil. it is an entertainment product, made by people, to meet an ongoing demand. criminalizing the consumption and production of it may slightly lessen the demand at the incredible cost of endangering everyone involved. and i think that is what's evil.

5K notes



Text

Do you block people in the same fandom as you just because you don't like their takes?

85K notes


Text

finally watched some video of the Weird Route differences in Chapter 3 & 4 and JESUS FUCKING CHRIST

i think i see the shape of where Weird Route is going, and I really, really do not like it (in the sense of it is terrifying and heartbreaking, not in the sense that it is bad storytelling)

49 notes


Text

There’s a new (unreviewed draft of a) scientific article out, examining the relationship between Large Language Model (LLM) use and brain functionality, which many reporters are incorrectly claiming shows proof that ChatGPT is damaging people’s brains.

As an educator and writer, I am concerned by the growing popularity of so-called AI writing programs like ChatGPT, Claude, and Google Gemini, which when used injudiciously can take all of the struggle and reward out of writing, and lead to carefully written work becoming undervalued. But as a psychologist and lifelong skeptic, I am forever dismayed by sloppy, sensationalistic reporting on neuroscience, and how eager the public is to believe any claim that sounds scary or comes paired with a grainy image of a brain scan.

So I wanted to take a moment today to unpack exactly what the study authors did, what they actually found, and what the results of their work might mean for anyone concerned about the rise of AI — or the ongoing problem of irresponsible science reporting.

If you don’t have time for 4,000 lovingly crafted words, here’s the tl;dr.

The major caveats with this study are:

This paper has not been peer-reviewed, which is generally seen as an essential part of ensuring research quality in academia.

The researchers chose to get this paper into the public eye as quickly as possible because they are concerned about the use of LLMs, so their biases & professional motivations ought to be taken into account.

Its subject pool is incredibly small (N=54 total).

Subjects had no reason to care about the quality of the essays they wrote, so it’s hardly surprising the ones who were allowed to use AI tools didn’t try.

EEG scans only monitored brain function while writing the essays, not subjects’ overall cognitive abilities, or effort at tasks they actually cared about.

Google users were also found to utilize fewer cognitive resources and engage in less memory retrieval while writing their essays in this study, but nobody seems to hand-wring about search engines being used to augment writing anymore.

Cognitive ability & motivation were not measured in this study.

Changes in cognitive ability & motivation over time were not measured.

This was a laboratory study that cannot tell us how individuals actually use LLMs in their daily life, what the long-term effects of LLM use are, and if there are any differences in those who choose to use LLMs frequently and those who do not.

The researchers themselves used an AI model to analyze their data, so staunch anti-AI users don’t have support for their views here.

Brain-imaging research is seductive and authoritative-seeming to the public, making it more likely to get picked up (and misrepresented) by reporters.

Educators have multiple reasons to feel professionally and emotionally threatened by widespread LLM use, which influences the studies we design and the conclusions that we draw on the subject.

Students have very little reason to care about writing well right now, given the state of higher ed; if we want that to change, we have to reward slow, painstaking effort.

The stories we tell about our abilities matter. When individuals falsely believe they are “brain damaged” by using a technological tool, they will expect less of themselves and find it harder to adapt.

Head author Nataliya Kosmyna and her colleagues at the MIT Media Lab set out to study how the use of large language models (LLMs) like ChatGPT affects students’ critical engagement with writing tasks, using electroencephalogram scans to monitor their brains’ electrical activity as they were writing. They also evaluated the quality of participants’ papers on several dimensions, and questioned them after the fact about what they remembered of their essays.

Each of the study’s 54 research subjects was brought in for four separate writing sessions over a period of four months. It was only during these writing tasks that students’ brain activity was monitored.

Prior research has shown that when individuals rely upon an LLM to complete a cognitively demanding task, they devote fewer of their own cognitive resources to that task, and use less critical thinking in their approach to that task. Researchers call this process of handing over the burden of intellectually demanding activities to a large language model cognitive offloading, and there is a concern voiced frequently in the literature that repeated cognitive offloading could diminish a person’s actual cognitive abilities over time or create AI dependence.

Now, there is a big difference between deciding not to work very hard on an activity because technology has streamlined it, and actually losing the ability to engage in deeper thought, particularly since the tasks that people tend to offload to LLMs are repetitive, tedious, or unfulfilling ones that they’re required to complete for work and school and don’t otherwise value for themselves. It would be foolhardy to assume that simply because a person uses ChatGPT to summarize an assigned reading for a class that they have lost the ability to read, just as it would be wrong to assume that a person can’t add or subtract because they have used a calculator.

However, it’s unquestionable that LLM use has exploded across college campuses in recent years and rendered a great many introductory writing assignments irrelevant, and that educators are feeling the dread that their profession is no longer seen as important. I have written about this dread before — though I trace it back to government disinvestment in higher education and commodification of university degrees that dates back to Reagan, not to ChatGPT.

College educators have been treated like underpaid quiz-graders and degrees have been sold with very low barriers to completion for decades now, I have argued, and the rise of students submitting ChatGPT-written essays to be graded using ChatGPT-generated rubrics is really just a logical consequence of the profit motive that has already ravaged higher education. But I can’t say any of these longstanding economic developments have been positive for the quality of the education that we professors give out (or that they’ve helped students remain motivated in their own learning process), so I do think it is fair that so many academics are concerned that widespread LLM use could lead to some kind of mental atrophy over time.

This study, however, is not evidence that any lasting cognitive atrophy has happened. It would take a far more robust, long-term study design tracking subjects’ cognitive engagement against a variety of tasks that they actually care about in order to test that.

Rather, Kosmyna and colleagues brought their 54 study participants into the lab four separate times, and assigned them SAT-style essays to write, in exchange for a $100 stipend. The study participants did not earn any grade, and having a high-quality essay did not earn them any additional compensation. There was, therefore, very little personal incentive to try very hard at the essay-writing task, beyond whatever the participant already found gratifying about it.

I wrote all about the viral study supposedly linking AI use to cognitive decline, and the problem of irresponsible, fear-mongering science reporting. You can read the full piece for free on my Substack.

1K notes


Text

i know ralsei technically has little paws but i wanted to draw him with little hooves

551 notes
