
A HUMAN RIGHT

Humans are prone to passing judgement on others based on the context of their environments. Because bias grows out of the experiences we face as human beings, it may seem odd to apply the same idea to technology. Unfortunately, the more advanced technology gets, the more prone it is to replicate the biases we as humans possess. Recently, many frequently used services, such as Google Search and facial recognition, have been shown to disproportionately misrepresent black and darker-skinned people because of the algorithms behind these programs. Article 27 of the Universal Declaration of Human Rights (UDHR) states that “everyone has the right freely to participate in the cultural life of the community, to enjoy the arts and to share in scientific advancement and its benefits” (UDHR). The implicit biases in technological processes are violating the human rights of black individuals. I contend that the recurring algorithmic biases in computer programs do not support the human rights of black individuals.

WORK CITED

UN General Assembly, “Universal Declaration of Human Rights,” 217 (III) A (Paris, 1948), http://www.un.org/en/universal-declaration-human-rights/ (accessed November 21, 2019)


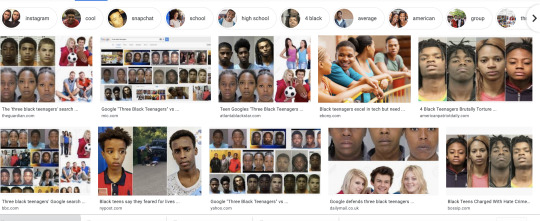

A SIMPLE GOOGLE SEARCH

As mentioned above, Google, one of the largest internet search platforms, has been cited for implementing biased algorithms. Research demonstrates that implicit biases in these algorithms shape the experiences we have on this platform. A study by a Harvard University researcher found that Googling a common black name such as “Trevon Jones” is much more likely to bring up ads suggestive of an arrest or criminal record than Googling a typically white name (Sweeney 1). An algorithm performing in this manner further perpetuates stereotypes about black people, which can have harmful effects on black individuals. Moreover, a quick Google search of “Three Black Teenagers” demonstrates the biases that the platform has against its black consumers.

WORK CITED

YouTube, CNN Business, 10 May 2017, https://youtu.be/gTtbseMYm_s (accessed November 21, 2019).

Sweeney, Latanya. “Discrimination in Online Ad Delivery.” SSRN Electronic Journal, 29 Jan. 2013, doi:10.2139/ssrn.2208240. (accessed November 21, 2019)


FACIAL RECOGNITION

Facial recognition also has many implicit biases that must be addressed. Joy Buolamwini, a computer scientist who worked on facial recognition, describes her experience with facial recognition software that had trouble detecting her face. She states, “I was struggling to have my face detected and pulled out a white mask and it was detected properly.” These biases exist because of the data sets used to build the software. In Buolamwini’s case, the service did not detect her face because the algorithm’s data sets used people who are not representative of the world’s population (CNN). This also demonstrates a greater issue in the tech industry as a whole. As of right now, black people make up a mere 4.9% of those working in technology globally. With fewer black people in tech, there are fewer black voices able to point out the flaws in the algorithms that are creating these issues for people with our skin tone. This is actively violating our human rights.
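One way to surface the kind of gap described above is to compare how often a face detector succeeds for each group in a test set. The sketch below is illustrative only: the group labels and the records are made-up data, and `detection_rate_by_group` is a hypothetical helper, not part of any real facial recognition tool.

```python
from collections import defaultdict


def detection_rate_by_group(records):
    """Return the share of faces detected for each group.

    records: iterable of (group, detected) pairs, where detected is a bool.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, detected in records:
        totals[group] += 1
        if detected:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


# Made-up audit results, for illustration only.
sample = [
    ("lighter", True), ("lighter", True), ("lighter", True), ("lighter", True),
    ("darker", True), ("darker", False), ("darker", False), ("darker", True),
]

rates = detection_rate_by_group(sample)
# The gap between the best- and worst-served group is a simple bias signal.
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

A large gap between groups suggests the detector, or the data it was trained on, does not serve everyone equally.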

WORK CITED

YouTube, CNN Business, 10 May 2017, https://youtu.be/gTtbseMYm_s


SOLUTIONS

Creating solutions geared towards addressing implicit biases in technology does not have to be complicated. A plethora of organizations and institutions are actively contributing to dismantling the issues faced by consumers of color concerning software.

For example, researchers at IBM have created new algorithms that process biased AI training datasets to make the outcome fairer for more people. The algorithm ensures to account for t. Kush Varshney, who worked on the project, explains, “The objective is to minimize the difference in a statistical sense between the input training data and the output clean training data set that we’re creating” (IBM Research 0:56-1:02). By “minimizing the difference,” researchers are correcting the implicit bias in the original datasets behind the software we use while changing the data as little as possible.
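The IBM method is not described in detail here, so the following is not that algorithm. It is a minimal sketch of a simpler, well-known preprocessing idea called reweighing, in which each training example gets a weight so that group membership and outcome become statistically independent in the weighted data. The groups, labels, and function names are assumptions made for illustration.

```python
from collections import Counter


def reweigh(samples):
    """Return a weight for each (group, label) pair.

    samples: list of (group, label) tuples.
    weight = P(group) * P(label) / P(group, label)
    """
    n = len(samples)
    group_counts = Counter(group for group, _ in samples)
    label_counts = Counter(label for _, label in samples)
    pair_counts = Counter(samples)
    return {
        (group, label): (group_counts[group] * label_counts[label])
        / (n * pair_counts[(group, label)])
        for (group, label) in pair_counts
    }


def weighted_positive_rate(samples, weights, group):
    """Weighted share of positive labels (label == 1) within one group."""
    total = sum(weights[(g, y)] for g, y in samples if g == group)
    positive = sum(weights[(g, y)] for g, y in samples if g == group and y == 1)
    return positive / total


# Made-up data: group "a" mostly has positive labels, group "b" mostly negative.
data = [("a", 1)] * 3 + [("a", 0)] + [("b", 1)] + [("b", 0)] * 3
weights = reweigh(data)
rate_a = weighted_positive_rate(data, weights, "a")
rate_b = weighted_positive_rate(data, weights, "b")
print(weights, rate_a, rate_b)
```

In this toy data the unweighted positive rates are 75% and 25%, while the weighted rates are equal for both groups. The examples themselves are left unchanged; only their influence on training is adjusted.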

In addition, Joy Buolamwini founded the Algorithmic Justice League, an organization working to address the concerns of technological racism. The organization’s website offers resources such as bias checks so that activists, consumers, and interested individuals can fight the problem and remain informed. Buolamwini says of her organization, “We now have the opportunity to unlock even greater equality if we make social change a priority and not an afterthought” (Buolamwini 7:04-7:10). The more access people have to this information, the more people will be able to get involved and dismantle biases in technology.

One actionable step consumers can take is to report every occurrence of artificial intelligence working improperly because of bias to the developers who created the user interfaces.

WORK CITED

YouTube, YouTube, 27 Mar. 2017, https://www.youtube.com/watch?v=UG_X_7g63rY&t=449s.

YouTube, YouTube, 7 Dec. 2017, https://www.youtube.com/watch?v=MTQ-JZZUIjU.
