A blog about technology in general and nonprofit technology in particular - musings, stories, tutorials, and links. Written by Jason Samuels, an IT professional working in the nonprofit sector. Any opinions stated here are my own.

Don't wanna be here? Send us removal request.

Text

Blackthorn Events for Salesforce how-to: build a screen flow to upload and manage attendee groups

My last blog post was about implementing a CSV import feature. This blog post documents utilizing that screen flow feature to facilitate attendee group management for Blackthorn Events.

The scenario is an events center that uses Blackthorn Events and Salesforce for their day-to-day operations. Most of their event registrations are processed in their own system, but some events they host are run by partner organizations who handle attendee registration independently. In those cases the partner organization sends the event center a list of registered attendees to upload, and the event center later issues one large invoice to the partner org for attendee lodging and other charges.

Administering these attendee groups and invoicing them back to the partner organization has been difficult, and we are on a path to improve this. The Blackthorn Events data model contains an attendee group object which it automatically utilizes when multiple people are registered in a single web transaction. That object provided a nice starting point to extend to more robust attendee group management functionality.

This was a technically challenging project that turned out to be the most complex thing I've yet built with Salesforce Flow Builder. The resulting Flow leverages brand new features and overcomes difficulties of working with a managed package's objects. Note that custom automations are specifically outside of the scope of Blackthorn support, so they cannot assist if you decide to leverage this in another Blackthorn org. The tricks and lessons in this post should be applicable to other non-Blackthorn use cases as well.

A reason why it's important to note all that up front is because before building this Flow, I questioned whether it was a good idea to build. Our consulting team inherited a client environment where the existing attendee import process was to use the data import wizard to upload a list of Attendee records with a custom field called Imported Attendee marked TRUE. That boolean value activated a record-triggered flow which would create the Attendee's associated Line Item, Invoice, and Transaction records.

That system fell short largely because of the governor limit errors that staff regularly encountered. It was initially my opinion that we'd be better off improving the existing system's efficiency and implementing Apsona as a better pre-built upload tool with batch size control to mitigate the governor limit errors. But my opinion changed when I saw the added value in how a Flow could accomplish tying together the attendee group upload, group deposit, and group payoff processes. In other words it made sense to do once it addressed a larger business process need than just the upload function.

An early build paired those three functions, but still relied on a CSV upload targeting the Attendee object to salvage the existing record-triggered Flow. That actually did work but only with the batch size set to 2 and there's a 30+ second wait between batches. That poor performance was the deal-breaker which brought me around to deprecating the record-triggered flow and rebuilding its functionality within the screen flow.

The core performance issue in the record-triggered flow was that it chained together multiple write operations on managed package objects. Each of those objects trigger some rollups and other custom automation that we know about. And each of those objects also trigger some Apex code that we don't have any visibility into. But the cumulative impact of it all counts towards the flow interview limits. And I don't think Salesforce bulkifies downstream write operations which are initiated when a record-triggered flow is invoked multiple times by a bulkified write of a collection of new records.

So I took on rebuilding the record-triggered flow functionality within this screen flow, staging each object (attendee, invoice, and line item) within a collection to bulkify the database writes for better performance, and utilizing batch sizes and pause screens throughout to stay within limits. I created a custom object to target the upload to, designed the system to write related object IDs back to the custom object record to track upload progress, and built an additional branch of functionality to resume an upload using those records if needed.

Screen flow user experience

Before diving deep into how the flow is built, here's a tour of what the screen flow does and how it works for the person running it. I like to think that the simplicity of this interface belies the complexity going on behind it.

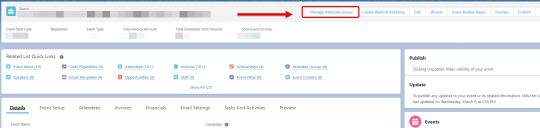

The screen flow is designed to launch from a button on the Event screen, which also passes the Event ID into a parameter.

The flow first executes a lookup for an existing attendee group. In our use case we are only ever expecting to manage one corporate group per event, so if a group is found then it is selected. If no group is found, then the user is prompted to input an Account ID to create a new group. When a new group is created, a group invoice is initialized.

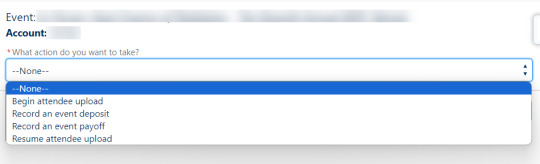

The four actions that the user can take are all connected back to selected attendee group or main invoice:

Begin attendee upload

Resume attendee upload

Record group deposit

Record group payoff

Begin attendee upload

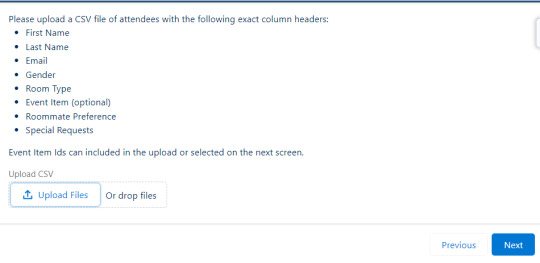

When a user selects Begin attendee upload they are first presented with a screen detailing the exact column headers that need to be in the CSV file.

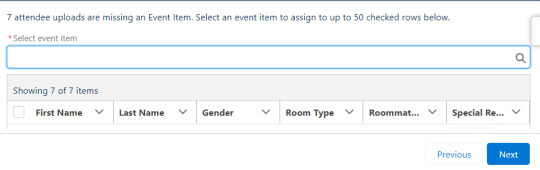

The user uploads their CSV file and the next screen shows a preview of records in the file. The file may contain an Event Item ID column, but if that's not included then rows which need an event item assigned are displayed along with a dropdown menu to select an event item to assign them.

After all rows have an event item assigned, then the user selects the batch size to proceed with creating attendees end clicks next.

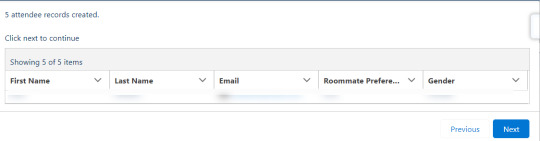

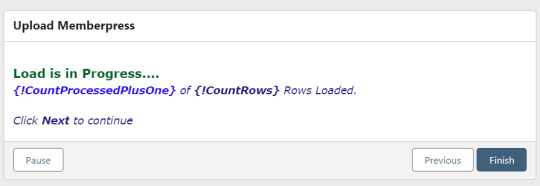

If the number of rows in the file exceeds the batch size then a pause screen will appear informing the user of the number of records created and prompting them to click next to continue. This will continue until the batch size is complete.

After this process is completed for attendees, it's repeated for invoices and then for line items.

Resume attendee upload

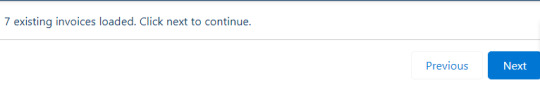

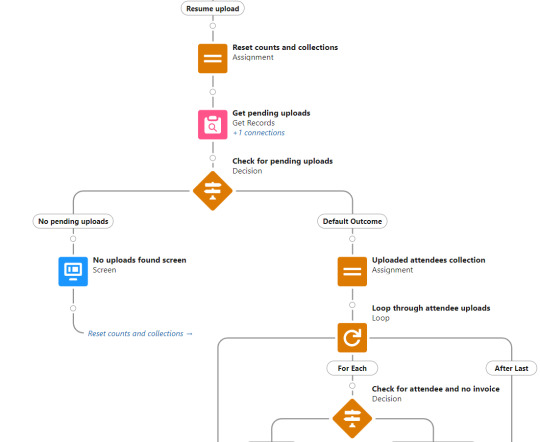

If an attendee upload is interrupted for any reason, it might result in a situation where some attendees were created with invoices but no line items, or attendees with no invoices at all, or a batch where some attendees were created and others weren't. All of those scenarios would be bad, so there is a resume attendee upload feature.

When a user selects Resume attendee upload, the flow queries for any attendee upload temp objects which are missing a corresponding attendee, invoice, or line item object. If any are found, then those records are loaded into the begin upload branch of the flow to finish processing them.

Record group deposit

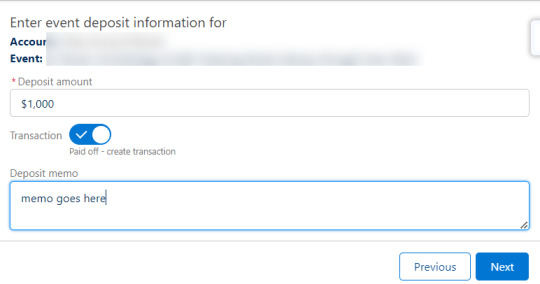

Group deposits are pretty straightforward. When the user selects Record a group deposit, they are taken to a screen prompting them to record the amount of the deposit, a deposit memo, and indicate whether the deposit was paid or needs to be invoiced.

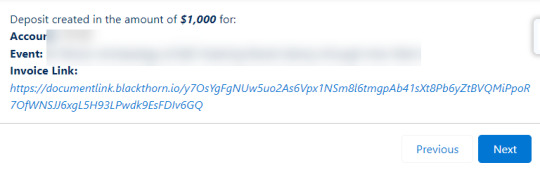

After clicking Next to continue, the flow creates an event item for the deposit then a line item attached to the invoice. If the user indicates that the deposit was paid, then the flow also creates a transaction. If the deposit was not paid then the invoice link displayed on screen can be sent to facilitate paying by credit card.

Record group payoff

Group payoffs are recorded after the event takes place, when the organization is ready to generate a final invoice for the group.

On the first screen in this interface the user is prompted to select any available deposits and attendees in the group that they would like to apply or transfer to the main invoice. A batch size selector allows for controlling the number of credits/charges to transfer in each batch.

For any deposits that are selected, the flow reverses the line item on the main invoice. It's honestly a little bit awkward that there is a line item for the deposit amount in the first place, given that it shouldn't be recognized as a charge - just as a payment credited towards their eventual final balance. But it's necessary create a deposit line item for the deposit amount in order to support invoicing deposits via Documentlink. So we set a line item for the deposit amount, back that line item out at the point when it's applied, and have our rollups at the event level set to make sure deposit line charges are excluded from invoice totals where that's necessary.

For all attendee line items that the user selects, a loop sends each one through a subflow that reverses each line item on their respective invoices and tabulates the total amount of attendee charges that needs to get added to the main group invoice.

When that process completes, the user is presented with a screen showing the total amount of attendee charges to be added, and is prompted to add the total amount of any miscellaneous charges (typically audiovisual and catering) to the invoice.

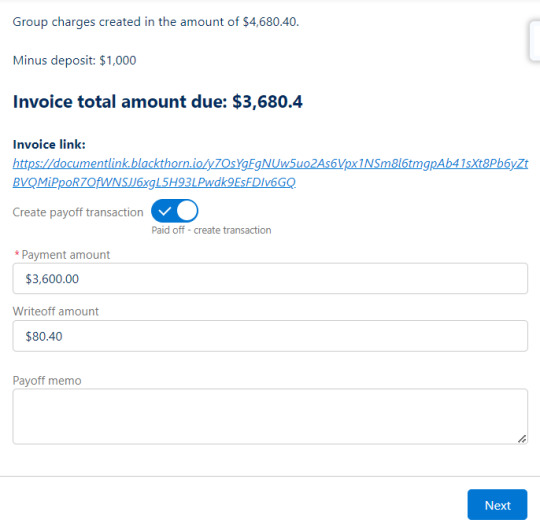

After clicking Next, the total amount due of the invoice is shown on screen, and the user is prompted to enter a payoff memo along with the total amount being collected and any amount being written off.

Screen flow architecture

This screen flow has a lot going on, and it's a little bit intimidating when you pull back and look at the whole thing. It isn't as bad as it looks though, much of this is repetitive, and I'll break it down piece-by-piece.

Flow intro

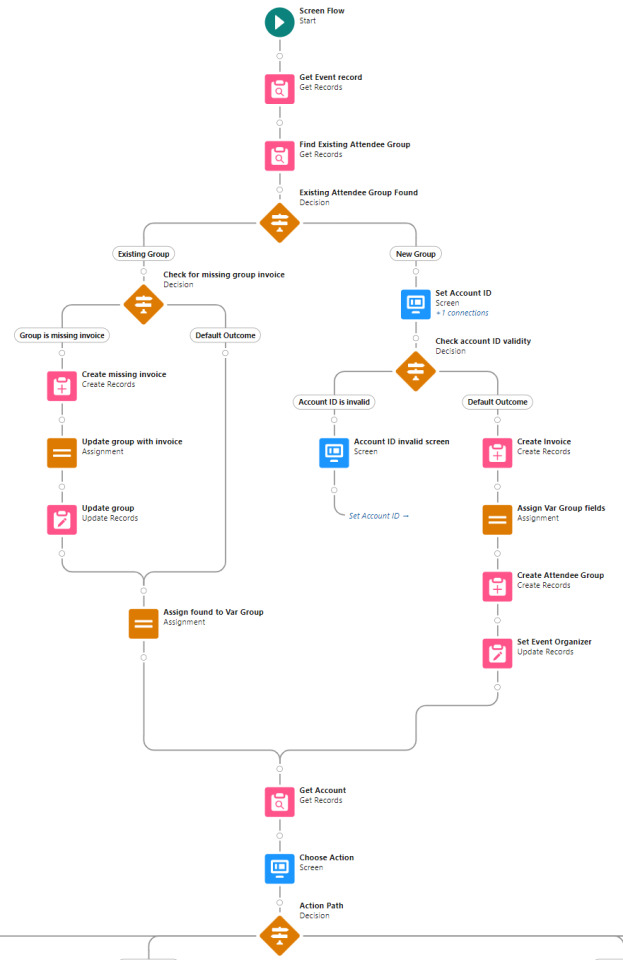

The first part of this flow loads in the Event record using the ID passed into the flow, then searches for an existing attendee group associated with the event.

If a group is found then it makes sure the group has a main group invoice (initializing one if missing) then assigns this group to the Var Group variable used later in the flow.

If no group is found, then the user is prompted to input an Account ID to create a new group. A validation check ensures that an 18 digit string starting with "001" was entered, then it creates a main group invoice billed to that account, sets the invoice on the Var Group variable, and writes that variable to the database.

With these initial steps completed, the user is then presented with four options:

Begin attendee upload

Resume attendee upload

Record group deposit

Record group payoff

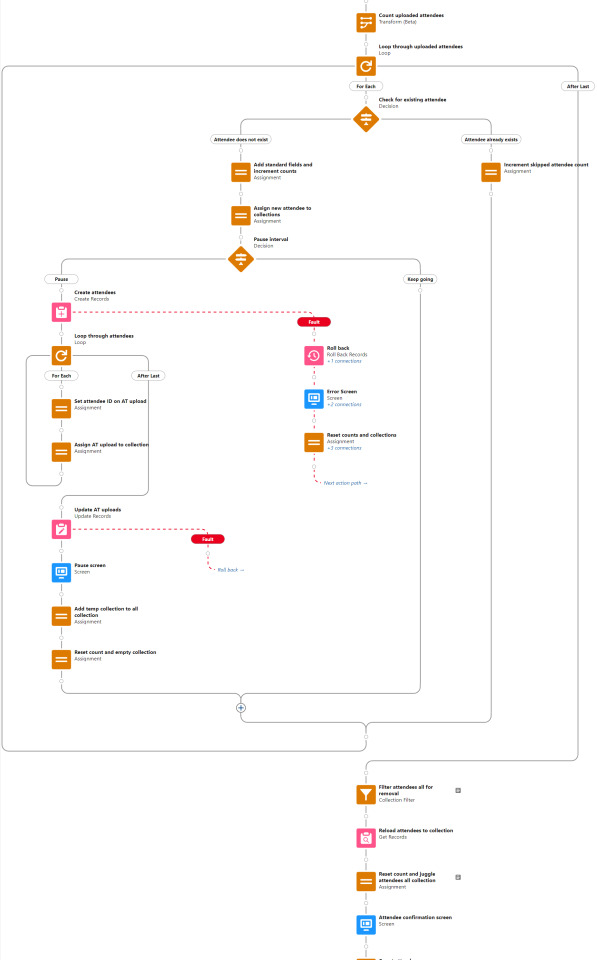

Begin attendee upload

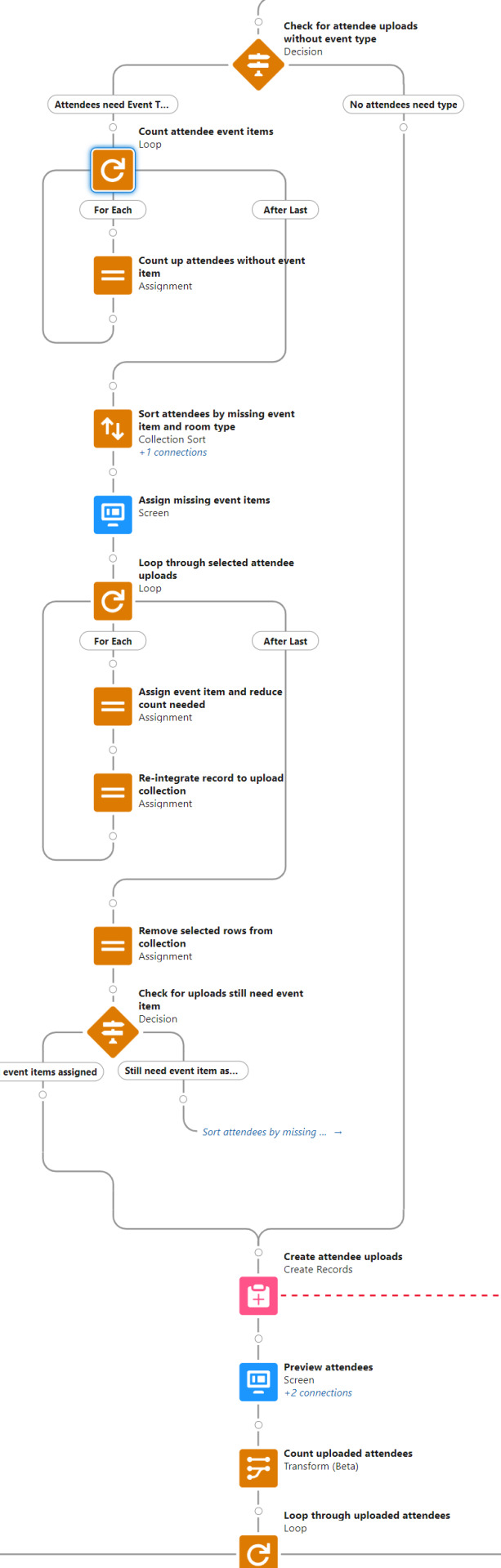

A custom object called Attendee Uploads is employed. When the user uploads a CSV file, that custom object is targeted. The uploaded records are assigned to an Uploaded attendees collection and that collection is looped through.

Each row is assigned the Event ID and the flow start timestamp is set as a batch identifier. Then the row is evaluated to determine if it has or needs an Event Item ID. Rows that do and do not need an Event ID are assigned to separate collections. This decision step also looks for the absence of the Last Name field, which if found is taken as evidence of a blank row that is filtered out and not assigned to any collection.

The next steps deal with assigning missing Event Item IDs. A Record Choice Set serves a dropdown of all of the valid event item types for the event. The user is prompted to choose an item, select the rows to apply it to, then repeat until all rows have an Event Item ID assigned. If all rows already have an Event Item ID, then these steps are skipped and the Attendee Upload objects are written to the database.

Now that the attendee uploads are in, the flow proceeds to looping through them. The first decision, which evaluates if an attendee is already associated with the upload row, only applies to resumed uploads. No attendees already exist for new uploads, so the attendee record fields are assigned in the following step and the staged attendee record is then assigned to the collection.

At this point there's a Pause Interval decision. This is where the flow evaluates if either the batch interval or the end of the batch has been reached. If it's time to pause, then a pause screen is displayed which commits the flow interview (writing attendee records in the collection to the database), the user clicks Next to continue, then the loop proceeds to staging further attendee records until either the next pause or the end of the batch. A second loop and write operation then loops through the new attendee records to stage an update batch to write attendee IDs back to the Attendee Upload records which created them, then update those Attendee Upload records in the database.

While useful for mitigating governor limits, there are drawbacks to saving work to the database mid-operation. If an error occurs or if the user simply navigates away from the flow prior to completion, the dataset they're uploading could be left in an inconsistent state. For that reason, each step where the attendees, invoices, and line items are committed to the database is accompanied by a step that updates the AT upload objects with those new IDs, and both of these write operations happen within the same flow interview with a roll back function included to help ensure the IDs tracked on the Attendee Upload object remain accurate in case an error occurs.

After the attendee creation loop is completed, there's a little bit of collection juggling done before moving on. The attendee record creation was bulkified by batching them into a collection and processing a single write operation. That's a vital practice for flow performance, but comes with the wrinkle that the newly created object IDs aren't immediately available in the flow the same way they are when objects are created individually. I get around that by using the batch identifier to empty the records out of the collection and then query the newly created records in order to reload them into the collection. It's also a nice trick for being able to reference the contact or account record that Blackthorn links with the attendee record after save.

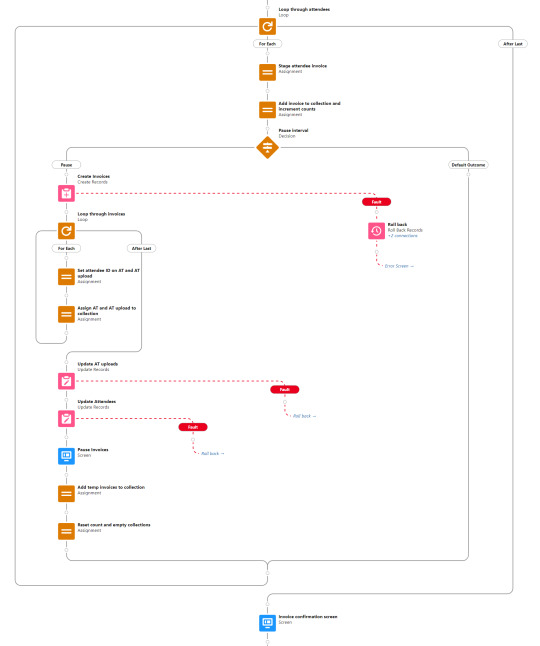

The remainder of this branch pretty much repeats the process from attendee creation - the attendees are looped through to create invoices for them, then the invoices are looped through to create line items. At the end of it, the user is returned to the menu asking what they'd like to do next.

Resume upload

The resume upload function exists to ensure that all attendee uploads are complete and accounted for. The first step searches for any pending uploads - attendee upload records where the event ID matches and the related ID either attendee, invoice, or line item is null. If none are found, the user is simply returned to the menu. If any are found, they are looped through twice.

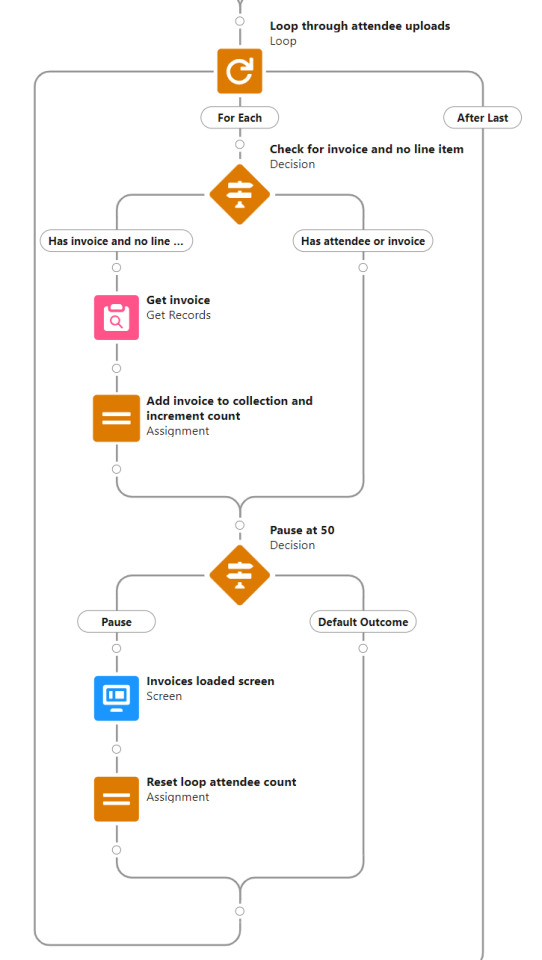

The first loop evaluates if the object has a related attendee but no related invoice, and for each of those records it gets the attendee record and adds it to a collection. A pause interval of 50 is built in.

The second loop evaluates if the object has a related invoice but no related line item, and for each of those records it gets the invoice record and adds it to a collection. A pause interval of 50 is built in.

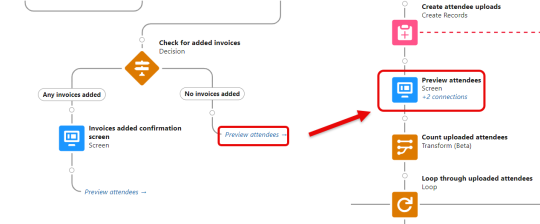

Those two loops exist to patch in related object IDs to the same collections used in the Begin attendee upload branch, before incomplete attendee upload objects are passed to the Preview attendees step in the Begin upload branch. The rest of the workflow for completing an upload is identical to a new upload as the flow proceeds through the new upload branch.

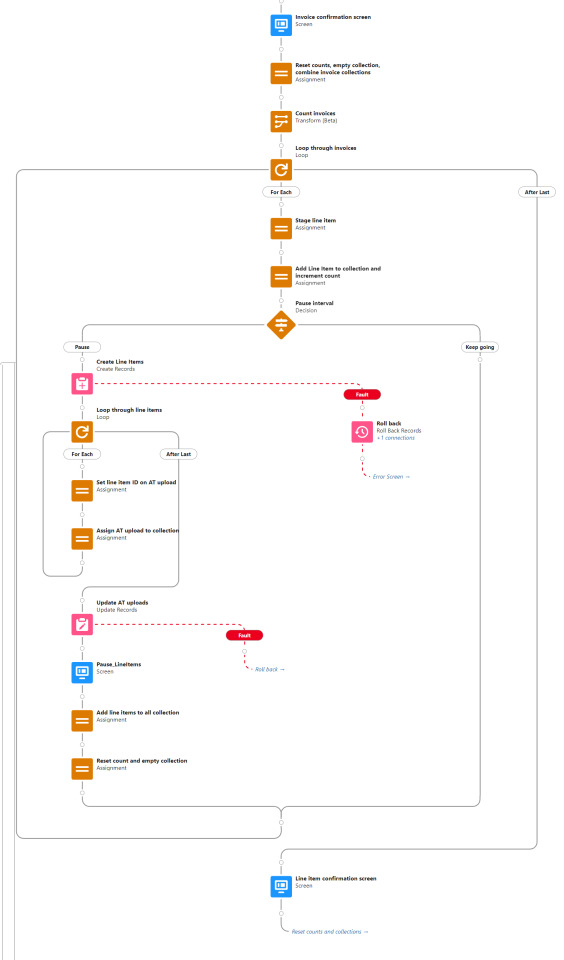

Record group deposit

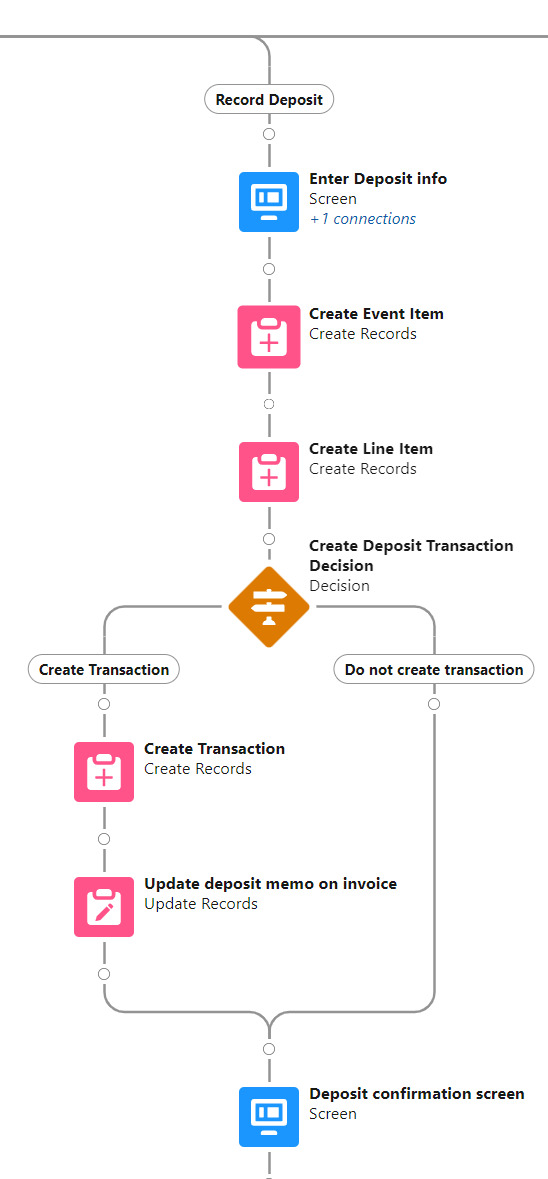

Record Deposit is the shortest and simplest branch of this flow. The user is presented with a screen where deposit information is entered, the flow then creates an event item, a line item (on the group invoice), and optionally a transaction record and note on the invoice. The user is then shown a confirmation screen and taken back to the menu.

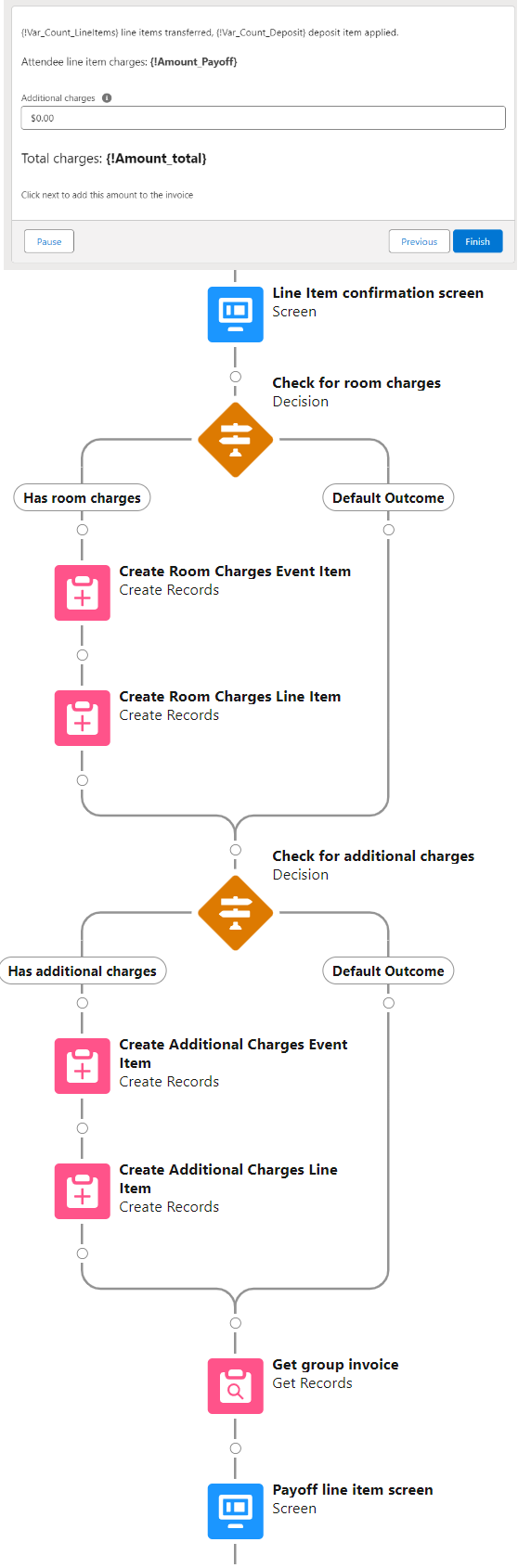

Record group payoff

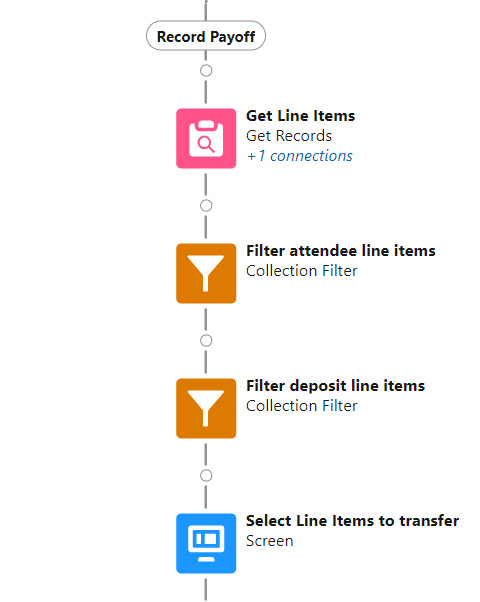

The Record Payoff branch ties it all together at the conclusion of an event. The first step in this branch is a query that loads in all line items associated with the attendee group. Collection filters are then applied to parse those line item records out to a deposit collection and an attendee lines collection. A screen is then presented to the user enabling them to select which of those records to proceed with.

Selected objects from each of the filtered collections are then looped through independently. In both cases a Subflow is invoked which creates an offsetting line item to effectively zero out the charge. The difference between the loops is that the deposit loop sums up the amount to display as a credit, whereas the attendee lines are tabulated to sum up a new line item amount to be added to the group invoice.

The user is then presented with a confirmation screen that states the number of line items charges transferred, the sum of those line item charges, and the deposit amount applied. A fill-in field allows the user to input additional charges (audiovisual, catering, etc.).

When the user clicks Next, the flow creates an event item and line item for the room charges, then an event item and line item for the additional charges.

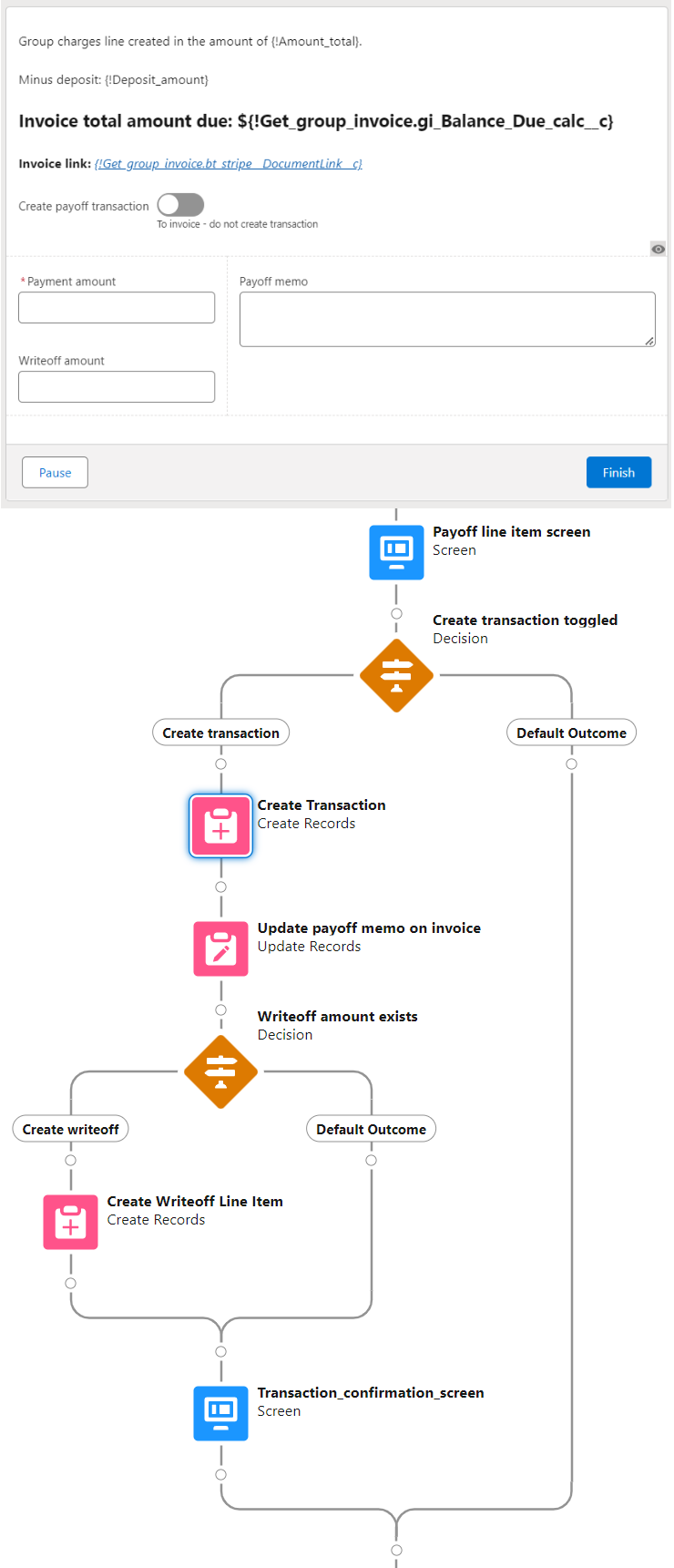

Finally, the Payoff line item screen displays the total charges on the invoice minus the deposit to arrive at an invoice total due. The flow may be ended here and the Documentlink invoice link sent to collect payment. Or if payment was already submitted, then the user may toggle on Create payoff transaction and additional fields are exposed to input the payment amount, payoff memo, and writeoff amount (if applicable).

When the user clicks Next then the flow either completes if no payment was entered, or creates the payoff transaction, updates the invoice with the memo, and creates a writeoff transaction if applicable.

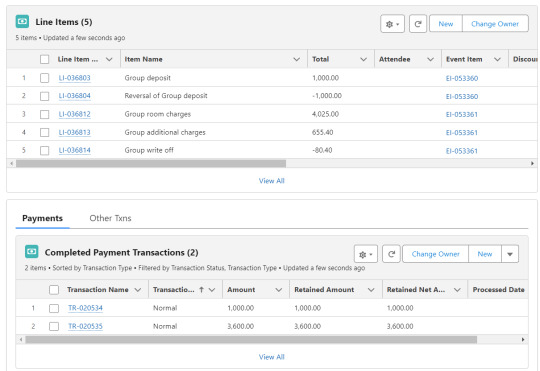

When this is all done the main group invoice contains multiple line items for attendee room charges, additional charges, group deposit, and writeoff amount. Transactions for the deposit and payoff are applied against these charges.

0 notes

Text

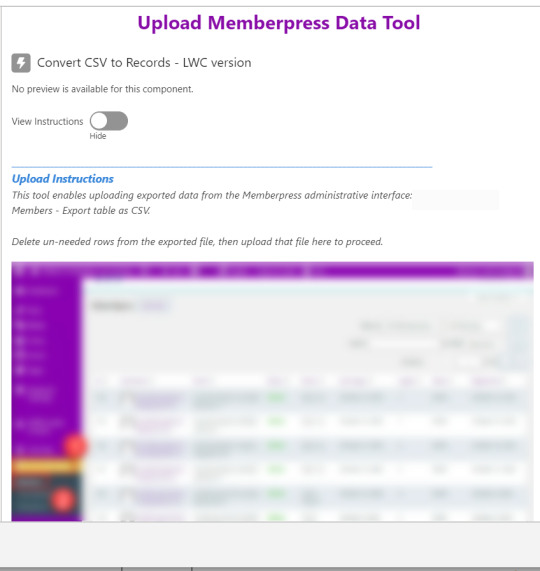

Enabling CSV data uploads via a Salesforce Screen Flow

This is a tutorial for how to build a Salesforce Screen Flow that leverages this CSV to records lightning web component to facilitate importing data from another system via an export-import process.

My colleague Molly Mangan developed the plan for deploying this to handle nonprofit organization CRM import operations, and she delegated a client buildout to me. I’ve built a few iterations since.

I prefer utilizing a custom object as the import target for this Flow. You can choose to upload data to any standard or custom object, but an important caveat with the upload LWC component is that the column headers in the uploaded CSV file have to match the API names of corresponding fields on the object. Using a custom object enables creating field names that exactly match what comes out of the upstream system. My goal is to enable a user process that requires zero edits, just simply download a file from one system and upload it to another.

The logic can be as sophisticated as you need. The following is a relatively simple example built to transfer data from Memberpress to Salesforce. It enables users to upload a list that the Flow then parses to find or create matching contacts.

Flow walkthrough

To build this Flow, you have to first install the UnofficialSF package and build your custom object.

The Welcome screen greets users with a simple interface inviting them to upload a file or view instructions.

Toggling on the instructions exposes a text block with a screenshot that illustrates where to click in Memberpress to download the member file.

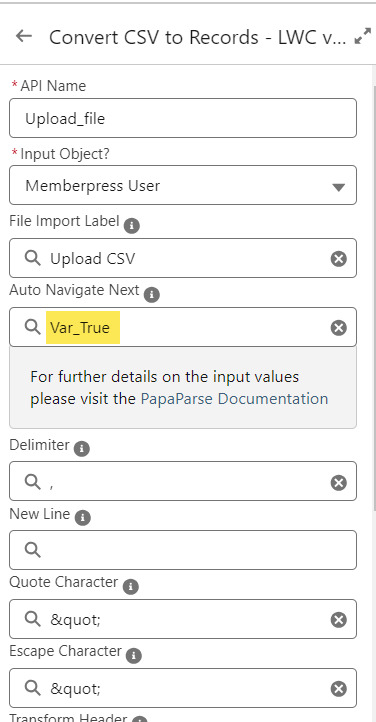

Note that the LWC component’s Auto Navigate Next option utilizes a Constant called Var_True, which is set to the Boolean value True. It’s a known issue that just typing in “True” doesn’t work here. With this setting enabled, a user is automatically advanced to the next screen upon uploading their file.

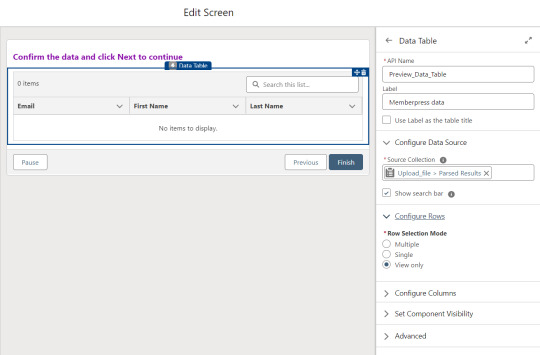

On the screen following the file upload, a Data Table component shows a preview of up to 1,500 records from the uploaded CSV file. After the user confirms that the data looks right, they click Next to continue.

Before entering the first loop, there’s an Assignment step to set the CountRows variable.

Here’s how the Flow looks so far..

With the CSV data now uploaded and confirmed, it’s time to start looping through the rows.

Because I’ve learned that a CSV file can sometimes unintentionally include some problematic blank rows, the first step after starting the loop is to check for a blank value in a required field. If username is null then the row is blank and it skips to the next row.

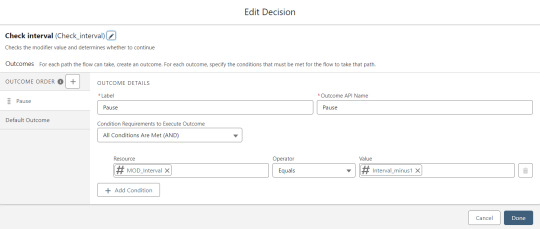

The next step is another decision which implements a neat trick that Molly devised. Each of our CSV rows will need to query the database and might need to write to the database, but the SOQL 100 governor limit seriously constrains how many can be processed at one time. Adding a pause to the Flow by displaying another screen to the user causes the transaction in progress to get committed and governor limits are reset. There’s a downside that your user will need to click Next to continue every 20 or 50 or so rows. It’s better than needing to instruct them to limit their upload size to no more than that number.

With those first two checks done, the Flow queries the Memberpress object looking for a matching User ID. If a match is found, the record has been uploaded before. The only possible change we’re worried about for existing records is the Memberships field, so that field gets updated on the record in the database. The Count_UsersFound variable is also incremented.

On the other side of the decision, if no Memberpress User record match is found then we go down the path of creating a new record, which starts with determining if there’s an existing Contact. A simple match on email address is queried, and Contact duplicate detection rules have been set to only Report (not Alert). If Alert is enabled and a duplicate matching rule gets triggered, then the Screen Flow will hit an error and stop.

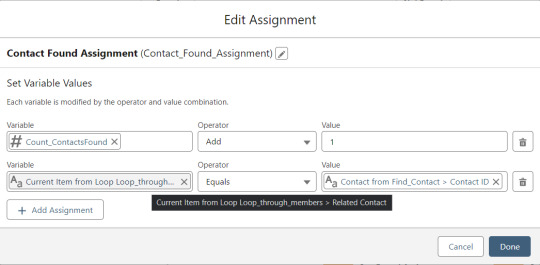

If an existing Contact is found, then that Contact ID is written to the Related Contact field on the Memberpress User record and the Count_ContactsFound variable is incremented. If no Contact is found, then the Contact_Individual record variable is used to stage a new Contact record and the Count_ContactsNotFound variable is incremented.

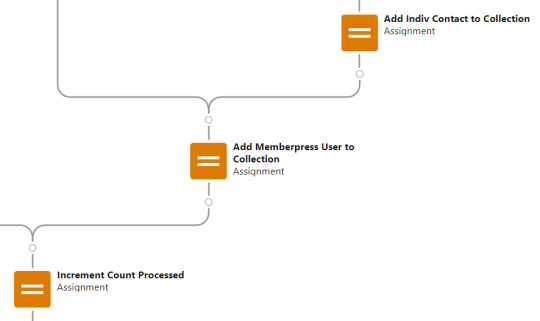

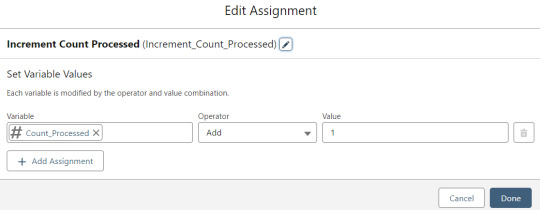

Contact_Individual is then added to the Contact_Collection record collection variable, the current Memberpress User record in the loop is added to the User_Collection record collection variable, and the Count_Processed variable is incremented.

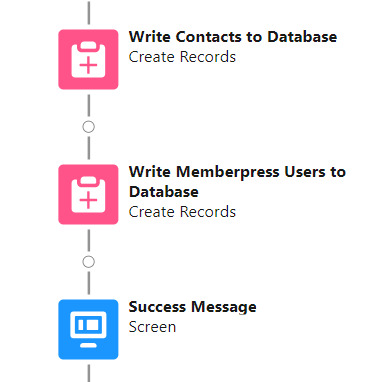

After the last uploaded row in the loop finishes, then the Flow is closed out by writing Contact_Collection and User_Collection to the database. Queueing up individuals into collections in this manner causes Salesforce to bulkify the write operations which helps avoid hitting governor limits. When the Flow is done, a success screen with some statistics is displayed.

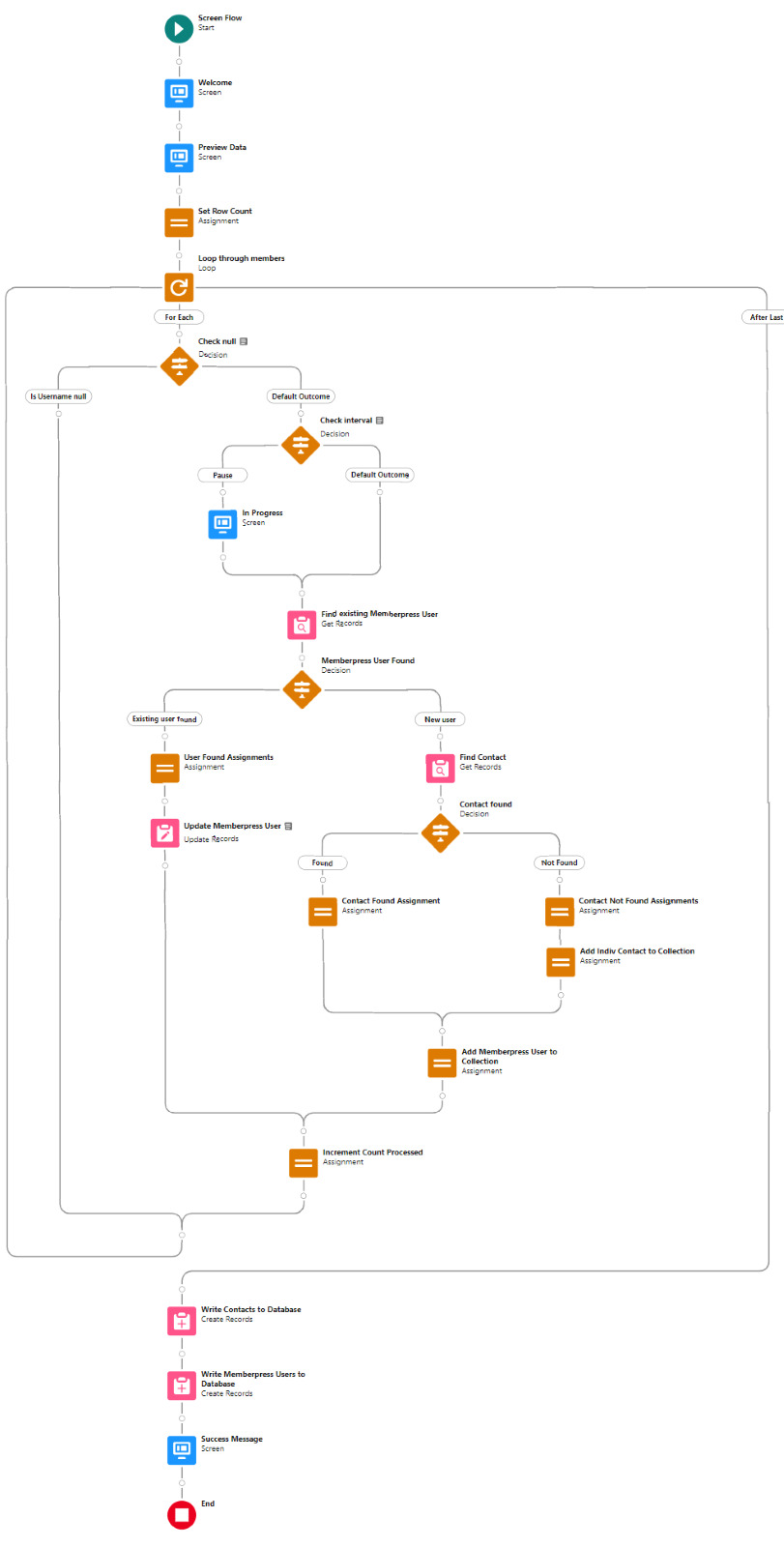

The entire Flow looks like this:

Flow variables

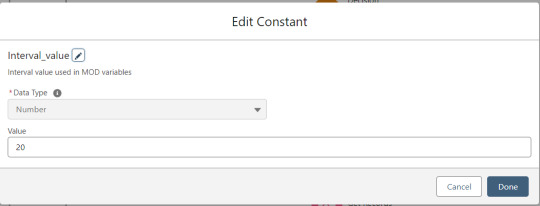

Interval_value determines the number of rows to process before pausing and prompting the user to click next to continue.

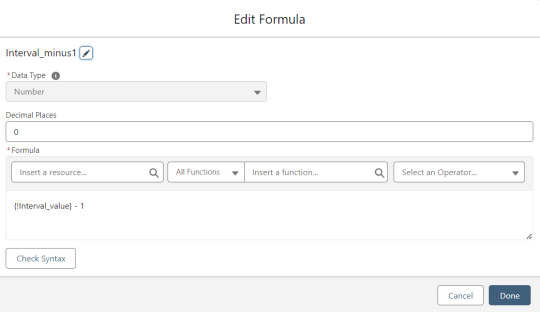

Interval_minus1 is Interval_value minus one.

MOD_Interval is the MOD function applied to Count_Processed and Interval_value.

The Count_Processed variable is set to start at -1.

Supporting Flows

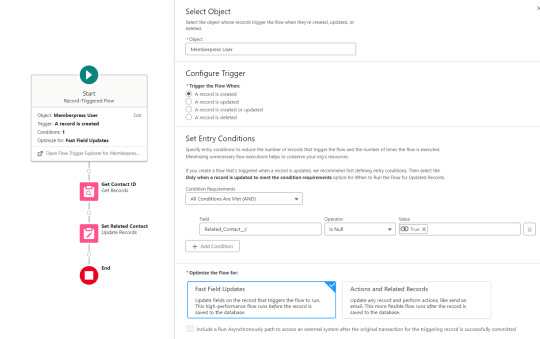

Sometimes one Flow just isn’t enough. In this case there are three additional record triggered Flows configured on the Memberpress User object to supplement Screen Flow data import operations.

One triggers on new Memberpress User records only when the Related Contact field is blank. A limitation of the way the Screen Flow batches new records into collections before writing them to the database is that there’s no way to link a new contact to a new Memberpress User. So instead when a new Memberpress User record is created with no Related Contact set, this Flow kicks in to find the Contact by matching email address. This Flow’s trigger order is set to 10 so that it runs first.

The next one triggers on any new Memberpress User record, reaching out to update the registration date and membership level fields on the Related Contact record

The last one triggers on updated Memberpress User records only when the memberships field has changed, reaching out to update the membership level field on the Related Contact record

0 notes

Text

Practical Python in Power BI: Cleaning constituent data using dataprep.ai

Power BI is a powerful tool. In my consulting work I utilize Power Query for Power BI to transform and prepare constituent data for system migrations. One recent breakthrough in regards to making that even more powerful and efficient was the implementation of Python scripting and the dataprep library.

The following articles were very helpful for figuring out how to do this:

How to Use Python in Power BI - freeCodeCamp

Run Python scripts in Power BI Desktop - Microsoft

There's a major discrepancy between those articles - the freeCodeCamp article provides instructions on how to use a Python environment managed via Anaconda in Power BI; whereas Microsoft's documentation warns that Python distributions requiring an extra step to prepare the environment, such as Conda, might fail to run. They advise to instead use the official Python distribution from python.org.

I've tried both, and as far as I can tell both methods seem to work for this purpose. When installing the official Python distribution, the only pre-packaged installer available is for the current version (currently 3.11.4) which requires a little bit of dataprep debugging post-install to get it working. Anaconda makes it easier to install prior Python versions and to switch between multiple Python environments (I successfully tested this in a Python 3.9 installation running in Anaconda). The following instructions are written for the former method though, using the latest version of Python installed via their Windows executable per Microsoft's recommendation.

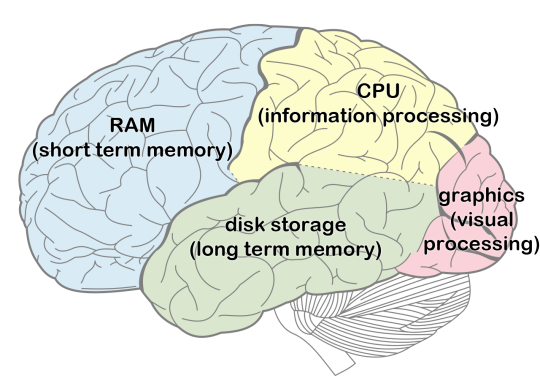

To conceptualize how Power BI works with Python, it's important to understand them as entirely separate systems. For the purpose of data transformation, a Power Query Python scripting step loads the previous query step into a pandas dataframe for Python to execute, then loads the output of that back to the next query step.

So with that context, the way we'll approach this install is like so:

Set up a local Python development environment

Install the Dataprep library within that

Utilize a test script to debug and verify that the Python environment is working as expected

Configure Power BI to tap into the Python environment

1. Set up a local Python development environment

The first step is easy, navigate to python.org/downloads, click the Download button, and execute the installer keeping all the default settings.

Once you have Python installed, then open a command prompt and run the following commands:

py -m pip install pandas

py -m pip install matplotlib

After installing these two libraries, you've now got the basics set to use Python in Power BI.

2. Install the Dataprep library

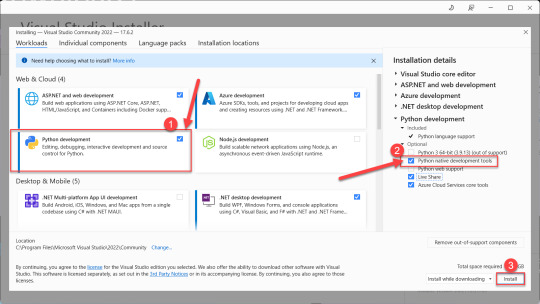

Installing the Dataprep library comes next, and to do that you need Microsoft Visual C++ 14.0 installed as a prerequisite. Navigate on over to the Microsoft Visual Studio downloads page and download the free Community version installer.

Launch the Visual Studio installer, and before you click the install button select the box to install the Python development workload, then also check the box to install optional Python native development tools. Then click install and go get yourself a cup of coffee - it's a large download that'll take a few minutes.

After the Visual Studio installation completes, then head back to your command prompt and run the following command to install Dataprep:

py -m pip install dataprep

3. Utilize a test script to debug and validate the Python environment

With the local Python development environment and Dataprep installed, you can try to execute this test script by running the following command in your command prompt window:

py "C:\{path to script}\Test python pandas script.py"

In practice this script will fail if you try to run it using Python 3.11 (it might work in Python 3.9 via Anaconda). It seems that the reason the script fails is because of a couple of minor incompatibilities in the latest versions of a couple packages used by Dataprep. They're easily debugged and fixed though:

The first error message reads: C:\Users\yourname\AppData\Local\Programs\Python\Python311\Lib\site-packages\dask\dataframe\utils.py:367: FutureWarning: pandas.Int64Index is deprecated and will be removed from pandas in a future version. Use pandas.Index with the appropriate dtype instead.

To fix this error, simply navigate to that file location in Windows Explorer, open the utils.py file, and comment out line 367 by adding a pound sign at the beginning. While you're in there, also comment out lines 409-410 which might produce another error because they reference that function from line 367.

After making that adjustment, if you return to the command line and try to execute the test script you'll encounter another error message. This time it reads: cannot import name 'soft_unicode' from 'markupsafe'

Googling that message turns up a lot of discussion threads from people who encountered the same problem, the upshot of which is that the soft_unicode function was deprecated in markupsafe as of version 2.1.0, and the fix is a simple matter of downgrading that package by running this command in your command line window: py -m pip install markupsafe==2.0.1

After those adjustments have been made, you should be able to run the test script in your command line window and see this successful result:

4. Configure Power BI to tap into the Python environment

You're so close! Now that you have Dataprep working in Python on your local machine, it's time to configure Power BI to leverage it.

In Power BI Desktop - Options - Python scripting, ensure that your Python installation directory is selected as the home directory. Note that if you manage multiple environments via Anaconda, this is where you would instead select Other and paste an environment file path.

Now in Power Query, select Transform - Run Python Script to add a step to your query. The script used here differs in a couple key ways from the test Python script run via your command prompt:

omit import pandas and import numpy

instead of defining df as your pandas dataframe, use the predefined dataset dataframe

My final script, pasted below, leverages the phone and email cleanup functions in Dataprep, as well as leveraging Python to calculate when a proper case cleanup is needed in a slightly more efficient manner than my previous PBI steps to clean that up.

Scripts

Power BI Python script syntax

# 'dataset' holds the input data for this script dataset['FirstName Lower'] = dataset['FirstName'] == dataset['FirstName'].str.lower() dataset['FirstName Upper'] = dataset['FirstName'] == dataset['FirstName'].str.upper() dataset['FirstName Proper'] = dataset['FirstName'].str.title() dataset['LastName Lower'] = dataset['LastName'] == dataset['LastName'].str.lower() dataset['LastName Upper'] = dataset['LastName'] == dataset['LastName'].str.upper() dataset['LastName Proper'] = dataset['LastName'].str.title() from dataprep.clean import validate_phone dataset['Valid Phone'] = validate_phone(dataset["Phone"]) from dataprep.clean import clean_phone dataset = clean_phone(dataset, "Phone") from dataprep.clean import validate_email dataset['Valid Email'] = validate_phone(dataset["Email"]) from dataprep.clean import clean_email dataset = clean_email(dataset, "Email", remove_whitespace=True, fix_domain=True)

Python test script syntax

import pandas as pd import numpy as np df = pd.DataFrame({ "phone": [ "555-234-5678", "(555) 234-5678", "555.234.5678", "555/234/5678", 15551234567, "(1) 555-234-5678", "+1 (234) 567-8901 x. 1234", "2345678901 extension 1234" ], "email": [ "[email protected]", "[email protected]", "y [email protected]", "[email protected]", "H [email protected]", "hello", np.nan, "NULL" ] }) from dataprep.clean import validate_phone df["valid phone"] = validate_phone(df["phone"]) from dataprep.clean import clean_phone df = clean_phone(df, "phone") from dataprep.clean import validate_email df["valid email"] = validate_phone(df["email"]) from dataprep.clean import clean_email df = clean_email(df, "email", remove_whitespace=True, fix_domain=True) print(df)

0 notes

Text

Lessons learned from my dumb smart home

So, uh, long time no blog. It's been over four years since my last post. A few things have happened since.

I figure the best way to brush off the digital cobwebs is to share something amusing. I like gadgets. I'm not embarrassed to admit that, except when I think back to my Google Glass year a decade ago.

Most of my technology purchases now are more reasonably priced, everyday tech, like smart home devices. I've had an Amazon Echo since the first generation was released, and accumulated them in most rooms of our house since. We have a Hue lighting system, and a Ring alarm, and assorted other small connected devices.

There are things I really enjoy about these amenities. I highly recommend the premium lighting package. I enjoy the convenience of controlling lights by voice commands, and calling out for a weather forecast or a song. It’s also neat that my devices have APIs which can talk to each other, allowing me to program custom automations and integrations between them.

But there’s another side to this technology. And I’m not talking about the level of access and control of our personal data we’ve handed to Amazon. *shudder* No, the other side I’m talking about is when the technology just doesn’t work. When my smart home gets really dumb, sometimes comically so.

Too many Alexas

The idea was to have a voice assistant system that works through the whole house. Turns out, “works” is doing a lot of work there. It does not work well when multiple Echos hear the same command, then a timer starts on the wrong device, or a song starts playing in adjoining rooms a couple seconds off from each other.

Zombie automations

A couple years ago when we took a holiday trip, I enabled a Phillips Hue routine which turns the lights on and off on a schedule with some randomness. I thought the latter part meant it would vary which lights came on, but it actually meant that all the lights in the house come on between 3:30 and 4pm and all the lights in the house turn off between midnight and 12:30am.

Five hundred plus days later I still haven’t gotten around to finding the setting to disable that automation. I just got in the habit of calling out “Alexa turn the lights off” in the late-afternoon, and reaching for the dimmer switch on my desk to turn them back on when I'm up late.

Janky integrations

A few years ago, I found that I could interface a motion sensor from the Ring Alarm with the Hue lights so that between 11pm and 6am motion detection would turn on a path of dimmed lights between the bedroom and bathroom. A second automation trigger on a ten minute delay would then turn the lights off. Well, it worked great, except those odd early mornings when a small child wants to hang out in that area before dawn, then the lights start turning off repeatedly.

When the kids get ahold of it

Smart home amenities are cool when you set them up and know how to use them, but can produce some unexpected results once the kids start playing with them. They push the Ring doorbell and yell into the camera every time we come in the front door. If a Hue dimmer switch isn’t working, we have to make sure it’s not controlling another room’s lights because a giggling child moved the switches around. And if we’re hearing Alexa play Who Let the Dogs Out for the thousandth time, I'm just thankful it isn’t Poop Poop Poop, by Poop Man in Fart Land. Again.

When the technology just doesn’t work

This anecdote isn’t about a smart home device, but another kind of home technology. Several years ago I needed to replace a broken toilet valve, and upgraded to a fancier "water saving" mechanism. It made sense to spend a little extra on something that would conserve resources and save money in the long run. Except it didn’t work as advertised. A few months after installing it, I noticed water continuously leaking into the toilet from around the valve seal. I tried a couple times to uninstall and reinstall to get it to seal properly, but each time it would soon go back to leaking again.

Finding parallels and lessons

I like to think that lessons learned from my personal tech fails can help inform my work, and can attempt to draw some parallels here:

Too many Alexas

Once upon a time, I built out a bunch of back end data flows to support my organization’s eCommerce initiative. Zapier was my best friend, and every Shopify transaction triggered a half-dozen different Zaps for different purposes. It was effective but messy, inefficient, and sometimes the nuance of how integrations differed resulted in confusing inconsistencies. We built something amazing with a minimal budget, but I regret the substantial technical debt.

Kind of like accumulating too many Echo devices, the results can be undesirable when an action triggers too many different processes. At home I addressed that by reprogramming the Echo devices to listen for different wake words, proving yet again that doing some basic analysis and disambiguation of tasks can result in low-effort high-impact improvements.

Zombie automations

Have you ever encountered a situation where nobody can quite explain how a particular type of data is showing up in a system? It just is. Probably got set up by a former co-worker, nobody knows which service account is running it, no less how to log in. And there’s a formula misconfigured so a calculated field is often wrong, but current staff are aware of what to look for and used to fixing them so it's all good.

Sometimes we just get too comfortable with inefficient systems, and the inefficiency leads to time constraints that push everyone to the point where nobody has bandwidth to consider change. You know what can be a great impetus for breaking the inertia? Talking through a business process and writing it down!

Business process documentation is a valuable exercise on its own, and it’s a powerful change driver when you look at a difficult system mapped out and go OMG I can’t just document this broken thing, let's actually fix it. Kind of like how writing this story finally prompted me to find and turn off that old holiday light schedule.

Janky integrations

Automations can get stuck in loops. A misconfiguration can trigger repeatedly and eat your entire Zapier monthly task cap in a day. Or sometimes you have a mandate to get something done, but the tools aren't well suited to the challenge, so you do your best to make them work and have to live with the poor result.

Years ago my then-new org wanted member data integrated with the CRM, but memberships were sold through a magazine fulfillment house whose only viable integration method was sending a nightly series of CSV files to an FTP server. The vendor had no way of receiving automated updates back or even bulk updating from a spreadsheet. Dirty data we’d corrected kept showing up. New duplicate customer records ran rampant. Transaction rows contained no unique identifier. I eventually dubbed that integration my river of shit, and wished I could get a do-over to narrow its scope and impact.

You’re best off identifying situations like that early, and rethinking them before they become fully implemented problems. My motion sensor / lights hack didn’t last long. Another Hue presence sensor purchase could have worked better, but wasn’t needed after I just put a cheap dumb motion sensor on the light in the laundry closet that we also walk by on the way to the bathroom.

When the kids get ahold of it

Your co-workers obviously aren’t small children, but there is an aspect of deploying systems that involves giving people new toys and then watching how they use them in unexpected ways. Observing behavior, adapting systems to meet it, and building in appropriate guardrails are all tactics that help serve your users better and prevent them from breaking new systems.

Conversely, our light switches are all labeled on the back in sharpie marker so we know where they’re supposed to go.

When the technology just doesn’t work

Sometimes you need to acknowledge when a system isn’t living up to expectations. After finally cutting my losses and getting rid of the “water saving” toilet valve in favor of a standard flap and pull chain, our water bill dropped by $5 to $10 a month.

Organizations experience extreme examples of that when an enterprise system choice isn’t aligned with their operations needs. It’s difficult to walk away from large sunk costs, but every single time you don’t it turns into an ineffective money pit and even larger opportunity cost.

0 notes

Text

Creating a box office member lookup app with Glide using data from Salesforce

It's a pretty cool feeling when you're looking to build something very specific and you find a platform that does that specific thing really well. I recently had that experience and as a result have become a fast fan of Glide Apps.

The use case at hand is for box office staff working at the American Craft Show to look up and check in members from our database. Free admission to the show is a benefit of membership in the American Craft Council, and a decent chunk of those member tickets are claimed on site the day of the show.

There's a longer backstory to how this evolved, but the pertinent part is that we have a relatively new Salesforce CRM database that we're now able to leverage to build systems on top of to make tasks like this more efficient. The first iteration of this app was built on a platform which enabled querying on the last name field to pull contact records into a grid, from which box office staff could then double tap a small edit button next to any one of them, which opened a modal window where they check a box and then the Update button to send that data back to the CRM.

It was a good first effort, but had some pretty glaring shortcomings. In particular we faced adoption challenges with box office staff, who had been used to a different vendor platform for years that required them to click through multiple screens to verify membership and then offered no way to check them in. We succeeded at provisioning an interface that made the lookup portion more efficient, but staff weren't used to checking people in and the mechanism for that was just too clunky. Four taps may not seem like much, but it's a big deal in a fast-paced environment with customers lined up, and especially when the edit button is too small and requires a quick double tap on just the right spot to work.

So I went back to the drawing board, and at a point in that process it occurred to me that I might be better off moving the data out of Salesforce to broaden the pool of potential options. That led me think of the Google Sheets Data connector for Salesforce, followed by a brief flirtation with building something native in there using Google Apps Script, followed quickly by the revelation that's beyond my technical depth and I'm not up for the learning curve right now, but hey maybe there's something else out there which can help leverage this.

Enter Glide Apps. They're a pretty new startup that promises the ability to create a code-free app from a Google Sheet in 5 minutes. And even better, Soliudeen Ogunsola wrote this tutorial on Creating an Event Check-in App with Glide. It was a magic "this looks perfect" moment, and sure enough after having spent hours trying a few things that didn't work I gave this a shot and within a half hour had a functional prototype of something that worked perfectly.

Some of the things that I learned while building this out and getting it ready to use in production:

It's important for both consistency and efficiency's sake to make the data refresh process as easy and repeatable as possible. With that in mind, it's really important to consider that the Salesforce Data Connector deletes and reloads all data in the spreadsheet whenever it's refreshed. On the up side though, as long as the column headings remain consistent then the mappings set up in Glide Apps continue working seamlessly after the spreadsheet data is refreshed.

Because of that, I created a custom checkbox field on the Contact object for ticket pickup which is set to FALSE by default. That enabled it to be included in the query rather than added manually each time the data is refreshed.

Glide allows only two fields to be displayed in the List layout. In our case I wanted to display the member's full name as the title, then their City, State, and ZIP Code as the subtitle. I initially concatenated those fields in the Google Sheet, but to make it more easily repeatable I subsequently created a formula field on the Contact object in Salesforce that did the same concatenation and then included that field in the Data Connector query.

Adding a Switch to the Details layout in Glide enables the user to edit only that data point. The allow editing option can remain off so they can't change any other details on the contact record - the only interactive element on screen is the button to check them in.

I attempted to build a connection back into Salesforce to record the ticket pickup on their record via Zapier. However, when I tested checking one person in it triggered thousands of Zapier tasks. Something in the architecture is causing Zapier to think lots of rows were updated when I think they maybe had just been crawled. Point is that didn't work and thank goodness Zapier automatically holds tasks when it detects too high a volume all at once.

The volume of records that we're dealing with (in the tens of thousands) causes the Glide App to take a couple minutes to load when it's first opened. It requires coaching a little patience up front, but the good news is that's a one time deal when the app is initially opened. From that point forward everything works instantaneously. It's ultimately an improvement over the previous iteration, which would load quickly but then took several seconds to bring contact data into the grid on every member lookup.

I'm not on site at our San Francisco show, but can see that on day one of the show there are dozens of members recorded in the spreadsheet as having checked in, and I haven't gotten any frantic phone calls about it not working, so at this point I'm going to assume that means it's a success. But then I was pretty confident it would work. When it's just this simple to use, the odds seem good:

0 notes

Text

Super simple read more link using only CSS

Had an interesting challenge to work through the other day - I wanted to hide some description text on a webpage behind a Show Description link, but had to accomplish that using only CSS animation because our CMS (rightfully) will not allow me to inject arbitrary Javascript into a page via the WYSIWYG.

I mean I didn’t really have to, I could have gone through the process of staging an update and pushing code to production. It’s more accurate to say I wanted to avoid that for this particular one-off project.

So while I had a general idea of how this could be built using a display:none property that toggles on/off by clicking another element, I did a quick web search for a code sample before reinventing any wheels. And sure enough, found this Codepen - Pure CSS read more toggle by Kasper Mikiewicz

Using that as a starting point, I stripped down the code and it works like a charm. The end result can be seen on my org’s conference schedule page. And here’s the code sample:

<style> .read-more-target { display: none; }

.read-more-state:checked ~ .read-more-target { display: block !important; margin-top: 1em; }

.read-more-state ~ .read-more-trigger:before { content: 'Show description'; }

.read-more-state:checked ~ .read-more-trigger:before { content: 'Hide description'; }

.read-more-trigger { cursor: pointer; color: #0000FF; font-size: 0.85em; } </style>

<input class="read-more-state" id="item1" type="checkbox" /> <span class="read-more-target"><i>Description:</i>Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo consequat.</span> <label class="read-more-trigger" for="item1"> </label>

0 notes

Text

How to configure Salesforce Customizable Rollups to roll up contact soft credits to Households

My org cut over from Raiser's Edge to Salesforce in January 2018, and one of the pain points we encountered early on was that donation soft credits were recorded at the contact level but not at their household account level. A structural solution to this issue became available with the launch of NPSP Customizable Rollups in June 2018, and I'm now in process of building them out for my org. The process was confusing at first, but once I figured it out it was surprisingly straightforward and easy to do. This blog will lay out the process in concise and easy-to-follow steps.

What's a soft credit?

Before diving into the steps, a quick review of terminology.. Soft credits are when a donor is credited with a donation that they didn't actually write the check for. A common example is when the check comes from a family foundation or donor advised fund. The hard credit for that donation belongs to the entity that sent the check, but the soft credit goes to the donor who directed them to cut that check. Other examples can include matching gift campaigns or crowdfunding efforts. Guidelines for what gets counted as a soft credit vary at different nonprofit organizations, and consequently Salesforce provides some flexibility around how to configure them as referenced in the documentation on Soft Credit and Matching Gift Setup.

Contact soft credits vs. Account soft credits

A key concept that tripped me up at first is the distinction between Account Contact Soft Credits and Account Soft Credits. Finding this description in the NPSP Soft Credits Overview documentation was my aha moment where how to do this really clicked: "If you enable Customizable Rollups, you can create Contact Soft Credit rollup fields at the Account level. ... With Account Contact Soft Credits, it is now possible to see the total giving for all Household members."

In short, Account Contact Soft Credits are exactly what I wanted to configure. Account Soft Credits on the other hand are a very different thing, they're an entirely separate feature which enables directly soft crediting an Account for a donation made by another entity.

On to the tutorial

Based on the aforementioned aha moment, and on information gleaned from each of the articles linked to above, it dawned on me that the process of configuring Salesforce Customizable Rollups to roll up contact soft credits to Households is really quite straightforward:

Create new custom fields on the Account object

Add those fields to the desired page layouts

Configure customizable rollups to populate data to those fields

1. Create new custom fields on the Account object

First step in creating any customizable rollup is to create a field to populate the field to. In this case I'm creating fields on the Account object which mirror existing fields on the Contact object, so I just place two windows side-by-side - both in Setup - Object Manager, one to reference the existing Contact fields and the other to create new corresponding Account fields of the same field type. Then just copy-paste the title over, mirror the other details, save, and repeat for the remaining fields.

2. Add those fields to the desired page layouts

In Setup - Object Manager - Account, open the page layouts that you want to display Soft Credit info on and add them to the page layout. In the example here, I've created a new section on the page titled Soft Credit Totals and dragged the fields into them. If you want this to mirror how it's setup on the Contact page layout, you could also pull that up in another window and mirror the setup.

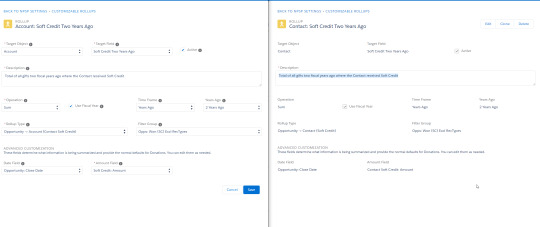

3. Configure customizable rollups to populate data to those fields

Now to configure the Customizable Rollup, would you believe that I'm about to tell you that's as easy as pulling up the existing Contact rollups in one window and mirroring the configuration in another window for new Account rollups? Because it's actually that easy. In NPSP Settings - Donations - Customizable Rollups, click Configure Customizable Rollups, then New Rollup. Target Object = Account, Target Field = a field you created in Step 1, Rollup Type = Opportunity -> Account (Contact Soft Credit). All other settings should simply mirror the Contact rollup in your other window.

Conclusion

That really doesn't look so hard, does it? I'm here to tell you it wasn't. Now go take two hours to implement it at your org and don't keep your co-workers waiting like I did (sorry Bekka).

0 notes

Text

Tips and tools to migrate data from Raiser’s Edge to Salesforce

This blog post is overdue. My organization cut over from Raiser’s Edge to Salesforce early this year. Data conversion was a huge challenge, and this post aims to pay forward some tips, scripts, and spreadsheets for tackling an RE migration.

It needs to start with some acknowledgements. These SQL scripts derive from the scripts in Jon Goldberg’s post on Exporting Raiser’s Edge for CiviCRM. I don’t know how we would have completed our migration without that resource. And these field mapping spreadsheets derive from templates provided by Andrea Hanson of Redpath Consulting Group. Andrea and the whole team over at Redpath have been amazing implementation partners.

Downloads

In case you’re just here for the downloads, and you didn’t spot the links in the preceding paragraph, here you go:

Download Raiser’s Edge extraction SQL scripts (.zip)

Download Raiser’s Edge to Salesforce field mapping templates (.zip)

These resources were used to migrate into Salesforce from The Raiser’s Edge Version: 7.93.5782.1

In order to execute the SQL queries, you will need access to the server on which RE is running and administrator credentials for SQL Server Management Studio. My preferred method to pull data out is to copy the query results in the Remote Desktop window then paste them into an Excel sheet on my local desktop.

Recommended Tools

Ablebits Ultimate Suite for Excel is an essential tool for comparing and merging tables in Excel.

A programmable keyboard helps automate repeated keystroke routines to cut through data combing tasks like a warm knife through butter. My go to model is the Logitech G610. That’s been a solid choice that was reasonably priced and serves me well, though I miss the dedicated macro record key from my trusty old G710 (now discontinued). The more expensive Logitech G910 appears to have a macro record key and could be a better choice for beginners.

DemandTools is a data quality power toolset that is essential for deduplicating data once it's in Salesforce. Up until mid-2018 the product was available via donation for most c3 nonprofits. The vendor's current NFP program offers a 20% discount to most nonprofits and via donation to nonprofits with less than $250,000 in net assets.

Tips

Like I wrote to open this blog post, it’s overdue. I have my excuses - another, even more daunting data migration came on the heels of this one; I also had a grad school program to contend with. But ultimately it’s been so long since our RE migration, and there were so many quirks involved, I would be hard pressed to write up a full case study at this point. But I still remember some of the pain points and can provide some tips here on what to watch out for.

Raiser's Edge has a Records table and a Constituents table. Constituents are basically a full-fledged customer. Records include constituents plus other leaner contacts in the system, such as spouses or affiliated contacts of a constituent.

In many of the back end RE tables, the primary key field is labeled CONSTIT_ID. This usually corresponds to the ID field from the Records table, not the CONSTITUENT_ID field. Except in a couple of cases where it doesn't

The ID fields in both the Records and Constituent tables are sequential numbers which began at 1, and for a significant chunk of customers who were imported into RE years ago when that was deployed, these two ID numbers are exactly the same. This can be a huge gotcha when figuring out how to join data between tables, because if you join the tables on the wrong ID it may appear accurate at first until you find later that it wasn't. For this reason, it's also important to check a true random sample of data, don't only spot check the longtime members who you know well.

Check your work thoroughly at each step to ensure the data is coming together as expected. Save your work often. Save a new version of the files you're working at least once a day. File system space and organization be damned, it's just more important to have point in time copies you can revert back to because at some point something will goes sideways and you'll need it.

Several of the SQL scripts are just a SELECT * FROM {table} function. These are pretty straightforward data points that I would extract with the basic query and then subsequently use Ablebits to merge into the import template by matching the ID number up.

Email addresses are technically a type of phone number in RE's data structure. In order to export them you have to join the records table to the constituent address table, joined to the address table, joined to the constituent address phones table, joined to the phones table. But on the bright side, once you get there you can export phone numbers as well

I counted 594 distinct tables in our Raiser's Edge SQL database. Of those 308 were completely empty. That has no real bearing on anything, but it's interesting trivia

The way that spouse relationships are recorded is a challenge. In some cases they each have a relationship pointing in the other's direction, others only have one relationship recorded moving in one direction. In our case about a third of them had it going both ways, which resulted in some duplication that I had to sort out. I don't remember exactly how I did that, just that it was tricky and time consuming and generally made me grouchy

Do not deduplicate customer records prior to import. There's too much risk that trying to do so could result in orphaned donation records, it's less risky and just easier to put them all into Salesforce first and then use DemandTools to merge the dupes. Also, if your RE deployment was anything like ours, expect a significant number of duplicate contacts to result because a contituent was also been recorded as an affiliated contact on other consitutent records in multiple instances.

The TABLEENTRIES table in Raiser's Edge is the key to translating many of the fields located in other tables. The other tables often contain a mysterious numeric value in places where you might expect to see actual data, that value needs to be matched to the data via TABLEENTRIES. Some of the data which this applies to are salutation types, campaign types, address types, countries, phone types, payment types, and relationship types. I used Ablebits to merge these in Excel as opposed to incorporating those mappings in the SQL scripts.

The way that campaigns are structured in Raiser's Edge is fundamentally different from how that works in Salesforce. This presents a challenge in terms of converting the data to fit the new system, but also presents an opportunity to streamline your campaign data structure!

In Raiser's Edge there are four defined levels - campaign, fund, appeal, and package. Objects at each level exist in a many-to-many relationship (i.e. a fund can be associated with multiple child appeals, and an appeal can be associated with multiple parent funds). Individual donations can be tied to an object at any level.

In Salesforce there is only one type of object, called a campaign. Campaigns can be structured in hierarchical relationships in a one-to-many relationship (i.e. a campaign can be associated with multiple child campaigns, but a campaign can only have one parent campaign). Individual donations can be tied to an object at any level.

Our legacy Raiser's Edge database contained hundreds of distinct campaigns, many of which were entered years ago and only used for a single donation or handful of donations. Through consolidation we reduced the number of campaigns by over 70%, then mapped legacy campaigns to new campaigns on the gift records prior to importing those so that they all magically landed where they were supposed to in the new structure

A breakthrough in our cleanup process came when we realized that most of the appeals and packages that were associated with multiple parent campaigns could be migrated to picklist values on the Salesforce campaigns rather than needing to remain as a distinct campaign in the new schema.

As part of this transformation effort, we implemented a canonical naming structure so that child campaigns incorporate the parent campaign's name. That makes them all line up hierarchically when viewed in a flat alphabetical list, which is nice, but due to Salesforce's 80 character limitation for campaign names required abbreviating in some cases.

The spreadsheet which I created to analyze and consolidate RE campaigns ended up being twenty feet long printed out - 46 11x17” pages taped together 2 across and 23 down. We rolled that out on the conference room table so that development and finance staff could work through it together, making final changes in red ink that I then incorporated into the data staged for import. The photo of them working through that ginormous spreadsheet is my favorite image from this project.

Raiser's Edge SQL extract queries

You can use this link to download the entire set of SQL queries as a zip file, or for your copy-pasting convenience they're also listed below. These are just the ones that involve table joins, the zip file also includes single table exports, most of which are simply SELECT * from {table name}.

Address - phones

USE RE7 SELECT RECORDS.id , RECORDS.is_constituent , RECORDS.last_name , RECORDS.first_name , RECORDS.org_name , PHONES.phonetypeid , PHONES.num , PHONES.DO_NOT_CALL , ADDRESS.address_block , ADDRESS.city , ADDRESS.state , ADDRESS.post_code , ADDRESS.country , CONSTIT_ADDRESS.type , CONSTIT_ADDRESS.indicator , CONSTIT_ADDRESS.preferred , CONSTIT_ADDRESS.sendmail , CONSTIT_ADDRESS.seasonal , CONSTIT_ADDRESS.seasonal_from , CONSTIT_ADDRESS.seasonal_to FROM RECORDS LEFT JOIN CONSTIT_ADDRESS ON RECORDS.id = CONSTIT_ADDRESS.constit_id LEFT JOIN dbo.ADDRESS ON CONSTIT_ADDRESS.id = ADDRESS.id LEFT JOIN CONSTIT_ADDRESS_PHONES ON ADDRESS.id = CONSTIT_ADDRESS_PHONES.constitaddressid LEFT JOIN PHONES ON CONSTIT_ADDRESS_PHONES.phonesid = PHONES.phonesid where num is not null order by records.last_name asc, records.first_name asc, records.org_name asc

Address - seasonal

use RE7 SELECT RECORDS.id , RECORDS.last_name , RECORDS.first_name , RECORDS.org_name , CONSTITUENT.records_id , CONSTITUENT.id , CONSTITUENT.key_name , CONSTITUENT.first_name , ADDRESS.address_block , ADDRESS.city , ADDRESS.state , ADDRESS.post_code , ADDRESS.country , CONSTIT_ADDRESS.type , CONSTIT_ADDRESS.indicator , CONSTIT_ADDRESS.preferred , CONSTIT_ADDRESS.sendmail , CONSTIT_ADDRESS.seasonal , CONSTIT_ADDRESS.seasonal_from , CONSTIT_ADDRESS.seasonal_to FROM RECORDS LEFT JOIN CONSTITUENT ON RECORDS.id = CONSTITUENT.records_id LEFT JOIN CONSTIT_ADDRESS ON CONSTITUENT.id = CONSTIT_ADDRESS.constit_id LEFT JOIN dbo.ADDRESS ON CONSTIT_ADDRESS.address_id = ADDRESS.id where seasonal = -1 order by records.last_name asc, records.first_name asc, records.org_name asc

Address

use RE7 SELECT RECORDS.id , RECORDS.last_name , RECORDS.first_name , RECORDS.org_name , CONSTITUENT.records_id , CONSTITUENT.id , CONSTITUENT.key_name , CONSTITUENT.first_name , ADDRESS.address_block , ADDRESS.city , ADDRESS.state , ADDRESS.post_code , ADDRESS.country , CONSTIT_ADDRESS.type , CONSTIT_ADDRESS.indicator , CONSTIT_ADDRESS.preferred , CONSTIT_ADDRESS.sendmail , CONSTIT_ADDRESS.seasonal , CONSTIT_ADDRESS.seasonal_from , CONSTIT_ADDRESS.seasonal_to , CONSTIT_ADDRESS.date_from as valid_from , CONSTIT_ADDRESS.date_to as valid_to FROM RECORDS LEFT JOIN CONSTITUENT ON RECORDS.id = CONSTITUENT.records_id LEFT JOIN CONSTIT_ADDRESS ON CONSTITUENT.records_id = CONSTIT_ADDRESS.constit_id LEFT JOIN dbo.ADDRESS ON CONSTIT_ADDRESS.address_id = ADDRESS.id

Attributes

SELECT DESCRIPTION , at.CODETABLESID , LONGDESCRIPTION FROM TABLEENTRIES te LEFT JOIN AttributeTypes at ON te.CODETABLESID = at.CODETABLESID ORDER BY DESCRIPTION --- SELECT DISTINCT * FROM AttributeTypes at LEFT JOIN TABLEENTRIES te ON te.CODETABLESID = at.CODETABLESID ORDER BY DESCRIPTION --- SELECT * FROM ConstituentAttributes

Constituent Codes

USE RE7 SELECT cc.constit_id as record_id, t.LONGDESCRIPTION, cc.DATE_FROM, cc.DATE_TO, c.NAME FROM dbo.Constituent_Codes AS cc INNER JOIN dbo.TABLEENTRIES AS t ON cc.CODE = t.TABLEENTRIESID INNER JOIN dbo.CODETABLES AS c ON c.NAME = 'Constituent Codes' AND t.CODETABLESID = c.CODETABLESID

Gifts

use RE7 SELECT gs.GiftId , g.CONSTIT_ID as records_id , p.APPEAL_ID as appeal_id , p.ID as package_id , gs.Amount , g.RECEIPT_AMOUNT , g.DTE as gift_date , g.DATE_1ST_PAY , g.DATEADDED , CAMPAIGN.DESCRIPTION as campaign , FUND.DESCRIPTION as fund , APPEAL.DESCRIPTION as appeal , p.DESCRIPTION as package , g.PAYMENT_TYPE , g.ACKNOWLEDGE_FLAG , g.CHECK_NUMBER , g.CHECK_DATE , g.BATCH_NUMBER , g.ANONYMOUS , gst.LONGDESCRIPTION as giftsubtype , g.TYPE , DBO.TranslateGiftType(g.TYPE) as type2 , g.REF , g.REFERENCE_DATE , g.REFERENCE_NUMBER , g.ANONYMOUS , g.ACKNOWLEDGE_FLAG , g.AcknowledgeDate , g.GiftSubType FROM GiftSplit gs LEFT JOIN FUND on gs.FundId = FUND.id LEFT JOIN APPEAL on gs.AppealId = APPEAL.id LEFT JOIN CAMPAIGN on gs.CampaignId = CAMPAIGN.id LEFT JOIN GIFT g on gs.GiftId = g.ID LEFT JOIN Package p on gs.PackageId = p.ID LEFT JOIN TABLEENTRIES gst on g.GIFTSUBTYPE = gst.TABLEENTRIESID

Pledge payments

/* Find all pledge installments, and their related payments if they exist. */ SELECT i.InstallmentId , i.PledgeId , i.AdjustmentId , i.Amount as scheduled_amount , i.Dte , ip.Amount as actual_amount , ip.PaymentId , g.CONSTIT_ID , g.RECEIPT_AMOUNT , g.DTE as receive_date , g.TYPE , DBO.TranslateGiftType(g.TYPE) as type FROM Installment i LEFT JOIN InstallmentPayment ip ON i.InstallmentId = ip.InstallmentId LEFT JOIN GIFT g ON ip.PaymentId = g.ID /* Adjustments are stored in here too - when an adjustment happens, the pledge ID of the original value is blanked */ WHERE i.PledgeId IS NOT NULL ORDER BY i.AdjustmentId /* Write-off Types: Covenant WriteOff, MG Write Off, Write Off */

Pledges

/* Find all GIFT records with one or more associated Installment records. These are pledges OR recurring gifts. */ SELECT DISTINCT g.CONSTIT_ID , g.ID as GiftId , g.Amount , g.DTE as receive_date , FUND.DESCRIPTION as fund , FUND.FUND_ID , CAMPAIGN.DESCRIPTION as campaign , APPEAL.DESCRIPTION as appeal , g.PAYMENT_TYPE , g.ACKNOWLEDGEDATE , DBO.TranslateGiftType(g.TYPE) as type , g.REF as note ,DATE_1ST_PAY ,g.DATEADDED ,g.DATECHANGED ,INSTALLMENT_FREQUENCY ,NUMBER_OF_INSTALLMENTS ,POST_DATE ,POST_STATUS ,REMIND_FLAG ,Schedule_Month ,Schedule_DayOfMonth ,Schedule_MonthlyDayOfWeek ,Schedule_Spacing ,Schedule_MonthlyType ,Schedule_MonthlyOrdinal ,Schedule_WeeklyDayOfWeek ,Schedule_DayOfMonth2 ,Schedule_SMDayType1 ,Schedule_SMDayType2 ,NextTransactionDate ,Schedule_EndDate ,FrequencyDescription , r.CONSTITUENT_ID FROM Gift g LEFT JOIN GiftSplit gs on g.ID = gs.GiftId LEFT JOIN FUND on gs.FundId = FUND.id LEFT JOIN APPEAL on gs.AppealId = APPEAL.id LEFT JOIN CAMPAIGN on gs.CampaignId = CAMPAIGN.id LEFT JOIN RECORDS r ON g.CONSTIT_ID = r.ID JOIN Installment i ON g.ID = i.PledgeId

Salutations

SELECT R.ID, R.Constituent_ID, R.FIRST_NAME, R.LAST_NAME, R.[DATE_LAST_CHANGED], case when R.PRIMARY_ADDRESSEE_EDIT = -1 then R.PRIMARY_ADDRESSEE else dbo.GetSalutation(R.PRIMARY_ADDRESSEE_ID,R.ID,'',0,0,0,getdate()) end as 'PRIMARY_ADDRESSEE', case when R.PRIMARY_SALUTATION_EDIT = -1 then R.PRIMARY_SALUTATION else dbo.GetSalutation(R.PRIMARY_SALUTATION_ID,R.ID,'',0,0,0,getdate()) end as 'PRIMARY_SALUTATION', ADDSAL_TYPE.LONGDESCRIPTION as 'ADDSAL_TYPE_DESC',CS.SALUTATION_ID, CS.SEQUENCE, CS.EDITABLE, case when CS.EDITABLE = -1 then CS.SALUTATION else dbo.GetSalutation(CS.SALUTATION_ID,R.ID,'',0,0,0,getdate()) end as 'SALUTATION_CORRECT', CS.SALUTATION as 'SALUTATION_FIELD_INCORRECT' FROM [RECORDS] R left outer join CONSTITUENT_SALUTATION AS CS ON R.ID=CS.CONSTIT_ID left outer join TABLEENTRIES AS TE ON R.TITLE_1=TE.TABLEENTRIESID left outer join TABLEENTRIES ADDSAL_TYPE on CS.SAL_TYPE=ADDSAL_TYPE.TABLEENTRIESID ORDER BY CS.SEQUENCE

Soft credits

USE RE7 SELECT GiftId , ConstitId , Amount , 'Soft Credit' as soft_credit_type FROM GiftSoftCredit

Solicitor relationship

SELECT CONSTIT_ID , SOLICITOR_ID , TABLEENTRIES.LONGDESCRIPTION as solicitor_type , AMOUNT , NOTES , cs."SEQUENCE" as weight FROM CONSTIT_SOLICITORS cs LEFT JOIN TABLEENTRIES ON cs.SOLICITOR_TYPE = TABLEENTRIES.TABLEENTRIESID ORDER BY weight

Additional resources

In addition to the essential Exporting Raiser’s Edge for CiviCRM post mentioned above, Accessing the Raiser’s Edge database using SQL by SmartTHING was a useful reference during our migration.

Salesforce Data Migration - Raisers Edge by Larry Bednar is insightful reading for planning an RE migration. The NW Data Centric downloads page is also a treasure trove, and I wish I’d found their Standard NWDC Raisers Edge to Salesforce Data Processing Download sooner.

These Do’s and Don’ts of Data Migration by Megaphone Technology Consulting provide some very sound advice on planning for the human side for a data migration.

Another great resource on change management and planning for the human side of a database implementation is Your CRM Is Failing and It’s All Your Fault, a session presented by Karen Graham, Danielle Gangelhoff, Kelly Kleppe, and Libby Nickel Baker at the 2017 Minnesota Council of Nonprofits Communications and Technology Conference.

If you've read this far, I hope it was helpful and worth your time. Good luck on your Raiser's Edge migration journey!

0 notes

Text

File downloads restored

Throughout the years I've written numerous case study blog posts which then contain a file download link to obtain a template of the work described. It recently came to my attention that most of my download links were broken. Turns out I missed this annoucement about Dropbox shutting down links from the old Public folder as of September 1. The links have been restored and files can be downloaded again now.

(P.S. if you find a link that's still broken please let me know about it)

0 notes

Text

How to export contacts and notes from ACT!

My organization is in the midst of a Salesforce implementation. We're going in phases, replacing systems in order and getting departments live as they're built out and ready to go. Our first department that went live on Salesforce converted over from a legacy ACT! database. This is a case study of the steps taken to get contacts and notes data out of that system.

The department that we migrated into Salesforce first was chosen in part because their data needs were relatively simple. The department consists of just one employee (ah, small staff nonprofit life) and their customer data was stored in an older ACT! database where the only features being used were contacts and notes. The simplicity of this data model made for a relatively low bar for entry into the new system. The version they were on at migration was Sage ACT! Pro 2011, and the age of that software already presented a couple of challenges. For instance, after upgrading that person's computer earlier this year I found that the version of ACT! required Microsoft Office 2010 to export reports to Excel. And for months afterwards their old computer stayed running under my desk so it could be RDP'd onto for that purpose. *sigh*

So the environment for this case study is a client computer running Windows 7 Professional, Microsoft Office 2010, and Sage ACT! Pro 2011.

I researched how to get data out of ACT! by scouring support articles and forum posts, and what I found was that there seemed to be a couple of methods for getting customer data out but couldn't find any successful methods for exporting notes data. I did find a couple of products for sale in the $300-$500 range which could ostensibly do that for me, but for a one-time deal like this I prefer elbow grease to tapping my limited available budget. The method I landed on as the best choice for getting data out of ACT! was to setup a connection to the source database file in Excel. Once again, the version of ACT! I'm working with requires Excel 2010. Along the way I did try running the data connection in Excel 2016 and it did not work.

The steps for setting up the data connection in Excel are as follows:

In the Data tab select From Other Sources - From Data Connection Wizard

Select Other/Advanced and click Next

Select ACT! OLE DB Provider for Reporting and click Next

In the Connection tab, select your database file (.PAD file extension) from wherever on your network that's stored, enter your ACT! user credentials, and click Test Connection to ensure that you're able to connect. Then click OK.

Select the desired table to export

Export into new worksheet

And boom, there I was able to get data out from the underlying contacts (VRP_CONTACT) and contact notes (VRP_CONTACT_NOTE) tables. Maybe it won't be so painful to extract that notes data after all. Eeeeeeexxxcellent!

With the data exported to Excel, I got to work writing formulas to convert it into our staging worksheets for upload into Salesforce. A detailed explanation of how those formulas work is beyond the scope of this case study, but you're welcome to download a copy of my ACT! data conversion template and pick them apart. The general idea is that the formulas get the data you need into the right columns, then select all and copy, paste values over the formulas, get to work cleaning it up, and once it's nice and pretty push it in to the new system.

It's important to note that in my experience the data connection proved to be brittle. It crashes easily, including every time I clicked the Refresh All button in Excel's Data tab. Therefore to update the data it was necessary to import each table again into a new tab, then go into the staging worksheets and run find-replace operations to modify the formulas to reference the new tables.

So I was chugging along getting data transferred from the source tables into the staging sheets when I got to the notes data and noticed something awry. That VRP_CONTACT_NOTE table had exported all of the notes data (enclosed in a ton of artifact coding), but there's no column indicating which customer each note belongs to. *commences swearing under my breath* So I had all the notes data in hand but no way of linking it to the proper customer records. That's a problem.

I started searching for a solution. First course was testing a bunch ACT! table exports, but nothing I found contained the data I needed to link notes back to their customers. I finally figured out a way to do it. It's not an elegant solution, nor for the faint of heart, but in the end this got the job done.

Step one is getting the notes data out of ACT!, which I accomplished via the Notes-History canned report inside of ACT! like so:

In ACT! navigate to Reports

Select Notes-History from the list

In the General tab select HTML File for the report output, Create report for All Contacts, and uncheck Exclude 'My Record'

In the History tab, uncheck all of the boxes (my use case didn't include this data)

In the Note tab, ensure that the Notes box is checked and click Custom for the Date Range

Click OK to export the report to an HTML file

Important caveat here, trying to export too much data at once via this method causes ACT! to hang. On my first attempt I tried to export everything and left it running at the end of the workday only to check several hours later and find that exported an HTML report with all of the customers listed but every notes field blank. So I started chunking it out a year at a time. If it doesn't spit out the report within a few minutes, then I'd cancel the operation, shorten the date range, and try again. At a point I realized that it was hanging on shorter and shorter date ranges, and figured out that I seemed to get better results by closing and re-opening ACT! after each export. And after rinse-wash-repeating that process through 15+ years worth of notes data, it was all successfully exported from the system. On to the challenge of parsing the HTML into usable data.

When you look at the HTML export it has a header at the top stating what the report is and who ran it, and a footer stating when it was run and the page number. In between it lists the date, time, and content of each note grouped together by contact. The contact's organization name is listed along with their name. The first step to extrapolating this is to remove the header and footer information. Open the HTML file in a text editor or code editor. The header is the first 94 lines of the file. The footer is the last 10 lines. Delete those, repeat for each of your export files, then copy-paste what remains into a single combined file.