
Introduction

With the advancement of computer graphics technology, landscapes and games that are hard to distinguish from reality are becoming familiar, and technologies that bridge virtual worlds and reality have emerged: VR (Virtual Reality), AR (Augmented Reality), and MR (Mixed Reality). In general, VR shuts out the real surroundings with dedicated goggles in order to deepen immersion in the virtual world. Specifically, it displays images on a panel inside the goggles, and the user views them with both eyes. Projecting images with parallax gives a real sense of depth and a stereoscopic effect. On the other hand, the result does not behave the way real eyes see: the image is either pan-focus (everything in focus) or has its focus fixed at a certain distance. Major IT companies such as Google, Facebook, and the VR manufacturer Oculus have announced that they are researching and developing solutions to this issue.

youtube

How do our eyes see the world?

How do our eyes actually perceive the world? When we look around, we feel that everything around us is in focus, but in theory that is impossible. It would be possible in principle only if the lens of the eye were a pinhole, and it is not. There is also parallax between the positions of the left and right eyes, yet we do not normally see double. This suggests that the brain's complementary processing corrects the image quite effectively. The same brain function may explain optical illusions in which colors appear to change or still images appear to move.

In the case of VR, the screen that displays the image is only a few centimeters from the lens surface of the eye. Viewing the image through a convex lens lets you see a landscape that fills the whole field of view, but the focal point stays on the screen plane, so a strange situation arises: the eye focuses close up on something that should appear far away. The eye obtains distance information not only from binocular parallax and aerial perspective, but also from the degree of lens adjustment (muscle tension). The visible image and the actual physical information therefore diverge, and this divergence shows up as so-called VR sickness.

When our eyes see an object, they try to focus instantly, but to do so they need a landmark to focus on. It may be part of an object, or a clearly recognizable pattern or scratch on a wall. With a wire mesh fence or a repetitive wall pattern, however, the left and right eyes may lock onto two different repeats of the pattern, and the perceived distance may not match the real one. Likewise, it is difficult or impossible to judge the distance to a brightly lit white wall without shadows or patterns, because there is no mark for measuring the distance.

CG space and focus

In the CG space, on the other hand, the camera settings (such as the focus distance) are arbitrary. With pan focus, everything from just in front of the lens to infinity can be in focus, and by setting the depth of field and the focus distance the camera can instead be made to focus at any chosen distance. In CG animation, the focus can be changed freely to suit the scene and the camera movement, but it is difficult to set the distance to the object to be focused on when the view moves in real time, as it does in VR. Therefore, based on one hypothesis, we will experiment with an autofocus function in VR space in a relatively easy way.

Principle and experiment of autofocus function

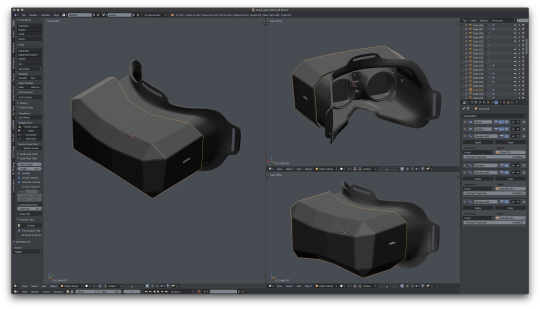

The open source 3D software “Blender” was used as the experimental environment. It is well suited to this kind of experiment because it is powerful for modeling and animation production and makes it easy to control objects with Python.

The program is divided into two parts. One imports the orientation of the headset (goggles) into Blender via serial communication. The other is focus control. For this experiment I built a simple headset from two square LCDs, a 6-axis gyro sensor, and an Arduino for communication. The video is sent over an HDMI cable, and the serial communication runs over a USB cable. The value from the gyro sensor is read as a quaternion using an internal library. With ordinary (X, Y, Z) rotation values, the error when the headset returned to its original direction was large, and the correct direction could not be obtained. Reading the quaternion and controlling the camera with that value solved the orientation problem.
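As a rough illustration of the first part, the sketch below parses one line of sensor text into a unit quaternion and uses it to rotate a "forward" vector. The `w,x,y,z` comma-separated wire format and the function names `parse_quaternion` and `rotate_vector` are assumptions made for this example; the post does not describe the actual Arduino protocol or code.

```python
import math

def parse_quaternion(line):
    """Parse 'w,x,y,z' text (assumed wire format) into a unit quaternion.

    Returns None for malformed input so a noisy serial line can be skipped.
    """
    parts = line.strip().split(",")
    if len(parts) != 4:
        return None
    try:
        w, x, y, z = (float(p) for p in parts)
    except ValueError:
        return None
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    if norm == 0.0:
        return None
    return (w / norm, x / norm, y / norm, z / norm)

def cross(a, b):
    """Cross product of two 3-component tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def rotate_vector(q, v):
    """Rotate vector v by unit quaternion q = (w, x, y, z)."""
    w, qv = q[0], q[1:]
    t = tuple(2.0 * c for c in cross(qv, v))
    u = cross(qv, t)
    return tuple(v[i] + w * t[i] + u[i] for i in range(3))

# Example: a 90 degree turn about the Y axis applied to a forward vector.
s = math.sqrt(0.5)
q = parse_quaternion(f"{s},0,{s},0")
forward = rotate_vector(q, (0.0, 0.0, -1.0))
```

Inside Blender, the parsed value would presumably be assigned to the camera's `rotation_quaternion` after setting its `rotation_mode` to `'QUATERNION'`, with the text lines coming from a serial library such as pyserial. Those steps are left out here so the sketch runs without Blender or hardware.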

The second part is the focus issue. As described earlier, the camera settings in CG are free. The focus can even be set in “empty space”, which our eyes cannot do. So where on the screen should the focus be set? Some major IT companies are researching methods that use eye tracking inside the goggles to find where the user is currently looking on the screen and focus there, but I have doubts about this approach. People do not look at things by moving only their eyes; to capture an object accurately they always turn their face toward it. In that case, the object is always near the center of the field of view. It should therefore be enough to focus on an object near the center of the current field of view.

There are two problems: how to detect the objects in the currently displayed screen (view), and which point on a detected object to focus on. The first is solved by passing the coordinates of the object to commands in Blender's API (Application Programming Interface), which return its position in the current view. The second is a little tricky. Given a large building and a small apple in front of it, the center of the object works well for the apple: seen from the camera, the difference in distance between the apple's stem and the skin on its near side is very small. For the building, however, the distances from its center to the door, the rooftop edge, the window frames, and so on are very large, so detecting the building at its center is useless. It is possible to detect positions not only at the center of an object but also at its vertices or at the centers of its polygons. However, on a complicated curved surface the number of polygons may become enormous, and the detection may take too long.

In the first place, when our eyes focus on an object, they seem to look for some feature point: a flower blooming on a tree, or a part with a distinct character such as a cloud, a corner of a building, or a window frame. I therefore thought that the most efficient method would be to place an object dedicated to that purpose at each part of the target that should be focused on, and to detect only those. In other words, special objects for position detection are arranged at corners of buildings, at doors and windows (window frames), and at the corners and centers of posters on walls, and only these are checked. A special object called an “Empty” was used for this. An Empty has no actual shape, only information such as position, size, and angle. Three lines in the XYZ directions are shown on the screen, but they are not displayed at render time and can also be hidden, so they do not interfere with the image.

The Empties are checked in the program's loop, and those inside the target area are picked up and listed. Next, the camera's focus distance is set to the distance of the listed object closest to the camera, which brings that object into focus. These functions were written and executed as Python code. For display, EEVEE in Blender version 2.8 (beta) was used. It produces high-quality images in real time without time-consuming ray-traced rendering, which makes it useful for future video expression and experiments.
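The selection logic described above can be sketched in plain Python, independent of Blender. This is only an illustration under stated assumptions: the "target area" is modeled as a cone around the view axis, and the names `pick_focus_distance`, `half_angle_deg`, and `far_distance` are hypothetical, not taken from the author's script.

```python
import math

def to_camera_space(point, cam_pos, cam_right, cam_up, cam_forward):
    """Express a world-space point in camera axes (right, up, forward)."""
    d = tuple(p - c for p, c in zip(point, cam_pos))
    dot = lambda a, b: sum(i * j for i, j in zip(a, b))
    return (dot(d, cam_right), dot(d, cam_up), dot(d, cam_forward))

def pick_focus_distance(markers, cam_pos, cam_right, cam_up, cam_forward,
                        half_angle_deg=10.0, far_distance=1000.0):
    """Return the distance to the nearest marker near the view center.

    markers: world-space positions of the focus markers (the Empties).
    half_angle_deg: assumed size of the central target area, as a cone.
    far_distance: stand-in for "infinity" when no marker qualifies.
    """
    best = None
    for m in markers:
        x, y, z = to_camera_space(m, cam_pos, cam_right, cam_up, cam_forward)
        if z <= 0.0:
            continue  # marker is behind the camera
        off_axis = math.degrees(math.atan2(math.hypot(x, y), z))
        if off_axis > half_angle_deg:
            continue  # marker is outside the central target area
        dist = math.sqrt(x * x + y * y + z * z)
        if best is None or dist < best:
            best = dist
    return far_distance if best is None else best

# Example: camera at the origin looking along +Z.
axes = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
markers = [(0.0, 0.0, 5.0), (0.0, 0.0, 2.0), (5.0, 0.0, 1.0), (0.0, 0.0, -1.0)]
focus = pick_focus_distance(markers, (0.0, 0.0, 0.0), *axes)
```

In Blender itself, the projection step could likely be done with `bpy_extras.object_utils.world_to_camera_view`, and the result written to the camera's depth-of-field focus distance. Checking only a short list of markers on each pass keeps the per-frame cost proportional to the number of Empties rather than to the polygon count of the scene.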

The experiment gave very good results. Because no lens is moved mechanically, as it is in the autofocus of a digital camera, the focus switches instantaneously. The focus switches in the same way when the camera moves or when an Empty is linked to a moving object. If there is no object to focus on in the view, the program sets the focus to infinity, so the image pasted on the background is in focus. One disadvantage of this experiment is that the data from the gyro sensor is coarse, so the camera tracking is coarse as well. There are also many unexamined points, such as how to avoid performance degradation when a larger number of Empties must be checked.

[ Prototype 1 ]

youtube

[ Prototype 2 ]

youtube

[ Prototype 3 ]

youtube

youtube

[ Prototype 4 (ongoing) ]