Don't wanna be here? Send us removal request.

Photo

(vía Yandex updates their version of PageRank named Thematic Index of Citation (TIC))

0 notes

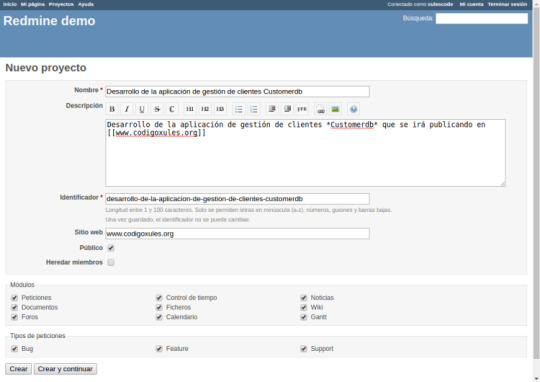

Photo

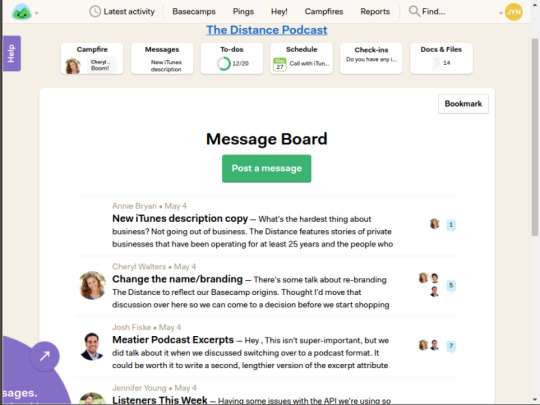

(vía Herramientas de gestión de proyectos que deberías conocer - Código Xules)

0 notes

Text

Instalación LAMP (Linux + Apache + MySQL + PHP) en Ubuntu 15.10 - Código Xules

See on Scoop.it - Web Hosting, Linux y otras Hierbas… LAMP UBUNTU 15.10 - Instalación del conjunto Linux Apache MySQL PHP - LAMP - es uno de los conjuntos de software libre que más se utilizan en servidores web See on codigoxules.org

2 notes

·

View notes

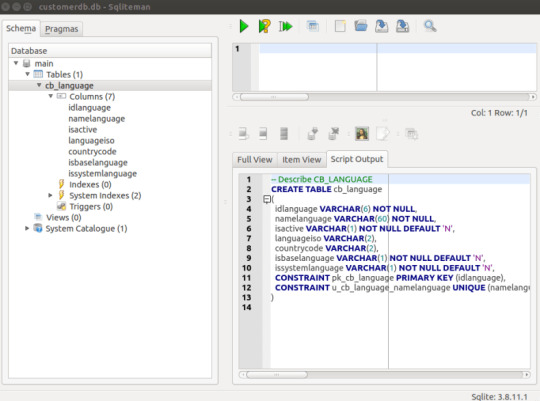

Photo

(vía Primeros pasos con SQLite con ejemplos sencillos - Guía SQLite 1 - Código Xules)

0 notes

Photo

How we got higher retention by making sure everyone understands what the app is for from the first moment: https://t.co/9K2LUq1EZN

8 notes

·

View notes

Link

Why Marketing Agencies Should Use Call Tracking Analytics

0 notes

Text

CaffeOnSpark Open Sourced for Distributed Deep Learning on Big Data Clusters

By Andy Feng(@afeng76), Jun Shi and Mridul Jain (@mridul_jain), Yahoo Big ML Team

Introduction

Deep learning (DL) is a critical capability required by Yahoo product teams (ex. Flickr, Image Search) to gain intelligence from massive amounts of online data. Many existing DL frameworks require a separated cluster for deep learning, and multiple programs have to be created for a typical machine learning pipeline (see Figure 1). The separated clusters require large datasets to be transferred among them, and introduce unwanted system complexity and latency for end-to-end learning.

Figure 1: ML Pipeline with multiple programs on separated clusters

As discussed in our earlier Tumblr post, we believe that deep learning should be conducted in the same cluster along with existing data processing pipelines to support feature engineering and traditional (non-deep) machine learning. We created CaffeOnSpark to allow deep learning training and testing to be embedded into Spark applications (see Figure 2).

Figure 2: ML Pipeline with single program on one cluster

CaffeOnSpark: API & Configuration and CLI

CaffeOnSpark is designed to be a Spark deep learning package. Spark MLlib supported a variety of non-deep learning algorithms for classification, regression, clustering, recommendation, and so on. Deep learning is a key capacity that Spark MLlib lacks currently, and CaffeOnSpark is designed to fill that gap. CaffeOnSpark API supports dataframes so that you can easily interface with a training dataset that was prepared using a Spark application, and extract the predictions from the model or features from intermediate layers for results and data analysis using MLLib or SQL.

Figure 3: CaffeOnSpark as a Spark Deep Learning package

1: def main(args: Array[String]): Unit = {

2: val ctx = new SparkContext(new SparkConf())

3: val cos = new CaffeOnSpark(ctx)

4: val conf = new Config(ctx, args).init()

5: val dl_train_source = DataSource.getSource(conf, true)

6: cos.train(dl_train_source)

7: val lr_raw_source = DataSource.getSource(conf, false)

8: val extracted_df = cos.features(lr_raw_source)

9: val lr_input_df = extracted_df.withColumn(“Label”, cos.floatarray2doubleUDF(extracted_df(conf.label)))

10: .withColumn(“Feature”, cos.floatarray2doublevectorUDF(extracted_df(conf.features(0))))

11: val lr = new LogisticRegression().setLabelCol(“Label”).setFeaturesCol(“Feature”)

12: val lr_model = lr.fit(lr_input_df)

13: lr_model.write.overwrite().save(conf.outputPath)

14: }

Figure 4: Scala application using CaffeOnSpark both MLlib

Scala program in Figure 4 illustrates how CaffeOnSpark and MLlib work together:

L1-L4 … You initialize a Spark context, and use it to create CaffeOnSpark and configuration object.

L5-L6 … You use CaffeOnSpark to conduct DNN training with a training dataset on HDFS.

L7-L8 …. The learned DL model is applied to extract features from a feature dataset on HDFS.

L9-L12 … MLlib uses the extracted features to perform non-deep learning (more specifically logistic regression for classification).

L13 … You could save the classification model onto HDFS.

As illustrated in Figure 4, CaffeOnSpark enables deep learning steps to be seamlessly embedded in Spark applications. It eliminates unwanted data movement in traditional solutions (as illustrated in Figure 1), and enables deep learning to be conducted on big-data clusters directly. Direct access to big-data and massive computation power are critical for DL to find meaningful insights in a timely manner.

CaffeOnSpark uses the configuration files for solvers and neural network as in standard Caffe uses. As illustrated in our example, the neural network will have a MemoryData layer with 2 extra parameters:

source_class specifying a data source class

source specifying dataset location.

The initial CaffeOnSpark release has several built-in data source classes (including com.yahoo.ml.caffe.LMDB for LMDB databases and com.yahoo.ml.caffe.SeqImageDataSource for Hadoop sequence files). Users could easily introduce customized data source classes to interact with the existing data formats.

CaffeOnSpark applications will be launched by standard Spark commands, such as spark-submit. Here are 2 examples of spark-submit commands. The first command uses CaffeOnSpark to train a DNN model saved onto HDFS. The second command is a custom Spark application that embedded CaffeOnSpark along with MLlib.

First command:

spark-submit \ –files caffenet_train_solver.prototxt,caffenet_train_net.prototxt \ –num-executors 2 \ –class com.yahoo.ml.caffe.CaffeOnSpark \ caffe-grid-0.1-SNAPSHOT-jar-with-dependencies.jar \ -train -persistent \ -conf caffenet_train_solver.prototxt \ -model hdfs:///sample_images.model \ -devices 2

Second command:

spark-submit \ –files caffenet_train_solver.prototxt,caffenet_train_net.prototxt \ –num-executors 2 \ –class com.yahoo.ml.caffe.examples.MyMLPipeline \

caffe-grid-0.1-SNAPSHOT-jar-with-dependencies.jar \

-features fc8 \ -label label \ -conf caffenet_train_solver.prototxt \ -model hdfs:///sample_images.model \ -output hdfs:///image_classifier_model \ -devices 2

System Architecture

Figure 5: System Architecture

Figure 5 describes the system architecture of CaffeOnSpark. We launch Caffe engines on GPU devices or CPU devices within the Spark executor, via invoking a JNI layer with fine-grain memory management. Unlike traditional Spark applications, CaffeOnSpark executors communicate to each other via MPI allreduce style interface via TCP/Ethernet or RDMA/Infiniband. This Spark+MPI architecture enables CaffeOnSpark to achieve similar performance as dedicated deep learning clusters.

Many deep learning jobs are long running, and it is important to handle potential system failures. CaffeOnSpark enables training state being snapshotted periodically, and thus we could resume from previous state after a failure of a CaffeOnSpark job.

Open Source

In the last several quarters, Yahoo has applied CaffeOnSpark on several projects, and we have received much positive feedback from our internal users. Flickr teams, for example, made significant improvements on image recognition accuracy with CaffeOnSpark by training with millions of photos from the Yahoo Webscope Flickr Creative Commons 100M dataset on Hadoop clusters.

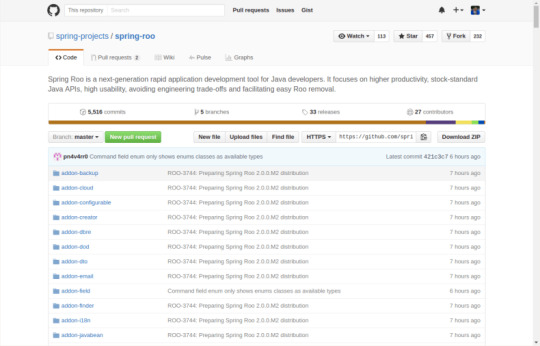

CaffeOnSpark is beneficial to deep learning community and the Spark community. In order to advance the fields of deep learning and artificial intelligence, Yahoo is happy to release CaffeOnSpark at github.com/yahoo/CaffeOnSpark under Apache 2.0 license.

CaffeOnSpark can be tested on an AWS EC2 cloud or on your own Spark clusters. Please find the detailed instructions at Yahoo github repository, and share your feedback at [email protected]. Our goal is to make CaffeOnSpark widely available to deep learning scientists and researchers, and we welcome contributions from the community to make that happen. .

37 notes

·

View notes

Link

Probablemente este conjunto Linux + Apache + MySQL + PHP, o también conocido como LAMP, sea uno de los conjuntos de software libre que más se utilizan en servidores web.

Esta publicación se incluye dentro de la preparación de próximas publicaciones sobre programación con PHP, así que, como en todos los proyectos que se realizan en Código Xules se parte desde cero, encuadro esta publicación dentro de lapreparación del entorno de programación para futuras publicaciones.

Próximamente, empezaré con una aplicación PHP con acceso a base de datos sin utilizar ningún framework PHP, para después ir desarrollando la aplicación Learning Project con algún framework PHP, el primero será Symfony con el que ya he trabajado y que acaba de sacar su nueva versión mayor Symfony 3.

0 notes

Link

Java iText PDF – Creando un pdf en Java con iText http://codigoxules.org/java-itext-pdf-creando-pdf-java-itext/ #java pdf con @iText ¿Qué nos proporciona iText ? iText es una librería PDF que nos permite crear, adaptar, revisar y mantener documentos en el formato de documento PDF. Java iText PDF – Creando un pdf en Java con iText- Añadimos las librerías y creamos la clase- Creación del documento y metadatos- Creamos nuestra primera página- Añadimos más elementos de iText a nuestro PDF- Uso de tablas en iText: PDFPTable- Resultado Final (Código completo)- Documentación utilizada

0 notes

Link

Recién salida del horno mi última publicación: Creando informes en #Javacon #JasperReports desde @TIBCO #JasperSoft Studio

Con JasperReports podemos crear informes complejos que incluyan gráficos, imágenes, subinformes, … Podemos acceder a nuestros a través de JDBC, TableModels, JavaBeans, XML, Hibernate, CSV,… La publicación y exportación de los informes puedes a ser una gran variedad de formatos, desde el clásico PDF a RTF, XML, XLS, CSV, HTML, XHTML, text, DOCX o OpenOffice.

#JasperReports #Java #JasperSoft

0 notes

Link

0 notes

Link

JSF 2 creando un Managed Bean con Netbeans en 4 pasos http://codigoxules.org/jsf-2-creando-managed-bean-netbeans-4-pasos/

0 notes

Link

#Java - Tip of the day - Imprimir JTable directamente en Java – Java Swing #java basic #javaswing print

0 notes

Link

Para empezar mis publicaciones sobre Wordpress una facilita, como usar el plugin Remove Category URL para quitar "category" en los enlaces de nuestras categorías.

#blogging #wordpress

0 notes