
Plan-The Final work

I have completed the performance work.

There were some changes. Because a handmade phone frame model breaks easily, I used a door frame as a metaphor for the phone frame. In many performances the door is an important element that signals a change of space. Using a door lets the audience notice each change of video source.

This assessment taught me a lot about modern networked performance, and I will keep practising my skills in the future.


Research-Ryan Trecartin

Ryan Trecartin is a well-known postmodern artist, best known for video works that combine dense sound, narrative, images and computer effects into an information-saturated world. Critic Peter Schjeldahl praised Trecartin as one of the most vital artists since the 1980s. Trecartin works with an expansive team of collaborators and has a large studio in Los Angeles.

As a postmodern artist, Trecartin bases most of his works on pictures, texts and videos from the internet. Heavy editing and collage erase the characteristics of the original material, and he adds original performance of his own. The result feels like déjà vu, yet its setting cannot be pinned down.

A Family Finds Entertainment is a camp extravaganza of epic proportions directed by Ryan Trecartin. The main characters are played by Trecartin’s family and friends, and the artist himself appears in multiple absurd roles. The film tells the story of Skippy and his adventures in “coming out”. With the obvious metaphor of “bad TV”, Trecartin seeks out the bizarre and the endearing. Vulgar video effects, chess-like costumes, desperate scripts and “after school special” melodrama present youth culture, reflecting a generation both damaged and affirmed by modern media.

All in all, the creativity in Trecartin’s work influences how we understand the significance of technology and media to society.

Reference:

Saatchi Gallery. Ryan Trecartin. Retrieved from: https://www.saatchigallery.com/artist/ryan_trecartin

Artsy. Ryan Trecartin. Retrieved from: https://www.artsy.net/artist/ryan-trecartin


Research-Mirror Magic

The Spring Festival Gala is broadcast every Spring Festival in China. On the eve of the festival, families sit together in front of the TV to watch it. Ask the audience which performance they look forward to most, and the answer is likely to be the magic show.

Liu Qian, a well-known magician in China, was a regular on the Spring Festival Gala. His magic performances are always dazzling. At the 2012 Gala he gave a mirror-themed performance that broke the boundary between the mirror world and the real world.

Using a set of mirrors as props, Liu Qian did several incredible things. First, he used a straw to drink the coffee in the mirror. Then he pulled a signed, inverted card out of the mirror. Most amazing of all, a waving hand reached out of the mirror holding a red cake. The audience exclaimed that they no longer dared to look in a mirror.

In fact, the act made full use of the mirror's reflection. The space under the table was not empty: half of it was a box covered with mirrors. Viewers who watched carefully could see that its color differed slightly from the floor. The mirror on the table could also move up and down. When Liu Qian closed the cloth curtain in front of the mirror, the assistant hidden in the box reached a hand out through a hole.

The trick depends on several conditions. First, the audience's viewing angle is the premise of the illusion: the audience could not see the assistant behind the table. Second, the position of the mirrors matters. They were set so that the mirrors under the table reflected the color of the floor, which fooled the eye into seeing empty space. Finally, the magician's performance drew the audience's attention away from the props.

In a magic show, the magician, the assistants and the audience form a special network. Within it, the relationship between the magician and the audience is the most unusual. On the one hand, the magician works against the audience. He must hide the techniques used in the show to prevent the audience from finding out the secrets behind the magic. On the other hand, the magician must cooperate with the audience. He must guide the audience into the trap and make them a link in the performance. Without the audience’s cooperation, the magic show cannot be completed.

Yangcheng Evening News. (2012). Reveal the secrets of Liu Qian’s mirror magic on the Spring Festival Gala. Retrieved from: http://news.sohu.com/20120123/n332914092.shtml


Research-Disco Diffusion

Recently, an online image generation program called "Disco Diffusion" has sparked heated discussion. Some people use it to paint their dreams, while others use it to reproduce the works of great painters in history.

Drawing a picture with Disco Diffusion takes three steps. First, open the website of the program. Then, set the basic parameters of the image, such as size, number of pictures to generate, storage location and so on. Finally, write the text description and run the program. After about 20 minutes, an image is complete.

AI drawing is not new, but Disco Diffusion is different: if earlier AI was a child learning to paint, Disco Diffusion has grown into a professional painter who can challenge human painters. That is why I wanted to try it. I had not tried any AI drawing tool before, and I really wanted to know what kind of amazing painting AI would present to me.

In early May, I accessed Disco Diffusion for the first time. After a few hours of configuration, I typed some random words into the prompt field and got my first AI work generated with Disco Diffusion.

But the result was not ideal. I was actually disappointed when I first saw the work, because it was fuzzy and ugly.

Although the drawing was a failure, it did not matter. For a human painter, a failed work means wasted time, energy and confidence; a computer just needs to run the program again and let the AI draw another one. I don't have to worry about the code getting tired, which is the advantage of a computer.

After repeatedly trying and modifying the input description, I finally got many satisfactory works.

Key words-Red mountains

Key words-Forest

Key words-Blue jellyfish

AI can easily imitate the style of artists, and the pictures can even be turned into video animation. I had it draw a painting in the style of Da Vinci.

Actually, there are still some disadvantages to Disco Diffusion. People who are not good at painting may find them hard to spot, but professional graphic designers and artists can find plenty of faults. First, buildings drawn by AI such as Disco Diffusion often have the wrong perspective. For example, a building may combine a top seen from above with a bottom seen from below. This is because a picture painted by AI is only a combination of materials; the AI does not really see objects in a meaningful way. Similarly, humans drawn by AI often look like monsters, with three eyes or no hands. Maybe these problems can be solved in the future.

All in all, I think AI will be widely used in networked performance, because it has huge productivity and unique views that are completely different from those of human artists.

Address of the Disco Diffusion: https://colab.research.google.com/github/alembics/disco-diffusion/blob/main/Disco_Diffusion.ipynb#scrollTo=Prompts


Plan-Network Elements

I want to discuss the network elements in my performance work.

In my work, multiple phones form a phone network through which I can shuttle from one screen to another. When each camera points at a screen, the phones link to one another and create channels for the videos to spread through the network. At the same time, I get opportunities to travel to the multiple virtual worlds created by the network. As the videos spread, they are distorted into other shapes. The “I” in the screens’ virtual worlds also becomes different as the videos change.

Looking back at the word “net”: in nature, the net is the web woven by spiders. The web helps spiders move, hunt and avoid predators. Once a web is built, it links all of its parts so that the whole becomes more functional. The network in the performance has similar functions to the natural web. I will continue to explore the application of networks in performance in the future.


Plan-Tools

This is the list of tools I need for my performance.

Software

I will use video editing software, such as Adobe Premiere Pro, for post-processing the video clips. To keep the original look of the videos, I will not edit the color or add effects. But I will adjust the duration of the videos to make sure each is eight minutes long.

Hardware

To complete this performance, I need enough electronic devices with cameras. Some of them will come from the devices I already have at home, and I plan to ask my team members and friends for help in finding the rest.

Props

So that each device can capture the screen of another, I also need suitable brackets to adjust the positions of the devices relative to one another.

In addition, I need to make a model of a phone frame. Because I have to walk through it, the model should be taller than I am. I will make it from black PVC.


Research-Type of networked performance

There are several dimensions along which networked performance can be classified.

First, considering the media of the networked performance:

Online performance,

Video art,

Telematic performance,

Virtual theatre,

Cyberformance,

Digital performance,

Digital theatre,

Multimedia performance,

Intermedial performance,

New media dramaturgy,

VR performance,

AR performance,

MR performance.

Besides, we can consider the way it is shown to the audience:

Live,

Pre-made.

Then, the purpose of the networked performance:

For artistic purpose,

For commercial purpose.

In addition, we can also distinguish networked performances by their language-based aspects:

Poetry,

Spoken word,

Storytelling,

Protest.

There are also other ways to identify the types, and I will try to find more.


Research-Sander Veenhof

Sander Veenhof is an artist and researcher, born in 1973. He would rather spend his time in a semi-digital world than in the natural one. Although he is drawn to a mixed-reality universe that we may only live in in the distant future, his art practice is based on the present. By developing AR technology today, he stages his own speculative real-life versions of that imaginary universe and lives in them. In this way he has opportunities to explore what it is like to become a semi-digital being.

Veenhof started creating cross-reality projects a decade ago in the avatar world Second Life, then focused on AR. Last year he turned his research to "Zoom reality", which turned us all into digital appearances, and Veenhof felt that he had to do something about it.

During the past decade, Veenhof has been devoted to the application of AR and VR in modern art. He has shown many AR works in exhibitions around the world, including Mirror Sports, Be your own robot, Patent Alert, Augmented reality fashion, Exploring the future of identity and Avatar Says No.

Now he is working with NFTs. He has made an NFT product called Beachingball, a gamified filter for Snap Camera. Users can use the patterns to unlock this filter in the app. When playing with the filter, people see a ball and two paddles on the screen. The paddles follow the movement of the user's own eyebrows, so users bounce the ball by moving their eyebrows. This adds a lot of fun to the digital appearance. As it is an NFT product, a buyer can be its unique owner.

Veenhof also has some predictions about the future of AR.

In the next two years, we will get a better understanding of the types of AR wearables on the market. People can choose which one to wear in which situation and for which purpose. Heavy-duty glasses equipped with 3D tracking sensors will be a good choice when we need virtual items positioned at specific spots in our physical surroundings. Lightweight AR glasses will be sufficient for most occasions because of their convenience.

After a decade, some people will be wearing their AR glasses once in a while. But they will find that the usefulness of the devices increases rapidly if they use them more often. AR wearables will learn from their users by analyzing their environment and their behavior. As a result, the recommendations will become better, more relevant and more valuable. That will be an incentive to wear the device more often, ending in a situation in which we wear our glasses most of the time.

In 25 years, the last hardware hurdles will be solved. People who objected to wearing AR glasses will eventually be equipped with built-in displays in their retinas. Living in an augmented reality is going to be the default for those who can afford it. The type of software and the clouds you are in will define your quality of life. There will be a micropayment mechanism for the moments when AR intervenes in your life. And hopefully there will also be a public domain zone, where both creators and users can use AR with all of its powerful features without the constraints imposed by the commercial Big Tech companies. Their role as patent-fighting entities and gatekeepers of our augmented world is one to be wary of.

All in all, Veenhof is optimistic about the development of AR in future society, and he looks forward to a semi-digital world.

Reference:

Veenhof S. SNDRV. Retrieved from: https://sndrv.nl/index.php?index=contact

Veenhof S. (2019). Interview: what to expect from AR in the near future?. Retrieved from: https://sndrv.nl/blog/interview-what-to-expect-from-ar-in-the-near-future/

Veenhof S. NFT. Retrieved from: https://sndrv.nl/nft/index.php?page=unlock


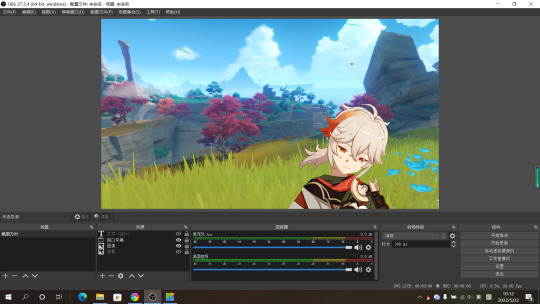

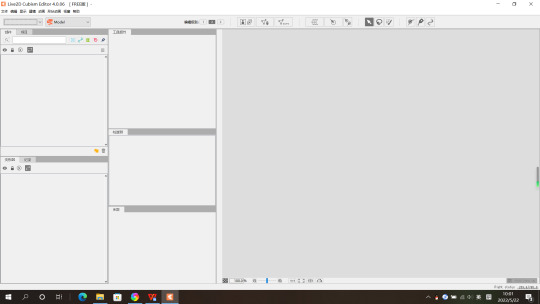

Research-OBS Studio and Live 2D

OBS Studio is live-streaming and video-recording software that lets users capture and record video, text, images and other sources. Today many VUPs and YouTubers use OBS Studio to stream and record videos, which helps their careers a lot.

In my opinion, OBS Studio has many advantages over similar software.

First, OBS Studio provides high-performance real-time video/audio capture and mixing. Users can create scenes made up of multiple sources, including window captures, images, text, browser windows, webcams and so on.

Second, it can set up an unlimited number of scenes, and users can easily switch from one scene to another.

Third, it has a visual audio mixer with filters such as noise gate, noise suppression and gain, which help users keep disturbing noise out.

Next, it has powerful and easy-to-use configuration options. Users can add a new source, copy an existing one, and easily adjust their properties.

In addition, its simplified settings panel gives users access to various configuration options for adjusting every aspect of a broadcast or recording.

Finally, OBS Studio is equipped with a powerful API that enables plug-ins and scripts to provide further customization for users' specific needs. Users can interact with existing sources using scripts written in Lua or Python.

In the CAT206 classes, we have learned the basic use of OBS. But when streaming live, we often combine a wide range of tools to get a better effect.

To test further uses of OBS Studio, I bought a piece of software called Live2DViewerEX on Steam. It provides many Live2D models that move with changes in the user's expression. These models can be placed on the PC desktop, and they can also be used with the virtual camera in OBS Studio.

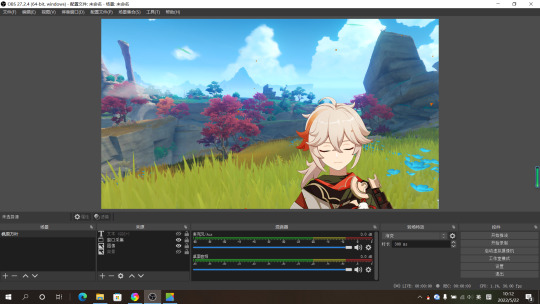

For example, this is a free Live2D model of a character from the game Genshin Impact. I open the model in a new window and then set up a new scene in OBS Studio. After I choose window capture in OBS Studio, the character appears in the virtual camera. With this virtual camera, I can play the game character during a live stream. It really helps in role-playing performances.

Of course, the models provided by Live2DViewerEX may not meet every user's needs. There is also software called Live2D Cubism, which helps people make Live2D models. With the Live2D Cubism Editor, I can even make a Live2D model of my own character and use it as an avatar in the virtual world.


Planning-Improved

After last week's test, I spent two days reflecting on my performance plan and improving it.

First, the name of the project has changed. It was ‘A Screen in a screen’, and now it is called ‘From a screen to a screen’, which sounds more like a motion. To match the new name, the content and the shooting method of the performance have also changed.

In addition, my performance now has clearer content. In previous plans, inspired by some online performances, I decided to present only the changes in the videos caused by multiple cameras; the specific content of the performance was not the most important thing. As for content, I once wanted to introduce my project to the audience during the performance, so that they could understand the focus of my work. But a performance like this may be too boring, and the audience may not want to watch the whole introduction. After thinking it over critically, I decided to add some plot to make the whole performance more interesting while still telling the audience what the performance is doing.

After the modification, the performance goes like this: I will set up a huge mobile phone frame model on the ground. During the performance, I will walk through the frame again and again, going back each time. This is a metaphor, easy for viewers to see, for moving from one screen to a new one. Every time I enter the phone frame model, I arrive at a new screen.

Finally, the shooting method has also changed. Because of the light and the space, I decided not to shoot with multiple devices simultaneously. Instead, I will use only one mobile phone to shoot the performance. Afterwards, I will re-shoot that video with the other devices in a softly lit room. In the end I will have multiple videos, each distorted by the screens to a different degree. This avoids the dim footage I got when shooting outside while keeping the distortion that the screens add to the videos.

I will then process these videos with the editing software, combining pieces from the different videos according to my actions in the performance. First, I appear in the first video. Then I try to cross the phone frame model. At the moment I cross it, the picture is replaced with the matching piece of the second video, which shows that I have arrived at the second screen. And so on: whenever I cross the phone frame model, the source is replaced with the next video. In short, the change of video source is synchronized with my action in the performance, so the audience sees the video change directly as I keep crossing the frame. This strengthens the expressive force, because my action becomes the sign of the change. A single, gradually changing video also draws more attention to the effect of re-shooting than several separate videos would.
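The cutting rule described above (every crossing of the frame switches the picture to the next re-shot video) can be sketched as a small edit-list builder. This is only an illustration in Python, not the editing workflow used in Premiere Pro; the function name `build_edit_list`, the crossing timestamps and the 480-second duration are assumptions made up for the example. Because every re-shot copy has the same length as the original, the same timestamps apply to each source.

```python
def build_edit_list(crossing_times, total_duration):
    """Return (source_index, start, end) segments for the final cut.

    source_index 0 is the original video, 1 is the first re-shot copy,
    and so on. Each crossing of the frame moves on to the next source.
    """
    segments = []
    start = 0.0
    for source_index, crossing in enumerate(sorted(crossing_times)):
        if not 0.0 < crossing < total_duration:
            raise ValueError("crossing time lies outside the video")
        segments.append((source_index, start, crossing))
        start = crossing
    # The last source plays from the final crossing to the end.
    segments.append((len(crossing_times), start, total_duration))
    return segments


# Hypothetical timestamps (in seconds) at which the performer crosses the frame.
edit_list = build_edit_list([60.0, 150.0, 300.0], total_duration=480.0)
for source_index, start, end in edit_list:
    print(f"video {source_index + 1}: {start:6.1f}s to {end:6.1f}s")
```

Three crossings give four segments, one from each of four videos, and the segments cover the whole eight minutes without gaps.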

Example 1-The first video, try to cross it

Example 2-The second video, re-shot, have crossed it

Of course, the new plan needs more editing work, and I must make some props. But I think it is worth it.


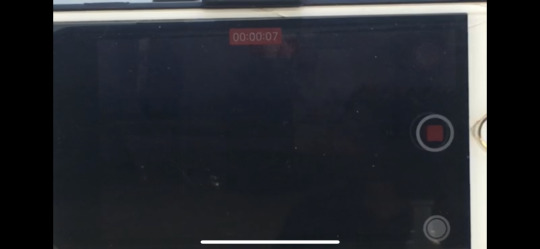

Planning-Test

Last week, I ran a first test for my networked performance. I shot it in a park near my home. I carried six devices and six mobile phone stands and set them up in a row. The test was to see what happens when an image is re-shot multiple times. Because this was only a test, I did not appear in the video; it simply shows the surrounding environment.

I used these devices to record a video of about three minutes. The result was not ideal, and the reason was the outdoor light. The first video looks fine because it was taken directly from the real world. The problems began with the second device. Since I was shooting outside, the phone's screen was very dark compared with the sunshine, so when the second device filmed the screen of the first phone, the image became very dim. By the third phone it was almost unrecognizable. This made the later images meaningless, as they were nothing but darkness.

Screenshot 1

Screenshot 2

Screenshot 3-Just black
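The dimming seen in these screenshots can be pictured with a very simple model: each time one phone films another phone's screen outdoors, only a fraction of the previous brightness survives. The Python sketch below assumes one constant fraction per re-shoot (`gain`, a made-up value, not a measurement from the test) just to show why the picture is nearly black by the third device.

```python
def brightness_after_reshoots(original, gain, generations):
    """List the brightness of each generation, starting with the original.

    `gain` is the assumed fraction of brightness that survives one re-shoot.
    """
    levels = [original]
    for _ in range(generations):
        levels.append(levels[-1] * gain)
    return levels


# Assumed values: brightness 1.0 for the direct shot, 30% kept per re-shoot.
levels = brightness_after_reshoots(original=1.0, gain=0.3, generations=5)
for device, level in enumerate(levels, start=1):
    print(f"device {device}: {level:.4f}")
```

With these made-up numbers the third device keeps less than a tenth of the original brightness. A higher gain, as in a softly lit room where the screen is not outshone, slows the loss.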

The solution I came up with is to shoot indoors. The light inside is not as strong as outside, so the screens do not look too dark. But shooting indoors raises a new problem: space. Due to the current epidemic, I cannot find a suitable studio, so I have to shoot at home. It is difficult to fit so much equipment into a small home, and adjusting the distance between the devices will be more troublesome.

The results of this test showed me that I may have to change the way the performance is filmed.


Planning-Origin

In this network performance project, I plan to use nine electronic devices with cameras to record a series of videos. One of them shoots the natural environment, and the other eight record my shooting process. Before recording, I will position the devices so that each camera aims at a screen, so the screen of each device appears on the screen of the device behind it. Then I will use them to shoot a video lasting about eight minutes. The video recorded by the phone in my hand will not be used. After post-processing, I will post the eight videos on a video website as a series.

Generally, people believe that what they see is the real world, because everything we see seems so real. If we did not believe in this premise, we would meet plenty of difficulties in daily life.

In modern society, electronic devices such as mobile phones have become very popular. One of the most useful functions of a mobile phone is taking pictures. Because phones are small and portable, people can take pictures anytime and anywhere and post them on social media to share their lives. Most people like to take pictures because they believe human memory is unreliable, while pictures can accurately record life and help them avoid forgetting significant memories.

People who own multiple electronic devices easily notice that different devices produce different images. Because of differences in color rendering, some devices produce brighter colors while others produce darker ones. With old, poor-quality cameras we can even see the pixels in the pictures, so that we sometimes cannot identify the objects. But it is difficult to say whether the brighter or the darker picture is closer to the real world.

This makes me wonder whether photos really record the real world. If the camera records the real world, how much of it does it reflect? How similar is the photo to the real world? Baudrillard (1981) argued in his book that mass media had made simulation replace reality. Pictures are only vestiges of real objects, and they have lost their prototypes. From another perspective, every time we shoot the real world with a camera, we create a new virtual world in the photo. Therefore, different photos reflect different virtual worlds.

When I take a picture of myself with a mobile phone, an image of me appears on its screen. When I then shoot with a second mobile phone, both I and the screen of the first phone appear on the screen of the second. Is the image of me from the real world or from the first phone's screen? Is there any difference between them? As this action repeats, I will finally have an image that has passed through multiple screens. Perhaps it will be very different from the original reality.

My idea is partly inspired by Cloninger's (2018) work Shapes of You. In this work, the author edited versions of the song Shape of You and published them on the internet, where they were flagged by AI copyright detection. In my performance, I do not intend to edit the videos artificially, but to let the imperfections of the cameras make the videos differ from one another.

When I put these videos together as a series, the audience can clearly observe the differences between them. The first video is the closest to the original scene, while the second shows the influence of both the first and the second device. It is as if every device adds a filter to the video. In the end, the influences add up and change the video beyond all recognition.

Since the exhibition’s theme is the virtual world, I hope that by watching and comparing this series of videos, people will think about the relationship between the virtual world of pictures and the real world.

Reference:

Baudrillard, J. (1981) Simulacra and Simulation. Michigan: University of Michigan Press

Cloninger, C. (2018) Shapes of You. Available at: http://lab404.com/shapes/ (Accessed: 29 March 2022).


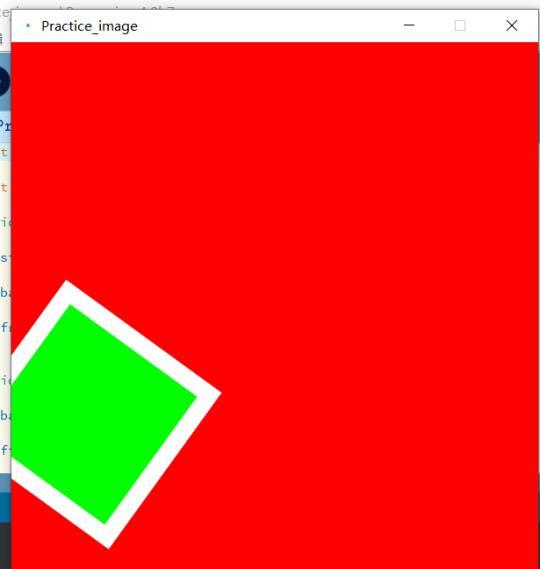

The First day of learning Processing

In today’s lecture we learned how to code in Processing. I had not known this application before, but I found that coding in Processing is not as difficult as I thought.

To get familiar with Processing, I searched for some video tutorials on the internet, and I found that Processing can also be used in art installations, interaction design and visual communication. Since I am studying interaction design, I think Processing will play an important role in my future work.

Below is my practice after the lecture. It is simple, and I will improve it in the future.

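// Horizontal offset of the square and its rotation in degrees.
// The angle is named "angle" so it is not confused with the rotate() function.
int xposition = 0;
int angle = 0;

void setup() {
  size(600, 600);
  background(255, 0, 0);
  frameRate(20);
}

void draw() {
  background(255, 0, 0);  // repaint the red background so no trail is left
  fill(0, 255, 0);
  stroke(255, 255, 255);
  strokeWeight(20);
  translate(width / 2, height / 2);  // rotate around the centre of the canvas
  rotate(radians(angle));
  rect(xposition, 0, 200, 200);

  // Advance the rotation and start again after a full turn.
  if (angle > 360) { angle = 0; } else { angle = angle + 2; }
  // Slide the square outward and restart once it has passed 600.
  if (xposition > 600) { xposition = 0; } else { xposition = xposition + 2; }
}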


Research-Shapes of You

I’ve seen Curt Cloninger’s networked performance “Shapes of You”. To be honest, I visited the website because I was very familiar with the song. Ed Sheeran's song is so famous that I often hear it in public places. One of my junior high school classmates liked English songs, especially this one. I was sure I would recognize the shape of “Shape of You” no matter how its shape changed.

Clearly, the central concern of this performance is identity and change. This is clear from the author’s questions: At what point does a song become other than itself? When you drum along with a song that is becoming other than itself, what do you become?

Actually, this is not a new question. Identity has been discussed by countless philosophers throughout history, and the most classic paradox about it is the Ship of Theseus. If all parts of a ship have been replaced, is it still the same ship? Maybe it is and maybe it isn’t.

People have argued about this question for thousands of years, but no one had ever asked a copyright detection algorithm for advice. The author wants to test whether AI can answer it by challenging the intelligence of an artificial detective designed by brilliant computer scientists. Will Facebook’s copyright detection algorithm answer this question better than a human?

At first, the author was warned by the AI. As the author kept processing Shape of You, the AI finally surrendered. It was as if the AI were saying: yes, this song isn’t Shape of You anymore. Whether or not the AI’s answer is worth believing, Curt Cloninger’s performance is an excellent artistic exercise on a subject of traditional philosophy.

Cloninger, C. (2018). Shapes of You. Retrieved from: http://lab404.com/shapes/


Research-Virtual Singer

In 2007, a Japanese company released a voice library called Hatsune Miku, built on Yamaha's Vocaloid series of voice synthesis programs. She has since become the most famous virtual singer in the world. Some fans even celebrate Hatsune Miku’s birthday in real life.

After introducing the most famous virtual idol, I would like to briefly review the development of Chinese virtual idols. China's first virtual idol was an imitation of Hatsune Miku named Oriental Gardenia. She has twin ponytails similar to Hatsune Miku's, but her hair is orange. People regarded Oriental Gardenia as a failed idol, and she was soon forgotten.

She was followed by Vocaloid China, a virtual singer group launched by Shanghai Henian. The members of this virtual group include Luo Tianyi, Yuezheng Ling, Yuezheng Longya, Zhiyu Moke, Mo Qingxuan, and later Yan He. Vocaloid China is gaining popularity in China, and Luo Tianyi is the most famous virtual singer in the country.

Although they are virtual singers, they also take part in offline events. At CCTV’s 2022 Lantern Festival gala on Feb 15, virtual singer Luo Tianyi and human singer Liu Yuning sang Time to Shine, an excellent song about the Winter Olympics originally sung by Luo. The video of the show has been viewed more than 100 million times online.

How does a virtual singer perform with a real person? With modern entertainment technology, there are two main ways to achieve this. One is holographic projection: the virtual image is projected directly onto the stage, so the audience in the venue can see the virtual idol. The other uses augmented reality to superimpose the virtual character on the live video, so the audience in the venue cannot see the character directly.

Compared with a traditional singer’s activities, fans take a larger part in every link of the performance, a possibility brought by modern network performance technology. Whether it is Luo Tianyi or Hatsune Miku, these are virtual singers created by the public. The virtual singers themselves are just audio libraries, brought to life by the countless online producers behind them. Some of these fans use their musical talent to write songs in a strong personal style and then sing them through the mouths of the virtual singers; others use 3D modeling software to create exquisite models of the virtual idols and let them dance wonderfully on screen.

Fans of virtual singers are not only fans but also creators. They regard the virtual singer as a channel for showing themselves. In other words, the fans of virtual singers create their ideal selves.

Manxiaomi. (2022). Luo Tianyi on 2022 Lantern Festival gala. Retrieved from: https://www.sohu.com/a/523229520_121034403


The first impression of the network performance

In today's world, network performance has become popular among young people. This is thanks to the internet's effective way of transmitting information.

At the start of this semester’s class, the first concept that came to my mind in connection with “Network Performance” was “Virtual singer”.

I first came across virtual singers when I was in junior high school. Compared with that period, virtual singers are no longer a niche thing.

I will discuss virtual singers in my next post when I have spare time.


The introduction of this blog

This blog was created to post class notes and study materials related to CAT206TC Technology and Performance.
