
Unlock the full potential of AI by harnessing the power of sound. High-quality audio datasets are the foundation for groundbreaking innovations in speech recognition, voice assistants, and more. By using diverse and meticulously curated audio data, your AI models can achieve superior accuracy and responsiveness. At GTS.ai, we specialize in providing the audio datasets that drive AI advancements, enabling your systems to interact more naturally and effectively with users. Elevate your AI projects with the sound data they need to innovate and succeed.


Unlock the potential of Japanese OCR technology with our meticulously crafted dataset, designed to enhance text recognition accuracy. This dataset features high-quality images paired with precise transcriptions, tackling the unique challenges of the Japanese language. By improving OCR performance, this data collection effort ensures reliable results in AI-powered text recognition systems. Whether for research, application development, or AI model training, this dataset is a valuable asset for advancing OCR technology in complex scripts. Explore the future of text recognition with a focus on quality and precision in data.


Empower your machine learning projects with the perfect dataset from GTS.ai. Our meticulously curated datasets are designed to optimize model performance, minimize bias, and support robust AI development. Whether you're training or testing, GTS.ai provides the high-quality data you need to drive innovation and achieve reliable, scalable results.


Harness the power of AI and machine learning to unlock the full potential of video transcription. Our advanced video transcription services deliver unparalleled accuracy and efficiency, transforming spoken words into text with precision. This innovative solution enhances the performance of your machine learning models, providing high-quality data for better insights and decision-making. Ideal for industries such as media, education, healthcare, and legal, our AI-driven transcription services streamline data processing, saving time and reducing costs. Experience the future of video transcription and elevate your data-driven projects with our cutting-edge technology.


OCR Datasets


The Role of AI in Video Transcription Services

Introduction

In today's fast-changing digital world, demand for fast, accurate video transcription has grown rapidly. As businesses, schools, and creators publish more video content, they need transcripts that are both quick to produce and reliable. Artificial Intelligence (AI) is reshaping the video transcription industry to meet that need. This post explains the role AI plays in video transcription services, how it is transforming the industry, and the benefits it brings to users in different fields.

AI in Video Transcription: A Paradigm Shift

The use of AI in video transcription services is a major step forward in making content easier to access and manage. By using machine learning and natural language processing, AI transcription tools are becoming faster, more accurate, and more efficient. Here is why AI matters for video transcription today:

Unparalleled Speed and Efficiency

AI-powered Automation: AI transcription services can process hours of video content in a fraction of the time required by human transcribers, dramatically reducing turnaround times.

Real-time Transcription: Many AI systems offer real-time transcription capabilities, allowing for immediate access to text versions of spoken content during live events or streaming sessions.

Scalability: AI solutions can handle large volumes of video content simultaneously, making them ideal for businesses and organizations dealing with extensive media libraries.

Enhanced Accuracy and Precision

Advanced Speech Recognition: AI algorithms are trained on vast datasets, enabling them to recognize and transcribe diverse accents, dialects, and speaking styles with high accuracy.

Continuous Learning: The machine learning models behind AI transcription services can be retrained as more data becomes available, so their accuracy tends to improve over time.

Context Understanding: Sophisticated AI systems can grasp context and nuances in speech, leading to more accurate transcriptions of complex or technical content.

Multilingual Capabilities

Language Diversity: AI-driven transcription services can handle multiple languages, often offering translation capabilities alongside transcription.

Accent and Dialect Recognition: Advanced AI models can accurately transcribe various accents and regional dialects within the same language, ensuring comprehensive coverage.

Code-switching Detection: Some AI systems can detect and accurately transcribe instances of code-switching (switching between languages within a conversation), a feature particularly useful in multilingual environments.

Cost-effectiveness

Reduced Labor Costs: By automating the transcription process, AI significantly reduces the need for human transcribers, leading to substantial cost savings for businesses.

Scalable Pricing Models: Many AI transcription services offer flexible pricing based on usage, allowing businesses to scale their transcription needs without incurring prohibitive costs.

Reduced Time-to-Market: The speed of AI transcription can accelerate content production cycles, potentially leading to faster revenue generation for content creators.

Enhanced Searchability and Content Management

Keyword Extraction: AI transcription services often include features for automatic keyword extraction, making it easier to categorize and search through large video libraries.

Timestamping: AI can generate accurate timestamps for transcribed content, allowing users to quickly navigate to specific points in a video based on the transcription.

Metadata Generation: Some advanced AI systems can automatically generate metadata tags based on the transcribed content, further enhancing searchability and content organization.
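To make the timestamping and search ideas concrete, here is a minimal sketch of keyword lookup over a timestamped transcript. The `Segment` structure, the sample sentences, and the function names are assumptions made for illustration; real transcription services return their own formats.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One transcript segment (hypothetical structure, not a real service's format)."""
    start: float  # seconds from the beginning of the video
    end: float
    text: str


def find_keyword(segments, keyword):
    """Return (start, text) for every segment whose text contains the keyword."""
    needle = keyword.lower()
    return [(s.start, s.text) for s in segments if needle in s.text.lower()]


def format_timestamp(seconds):
    """Render a number of seconds as HH:MM:SS for display next to a search hit."""
    hours, rem = divmod(int(seconds), 3600)
    minutes, secs = divmod(rem, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


# Illustrative data only.
segments = [
    Segment(0.0, 4.2, "Welcome to the quarterly update."),
    Segment(4.2, 9.8, "Revenue grew in every region."),
    Segment(9.8, 15.0, "Next, the revenue forecast for next year."),
]

for start, text in find_keyword(segments, "revenue"):
    print(format_timestamp(start), text)
```

A viewer could use the returned start times to jump straight to the matching point in the video.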

Accessibility and Compliance

ADA Compliance: AI-generated transcripts and captions help content creators comply with accessibility guidelines, making their content available to a wider audience, including those with hearing impairments.

SEO Benefits: Transcripts generated by AI can significantly boost the SEO performance of video content, making it more discoverable on search engines.

Educational Applications: In educational settings, AI transcription can provide students with text versions of lectures and video materials, enhancing learning experiences for diverse learner types.

Integration with Existing Workflows

API Compatibility: Many AI transcription services offer robust APIs, allowing for seamless integration with existing content management systems and workflows.

Cloud-based Solutions: AI transcription services often leverage cloud computing, enabling easy access and collaboration across teams and locations.

Customization Options: Advanced AI systems may offer industry-specific customization options, such as specialized vocabularies for medical, legal, or technical fields.

Quality Assurance and Human-in-the-Loop Processes

Error Detection: Some AI transcription services incorporate error detection algorithms that can flag potential inaccuracies for human review.

Hybrid Approaches: Many services combine AI transcription with human proofreading to achieve the highest levels of accuracy, especially for critical or sensitive content.

User Feedback Integration: Advanced systems may allow users to provide feedback on transcriptions, which is then used to further train and improve the AI models.
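As a rough illustration of error detection feeding a human-in-the-loop review, the sketch below flags words whose confidence score falls under a threshold. It assumes the transcription service returns a per-word confidence between 0 and 1; the scores, the 0.80 threshold, and the function names are hypothetical.

```python
def flag_for_review(words, threshold=0.80):
    """Return the indices of words whose confidence is below the threshold.

    `words` is a list of (word, confidence) pairs. The confidence values
    are an assumption about what the transcription service provides.
    """
    return [i for i, (_, conf) in enumerate(words) if conf < threshold]


def render_with_flags(words, flagged):
    """Mark flagged words so a human proofreader can spot them quickly."""
    flagged = set(flagged)
    return " ".join(
        f"[{word}?]" if i in flagged else word
        for i, (word, _) in enumerate(words)
    )


# Illustrative scores only.
words = [
    ("the", 0.99),
    ("patient", 0.97),
    ("has", 0.98),
    ("hypokalemia", 0.52),
    ("today", 0.95),
]

flagged = flag_for_review(words)
print(render_with_flags(words, flagged))
```

Only the flagged words need a human check, which is what makes a hybrid workflow cheaper than full manual proofreading.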

Future of AI in Video Transcription: Navigating Opportunities

Looking ahead, the role of AI in video transcription services is poised for further expansion and refinement. As natural language processing technologies continue to advance, we can anticipate:

Enhanced Emotion and Sentiment Analysis: Future AI systems may be able to detect and annotate emotional tones and sentiments in speech, adding another layer of context to transcriptions.

Improved Handling of Background Noise: Advancements in audio processing may lead to AI systems that can more effectively filter out background noise and focus on primary speakers.

Real-time Language Translation: The integration of real-time translation capabilities with transcription services could break down language barriers in live international events and conferences.

Personalized AI Models: Organizations may have the opportunity to train AI models on their specific content, creating highly specialized and accurate transcription systems tailored to their needs.

Conclusion: Embracing the AI Advantage in Video Transcription

Integrating AI into video transcription services represents a major step forward in improving content accessibility, management, and utilization. AI-driven solutions provide unparalleled speed, accuracy, and cost-effectiveness, making them indispensable in today's video-centric environment. Businesses, educational institutions, and content creators can leverage AI for video transcription to boost efficiency, expand their audience reach, and derive greater value from their video content. As AI technology advances, the future of video transcription promises even more innovative capabilities, reinforcing its role as a critical component of contemporary digital content strategies.


The Basics of Speech Transcription

Speech transcription is the process of converting spoken language into written text. This practice has been around for centuries, initially performed manually by scribes and secretaries, and has evolved significantly with the advent of technology. Today, speech transcription is used in various fields such as legal, medical, academic, and business environments, serving as a crucial tool for documentation and communication.

At its core, speech transcription can be categorized into three main types: verbatim, edited, and intelligent transcription. Verbatim transcription captures every spoken word, including filler words, false starts, and non-verbal sounds like sighs and laughter. This type is essential in legal settings where an accurate and complete record is necessary. Edited transcription, on the other hand, omits unnecessary fillers and non-verbal sounds, focusing on producing a readable and concise document without altering the speaker's meaning. Intelligent transcription goes a step further by paraphrasing and rephrasing content for clarity and coherence, often used in business and academic settings where readability is paramount.
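The difference between verbatim and edited transcription can be sketched in a few lines. This example assumes non-verbal sounds are written in square brackets and uses a short, hypothetical filler list; a real editing pass would need a larger list and human judgment.

```python
import re

# Hypothetical filler list for illustration. Note that "you know" can also be
# a genuine phrase ("Do you know him?"), so real editing needs more care.
FILLERS = ("um", "uh", "er", "you know")

# A filler as a whole word or phrase, together with the commas around it.
_FILLER_RE = re.compile(
    r",?\s*\b(?:" + "|".join(re.escape(f) for f in FILLERS) + r")\b,?",
    flags=re.IGNORECASE,
)
# Non-verbal sounds, assumed to be written as "[laughs]" or "[sighs]".
_NONVERBAL_RE = re.compile(r"\[[^\]]*\]\s*")


def to_edited(verbatim):
    """Turn a verbatim transcript line into an edited one by dropping
    bracketed non-verbal sounds and filler words."""
    text = _NONVERBAL_RE.sub("", verbatim)
    text = _FILLER_RE.sub("", text)
    return re.sub(r"\s{2,}", " ", text).strip()


verbatim = "Um, I think, uh, we should [laughs] start the, you know, meeting now."
print(to_edited(verbatim))
```

Intelligent transcription goes beyond this kind of pattern matching, since paraphrasing for clarity requires understanding the speaker's meaning.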

The Process of Transcription

The transcription process typically begins with recording audio. This can be done using various devices such as smartphones, dedicated voice recorders, or software that captures digital audio files. The quality of the recording is crucial as it directly impacts the accuracy of the transcription. Clear audio with minimal background noise ensures better transcription quality, whether performed manually or using automated tools.

Manual vs. Automated Transcription

Manual transcription involves a human transcriber listening to the audio and typing out the spoken words. This method is highly accurate, especially for complex or sensitive content, as human transcribers can understand context, accents, and nuances better than machines. However, manual transcription is time-consuming and can be expensive.

Automated transcription, powered by artificial intelligence (AI) and machine learning (ML), has gained popularity due to its speed and cost-effectiveness. AI-driven transcription software can quickly convert speech to text, making it ideal for situations where time is of the essence. While automated transcription has improved significantly, it may still struggle with accents, dialects, and technical jargon, leading to lower accuracy compared to human transcription.
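One common way to compare automated output against a human reference is word error rate (WER): the number of word substitutions, insertions, and deletions divided by the number of words in the reference. The post does not name a metric, so treat this as one reasonable choice; the sample sentences are made up.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance between two transcripts, divided by the
    number of words in the reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing
                curr[j - 1] + 1,     # insertion: extra hypothesis word
                prev[j - 1] + cost,  # substitution or exact match
            )
        prev = curr
    return prev[-1] / len(ref)


# Illustrative transcripts only.
reference = "the patient was given ten milligrams"
hypothesis = "the patient was given tin milligrams today"
print(round(word_error_rate(reference, hypothesis), 3))
```

Here one substitution ("tin" for "ten") and one insertion ("today") against six reference words give a WER of 2/6.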

Tools and Technologies

Several tools and technologies are available to aid in speech transcription. Software like Otter.ai, Rev, and Dragon NaturallySpeaking offer various features, from real-time transcription to integration with other productivity tools. These tools often include options for both manual and automated transcription, providing flexibility based on the user’s needs.

In conclusion, speech transcription is a versatile and essential process that facilitates accurate and efficient communication across various domains. Whether done manually or through automated tools, understanding the basics of transcription can help you choose the right approach for your needs.


AI-powered speech transcription is revolutionizing industries by providing accurate, real-time voice-to-text conversion. This technology enhances accessibility, customer service, and data analysis across sectors like healthcare, legal, and education. By leveraging advancements in natural language processing and machine learning, AI speech transcription systems can handle diverse accents, languages, and speech patterns with increasing precision. As the technology continues to evolve, future trends include improved real-time capabilities, enhanced security measures, and broader applications in smart devices and virtual assistants, paving the way for a more interconnected and efficient digital world.


Unlock the potential of your AI projects with high-quality data. Our comprehensive data solutions provide the accuracy, relevance, and depth needed to propel your AI forward. Access meticulously curated datasets, real-time data streams, and advanced analytics to enhance machine learning models and drive innovation. Whether you’re developing cutting-edge applications or refining existing systems, our data empowers you to achieve superior results. Stay ahead of the competition with the insights and precision that only top-tier data can offer. Transform your AI initiatives and unlock new possibilities with our premium data annotation services. Propel your AI to new heights today.


Unlock the potential of your NLP and speech recognition models with our high-quality text and audio annotation services. GTS offers precise transcription, sentiment analysis, entity recognition, and more. Our expert annotators ensure that your data is accurately labeled, helping your AI understand and process human language better. Enhance your chatbots, virtual assistants, and other language-based applications with our reliable and comprehensive annotation solutions.


Challenges and Best Practices in Data Annotation

Data annotation is a crucial step in training machine learning models, but it comes with its own set of challenges. Addressing these challenges effectively through best practices can significantly enhance the quality of the resulting AI models.

Challenges in Data Annotation

Consistency and Accuracy: One of the major challenges is ensuring consistency and accuracy in annotations. Different annotators might interpret data differently, leading to inconsistencies. This can degrade the performance of the machine learning model.

Scalability: Annotating large datasets manually is time-consuming and labor-intensive. As datasets grow, maintaining quality while scaling up the annotation process becomes increasingly difficult.

Subjectivity: Certain data, such as sentiment in text or complex object recognition in images, can be highly subjective. Annotators’ personal biases and interpretations can affect the consistency of the annotations.

Domain Expertise: Some datasets require specific domain knowledge for accurate annotation. For instance, medical images need to be annotated by healthcare professionals to ensure correctness.

Bias: Bias in data annotation can stem from the annotators' cultural, demographic, or personal biases. This can result in biased AI models that do not generalize well across different populations.

Best Practices in Data Annotation

Clear Guidelines and Training: Providing annotators with clear, detailed guidelines and comprehensive training is essential. This ensures that all annotators understand the criteria uniformly and reduces inconsistencies.

Quality Control Mechanisms: Implementing quality control mechanisms, such as inter-annotator agreement metrics, regular spot-checks, and using a gold standard dataset, can help maintain high annotation quality. Continuous feedback loops are also critical for improving annotator performance over time.

Leverage Automation: Utilizing automated tools can enhance efficiency. Semi-automated approaches, where AI handles simpler tasks and humans review the results, can significantly speed up the process while maintaining quality.

Utilize Expert Annotators: For specialized datasets, employ domain experts who have the necessary knowledge and experience. This is particularly important for fields like healthcare or legal documentation where accuracy is critical.

Bias Mitigation: To mitigate bias, diversify the pool of annotators and implement bias detection mechanisms. Regular reviews and adjustments based on detected biases are necessary to ensure fair and unbiased data.

Iterative Annotation: Use an iterative process where initial annotations are reviewed and refined. Continuous cycles of annotation and feedback help in achieving more accurate and reliable data.
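The inter-annotator agreement metrics mentioned under quality control can be illustrated with Cohen's kappa, which corrects the raw agreement between two annotators for the agreement expected by chance. The post does not say which metric to use, so this is one common option; the labels below are invented.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty item list")

    n = len(labels_a)
    # Fraction of items on which the two annotators agree.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Illustrative sentiment labels from two annotators.
annotator_a = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg"]
annotator_b = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "pos"]
print(cohens_kappa(annotator_a, annotator_b))
```

The annotators agree on 6 of 8 items (0.75), chance agreement is 0.5, so kappa is 0.5. A low value is a signal to revisit the guidelines or retrain annotators.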

For organizations seeking professional assistance, companies like Data Annotation Services provide tailored solutions. They employ advanced tools and experienced annotators to ensure precise and reliable data annotation, driving the success of AI projects.


Visualize the essential steps involved in AI data collection to streamline your data-driven projects. Follow these four key steps to ensure you gather, prepare, and validate the highest-quality data for your AI models.


Unlock the full potential of your AI and machine learning models with GTS's expert data annotation services. Our team specializes in precise and accurate labeling, ensuring your data is meticulously prepared for high-performance algorithms. From image and video annotation to text and audio tagging, we offer a comprehensive range of services tailored to meet your specific needs. Trust us to deliver the quality and reliability that your AI projects demand.


Building a dataset for machine learning involves several key steps:

Define Objectives:

Clearly outline the purpose and goals of your dataset. Determine what problem you are trying to solve and what type of data is needed.

Data Collection:

Gather data from various sources such as web scraping, APIs, sensors, surveys, or existing databases. Ensure the data is relevant and sufficient for your objectives.

Data Annotation:

Label the data accurately. This might involve tagging images, transcribing audio, or categorizing text. Annotation tools and services can assist in this process.

Data Cleaning:

Clean the data to remove any errors, duplicates, or irrelevant information. This step is crucial to improve the quality and reliability of the dataset.

Data Preprocessing:

Transform the raw data into a suitable format for machine learning. This can include normalizing values, encoding categorical variables, and splitting data into training, validation, and test sets.

Data Augmentation (if applicable):

For tasks like image recognition, augment the data by applying transformations (e.g., rotations, flips) to increase the dataset's size and variability.

Validation:

Ensure the dataset is balanced and representative of the real-world scenario. Validate the data distribution and check for any biases.

Documentation:

Document the dataset creation process, including data sources, preprocessing steps, and any assumptions made. This aids in reproducibility and future use.

By following these steps, you can create a robust and reliable dataset tailored to your machine learning project's needs.
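A few of the steps above (cleaning, preprocessing, and validation) can be sketched with the Python standard library. The record layout, the 80/10/10 split, and the function names are assumptions for illustration; real projects often use a data library instead.

```python
import random
from collections import Counter


def deduplicate(rows):
    """Data cleaning: drop exact duplicate records, keeping the first copy.
    Assumes each row is a dict with hashable values."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def min_max_normalize(values):
    """Preprocessing: scale numeric values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def train_val_test_split(rows, val_frac=0.1, test_frac=0.1, seed=0):
    """Preprocessing: shuffle with a fixed seed, then cut into three sets."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


def label_distribution(rows):
    """Validation: count examples per label to check class balance."""
    return Counter(row["label"] for row in rows)


# Illustrative records: 20 unique samples plus one exact duplicate.
rows = [{"text": f"sample {i}", "label": i % 2} for i in range(20)]
rows.append({"text": "sample 0", "label": 0})

clean = deduplicate(rows)
train, val, test = train_val_test_split(clean)
print(len(train), len(val), len(test), dict(label_distribution(clean)))
```

Fixing the shuffle seed keeps the split reproducible, which supports the documentation step.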

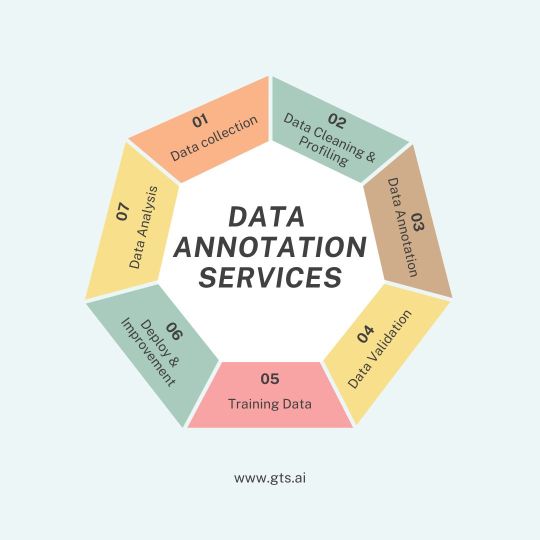

AI Dataset Collection and Data Annotation Services


The image presents various types of data annotations used in machine learning and data analysis. From simple bounding boxes to intricate segmentation masks, each annotation method is crucial for accurately labeling data and improving model performance.

AI Dataset Collection and Data Annotation Services


Unlocking the Potential: Why ML Datasets for Computer Vision Are Crucial

The Foundation of Computer Vision

At its core, computer vision seeks to replicate the human visual system, allowing machines to identify objects, classify images, and even understand complex scenes. The journey towards achieving these capabilities begins with ML datasets. These datasets, which are collections of annotated images or videos, provide the raw material needed for training computer vision models. The quality, diversity, and size of these datasets directly influence the performance of AI systems in real-world applications, from autonomous vehicles navigating roads to automated medical diagnosis tools.

Enhancing Model Accuracy

One of the primary reasons ML datasets are crucial for computer vision is their role in enhancing model accuracy. A dataset that accurately represents the variety of the real world — encompassing different lighting conditions, angles, backgrounds, and object variations — trains models to be more robust and reliable. For instance, an autonomous driving system trained on a diverse dataset including images of roads under various weather conditions, at different times of the day, and in multiple geographical locations, is more likely to accurately recognize and respond to real-world driving scenarios.

Overcoming Bias

Another critical aspect of ML datasets for computer vision is their ability to help overcome bias. Bias in AI can lead to skewed or unfair outcomes, such as facial recognition systems that perform poorly on certain demographic groups. By ensuring datasets are inclusive and representative of the global population, developers can create computer vision models that are fair and equitable. This requires a deliberate effort to include a wide range of ethnicities, genders, ages, and other variables in the training data.
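One simple way to check for the kind of skew described here is to break evaluation accuracy down by group. The sketch below assumes each evaluation record carries a group tag alongside the true and predicted labels; the group names and results are invented for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy separately for each group.

    `records` is a list of (group, true_label, predicted_label) tuples.
    The group tag is an assumption about how the evaluation set is annotated.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


# Illustrative evaluation results only.
records = [
    ("group_a", "match", "match"),
    ("group_a", "no_match", "no_match"),
    ("group_a", "match", "match"),
    ("group_a", "no_match", "no_match"),
    ("group_b", "match", "no_match"),
    ("group_b", "no_match", "no_match"),
    ("group_b", "match", "match"),
    ("group_b", "no_match", "match"),
]

scores = accuracy_by_group(records)
gap = max(scores.values()) - min(scores.values())
print(scores, gap)
```

A large gap between groups suggests the training data under-represents some of them and needs rebalancing.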

Facilitating Innovation

The availability of comprehensive and specialized ML datasets also facilitates innovation in computer vision. As researchers and developers gain access to datasets focused on specific challenges or domains, such as drone imagery for agricultural monitoring or x-ray images for healthcare, they can develop tailored solutions that address unique needs. This specialization enables the creation of cutting-edge applications that push the boundaries of what computer vision can achieve.

Challenges and Solutions

Despite their importance, the development and utilization of ML datasets for computer vision come with challenges. One major hurdle is the time and resources required to collect and annotate datasets. Annotation, the process of labeling images or videos to indicate what they contain, is particularly labor-intensive but crucial for training accurate models. Solutions to this challenge include leveraging crowdsourcing platforms, employing automated annotation tools, and fostering community-driven dataset-creation efforts. Moreover, it is crucial to prioritize privacy and ethical concerns when acquiring and utilizing visual data. Adhering to data protection regulations and ethical standards in the collection and utilization of datasets is imperative to uphold the trustworthiness and credibility of computer vision applications.

The Future of ML Datasets in Computer Vision

The significance of privacy and ethical considerations cannot be overstated in the collection and utilization of visual data. It is vital to ensure that datasets are gathered and employed in accordance with data protection laws and ethical guidelines to uphold the trustworthiness and integrity of computer vision applications.

Source: https://gts.ai/blog/unlocking-the-potential-why-ml-datasets-for-computer-vision-are-crucial/
