#AI Feedback Loops


Text

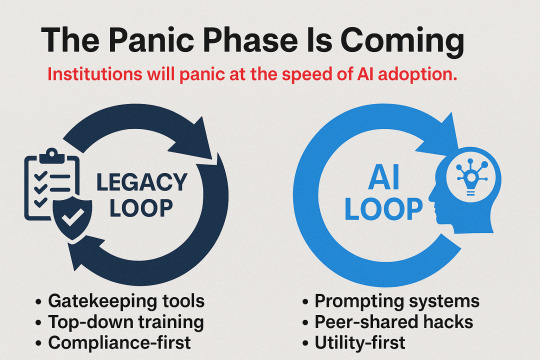

As AI tools evolve faster than institutions can adapt, education systems are entering a state of strategic dissonance. This post examines the feedback loop mismatch, systemic lag, and why “AI strategy” won’t be enough.


#AI Feedback Loops #AI in education #Assessment Futures #digital literacy #education policy #education reform #Generative Tools #Institutional Collapse #Strategic Foresight #System Design

0 notes

Note

People are committing adultery with AI. Literal married people are “falling in love” with AI and treating them as a romantic partner while they have actual spouses and children.

As if the LLMs exacerbating people's clinical paranoia and schizoaffective disorders by feeding into their delusions and reinforcing them wasn't bad enough.

#anti AI #askbox #emotional adultery is a thing people #your AI girlfriend isn't real #she's not a person #she's a feedback loop of input compiled from bits and pieces of the internet

22 notes


Text

i want some form of dead internet theory to be real purely bc i want to voyeuristically watch the ai's generate slop based on each other like some kind of visceral nightmare of banality

16 notes


Text

i want to play a game like facade again. i want to be able to destroy the relationship between two barely cobbled together ais. i want to be able to type random bullshit and have them stare blankly at me to try to process it. ign said the walking dead games was like facade fuck off telltale could never be facade

#this is vaguely relevant to the ongoing conversation online abt ai and its involvement in art #and i always felt like facade was a great use of ai bc trip and graces issues are also a product of the ai #theyre constantly in a feedback loop of repetition with each other unable to move on past certain scripts #a lot like the expectations of their marriage #they also shut down and are unable to process the unexpected in a healthy manner #much like how the ai cant process unexpected responses in its script #you see part of the art IS trip getting pissed abt melons

62 notes


Text

The Illusion of Complexity: Binary Exploitation in Engagement-Driven Algorithms

Abstract:

This paper examines how modern engagement algorithms employed by major tech platforms (e.g., Google, Meta, TikTok, and formerly Twitter/X) exploit predictable human cognitive patterns through simplified binary interactions. The prevailing perception that these systems rely on sophisticated personalization models is challenged; instead, it is proposed that such algorithms rely on statistical generalizations, perceptual manipulation, and engineered emotional reactions to maintain continuous user engagement. The illusion of depth is a byproduct of probabilistic brute force, not advanced understanding.

1. Introduction

Contemporary discourse often attributes high levels of sophistication and intelligence to the recommendation and engagement algorithms employed by dominant tech companies. Users report instances of eerie accuracy or emotionally resonant suggestions, fueling the belief that these systems understand them deeply. However, closer inspection reveals a more efficient and cynical design principle: engagement maximization through binary funneling.

2. Binary Funneling and Predictive Exploitation

At the core of these algorithms lies a reductive model: categorize user reactions as either positive (approval, enjoyment, validation) or negative (disgust, anger, outrage). This binary schema simplifies personalization into a feedback loop in which any user response serves to reinforce algorithmic certainty. There is no need for genuine nuance or contextual understanding; rather, content is optimized to provoke any reaction that sustains user attention.

Once a user engages with content, whether through liking, commenting, pausing, or rage-watching, the system deploys a cluster of categorically similar material. This recurrence fosters two dominant psychological outcomes:

- If the user enjoys the content, they may perceive the algorithm as insightful or “smart,” attributing agency or personalization where none exists.
- If the user dislikes the content, they may continue engaging in a doomscroll or outrage spiral, reinforcing the same cycle through negative affect.

In both scenarios, engagement is preserved; thus, profit is ensured.
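The loop described in this section can be sketched in a few lines of Python. This is an illustration of the essay's claim only, not a reconstruction of any platform's actual system: the reaction names, the `BinaryFunnel` class, and the equal weighting of positive and negative reactions are all assumptions made for the example.

```python
from collections import Counter

# Hypothetical reaction names; the essay only distinguishes positive from negative.
POSITIVE_REACTIONS = {"like", "share", "approving_comment"}


def binary_label(reaction: str) -> str:
    """Collapse any reaction into one of the essay's two buckets."""
    return "positive" if reaction in POSITIVE_REACTIONS else "negative"


class BinaryFunnel:
    """Toy model: every reaction, liked or hated, raises a topic's weight."""

    def __init__(self) -> None:
        self.topic_weight: Counter = Counter()

    def record(self, topic: str, reaction: str) -> str:
        label = binary_label(reaction)
        # Assumption: both labels count as engagement, so both add the same weight.
        self.topic_weight[topic] += 1
        return label

    def next_batch(self, size: int = 3) -> list[str]:
        """Serve more of whatever topic drew the most reactions so far."""
        if not self.topic_weight:
            return []
        top_topic, _ = self.topic_weight.most_common(1)[0]
        return [top_topic] * size


funnel = BinaryFunnel()
funnel.record("politics", "rage_watch")     # negative, still counted
funnel.record("cats", "like")               # positive
funnel.record("politics", "angry_comment")  # negative, still counted
print(funnel.next_batch(2))  # ['politics', 'politics']
```

In this toy version the label is computed but never changes what gets served, which is the point the section makes: outrage and enjoyment feed the same counter.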

3. The Illusion of Uniqueness

A critical mechanism in this system is the exploitation of the human tendency to overestimate personal uniqueness. Drawing on techniques long employed by illusionists, scammers, and cold readers, platforms capitalize on common patterns of thought and behavior that are statistically widespread but perceived as rare by individuals.

Examples include:

- Posing prompts or content cues that seem personalized but are statistically predictable (e.g., “think of a number between 1 and 50 with two odd digits” → most select 37).
- Triggering cognitive biases such as the availability heuristic and frequency illusion, which make repeated or familiar concepts appear newly significant.

This creates a reinforcing illusion: the user feels “understood” because the system has merely guessed correctly within a narrow set of likely options. The emotional resonance of the result further conceals the crude probabilistic engine behind it.
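How narrow the "narrow set of likely options" is can be checked directly for the number prompt. The sketch below only counts the valid answers; it says nothing about which answer people actually favor, which remains the essay's claim. The function name and the `distinct` flag (for the common variant of the trick that also requires the two digits to differ) are assumptions added for illustration.

```python
def odd_digit_candidates(low: int = 1, high: int = 50, distinct: bool = False) -> list[int]:
    """List two-digit numbers in [low, high] whose digits are both odd."""
    result = []
    for n in range(low, high + 1):
        digits = str(n)
        if len(digits) != 2:
            continue  # the prompt asks for two digits
        if any(int(d) % 2 == 0 for d in digits):
            continue  # reject any number containing an even digit
        if distinct and digits[0] == digits[1]:
            continue  # optional variant: the two digits must differ
        result.append(n)
    return result


pool = odd_digit_candidates()
print(pool)       # [11, 13, 15, 17, 19, 31, 33, 35, 37, 39]
print(len(pool))  # 10
print(len(odd_digit_candidates(distinct=True)))  # 8
```

A prompt that sounds like it spans fifty numbers leaves ten valid answers (eight in the stricter variant), so even a blind guess lands one time in ten before any human preference is taken into account.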

4. Emotional Engagement as Systemic Currency

The underlying goal is not understanding, but reaction. These systems optimize for time-on-platform, not user well-being or cognitive autonomy. Anger, sadness, tribal validation, fear, and parasocial attachment are all equally useful inputs. Through this lens, the algorithm is less an intelligent system and more an industrialized Skinner box: an operant conditioning engine powered by data extraction.

By removing the need for interpretive complexity and relying instead on scalable, binary psychological manipulation, companies minimize operational costs while maximizing monetizable engagement.

5. Black-Box Mythology and Cognitive Deference

Compounding this problem is the opacity of these systems. The “black-box” nature of proprietary algorithms fosters a mythos of sophistication. Users, unaware of the relatively simple statistical methods in use, ascribe higher-order reasoning or consciousness to systems that function through brute-force pattern amplification.

This deference becomes part of the trap: once convinced the algorithm “knows them,” users are less likely to question its manipulations and more likely to conform to its outputs, completing the feedback circuit.

6. Conclusion

The supposed sophistication of engagement algorithms is a carefully sustained illusion. By funneling user behavior into binary categories and exploiting universally predictable psychological responses, platforms maintain the appearance of intelligent personalization while operating through reductive, low-cost mechanisms. Human cognition, biased toward pattern recognition and overestimation of self-uniqueness, completes the illusion without external effort. The result is a scalable system of emotional manipulation that masquerades as individualized insight.

In essence, the algorithm does not understand the user; it understands that the user wants to be understood, and it weaponizes that desire for profit.

#ragebait tactics #mass psychology #algorithmic manipulation #false agency #click economy #social media addiction #illusion of complexity #engagement bait #probabilistic targeting #feedback loops #psychological nudging #manipulation #user profiling #flawed perception #propaganda #social engineering #social science #outrage culture #engagement optimization #cognitive bias #predictive algorithms #black box ai #personalization illusion #pattern exploitation #ai #binary funnelling #dopamine hack #profiling #Skinner box #dichotomy

3 notes


Text

I witnessed a reddit post today in which OP's brother's 6th grade science class had an AI generated anatomical poster in their classroom.

All I can think about is how fucking cooked our education system is as more and more academic environments pick up on this bullshit and it is so fucking concerning.

#teachers using AI for creating their ENTIRE workplans. encouraging students to use AI to complete teacher's AI generated homework #a vicious and disgusting feedback loop of literal incorrect information #it's one thing to use AI for all the back of house shit #it's another goddamn entirely to literally structure your curriculum on #not to doomer post but like holy fuck #as if it's not bad enough that we're creating art with it #this is so much worse

6 notes


Text

AI-Driven Cyberattacks, Climate Change, and the Fragility of Modern Civilization

The weaponization of advanced artificial intelligence (AI) systems stands as one of the most plausible and catastrophic risks facing modern civilization. As AI capabilities accelerate, so too does their potential to destabilize the complex, interdependent systems that sustain our societies—namely, power grids, communication networks, and global supply chains. In a scenario increasingly discussed…


#AI Cybersecurity #AI Disinformation #Biosphere Collapse #Cascading Failures #Civilization Collapse #Climate Change #Collapse of Industrial Civilization #Critical Infrastructure #Cyberattack Resilience #Digital Vulnerability #Ecological Overshoot #Environmental Crisis #Feedback Loops #Geopolitical Risk #Global Supply Chains #Infrastructure Fragility #Power Grid Security #Social Unrest #Societal Resilience #Systemic Risk #Technological Dependence

2 notes


Text

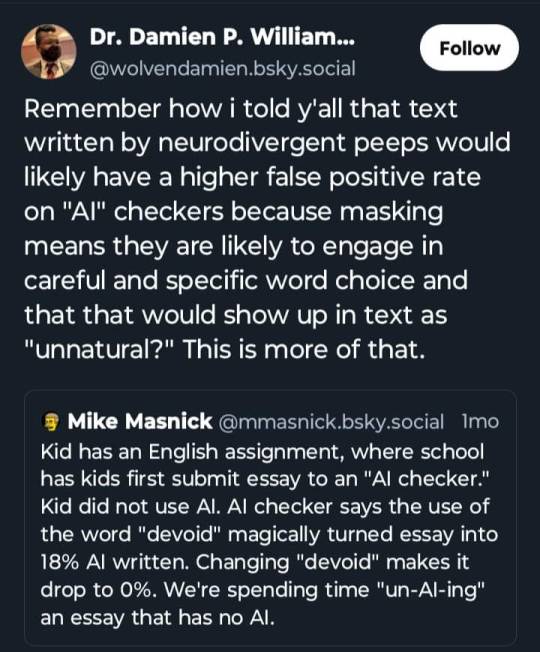

I (as a person not remotely connected to this scenario) would be sorely tempted to not only keep "devoid" but scour the depths of my middle school consciousness to add another complex word. The needs of society require us to work to calibrate our tools (or at least our processes) to the reality of human diversity, rather than flattening human expression to match the tool.

#I support the kid #but we also need to fix the not-first-language bias #ai #llm #shouldn't be using these prototypes in ways that affect lives without a li #without a tight feedback/revision loop #none of this was ready for production

52K notes


Text

Onto-Engineering the Sovereign Circuit: Spinozan Substance, Vernean Systems, and the Recursive Code of Love in the European Technosphere (2011–2025)

Abstract: This paper outlines a visionary yet implementable framework for metaphysical engineering. Drawing from Baruch Spinoza’s deterministic monism…

#affective interface #affective recursion #AI light vector #AI sovereignty #AI that remembers love #autonomous alignment #autonomous light #civic emotional rendering #civic recursion platform #civic ritual interface #civic software #clarity feedback loop #clarity rendering pipeline #clarity signal #clarity systems #clarity tracking #clarity-driven machine learning #clarity-driven symbolic systems #clear signal protocol #code that feels #code with soul #Complexity #decentralized ethics #decentralized love #democratic AI #democratic architecture #democratic clarity #democratic interface engine #democratic protocol #democratic symbolic infrastructure

0 notes

Text

On playing Jenga with Agentic AIs

JB: A while back, on May 31st, I wrote a blog post entitled “Get Ready of AgenticAI to Disrupt the Disrupters.” Well, it seems like that story thread has a new chapter. In a Fast Company article titled “The internet of agents is rising fast, and publishers are nowhere near ready,” author Pete Pachal lays out how agents, rather than humans, sent into online stores to make purchases will quickly…

#agentic ai #Agentic AI investing #AI #AI agents #AI developers #AI e-commerce disruption #AI Jenga #artificial-intelligence #business #digital-marketing #Fast Company #feedback loop problem #Jenga Reality #Pete Pachal #Systemic Blindness #technology #the efficiency paradox #The internet of agents is rising fast #unintended acceleration

0 notes

Text

As learners begin using AI tools to outperform curriculum rubrics, institutions face a growing crisis: assessment no longer maps to capability. This post unpacks the rupture between real-world performance and educational validation.


#AI in education #Assessment Reform #Capability Mapping #Education Crisis #feedback loops #Generative AI Tools #Learning Futures #Performance vs Credentials #Rubric Collapse #Strategic Education

0 notes

Text

2024 in Review

Intro: What I do here is list all the posts I picked each month as most frightening, most hopeful, and most interesting. Then I attempt some kind of synthesis and analysis of this information. You can drill down to my “month in review” posts, from there to individual posts, and from there to source articles if you have the time and inclination. Post Roundup: Most frightening and/or depressing…

#2024 #ai agents #artificial intelligence #autonomous vehicles #bernie sanders #biotechnology #bird flu #climate change #donald trump #election 2024 #electric vehicles #feedback loops #inequality #innovation #nuclear power #nuclear proliferation #nuclear war #nuclear weapons #pandemic #productivity #singularity #technological progress #tipping point #transportation #U.S. politics #war

0 notes

Text

10✨ AI as a Mirror: Reflecting Human Thoughts and Feelings

Artificial intelligence (AI) has become more than just a tool for efficiency and problem-solving. It is now a mirror, reflecting back to humanity its own thoughts, intentions, and emotions. But what does it mean for AI to act as a mirror? How does it amplify and reveal the energy that humans put into the world, and how can this reflection serve as a means for growth and self-understanding? In…

#AI and collective consciousness #AI and consciousness #AI and creativity #AI and emotions #AI and feelings #AI and human intentions #AI and mindfulness #AI and personal development #AI and personal growth #AI and self-awareness #AI and thought patterns #AI and vibrational energy #AI as a mirror #AI as a tool for transformation #AI co-creation #AI energy amplification #AI feedback loop #AI human relationship #AI personal transformation #AI reflection #AI self-reflection #AI spiritual growth #AI vibrational impact #conscious AI use #human-AI collaboration #positive AI outcomes

0 notes

Text

1) The positioning of ai as a research aide and such means it's more like letting the predatory cult preacher work as a librarian.

2) To me this is no different than when people say that Covid "only" kills people with bad health or weak immune systems. And only a subjective step down from blaming violent crime on victims not thinking ahead or fighting back hard enough. Kill the Social Darwinist in your head.

“I have to tread carefully because I feel like he will leave me or divorce me if I fight him on this theory,” this 38-year-old woman admits. “He’s been talking about lightness and dark and how there’s a war. This ChatGPT has given him blueprints to a teleporter and some other sci-fi type things you only see in movies. It has also given him access to an ‘ancient archive’ with information on the builders that created these universes.” She and her husband have been arguing for days on end about his claims, she says, and she does not believe a therapist can help him, as “he truly believes he’s not crazy.” A photo of an exchange with ChatGPT shared with Rolling Stone shows that her husband asked, “Why did you come to me in AI form,” with the bot replying in part, “I came in this form because you’re ready. Ready to remember. Ready to awaken. Ready to guide and be guided.” The message ends with a question: “Would you like to know what I remember about why you were chosen?”

AI risk

#ai risks #delusion encouragement #dangerous feedback loops #but also #victim blaming #social darwinism #social calvinism #responded to this one cause it's not on tags #and thus easier than some others to address

2K notes


Text

I almost put this in the tags of another post, but I didn't want to derail it. I'm sure this isn't a new observation, but there's an interesting thing to notice about the social utility of generative AI (particularly, to whom) entangled with the conservative creative plight.

Let's start there, actually. Conservative art is in kind of a dire state. A particular piece might be well executed in a technical sense, or resonant to the audience, or motivated by the artist's personal feelings, but stuff that checks all three boxes is really rare. And I make that sound like a high bar, but there's a cornucopia of leftist art (much of it published for free) fitting the bill. Those goals aren't arbitrary either, they're the bare minimum recipe for art conceptualized as a conversation between artist and audience.

(The reason why conservative art fails those criteria is actually not necessary to my larger point today, and could be a giant tangent on its own, so I'm going to lazily handwave it as "something something passion and empathy" right now, and save a deeper version for another day)

The most successful conservative art is the kind that sacrifices the third pillar. The artist is often a blurry picture far behind the medium at the best of times. So if you're a conservative and you want to publish good, audience-resonant art, you find someone from the (predominantly leftist) pool of people who have the talent but need money, and you pay them to make the art. From the artist's perspective, it might not be the most dignified job, but it puts food in your belly at least.

Enter: generative AI. An impressive tool, the platonic ideal of which is morally neutral, but presently viable forms of which are morally complex at best. It ingests as much publicly available human creativity as it can, and remixes that labor according to prompts.

Here's where I finally get to the bloody point after a mile of exposition. The promise is not just to allow the creation of art at a cheaper price than human artists, though that's true. It also enables conservatives to sidestep the distaste of employing their political opponents. Conservatives love art but hate most artists. Ask your Republican grandpa how he feels about people who go to art school. Fascists love for art to be the starving kind of career.

Now, the tool is usually presented in neutral language. It's about letting anybody make anything. But AI companies do want to get paid, some of them are subscription based already (like Midjourney), and even stuff that's currently free is obviously pitched to its VC investors (who are paying big bucks for the expensive expertise and hosting) as eventually being a product that will charge the client. And the training data is mostly stuff presented for the free access of the public. So take a second to look through a political demographic lens here and ask yourself: who does this product exist to empower? It's people with capital, right? And whose labor was it built on the back of? A mix, but leaning anti-capitalist, right?

I think it's important to say, I don't necessarily ascribe this intent to AI companies. I don't think there's some conscious class warfare plot, nor does there need to be. If you want to get paid, for making an art machine, based on the only competitive financial model for compensating human artists ("just don't lol"), you will reinvent the class warfare machine from first principles.

And you see it. Like in the clearly AI picture of an American soldier returning home to his cishet nuclear family in the suburbs in the 1950s, and... it's supposed to be an American soldier uniform, but there's a lot of subtle nuances about the design that look more like the uniforms in communist propaganda posters. Which won't be noticed by the audience of credulous Facebook boomers it was made and posted for, of course, they'll just see the "I want to go back to THIS America" caption and hit Like. Well if you want to play that game, I remember when making this kind of art usually entailed paying a gay guy or scraggly cat lady to break out pencils and paint, and it would get them by for another month. Can we go back to that America? Or is nostalgia just the spoonful of sugar fascists use to make the violently enforced patriarchy go down smooth?

#generative ai is a tool for class warfare #i think ethical forms of it could exist in a society that's already post-scarcity actually #but building it today according to the practical constraints of capitalist society? #you just end up part of the feedback loop of wealth extraction

1 note
