#AI and ML integration

#AI and Machine Learning#AI and Machine Learning Integration#AI app development#AI and ML integration

The AIoT Revolution: How AI and IoT Convergence is Rewriting the Rules of Industry & Life

Imagine a world where factory machines predict their own breakdowns before they happen. Where city streets dynamically adjust traffic flow in real-time, slashing commute times. Where your morning coffee brews automatically as your smartwatch detects you waking. This isn’t science fiction—it’s the explosive reality of Artificial Intelligence of Things (AIoT), the merger of AI algorithms and IoT ecosystems. At widedevsolution.com, we engineer these intelligent futures daily.

Why AIoT Isn’t Just Buzzword Bingo: The Core Convergence

Artificial Intelligence of Things fuses the sensory nervous system of IoT devices (sensors, actuators, smart gadgets) with the cognitive brainpower of machine learning models and deep neural networks. Unlike traditional IoT—which drowns in raw data—AIoT delivers actionable intelligence.

As Sundar Pichai, CEO of Google, asserts:

“We are moving from a mobile-first to an AI-first world. The ability to apply AI and machine learning to massive datasets from connected devices is unlocking unprecedented solutions.”

The AIoT Trinity: Trends Reshaping Reality

1. Predictive Maintenance: The Death of Downtime

Gone are the days of fixed-schedule check-ups. AI-driven predictive maintenance analyzes sensor data (vibrations, temperature, sound patterns) to forecast failures weeks in advance.

Real-world impact: Siemens reduced turbine failures by 30% using AI anomaly detection on industrial IoT applications.

Financial upside: McKinsey estimates predictive maintenance cuts costs by 20% and downtime by 50%.
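The anomaly-detection idea behind predictive maintenance can be sketched in a few lines. This is a minimal illustration, not Siemens' or any vendor's method: it assumes a single stream of vibration readings and flags any reading that sits more than three standard deviations from the mean of the 20 readings before it (a rolling z-score). The window size, threshold, and readings are hypothetical.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(readings, window=20, threshold=3.0):
    """Return indices of readings whose rolling z-score exceeds `threshold`.

    The z-score is computed against the mean and sample standard deviation
    of the `window` readings that precede each point.
    """
    history = deque(maxlen=window)  # oldest readings fall off automatically
    anomalies = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        history.append(value)
    return anomalies


if __name__ == "__main__":
    # Hypothetical vibration amplitudes in mm/s: 40 steady readings, then a spike.
    vibration = [1.0 + 0.05 * (i % 5) for i in range(40)] + [2.5]
    print(detect_anomalies(vibration))  # prints [40]
```

Real deployments usually combine several sensor channels and learned models; the rolling z-score is simply the easiest baseline to reason about.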

2. Smart Cities: Urban Landscapes with a Brain

Smart city solutions leverage edge computing and real-time analytics to optimize resources. Barcelona’s AIoT-powered streetlights cut energy use by 30%. Singapore uses AI traffic prediction to reduce congestion by 15%.

Core Tech Stack:

Distributed sensor networks monitoring air/water quality

Computer vision systems for public safety

AI-powered energy grids balancing supply/demand

3. Hyper-Personalized Experiences: The End of One-Size-Fits-All

Personalized user experiences now anticipate needs. Think:

Retail: Nike’s IoT-enabled stores suggest shoes based on past purchases and gait analysis.

Healthcare: Remote patient monitoring with wearable IoT detects arrhythmias before symptoms appear.

Sectoral Shockwaves: Where AIoT is Moving the Needle

🏥 Healthcare: From Treatment to Prevention

Healthcare IoT enables continuous monitoring. AI-driven diagnostics analyze data from pacemakers, glucose monitors, and smart inhalers. Results?

45% fewer hospital readmissions (Mayo Clinic study)

Early detection of sepsis 6+ hours faster (Johns Hopkins AIoT model)

🌾 Agriculture: Precision Farming at Scale

Precision agriculture uses soil moisture sensors, drone imagery, and ML yield prediction to boost output sustainably.

Case Study: John Deere’s AIoT tractors reduced water usage by 40% while increasing crop yields by 15% via real-time field analytics.

🏭 Manufacturing: The Zero-Waste Factory

Manufacturing efficiency soars with AI-powered quality control and autonomous supply chains.

Data Point: Bosch’s AIoT factories achieve 99.9985% quality compliance and 25% faster production cycles through automated defect detection.

Navigating the Minefield: Challenges in Scaling AIoT

Even pioneers face hurdles. Key challenges and their solutions:

Data security in IoT: End-to-end encryption + zero-trust architecture

System interoperability: API-first integration frameworks

AI model drift: Continuous MLOps monitoring

Energy constraints: TinyML algorithms for low-power devices
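To make the TinyML point concrete: one common way to fit models onto low-power devices is to store weights as 8-bit integers instead of 32-bit floats, which cuts weight storage to a quarter. The sketch below shows symmetric per-tensor int8 quantization in plain Python. It illustrates the technique under simplifying assumptions and is not the API of any particular TinyML framework; the weight values are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to ints in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # one float scale for the whole tensor
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Approximate reconstruction of the original float weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 0.031, 1.27]  # hypothetical layer weights
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    # Rounding error per weight is at most half of one quantization step.
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(q, scale, max_err)
```

Production toolchains add refinements such as per-channel scales and calibration data, but the storage saving comes from the same integer mapping.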

As Microsoft CEO Satya Nadella warns:

“Trust is the currency of the AIoT era. Without robust security and ethical governance, even the most brilliant systems will fail.”

How widedevsolution.com Engineers Tomorrow’s AIoT

At widedevsolution.com, we build scalable IoT systems that turn data deluge into profit. Our recent projects include:

A predictive maintenance platform for wind farms, cutting turbine repair costs by $2M/year.

An AI retail personalization engine boosting client sales conversions by 34%.

Smart city infrastructure reducing municipal energy waste by 28%.

We specialize in overcoming edge computing bottlenecks and designing cyber-physical systems with military-grade data security in IoT.

The Road Ahead: Your AIoT Action Plan

The AIoT market will hit $1.2T by 2030 (Statista). To lead, not follow:

Start small: Pilot sensor-driven process optimization in one workflow.

Prioritize security: Implement hardware-level encryption from day one.

Democratize data: Use low-code AI platforms to empower non-technical teams.

The Final Byte

We stand at an inflection point. Artificial Intelligence of Things isn’t merely connecting devices; it’s weaving an intelligent fabric across our physical reality. From farms that whisper their needs to algorithms, to factories that self-heal, to cities that breathe efficiently, AIoT transforms data into wisdom.

The question isn’t if this revolution will impact your organization—it’s when. Companies leveraging AIoT integration today aren’t just future-proofing; they’re rewriting industry rulebooks. At widedevsolution.com, we turn convergence into competitive advantage. The machines are learning. The sensors are watching. The future is responding.

“The greatest achievement of AIoT won’t be smarter gadgets—it’ll be fundamentally reimagining how humanity solves its hardest problems.” — widedevsolution.com AI Lab

#artificial intelligence#predictive maintenance#smart city solutions#manufacturing efficiency#AI-powered quality control in manufacturing#edge computing for IoT security#scalable IoT systems for agriculture#AIoT integration#sensor data intelligence#ML yield prediction#cyber-physical#widedevsolution.com

Why Data Preparation Tools Are the Backbone of Modern Business Intelligence

Raw data is data in its original, unprocessed form, and it can contain a wide range of information. Because it is gathered from many sources, it often arrives in a variety of formats, schemas, and data types, so it cannot always be relied on for precise data analysis. This is where data preparation tools come into the picture. Data preparation is the process of getting raw data ready for future management and analysis. The…
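As an illustration of what a data preparation step does, here is a minimal sketch that maps records from two sources with different schemas onto one schema, cleans values, parses dates written in two formats, and drops duplicates. The source names (`crm`, `web`), the field mappings, and the date formats are hypothetical assumptions; real data preparation tools expose these steps through their own interfaces.

```python
from datetime import datetime

# Hypothetical mappings from each source's field names to one target schema.
FIELD_MAP = {
    "crm": {"CustomerName": "name", "Email": "email", "SignupDate": "signup_date"},
    "web": {"full_name": "name", "email_address": "email", "created": "signup_date"},
}
# Assumed date formats: ISO dates from the CRM, day/month/year from the web form.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text):
    """Try each known format; return a date, or None if nothing matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    return None


def prepare(records):
    """Map (source, record) pairs onto one schema, clean values, drop duplicates by email."""
    cleaned, seen = [], set()
    for source, raw in records:
        mapping = FIELD_MAP[source]
        row = {mapping[k]: v for k, v in raw.items() if k in mapping}
        email = (row.get("email") or "").strip().lower()
        if not email or email in seen:
            continue  # skip rows without a usable key, and duplicates
        seen.add(email)
        cleaned.append({
            "name": (row.get("name") or "").strip().title(),
            "email": email,
            "signup_date": parse_date(row.get("signup_date") or ""),
        })
    return cleaned


if __name__ == "__main__":
    sample = [
        ("crm", {"CustomerName": " ada lovelace ", "Email": "Ada@Example.com", "SignupDate": "2024-03-01"}),
        ("web", {"full_name": "Ada Lovelace", "email_address": "ada@example.com ", "created": "01/03/2024"}),
        ("web", {"full_name": "Alan Turing", "email_address": "alan@example.com", "created": "15/06/2024"}),
    ]
    for row in prepare(sample):
        print(row)
```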

#ai#business#Business Intelligence#data#data automation#data cleansing#Data Integration#data prep#data prep software#data prep tools#data preparation#data preparation software#data preparation tools#Information Technology#Machine Learning#Market Intelligence#ML#technology

#artificial intelligence services#machine learning solutions#AI development company#machine learning development#AI services India#AI consulting services#ML model development#custom AI solutions#deep learning services#natural language processing#computer vision solutions#AI integration services#AI for business#enterprise AI solutions#machine learning consulting#predictive analytics#AI software development#intelligent automation

Aaron Kesler, Sr. Product Manager, AI/ML at SnapLogic – Interview Series

New Post has been published on https://thedigitalinsider.com/aaron-kesler-sr-product-manager-ai-ml-at-snaplogic-interview-series/

Aaron Kesler, Sr. Product Manager, AI/ML at SnapLogic, is a certified product leader with over a decade of experience building scalable frameworks that blend design thinking, jobs to be done, and product discovery. He focuses on developing new AI-driven products and processes while mentoring aspiring PMs through his blog and coaching on strategy, execution, and customer-centric development.

SnapLogic is an AI-powered integration platform that helps enterprises connect applications, data, and APIs quickly and efficiently. With its low-code interface and intelligent automation, SnapLogic enables faster digital transformation across data engineering, IT, and business teams.

You’ve had quite the entrepreneurial journey, starting STAK in college and going on to be acquired by Carvertise. How did those early experiences shape your product mindset?

This was a really interesting time in my life. My roommate and I started STAK because we were bored with our coursework and wanted real-world experience. We never imagined it would lead to us getting acquired by what became Delaware’s poster startup. That experience really shaped my product mindset because I naturally gravitated toward talking to businesses, asking them about their problems, and building solutions. I didn’t even know what a product manager was back then—I was just doing the job.

At Carvertise, I started doing the same thing: working with their customers to understand pain points and develop solutions—again, well before I had the PM title. As an engineer, your job is to solve problems with technology. As a product manager, your job shifts to finding the right problems—the ones that are worth solving because they also drive business value. As an entrepreneur, especially without funding, your mindset becomes: how do I solve someone’s problem in a way that helps me put food on the table? That early scrappiness and hustle taught me to always look through different lenses. Whether you’re at a self-funded startup, a VC-backed company, or a healthcare giant, Maslow’s “basic need” mentality will always be the foundation.

You talk about your passion for coaching aspiring product managers. What advice do you wish you had when you were breaking into product?

The best advice I ever got—and the advice I give to aspiring PMs—is: “If you always argue from the customer’s perspective, you’ll never lose an argument.” That line is deceptively simple but incredibly powerful. It means you need to truly understand your customer—their needs, pain points, behavior, and context—so you’re not just showing up to meetings with opinions, but with insights. Without that, everything becomes HIPPO (highest paid person’s opinion), a battle of who has more power or louder opinions. With it, you become the person people turn to for clarity.

You’ve previously stated that every employee will soon work alongside a dozen AI agents. What does this AI-augmented future look like in a day-to-day workflow?

What may be interesting is that we are already in a reality where people are working with multiple AI agents: we’ve helped customers like DCU plan, build, test, safeguard, and deploy dozens of agents to help their workforce. What’s fascinating is that companies are building out organization charts of AI coworkers for each employee, based on their needs. For example, employees will have their own AI agents dedicated to certain use cases, such as an agent for drafting epics/user stories, one that assists with coding or prototyping or issues pull requests, and another that analyzes customer feedback, all sanctioned and orchestrated by IT, because there’s a lot on the backend determining who has access to which data, which agents need to adhere to governance guidelines, etc. I don’t believe agents will replace humans, yet. There will be a human in the loop for the foreseeable future, but agents will remove the repetitive, low-value tasks so people can focus on higher-level thinking. In five years, I expect most teams will rely on agents the same way we rely on Slack or Google Docs today.

How do you recommend companies bridge the AI literacy gap between technical and non-technical teams?

Start small, have a clear plan of how this fits in with your data and application integration strategy, keep it hands-on to catch any surprises, and be open to iterating from the original goals and approach. Find problems by getting curious about the mundane tasks in your business. The highest-value problems to solve are often the boring ones that the unsung heroes are solving every day. We learned a lot of these best practices firsthand as we built agents to assist our SnapLogic finance department. The most important approach is to make sure you have secure guardrails on what types of data and applications certain employees or departments have access to.

Then companies should treat it like a college course: explain key terms simply, give people a chance to try tools themselves in controlled environments, and then follow up with deeper dives. We also make it known that it is okay not to know everything. AI is evolving fast, and no one’s an expert in every area. The key is helping teams understand what’s possible and giving them the confidence to ask the right questions.

What are some effective strategies you’ve seen for AI upskilling that go beyond generic training modules?

The best approach I’ve seen is letting people get their hands on it. Training is a great start: you need to show them how AI actually helps with the work they’re already doing. From there, treat this as a sanctioned approach to shadow IT, or shadow agents, because employees are creative in finding solutions to very particular problems that only they have. We gave our field team and non-technical teams access to AgentCreator, SnapLogic’s agentic AI technology that eliminates the complexity of enterprise AI adoption, and empowered them to try building something and to report back with questions. This exercise led to real learning experiences because it was tied to their day-to-day work.

Do you see a risk in companies adopting AI tools without proper upskilling—what are some of the most common pitfalls?

The biggest risks I’ve seen are substantial governance and/or data security violations, which can lead to costly regulatory fines and the potential of putting customers’ data at risk. However, some of the most frequent risks I see are companies adopting AI tools without fully understanding what they are and are not capable of. AI isn’t magic. If your data is a mess or your teams don’t know how to use the tools, you’re not going to see value. Another issue is when organizations push adoption from the top down and don’t take into consideration the people actually executing the work. You can’t just roll something out and expect it to stick. You need champions to educate and guide folks, teams need a strong data strategy, time, and context to put up guardrails, and space to learn.

At SnapLogic, you’re working on new product development. How does AI factor into your product strategy today?

AI and customer feedback are at the heart of our product innovation strategy. It’s not just about adding AI features, it’s about rethinking how we can continually deliver more efficient and easy-to-use solutions for our customers that simplify how they interact with integrations and automation. We’re building products with both power users and non-technical users in mind—and AI helps bridge that gap.

How does SnapLogic’s AgentCreator tool help businesses build their own AI agents? Can you share a use case where this had a big impact?

AgentCreator is designed to help teams build real, enterprise-grade AI agents without writing a single line of code. It eliminates the need for experienced Python developers to build LLM-based applications from scratch and empowers teams across finance, HR, marketing, and IT to create AI-powered agents in just hours using natural language prompts. These agents are tightly integrated with enterprise data, so they can do more than just respond. Integrated agents automate complex workflows, reason through decisions, and act in real time, all within the business context.

AgentCreator has been a game-changer for our customers like Independent Bank, which used AgentCreator to launch voice and chat assistants to reduce the IT help desk ticket backlog and free up IT resources to focus on new GenAI initiatives. In addition, benefits administration provider Aptia used AgentCreator to automate one of its most manual and resource-intensive processes: benefits elections. What used to take hours of backend data entry now takes minutes, thanks to AI agents that streamline data translation and validation across systems.

SnapGPT allows integration via natural language. How has this democratized access for non-technical users?

SnapGPT, our integration copilot, is a great example of how GenAI is breaking down barriers in enterprise software. With it, users ranging from non-technical to technical can describe the outcome they want using simple natural language prompts (like asking to connect two systems or trigger a workflow), and the integration is built for them. SnapGPT goes beyond building integration pipelines: users can describe pipelines, create documentation, generate SQL queries and expressions, and transform data from one format to another with a simple prompt. It turns what was once a developer-heavy process into something accessible to employees across the business. It’s not just about saving time; it’s about shifting who gets to build. When more people across the business can contribute, you unlock faster iteration and more innovation.

What makes SnapLogic’s AI tools—like AutoSuggest and SnapGPT—different from other integration platforms on the market?

SnapLogic is the first generative integration platform that continuously unlocks the value of data across the modern enterprise at unprecedented speed and scale. With the ability to build cutting-edge GenAI applications in just hours — without writing code — along with SnapGPT, the first and most advanced GenAI-powered integration copilot, organizations can vastly accelerate business value. Other competitors’ GenAI capabilities are lacking or nonexistent. Unlike much of the competition, SnapLogic was born in the cloud and is purpose-built to manage the complexities of cloud, on-premises, and hybrid environments.

SnapLogic offers iterative development features, including automated validation and schema-on-read, which empower teams to finish projects faster. These features enable more integrators of varying skill levels to get up and running quickly, unlike competitors that mostly require highly skilled developers, which can slow down implementation significantly. SnapLogic is a highly performant platform that processes over four trillion documents monthly and can efficiently move data to data lakes and warehouses, while some competitors lack support for real-time integration and cannot support hybrid environments.

What excites you most about the future of product management in an AI-driven world?

What excites me most about the future of product management is the rise of one of the latest buzzwords to grace the AI space, “vibe coding”: the ability to build working prototypes using natural language. I envision a world where everyone in the product trio (design, product management, and engineering) is hands-on with tools that translate ideas into real, functional solutions in real time. Instead of relying solely on engineers and designers to bring ideas to life, everyone will be able to create and iterate quickly.

Imagine being on a customer call and, in the moment, prototyping a live solution using their actual data. Instead of just listening to their proposed solutions, we could co-create with them and uncover better ways to solve their problems. This shift will make the product development process dramatically more collaborative, creative, and aligned. And that excites me because my favorite part of the job is building alongside others to solve meaningful problems.

Thank you for the great interview. Readers who wish to learn more should visit SnapLogic.

#Administration#adoption#Advice#agent#Agentic AI#agents#ai#AI adoption#AI AGENTS#AI technology#ai tools#AI-powered#AI/ML#APIs#application integration#applications#approach#assistants#automation#backlog#bank#Behavior#Blog#Born#bridge#Building#Business#charts#Cloud#code

How to Integrate ColdFusion with AI/ML Services Like TensorFlow and OpenAI

#How to Integrate ColdFusion with AI/ML Services Like TensorFlow and OpenAI?#Integrate ColdFusion with AI/ML Services Like TensorFlow and OpenAI

Why AI and ML Are the Future of Scalable MLOps Workflows

In today’s fast-paced world of machine learning, speed and accuracy are paramount. But how can businesses ensure that their ML models are continuously improving, deployed efficiently, and constantly monitored for peak performance? Enter MLOps—a game-changing approach that combines the best of machine learning and operations to streamline the entire lifecycle of AI models. And now, with the infusion of AI and ML into MLOps itself, the possibilities are growing even more exciting.

Imagine a world where model deployment isn’t just automated but intelligently optimized, where model monitoring happens in real-time without human intervention, and where continuous learning is baked into every step of the process. This isn’t a far-off vision—it’s the future of MLOps, and AI/ML is at its heart. Let’s dive into how these powerful technologies are transforming MLOps and taking machine learning to the next level.

What is MLOps?

MLOps (Machine Learning Operations) combines machine learning and operations to streamline the end-to-end lifecycle of ML models. It ensures faster deployment, continuous improvement, and efficient management of models in production. MLOps is crucial for automating tasks, reducing manual intervention, and maintaining model performance over time.

Key Components of MLOps

Continuous Integration/Continuous Deployment (CI/CD): Automates testing, integration, and deployment of models, ensuring faster updates and minimal manual effort.

Model Versioning: Tracks different model versions for easy comparison, rollback, and collaboration.

Model Testing: Validates models against real-world data to ensure performance, accuracy, and reliability through automated tests.

Monitoring and Management: Continuously tracks model performance to detect issues like drift, ensuring timely updates and interventions.
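Model versioning and rollback can be illustrated with a toy in-memory registry. This is a hypothetical sketch (the class, its methods, and the `s3://` artifact paths are assumptions, not the API of MLflow or any other registry tool): each registered version keeps an artifact reference and its metrics, and the promotion history makes rollback a matter of returning to the previously promoted version.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelRegistry:
    """Hypothetical in-memory model registry with a promotion history."""
    versions: Dict[int, dict] = field(default_factory=dict)  # version -> {"uri", "metrics"}
    history: List[int] = field(default_factory=list)         # promoted versions, newest last

    def register(self, artifact_uri: str, metrics: dict) -> int:
        version = len(self.versions) + 1
        self.versions[version] = {"uri": artifact_uri, "metrics": metrics}
        return version

    def promote(self, version: int) -> None:
        if version not in self.versions:
            raise KeyError(f"unknown model version {version}")
        self.history.append(version)

    @property
    def production(self) -> Optional[int]:
        return self.history[-1] if self.history else None

    def rollback(self) -> Optional[int]:
        """Return to the previously promoted version (no-op if there is none)."""
        if len(self.history) > 1:
            self.history.pop()
        return self.production


if __name__ == "__main__":
    registry = ModelRegistry()
    v1 = registry.register("s3://models/churn/v1", {"auc": 0.81})  # hypothetical path
    v2 = registry.register("s3://models/churn/v2", {"auc": 0.79})
    registry.promote(v1)
    registry.promote(v2)
    print(registry.production)  # prints 2
    print(registry.rollback())  # prints 1
```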

Differences Between Traditional Software DevOps and MLOps

Focus: DevOps handles software code deployment, while MLOps focuses on managing evolving ML models.

Data Dependency: MLOps requires constant data handling and preprocessing, unlike DevOps, which primarily deals with software code.

Monitoring: MLOps monitors model behavior over time, while DevOps focuses on application performance.

Continuous Training: MLOps adds recurring retraining on fresh data as a core pipeline step, whereas traditional DevOps ships code changes and has no model that needs retraining.

AI/ML in MLOps: A Powerful Partnership

As machine learning continues to evolve, AI and ML technologies are playing an increasingly vital role in enhancing MLOps workflows. Together, they bring intelligence, automation, and adaptability to the model lifecycle, making operations smarter, faster, and more efficient.

Enhancing MLOps with AI and ML: By embedding AI/ML capabilities into MLOps, teams can automate critical yet time-consuming tasks, reduce manual errors, and ensure models remain high-performing in production. These technologies don’t just support MLOps—they supercharge it.

Automating Repetitive Tasks: Machine learning algorithms are now used to handle tasks that once required extensive manual effort, such as:

Data Preprocessing: Automatically cleaning, transforming, and validating data.

Feature Engineering: Identifying the most relevant features for a model based on data patterns.

Model Selection and Hyperparameter Tuning: Using AutoML to test multiple algorithms and configurations, selecting the best-performing combination with minimal human input.

This level of automation accelerates model development and ensures consistent, scalable results.

Intelligent Monitoring and Self-Healing: AI also plays a key role in model monitoring and maintenance:

Predictive Monitoring: AI can detect early signs of model drift, performance degradation, or data anomalies before they impact business outcomes.

Self-Healing Systems: Advanced systems can trigger automatic retraining or rollback actions when issues are detected, keeping models accurate and reliable without waiting for manual intervention.
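A self-healing rule can be as simple as comparing a live metric with the accuracy the model had at deployment. The policy below is a hypothetical sketch: the thresholds (a 2-point drop triggers retraining, a 10-point drop triggers rollback) are assumptions chosen for illustration, and a real system would also account for metric noise and delayed labels.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    RETRAIN = "retrain"    # schedule retraining on recent data
    ROLLBACK = "rollback"  # restore the last known-good model immediately


def self_heal_policy(live_accuracy, baseline_accuracy, warn_drop=0.02, critical_drop=0.10):
    """Hypothetical rule: retrain on a moderate accuracy drop, roll back on a severe one."""
    drop = baseline_accuracy - live_accuracy
    if drop >= critical_drop:
        return Action.ROLLBACK
    if drop >= warn_drop:
        return Action.RETRAIN
    return Action.NONE


if __name__ == "__main__":
    for live in (0.89, 0.86, 0.75):
        print(live, self_heal_policy(live, baseline_accuracy=0.90).value)
```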

Key Applications of AI/ML in MLOps

AI and machine learning aren’t just being managed by MLOps—they’re actively enhancing it. From training models to scaling systems, AI/ML technologies are being used to automate, optimize, and future-proof the entire machine learning pipeline. Here are some of the key applications:

1. Automated Model Training and Tuning: Traditionally, choosing the right algorithm and tuning hyperparameters required expert knowledge and extensive trial and error. With AI/ML-powered tools like AutoML, this process is now largely automated. These tools can:

Test multiple models simultaneously

Optimize hyperparameters

Select the best-performing configuration

This not only speeds up experimentation but also improves model performance with less manual intervention.
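The core loop behind automated tuning is: try a configuration, train, score on held-out data, keep the best. The sketch below uses plain exhaustive grid search (AutoML tools typically use smarter strategies, such as random or Bayesian search) on a toy one-parameter regression trained by gradient descent. The data, the grid, and the function names are illustrative assumptions.

```python
from itertools import product


def grid_search(train_and_score, param_grid):
    """Exhaustively try every parameter combination; a higher score is better."""
    keys = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[k] for k in keys)):
        params = dict(zip(keys, values))
        score = train_and_score(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Toy problem (illustrative data): learn the slope w in y = w * x.
TRAIN = ([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
VALID = ([4.0, 5.0], [8.0, 10.0])


def fit_slope(xs, ys, lr, epochs):
    """Fit w by batch gradient descent on mean squared error."""
    w = 0.0
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
    return w


def train_and_score(lr, epochs):
    w = fit_slope(*TRAIN, lr=lr, epochs=epochs)
    xs, ys = VALID
    mse = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
    return -mse  # negate so that lower validation error means a higher score


if __name__ == "__main__":
    grid = {"lr": [0.001, 0.01, 0.1], "epochs": [10, 100]}
    best, score = grid_search(train_and_score, grid)
    print(best, score)
```

Grid search cost grows multiplicatively with each added parameter, which is why automated tools move to sampling-based search once the grid gets large.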

2. Continuous Integration and Deployment (CI/CD): AI streamlines CI/CD pipelines by automating critical tasks in the deployment process. It can:

Validate data consistency and schema changes

Automatically test and promote new models

Reduce deployment risks through anomaly detection

By using AI, teams can achieve faster, safer, and more consistent model deployments at scale.
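A minimal promotion gate for a CI/CD pipeline might check an incoming validation batch against the expected schema and only promote a candidate model that does not regress against production. The schema, metric values, and function names below are hypothetical, and the check is plain rule-based validation rather than learned anomaly detection; it is a sketch of the gating idea, not a feature of any specific CI/CD tool.

```python
# Hypothetical expected schema for one model's input features.
EXPECTED_SCHEMA = {"age": int, "income": float, "region": str}


def schema_errors(rows, schema):
    """List human-readable schema violations found in a batch of dict rows."""
    errors = []
    for i, row in enumerate(rows):
        missing = schema.keys() - row.keys()
        extra = row.keys() - schema.keys()
        if missing:
            errors.append(f"row {i}: missing columns {sorted(missing)}")
        if extra:
            errors.append(f"row {i}: unexpected columns {sorted(extra)}")
        for col, expected_type in schema.items():
            if col in row and not isinstance(row[col], expected_type):
                errors.append(f"row {i}: {col} is {type(row[col]).__name__}, "
                              f"expected {expected_type.__name__}")
    return errors


def should_promote(candidate_metric, production_metric, batch, min_gain=0.0):
    """Promote only if the batch passes schema checks and the candidate does not regress."""
    if schema_errors(batch, EXPECTED_SCHEMA):
        return False
    return candidate_metric >= production_metric + min_gain


if __name__ == "__main__":
    batch = [{"age": 34, "income": 52000.0, "region": "EU"},
             {"age": "41", "income": 61000.0, "region": "US"}]  # second row has a bad type
    print(schema_errors(batch, EXPECTED_SCHEMA))
    print(should_promote(0.84, 0.82, batch))
```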

3. Model Monitoring and Management: Once a model is live, its job isn’t done—constant monitoring is essential. AI systems help by:

Detecting performance drift, data shifts, or anomalies

Sending alerts or triggering automated retraining when issues arise

Ensuring models remain accurate and reliable over time

This proactive approach keeps models aligned with real-world conditions, even as data changes.
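Drift detection is often done by comparing the distribution of a feature in live traffic with its distribution in the training data. One widely used statistic for this is the Population Stability Index (PSI); the sketch below computes it with equal-width bins over the reference sample's range. The bin count, the small floor used to avoid log(0), and the common rule of thumb that a PSI above 0.25 signals significant drift are conventions, not fixed standards.

```python
import math


def psi(reference, live, bins=10, eps=1e-4):
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def shares(sample):
        counts = [0] * bins
        for v in sample:
            # Values outside the reference range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(sample), eps) for c in counts]  # floor avoids log(0)

    ref, cur = shares(reference), shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


if __name__ == "__main__":
    reference = [i / 100 for i in range(1000)]  # training-time feature values
    live = [v + 5 for v in reference]           # hypothetical shifted live traffic
    print(round(psi(reference, reference), 4))  # identical samples give zero
    print(psi(reference, live) > 0.25)          # a large shift exceeds the rule-of-thumb threshold
```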

4. Scaling and Performance Optimization: As ML workloads grow, resource management becomes critical. AI helps optimize performance by:

Dynamically allocating compute resources based on demand

Predicting system load and scaling infrastructure accordingly

Identifying bottlenecks and inefficiencies in real-time

These optimizations lead to cost savings and ensure high availability in large-scale ML deployments.
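The "predict load, then scale" idea can be sketched as a forecast followed by a capacity calculation. This is a hypothetical illustration: it uses a naive linear-trend forecast, a fixed per-replica capacity, and 20% headroom, all of which are assumptions. Production autoscalers such as the Kubernetes Horizontal Pod Autoscaler use their own algorithms and signals.

```python
import math


def forecast_next(load_history):
    """Naive linear-trend forecast: last value plus the average step across the window."""
    if len(load_history) < 2:
        return float(load_history[-1])
    avg_step = (load_history[-1] - load_history[0]) / (len(load_history) - 1)
    return max(load_history[-1] + avg_step, 0.0)


def replicas_needed(load_history, capacity_per_replica, headroom=0.2,
                    min_replicas=1, max_replicas=50):
    """Replicas required to serve the forecast load plus headroom, within bounds."""
    predicted = forecast_next(load_history)
    target = math.ceil(predicted * (1 + headroom) / capacity_per_replica)
    return min(max(target, min_replicas), max_replicas)


if __name__ == "__main__":
    # Hypothetical requests/second over the last four intervals; 50 req/s per replica.
    print(replicas_needed([100, 120, 140, 160], capacity_per_replica=50))  # prints 5
```

Scaling on the forecast rather than the current reading gives new replicas time to start before the load arrives; the min/max bounds keep a bad forecast from scaling to zero or running away.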

Benefits of Integrating AI/ML in MLOps

Bringing AI and ML into MLOps doesn’t just refine processes—it transforms them. By embedding intelligence and automation into every stage of the ML lifecycle, organizations can unlock significant operational and strategic advantages. Here are the key benefits:

1. Increased Efficiency and Faster Deployment Cycles: AI-driven automation accelerates everything from data preprocessing to model deployment. With fewer manual steps and smarter workflows, teams can build, test, and deploy models much faster, cutting down time-to-market and allowing quicker experimentation.

2. Enhanced Accuracy in Predictive Models: With ML algorithms optimizing model selection and tuning, the chances of deploying high-performing models increase. AI also ensures that models are continuously evaluated and updated, improving decision-making with more accurate, real-time predictions.

3. Reduced Human Intervention and Manual Errors: Automating repetitive tasks minimizes the risk of human errors, streamlines collaboration, and frees up data scientists and engineers to focus on higher-level strategy and innovation. This leads to more consistent outcomes and reduced operational overhead.

4. Continuous Improvement Through Feedback Loops: AI-powered MLOps systems enable continuous learning. By monitoring model performance and feeding insights back into training pipelines, the system evolves automatically, adjusting to new data and changing environments without manual retraining.

Integrating AI/ML into MLOps doesn’t just make operations smarter—it builds a foundation for scalable, self-improving systems that can keep pace with the demands of modern machine learning.

Future of AI/ML in MLOps

The future of MLOps is poised to become even more intelligent and autonomous, thanks to rapid advancements in AI and ML technologies. Trends like AutoML, reinforcement learning, and explainable AI (XAI) are already reshaping how machine learning workflows are built and managed. AutoML is streamlining the entire modeling process—from data preprocessing to model deployment—making it more accessible and efficient. Reinforcement learning is being explored for dynamic resource optimization and decision-making within pipelines, while explainable AI is becoming essential to ensure transparency, fairness, and trust in automated systems.

Looking ahead, AI/ML will drive the development of fully autonomous machine learning pipelines—systems capable of learning from performance metrics, retraining themselves, and adapting to new data with minimal human input. These self-sustaining workflows will not only improve speed and scalability but also ensure long-term model reliability in real-world environments. As organizations increasingly rely on AI for critical decisions, MLOps will evolve into a more strategic, intelligent framework—one that blends automation, adaptability, and accountability to meet the growing demands of AI-driven enterprises.

As AI and ML continue to evolve, their integration into MLOps is proving to be a game-changer, enabling smarter automation, faster deployments, and more resilient model management. From streamlining repetitive tasks to powering predictive monitoring and self-healing systems, AI/ML is transforming MLOps into a dynamic, intelligent backbone for machine learning at scale. Looking ahead, innovations like AutoML and explainable AI will further refine how we build, deploy, and maintain ML models. For organizations aiming to stay competitive in a data-driven world, embracing AI-powered MLOps isn’t just an option—it’s a necessity. By investing in this synergy today, businesses can future-proof their ML operations and unlock faster, smarter, and more reliable outcomes tomorrow.

#AI and ML#future of AI and ML#What is MLOps#Differences Between Traditional Software DevOps and MLOps#Benefits of Integrating AI/ML in MLOps

Leading Machine Learning Company – Transforming Businesses with Chirpn

Looking to leverage machine learning for smarter business solutions? Chirpn, a top machine learning company, provides innovative, AI-driven solutions that enhance automation, optimize decision-making, and improve operational efficiency. Our cutting-edge machine learning technologies help businesses analyze data, predict trends, and drive intelligent automation. With scalable and customized ML solutions, Chirpn empowers organizations to innovate, stay competitive, and achieve digital transformation. Whether for predictive analytics, NLP, or AI-powered automation, we deliver future-ready solutions tailored to your needs.

#ai software development companies#top machine learning companies#ai development companies#ai ml development company#ai product development#crm implementation services#machine learning companies#what is api integration

6G: When Reality embraces Imagination, Present coexists with Future

What is 6G?

6G is the next generation of mobile networks, following 5G. Rather than stopping at a general definition, let’s try to understand what it really is and why we should care. To start with, imagine a world where we can download anything in less than a second, from a full-length Grand Slam final to a 4K movie: a boon for tennis lovers and cinephiles alike. That is only one part of it; there is more to 6G than meets the eye. It is about laying the groundwork for the Internet of Everything (IoE), where the network becomes a seamless fabric of interconnected devices that communicate not only with us but also with each other. It is a world where we can interact with holograms and explore virtual reality in far finer detail, with a digitally immersive experience like never before. Delving deeper, we find a world where we can control devices and machines with our thoughts, surrounded by machines that are both interconnected and intelligent. This is not science fiction; it is the future 6G is beckoning mankind toward.
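The "4K movie in under a second" claim comes down to simple arithmetic: transfer time is file size in bits divided by link rate. The sketch assumes a 50 GB 4K movie, the 20 Gbps peak downlink target set for 5G, and a 1 Tbps peak rate, a figure commonly cited in 6G research but not a finalized standard. It ignores protocol overhead and real-world radio conditions, which keep actual rates well below peak.

```python
def download_seconds(size_gigabytes: float, rate_gbps: float) -> float:
    """Idealized transfer time: gigabytes -> gigabits (x8), divided by link rate in Gbps."""
    return size_gigabytes * 8 / rate_gbps


if __name__ == "__main__":
    movie_gb = 50  # assumed size of a 4K movie
    print(download_seconds(movie_gb, 20))    # 5G peak target (20 Gbps): prints 20.0
    print(download_seconds(movie_gb, 1000))  # assumed 6G peak (1 Tbps): prints 0.4
```

Even at the 5G peak rate the download takes about 20 seconds; at 1 Tbps it drops to 0.4 seconds, which is what makes the sub-second claim plausible under ideal conditions.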

Features of 6G:

A significant share of 6G research centers on transmitting data at very high frequencies, in the largely unused sub-terahertz and terahertz bands, and on using that spectrum more efficiently. In addition, 6G may let devices act as relays that amplify one another's signals, so devices would extend network coverage as well as consume it. Moreover, 6G is expected to be the backbone of future digital economies, enabling new forms of communication such as three-dimensional holographic communication, high-precision manufacturing, and advanced wearable-technology networks. This is the closest answer to the burning question of why we need 6G when 5G is already a huge improvement over its predecessors in data-transfer speed, which is often what users care about most.

Advantages of 6G Technology:

Ultra-Low Latency: 6G targets latency below 100 microseconds, well under the 1 millisecond target set for 5G, which would make Augmented Reality (AR) and Virtual Reality (VR) far more responsive.

Energy Harvesting: Aims to let devices harvest energy from vibration, light, and temperature gradients, which could eliminate battery charging and ease deployment constraints.

Advanced AI/ML Integration: Improves the existing self-learning and self-healing capabilities of networks through AI-native air interfaces.

Expanded IoT Applications: Expands IoT use cases across logistics, agriculture, environmental monitoring, telehealth, and more.

Enhanced Security: Uses advanced encryption technologies and AI-based security measures to keep data safer than before.
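A latency budget also limits how far away the serving equipment can physically be. The sketch below computes the farthest a server could be if the entire budget were spent on signal propagation, treating the budget as a round trip. It assumes signals travel at the speed of light in vacuum (`velocity_factor=1.0`) or at about two-thirds of it in optical fibre (0.67 is an approximate, assumed value). It ignores processing and queuing delays, which in practice consume much of the budget.

```python
SPEED_OF_LIGHT_M_S = 299_792_458  # exact, by definition of the metre

def max_server_distance_km(round_trip_s: float, velocity_factor: float = 1.0) -> float:
    """Farthest server reachable if the whole round-trip budget is propagation delay."""
    one_way_s = round_trip_s / 2
    return SPEED_OF_LIGHT_M_S * velocity_factor * one_way_s / 1000

print(f"1 ms budget:   {max_server_distance_km(1e-3):.1f} km (vacuum)")
print(f"100 µs budget: {max_server_distance_km(100e-6):.1f} km (vacuum)")
print(f"100 µs budget: {max_server_distance_km(100e-6, 0.67):.1f} km (fibre, assumed 0.67c)")
```

Even in this idealized case, a 100 µs round trip keeps the server within roughly 15 km. This helps explain why the most latency-sensitive use cases tend to rely on edge computing.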

When will 6G come out?

Now that we know what 6G could do and how it may shape our lives, it is tempting to step into a future that promises enormous opportunities. To get there, 3GPP and its partners ARIB, ATIS, CCSA, ETSI, TSDSI, TTA, and TTC are uniting to forge the path to 6G. With Release 21 expected to mark the dawn of 6G by 2028, this global collaboration aims to revolutionize connectivity, merge the digital and physical realms, and usher in a new era of innovation and inclusivity. Qualcomm has floated a 6G development plan with 2030 as the projected rollout date, echoing the deployment timeframe in Ericsson's 6G messaging. Coverage may depend on your geographic location. As of this writing, China is the only country to have launched a 6G test satellite.

Disadvantages of 6G Technology:

Because 6G is expected to use a cell-less architecture built on multi-connectivity, the challenge is to design a new architecture in which User Equipment (UE) connects to the Radio Access Network (RAN) as a whole rather than to a single cell.

Terahertz (THz) waves, which 6G aims to use, are very sensitive to blockage (shadowing) and suffer high free-space path loss. They therefore call for ultra-large-scale antenna arrays, which are a major engineering challenge.

The drawbacks of Visible Light Communication (VLC), such as its need for line of sight, must also be taken into account, because 6G is expected to use visible-light frequencies for part of its communications.

There is still a lack of uniform standards and protocols across the telecom industry, since 6G is still in the planning stage.

6G's expanded capabilities increase its exposure to cyber threats, which calls for stronger security across interconnected, intelligent networks.

Designing network and terminal circuitry for low energy consumption remains an open problem.
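The free-space path loss (Friis) formula, FSPL = 20·log10(4πdf/c), helps explain why terahertz links need very large antenna arrays. Between isotropic antennas, this loss grows by 20 dB for every tenfold increase in frequency. The sketch compares a 3.5 GHz mid-band carrier with an illustrative 300 GHz carrier. The frequencies and the 100 m distance are assumptions, and the formula leaves out the molecular absorption and blockage losses that THz links also suffer. An antenna of fixed physical area gains directivity in proportion to the square of frequency, so large arrays can win back much of this loss. That is the motivation for ultra-large-scale antennas.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458

def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss between isotropic antennas, in dB (Friis formula)."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT_M_S)

d = 100.0  # assumed link distance in metres
for label, f in [("3.5 GHz", 3.5e9), ("300 GHz", 300e9)]:
    print(f"{label}: {fspl_db(d, f):.1f} dB")
print(f"extra loss at 300 GHz: {fspl_db(d, 300e9) - fspl_db(d, 3.5e9):.1f} dB")
```

Over the same distance, the 300 GHz link loses about 38.7 dB more than the 3.5 GHz link (20·log10(300/3.5)). That is roughly a 7,000-fold drop in received power before any antenna gain is applied.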

Mastering the Challenges: Pioneering Solutions for 6G's Future:

In addressing the complexities of 6G technology, the industry is embarking on a transformative journey. The shift to a cell-less architecture heralds a new era of seamless, multi-connectivity, empowering devices to interact with the Radio Access Network (RAN) directly. Specialists are tackling the sensitivities of THz waves and VLC by engineering groundbreaking ultra-large-scale antennas and sophisticated modulation techniques that promise resilience and efficiency. Global collaboration is pivotal, as experts across borders unify to forge comprehensive standards and protocols, ensuring 6G's interoperability and security. The quest for enhanced cybersecurity and energy efficiency in 6G is inspiring ingenious solutions, positioning this next-gen technology as the cornerstone of a sustainable, interconnected future.

Conclusion:

6G is a tantalizing prospect for wireless technology and beyond, and we cannot simply dismiss it as the successor to 5G: it is a revolution that could change the course of human history in ways we cannot yet fathom. Global 6G market revenue is projected to reach around USD 40.5 billion by 2032. It remains to be seen how prepared we will be when 6G comes knocking at our doors, especially given the compatibility issues that still need to be addressed. A key metric to monitor will be the retail price per GB of data relative to the network cost per GB, since this margin is crucial to telecom operators' profitability. The ratio may start favourably high but tends to decrease over time. To sustain revenue, operators must explore unlicensed bands and areas such as precision healthcare, smart agriculture, and digital twin technologies, growing average revenue per user (ARPU) and avoiding financial strain. Unlicensed frequency bands, however, come with limitations of their own, such as higher congestion and reduced security.

As always, some parties will play foul, so a set of protocols and new regulations needs to be agreed upon across verticals to protect privacy. Still, suppressing all excitement about what 6G could achieve in the name of "perspective" would be joyless. Equally, worrying about 6G for no reason other than extrapolating an unknowable future is meaningless. Time stubbornly marches on, and the future will likely be upon us sooner than we would prefer. For now, all we can do is watch how the drivers of 6G navigate the ever-evolving technological landscape.

To know more visit: Covalensedigital


Join us at MWC 2025


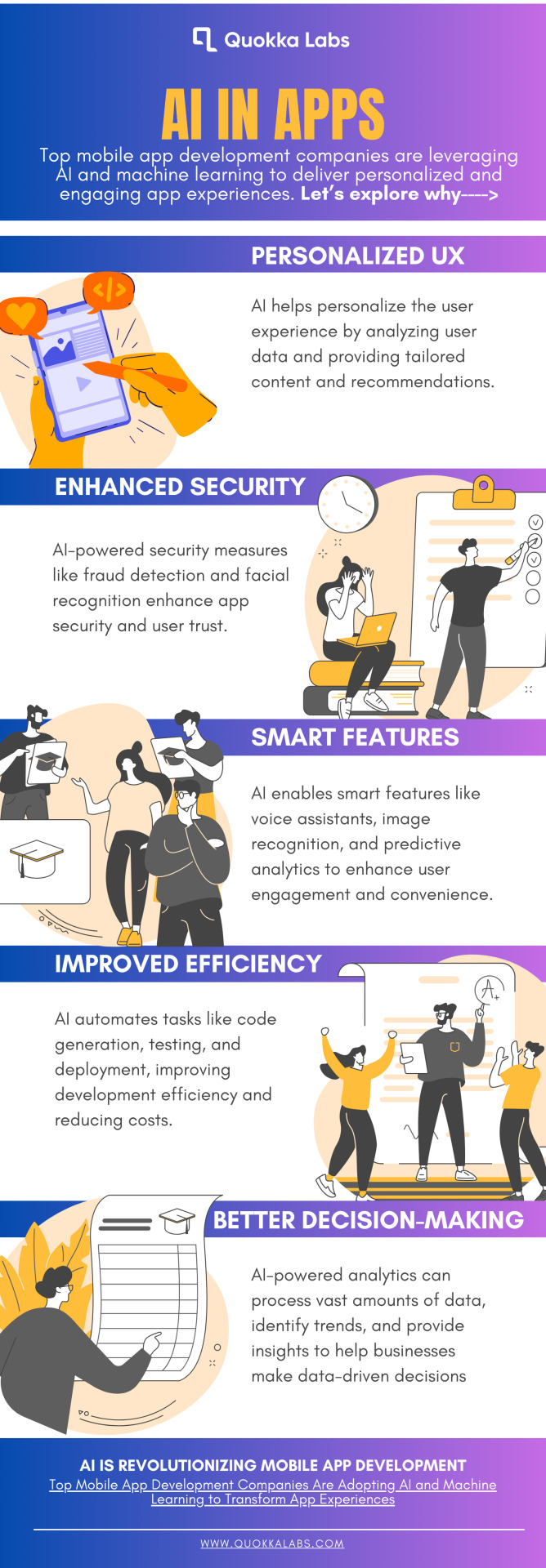

Why Are Top Mobile App Development Companies Adopting AI and Machine Learning to Transform App Experiences? Let's explore how these two technologies are opening up new possibilities in mobile app development.

Top Mobile App Development Companies


Whitepaper: Accelerating Digital Transformation with Low-Code AI/ML Integration

Executive Summary

This whitepaper explores how Argos Labs' innovative low-code platform, Argos Low-Code Python, is revolutionizing digital transformation by seamlessly integrating AI and ML capabilities. We highlight how our unique approach combines the simplicity of low-code development with the power of Python-based AI/ML solutions, enabling organizations to rapidly innovate and adapt in today's fast-paced digital landscape. The paper outlines the key features of our platform, its benefits, and provides a step-by-step guide for leveraging ARGOS to accelerate digital initiatives.

Introduction

Problem Statement

Organizations face significant challenges in implementing AI and ML solutions due to the complexity of these technologies and the scarcity of skilled professionals. Traditional development approaches often result in lengthy project timelines and high costs, hindering digital transformation efforts.

Background

Low-code platforms have emerged as a potential solution to accelerate application development. However, many lack the flexibility to incorporate advanced AI and ML functionalities, creating a gap in the market for a solution that combines low-code simplicity with AI/ML capabilities.

Purpose and Objectives

This whitepaper aims to demonstrate how ARGOS Low-Code Python bridges this gap, offering a comprehensive solution for organizations looking to accelerate their digital transformation initiatives. We will explore the platform's features, benefits, and provide a step-by-step guide for implementation.

Step-by-Step Analysis/Solution

Step 1: Leveraging ARGOS Low-Code Python for AI/ML Integration

Argos Low-Code Python provides a visual development environment that allows users to create applications incorporating AI and ML functionalities without extensive coding.

Key considerations and challenges

1. Ensuring the platform is accessible to users with varying levels of technical expertise

2. Maintaining flexibility for advanced users who require custom solutions

Expected outcomes and benefits

1. Rapid development of AI/ML-powered applications

2. Reduced reliance on scarce technical talent

3. Improved collaboration between business and IT teams

Step 2: Customization through POT SDK

The Python-to-Operations Toolset (POT) SDK enables users to create custom low-code building blocks from any Python solution, ensuring maximum flexibility and extensibility.

Key considerations and challenges

1. Providing comprehensive documentation and support for SDK users

2. Ensuring compatibility with a wide range of Python libraries and frameworks

Expected outcomes and benefits

1. Full customization of the Low-code Python platform

2. Ability to integrate specialized AI/ML solutions

3. Increased platform adoption among advanced users
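The POT SDK's actual interface is not described here. What follows is only a hypothetical Python sketch of the general idea of turning an existing Python function into a reusable low-code building block. The function is registered under a display name. Its parameters are read from its signature, so a visual editor could render them as input fields. It is later invoked with values the user supplies. The names `BLOCKS`, `building_block`, and `run_block` are invented for illustration and are not part of any Argos Labs API.

```python
import inspect
from typing import Any, Callable, Dict

# Hypothetical registry of building blocks, keyed by display name.
BLOCKS: Dict[str, Dict[str, Any]] = {}

def building_block(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Register a plain Python function as a named block with introspected inputs."""
    def register(func: Callable[..., Any]) -> Callable[..., Any]:
        params = inspect.signature(func).parameters
        BLOCKS[name] = {
            "func": func,
            # Input names and defaults that a visual editor could turn into form fields.
            "inputs": {
                p.name: (None if p.default is inspect.Parameter.empty else p.default)
                for p in params.values()
            },
        }
        return func
    return register

def run_block(name: str, **inputs: Any) -> Any:
    """Invoke a registered block with user-supplied input values."""
    return BLOCKS[name]["func"](**inputs)

@building_block("Sentiment score")
def sentiment_score(text: str, positive_words: str = "good,great,excellent") -> int:
    """Toy scorer that counts positive keywords (a stand-in for a real ML model)."""
    words = set(positive_words.split(","))
    return sum(1 for w in text.lower().split() if w.strip(".,!") in words)

print(BLOCKS["Sentiment score"]["inputs"])
print(run_block("Sentiment score", text="Great product, good support!"))
```

The point of the pattern is that the function body stays ordinary Python, so any library the function already uses comes along unchanged.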

Step 3: Implementing Cross-Platform Automation with ARGOS PAM

ARGOS PAM allows for the execution of business automation tasks across various platforms, including Windows, Android, Linux, and iOS.

Key considerations and challenges

1. Ensuring consistent performance across different operating systems

2. Managing security and permissions for cross-platform operations

Expected outcomes and benefits

1. Seamless integration of AI/ML solutions into existing business processes

2. Improved efficiency through automation of repetitive tasks

3. Enhanced scalability of digital transformation initiatives

Step 4: Streamlining Development with ARGOS STU

ARGOS STU (Scenario Studio) provides a development toolkit for automating business process scenarios, enabling rapid prototyping and iteration.

Key considerations and challenges

1. Designing an intuitive interface for creating complex business scenarios

2. Balancing simplicity with the need for advanced functionality

Expected outcomes and benefits

1. Faster development of end-to-end business process automation

2. Improved collaboration between business analysts and developers

3. Reduced time-to-market for new digital initiatives

Step 5: Centralized Management with ARGOS Supervisor

ARGOS Supervisor offers a comprehensive bot management dashboard for scheduling, controlling, and monitoring the execution of bots.

Key considerations and challenges

1. Ensuring scalability to handle enterprise-level bot deployments

2. Providing robust analytics and reporting capabilities

Expected outcomes and benefits

1. Improved visibility and control over automated processes

2. Enhanced operational efficiency through centralized management

3. Data-driven decision making through comprehensive analytics
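The following is a hypothetical illustration of the kind of analytics a bot-management dashboard might compute. It is not ARGOS Supervisor's actual data model. The sketch rolls bot run records up into per-bot run counts, success rates, and average durations. The `BotRun` fields and the sample data are invented for the example.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class BotRun:
    bot: str          # hypothetical bot identifier
    succeeded: bool   # whether the run finished without error
    seconds: float    # wall-clock duration of the run

def summarize(runs: List[BotRun]) -> Dict[str, Dict[str, float]]:
    """Per-bot run count, success rate, and mean duration."""
    grouped: Dict[str, List[BotRun]] = defaultdict(list)
    for run in runs:
        grouped[run.bot].append(run)
    return {
        bot: {
            "runs": len(items),
            "success_rate": sum(r.succeeded for r in items) / len(items),
            "avg_seconds": sum(r.seconds for r in items) / len(items),
        }
        for bot, items in grouped.items()
    }

sample = [
    BotRun("invoice-bot", True, 42.0),
    BotRun("invoice-bot", False, 10.0),
    BotRun("report-bot", True, 120.0),
]
for bot, stats in summarize(sample).items():
    print(bot, stats)
```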

Conclusion

The ARGOS Low-Code Python platform and its ecosystem of tools provide a powerful solution for organizations looking to accelerate their digital transformation initiatives. By combining low-code simplicity with advanced AI/ML capabilities, ARGOS enables rapid innovation, reduces reliance on scarce technical talent, and improves collaboration between business and IT teams.

Key takeaways

ARGOS Low-Code Python bridges the gap between low-code development and advanced AI/ML integration.

The POT SDK enables full customization and flexibility for specialized solutions.

Cross-platform automation capabilities ensure seamless integration across diverse IT environments.

Rapid prototyping and development tools accelerate time-to-market for digital initiatives.

Centralized management and monitoring improve operational efficiency and decision-making.

The ARGOS ecosystem offers a comprehensive solution for organizations seeking to harness the power of AI and ML in their digital transformation journey. By leveraging our platform, businesses can overcome traditional barriers to innovation and gain a competitive edge in today's rapidly evolving digital landscape.

We invite organizations to explore the potential of ARGOS Low-Code Python and its associated tools. Take the first step towards accelerating your digital transformation by:

Requesting a demo of the ARGOS platform to see its capabilities firsthand.

Downloading a trial version of ARGOS Low-Code Python to experience its ease of use and flexibility.

Contacting our team of experts to discuss how ARGOS can address your specific digital transformation challenges.

By partnering with Argos Labs, you'll gain access to cutting-edge technology and expert support, positioning your organization at the forefront of digital innovation.


About Argos Labs:

Argos Labs is a leading provider of low-code AI/ML integration platforms, serving over 500 users globally. We empower businesses to harness the transformative power of AI without the complexities of traditional software development. Our solutions enable rapid prototyping, efficient deployment, and seamless integration of cutting-edge AI technologies, empowering organizations to achieve significant improvements in operational efficiency, decision-making, and overall business performance.


Empowering Modern Retail: The Evolution of Digital Commerce Platforms

Many Digital Commerce Platform providers are increasingly adopting a cloud-native approach to foster innovation in the e-commerce sector. Cloud-native platforms empower retailers to launch new digital commerce solutions and enhance speed to market. Consequently, cloud-native deployment is becoming a major trend in application development and deployment for organizations. This shift aims to…


#ai#business#Digital Commerce Platform#digital e commerce platforms#E-commerce Solutions#ecommerce#ecommerce web marketing#ecommerce website marketing#Information Technology#iot#MarTech Integration#ML#Omnichannel Commerce#Retail


How AI and ML Are the Next Digital Frontier for the Education System

In the rapidly evolving landscape of education, technological innovation is driving remarkable change. Among these innovations, Artificial Intelligence (AI) and Machine Learning (ML) stand out as catalysts for transforming traditional teaching and learning techniques.

Personalized Learning Experience:

AI and ML algorithms have the remarkable capability to analyze vast amounts of student data, allowing educators to customize learning experiences to individual needs. By understanding each student's strengths, weaknesses, and learning styles, teachers can deliver personalized instruction that cultivates deeper engagement and enhances academic results.

Adaptive Learning Platforms:

Traditional one-size-fits-all approaches to education are giving way to AI-powered adaptive learning platforms. These platforms continuously assess student performance and adapt learning materials in real time, ensuring that each student receives personalized support and guidance. This adaptive approach not only improves learning outcomes but also promotes a more inclusive and equitable learning environment.
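One simple technique an adaptive platform can use is an Elo-style rating. Each student has an ability estimate and each question has a difficulty estimate, and both are nudged after every answer. The sketch below is a minimal Python illustration under assumed values: a logistic success model, a step size `k` of 0.4, and a toy question bank. It is not how any particular platform works. It serves the question whose difficulty is closest to the student's current ability, where the predicted chance of success is about 50%.

```python
import math
from typing import Dict, Tuple

def p_correct(ability: float, difficulty: float) -> float:
    """Predicted probability of a correct answer (logistic model)."""
    return 1 / (1 + math.exp(-(ability - difficulty)))

def update(ability: float, difficulty: float, correct: bool,
           k: float = 0.4) -> Tuple[float, float]:
    """Elo-style update: shift both estimates by the prediction error."""
    error = (1.0 if correct else 0.0) - p_correct(ability, difficulty)
    return ability + k * error, difficulty - k * error

def next_question(ability: float, bank: Dict[str, float]) -> str:
    """Serve the question whose difficulty is closest to the current ability."""
    return min(bank, key=lambda q: abs(bank[q] - ability))

# Toy question bank: difficulties on the same scale as ability (assumed values).
bank = {"fractions-1": -1.0, "fractions-2": 0.0, "fractions-3": 0.5}
ability = 0.0
q = next_question(ability, bank)
ability, bank[q] = update(ability, bank[q], correct=True)
print(q, round(ability, 2), next_question(ability, bank))
```

After one correct answer on `fractions-2`, the ability estimate rises from 0.0 to 0.2, and the next question served is the harder `fractions-3`.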

Smart Content Creation and Curation:

AI and ML enable educators to create and curate dynamic learning materials tailored to the diverse needs of their students. Through intelligent content curation tools, teachers can efficiently sift through vast stores of resources to find the most relevant and engaging content for their lessons, enhancing the overall quality of education.

Efficiency in Administrative Processes:

AI and ML are also revolutionizing administrative tasks within educational institutions. From automating routine administrative processes to optimizing resource allocation and scheduling, these technologies streamline operations, allowing educators to focus more time and energy on student-centered activities.

Ethical Considerations and Challenges:

While the potential benefits of AI and ML integration in education are immense, it is essential to address the ethical considerations and challenges. Issues such as data protection, algorithmic bias, and digital equity require careful attention to ensure that these technologies are implemented responsibly and equitably.

In conclusion, AI and ML are poised to become the next digital frontier for education, offering exciting opportunities for personalized learning, adaptive instruction, and administrative efficiency. By embracing these technologies with foresight and empathy, educators can unlock the full potential of education and empower learners to thrive in the digital age.


Capture, edit and share content in real-time through Grabyo and publish directly to Veritone’s AI-powered Digital Media Hub for onward management and monetization Veritone, Inc., a leader in designing human-centered AI solutions, and Grabyo, a leading cloud video platform for live broadcasting, live clipping and distribution, announced an integration that creates a connected workflow to […]

#AI/ML#Content#Technology#AI-driven content management#AI-Powered Live Clipping#Asset Management#Audience Engagement#cloud video platform#Distribution#Grabyo#integration#Live broadcasting#live clipping#media landscape#News#Partner#Revenue Streams#Veritone

Agents as a Service: Is It the Next Big Thing in AI Product Development?

The AI revolution is no longer just about automation, it’s about authenticity and autonomy. Businesses have long relied on AI-driven tools to optimize workflows, but these tools have traditionally operated within predefined boundaries. Now, a new model is emerging: Agents as a Service (AaaS).

AaaS introduces autonomous AI agents that learn from data and continuously adapt in real time. Unlike traditional automation solutions that rely on rigid workflows, these AI agents operate dynamically, making intelligent choices based on evolving business contexts.

The implications of this shift are profound. Companies that integrate AaaS will not just streamline processes—they will redefine how they operate, scale, and compete. From decision-making to customer engagement, and risk management to AI product development, AaaS is poised to become a game-changer for enterprises looking to future-proof their operations.

This article explores AaaS from a thought leadership perspective—unpacking its transformative potential, key use cases, and the challenges businesses need to address to maximize its benefits.

PaaS, SaaS, and AaaS: What Is AaaS?

At its core, AaaS is a cloud-based model that delivers AI-powered agents capable of executing complex business functions autonomously. These agents are digital employees, learning, adapting, and making independent decisions based on real-time data.

Unlike static automation, AaaS agents:

Proactively analyze data and take action without waiting for human input.

Collaborate across departments to optimize workflows.

Personalize interactions with customers and employees.

Predict and mitigate risks before they impact operations.
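At a high level, an autonomous agent runs a repeated observe, decide, act cycle. The Python sketch below shows that loop with a deliberately simple rule-based policy for a hypothetical inventory agent. The `InventoryAgent` class, its thresholds, and the simulated demand are assumptions for illustration, and the sketch assumes orders arrive instantly. A production agent would replace the fixed rule with learned models, integrations with real systems, and human-approval checkpoints.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class InventoryAgent:
    reorder_level: int = 20      # assumed stock level that triggers an order
    order_quantity: int = 50     # assumed fixed order size
    actions: List[str] = field(default_factory=list)

    def observe(self, stock: int) -> int:
        """Read the current state (a real agent would query an inventory system)."""
        return stock

    def decide(self, stock: int) -> int:
        """Policy: order a fixed batch once stock falls to the reorder level."""
        return self.order_quantity if stock <= self.reorder_level else 0

    def act(self, stock: int, quantity: int) -> int:
        """Apply the decision and log it so humans can audit what the agent did."""
        if quantity:
            self.actions.append(f"ordered {quantity} at stock={stock}")
        return stock + quantity

agent = InventoryAgent()
stock = 60
for demand in [15, 20, 10, 25]:          # simulated daily demand
    stock = agent.observe(stock - demand)
    stock = agent.act(stock, agent.decide(stock))
print(stock, agent.actions)
```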

The result? A more responsive, agile, and intelligent business ecosystem that doesn’t just run more efficiently but operates strategically and autonomously.

Why is AaaS the next logical step after SaaS?

AI Agents as Decision-Makers

AI-powered agents are no longer just task executors—they are evolving into decision-makers that influence strategic planning.

For example, financial AI agents analyze market trends, economic indicators, and investment risks to guide capital allocation decisions. In supply chain management, autonomous agents continuously adjust inventory levels, vendor contracts, and delivery schedules to optimize logistics dynamically.

Case Study: AI in Financial Forecasting

A McKinsey study found that AI-driven financial analysis reduces forecasting errors by 20–50%, allowing businesses to make more data-driven, proactive decisions.

This capability extends beyond finance. AI-driven business intelligence systems in marketing, HR, and operations are helping leaders make more precise, informed decisions with minimal human intervention.

Reducing Operational Costs & Scaling Efficiently

AaaS significantly reduces overhead costs by automating both routine and cognitive-heavy tasks, leading to:

30–50% lower customer service costs through AI-driven virtual assistants.

Faster, more accurate HR processes, reducing hiring times by 70%.

Automated legal and compliance monitoring, minimizing the need for large in-house teams.

By eliminating inefficiencies, AI agents allow businesses to scale operations without proportionally increasing costs.

AI-Powered Hyper-Personalization in Customer Engagement

Customers today expect seamless, personalized experiences. AI agents power real-time hyper-personalization by analyzing customer behavior, preferences, and interactions to:

Offer personalized product recommendations in e-commerce.

Deliver AI-generated marketing campaigns tailored to specific customer segments.

Provide instant, intelligent customer support with contextual understanding.
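A common building block for adaptive recommendations is the multi-armed bandit. The epsilon-greedy sketch below is one simple technique among many. The offer names, the 10% exploration rate, and the simulated click-through rates are all assumptions. The bandit mostly shows the offer with the best observed click-through rate, but it occasionally tries the alternatives, so it keeps adapting as customer behaviour changes.

```python
import random
from typing import Dict, Iterable

class EpsilonGreedy:
    def __init__(self, offers: Iterable[str], epsilon: float = 0.1, seed: int = 0):
        offers = list(offers)
        self.epsilon = epsilon
        self.shows: Dict[str, int] = {o: 0 for o in offers}
        self.clicks: Dict[str, int] = {o: 0 for o in offers}
        self.rng = random.Random(seed)

    def rate(self, offer: str) -> float:
        """Observed click-through rate (0 for offers not yet shown)."""
        return self.clicks[offer] / self.shows[offer] if self.shows[offer] else 0.0

    def choose(self) -> str:
        """Explore with probability epsilon, otherwise exploit the best offer so far."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.shows))
        return max(self.shows, key=self.rate)

    def record(self, offer: str, clicked: bool) -> None:
        """Update the statistics after showing an offer."""
        self.shows[offer] += 1
        self.clicks[offer] += int(clicked)

true_ctr = {"bundle": 0.05, "discount": 0.12, "free-shipping": 0.08}  # simulated
bandit = EpsilonGreedy(true_ctr)
env = random.Random(1)
for _ in range(5000):
    offer = bandit.choose()
    bandit.record(offer, env.random() < true_ctr[offer])
print(max(bandit.shows, key=bandit.shows.get), bandit.shows)
```

With these simulated rates the bandit should end up showing "discount" most often. The exact counts depend on the random seeds.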

Example: AI in Retail & E-Commerce

According to Accenture, AI-powered recommendation engines boost retail sales by up to 30% by delivering highly relevant, personalized offers.

AaaS extends this capability beyond e-commerce, enabling SaaS companies, B2B service providers, and enterprise solutions to refine customer engagement strategies and drive loyalty.

AI Agents in Risk Management & Compliance

Businesses face growing regulatory scrutiny and cybersecurity threats. AI agents proactively identify risks, monitor compliance requirements, and prevent security breaches before they escalate.

Financial services use AI agents to detect fraudulent transactions within milliseconds.

Healthcare organizations deploy AI for HIPAA compliance monitoring and patient risk assessments.

Cybersecurity teams leverage AI for real-time threat detection and mitigation.
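Production fraud systems use far richer models. The core idea of flagging a transaction that deviates sharply from a customer's normal behaviour can still be sketched with a running mean and standard deviation (Welford's algorithm) and a z-score threshold. The 3-standard-deviation threshold, the 5-transaction minimum history, and the sample amounts are assumptions for illustration. Each check and update takes constant time, which is what makes per-transaction scoring fast. In practice, a new transaction would be scored before it is added to the profile.

```python
import math
from dataclasses import dataclass

@dataclass
class SpendingProfile:
    """Running statistics of one customer's transaction amounts (Welford's algorithm)."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0   # sum of squared deviations from the running mean

    def update(self, amount: float) -> None:
        self.n += 1
        delta = amount - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (amount - self.mean)

    def std(self) -> float:
        """Sample standard deviation (0 until there are two observations)."""
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0

def is_suspicious(profile: SpendingProfile, amount: float,
                  threshold: float = 3.0, min_history: int = 5) -> bool:
    """Flag amounts more than `threshold` standard deviations from the customer's mean."""
    if profile.n < min_history or profile.std() == 0:
        return False  # not enough history to judge
    return abs(amount - profile.mean) / profile.std() > threshold

profile = SpendingProfile()
for amount in [42.0, 38.5, 51.0, 45.2, 40.0, 47.3]:
    profile.update(amount)
print(is_suspicious(profile, 44.0), is_suspicious(profile, 900.0))
```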

Autonomous AI Agents in Sales & Marketing

AI agents are reshaping sales and marketing by automating lead qualification, optimizing outreach strategies, and delivering AI-driven customer interactions.

AI-driven sales assistants identify high-value prospects and suggest optimal engagement strategies.

Automated chatbots enhance customer onboarding, improving conversion rates.

AI-powered sentiment analysis helps businesses tailor their messaging based on audience preferences.

A study by Forrester found that AI-driven sales enablement solutions increase deal closure rates by 15–20% while reducing the sales cycle duration.

AI in Supply Chain and Logistics

AaaS is driving unprecedented efficiency in supply chain management by making real-time data-driven adjustments.

Predictive analytics optimize inventory levels, preventing shortages and overstocking.

AI-powered route optimization reduces transportation costs.

AI agents manage vendor negotiations, streamlining procurement processes.
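A classic formula behind "optimize inventory levels" is the reorder point with safety stock. Stock is reordered when it falls to d·L + z·σ_d·√L, where:

- d is the mean daily demand.
- L is the lead time in days.
- σ_d is the standard deviation of daily demand.
- z is the service-level factor (about 1.645 for a 95% cycle service level).

This assumes independent, normally distributed daily demand and a fixed lead time, and the sample numbers are hypothetical. An AI agent would typically re-estimate d and σ_d from fresh forecasts rather than rely on fixed values.

```python
import math
from statistics import NormalDist

def reorder_point(mean_daily_demand: float, demand_std: float,
                  lead_time_days: float, service_level: float = 0.95) -> float:
    """Reorder point = expected lead-time demand + safety stock (normal-demand model)."""
    z = NormalDist().inv_cdf(service_level)          # about 1.645 for 95%
    safety_stock = z * demand_std * math.sqrt(lead_time_days)
    return mean_daily_demand * lead_time_days + safety_stock

# Hypothetical SKU: 40 units/day on average, std dev 12 units/day, 4-day lead time.
print(round(reorder_point(40, 12, 4)))
```

Here, expected lead-time demand is 160 units, and safety stock adds roughly 39 more.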

AI-Powered Workplace Automation

AI agents are also redefining workplace productivity by handling:

Project management, ensuring deadlines are met.

Employee training, delivering personalized learning experiences.

Administrative tasks, freeing up employees for strategic work.

A survey by PwC found that businesses implementing AI-driven workplace automation saw a 40% increase in employee productivity.

AaaS & AI product development- What is the deal?

For AaaS to be successful, companies must invest in robust AI product development that ensures scalability, security, and seamless integration.

Building Scalable AI Architectures

AI agents require cloud-native, high-performance computing environments that support real-time decision-making across global operations.

Prioritizing Explainable AI (XAI)

Businesses need AI models that explain their decisions transparently, especially in regulated industries like finance and healthcare. XAI helps make AI-driven recommendations trustworthy and auditable, and makes bias easier to detect and reduce.
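For a linear scoring model, one simple form of explanation is to report each feature's contribution (weight × value) to the final score. A reviewer can then see which inputs drove a decision. The sketch below assumes a hypothetical credit-risk model with invented weights and features. More complex models need dedicated techniques such as SHAP or LIME, which produce comparable per-feature attributions.

```python
from typing import Dict, List, Tuple

# Hypothetical model: weights and bias are invented for illustration.
WEIGHTS = {"debt_to_income": 2.5, "late_payments": 0.8, "years_employed": -0.3}
BIAS = -1.0

def score_with_explanation(applicant: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the risk score and per-feature contributions, largest magnitude first."""
    contributions = [(name, w * applicant[name]) for name, w in WEIGHTS.items()]
    score = BIAS + sum(c for _, c in contributions)
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return score, contributions

score, reasons = score_with_explanation(
    {"debt_to_income": 0.6, "late_payments": 3, "years_employed": 5})
print(f"score={score:.2f}")
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.2f}")
```

The contributions plus the bias add up exactly to the score, so the explanation is faithful to the model rather than approximated.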

Enhancing Human-AI Collaboration

AI agents should be designed to complement human expertise, not replace it. Employees must shift toward strategic roles while AI handles data-intensive tasks.

What challenges does AaaS adoption bring, and how can you overcome them?

Integrating AI with Legacy Systems

Many enterprises still operate on outdated IT infrastructure, making AI adoption challenging. The solution? A phased integration approach that modernizes tech stacks incrementally.

Ethical & Security Concerns

AaaS introduces new risks related to bias, security, and compliance. Businesses must implement:

Ethical AI frameworks to prevent bias in decision-making.

Robust security measures to protect data and prevent cyber threats.

Change Management & Workforce Adaptation

AI-driven business models require a culture shift. Companies must:

Provide AI literacy training for employees.

Foster a collaborative AI-human work environment to ensure smooth adoption.

The next frontier: AI-driven business autonomy

The adoption of Agents as a Service marks the beginning of a new era in business autonomy. Rather than simply optimizing existing processes, AI agents are transforming industries by enabling intelligent, adaptive, and proactive operations.

For businesses that invest in AI product development and strategically integrate AaaS, the potential is enormous. Companies that fail to adopt these AI-driven models, however, risk being left behind in a rapidly evolving digital economy.

#ai software development companies#top machine learning companies#ai development companies#ai ml development company#ai product development#machine learning companies#crm implementation services#what is api integration#Product & Platform Development


Implementing Microservices on AWS: A Value Driven Architecture

I have been lucky to have some good mentors in my life, some of them well-known names in value-based architecture. I find countless similarities between their ideas and modern cloud-based microservices architecture, particularly when it is deployed on Amazon Web Services (AWS). In this blog post I will explain the principles of Value Driven Architecture, the benefits and challenges of adopting microservices, and how AWS facilitates this approach.

Principles of Value Driven Architecture

Value Driven Architecture (VDA) uses business goals to drive architectural decisions. Every architectural decision is based on the organization's strategic objectives and aligned with its long-term transformation roadmap. Decisions prioritize user experience, system intuitiveness, and responsiveness to customer demands. At the core of the VDA philosophy is adapting to real-world usage and performance metrics: organizations must monitor metrics carefully to meet current demand and predict future business needs.

Balancing Flexibility and Provisioning

An overly flexible architecture or an over-provisioned system hurts profitability, while an under-provisioned system may hurt business reputation or growth. Finding the right balance requires real-time system monitoring and elasticity, which is exactly what today's microservices architecture provides.
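Elasticity usually comes down to a scaling rule. The Kubernetes Horizontal Pod Autoscaler, for example, computes desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). AWS target-tracking scaling policies pursue the same goal of holding a metric near a target. The sketch below implements that formula with minimum and maximum bounds added. The 60% CPU target and the replica limits are assumptions. Real autoscalers also apply tolerances and stabilization windows so they do not thrash between sizes.

```python
import math

def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 2, max_replicas: int = 20) -> int:
    """HPA-style proportional scaling, clamped to [min_replicas, max_replicas]."""
    raw = math.ceil(current * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))

# 4 replicas averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))
# 4 replicas averaging 15% CPU: scale in, but never below the floor.
print(desired_replicas(4, 15, 60))
```

The floor protects availability and the ceiling caps cost. Together they encode the provisioning balance described above.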

Adopting Microservices Architecture

When deciding to adopt a microservices architecture, organizations first consider whether to use a managed or self-managed approach. Amazon Elastic Container Service (ECS) and Kubernetes are both capable of running microservices, but the choice depends on factors such as vendor lock-in, time-to-market, flexibility, and long-term goals.

Benefits and Challenges of Microservices

Microservices architecture offers scalability, agility, resilience, and room for innovation. However, it introduces challenges around data redundancy and inconsistency. Each microservice manages its own data separately, which can result in data being duplicated between services. To address this, each microservice should have complete control over its data, and businesses should carefully design, review, and adjust service boundaries, which also reduces development time and therefore time to market.

Enhancing Value with AWS

When combined with microservices architecture on AWS, VDA can significantly enhance agility, scalability, and innovation. AWS supports many microservices design patterns for creating, deploying, and monitoring microservices quickly, which can significantly improve return on investment (ROI).

For example, AWS Lambda allows developers to run code without provisioning or managing servers. This serverless approach reduces operational complexity and costs, allowing teams to focus on delivering value. Organizations pay only for the compute time consumed, making it cost-effective for variable workloads.
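A minimal Python Lambda function is just a handler that receives an event and a context object. The sketch below assumes the function sits behind an API Gateway proxy integration. It reads a JSON `body` from the event and returns a `statusCode`/`body` response. The `order_id` field and the business logic are hypothetical placeholders. Because the handler only touches the event, it can be exercised locally with `context=None`.

```python
import json

def lambda_handler(event, context):
    """Entry point that AWS Lambda invokes; `context` holds runtime metadata (unused here)."""
    try:
        payload = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return {"statusCode": 400, "body": json.dumps({"error": "invalid JSON"})}

    # Hypothetical request field; non-object JSON bodies are rejected below.
    order_id = payload.get("order_id") if isinstance(payload, dict) else None
    if not order_id:
        return {"statusCode": 400, "body": json.dumps({"error": "order_id is required"})}

    # Placeholder for real business logic, e.g. validating and storing the order.
    return {"statusCode": 200,
            "body": json.dumps({"order_id": order_id, "status": "accepted"})}

# Local invocation without AWS: an API Gateway proxy event carries the body as a string.
print(lambda_handler({"body": json.dumps({"order_id": "A-100"})}, None))
```

Lambda bills only for the time this handler runs, so keeping it small and focused is what makes the model economical for bursty traffic.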

Monitoring and Security

Monitoring and analysing system metrics is key to success with microservices. AWS tools such as Amazon Inspector help improve system security and compliance, while Amazon CloudWatch and AWS X-Ray monitor application performance and trace requests across microservices. By analysing this data, teams can make informed decisions about architectural changes and optimizations, ensuring that their systems continue to deliver value.
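One way to get custom application metrics into Amazon CloudWatch is the CloudWatch Embedded Metric Format (EMF). The service writes a structured JSON log line, and CloudWatch extracts metrics from it. The sketch below builds such a line with the standard library only. The namespace, dimension, and metric names are hypothetical. Check the EMF specification for the full set of rules and limits.

```python
import json
import time
from typing import Dict

def emf_record(namespace: str, dimensions: Dict[str, str],
               metric_name: str, value: float, unit: str = "Milliseconds") -> str:
    """Build one CloudWatch Embedded Metric Format log line."""
    record = {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # milliseconds since the epoch
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": [list(dimensions)],   # one dimension set using all given keys
                "Metrics": [{"Name": metric_name, "Unit": unit}],
            }],
        },
        **dimensions,            # dimension values live at the top level
        metric_name: value,      # and so does the metric value
    }
    return json.dumps(record)

# In Lambda, stdout goes to CloudWatch Logs, where EMF lines are turned into metrics.
print(emf_record("OrderService", {"Service": "checkout"}, "Latency", 87.0))
```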

Infrastructure and communication protocols

Effective infrastructure layers and clear communication protocols are essential for microservices to function properly. Organizations should set up resource-sharing infrastructure and define communication protocols for security, serialization, and error handling. Poor configurations can increase latency and reduce availability, defeating the purpose of VDA.
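Error handling is one of the protocol decisions worth standardizing across services. A widely used pattern is to retry transient failures with capped exponential backoff and "full jitter", so that many clients do not retry in lockstep. The Python sketch below is generic. The `TransientError` type, the attempt limit, and the delay values are assumptions. Retries should only wrap operations that are safe to repeat (idempotent).

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, throttling)."""

def call_with_retries(op: Callable[[], T], max_attempts: int = 5,
                      base_delay: float = 0.1, max_delay: float = 2.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `op` on TransientError using capped exponential backoff with full jitter."""
    for attempt in range(max_attempts):
        try:
            return op()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))  # full jitter: random wait up to the cap
    # Only reached when max_attempts < 1, because the loop body never runs.
    raise ValueError("max_attempts must be at least 1")

# Simulated downstream service that fails twice, then succeeds.
calls = {"n": 0}
def flaky() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("timeout")
    return "ok"

print(call_with_retries(flaky, sleep=lambda s: None), calls["n"])
```

Injecting `sleep` as a parameter keeps the helper testable without real waiting.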

Cultural and Organizational Transformation

Transitioning to microservices necessitates a transformation in company culture and processes. DevOps requires developers to collaborate in cross-functional teams, accepting responsibility for both service provisioning and failure. Implementing and managing microservices requires specialized skills. Organizational preparation in terms of development approaches, communication structures, and operational procedures is critical.

Leveraging AI and ML on AWS

Organizations are looking to extract intelligence from their data, but building AI models requires specialized expertise and takes a long time. Organizations can instead use the managed AI and ML services provided by AWS to boost productivity quickly. These include deep learning-based image and video analysis (Amazon Rekognition), natural language processing for text analysis (Amazon Comprehend), a fully managed service for building and deploying models (Amazon SageMaker), a service for creating conversational interfaces (Amazon Lex), and OCR and document text extraction (Amazon Textract).

Using microservices on Amazon Web Services (AWS) can help companies build flexible, scalable, and reliable software systems that support their business goals. By designing their architecture to deliver value, organizations can improve customer satisfaction, encourage innovation, and stay ahead of their competitors in the fast-changing digital world. As software development continues to evolve, it will be essential for companies to focus on providing value in their architectural choices.

Conclusion:

At Covalensedigital, we rely on AWS for microservices because of its extensive functionality and versatility in managing microservices architecture. By incorporating micro-frontends, we further advance our overall business goals. Using services such as SQS, Lambda, and EKS clusters, we ensure a clear separation of concerns across business capabilities, fostering innovation, scalability, and system stability. This strategic approach not only aligns with our organization's business vision but also provides a competitive edge, delivering significant value to our customers.

We are thrilled to be a part of Innovate Asia 2024! Experience our groundbreaking Catalyst Project, SmartHive xG: Horizon, and join an exclusive speaking session exploring how AI-driven strategies are reshaping the future of business ecosystems.

To know more visit: Innovate Asia 2024

Covalensedigital is excited to announce our participation at AfricaCom 2024, the largest and most influential technology event on the African continent. As a leader in digital transformation, we invite you to visit us at Booth# F20 to explore our innovative solutions designed to empower telecom operators, enterprises, and digital service providers to thrive in an ever-evolving digital landscape.

To know more Visit: AfricaCom 2024

#Innovate Asia 2024#AfricaCom 2024#Pre-integrated BSS/OSS#B2B telcos#5G Services#Enterprise BSS Platforms#SaaS Platform#AI#ML#Micro Services#Architecture#AWS#ROI#SQS
